ural1772 Ski-Trails for Robots

Memory limit: 64 MB

Input

Output

Sample

| input | output |
|---|---|
| 5 3 2 | 6 |

Analysis: see http://blog.csdn.net/xcszbdnl/article/details/38494201.

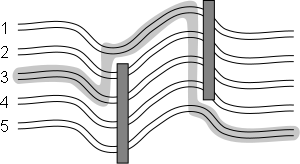

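For the current obstacle, the lanes immediately beside it (one to the left of its left edge and one to the right of its right edge) are necessarily the reachable points with the shortest path, so only these boundary lanes need to be tracked.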
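The observation can be written as an update rule. This is a sketch in notation chosen here, not taken from the referenced post: $S$ is the set of tracked lanes, $d(x)$ is the minimum cost to stand in lane $x$ just before the current obstacle $[a, b]$, and $\mathrm{pr}$, $\mathrm{la}$ are the nearest members of $S$ to the left and right of $x$ (mirroring the iterator names in the code). Lanes $0$ and $n+1$ act as sentinels with $d = \infty$.

$$
d(x) \leftarrow \min\bigl(d(x),\; d(\mathrm{pr}) + (x - \mathrm{pr}),\; d(\mathrm{la}) + (\mathrm{la} - x)\bigr), \qquad x \in \{a-1,\; b+1\} \cap [1, n]
$$

$$
S \leftarrow S \setminus [a, b], \qquad d(y) \leftarrow \infty \ \text{ for } y \in [a, b], \qquad \text{answer} = \min_{1 \le x \le n} d(x)
$$

Looking only at the nearest neighbours appears to be enough because the tracked values stay consistent with each other: if $d(u) \le d(v) + |u - v|$ holds for all tracked $u, v$ before an update, it still holds after inserting a boundary lane and after erasing the covered lanes.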

Code:

#include <iostream>
#include <cstdio>
#include <cstdlib>
#include <cmath>
#include <algorithm>
#include <climits>
#include <cstring>
#include <string>
#include <set>
#include <map>
#include <queue>
#include <stack>
#include <vector>
#include <list>
#define rep(i,m,n) for(i=m;i<=n;i++)
#define rsp(it,s) for(set<int>::iterator it=s.begin();it!=s.end();it++)
#define mod 1000000007
#define inf 0x3f3f3f3f
#define vi vector<int>
#define pb push_back
#define mp make_pair
#define fi first
#define se second
#define ll long long
#define pi acos(-1.0)
#define pii pair<int,int>
#define Lson L, mid, rt<<1
#define Rson mid+1, R, rt<<1|1
const int maxn=1e5+10;   // must exceed n+1; assumes n <= 1e5
const int dis[4][2]={{0,1},{-1,0},{0,-1},{1,0}};
using namespace std;
ll gcd(ll p,ll q){return q==0?p:gcd(q,p%q);}
ll qpow(ll p,ll q){ll f=1;while(q){if(q&1)f=f*p%mod;p=p*p%mod;q>>=1;}return f;}
int n,m,k,t,s;
set<int>p,q;             // p: lanes currently tracked, q: lanes to erase
set<int>::iterator now,pr,la;
ll dp[maxn];             // dp[x]: minimum cost to stand in lane x
int main()
{
    int i,j;
    scanf("%d%d%d",&n,&s,&k);
    rep(i,0,n+1)dp[i]=1e18;
    // 0 and n+1 are sentinels, so every real lane has both neighbours in p
    p.insert(0),p.insert(n+1),p.insert(s);
    dp[s]=0;
    while(k--)
    {
        int a,b;
        scanf("%d%d",&a,&b);
        if(a>1)
        {
            // lane a-1, just left of the obstacle: relax from its set neighbours
            a--;
            p.insert(a);
            now=p.find(a);
            pr=--now;
            ++now;
            la=++now;
            --now;
            if(dp[*now]>dp[*pr]+(*now)-(*pr))dp[*now]=dp[*pr]+(*now)-(*pr);
            if(dp[*now]>dp[*la]+(*la)-(*now))dp[*now]=dp[*la]+(*la)-(*now);
            a++;
        }
        if(b<n)
        {
            // lane b+1, just right of the obstacle: relax from its set neighbours
            b++;
            p.insert(b);
            now=p.find(b);
            pr=--now;
            ++now;
            la=++now;
            --now;
            if(dp[*now]>dp[*pr]+(*now)-(*pr))dp[*now]=dp[*pr]+(*now)-(*pr);
            if(dp[*now]>dp[*la]+(*la)-(*now))dp[*now]=dp[*la]+(*la)-(*now);
            b--;
        }
        // lanes covered by the obstacle [a,b] are no longer usable
        q.clear();
        for(now=p.lower_bound(a);now!=p.end()&&*now<=b;now++)q.insert(*now);
        for(int x:q)p.erase(x),dp[x]=1e18;
    }
    ll mi=1e18;
    rep(i,1,n)if(mi>dp[i])mi=dp[i];
    printf("%lld\n",mi);
    //system("pause");
    return 0;
}
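For cross-checking the set-based solution on small inputs, a slow reference implementation can help. The following is a minimal sketch, not part of the original post: the function name `skiBruteForce` is hypothetical, and it assumes that moving between adjacent lanes costs 1 and that obstacles are given in the order the robot meets them. It keeps a cost for every lane and runs in O(n·k), so it is only suitable for testing.

```cpp
#include <algorithm>
#include <cassert>
#include <utility>
#include <vector>

// Hypothetical helper (not from the original post): O(n * k) reference answer.
// obstacles[i] = {a, b} blocks lanes a..b (1-based, inclusive).
long long skiBruteForce(int n, int s,
                        const std::vector<std::pair<int, int>>& obstacles) {
    const long long INF = 4000000000000000000LL;  // INF + 1 still fits in long long
    std::vector<long long> cost(n + 2, INF);      // lanes 1..n; indices 0 and n+1 unused
    cost[s] = 0;
    for (const auto& ob : obstacles) {
        // Spread costs sideways: each step to an adjacent lane costs 1.
        for (int x = 2; x <= n; ++x)
            cost[x] = std::min(cost[x], cost[x - 1] + 1);
        for (int x = n - 1; x >= 1; --x)
            cost[x] = std::min(cost[x], cost[x + 1] + 1);
        // Lanes covered by the obstacle cannot be used to pass it.
        for (int x = ob.first; x <= ob.second; ++x)
            cost[x] = INF;
    }
    return *std::min_element(cost.begin() + 1, cost.begin() + n + 1);
}
```

For a hypothetical input with n = 5, s = 3 and obstacles [2, 5] followed by [1, 4], `skiBruteForce(5, 3, {{2, 5}, {1, 4}})` returns 6: the robot moves from lane 3 to lane 1 (cost 2), then from lane 1 to lane 5 (cost 4).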
