How Many Partitions Does An RDD Have

From https://databricks.gitbooks.io/databricks-spark-knowledge-base/content/performance_optimization/how_many_partitions_does_an_rdd_have.html

For tuning and troubleshooting, it's often necessary to know how many paritions an RDD represents. There are a few ways to find this information:

View Task Execution Against Partitions Using the UI

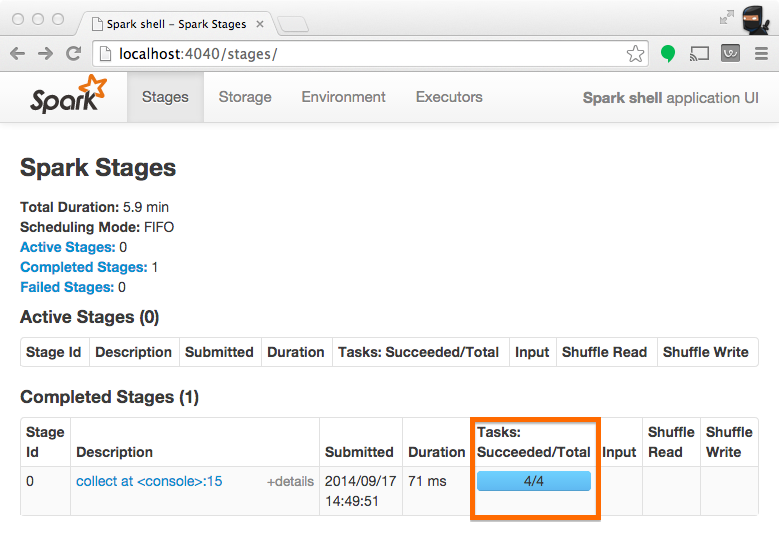

When a stage executes, you can see the number of partitions for a given stage in the Spark UI. For example, the following simple job creates an RDD of 100 elements across 4 partitions, then distributes a dummy map task before collecting the elements back to the driver program:

scala> val someRDD = sc.parallelize(1 to 100, 4)

someRDD: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[0] at parallelize at <console>:12

scala> someRDD.map(x => x).collect

res1: Array[Int] = Array(1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 100)

In Spark's application UI, you can see from the following screenshot that the "Total Tasks" represents the number of partitions:

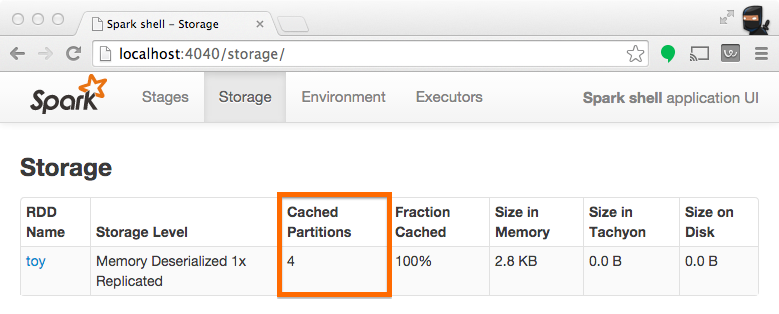

View Partition Caching Using the UI

When persisting (a.k.a. caching) RDDs, it's useful to understand how many partitions have been stored. The example below is identical to the one prior, except that we'll now cache the RDD prior to processing it. After this completes, we can use the UI to understand what has been stored from this operation.

scala> someRDD.setName("toy").cache

res2: someRDD.type = toy ParallelCollectionRDD[0] at parallelize at <console>:12

scala> someRDD.map(x => x).collect

res3: Array[Int] = Array(1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 100)

Note from the screenshot that there are four partitions cached.

Inspect RDD Partitions Programatically

In the Scala API, an RDD holds a reference to it's Array of partitions, which you can use to find out how many partitions there are:

scala> val someRDD = sc.parallelize(1 to 100, 30)

someRDD: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[0] at parallelize at <console>:12

scala> someRDD.partitions.size

res0: Int = 30

In the python API, there is a method for explicitly listing the number of partitions:

In [1]: someRDD = sc.parallelize(range(101),30)

In [2]: someRDD.getNumPartitions()

Out[2]: 30

Note in the examples above, the number of partitions was intentionally set to 30 upon initialization.

How Many Partitions Does An RDD Have的更多相关文章

- Spark核心概念之RDD

RDD: Resilient Distributed Dataset RDD的特点: 1.A list of partitions 一系列的分片:比如说64M一片:类似于Hadoop中的s ...

- RDD的依赖关系

RDD的依赖关系 Rdd之间的依赖关系通过rdd中的getDependencies来进行表示, 在提交job后,会通过在DAGShuduler.submitStage-->getMissingP ...

- RDD.scala(源码)

---- map. --- flatMap.fliter.distinct.repartition.coalesce.sample.randomSplit.randomSampleWithRange. ...

- Spark函数详解系列之RDD基本转换

摘要: RDD:弹性分布式数据集,是一种特殊集合 ‚ 支持多种来源 ‚ 有容错机制 ‚ 可以被缓存 ‚ 支持并行操作,一个RDD代表一个分区里的数据集 RDD有两种操作算子: ...

- Spark编程模型及RDD操作

转载自:http://blog.csdn.net/liuwenbo0920/article/details/45243775 1. Spark中的基本概念 在Spark中,有下面的基本概念.Appli ...

- 【原创】大数据基础之Spark(4)RDD原理及代码解析

一 简介 spark核心是RDD,官方文档地址:https://spark.apache.org/docs/latest/rdd-programming-guide.html#resilient-di ...

- Spark源码系列:RDD repartition、coalesce 对比

在上一篇文章中 Spark源码系列:DataFrame repartition.coalesce 对比 对DataFrame的repartition.coalesce进行了对比,在这篇文章中,将会对R ...

- 【Spark-core学习之二】 RDD和算子

环境 虚拟机:VMware 10 Linux版本:CentOS-6.5-x86_64 客户端:Xshell4 FTP:Xftp4 jdk1.8 scala-2.10.4(依赖jdk1.8) spark ...

- spark 算子之RDD

map map(func) Return a new distributed dataset formed by passing each element of the source through ...

随机推荐

- keyup.enter.native&click.native.prevent

vue 监听键盘回车事件 @keyup.enter || @keyup.enter.native vue运行为v-on在监听键盘事件时,添加了特殊的键盘修饰符: <input v-on:keyu ...

- Python数据分析5-----数据规约

1.数据规约概念和目的 数据规约是产生更小且保留数据完整性的新数据集. 意义:降低无效错误数据的影响.更有效率.降低存储成本. 2.属性规约 (1)属性合并(降维):比如PCA (2)删除不相关属性 ...

- PostgreSQL 安装配置 (亲测可用)

转自:http://blog.csdn.net/jesseyoung/article/details/41348835 受作者博客限制,请访问上面的链接 ---------- 下面是另一个转载 --- ...

- HDU1867 - A + B for you again

Generally speaking, there are a lot of problems about strings processing. Now you encounter another ...

- 【Tool】Mac环境维护

1. 安装编译opencv https://blog.csdn.net/lijiang1991/article/details/50756065 /Users/yuhua.cheng/Opt/open ...

- Myeclipse中将项目上传到码云

公司实习之后习惯是代码上传到svn上,最近想起来个人的一些代码上传的到码云上比较方便,根据网上分享的博客内容结合自己的整理记录 其中大多数是参考了https://blog.csdn.net/izzyl ...

- 【ACM-ICPC 2018 南京赛区网络预赛 L】Magical Girl Haze

[链接] 我是链接,点我呀:) [题意] 在这里输入题意 [题解] 定义dis[i][j]表示到达i这个点. 用掉了j次去除边的机会的最短路. dis[1][0]= 0; 在写松弛条件的时候. 如果用 ...

- el表达式中的比较和包含

相等( equal ) :eq 不相等( not equal ): ne / neq 大于( greater than ): gt 小于( less than ): lt 大于等于( great th ...

- Android 对话框(Dialog) 及 自己定义Dialog

Activities提供了一种方便管理的创建.保存.回复的对话框机制,比如 onCreateDialog(int), onPrepareDialog(int, Dialog), showDialog( ...

- 从乐视和小米“最火电视”之战 看PR传播策略

今年的双11够热闹.一方面,阿里.京东.国美.苏宁等电商巨头卯足了劲儿.试图在双11期间斗个你死我活,剑拔弩张的气势超过了以往不论什么一场双11:还有一方面.不少硬件厂商.家电企业也来凑双11 ...