Understanding the anchor_target_layer

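In summary: generate_anchors precomputes a set of coordinate offsets, determined by the scales and ratios. anchor_target_layer first maps every feature-map location back to a center point in the original image, then applies those offsets to each center point to produce the different boxes.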

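anchor_target_layer is the layer that generates anchors during the RPN training stage and, as its docstring says, assigns them classification labels and bounding-box regression targets.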

Source code:

# --------------------------------------------------------
# Faster R-CNN
# Copyright (c) 2015 Microsoft
# Licensed under The MIT License [see LICENSE for details]
# Written by Ross Girshick and Sean Bell
# --------------------------------------------------------

import os
import caffe
import yaml
from fast_rcnn.config import cfg
import numpy as np
import numpy.random as npr
from generate_anchors import generate_anchors
from utils.cython_bbox import bbox_overlaps
from fast_rcnn.bbox_transform import bbox_transform

DEBUG = False

class AnchorTargetLayer(caffe.Layer):
    """
    Assign anchors to ground-truth targets. Produces anchor classification
    labels and bounding-box regression targets.
    """

    def setup(self, bottom, top):
        layer_params = yaml.load(self.param_str_)
        anchor_scales = layer_params.get('scales', (8, 16, 32))
        # generate_anchors builds coordinate offsets from the ratios and
        # scales; adding them to a center point yields the different anchors
        self._anchors = generate_anchors(scales=np.array(anchor_scales))
        self._num_anchors = self._anchors.shape[0]
        # feat_stride mirrors spatial_scale in roi_pooling: one is 16, the
        # other is 1/16. feat_stride maps center coordinates from the feature
        # map to the original image; spatial_scale maps RoI box coordinates
        # from the original image to the feature map.
        self._feat_stride = layer_params['feat_stride']

        if DEBUG:
            print 'anchors:'
            print self._anchors
            print 'anchor shapes:'
            print np.hstack((
                self._anchors[:, 2::4] - self._anchors[:, 0::4],
                self._anchors[:, 3::4] - self._anchors[:, 1::4],
            ))
            self._counts = cfg.EPS
            self._sums = np.zeros((1, 4))
            self._squared_sums = np.zeros((1, 4))
            self._fg_sum = 0
            self._bg_sum = 0
            self._count = 0

        # allow boxes to sit over the edge by a small amount
        self._allowed_border = layer_params.get('allowed_border', 0)

        height, width = bottom[0].data.shape[-2:]
        if DEBUG:
            print 'AnchorTargetLayer: height', height, 'width', width

        A = self._num_anchors
        # labels
        top[0].reshape(1, 1, A * height, width)
        # bbox_targets (reshape sets the shape of the output blob)
        top[1].reshape(1, A * 4, height, width)
        # bbox_inside_weights
        top[2].reshape(1, A * 4, height, width)
        # bbox_outside_weights
        top[3].reshape(1, A * 4, height, width)

    def forward(self, bottom, top):
        # Algorithm:
        #
        # for each (H, W) location i
        #   generate 9 anchor boxes centered on cell i
        #   apply predicted bbox deltas at cell i to each of the 9 anchors
        # filter out-of-image anchors
        # measure GT overlap

        assert bottom[0].data.shape[0] == 1, \
            'Only single item batches are supported'

        # map of shape (..., H, W)
        # height and width of the last feature map of the feature extractor;
        # see the analysis after the code for why
        height, width = bottom[0].data.shape[-2:]
        # GT boxes (x1, y1, x2, y2, label)
        gt_boxes = bottom[1].data
        # im_info
        im_info = bottom[2].data[0, :]

        if DEBUG:
            print ''
            print 'im_size: ({}, {})'.format(im_info[0], im_info[1])
            print 'scale: {}'.format(im_info[2])
            print 'height, width: ({}, {})'.format(height, width)
            print 'rpn: gt_boxes.shape', gt_boxes.shape
            print 'rpn: gt_boxes', gt_boxes

        # 1. Generate proposals from bbox deltas and shifted anchors
        shift_x = np.arange(0, width) * self._feat_stride
        shift_y = np.arange(0, height) * self._feat_stride
        shift_x, shift_y = np.meshgrid(shift_x, shift_y)
        shifts = np.vstack((shift_x.ravel(), shift_y.ravel(),
                            shift_x.ravel(), shift_y.ravel())).transpose()
        # add A anchors (1, A, 4) to
        # cell K shifts (K, 1, 4) to get
        # shift anchors (K, A, 4)
        # reshape to (K*A, 4) shifted anchors
        A = self._num_anchors
        K = shifts.shape[0]
        all_anchors = (self._anchors.reshape((1, A, 4)) +
                       shifts.reshape((1, K, 4)).transpose((1, 0, 2)))
        all_anchors = all_anchors.reshape((K * A, 4))
        total_anchors = int(K * A)

        # only keep anchors inside the image
        inds_inside = np.where(
            (all_anchors[:, 0] >= -self._allowed_border) &
            (all_anchors[:, 1] >= -self._allowed_border) &
            (all_anchors[:, 2] < im_info[1] + self._allowed_border) &  # width
            (all_anchors[:, 3] < im_info[0] + self._allowed_border)    # height
        )[0]

        if DEBUG:
            print 'total_anchors', total_anchors
            print 'inds_inside', len(inds_inside)

        # keep only inside anchors
        anchors = all_anchors[inds_inside, :]
        if DEBUG:
            print 'anchors.shape', anchors.shape

        # label: 1 is positive, 0 is negative, -1 is dont care
        labels = np.empty((len(inds_inside), ), dtype=np.float32)
        labels.fill(-1)

        # overlaps between the anchors and the gt boxes
        # overlaps (ex, gt)
        overlaps = bbox_overlaps(
            np.ascontiguousarray(anchors, dtype=np.float),
            np.ascontiguousarray(gt_boxes, dtype=np.float))
        # argmax_overlaps: for each anchor, the index of the gt box with the
        # largest overlap
        argmax_overlaps = overlaps.argmax(axis=1)
        max_overlaps = overlaps[np.arange(len(inds_inside)), argmax_overlaps]
        # gt_argmax_overlaps: for each gt box, the index of the anchor with
        # the largest overlap
        gt_argmax_overlaps = overlaps.argmax(axis=0)
        gt_max_overlaps = overlaps[gt_argmax_overlaps,
                                   np.arange(overlaps.shape[1])]
        gt_argmax_overlaps = np.where(overlaps == gt_max_overlaps)[0]

        if not cfg.TRAIN.RPN_CLOBBER_POSITIVES:
            # assign bg labels first so that positive labels can clobber them
            labels[max_overlaps < cfg.TRAIN.RPN_NEGATIVE_OVERLAP] = 0

        # fg label: for each gt, anchor with highest overlap
        labels[gt_argmax_overlaps] = 1

        # fg label: above threshold IOU
        labels[max_overlaps >= cfg.TRAIN.RPN_POSITIVE_OVERLAP] = 1

        if cfg.TRAIN.RPN_CLOBBER_POSITIVES:
            # assign bg labels last so that negative labels can clobber positives
            labels[max_overlaps < cfg.TRAIN.RPN_NEGATIVE_OVERLAP] = 0

        # subsample positive labels if we have too many
        num_fg = int(cfg.TRAIN.RPN_FG_FRACTION * cfg.TRAIN.RPN_BATCHSIZE)
        fg_inds = np.where(labels == 1)[0]
        if len(fg_inds) > num_fg:
            disable_inds = npr.choice(
                fg_inds, size=(len(fg_inds) - num_fg), replace=False)
            labels[disable_inds] = -1

        # subsample negative labels if we have too many
        num_bg = cfg.TRAIN.RPN_BATCHSIZE - np.sum(labels == 1)
        bg_inds = np.where(labels == 0)[0]
        if len(bg_inds) > num_bg:
            disable_inds = npr.choice(
                bg_inds, size=(len(bg_inds) - num_bg), replace=False)
            labels[disable_inds] = -1
            #print "was %s inds, disabling %s, now %s inds" % (
                #len(bg_inds), len(disable_inds), np.sum(labels == 0))

        bbox_targets = np.zeros((len(inds_inside), 4), dtype=np.float32)
        bbox_targets = _compute_targets(anchors, gt_boxes[argmax_overlaps, :])

        bbox_inside_weights = np.zeros((len(inds_inside), 4), dtype=np.float32)
        bbox_inside_weights[labels == 1, :] = np.array(cfg.TRAIN.RPN_BBOX_INSIDE_WEIGHTS)

        bbox_outside_weights = np.zeros((len(inds_inside), 4), dtype=np.float32)
        if cfg.TRAIN.RPN_POSITIVE_WEIGHT < 0:
            # uniform weighting of examples (given non-uniform sampling)
            num_examples = np.sum(labels >= 0)
            positive_weights = np.ones((1, 4)) * 1.0 / num_examples
            negative_weights = np.ones((1, 4)) * 1.0 / num_examples
        else:
            assert ((cfg.TRAIN.RPN_POSITIVE_WEIGHT > 0) &
                    (cfg.TRAIN.RPN_POSITIVE_WEIGHT < 1))
            positive_weights = (cfg.TRAIN.RPN_POSITIVE_WEIGHT /
                                np.sum(labels == 1))
            negative_weights = ((1.0 - cfg.TRAIN.RPN_POSITIVE_WEIGHT) /
                                np.sum(labels == 0))
        bbox_outside_weights[labels == 1, :] = positive_weights
        bbox_outside_weights[labels == 0, :] = negative_weights

        if DEBUG:
            self._sums += bbox_targets[labels == 1, :].sum(axis=0)
            self._squared_sums += (bbox_targets[labels == 1, :] ** 2).sum(axis=0)
            self._counts += np.sum(labels == 1)
            means = self._sums / self._counts
            stds = np.sqrt(self._squared_sums / self._counts - means ** 2)
            print 'means:'
            print means
            print 'stdevs:'
            print stds

        # map up to original set of anchors
        labels = _unmap(labels, total_anchors, inds_inside, fill=-1)
        bbox_targets = _unmap(bbox_targets, total_anchors, inds_inside, fill=0)
        bbox_inside_weights = _unmap(bbox_inside_weights, total_anchors, inds_inside, fill=0)
        bbox_outside_weights = _unmap(bbox_outside_weights, total_anchors, inds_inside, fill=0)

        if DEBUG:
            print 'rpn: max max_overlap', np.max(max_overlaps)
            print 'rpn: num_positive', np.sum(labels == 1)
            print 'rpn: num_negative', np.sum(labels == 0)
            self._fg_sum += np.sum(labels == 1)
            self._bg_sum += np.sum(labels == 0)
            self._count += 1
            print 'rpn: num_positive avg', self._fg_sum / self._count
            print 'rpn: num_negative avg', self._bg_sum / self._count

        # labels
        labels = labels.reshape((1, height, width, A)).transpose(0, 3, 1, 2)
        labels = labels.reshape((1, 1, A * height, width))
        top[0].reshape(*labels.shape)
        top[0].data[...] = labels

        # bbox_targets
        bbox_targets = bbox_targets \
            .reshape((1, height, width, A * 4)).transpose(0, 3, 1, 2)
        top[1].reshape(*bbox_targets.shape)
        top[1].data[...] = bbox_targets

        # bbox_inside_weights
        bbox_inside_weights = bbox_inside_weights \
            .reshape((1, height, width, A * 4)).transpose(0, 3, 1, 2)
        assert bbox_inside_weights.shape[2] == height
        assert bbox_inside_weights.shape[3] == width
        top[2].reshape(*bbox_inside_weights.shape)
        top[2].data[...] = bbox_inside_weights

        # bbox_outside_weights
        bbox_outside_weights = bbox_outside_weights \
            .reshape((1, height, width, A * 4)).transpose(0, 3, 1, 2)
        assert bbox_outside_weights.shape[2] == height
        assert bbox_outside_weights.shape[3] == width
        top[3].reshape(*bbox_outside_weights.shape)
        top[3].data[...] = bbox_outside_weights

    def backward(self, top, propagate_down, bottom):
        """This layer does not propagate gradients."""
        pass

    def reshape(self, bottom, top):
        """Reshaping happens during the call to forward."""
        pass

def _unmap(data, count, inds, fill=0):
    """ Unmap a subset of item (data) back to the original set of items (of
    size count) """
    if len(data.shape) == 1:
        ret = np.empty((count, ), dtype=np.float32)
        ret.fill(fill)
        ret[inds] = data
    else:
        ret = np.empty((count, ) + data.shape[1:], dtype=np.float32)
        ret.fill(fill)
        ret[inds, :] = data
    return ret

def _compute_targets(ex_rois, gt_rois):
    """Compute bounding-box regression targets for an image."""

    assert ex_rois.shape[0] == gt_rois.shape[0]
    assert ex_rois.shape[1] == 4
    assert gt_rois.shape[1] == 5

    return bbox_transform(ex_rois, gt_rois[:, :4]).astype(np.float32, copy=False)

self._anchors:

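I use the model's default 3x3 anchor configuration (3 scales x 3 ratios), so the NumPy array has shape (9, 4). Each row holds four offsets relative to a center point. The first value is the offset applied to the center's x coordinate to get the box's minimum x, and the second is the offset applied to the center's y coordinate to get the box's minimum y; together they form the top-left corner. The third value is the offset applied to the center's x coordinate to get the box's maximum x, and the fourth is the offset applied to the center's y coordinate to get the box's maximum y; together they form the bottom-right corner.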
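To make the offsets concrete, here is a minimal sketch of how a (9, 4) offset table could be built from ratios and scales. It is a simplified stand-in rather than the generate_anchors source: the name sketch_anchors, the base size of 16, and the ratios (0.5, 1, 2) are assumptions made for illustration; only the scales (8, 16, 32) come from the layer code above.

```python
import numpy as np

def sketch_anchors(base_size=16, ratios=(0.5, 1.0, 2.0), scales=(8, 16, 32)):
    """Hypothetical helper: one (x1, y1, x2, y2) offset row per ratio/scale pair."""
    ctr = (base_size - 1) / 2.0           # center of the base_size x base_size cell
    area = float(base_size * base_size)
    rows = []
    for ratio in ratios:
        # Width and height of the base box reshaped to the requested h/w ratio.
        w = np.round(np.sqrt(area / ratio))
        h = np.round(w * ratio)
        for scale in scales:
            ws, hs = w * scale, h * scale
            rows.append([ctr - 0.5 * (ws - 1), ctr - 0.5 * (hs - 1),
                         ctr + 0.5 * (ws - 1), ctr + 0.5 * (hs - 1)])
    return np.array(rows)

anchors = sketch_anchors()
print(anchors.shape)  # (9, 4)
print(anchors[0])     # offsets for ratio 0.5, scale 8
```

Every row is symmetric around the same point, which is why the same table can be reused at every grid location.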

shift_x:

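shift_x has length 61, which is the width of the last feature map. It takes the integers 0 through 60 and multiplies each by 16. The values 0 to 60 are the x coordinates of every feature-map location, which serve as the anchor center points; multiplying by 16 maps them to original-image coordinates, where they become the anchor center points in the original image.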

shift_y:

shift_y has length 39, the height of the last feature map; otherwise it works the same way as shift_x.
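As a quick check of these numbers, the sketch below recomputes shift_x and shift_y outside of Caffe. The 61 x 39 feature-map size is the one from this walkthrough, and the stride of 16 is the feat_stride from the layer's param_str.

```python
import numpy as np

feat_stride = 16          # from the layer's param_str
width, height = 61, 39    # feature-map size used in this walkthrough

shift_x = np.arange(0, width) * feat_stride
shift_y = np.arange(0, height) * feat_stride

print(len(shift_x), shift_x[-1])  # 61 960
print(len(shift_y), shift_y[-1])  # 39 608
```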

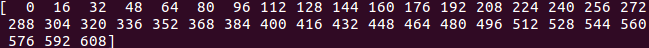

shift_x, shift_y = np.meshgrid(shift_x, shift_y) produces the following shift_x and shift_y:

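Both shift_x and shift_y become 39x61 arrays. The difference is that shift_x repeats the original values as a row, 39 times, while shift_y repeats the original values as a column, 61 times.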
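The repetition pattern is easier to see on a toy grid. The sketch below uses a made-up 3-column, 2-row grid instead of 61 x 39; the output names grid_x and grid_y are chosen here only to keep the inputs and outputs apart.

```python
import numpy as np

# Toy grid: 3 columns, 2 rows, stride 16 (sizes chosen only for readability).
shift_x = np.arange(0, 3) * 16   # [0, 16, 32]
shift_y = np.arange(0, 2) * 16   # [0, 16]

grid_x, grid_y = np.meshgrid(shift_x, shift_y)

print(grid_x.shape, grid_y.shape)  # (2, 3) (2, 3)
print(grid_x.tolist())             # [[0, 16, 32], [0, 16, 32]]
print(grid_y.tolist())             # [[0, 0, 0], [16, 16, 16]]
```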

shifts = np.vstack((shift_x.ravel(), shift_y.ravel(), shift_x.ravel(), shift_y.ravel())).transpose() produces shifts with shape 2379x4 (2379 = 39 x 61). shift_x.ravel() flattens the 39x61 shift_x into a vector of length 2379, which becomes the first and third columns; shift_y.ravel() flattens the 39x61 shift_y in the same way, and becomes the second and fourth columns.

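For a long time I did not understand why shift_x appears twice. The reason is that anchor coordinates are obtained by adding offsets to a center point, and this step produces the coordinates of the 2379 center points. A center point is two-dimensional, with only x and y, but the next step turns each center point into an anchor box, which is described by its top-left and bottom-right corners, so four values are needed. The first column yields the box's minimum x and the third its maximum x; both are obtained by offsetting the center's x coordinate. Likewise, the second and fourth columns operate on the center's y coordinate. (Strictly speaking, each shift is the top-left corner of a 16x16 cell in the original image; the default base anchors are centered at (7.5, 7.5) relative to that corner, so the resulting boxes end up centered on the cell.)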
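The (x, y, x, y) layout of each row can be checked on a toy grid. This sketch again assumes a made-up 3 x 2 grid with stride 16 in place of the real 61 x 39 one.

```python
import numpy as np

grid_x, grid_y = np.meshgrid(np.arange(3) * 16, np.arange(2) * 16)

# One row per grid cell: (x, y, x, y), so that the same point can be added
# to both the top-left and the bottom-right offsets of an anchor.
shifts = np.vstack((grid_x.ravel(), grid_y.ravel(),
                    grid_x.ravel(), grid_y.ravel())).transpose()

print(shifts.shape)  # (6, 4)
for row in shifts.tolist():
    print(row)
```

Columns 0 and 2 are identical, as are columns 1 and 3, which is exactly the duplication discussed above.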

self._anchors.reshape((1, A, 4)) simply adds a leading dimension, changing the shape from (9, 4) to (1, 9, 4).

all_anchors = (self._anchors.reshape((1, A, 4)) + shifts.reshape((1, K, 4)).transpose((1, 0, 2))) produces all_anchors as follows:

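After its reshape, self._anchors has shape (1, 9, 4), and shifts, after its reshape and transpose, has shape (2379, 1, 4). The resulting all_anchors has shape (2379, 9, 4): each of the 2379 center points is added to each of the 9 anchor offsets. This is NumPy broadcasting (I wrote a separate summary of how + behaves in NumPy, which covers similar cases).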
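The broadcast can be verified on small arrays. In this sketch A = 2 and K = 3 are toy sizes, and the anchor offsets are made-up values rather than the output of generate_anchors.

```python
import numpy as np

A, K = 2, 3  # toy sizes; the walkthrough uses A = 9 and K = 2379
anchors = np.array([[-8, -8, 8, 8],
                    [-16, -4, 16, 4]])   # made-up offsets, shape (A, 4)
shifts = np.array([[0, 0, 0, 0],
                   [16, 0, 16, 0],
                   [32, 0, 32, 0]])      # shape (K, 4)

# (1, A, 4) + (K, 1, 4) broadcasts to (K, A, 4).
all_anchors = (anchors.reshape((1, A, 4)) +
               shifts.reshape((1, K, 4)).transpose((1, 0, 2)))

print(all_anchors.shape)           # (3, 2, 4)
print(all_anchors[1, 1].tolist())  # [0, -4, 32, 4]
```

Entry [k, a] is always anchors[a] + shifts[k], so no explicit loop over grid cells is needed.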

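For example, the first entry along the first axis is the shift (0, 0, 0, 0) added to each of the 9 anchor offsets, so it equals the 9 base anchors themselves.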

all_anchors = all_anchors.reshape((K * A, 4)) then yields the coordinates of 2379 * 9 = 21411 anchor boxes.
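The following sketch checks the final count and shows how rows of the flattened array map back to a grid cell and an anchor index. The array contents are placeholders; only the shapes and the row ordering matter.

```python
import numpy as np

A, height, width = 9, 39, 61
K = height * width

# Placeholder values: only the shapes and the row ordering matter here.
all_anchors = np.arange(K * A * 4).reshape((K, A, 4))
flat = all_anchors.reshape((K * A, 4))

print(flat.shape)  # (21411, 4)

# Row k * A + a of the flat array is anchor a at grid cell k.
k, a = 100, 5
assert (flat[k * A + a] == all_anchors[k, a]).all()
```

This ordering (all anchors of one cell are contiguous) is what the later reshape to (1, height, width, A) in forward() relies on.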

layer {
  name: 'rpn-data'
  type: 'Python'
  bottom: 'rpn_cls_score'
  bottom: 'gt_boxes'
  bottom: 'im_info'
  bottom: 'data'
  top: 'rpn_labels'
  top: 'rpn_bbox_targets'
  top: 'rpn_bbox_inside_weights'
  top: 'rpn_bbox_outside_weights'
  python_param {
    module: 'rpn.anchor_target_layer'
    layer: 'AnchorTargetLayer'
    param_str: "'feat_stride': 16"
  }
}

This is the prototxt for the rpn-data layer. It has 4 inputs (bottoms) and 4 outputs (tops).
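The four tops get their shapes in setup(). As a small sketch, the helper below (rpn_data_top_shapes is a hypothetical name, not part of the layer) reproduces those shapes for a given anchor count and feature-map size, assuming the tops are matched to the reshape calls in the order listed in the prototxt.

```python
def rpn_data_top_shapes(num_anchors, height, width):
    """Hypothetical helper mirroring the top[i].reshape(...) calls in setup()."""
    A = num_anchors
    return {
        'rpn_labels': (1, 1, A * height, width),
        'rpn_bbox_targets': (1, A * 4, height, width),
        'rpn_bbox_inside_weights': (1, A * 4, height, width),
        'rpn_bbox_outside_weights': (1, A * 4, height, width),
    }

shapes = rpn_data_top_shapes(num_anchors=9, height=39, width=61)
print(shapes['rpn_labels'])        # (1, 1, 351, 61)
print(shapes['rpn_bbox_targets'])  # (1, 36, 39, 61)
```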

height, width = bottom[0].data.shape[-2:]

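This line obtains the height and width of the last layer of the feature extractor. Why? bottom[0] is rpn_cls_score, which is produced by the RPN's 3x3 convolution followed by a 1x1 convolution and holds the predicted foreground/background scores for each anchor; its shape is (2*k, height, width), where k is the number of anchors per location. Its height and width equal those of the last feature-extraction layer, because the 3x3 convolution uses stride 1 and pad 1, and a 1x1 convolution does not change the spatial size of a feature map. In the paper, the RPN slides over the last feature-extraction layer; in the actual code, the shape of rpn_cls_score stands in for that of the last convolutional feature map. So rpn_cls_score is not passed to the rpn-data layer for its scores, only for the shape that the RPN sliding window needs.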
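The size-preservation argument follows from the standard convolution output-size formula. The sketch below applies it to the 39 x 61 feature map from this walkthrough; conv_out_size is a helper name introduced here for illustration.

```python
def conv_out_size(size, kernel, stride=1, pad=0):
    """Spatial output size of a convolution along one dimension."""
    return (size + 2 * pad - kernel) // stride + 1

height, width = 39, 61  # feature-map size used in this walkthrough

# 3x3 convolution with stride 1 and pad 1, then a 1x1 convolution.
h = conv_out_size(conv_out_size(height, kernel=3, stride=1, pad=1), kernel=1)
w = conv_out_size(conv_out_size(width, kernel=3, stride=1, pad=1), kernel=1)

print((h, w))  # (39, 61)
```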

.data gives the blob's underlying array, .shape is the shape of that array, and [-2:] takes the last two entries of the shape.
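The same indexing can be tried on a plain NumPy array. Here a zero array stands in for bottom[0].data, with an assumed batch size of 1 and k = 9 anchors, so 2*k = 18 channels.

```python
import numpy as np

# Stand-in for bottom[0].data: a blob of shape (N, 2*k, H, W) with k = 9.
rpn_cls_score = np.zeros((1, 18, 39, 61), dtype=np.float32)

height, width = rpn_cls_score.shape[-2:]
print(height, width)  # 39 61
```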

gt_boxes holds the ground-truth boxes; im_info holds the image size (note: of the original image, not the feature map); data is the array of all pixels of the image itself.

if DEBUG:
    print ''
    print 'im_size: ({}, {})'.format(im_info[0], im_info[1])
    print 'scale: {}'.format(im_info[2])
    print 'height, width: ({}, {})'.format(height, width)
    print 'rpn: gt_boxes.shape', gt_boxes.shape
    print 'rpn: gt_boxes', gt_boxes

This debug section makes these values easy to inspect.
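Because im_info carries the image size rather than the feature-map size, it is what the layer compares anchor coordinates against when it discards boxes that cross the image boundary. A minimal sketch of that filter follows; the three anchors and the 600 x 1000 image size are made-up values for illustration.

```python
import numpy as np

im_info = np.array([600.0, 1000.0, 1.6])  # (height, width, scale), made-up values
allowed_border = 0

all_anchors = np.array([
    [-84.0, -40.0, 99.0, 55.0],     # crosses the top-left edge
    [100.0, 100.0, 283.0, 195.0],   # fully inside
    [900.0, 500.0, 1083.0, 595.0],  # crosses the right edge
])

inds_inside = np.where(
    (all_anchors[:, 0] >= -allowed_border) &
    (all_anchors[:, 1] >= -allowed_border) &
    (all_anchors[:, 2] < im_info[1] + allowed_border) &  # width
    (all_anchors[:, 3] < im_info[0] + allowed_border)    # height
)[0]

print(inds_inside.tolist())  # [1]
```

Only the surviving anchors go on to the overlap computation; _unmap later scatters the results back to the full set of anchors.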
