Holm–Bonferroni method


```python
# -*- coding: utf-8 -*-
# Import standard packages
import pandas as pd
from scipy import stats

# Other required packages
from statsmodels.stats.multicomp import MultiComparison

# Name of the Excel data file
excel = "sample.xlsx"

# Read the data
df = pd.read_excel(excel)

# Extract each treatment group's data as a list
group_mental = list(df.StressReduction[df.Treatment == "mental"])
group_physical = list(df.StressReduction[df.Treatment == "physical"])
group_medical = list(df.StressReduction[df.Treatment == "medical"])

multiComp = MultiComparison(df['StressReduction'], df['Treatment'])


def Holm_Bonferroni(multiComp):
    '''Instead of Tukey's test, we can run pairwise t-tests.

    Splitting alpha = 0.05 across the comparisons gives a smaller,
    corrected alpha for each individual test.'''
    # First, with the Holm correction.
    # Note: stats.ttest_rel is a paired test and needs groups of equal size;
    # for independent groups, stats.ttest_ind is the usual choice.
    rtp = multiComp.allpairtest(stats.ttest_rel, method='holm')
    print(rtp[0])
    # ...and then with the Bonferroni correction
    print(multiComp.allpairtest(stats.ttest_rel, method='bonferroni')[0])
    # Any value, for testing the program for correct execution
    checkVal = rtp[1][0][0, 0]
    return checkVal


Holm_Bonferroni(multiComp)
```

Data: sample.xlsx

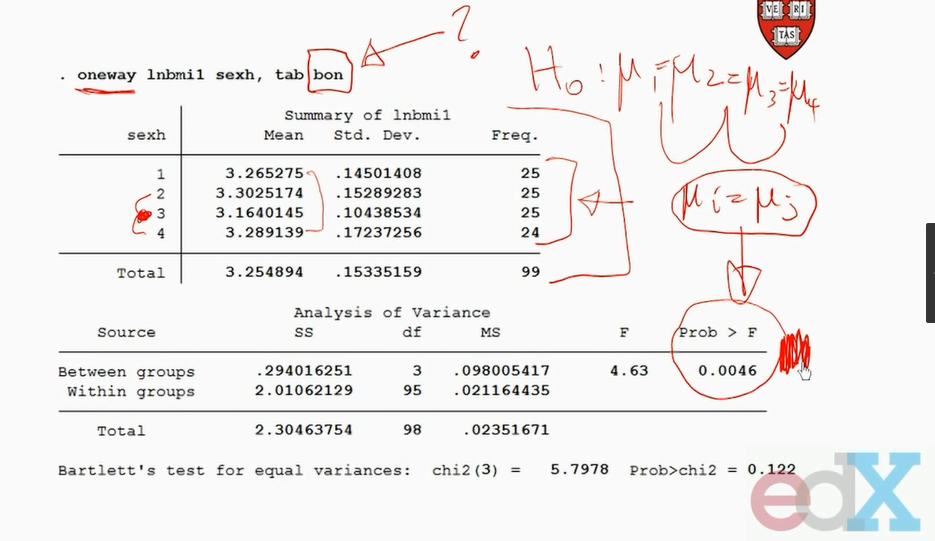

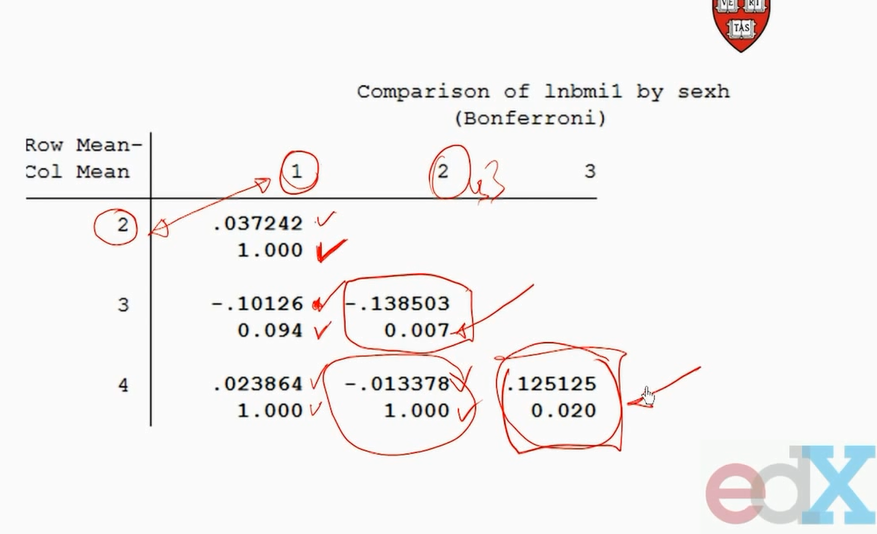

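Because the comparisons are repeated, the probability of a Type I error increases.

The Bonferroni correction counteracts this by lowering the critical value α = 0.05 used for each individual comparison.

For example, comparing 5 groups pairwise gives 10 comparisons, so the corrected α is 0.05/10 = 0.005.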
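The arithmetic above can be checked with a few lines of standard-library Python. This is a minimal sketch. The group labels are hypothetical placeholders. The uncorrected familywise error formula 1 − (1 − α)^m assumes the m tests are independent, which is an assumption added here and not stated in the original.

```python
from itertools import combinations
from math import comb

alpha = 0.05
groups = ["A", "B", "C", "D", "E"]  # hypothetical labels for the 5 groups

# All unordered pairs of groups: C(5, 2) = 10 comparisons
pairs = list(combinations(groups, 2))
m = len(pairs)
assert m == comb(len(groups), 2)

# Chance of at least one false positive if each of the m tests
# (assumed independent) is run at the uncorrected alpha
fwer_uncorrected = 1 - (1 - alpha) ** m

# Bonferroni: divide alpha evenly across the m comparisons
alpha_bonf = alpha / m

print(m)                           # 10
print(round(fwer_uncorrected, 3))  # 0.401
print(round(alpha_bonf, 4))        # 0.005
```

Without correction, ten independent tests at α = 0.05 carry roughly a 40% chance of at least one false positive. This is the inflation the correction is meant to remove.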

https://en.wikipedia.org/wiki/Holm%E2%80%93Bonferroni_method

In statistics, the Holm–Bonferroni method[1] (also called the Holm method or Bonferroni-Holm method) is used to counteract the problem of multiple comparisons. It is intended to control the familywise error rate and offers a simple test uniformly more powerful than the Bonferroni correction. It is one of the earliest usages of stepwise algorithms in simultaneous inference. It is named after Sture Holm, who codified the method, and Carlo Emilio Bonferroni.


Motivation

When considering several hypotheses, the problem of multiplicity arises: the more hypotheses we check, the higher the probability of a Type I error (false positive). The Holm–Bonferroni method is one of many approaches that control the family-wise error rate (the probability that one or more Type I errors will occur) by adjusting the rejection criteria of each of the individual hypotheses or comparisons.

Formulation

The method is as follows:

- Let $H_1,\ldots,H_m$ be a family of hypotheses and $P_1,\ldots,P_m$ the corresponding p-values.
- Start by ordering the p-values (from lowest to highest) $P_{(1)}\ldots P_{(m)}$ and let the associated hypotheses be $H_{(1)}\ldots H_{(m)}$.
- For a given significance level $\alpha$, let $k$ be the minimal index such that $P_{(k)} > \frac{\alpha}{m+1-k}$.
- Reject the null hypotheses $H_{(1)}\ldots H_{(k-1)}$ and do not reject $H_{(k)}\ldots H_{(m)}$.
- If $k = 1$, do not reject any of the null hypotheses; if no such $k$ exists, reject all of the null hypotheses.

The Holm–Bonferroni method ensures that the familywise error rate satisfies $\mathrm{FWER} \le \alpha$.
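The steps of the formulation translate directly into a short step-down loop. Below is a minimal pure-Python sketch. The function name `holm` and the illustrative p-values are hypothetical; they are not part of any library. It returns one reject/keep decision per hypothesis, in the original input order.

```python
def holm(pvalues, alpha=0.05):
    """Holm step-down procedure (illustrative sketch).

    Returns a list of booleans, in the original order of `pvalues`,
    that is True where the null hypothesis is rejected.
    """
    m = len(pvalues)
    # Indices sorted by p-value, lowest first: H_(1), ..., H_(m)
    order = sorted(range(m), key=lambda i: pvalues[i])
    reject = [False] * m
    for k, i in enumerate(order, start=1):
        # k is the first index with P_(k) > alpha / (m + 1 - k): stop here,
        # keeping H_(k), ..., H_(m)
        if pvalues[i] > alpha / (m + 1 - k):
            break
        reject[i] = True
    # If the loop never breaks, no such k exists and all are rejected
    return reject


print(holm([0.01, 0.04, 0.03, 0.005]))  # [True, False, False, True]
```

The loop makes at most m comparisons. The `break` implements "do not reject $H_{(k)}\ldots H_{(m)}$" once the first p-value exceeds its threshold.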

Proof

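Holm–Bonferroni controls the FWER as follows. Let $H_{(1)}\ldots H_{(m)}$ be a family of hypotheses with sorted p-values $P_{(1)}\le\ldots\le P_{(m)}$. Let $I_0$ be the set of indices of the (unknown) true null hypotheses, and let $m_0$ be its size.

Let us assume that we wrongly reject a true hypothesis. We have to prove that the probability of this event is at most $\alpha$. Let $H_{(h)}$ be the first rejected true hypothesis in the order used by the test. Then $H_{(1)}\ldots H_{(h-1)}$ are all rejected false hypotheses, so $h-1 \le m-m_0$, which gives $\frac{1}{m-h+1} \le \frac{1}{m_0}$. Since $H_{(h)}$ is rejected, $P_{(h)} \le \frac{\alpha}{m+1-h}$ by the definition of the test, and therefore $P_{(h)} \le \frac{\alpha}{m_0}$. Thus, if we wrongly reject a true hypothesis, some true hypothesis must have a p-value of at most $\alpha/m_0$.

So let us define $A=\left\{P_i \le \frac{\alpha}{m_0} \text{ for some } i \in I_0\right\}$. Whatever the (unknown) set $I_0$ is, each true-null p-value satisfies $\Pr(P_i \le x) \le x$, and Boole's inequality gives $\Pr(A) \le \sum_{i\in I_0}\Pr\left(P_i \le \frac{\alpha}{m_0}\right) \le m_0\cdot\frac{\alpha}{m_0} = \alpha$. Therefore, the probability of rejecting a true hypothesis is at most $\alpha$.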
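The bound can be illustrated, though not proved, with a small Monte Carlo simulation. This is a sketch under hypothetical assumptions chosen here: m = 10 hypotheses, of which m0 = 6 are true nulls with independent Uniform(0, 1) p-values. The other 4 are false nulls with p-values drawn from Uniform(0, 0.001). The helper names are hypothetical.

```python
import random


def holm(pvalues, alpha=0.05):
    # Holm step-down: reject H_(k) while P_(k) <= alpha / (m + 1 - k)
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    reject = [False] * m
    for k, i in enumerate(order, start=1):
        if pvalues[i] > alpha / (m + 1 - k):
            break
        reject[i] = True
    return reject


def simulate_fwer(m=10, m0=6, alpha=0.05, reps=20000, seed=1):
    """Monte Carlo estimate of P(at least one true null is rejected).

    Hypothetical setup: the first m0 hypotheses are true nulls with
    independent Uniform(0, 1) p-values; the other m - m0 are false
    nulls with p-values drawn from Uniform(0, 0.001).
    """
    rng = random.Random(seed)
    errors = 0
    for _ in range(reps):
        p = [rng.random() for _ in range(m0)]
        p += [rng.uniform(0.0, 0.001) for _ in range(m - m0)]
        if any(holm(p, alpha)[:m0]):  # some true null was rejected
            errors += 1
    return errors / reps


estimate = simulate_fwer()
print(estimate)
```

In this setup the false nulls are effectively always rejected first. A true null is then rejected exactly when the smallest true-null p-value is at most α/6, the α/m0 bound from the proof. The estimate should therefore land close to 1 − (1 − 0.05/6)^6 ≈ 0.049, just under α.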

Alternative proof

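The Holm–Bonferroni method can be viewed as a closed testing procedure,[2] with the Bonferroni method applied locally to each of the intersections of null hypotheses. As such, it controls the familywise error rate for all the $m$ hypotheses at level α in the strong sense. Each intersection is tested using the simple Bonferroni test.

It is a shortcut procedure, since in practice the number of comparisons to be made is $m$ or less, whereas the full closed procedure would test $2^m - 1$ intersection hypotheses.

The closure principle states that a hypothesis $H_i$ in a family $H_1,\ldots,H_m$ is rejected, while controlling the familywise error rate at $\alpha$, if and only if every intersection hypothesis that includes $H_i$ is rejected by its level-$\alpha$ local test.

In the Holm–Bonferroni procedure, we first test $H_{(1)}$. If it is not rejected at level $\alpha/m$, then the intersection of all null hypotheses $\bigcap_{i=1}^{m} H_i$ is not rejected either. Every elementary hypothesis $H_1,\ldots,H_m$ therefore belongs to at least one intersection that is not rejected, and we reject none of them.

If $H_{(1)}$ is rejected at level $\alpha/m$, then every intersection that contains it is rejected too, so $H_{(1)}$ is rejected. This holds because $P_{(1)}$ is the smallest p-value in each of those intersections, and each intersection has at most $m$ members, so its Bonferroni threshold is at least $\alpha/m$.

The same rationale applies for $H_{(2)}$. Since $H_{(1)}$ is already rejected, it suffices to reject the intersections that contain $H_{(2)}$ but not $H_{(1)}$. These have at most $m-1$ members, so once $P_{(2)} \le \frac{\alpha}{m-1}$ holds, all intersections containing $H_{(2)}$ are rejected.

The same applies for each $1 \le i \le m$.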
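The shortcut argument can be checked numerically by running the full closed procedure, with a local Bonferroni test on every intersection, and comparing it with the step-down shortcut. This is a brute-force sketch for small m only. The function names are hypothetical, and the closed version enumerates all $2^m - 1$ subsets.

```python
from itertools import combinations


def holm(pvalues, alpha=0.05):
    # Step-down shortcut: at most m comparisons
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    reject = [False] * m
    for k, i in enumerate(order, start=1):
        if pvalues[i] > alpha / (m + 1 - k):
            break
        reject[i] = True
    return reject


def closed_bonferroni(pvalues, alpha=0.05):
    """Full closed testing with a local Bonferroni test per intersection.

    The intersection H_S (S a non-empty index set) is rejected when
    min_{i in S} p_i <= alpha / |S|. H_i is rejected only if every
    H_S with i in S is rejected.
    """
    m = len(pvalues)
    reject = [True] * m
    for size in range(1, m + 1):
        for subset in combinations(range(m), size):
            if min(pvalues[i] for i in subset) > alpha / size:
                # H_S is not rejected, so none of its members can be
                for i in subset:
                    reject[i] = False
    return reject


p = [0.01, 0.04, 0.03, 0.005]  # illustrative p-values
print(holm(p))               # [True, False, False, True]
print(closed_bonferroni(p))  # [True, False, False, True]
```

Both functions agree, but the closed version's cost grows as $2^m$. This is why the step-down form is used in practice.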

Example

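Consider four null hypotheses $H_1,\ldots,H_4$ with unadjusted p-values $p_1 = 0.01$, $p_2 = 0.04$, $p_3 = 0.03$ and $p_4 = 0.005$, to be tested at significance level $\alpha = 0.05$. Since the procedure is step-down, we first test $H_4 = H_{(1)}$, which has the smallest p-value $p_4 = p_{(1)} = 0.005$. This is compared with $\alpha/4 = 0.0125$, so $H_4$ is rejected and we continue. Since $p_1 = p_{(2)} = 0.01 < 0.0167 \approx \alpha/3$, we reject $H_1 = H_{(2)}$ as well and continue. The next hypothesis, $H_3 = H_{(3)}$, is not rejected, since $p_3 = 0.03 > 0.025 = \alpha/2$. We stop testing and conclude that $H_1$ and $H_4$ are rejected while $H_2$ and $H_3$ are not, with the familywise error rate controlled at $\alpha = 0.05$. Note that even though $p_2 = p_{(4)} = 0.04 < 0.05 = \alpha$, $H_2$ is not rejected, because the procedure stops at the first failure to reject.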
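A step-by-step trace makes the walk through four hypotheses easy to reproduce. This is a sketch that assumes the p-values $p_1 = 0.01$, $p_2 = 0.04$, $p_3 = 0.03$, $p_4 = 0.005$ (the values of the Wikipedia example) and α = 0.05. It prints each comparison with its threshold $\alpha/(m+1-k)$.

```python
alpha = 0.05
# Assumed p-values for the four hypotheses
p = {"H1": 0.01, "H2": 0.04, "H3": 0.03, "H4": 0.005}

m = len(p)
rejected = []
# Walk through the hypotheses from the smallest p-value upward
ranked = sorted(p.items(), key=lambda item: item[1])
for k, (name, pval) in enumerate(ranked, start=1):
    threshold = alpha / (m + 1 - k)
    if pval > threshold:
        print(f"{name}: p = {pval} > {threshold:.4f}, stop")
        break
    print(f"{name}: p = {pval} <= {threshold:.4f}, reject")
    rejected.append(name)

print("Rejected:", rejected)  # Rejected: ['H4', 'H1']
```

The trace stops at H3, so H2 is never compared, even though 0.04 < α.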

Extensions

Holm–Šidák method

When the hypothesis tests are not negatively dependent, it is possible to replace $\frac{\alpha}{m},\frac{\alpha}{m-1},\ldots,\frac{\alpha}{1}$ with

$$1-(1-\alpha)^{1/m},\; 1-(1-\alpha)^{1/(m-1)},\;\ldots,\; 1-(1-\alpha)^{1}$$

resulting in a slightly more powerful test.
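The Šidák thresholds are always at least as large as the Bonferroni ones, so the same step-down loop can only reject more. Below is a small sketch with hypothetical helper names and a hypothetical p-value vector. It shows a case where the Holm–Šidák test rejects and plain Holm does not. It is only valid under the non-negative dependence assumption stated above.

```python
def holm_thresholds(m, alpha=0.05):
    # Bonferroni-based step-down thresholds: alpha/m, alpha/(m-1), ..., alpha/1
    return [alpha / (m + 1 - k) for k in range(1, m + 1)]


def holm_sidak_thresholds(m, alpha=0.05):
    # Sidak-based thresholds: 1 - (1 - alpha)^(1/(m+1-k)) for k = 1..m
    return [1 - (1 - alpha) ** (1 / (m + 1 - k)) for k in range(1, m + 1)]


def step_down(pvalues, thresholds):
    # Reject H_(k) while P_(k) <= thresholds[k-1]; stop at the first failure
    order = sorted(range(len(pvalues)), key=lambda i: pvalues[i])
    reject = [False] * len(pvalues)
    for k, i in enumerate(order):
        if pvalues[i] > thresholds[k]:
            break
        reject[i] = True
    return reject


p = [0.0127, 0.3, 0.4, 0.5]  # hypothetical p-values
print(step_down(p, holm_thresholds(len(p))))        # [False, False, False, False]
print(step_down(p, holm_sidak_thresholds(len(p))))  # [True, False, False, False]
```

With m = 4, the first threshold is 0.0125 for Holm and about 0.01274 for Holm–Šidák, so a p-value of 0.0127 falls between them.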

Weighted version

Let $P_{(1)},\ldots,P_{(m)}$ be the ordered unadjusted p-values, and let $w_{(i)} \ge 0$ be the weight of the hypothesis $H_{(i)}$ corresponding to $P_{(i)}$. Reject $H_{(i)}$ as long as

$$P_{(j)} \le \frac{w_{(j)}}{\sum_{k=j}^{m} w_{(k)}}\,\alpha, \quad j = 1,\ldots,i.$$
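The weighted rule can be sketched the same way. Each step compares $P_{(j)}$ with its share of α among the hypotheses not yet rejected. This is a minimal sketch that follows the formula exactly as written above, ordering by raw p-value. The function name, weights, and p-values are hypothetical. With equal weights it reduces to the ordinary Holm procedure.

```python
def weighted_holm(pvalues, weights, alpha=0.05):
    """Weighted step-down test following the rule above (a sketch).

    Hypotheses are ordered by raw p-value. H_(i) is rejected as long as
    P_(j) <= w_(j) / sum_{k=j..m} w_(k) * alpha for every j = 1..i.
    Weights are assumed non-negative.
    """
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    reject = [False] * m
    for pos, i in enumerate(order):
        # Total weight sum_{k=j..m} w_(k) of the hypotheses from this step on
        remaining = sum(weights[j] for j in order[pos:])
        if remaining <= 0 or pvalues[i] > weights[i] / remaining * alpha:
            break
        reject[i] = True
    return reject


p = [0.03, 0.3]  # hypothetical p-values
print(weighted_holm(p, [1, 1]))  # [False, False]  (equal weights: plain Holm)
print(weighted_holm(p, [3, 1]))  # [True, False]
```

Giving the first hypothesis three times the weight raises its first-step threshold from α/2 = 0.025 to 3α/4 = 0.0375, enough to reject p = 0.03.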

Adjusted p-values

The adjusted p-values for the Holm–Bonferroni method are:

$$\widetilde{p}_{(i)} = \max_{j \le i}\left\{(m-j+1)\,p_{(j)}\right\}_1, \quad \text{where } \{x\}_1 \equiv \min(x,1).$$

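In the earlier example, the adjusted p-values are $\widetilde{p}_1 = 0.03$, $\widetilde{p}_2 = 0.06$, $\widetilde{p}_3 = 0.06$ and $\widetilde{p}_4 = 0.02$. Only hypotheses $H_1$ and $H_4$ are rejected at level $\alpha = 0.05$.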

The weighted adjusted p-values are:[citation needed]

$$\widetilde{p}_{(i)} = \max_{j \le i}\left\{\frac{\sum_{k=j}^{m} w_{(k)}}{w_{(j)}}\,p_{(j)}\right\}_1, \quad \text{where } \{x\}_1 \equiv \min(x,1).$$

A hypothesis is rejected at level α if and only if its adjusted p-value is at most α. In the earlier example using equal weights, the adjusted p-values are 0.03, 0.06, 0.06 and 0.02. This is another way to see that, at α = 0.05, only hypotheses one and four are rejected by this procedure.
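Both adjusted p-value formulas can be computed with one running maximum over the sorted p-values. This is a sketch with a hypothetical function name. It assumes strictly positive weights and defaults to equal weights, where $\sum_{k=j}^{m} w_{(k)}/w_{(j)}$ becomes $m-j+1$. The input p-values 0.01, 0.04, 0.03, 0.005 are assumed here as the raw values of the four-hypothesis example.

```python
def holm_adjusted(pvalues, weights=None):
    """Holm adjusted p-values, returned in the original order.

    p~_(i) = max_{j<=i} min(sum_{k>=j} w_(k) / w_(j) * p_(j), 1);
    with equal weights this is max_{j<=i} min((m - j + 1) * p_(j), 1).
    Weights, if given, are assumed strictly positive.
    """
    m = len(pvalues)
    if weights is None:
        weights = [1.0] * m
    order = sorted(range(m), key=lambda i: pvalues[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for pos, i in enumerate(order):
        remaining = sum(weights[j] for j in order[pos:])
        candidate = min(remaining / weights[i] * pvalues[i], 1.0)
        running_max = max(running_max, candidate)  # keep the max over j <= i
        adjusted[i] = running_max
    return adjusted


adj = holm_adjusted([0.01, 0.04, 0.03, 0.005])
print([round(x, 4) for x in adj])  # [0.03, 0.06, 0.06, 0.02]
print([a <= 0.05 for a in adj])    # [True, False, False, True]
```

The running maximum is what keeps the adjusted values consistent with the step-down rule. Here $H_2$ inherits 0.06 from $H_3$, even though $1 \cdot 0.04$ alone would be smaller.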

Alternatives and usage

The Holm–Bonferroni method is uniformly more powerful than the classic Bonferroni correction. There are other methods for controlling the family-wise error rate that are more powerful than Holm-Bonferroni.

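In the Hochberg procedure, $H_{(1)}\ldots H_{(k)}$ are rejected after finding the maximal index $k$ such that $P_{(k)} \le \frac{\alpha}{m+1-k}$. The Hochberg procedure is therefore uniformly more powerful than the Holm procedure. However, it requires the tests to be independent or to satisfy certain forms of positive dependence, whereas Holm–Bonferroni can be applied without such assumptions.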
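The difference between the step-down (Holm) and step-up (Hochberg) searches shows up in a two-hypothesis case. Below is a minimal sketch with hypothetical function names and p-values. The Hochberg result is only trustworthy when the tests are independent or positively dependent.

```python
def holm(pvalues, alpha=0.05):
    # Step-down: stop at the first P_(k) > alpha / (m + 1 - k)
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    reject = [False] * m
    for k, i in enumerate(order, start=1):
        if pvalues[i] > alpha / (m + 1 - k):
            break
        reject[i] = True
    return reject


def hochberg(pvalues, alpha=0.05):
    # Step-up: find the largest k with P_(k) <= alpha / (m + 1 - k),
    # then reject H_(1), ..., H_(k)
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    reject = [False] * m
    for k in range(m, 0, -1):
        if pvalues[order[k - 1]] <= alpha / (m + 1 - k):
            for i in order[:k]:
                reject[i] = True
            break
    return reject


p = [0.04, 0.045]  # hypothetical p-values
print(holm(p))      # [False, False]
print(hochberg(p))  # [True, True]
```

Holm stops immediately because 0.04 > α/2. Hochberg starts from the largest p-value, finds 0.045 ≤ α, and rejects both.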

A similar step-up procedure is the Hommel procedure.[3]

Naming

Carlo Emilio Bonferroni did not take part in inventing the method described here. Holm originally called the method the "sequentially rejective Bonferroni test", and it became known as Holm-Bonferroni only after some time. Holm's motives for naming his method after Bonferroni are explained in the original paper: "The use of the Boole inequality within multiple inference theory is usually called the Bonferroni technique, and for this reason we will call our test the sequentially rejective Bonferroni test."

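Bonferroni correction: if n hypotheses are tested simultaneously on the same data set, the significance level used for each hypothesis should be 1/n of the level that would be used when testing a single hypothesis. Because the correction rests on Boole's inequality, it does not require the hypotheses to be independent.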
