Monitoring Spark runtime metrics

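The Spark UI on port 4040 already exposes runtime metrics, so why collect them ourselves and store them in Redis?

1. The monitoring pages can be integrated into our own data platform and tailored to our business, which makes troubleshooting and email alerting easier.

2. We can add metrics of our own on top of what the Spark UI provides.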

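I. Spark's SparkListener

SparkListener is an abstract class that implements SparkListenerInterface with an empty default for every callback, so a custom monitoring class extends SparkListener and overrides only the callbacks it needs. The callback names are self-explanatory: each one matches the event it handles. These callbacks expose the data volumes and timings of each phase of a running Spark application, and that is where the monitoring metrics come from.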

abstract class SparkListener extends SparkListenerInterface {

// called when a stage completes

override def onStageCompleted(stageCompleted: SparkListenerStageCompleted): Unit = { }

// called when a stage is submitted

override def onStageSubmitted(stageSubmitted: SparkListenerStageSubmitted): Unit = { }

override def onTaskStart(taskStart: SparkListenerTaskStart): Unit = { }

override def onTaskGettingResult(taskGettingResult: SparkListenerTaskGettingResult): Unit = { }

// called when a task ends

override def onTaskEnd(taskEnd: SparkListenerTaskEnd): Unit = { }

override def onJobStart(jobStart: SparkListenerJobStart): Unit = { }

override def onJobEnd(jobEnd: SparkListenerJobEnd): Unit = { }

override def onEnvironmentUpdate(environmentUpdate: SparkListenerEnvironmentUpdate): Unit = { }

override def onBlockManagerAdded(blockManagerAdded: SparkListenerBlockManagerAdded): Unit = { }

override def onBlockManagerRemoved(

blockManagerRemoved: SparkListenerBlockManagerRemoved): Unit = { }

override def onUnpersistRDD(unpersistRDD: SparkListenerUnpersistRDD): Unit = { }

override def onApplicationStart(applicationStart: SparkListenerApplicationStart): Unit = { }

override def onApplicationEnd(applicationEnd: SparkListenerApplicationEnd): Unit = { }

override def onExecutorMetricsUpdate(

executorMetricsUpdate: SparkListenerExecutorMetricsUpdate): Unit = { }

override def onExecutorAdded(executorAdded: SparkListenerExecutorAdded): Unit = { }

override def onExecutorRemoved(executorRemoved: SparkListenerExecutorRemoved): Unit = { }

override def onBlockUpdated(blockUpdated: SparkListenerBlockUpdated): Unit = { }

override def onOtherEvent(event: SparkListenerEvent): Unit = { }

}
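Because every callback in SparkListener has an empty default implementation, a listener only has to override the events it cares about. The following is a minimal sketch and not part of the original code: a hypothetical `JobTimingListener` that derives each job's wall-clock duration from the `time` fields of `SparkListenerJobStart` and `SparkListenerJobEnd`. The class name and the `durations` map are illustrative assumptions.

```scala
import scala.collection.concurrent.TrieMap

import org.apache.spark.scheduler.{SparkListener, SparkListenerJobEnd, SparkListenerJobStart}

// Hypothetical listener: overrides only the two job callbacks.
class JobTimingListener extends SparkListener {

  // jobId -> start time in epoch milliseconds
  private val startTimes = TrieMap.empty[Int, Long]

  // jobId -> duration in milliseconds, filled in when the job ends
  val durations = TrieMap.empty[Int, Long]

  override def onJobStart(jobStart: SparkListenerJobStart): Unit = {
    startTimes.update(jobStart.jobId, jobStart.time)
  }

  override def onJobEnd(jobEnd: SparkListenerJobEnd): Unit = {
    // Ignore end events whose start was never seen (listener registered late).
    startTimes.remove(jobEnd.jobId).foreach { started =>
      durations.update(jobEnd.jobId, jobEnd.time - started)
    }
  }
}
```

All other callbacks keep their empty defaults, so the listener adds no work for events it does not handle.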

1. Implement a custom SparkListener whose onTaskEnd method stores the metrics in Redis

(1) SparkListener is an abstract class: create a MySparkAppListener class that extends SparkListener and override onTaskEnd.

(2) Method: override def onTaskEnd(taskEnd: SparkListenerTaskEnd): Unit = { }

The SparkListenerTaskEnd class:

case class SparkListenerTaskEnd(

// ID of the stage the task belongs to

stageId: Int,

// attempt number of that stage (it increases when the stage is retried; it is not a child stage)

stageAttemptId: Int,

taskType: String,

reason: TaskEndReason,

// task information

taskInfo: TaskInfo,

// task metrics (may be null, for example when the task failed)

@Nullable taskMetrics: TaskMetrics)

extends SparkListenerEvent

(3) Inside onTaskEnd, the taskInfo and taskMetrics members give access to the following information:

/**
 * 1. taskMetrics
 * 2. shuffle
 * 3. task input and output
 * 4. taskInfo
 **/

(4) Metrics available from TaskMetrics

class TaskMetrics private[spark] () extends Serializable {

// Each metric is internally represented as an accumulator

private val _executorDeserializeTime = new LongAccumulator

private val _executorDeserializeCpuTime = new LongAccumulator

private val _executorRunTime = new LongAccumulator

private val _executorCpuTime = new LongAccumulator

private val _resultSize = new LongAccumulator

private val _jvmGCTime = new LongAccumulator

private val _resultSerializationTime = new LongAccumulator

private val _memoryBytesSpilled = new LongAccumulator

private val _diskBytesSpilled = new LongAccumulator

private val _peakExecutionMemory = new LongAccumulator

private val _updatedBlockStatuses = new CollectionAccumulator[(BlockId, BlockStatus)]

val inputMetrics: InputMetrics = new InputMetrics()

/**

* Metrics related to writing data externally (e.g. to a distributed filesystem),

* defined only in tasks with output.

*/

val outputMetrics: OutputMetrics = new OutputMetrics()

/**

* Metrics related to shuffle read aggregated across all shuffle dependencies.

* This is defined only if there are shuffle dependencies in this task.

*/

val shuffleReadMetrics: ShuffleReadMetrics = new ShuffleReadMetrics()

/**

* Metrics related to shuffle write, defined only in shuffle map stages.

*/

val shuffleWriteMetrics: ShuffleWriteMetrics = new ShuffleWriteMetrics()

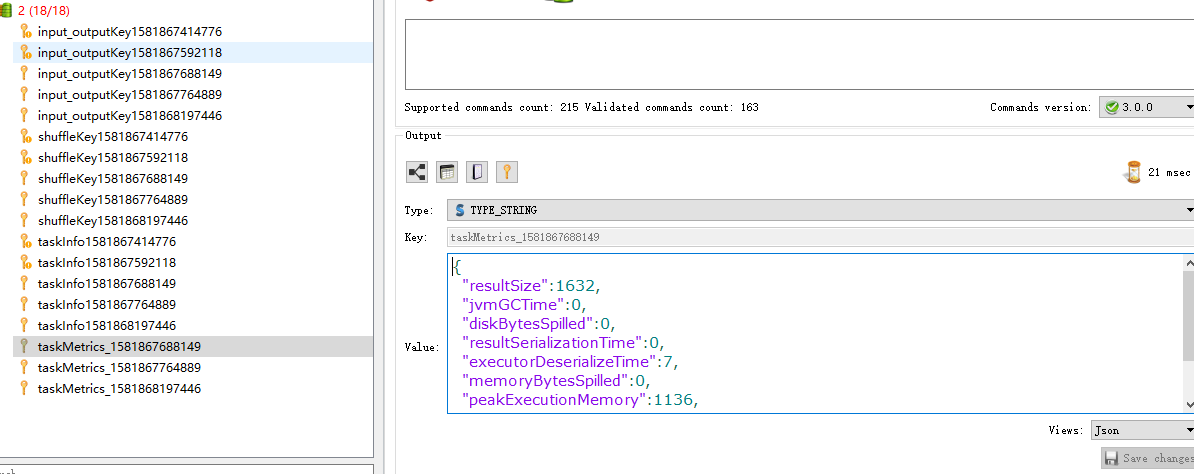

(5) Implementation that stores the metrics in Redis

import org.apache.spark.internal.Logging // location of the Logging trait in Spark 2.x and later
import org.apache.spark.scheduler.{SparkListener, SparkListenerTaskEnd, TaskInfo}
import org.json4s.DefaultFormats
import org.json4s.jackson.Json // assumed json4s backend
import redis.clients.jedis.Jedis

/**
 * Requirement 1: store the run-time status of Spark jobs in Redis so that it can be shown in our own back-office pages
 */
class MySparkAppListener extends SparkListener with Logging {

  val redisConf = "jedisConfig.properties"
  // JedisUtil is the author's own connection helper, not shown in this article
  val jedis: Jedis = JedisUtil.getInstance().getJedis

  override def onTaskEnd(taskEnd: SparkListenerTaskEnd): Unit = {
    // Information available inside onTaskEnd:
    /**
     * 1. taskMetrics
     * 2. shuffle
     * 3. task input and output
     * 4. taskInfo
     **/
    val currentTimestamp = System.currentTimeMillis()

    // Metrics exposed by TaskMetrics:
    /**
     * private val _executorDeserializeTime = new LongAccumulator
     * private val _executorDeserializeCpuTime = new LongAccumulator
     * private val _executorRunTime = new LongAccumulator
     * private val _executorCpuTime = new LongAccumulator
     * private val _resultSize = new LongAccumulator
     * private val _jvmGCTime = new LongAccumulator
     * private val _resultSerializationTime = new LongAccumulator
     * private val _memoryBytesSpilled = new LongAccumulator
     * private val _diskBytesSpilled = new LongAccumulator
     * private val _peakExecutionMemory = new LongAccumulator
     * private val _updatedBlockStatuses = new CollectionAccumulator[(BlockId, BlockStatus)]
     */
    val metrics = taskEnd.taskMetrics
    // taskMetrics is @Nullable, so the metric sections are skipped when it is missing
    if (metrics != null) {
      val taskMetricsMap = scala.collection.mutable.HashMap(
        "executorDeserializeTime" -> metrics.executorDeserializeTime, // time spent deserializing the task on the executor
        "executorDeserializeCpuTime" -> metrics.executorDeserializeCpuTime, // CPU time spent deserializing the task
        "executorRunTime" -> metrics.executorRunTime, // time the executor spent running the task
        "resultSize" -> metrics.resultSize, // size of the task result
        "jvmGCTime" -> metrics.jvmGCTime, // JVM garbage-collection time while the task ran
        "resultSerializationTime" -> metrics.resultSerializationTime, // time spent serializing the task result
        "memoryBytesSpilled" -> metrics.memoryBytesSpilled, // bytes of in-memory data spilled
        "diskBytesSpilled" -> metrics.diskBytesSpilled, // bytes spilled to disk
        "peakExecutionMemory" -> metrics.peakExecutionMemory // peak execution memory used by the task
      )
      val jedisKey = s"taskMetrics_${currentTimestamp}"
      // write the metrics map (the original wrote the key itself by mistake)
      jedis.set(jedisKey, Json(DefaultFormats).write(taskMetricsMap))
      // expire takes seconds; pexpire would treat 3600 as milliseconds
      jedis.expire(jedisKey, 3600)

      //====================== shuffle metrics ================================
      val shuffleReadMetrics = metrics.shuffleReadMetrics
      val shuffleWriteMetrics = metrics.shuffleWriteMetrics

      // shuffleWriteMetrics: metrics of the shuffle write side
      /**
       * private[executor] val _bytesWritten = new LongAccumulator
       * private[executor] val _recordsWritten = new LongAccumulator
       * private[executor] val _writeTime = new LongAccumulator
       */
      // shuffleReadMetrics: metrics of the shuffle read side
      /**
       * private[executor] val _remoteBlocksFetched = new LongAccumulator
       * private[executor] val _localBlocksFetched = new LongAccumulator
       * private[executor] val _remoteBytesRead = new LongAccumulator
       * private[executor] val _localBytesRead = new LongAccumulator
       * private[executor] val _fetchWaitTime = new LongAccumulator
       * private[executor] val _recordsRead = new LongAccumulator
       */
      val shuffleMap = scala.collection.mutable.HashMap(
        "remoteBlocksFetched" -> shuffleReadMetrics.remoteBlocksFetched, // blocks fetched from remote executors
        "localBlocksFetched" -> shuffleReadMetrics.localBlocksFetched, // blocks fetched locally
        "remoteBytesRead" -> shuffleReadMetrics.remoteBytesRead, // bytes read from remote executors
        "localBytesRead" -> shuffleReadMetrics.localBytesRead, // bytes read locally
        "fetchWaitTime" -> shuffleReadMetrics.fetchWaitTime, // time spent waiting for fetches
        "recordsRead" -> shuffleReadMetrics.recordsRead, // total records read by the shuffle
        "bytesWritten" -> shuffleWriteMetrics.bytesWritten, // total bytes written by the shuffle
        "recordsWritten" -> shuffleWriteMetrics.recordsWritten, // total records written by the shuffle
        "writeTime" -> shuffleWriteMetrics.writeTime // time spent writing shuffle data
      )
      val shuffleKey = s"shuffleKey${currentTimestamp}"
      jedis.set(shuffleKey, Json(DefaultFormats).write(shuffleMap))
      jedis.expire(shuffleKey, 3600)

      //================= input and output ========================
      val inputMetrics = metrics.inputMetrics
      val outputMetrics = metrics.outputMetrics
      val input_output = scala.collection.mutable.HashMap(
        "bytesRead" -> inputMetrics.bytesRead, // bytes read
        "recordsRead" -> inputMetrics.recordsRead, // records read
        "bytesWritten" -> outputMetrics.bytesWritten, // bytes written
        "recordsWritten" -> outputMetrics.recordsWritten // records written
      )
      val input_outputKey = s"input_outputKey${currentTimestamp}"
      jedis.set(input_outputKey, Json(DefaultFormats).write(input_output))
      jedis.expire(input_outputKey, 3600)
    }

    //#################### taskInfo #######
    val taskInfo: TaskInfo = taskEnd.taskInfo
    val taskInfoMap = scala.collection.mutable.HashMap(
      "taskId" -> taskInfo.taskId,
      "host" -> taskInfo.host,
      "speculative" -> taskInfo.speculative, // whether this was a speculative attempt
      "failed" -> taskInfo.failed,
      "killed" -> taskInfo.killed,
      "running" -> taskInfo.running
    )
    val taskInfoKey = s"taskInfo${currentTimestamp}"
    jedis.set(taskInfoKey, Json(DefaultFormats).write(taskInfoMap))
    jedis.expire(taskInfoKey, 3600)
  }
}
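The listener above keys every Redis entry on System.currentTimeMillis() alone, so two tasks that finish in the same millisecond overwrite each other's entries. One way to avoid that, sketched here as an assumption rather than the author's method, is to build the key from identifiers that SparkListenerTaskEnd and TaskInfo already carry. The `MetricKeys` object and its key layout are hypothetical.

```scala
// Hypothetical helper: builds Redis keys that are unique per task attempt.
object MetricKeys {

  /**
   * @param prefix         metric family, e.g. "taskMetrics" or "shuffle"
   * @param appId          application id, e.g. the value of sc.applicationId
   * @param stageId        SparkListenerTaskEnd.stageId
   * @param stageAttemptId SparkListenerTaskEnd.stageAttemptId
   * @param taskId         TaskInfo.taskId
   */
  def taskKey(prefix: String, appId: String, stageId: Int, stageAttemptId: Int, taskId: Long): String =
    s"${prefix}:${appId}:${stageId}.${stageAttemptId}:${taskId}"
}
```

For example, `MetricKeys.taskKey("taskMetrics", "local-1581865176069", 3, 0, 42L)` yields `taskMetrics:local-1581865176069:3.0:42`, which also lets a dashboard look up all entries of one application by key prefix.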

(6) Testing the program

Register the listener class with sparkContext.addSparkListener:

sc.addSparkListener(new MySparkAppListener())

Then run a simple test with a word count job.
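A word count test of this kind could look like the sketch below. It is not the author's test program: to stay self-contained it registers a hypothetical `TaskCounter` listener that only counts task-end events, where the real setup would register MySparkAppListener. The application name and the `local[2]` master are assumptions.

```scala
import java.util.concurrent.atomic.AtomicInteger

import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.scheduler.{SparkListener, SparkListenerTaskEnd}

object ListenerWordCount {

  // Stand-in for MySparkAppListener: counts finished tasks instead of writing to Redis.
  class TaskCounter extends SparkListener {
    val finishedTasks = new AtomicInteger(0)

    override def onTaskEnd(taskEnd: SparkListenerTaskEnd): Unit = {
      finishedTasks.incrementAndGet()
    }
  }

  // Runs a small word count locally and returns how many task-end events were seen.
  def run(): Int = {
    val conf = new SparkConf().setAppName("listener-wordcount").setMaster("local[2]")
    val sc = new SparkContext(conf)
    val counter = new TaskCounter
    sc.addSparkListener(counter)
    try {
      sc.parallelize(Seq("spark listener test", "spark metrics test"))
        .flatMap(_.split(" "))
        .map(word => (word, 1))
        .reduceByKey(_ + _)
        .collect()
        .foreach { case (word, n) => println(s"$word -> $n") }
    } finally {
      // stop() waits for queued listener events, so the counter is read afterwards
      sc.stop()
    }
    counter.finishedTasks.get()
  }

  def main(args: Array[String]): Unit =
    println(s"tasks finished: ${run()}")
}
```

A listener can also be registered without touching the job code by setting the `spark.extraListeners` configuration to its fully qualified class name; in that case the class needs a zero-argument constructor or one that takes a SparkConf.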

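II. Monitoring Spark Streaming

1. StreamingListener is the listener trait for streaming jobs. Its callbacks cover receiver start, error, and stop, as well as batch submission, start, and completion; it works on the same principle as SparkListener above.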

trait StreamingListener {

/** Called when a receiver has been started */

def onReceiverStarted(receiverStarted: StreamingListenerReceiverStarted) { }

/** Called when a receiver has reported an error */

def onReceiverError(receiverError: StreamingListenerReceiverError) { }

/** Called when a receiver has been stopped */

def onReceiverStopped(receiverStopped: StreamingListenerReceiverStopped) { }

/** Called when a batch of jobs has been submitted for processing. */

def onBatchSubmitted(batchSubmitted: StreamingListenerBatchSubmitted) { }

/** Called when processing of a batch of jobs has started. */

def onBatchStarted(batchStarted: StreamingListenerBatchStarted) { }

/** Called when processing of a batch of jobs has completed. */

def onBatchCompleted(batchCompleted: StreamingListenerBatchCompleted) { }

/** Called when processing of a job of a batch has started. */

def onOutputOperationStarted(

outputOperationStarted: StreamingListenerOutputOperationStarted) { }

/** Called when processing of a job of a batch has completed. */

def onOutputOperationCompleted(

outputOperationCompleted: StreamingListenerOutputOperationCompleted) { }

}

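2. Main callbacks and their uses

1. onReceiverError

Monitor receiver errors and send an email alert.

2. onBatchCompleted: called when a batch completes

(1) Commit Spark Streaming offsets here: save the offsets to the store only after the batch has finished. (This cannot protect against the program being interrupted after the results are stored but before the offsets are committed; in that case the batch is processed again after a restart.)

(2) If the batch processing time exceeds the configured batch interval, the program is falling behind; send an email alert.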
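The two callbacks could be wired up roughly as follows. This is a sketch under assumptions, not the author's implementation: `BatchAlertListener` is a hypothetical name, and the `alert` function stands in for whatever actually sends the email. The lag check compares `BatchInfo.processingDelay` with the batch interval passed in by the caller.

```scala
import org.apache.spark.streaming.scheduler.{StreamingListener, StreamingListenerBatchCompleted, StreamingListenerReceiverError}

// Hypothetical listener: `alert` is supplied by the caller, e.g. an email sender.
class BatchAlertListener(batchIntervalMs: Long, alert: String => Unit) extends StreamingListener {

  override def onReceiverError(receiverError: StreamingListenerReceiverError): Unit = {
    val info = receiverError.receiverInfo
    alert(s"receiver ${info.name} reported an error: ${info.lastErrorMessage}")
  }

  override def onBatchCompleted(batchCompleted: StreamingListenerBatchCompleted): Unit = {
    val info = batchCompleted.batchInfo
    if (BatchAlertListener.isLagging(info.processingDelay, batchIntervalMs)) {
      alert(s"batch ${info.batchTime} took ${info.processingDelay.getOrElse(-1L)} ms, " +
        s"batch interval is $batchIntervalMs ms")
    }
    // The offsets of this batch would be saved here, now that the batch has finished.
  }
}

object BatchAlertListener {
  // A batch lags when its processing time is known and exceeds the batch interval.
  def isLagging(processingDelayMs: Option[Long], batchIntervalMs: Long): Boolean =
    processingDelayMs.exists(_ > batchIntervalMs)
}
```

With a StreamingContext named `ssc` and a 5-second batch interval, registration would be `ssc.addStreamingListener(new BatchAlertListener(5000L, msg => println(msg)))`. Keeping the comparison in a plain function makes the threshold logic testable without a running stream.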

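III. Parsing the JSON returned by the Spark and YARN web endpoints to obtain metrics

1. Start a Spark program locally

Open http://localhost:4040/metrics/json/ to get a JSON document; parsing its gauges object yields the metric values listed under it.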

{

"version": "3.0.0",

"gauges": {

"local-1581865176069.driver.BlockManager.disk.diskSpaceUsed_MB": {

"value": 0

},

"local-1581865176069.driver.BlockManager.memory.maxMem_MB": {

"value": 1989

},

"local-1581865176069.driver.BlockManager.memory.memUsed_MB": {

"value": 0

},

"local-1581865176069.driver.BlockManager.memory.remainingMem_MB": {

"value": 1989

},

"local-1581865176069.driver.DAGScheduler.job.activeJobs": {

"value": 0

},

"local-1581865176069.driver.DAGScheduler.job.allJobs": {

"value": 0

},

"local-1581865176069.driver.DAGScheduler.stage.failedStages": {

"value": 0

},

"local-1581865176069.driver.DAGScheduler.stage.runningStages": {

"value": 0

},

"local-1581865176069.driver.DAGScheduler.stage.waitingStages": {

"value": 0

}

},

"counters": {

"local-1581865176069.driver.HiveExternalCatalog.fileCacheHits": {

"count": 0

},

"local-1581865176069.driver.HiveExternalCatalog.filesDiscovered": {

"count": 0

},

"local-1581865176069.driver.HiveExternalCatalog.hiveClientCalls": {

"count": 0

},

"local-1581865176069.driver.HiveExternalCatalog.parallelListingJobCount": {

"count": 0

},

"local-1581865176069.driver.HiveExternalCatalog.partitionsFetched": {

"count": 0

}

},

"histograms": {

"local-1581865176069.driver.CodeGenerator.compilationTime": {

"count": 0,

"max": 0,

"mean": 0,

"min": 0,

"p50": 0,

"p75": 0,

"p95": 0,

"p98": 0,

"p99": 0,

"p999": 0,

"stddev": 0

},

"local-1581865176069.driver.CodeGenerator.generatedClassSize": {

"count": 0,

"max": 0,

"mean": 0,

"min": 0,

"p50": 0,

"p75": 0,

"p95": 0,

"p98": 0,

"p99": 0,

"p999": 0,

"stddev": 0

},

"local-1581865176069.driver.CodeGenerator.generatedMethodSize": {

"count": 0,

"max": 0,

"mean": 0,

"min": 0,

"p50": 0,

"p75": 0,

"p95": 0,

"p98": 0,

"p99": 0,

"p999": 0,

"stddev": 0

},

"local-1581865176069.driver.CodeGenerator.sourceCodeSize": {

"count": 0,

"max": 0,

"mean": 0,

"min": 0,

"p50": 0,

"p75": 0,

"p95": 0,

"p98": 0,

"p99": 0,

"p999": 0,

"stddev": 0

}

},

"meters": { },

"timers": {

"local-1581865176069.driver.DAGScheduler.messageProcessingTime": {

"count": 0,

"max": 0,

"mean": 0,

"min": 0,

"p50": 0,

"p75": 0,

"p95": 0,

"p98": 0,

"p99": 0,

"p999": 0,

"stddev": 0,

"m15_rate": 0,

"m1_rate": 0,

"m5_rate": 0,

"mean_rate": 0,

"duration_units": "milliseconds",

"rate_units": "calls/second"

}

}

}

Parse the JSON to extract the metrics:

val diskSpaceUsed_MB = gauges.getJSONObject(applicationId + ".driver.BlockManager.disk.diskSpaceUsed_MB").getLong("value") // disk space used (MB)
val maxMem_MB = gauges.getJSONObject(applicationId + ".driver.BlockManager.memory.maxMem_MB").getLong("value") // maximum memory available (MB)
val memUsed_MB = gauges.getJSONObject(applicationId + ".driver.BlockManager.memory.memUsed_MB").getLong("value") // memory in use (MB)
val remainingMem_MB = gauges.getJSONObject(applicationId + ".driver.BlockManager.memory.remainingMem_MB").getLong("value") // remaining memory (MB)

//##################### jobs and stages ###################################
val activeJobs = gauges.getJSONObject(applicationId + ".driver.DAGScheduler.job.activeJobs").getLong("value") // jobs currently running
val allJobs = gauges.getJSONObject(applicationId + ".driver.DAGScheduler.job.allJobs").getLong("value") // total number of jobs
val failedStages = gauges.getJSONObject(applicationId + ".driver.DAGScheduler.stage.failedStages").getLong("value") // number of failed stages
val runningStages = gauges.getJSONObject(applicationId + ".driver.DAGScheduler.stage.runningStages").getLong("value") // stages currently running
val waitingStages = gauges.getJSONObject(applicationId + ".driver.DAGScheduler.stage.waitingStages").getLong("value") // stages waiting to run

//##################### StreamingMetrics ###################################
// the key segment before "StreamingMetrics" is the application name ("query" in this job)
val lastCompletedBatch_processingDelay = gauges.getJSONObject(applicationId + ".driver.query.StreamingMetrics.streaming.lastCompletedBatch_processingDelay").getLong("value") // processing delay of the last completed batch
val lastCompletedBatch_processingEndTime = gauges.getJSONObject(applicationId + ".driver.query.StreamingMetrics.streaming.lastCompletedBatch_processingEndTime").getLong("value") // time the last completed batch finished processing (milliseconds)
val lastCompletedBatch_processingStartTime = gauges.getJSONObject(applicationId + ".driver.query.StreamingMetrics.streaming.lastCompletedBatch_processingStartTime").getLong("value") // time the last completed batch started processing
// processing time of the last completed batch
val lastCompletedBatch_processingTime = (lastCompletedBatch_processingEndTime - lastCompletedBatch_processingStartTime)
val lastReceivedBatch_records = gauges.getJSONObject(applicationId + ".driver.query.StreamingMetrics.streaming.lastReceivedBatch_records").getLong("value") // records in the last received batch
val runningBatches = gauges.getJSONObject(applicationId + ".driver.query.StreamingMetrics.streaming.runningBatches").getLong("value") // batches currently running
val totalCompletedBatches = gauges.getJSONObject(applicationId + ".driver.query.StreamingMetrics.streaming.totalCompletedBatches").getLong("value") // number of completed batches
val totalProcessedRecords = gauges.getJSONObject(applicationId + ".driver.query.StreamingMetrics.streaming.totalProcessedRecords").getLong("value") // total records processed
val totalReceivedRecords = gauges.getJSONObject(applicationId + ".driver.query.StreamingMetrics.streaming.totalReceivedRecords").getLong("value") // total records received
val unprocessedBatches = gauges.getJSONObject(applicationId + ".driver.query.StreamingMetrics.streaming.unprocessedBatches").getLong("value") // batches not yet processed
val waitingBatches = gauges.getJSONObject(applicationId + ".driver.query.StreamingMetrics.streaming.waitingBatches").getLong("value") // batches waiting to be processed
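The lines above assume that `gauges` and `applicationId` are already in scope. A minimal sketch of how they might be obtained is shown below; it assumes the fastjson library (suggested by the `getJSONObject` calls, but not confirmed by the article) and a driver UI on localhost:4040. The `MetricsJson` object and its method names are hypothetical. It also wraps the lookup in an Option, because a gauge that is absent from the response (for example the streaming gauges in a batch job) would otherwise cause a NullPointerException.

```scala
import com.alibaba.fastjson.{JSON, JSONObject}

import scala.io.Source

object MetricsJson {

  // Reads the whole response body of the metrics endpoint as a string.
  def fetch(url: String): String = {
    val source = Source.fromURL(url, "UTF-8")
    try source.mkString finally source.close()
  }

  // Returns the gauge value, or None when the gauge is absent from the response.
  def gauge(gauges: JSONObject, name: String): Option[Long] =
    Option(gauges.getJSONObject(name)).map(_.getLongValue("value"))

  def main(args: Array[String]): Unit = {
    val root = JSON.parseObject(fetch("http://localhost:4040/metrics/json/"))
    val gauges = root.getJSONObject("gauges")
    // Application id copied from the sample response; at run time use sc.applicationId.
    val applicationId = "local-1581865176069"
    val memUsed = gauge(gauges, applicationId + ".driver.BlockManager.memory.memUsed_MB")
    println(s"memUsed_MB = ${memUsed.getOrElse("n/a")}")
  }
}
```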

2. Submitting Spark to YARN

val sparkDriverHost = sc.getConf.get("spark.org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter.param.PROXY_URI_BASES")

// the metrics page is at <cluster address>/proxy/<application id>/metrics/json

val url = s"${sparkDriverHost}/metrics/json"
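The string interpolation above assumes that the property holds exactly one proxy base without a trailing slash. As a hedged sketch, the hypothetical helper below normalizes the value first. It assumes the property may hold several comma-separated bases, which appears to be the case when ResourceManager high availability is enabled; the host names in the usage note are made up.

```scala
object YarnMetricsUrl {

  // Builds one metrics URL per proxy base; tolerates a trailing slash
  // and a comma-separated list of bases.
  def metricsUrls(proxyUriBases: String): Seq[String] =
    proxyUriBases.split(",").toSeq
      .map(_.trim)
      .filter(_.nonEmpty)
      .map(base => base.stripSuffix("/") + "/metrics/json")
}
```

For the value `http://rm1:8088/proxy/application_1_0001/` this returns a single URL ending in `/proxy/application_1_0001/metrics/json`; with two bases the caller can try each URL in turn until one responds.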

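3. Uses

1. The job-level fields (endTime, applicationUniqueName, applicationId, sourceCount, costTime, countPerMillis) can be shown in a table and used for pipeline statistics.

2. Disk and memory figures can be drawn as pie charts to monitor memory and disk usage.

3. Task run-time figures can be shown in a table to monitor jobs.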
