【NLP】Recurrent Neural Network and Language Models

0. Overview

What is language models?

A time series prediction problem.

It assigns a probility to a sequence of words,and the total prob of all the sequence equal one.

Many Natural Language Processing can be structured as (conditional) language modelling.

Such as Translation:

P(certain Chinese text | given English text)

Note that the Prob follows the Bayes Formula.

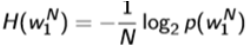

How to evaluate a Language Model?

Measured with cross entropy.

Three data sets:

1 Penn Treebank: www.fit.vutbr.cz/~imikolov/rnnlm/simple-examples.tgz

2 Billion Word Corpus: code.google.com/p/1-billion-word-language-modeling-benchmark/

3 WikiText datasets: Pointer Sentinel Mixture Models. Merity et al., arXiv 2016

|

Overview: Three approaches to build language models: Count based n-gram models: approximate the history of observed words with just the previous n words. Neural n-gram models: embed the same fixed n-gram history in a continues space and thus better capture correlations between histories. Recurrent Neural Networks: we drop the fixed n-gram history and compress the entire history in a fixed length vector, enabling long range correlations to be captured. |

1. N-Gram models:

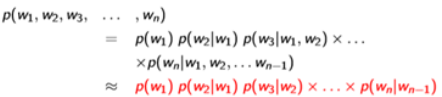

Assumption:

Only previous history matters.

Only k-1 words are included in history

Kth order Markov model

2-gram language model:

The conditioning context, wi−1, is called the history

Estimate Probabilities:

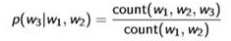

(For example: 3-gram)

(count w1,w2,w3 appearing in the corpus)

(count w1,w2,w3 appearing in the corpus)

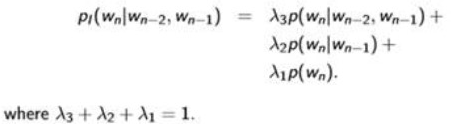

Interpolated Back-Off:

That is , sometimes some certain phrase don’t appear in the corpus so the Prob of them is zero. To avoid this situation, we use Interpolated Back-off. That is to say, Interpolate k-gram models(k = n-1、n-2…1) into the n-gram models.

A simpal approach:

Summary for n-gram:

Good: easy to train. Fast.

Bad: Large n-grams are sparse. Hard to capture long dependencies. Cannot capture correlations between similary word distributions. Cannot resolve the word morphological problem.(running – jumping)

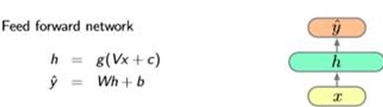

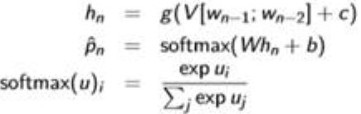

2. Neural N-Gram Language Models

Use A feed forward network like:

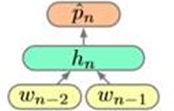

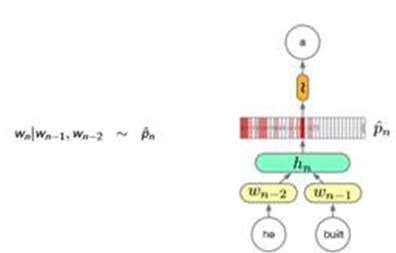

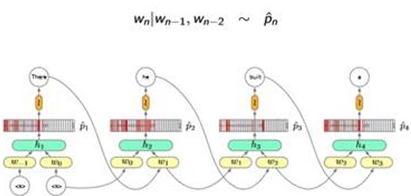

Trigram(3-gram) Neural Network Language Model for example:

Wi are hot-vectors. Pi are distributions. And shape is |V|(words in the vocabulary)

(a sampal:detail cal graph)

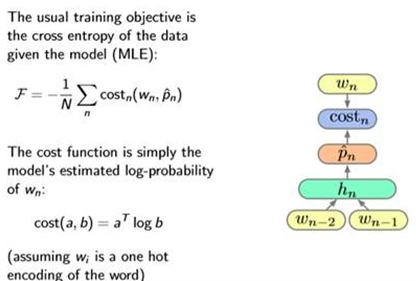

Define the loss:cross entopy:

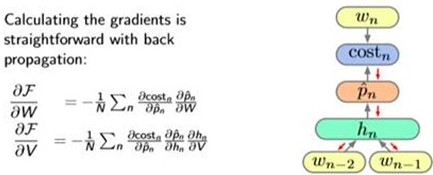

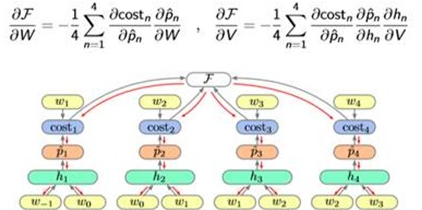

Training: use Gradient Descent

And a sampal of taining:

Comparsion with Count based n-gram LMs:

Good: Better performance on unseen n-grams But poorer on seen n-grams.(Sol: direct(linear) n-gram fertures). Use smaller memory than Counted based n-gram.

Bad: The number of parameters in the models scales with n-gram size. There is a limit on the longest dependencies that an be captured.

3. Recurrent Neural Network LM

That is to say, using a recurrent neural network to build our LM.

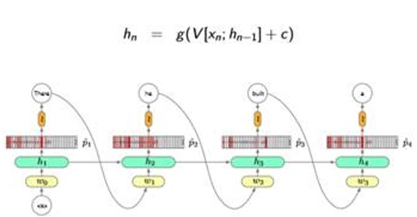

Model and Train:

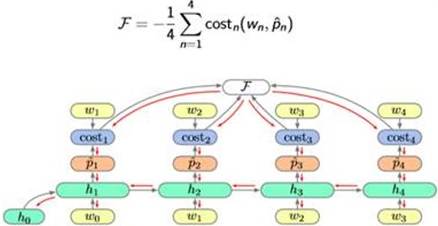

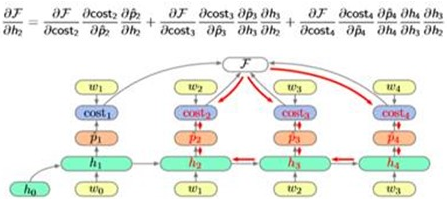

Algorithm: Back Propagation Through Time(BPTT)

Note:

Note that, the Gradient Descent depend heavily. So the improved algorithm is:

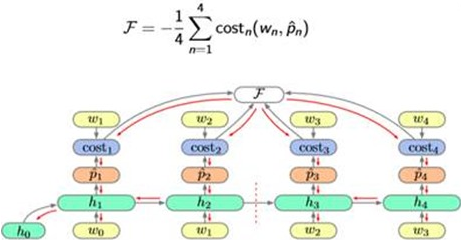

Algorithm: Truncated Back Propagation Through Time.(TBPTT)

So the Cal graph looks like this:

So the Training process and Gradient Descent:

Summary of the Recurrent NN LMs:

Good:

RNNs can represent unbounded dependencies, unlike models with a fixed n-gram order.

RNNs compress histories of words into a fixed size hidden vector.

The number of parameters does not grow with the length of dependencies captured, but they do grow with the amount of information stored in the hidden layer.

Bad:

RNNs are hard to learn and often will not discover long range dependencies present in the data(So we learn LSTM unit).

Increasing the size of the hidden layer, and thus memory, increases the computation and memory quadratically.

Mostly trained with Maximum Likelihood based objectives which do not encode the expected frequencies of words a priori.

Some blogs recommended:

|

Andrej Karpathy: The Unreasonable Effectiveness of Recurrent Neural Networks karpathy.github.io/2015/05/21/rnn-effectiveness/ Yoav Goldberg: The unreasonable effectiveness of Character-level Language Models nbviewer.jupyter.org/gist/yoavg/d76121dfde2618422139 Stephen Merity: Explaining and illustrating orthogonal initialization for recurrent neural networks. smerity.com/articles/2016/orthogonal_init.html |

【NLP】Recurrent Neural Network and Language Models的更多相关文章

- pytorch --Rnn语言模型(LSTM,BiLSTM) -- 《Recurrent neural network based language model》

论文通过实现RNN来完成了文本分类. 论文地址:88888888 模型结构图: 原理自行参考论文,code and comment: # -*- coding: utf-8 -*- # @time : ...

- Recurrent Neural Network系列1--RNN(循环神经网络)概述

作者:zhbzz2007 出处:http://www.cnblogs.com/zhbzz2007 欢迎转载,也请保留这段声明.谢谢! 本文翻译自 RECURRENT NEURAL NETWORKS T ...

- 【NLP】自然语言处理:词向量和语言模型

声明: 这是转载自LICSTAR博士的牛文,原文载于此:http://licstar.net/archives/328 这篇博客是我看了半年的论文后,自己对 Deep Learning 在 NLP 领 ...

- Recurrent Neural Network Language Modeling Toolkit代码学习

Recurrent Neural Network Language Modeling Toolkit 工具使用点击打开链接 本博客地址:http://blog.csdn.net/wangxingin ...

- 课程五(Sequence Models),第一 周(Recurrent Neural Networks) —— 1.Programming assignments:Building a recurrent neural network - step by step

Building your Recurrent Neural Network - Step by Step Welcome to Course 5's first assignment! In thi ...

- Recurrent Neural Network(循环神经网络)

Reference: Alex Graves的[Supervised Sequence Labelling with RecurrentNeural Networks] Alex是RNN最著名变种 ...

- Recurrent Neural Network系列2--利用Python,Theano实现RNN

作者:zhbzz2007 出处:http://www.cnblogs.com/zhbzz2007 欢迎转载,也请保留这段声明.谢谢! 本文翻译自 RECURRENT NEURAL NETWORKS T ...

- Recurrent Neural Network[survey]

0.引言 我们发现传统的(如前向网络等)非循环的NN都是假设样本之间无依赖关系(至少时间和顺序上是无依赖关系),而许多学习任务却都涉及到处理序列数据,如image captioning,speech ...

- (zhuan) Recurrent Neural Network

Recurrent Neural Network 2016年07月01日 Deep learning Deep learning 字数:24235 this blog from: http:/ ...

随机推荐

- 史上最全的Spring Boot配置文件详解

Spring Boot在工作中是用到的越来越广泛了,简单方便,有了它,效率提高不知道多少倍.Spring Boot配置文件对Spring Boot来说就是入门和基础,经常会用到,所以写下做个总结以便日 ...

- 三、xadmin----内置插件

1.Action Xadmin 默认启用了批量删除的事件,代码见xadmin-->plugins-->action.py DeleteSelectedAction 如果要为list列表添 ...

- Django的路由层

U RL配置(URLconf)就像Django 所支撑网站的目录.它的本质是URL与要为该URL调用的视图函数之间的映射表:你就是以这种方式告诉Django,对于客户端发来的某个URL调用哪一段逻辑代 ...

- Git push提交时报错Permission denied(publickey)...Please make sure you have the correct access rights and the repository exists.

一.git push origin master 时出错 错误信息为: Permission denied(publickey). fatal: Could not read from remote ...

- java web 常见异常及解决办法

javax.servlet.ServletException: javax/servlet/jsp/SkipPageException 重启tomcat, javax.servlet.ServletE ...

- VO和DO转换(二) BeanUtils

VO和DO转换(一) 工具汇总 VO和DO转换(二) BeanUtils VO和DO转换(三) Dozer VO和DO转换(四) MapStruct BeanUtils是Spring提供的,通常项目都 ...

- Linux 典型应用之常用命令

软件操作相关命令 软件包管理 (yum) 安装软件 yum install xxx(软件的名字) 如 yum install vim 卸载软件 yum remove xxx(软件的名字) 如 yum ...

- 【Python3练习题 017】 两个乒乓球队进行比赛,各出三人。甲队为a,b,c三人,乙队为x,y,z三人。已抽签决定比赛名单。有人向队员打听比赛的名单。a说他不和x比,c说他不和x,z比。请编程序找出三队赛手的名单。

import itertools for i in itertools.permutations('xyz'): if i[0] != 'x' and i[2] != 'x' and i[ ...

- vue 使用sass 和less

npm i sass-loader --save -dev(-D)

- mybatis二级缓存详解

1 二级缓存简介 二级缓存是在多个SqlSession在同一个Mapper文件中共享的缓存,它是Mapper级别的,其作用域是Mapper文件中的namespace,默认是不开启的.看如下图: 1. ...