4. Orthogonality

4.1 Orthogonal Vectors and Subspaces

Orthogonal vectors have \(v^Tw=0\), and \(||v||^2 + ||w||^2 = ||v+w||^2 = ||v-w||^2\).

Subspaces \(V\) and \(W\) are orthogonal when \(v^Tw = 0\) for every \(v\) in V and every \(w\) in W.

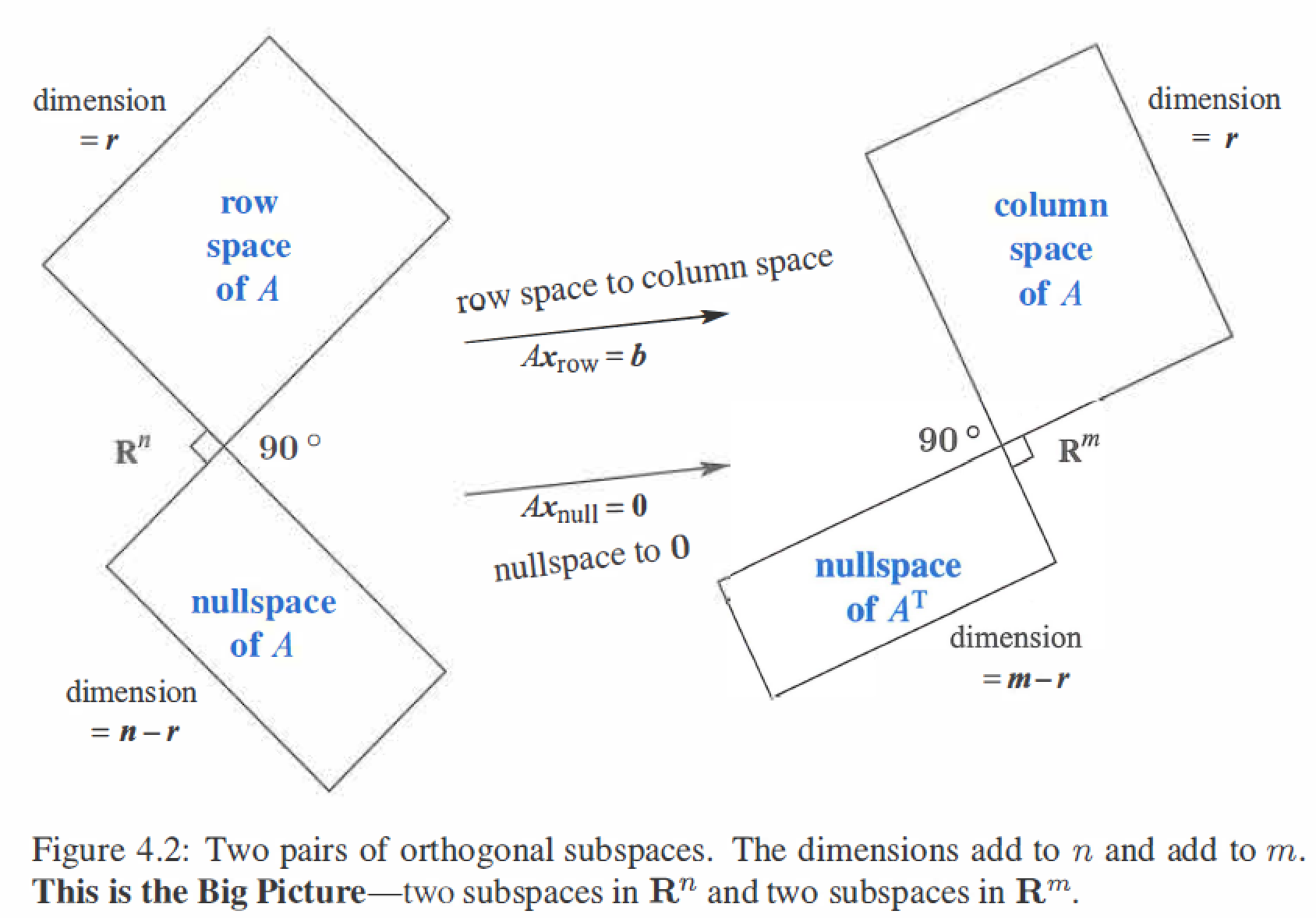

Relations among the four subspaces:

Every vector x in the nullspace is perpendicular to every row of A, because \(Ax=0\). The nullspace \(N(A)\) and the row space \(C(A^T)\) are orthogonal subspaces of \(R^n\): \(N(A) \bot C(A^T)\).

\[Ax = \left[ \begin{matrix} \text{row}_1 \\ \vdots \\ \text{row}_m \end{matrix} \right] x = \left[ \begin{matrix} 0 \\ \vdots \\ 0 \end{matrix} \right]\]

Every vector y in the nullspace of \(A^T\) is perpendicular to every column of A, because \(A^Ty=0\). The left nullspace \(N(A^T)\) and the column space \(C(A)\) are orthogonal subspaces of \(R^m\): \(N(A^T) \bot C(A)\).

\[A^Ty = \left[ \begin{matrix} (\text{column}_1)^T \\ \vdots \\ (\text{column}_n)^T \end{matrix} \right] y = \left[ \begin{matrix} 0 \\ \vdots \\ 0 \end{matrix} \right]\]

The nullspace \(N(A)\) and the row space \(C(A^T)\) are orthogonal complements in \(R^n\), with dimensions \((n-r) + r = n\). Similarly, \(N(A^T)\) and \(C(A)\) are orthogonal complements in \(R^m\), with dimensions \((m-r) + r = m\).
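A quick numerical check of these relations, written as a minimal sketch in plain Python. The rank-1 matrix and the nullspace and left-nullspace bases below are illustrative choices worked out by hand; they are not part of the notes.

```python
# Hypothetical rank-1 example: m = 2 rows, n = 3 columns, rank r = 1.
A = [[1, 2, 3],
     [2, 4, 6]]
m, n, r = 2, 3, 1

def dot(u, v):
    """Inner product u^T v of two equal-length vectors."""
    return sum(ui * vi for ui, vi in zip(u, v))

# Bases worked out by hand for this particular A.
null_basis = [[-2, 1, 0], [-3, 0, 1]]   # N(A): n - r = 2 vectors with Ax = 0
left_null_basis = [[2, -1]]             # N(A^T): m - r = 1 vector with A^T y = 0

columns = [list(col) for col in zip(*A)]

# N(A) is perpendicular to every row; N(A^T) is perpendicular to every column.
row_checks = [dot(row, x) for row in A for x in null_basis]
col_checks = [dot(col, y) for col in columns for y in left_null_basis]

print(row_checks)   # [0, 0, 0, 0]
print(col_checks)   # [0, 0, 0]
print(len(null_basis) + r == n, len(left_null_basis) + r == m)  # True True
```

The last line checks the dimension counts \((n-r)+r=n\) and \((m-r)+r=m\) for this example.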

4.2 Projection

When b is not in the column space C(A), Ax = b has no solution. Instead we find the projection p of b onto C(A), so that \(A\hat{x} = p\) is solvable.

Three Steps:

- Find \(\hat{x}\)

- Find p

- Find the projection matrix P

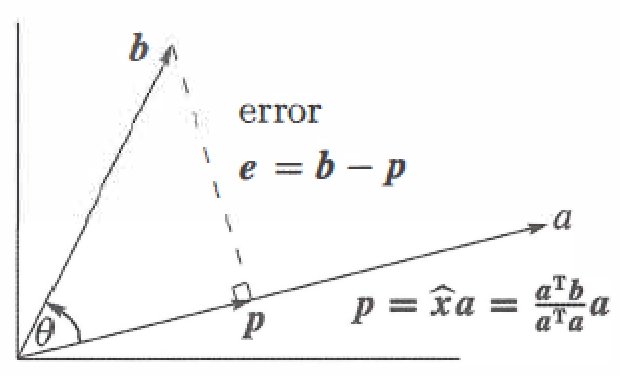

Projection Onto a Line

A line goes through the origin in the direction of \(a=(a_1, a_2,...,a_m)\).

Along that line, we want the point \(p\) closest to \(b=(b_1,b_2,...,b_m)\).

The key to projection is orthogonality: the line from b to p is perpendicular to the vector a.

Projecting b onto a with error \(e=b-\hat{x}a\), the condition \(a \bot e\) gives:

\[
\begin{gathered}
a^T(b-\hat{x}a) = 0 \\
\Downarrow \\
\hat{x} = \frac{a \cdot b}{a \cdot a} = \frac{a^T b}{a^T a} \\
\Downarrow \\
p = a \hat{x} = a\frac{a^T b}{a^T a} = Pb \\
\Downarrow \\
P = \frac{aa^T}{a^Ta}
\end{gathered}
\]

\(P=P^T\) (P is symmetric) and \(P^2=P\) (projecting a second time does not change p).
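The three steps for a line can be sketched with exact rational arithmetic. This is a minimal sketch: the helper names and the vectors \(a=(1,2,2)\), \(b=(1,1,1)\) are illustrative choices, not taken from the notes. It also confirms that \(e \bot a\), \(P=P^T\), and \(P^2=P\).

```python
from fractions import Fraction

def project_onto_line(a, b):
    """Project b onto the line through the origin in direction a.

    Returns (x_hat, p, P) with x_hat = a^T b / a^T a, p = a x_hat,
    and P = a a^T / a^T a, using exact Fraction arithmetic.
    """
    a = [Fraction(v) for v in a]
    b = [Fraction(v) for v in b]
    ata = sum(ai * ai for ai in a)                  # a^T a
    atb = sum(ai * bi for ai, bi in zip(a, b))      # a^T b
    x_hat = atb / ata
    p = [x_hat * ai for ai in a]
    P = [[ai * aj / ata for aj in a] for ai in a]
    return x_hat, p, P

def matmul(X, Y):
    """Product of two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

a, b = [1, 2, 2], [1, 1, 1]          # illustrative vectors
x_hat, p, P = project_onto_line(a, b)
e = [bi - pi for bi, pi in zip(b, p)]

print(x_hat)                                    # 5/9
print(sum(ai * ei for ai, ei in zip(a, e)))     # 0, so e is perpendicular to a
print(P == [list(col) for col in zip(*P)])      # True: P is symmetric
print(matmul(P, P) == P)                        # True: P^2 = P
```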

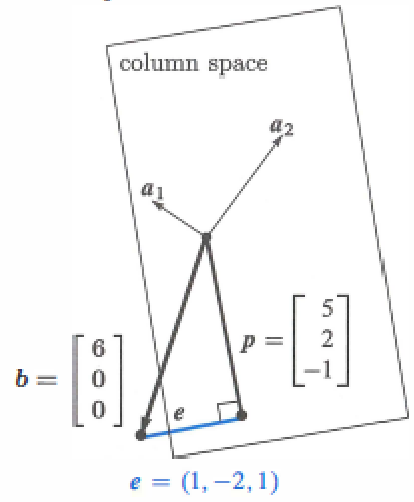

Projection Onto a Subspace

Start with n independent vectors \(a_1,...,a_n\) in \(R^m\), the columns of \(A\). Find the combination \(p=\hat{x}_1a_1 + \hat{x}_2a_2 +...+\hat{x}_na_n = A\hat{x}\) closest to a given vector b.

The error \(e = b - A\hat{x}\) must be perpendicular to every column \(a_1, ..., a_n\):

\[
\begin{gathered}
\left[ \begin{matrix} a_1^T \\ \vdots \\ a_n^T \end{matrix} \right] (b-A\hat{x}) = \left[ \begin{matrix} 0 \\ \vdots \\ 0 \end{matrix} \right] \\
\Downarrow \\
A^T(b-A\hat{x}) = 0 \quad \text{or} \quad A^TA\hat{x} = A^Tb \\
\Downarrow \ A^TA \text{ invertible} \\
p=A\hat{x} = A(A^TA)^{-1}A^Tb \\
\Downarrow \\
P=A(A^TA)^{-1}A^T
\end{gathered}
\]

\(P=P^T\) (P is symmetric) and \(P^2=P\), because the factor \((A^TA)^{-1}A^TA\) in the middle of \(P^2\) equals \(I\).
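A small exact implementation of \(\hat{x} = (A^TA)^{-1}A^Tb\), \(p = A\hat{x}\), and \(P = A(A^TA)^{-1}A^T\) in plain Python. This is a sketch under stated assumptions: the 3 by 2 matrix and \(b=(6,0,0)\) are illustrative data, and the Gauss-Jordan `inverse` helper is our own. It assumes \(A^TA\) is invertible and is meant for small examples, not for production numerics.

```python
from fractions import Fraction

def transpose(M):
    """Transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*M)]

def matmul(X, Y):
    """Product of two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def inverse(M):
    """Exact Gauss-Jordan inverse of a square matrix (assumed invertible)."""
    n = len(M)
    aug = [[Fraction(v) for v in row] + [Fraction(int(i == j)) for j in range(n)]
           for i, row in enumerate(M)]
    for col in range(n):
        # Pick a row with a nonzero pivot; StopIteration means M is singular.
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        piv = aug[col][col]
        aug[col] = [v / piv for v in aug[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [vr - factor * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

# Illustrative data: project b onto the plane spanned by the columns of A.
A = [[1, 0], [1, 1], [1, 2]]
b = [[6], [0], [0]]                       # b as a 3 x 1 column

At = transpose(A)
AtA_inv = inverse(matmul(At, A))          # (A^T A)^{-1}
x_hat = matmul(AtA_inv, matmul(At, b))    # solves A^T A x_hat = A^T b
p = matmul(A, x_hat)                      # p = A x_hat
P = matmul(matmul(A, AtA_inv), At)        # P = A (A^T A)^{-1} A^T
e = [[bi[0] - pi[0]] for bi, pi in zip(b, p)]

print([str(v[0]) for v in x_hat])   # ['5', '-3']
print([str(v[0]) for v in p])       # ['5', '2', '-1']
print(matmul(At, e) == [[0], [0]])  # True: e is perpendicular to C(A)
print(P == transpose(P), matmul(P, P) == P)  # True True
```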

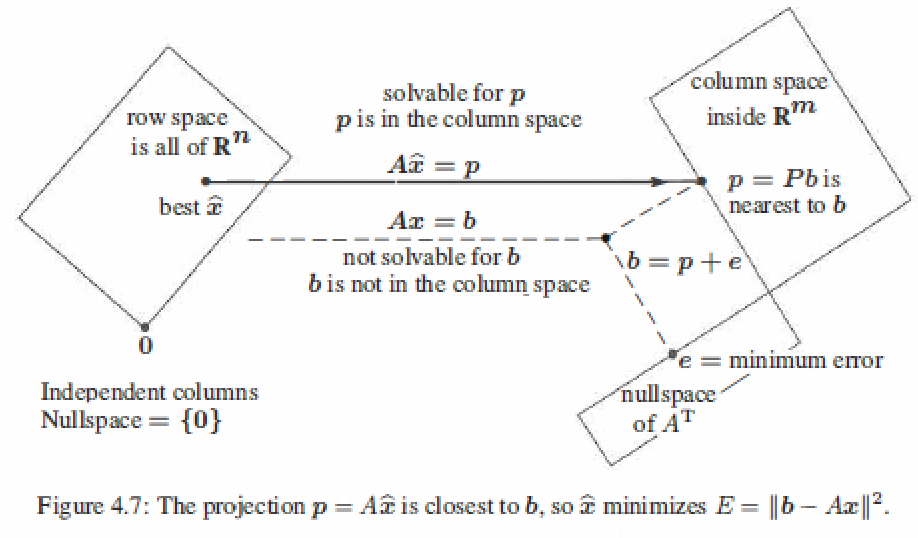

4.3 Least Squares Approximations

When b is outside the column space of A, \(Ax=b\) has no solution. Instead we make the length of the error \(e=b-Ax\) as small as possible; the minimizing \(\hat{x}\) is a least squares solution.

If A has independent columns, then \(A^TA\) is invertible.

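Proof: suppose \(A^TAx = 0\); we show that \(x = 0\).

\[
\begin{gathered}
A^TAx = 0 \\
\Downarrow \\
x^TA^TAx = 0 \\
\Downarrow \\
(Ax)^T(Ax) = ||Ax||^2 = 0 \\
\Downarrow \\
Ax = 0 \\
\Downarrow \ \text{the columns of } A \text{ are independent} \\
x = 0
\end{gathered}
\]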

So \(N(A^TA)\) contains only the zero vector, and the square matrix \(A^TA\) is invertible.
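A small contrast in plain Python. It compares the example matrix used below with a hypothetical matrix whose second column is twice its first. For two columns, \(A^TA\) is invertible exactly when its 2 by 2 determinant is nonzero.

```python
def gram(A):
    """Return A^T A for a matrix given as a list of rows."""
    cols = list(zip(*A))
    return [[sum(ci * cj for ci, cj in zip(u, v)) for v in cols] for u in cols]

def det2(M):
    """Determinant of a 2 x 2 matrix."""
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]

independent = [[1, 1], [1, 2], [1, 3]]   # columns (1,1,1) and (1,2,3)
dependent = [[1, 2], [1, 2], [1, 2]]     # hypothetical: column 2 = 2 * column 1

print(gram(independent), det2(gram(independent)))  # [[3, 6], [6, 14]] 6
print(gram(dependent), det2(gram(dependent)))      # [[3, 6], [6, 12]] 0
```

In the dependent case, \(x=(2,-1)\) gives \(Ax=0\), so \(A^TAx=0\) has a nonzero solution and \(A^TA\) is singular.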

Linear algebra method (projection method)

Solving \(A^TA\hat{x} = A^Tb\) gives the projection \(p=A\hat{x}\) of b onto the column space of A.

\[
\begin{gathered}
b = p+e \\
\Downarrow \\
A\hat{x} = p \quad (\text{solvable}) \\
\Downarrow \\
\hat{x} = (A^TA)^{-1}A^Tb
\end{gathered}
\]

example: \(A = \left[ \begin{matrix} 1&1 \\ 1&2 \\ 1&3 \end{matrix} \right], x= \left[ \begin{matrix} C \\ D \end{matrix} \right], b=\left[ \begin{matrix} 1 \\ 2 \\ 2 \end{matrix} \right], Ax=b\) is unsolvable.

\[
\begin{gathered}
A^TA = \left[ \begin{matrix} 1&1&1 \\ 1&2&3 \end{matrix} \right] \left[ \begin{matrix} 1&1 \\ 1&2 \\ 1&3 \end{matrix} \right] = \left[ \begin{matrix} 3&6 \\ 6&14 \end{matrix} \right], \quad
A^Tb = \left[ \begin{matrix} 1&1&1 \\ 1&2&3 \end{matrix} \right] \left[ \begin{matrix} 1 \\ 2 \\ 2 \end{matrix} \right] = \left[ \begin{matrix} 5 \\ 11 \end{matrix} \right] \\
\Downarrow \\
\left[ \begin{matrix} 3&6 \\ 6&14 \end{matrix} \right] \left[ \begin{matrix} C \\ D \end{matrix} \right] = \left[ \begin{matrix} 5 \\ 11 \end{matrix} \right] \\
\Downarrow \\
C = 2/3, \quad D = 1/2 \\
\Downarrow \\
\hat{x} = \left[ \begin{matrix} 2/3 \\ 1/2 \end{matrix} \right]
\end{gathered}
\]
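The same computation in exact arithmetic, as a sketch. It builds \(A^TA\) and \(A^Tb\) from the columns, then solves the 2 by 2 normal equations with Cramer's rule. Cramer's rule is used here only because the system is tiny; it is not a general-purpose method.

```python
from fractions import Fraction

A = [[1, 1], [1, 2], [1, 3]]
b = [1, 2, 2]

# Normal equations A^T A x_hat = A^T b, built from the columns of A.
cols = list(zip(*A))
AtA = [[sum(u_i * v_i for u_i, v_i in zip(u, v)) for v in cols] for u in cols]
Atb = [sum(u_i * b_i for u_i, b_i in zip(u, b)) for u in cols]

# Solve the 2 x 2 system exactly with Cramer's rule.
det = AtA[0][0] * AtA[1][1] - AtA[0][1] * AtA[1][0]
C = Fraction(Atb[0] * AtA[1][1] - AtA[0][1] * Atb[1], det)
D = Fraction(AtA[0][0] * Atb[1] - Atb[0] * AtA[1][0], det)

# Projection p = A x_hat and error e = b - p.
p = [C * row[0] + D * row[1] for row in A]
e = [bi - pi for bi, pi in zip(b, p)]

print(AtA, Atb)  # [[3, 6], [6, 14]] [5, 11]
print(C, D)      # 2/3 1/2
print([sum(u_i * e_i for u_i, e_i in zip(u, e)) == 0 for u in cols])  # [True, True]
```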

Calculus method

The least squares solution \(\hat{x}\) makes the sum of squared errors \(E = ||Ax - b||^2\) as small as possible, so the partial derivatives of E are zero at \(\hat{x}\).

example: \(A = \left[ \begin{matrix} 1&1 \\ 1&2 \\ 1&3 \end{matrix} \right], x= \left[ \begin{matrix} C \\ D \end{matrix} \right], b=\left[ \begin{matrix} 1 \\ 2 \\ 2 \end{matrix} \right], Ax=b\) is unsolvable.

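\[
\begin{gathered}
E = (C + D - 1)^2 + (C + 2D - 2)^2 + (C + 3D - 2)^2 \\
\Downarrow \\
\partial E / \partial C = 2[(C + D - 1) + (C + 2D - 2) + (C + 3D - 2)] = 0 \ \Rightarrow \ 3C + 6D = 5 \\
\partial E / \partial D = 2[(C + D - 1) + 2(C + 2D - 2) + 3(C + 3D - 2)] = 0 \ \Rightarrow \ 6C + 14D = 11 \\
\Downarrow \\
C = 2/3, \quad D = 1/2
\end{gathered}
\]

These two equations are exactly the normal equations \(A^TA\hat{x} = A^Tb\) from the projection method.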
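A numerical check of the calculus method, as a minimal sketch in exact arithmetic. `E` and `grad` are illustrative helper names, and the gradient formula is the hand-derived one. The check confirms that both partial derivatives vanish at \((C, D) = (2/3, 1/2)\) and that stepping away increases E.

```python
from fractions import Fraction

# The example as data: row i of A is (1, t_i), with targets b_i.
t = [1, 2, 3]
b = [1, 2, 2]

def E(C, D):
    """Sum of squared errors ||Ax - b||^2 for x = (C, D)."""
    return sum((C + D * ti - bi) ** 2 for ti, bi in zip(t, b))

def grad(C, D):
    """Partial derivatives (dE/dC, dE/dD), differentiated by hand."""
    r = [C + D * ti - bi for ti, bi in zip(t, b)]       # residuals of Ax - b
    return 2 * sum(r), 2 * sum(ri * ti for ri, ti in zip(r, t))

C, D = Fraction(2, 3), Fraction(1, 2)
print(grad(C, D) == (0, 0))   # True: both partial derivatives vanish
print(E(C, D))                # 1/6, the smallest possible E

# Stepping away from (C, D) along either axis increases E.
h = Fraction(1, 10)
print(all(E(C + dc, D + dd) > E(C, D)
          for dc, dd in [(h, 0), (-h, 0), (0, h), (0, -h)]))  # True
```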

The Big Picture for Least Squares

4.4 Orthonormal Bases and Gram-Schmidt

- The columns \(q_1,q_2,...,q_n\) are orthonormal if \(q_i^Tq_j = \begin{cases} 0 & \text{for } i \neq j \\ 1 & \text{for } i = j \end{cases}\); in matrix form, \(Q^TQ=I\).

- If Q is also square, then \(QQ^T=I\) and \(Q^T=Q^{-1}\); Q is called an orthogonal matrix.

- The least squares solution to \(Qx = b\) is \(\hat{x} = Q^Tb\). The projection of b is \(p=QQ^Tb = Pb\).

Projection Using Orthonormal Bases: Q Replaces A

Orthogonal matrices are excellent and stable for computations.

Suppose the basis vectors are orthonormal, so they form the columns of Q. Then \(A^TA\) becomes \(Q^TQ = I\), so \(\hat{x} = Q^Tb\) and \(p=Q\hat{x}=QQ^Tb\).

\[
p = \left[ \begin{matrix} |&&| \\ q_1&\cdots&q_n \\ |&&| \end{matrix} \right] \left[ \begin{matrix} q_1^Tb \\ \vdots \\ q_n^Tb \end{matrix} \right] = q_1(q_1^Tb) + \cdots + q_n(q_n^Tb)
\]
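With orthonormal columns, no linear system needs to be solved: \(\hat{x} = Q^Tb\), and p is a sum of one-dimensional projections. This is a sketch. The orthonormal pair \(q_1 = \frac{1}{3}(1,2,2)\), \(q_2 = \frac{1}{3}(2,1,-2)\) and the vector \(b=(1,1,1)\) are illustrative choices, picked so that Fraction arithmetic stays exact.

```python
from fractions import Fraction

third = Fraction(1, 3)
q1 = [third * v for v in (1, 2, 2)]      # illustrative orthonormal vectors
q2 = [third * v for v in (2, 1, -2)]
Q_cols = [q1, q2]
b = [1, 1, 1]

def dot(u, v):
    """Inner product u^T v."""
    return sum(ui * vi for ui, vi in zip(u, v))

# Orthonormal columns: Q^T Q = I.
assert [[dot(qi, qj) for qj in Q_cols] for qi in Q_cols] == [[1, 0], [0, 1]]

# x_hat = Q^T b: each coefficient is just q_i^T b.
x_hat = [dot(q, b) for q in Q_cols]
# p = q1 (q1^T b) + q2 (q2^T b), built entry by entry.
p = [sum(xi * q[k] for xi, q in zip(x_hat, Q_cols)) for k in range(len(b))]
e = [bi - pi for bi, pi in zip(b, p)]

print(x_hat)                               # [Fraction(5, 3), Fraction(1, 3)]
print(p)                                   # [Fraction(7, 9), Fraction(11, 9), Fraction(8, 9)]
print(all(dot(q, e) == 0 for q in Q_cols))  # True: e is perpendicular to every q_i
```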

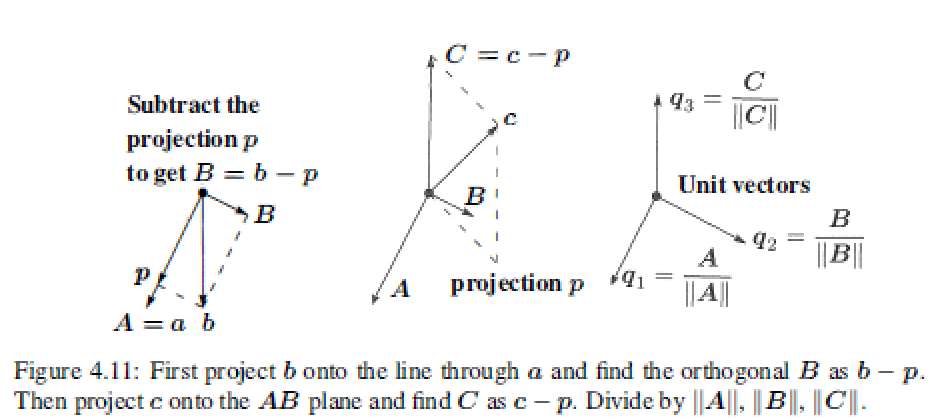

The Gram-Schmidt Process

The Gram-Schmidt process creates orthonormal vectors: it turns independent column vectors \(a_i\) into orthonormal vectors \(q_i\).

From independent vectors \(a_1,...,a_n\), Gram-Schmidt constructs orthonormal vectors \(q_1,...,q_n\). The matrices with these columns satisfy \(A=QR\). The matrix \(R=Q^TA\) is upper triangular because each later \(q_j\) is orthogonal to the earlier vectors \(a_1,...,a_{j-1}\).

Gram-Schmidt Process:

Subtract from every new vector its projections in the directions already set.

- First step: choose \(A=a\) and \(B = b-\frac{A^Tb}{A^TA}A\), so that \(A \bot B\).

- Second step: \(C = c-\frac{A^Tc}{A^TA}A - \frac{B^Tc}{B^TB}B\), so that \(C \bot A\) and \(C \bot B\).

- Last step (normalize): \(q_1 = \frac{A}{||A||},\ q_2 = \frac{B}{||B||},\ q_3 = \frac{C}{||C||}\).

The vectors \(q_1,q_2,q_3\) form an orthonormal basis and are the columns of \(Q=\left[ \begin{matrix} q_1&q_2&q_3 \end{matrix} \right]\).
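The steps above can be sketched as classical Gram-Schmidt in plain Python. The function name `gram_schmidt` is illustrative. It keeps the unnormalized A, B, C exact with Fractions and normalizes only at the end, matching the order of the steps. The input vectors are those of the example that follows, with \(a=(1,-1,0)\), the vector implied there by \(q_1\) and \(A^TA=2\).

```python
import math
from fractions import Fraction

def dot(u, v):
    """Inner product u^T v."""
    return sum(ui * vi for ui, vi in zip(u, v))

def gram_schmidt(vectors):
    """Classical Gram-Schmidt following the steps above.

    Returns (orthogonal, orthonormal): the unnormalized A, B, C, ...
    (exact, as Fractions) and the unit vectors q_1, q_2, ... (floats).
    Assumes the input vectors are independent.
    """
    orthogonal = []
    for v in vectors:
        w = [Fraction(x) for x in v]
        # Subtract from v its projection onto every direction already set.
        for u in orthogonal:
            coeff = dot(u, v) / dot(u, u)
            w = [wi - coeff * ui for wi, ui in zip(w, u)]
        orthogonal.append(w)
    orthonormal = []
    for w in orthogonal:
        norm = math.sqrt(float(dot(w, w)))
        orthonormal.append([float(x) / norm for x in w])
    return orthogonal, orthonormal

a, b, c = [1, -1, 0], [2, 0, -2], [3, -3, 3]
(A, B, C), qs = gram_schmidt([a, b, c])

print([[str(x) for x in w] for w in (A, B, C)])
# [['1', '-1', '0'], ['1', '1', '-2'], ['1', '1', '1']]

# Q^T Q should be the identity (up to rounding).
QtQ = [[dot(qi, qj) for qj in qs] for qi in qs]
print(all(math.isclose(QtQ[i][j], float(i == j), abs_tol=1e-12)
          for i in range(3) for j in range(3)))   # True
```

In floating point, the modified variant (projecting the running `w` instead of the original `v`) is usually preferred for stability. The classical form is kept here because it mirrors the formulas for B and C.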

**The Factorization M = QR**:

Any m by n matrix M with independent columns can be factored into \(M=QR\).

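\[
M = \left[ \begin{matrix} a&b&c \end{matrix} \right] = \left[ \begin{matrix} q_1&q_2&q_3 \end{matrix} \right] \left[ \begin{matrix} q_1^Ta&q_1^Tb&q_1^Tc \\ 0&q_2^Tb&q_2^Tc \\ 0&0&q_3^Tc \end{matrix} \right] = QR
\]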

example:

Suppose the independent, non-orthogonal vectors \(a,b,c\) are

\[
a = \left[ \begin{matrix} 1 \\ -1 \\ 0\end{matrix} \right], \quad
b = \left[ \begin{matrix} 2 \\ 0 \\ -2\end{matrix} \right], \quad
c = \left[ \begin{matrix} 3 \\ -3 \\ 3\end{matrix} \right]
\]

Then \(A=a\) has \(A^TA=2\) and \(A^Tb=2\).

First step: \(B=b-\frac{A^Tb}{A^TA}A = b - A = \left[ \begin{matrix} 1 \\ 1 \\ -2\end{matrix} \right]\)

Second step: with \(A^Tc=6\), \(B^Tc=-6\), and \(B^TB=6\), \(C=c-\frac{A^Tc}{A^TA}A -\frac{B^Tc}{B^TB}B = c - 3A + B = \left[ \begin{matrix} 1 \\ 1 \\ 1\end{matrix} \right]\)

Last step : \(q_1 = \frac{A}{||A||}= \frac{1}{\sqrt{2}}\left[ \begin{matrix} 1 \\ -1 \\ 0\end{matrix} \right],q_2 = \frac{B}{||B||}=\frac{1}{\sqrt{6}}\left[ \begin{matrix} 1 \\ 1 \\ -2\end{matrix} \right],q_3 = \frac{C}{||C||}=\frac{1}{\sqrt{3}}\left[ \begin{matrix} 1 \\ 1 \\ 1\end{matrix} \right]\)

Factorization:

\[
\left[ \begin{matrix} 1&2&3 \\ -1&0&-3 \\ 0&-2&3 \end{matrix} \right]
= \left[ \begin{matrix} 1/\sqrt{2}&1/\sqrt{6}&1/\sqrt{3} \\ -1/\sqrt{2}&1/\sqrt{6}&1/\sqrt{3} \\ 0&-2/\sqrt{6}&1/\sqrt{3} \end{matrix} \right]
\left[ \begin{matrix} \sqrt{2}&\sqrt{2}&\sqrt{18} \\ 0&\sqrt{6}&-\sqrt{6} \\ 0&0&\sqrt{3} \end{matrix} \right] = QR
\]
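A floating-point check of this factorization, as a sketch. It builds Q from the \(q_1, q_2, q_3\) of the example, computes \(R = Q^TA\) entry by entry as \(q_i^T(\text{column } j)\), compares the result with the R above, and confirms that QR reproduces the columns a, b, c. The tolerance `1e-12` is an arbitrary choice.

```python
import math

s2, s3, s6 = math.sqrt(2), math.sqrt(3), math.sqrt(6)

# Columns a, b, c of the matrix and the orthonormal q1, q2, q3 from the example.
A_cols = [[1, -1, 0], [2, 0, -2], [3, -3, 3]]
Q_cols = [[1 / s2, -1 / s2, 0.0],
          [1 / s6, 1 / s6, -2 / s6],
          [1 / s3, 1 / s3, 1 / s3]]

def dot(u, v):
    """Inner product u^T v."""
    return sum(ui * vi for ui, vi in zip(u, v))

def all_close(X, Y, tol=1e-12):
    """Entrywise comparison of two matrices stored as lists of lists."""
    return all(math.isclose(x, y, rel_tol=1e-9, abs_tol=tol)
               for row_x, row_y in zip(X, Y) for x, y in zip(row_x, row_y))

# R = Q^T A: entry (i, j) is q_i^T times column j.
R = [[dot(q, col) for col in A_cols] for q in Q_cols]
expected_R = [[s2, s2, math.sqrt(18)],
              [0.0, s6, -s6],
              [0.0, 0.0, s3]]

# Column j of QR is sum_i R[i][j] q_i; it should reproduce column j of A.
QR_cols = [[sum(R[i][j] * Q_cols[i][k] for i in range(3)) for k in range(3)]
           for j in range(3)]

print(all_close(R, expected_R))    # True: R is upper triangular as stated
print(all_close(QR_cols, A_cols))  # True: QR reproduces the original columns
```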

Least squares

Instead of solving \(Ax=b\), which is impossible, we solve \(R\hat{x}=Q^Tb\) by back substitution, which is very fast.

\[
\begin{gathered}
A^TA = (QR)^TQR = R^TQ^TQR = R^TR \\
\Downarrow \\
R^TR\hat{x} = R^TQ^Tb \quad \text{or} \quad R\hat{x} = Q^Tb \quad \text{or} \quad \hat{x} = R^{-1}Q^Tb
\end{gathered}
\]
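A sketch of least squares via QR on the example from 4.3, in plain floating-point Python. The helpers `qr_gram_schmidt` and `back_substitution` are illustrative names, not a library API. The code factors A by classical Gram-Schmidt, forms \(Q^Tb\), and solves \(R\hat{x} = Q^Tb\) from the bottom row up. It should agree with the normal-equation answer \(C = 2/3,\ D = 1/2\).

```python
import math

def dot(u, v):
    """Inner product u^T v."""
    return sum(ui * vi for ui, vi in zip(u, v))

def qr_gram_schmidt(cols):
    """Classical Gram-Schmidt QR: returns (Q_cols, R) with A = QR.

    cols holds the columns of A (assumed independent); R[i][j] = q_i^T a_j.
    """
    n = len(cols)
    Q_cols = []
    R = [[0.0] * n for _ in range(n)]
    for j, a in enumerate(cols):
        w = [float(x) for x in a]
        for i, q in enumerate(Q_cols):
            R[i][j] = dot(q, a)                       # component along q_i
            w = [wk - R[i][j] * qk for wk, qk in zip(w, q)]
        R[j][j] = math.sqrt(dot(w, w))                # length of what remains
        Q_cols.append([wk / R[j][j] for wk in w])
    return Q_cols, R

def back_substitution(R, y):
    """Solve the upper-triangular system R x = y from the last row up."""
    n = len(y)
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(R[i][k] * x[k] for k in range(i + 1, n))
        x[i] = (y[i] - s) / R[i][i]
    return x

# The least squares example from 4.3: columns (1, 1, 1) and (1, 2, 3), b = (1, 2, 2).
A_cols = [[1, 1, 1], [1, 2, 3]]
b = [1, 2, 2]

Q_cols, R = qr_gram_schmidt(A_cols)
Qt_b = [dot(q, b) for q in Q_cols]     # Q^T b
C, D = back_substitution(R, Qt_b)      # R x_hat = Q^T b
print(C, D)   # approximately 2/3 and 1/2, matching the normal equations
```

This route never forms \(A^TA\). That is the practical reason QR is preferred when \(A^TA\) would be badly conditioned.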
