An Empirical Evaluation of Generic Convolutional and Recurrent Networks for Sequence Modeling

An Empirical Evaluation of Generic Convolutional and Recurrent Networks for Sequence Modeling

2018-05-16 16:09:15

Introduction:

本文提出一种 TCN (Temporal Convolutional Networks) 网络结构,用卷积的方式进行序列数据的处理,并且取得了和更加复杂的 RNN、LSTM、GRU 等模型相当的精度。

Temporal Convolutional Networks :

TCNs 的特点有:

1). the convolutions in the architecture are casual, meaning that there is no information "leakage" from future to past;

2). the architecture can take a sequence of any length and map it to an output sequence of the same length, just as with an RNN.

3). we emphasize how to build very long effective history size using a combination of very deep networks and dilated convolutions.

1. Sequence Modeling :

输入是一个序列,如:x0, ... , xT;对应输出的 label 是 y0, ... , yT。而序列模型就是要学习这么一个映射函数,从输入到输出,即:

但是,这种方法以 autoregressive prediction 为核心,但是,不能直接捕获下面的 domain:machine translation, or sequence to sequence prediction,因为:在这些情况下,整个的输入可以被用来预测每一个输出(since in these cases the entire input sentence can be used to predict each output)。

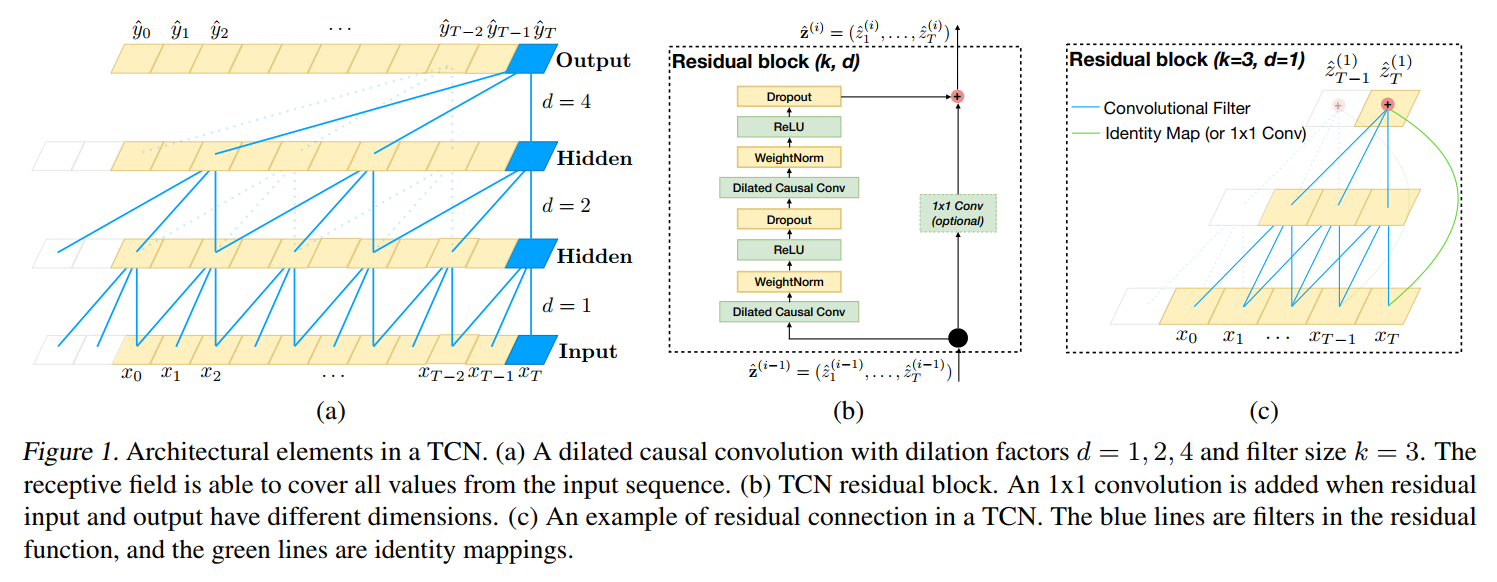

2. Casual Convlutions :

TCNs 是基于两个原则的:

(1)the fact that the network produces an output of the same length as the input(输入和输出保持一致),

(2)the fact that there can be no leakage from the future into the past(从未来到过去,没做信息泄露).

为了满足第一点,TCNs 采用全卷积网络结构。每一个 hidden layer 和 input layer 是相同的,and zero padding of length (kernel size -1) is added to keep subsequent layers the same length as previous ones.

为了满足第二点,TCNs 采用 casual convolutions, where an output at time t is convolved only with elements from time t and earlier in the previous layer.

那么,TCNs 就是:TCN = 1D FCN + casual convolutions.

这种基本的设计方法的主要不足之处在于:为了得到一个较长的历史尺寸,我们需要一个非常深的网络 或者 非常大的 filters。

3. Dilated Convolutions :

为了使得 filter size 尽可能大,我们采用 dilated convolutions,that enable an exponentially large receptive field.

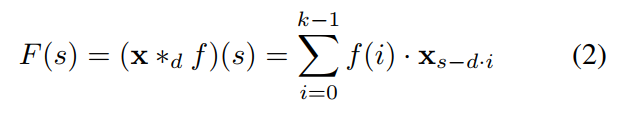

正式的,对于 1-D sequence input x , 以及 a filter f , 空洞卷积操作 F 在输入序列中元素 s 可以定义为:

其中,d 是空洞系数,k 是filter size,s-di accounts for the direction of the past.

Using larger dilation enables an output at the top level to represent a wider range of inputs, thus effectively expanding the receptive field of a ConvNet.

这给我们增加 TCN 的感受野,提供了两个思路:

(1). choosing larger filter sizes k ;

(2). increasing the dilation factor d, where the effective history of one such layer is (k-1)d.

4. Residual Connnections :

此处的残差连接,就是借鉴了何凯明的 residual network,即:将之前的信息和转换后的信息,都作为当前的输入,从而使得网络层数非常深的时候,仍然能够得到不错的效果:

This effectively allows layers to learn modifications to the identity mapping rather than the entire transformation, which has repeatly been shown to benefit very deep networks.

5. Discussion :

本小结总结了 TCN 用于 sequence modeling 的几个优势和劣势:

(1)Parallelilsm RNN 的预测总是需要上一个时刻完毕后,才可以进行。但是 CNN 的则没有这个约束,因为 the same filter is used in each layer.

(2)Flexible receptive field size TCN 可以在不同的 layer 采用不同的 receptive field size。

(3)Stable gradients 不同于 RNN 结构,TCN has a backpropagation path different from the temporal direction of the sequence. 所以 TCN 就没有 RNN 结构中梯度消失或者梯度爆炸的情况。

(4)Low memory requirement for training LSTM or GRU 由于需要存储很多 cell gates 的信息,所以需要很大的内存,但是由于 filter 是共享的,内存的利用仅仅依赖于网络的深度。而作者也发现:gated RNNs likely to use up to a multiplicative factor more memory than TCNs.

(5)Variable length inputs TCNs can also take in inputs of arbitrary length by sliding the 1D convolutional kernels.

两个明显的劣势在于:

(1)Data storage during evalution.

(2)Potential parameter change for a transfer of domain.

--- Reference

Code: http://github.com/locuslab/TCN.

An Empirical Evaluation of Generic Convolutional and Recurrent Networks for Sequence Modeling的更多相关文章

- 新手教程之:循环网络和LSTM指南 (A Beginner’s Guide to Recurrent Networks and LSTMs)

新手教程之:循环网络和LSTM指南 (A Beginner’s Guide to Recurrent Networks and LSTMs) 本文翻译自:http://deeplearning4j.o ...

- 论文笔记之:Fully Convolutional Attention Localization Networks: Efficient Attention Localization for Fine-Grained Recognition

Fully Convolutional Attention Localization Networks: Efficient Attention Localization for Fine-Grain ...

- 论文笔记之:UNSUPERVISED REPRESENTATION LEARNING WITH DEEP CONVOLUTIONAL GENERATIVE ADVERSARIAL NETWORKS

UNSUPERVISED REPRESENTATION LEARNING WITH DEEP CONVOLUTIONAL GENERATIVE ADVERSARIAL NETWORKS ICLR 2 ...

- Recurrent Neural Network Language Modeling Toolkit代码学习

Recurrent Neural Network Language Modeling Toolkit 工具使用点击打开链接 本博客地址:http://blog.csdn.net/wangxingin ...

- Empirical Analysis of Beam Search Performance Degradation in Neural Sequence Models

Empirical Analysis of Beam Search Performance Degradation in Neural Sequence Models 2019-06-13 10:2 ...

- Empirical Evaluation of Speaker Adaptation on DNN based Acoustic Model

DNN声学模型说话人自适应的经验性评估 年3月27日 发表于:Sound (cs.SD); Computation and Language (cs.CL); Audio and Speech Pro ...

- Combining STDP and Reward-Modulated STDP in Deep Convolutional Spiking Neural Networks for Digit Recognition

郑重声明:原文参见标题,如有侵权,请联系作者,将会撤销发布! Abstract 灵长类视觉系统激发了深度人工神经网络的发展,使计算机视觉领域发生了革命性的变化.然而,这些网络的能量效率比它们的生物学对 ...

- RNN(2) ------ “《A Critical Review of Recurrent Neural Networks for Sequence Learning》RNN综述性论文讲解”(转载)

原文链接:http://blog.csdn.net/xizero00/article/details/51225065 一.论文所解决的问题 现有的关于RNN这一类网络的综述太少了,并且论文之间的符号 ...

- Learning to Rank Short Text Pairs with Convolutional Deep Neural Networks(paper)

本文重点: 和一般形式的文本处理方式一样,并没有特别大的差异,文章的重点在于提出了一个相似度矩阵 计算过程介绍: query和document中的首先通过word embedding处理后获得对应的表 ...

随机推荐

- hdu5422 最大表示法+KMP

#include <iostream> #include <algorithm> #include <string.h> #include <cstdio&g ...

- Sitecore CMS中删除项目

如何删除项目以及如何在Sitecore CMS中恢复已删除的项目. 删除项目 有多种方便的方法可以删除Sitecore中的项目. 从功能区 在内容树中选择您要删除的项目. 单击功能区中“主页”选项卡的 ...

- JavaScript--将秒数换算成时分秒

getTime() 返回距 1970 年 1 月 1 日之间的毫秒数 new Date(dateString) 定义 Date 对象的一种方式 <!DOCTYPE html> <h ...

- 20155228 2016-2017-2 《Java程序设计》第5周学习总结

20155228 2016-2017-2 <Java程序设计>第5周学习总结 教材学习内容总结 异常处理 try-catch语法:JVM执行try区块中的代码,如果发生错误就会跳到catc ...

- datetime处理日期和时间

datetime.now() # 获取当前datetimedatetime.utcnow() datetime(2017, 5, 23, 12, 20) # 用指定日期时间创建datetime 一.将 ...

- 【Linux学习二】文件系统

环境 虚拟机:VMware 10 Linux版本:CentOS-6.5-x86_64 客户端:Xshell4 FTP:Xftp4 一.文件系统 一切皆文件Filesystem Hierarchy St ...

- Nginx配置基础-location

location表达式类型 ~ 表示执行一个正则匹配,区分大小写~* 表示执行一个正则匹配,不区分大小写^~ 表示普通字符匹配.使用前缀匹配.如果匹配成功,则不再匹配其他location.= 进行普通 ...

- 【转】阿里出品的ETL工具dataX初体验

原文链接:https://www.imooc.com/article/15640 来源:慕课网 我的毕设选择了大数据方向的题目.大数据的第一步就是要拿到足够的数据源.现实情况中我们需要的数据源分布在不 ...

- flask请求钩子、HTTP响应、响应报文、重定向、手动返回错误码、修改MIME类型、jsonify()方法

请求钩子: 当我们需要对请求进行预处理和后处理时,就可以用Flask提供的回调函数(钩子),他们可用来注册在请求处理的不同阶段执行的处理函数.这些请求钩子使用装饰器实现,通过程序实例app调用,以 b ...

- Linux上查看大文件的开头几行内容以及结尾几行的内容

head -n 50 filePath 查看开头50行的内容 tail -n 50 filePath 查看文件结尾50行的内容