k8s资源对象

什么是资源对象?

所谓资源对象是指在k8s上创建的资源实例;即通过apiserver提供的各资源api接口(可以理解为各种资源模板),使用yaml文件或者命令行的方式向对应资源api接口传递参数赋值实例化的结果;比如我们在k8s上创建一个pod,那么我们就需要通过给apiserver交互,传递创建pod的相关参数,让apiserver拿着这些参数去实例化一个pod的相关信息存放在etcd中,然后再由调度器进行调度,由node节点的kubelet执行创建pod;简单讲资源对象就是把k8s之上的api接口进行实例化的结果;

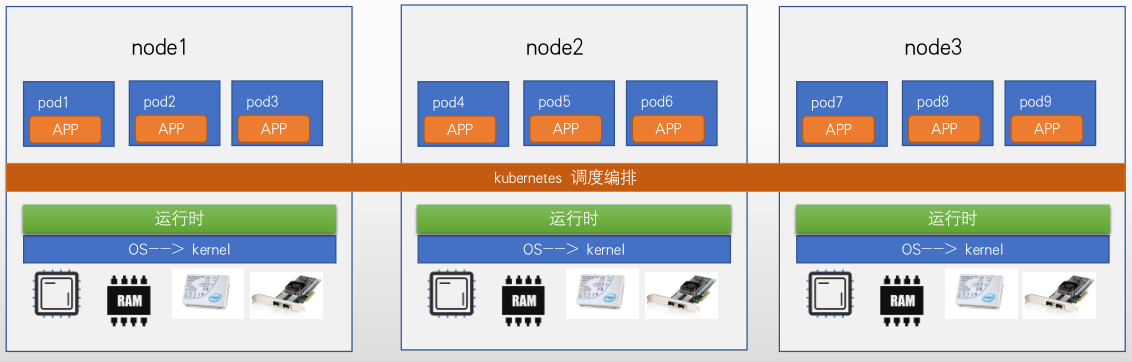

k8s逻辑运行环境

提示:k8s运行环境如上,k8s能够将多个node节点的底层提供的资源(如内存,cpu,存储,网络等)逻辑的整合成一个大的资源池,统一由k8s进行调度编排;用户只管在k8s上创建各种资源即可,创建完成的资源是通过k8s统一编排调度,用户无需关注具体资源在那个node上运行,也无需关注node节点资源情况;

k8s的设计理念——分层架构

k8s的设计理念——API设计原则

1、所有API应该是声明式的;

2、API对象是彼此互补而且可组合的,即“高内聚,松耦合”;

3、高层API以操作意图为基础设计;

4、低层API根据高层API的控制需要设计;

5、尽量避免简单封装,不要有在外部API无法显式知道的内部隐藏的机制;

6、API操作复杂度与对象数量成正比;

7、API对象状态不能依赖于网络连接状态;

8、尽量避免让操作机制依赖于全局状态,因为在分布式系统中要保证全局状态的同步是非常困难的;

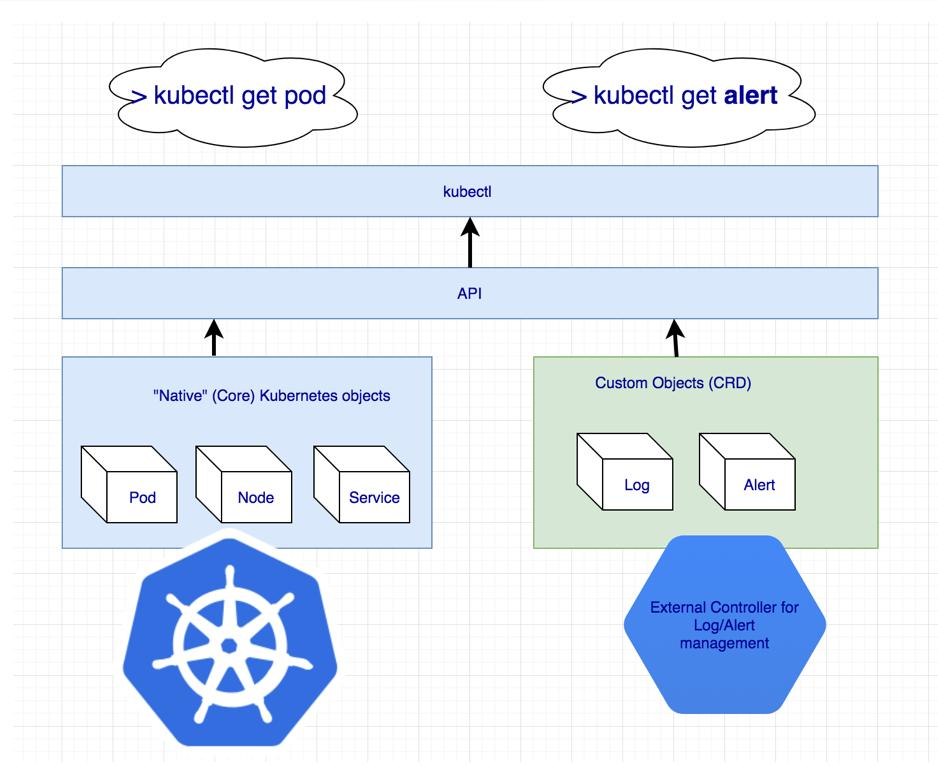

kubernetes API简介

提示:在k8s上api分内置api和自定义api;所谓内置api是指部署好k8s集群后自带的api接口;自定义api也称自定义资源(CRD,Custom Resource Definition),部署好k8s之后,通过安装其他组件等方式扩展出来的api;

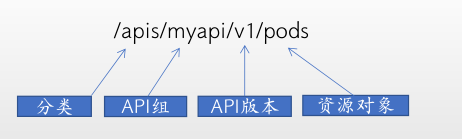

apiserver资源组织逻辑

提示:apiserver对于不同资源是通过分类,分组,分版本的方式逻辑组织的,如上图所示;

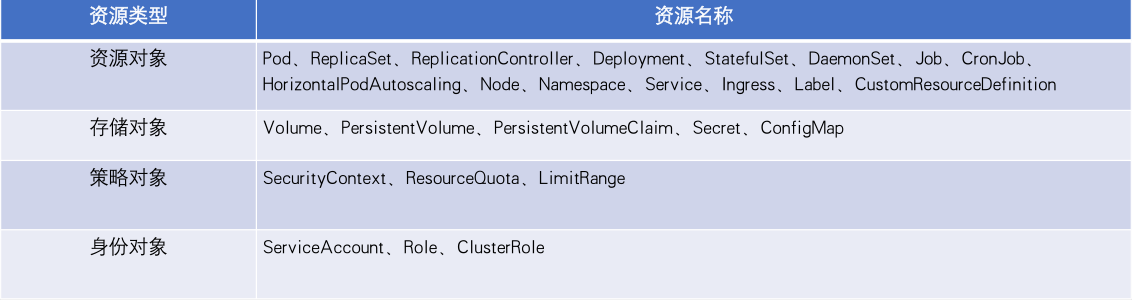

k8s内置资源对象简介

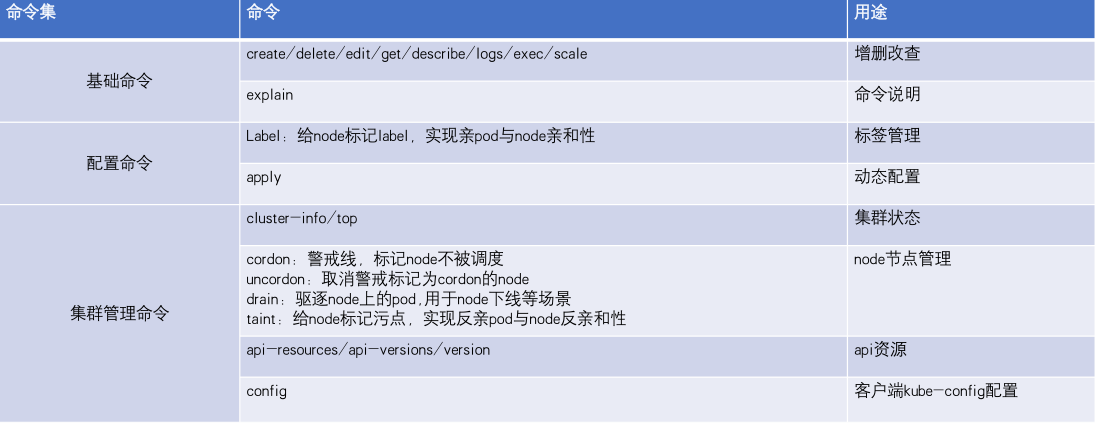

k8s资源对象操作命令

资源配置清单必需字段

1、apiVersion - 创建该对象所使用的Kubernetes API的版本;

2、kind - 想要创建的对象的类型;

3、metadata - 定义识别对象唯一性的数据,包括一个name名称 、可选的namespace,默认不写就是default名称空间;

4、spec:定义资源对象的详细规范信息(统一的label标签、容器名称、镜像、端口映射等),即用户期望对应资源处于什么状态;

5、status(Pod创建完成后k8s自动生成status状态),该字段信息由k8s自动维护,用户无需定义,即对应资源的实际状态;

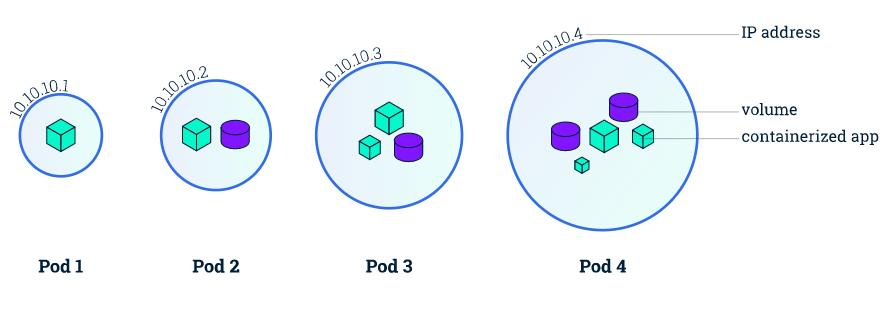

Pod资源对象

提示:pod是k8s中最小控制单元,一个pod中可以运行一个或多个容器;一个pod的中的容器是一起调度,即调度的最小单位是pod;pod的生命周期是短暂的,不会自愈,是用完就销毁的实体;一般我们通过Controller来创建和管理pod;使用控制器创建的pod具有自动恢复功能,即pod状态不满足用户期望状态,对应控制器会通过重启或重建的方式,让对应pod状态和数量始终和用户定义的期望状态一致;

示例:自主式pod配置清单

- apiVersion: v1

- kind: Pod

- metadata:

- name: "pod-demo"

- namespace: default

- labels:

- app: "pod-demo"

- spec:

- containers:

- - name: pod-demo

- image: "harbor.ik8s.cc/baseimages/nginx:v1"

- ports:

- - containerPort: 80

- name: http

- volumeMounts:

- - name: localtime

- mountPath: /etc/localtime

- volumes:

- - name: localtime

- hostPath:

- path: /usr/share/zoneinfo/Asia/Shanghai

应用配置清单

- root@k8s-deploy:/yaml# kubectl get pods

- NAME READY STATUS RESTARTS AGE

- net-test1 1/1 Running 2 (4m35s ago) 7d7h

- test 1/1 Running 4 (4m34s ago) 13d

- test1 1/1 Running 4 (4m35s ago) 13d

- test2 1/1 Running 4 (4m35s ago) 13d

- root@k8s-deploy:/yaml# kubectl apply -f pod-demo.yaml

- pod/pod-demo created

- root@k8s-deploy:/yaml# kubectl get pods

- NAME READY STATUS RESTARTS AGE

- net-test1 1/1 Running 2 (4m47s ago) 7d7h

- pod-demo 0/1 ContainerCreating 0 4s

- test 1/1 Running 4 (4m46s ago) 13d

- test1 1/1 Running 4 (4m47s ago) 13d

- test2 1/1 Running 4 (4m47s ago) 13d

- root@k8s-deploy:/yaml# kubectl get pods

- NAME READY STATUS RESTARTS AGE

- net-test1 1/1 Running 2 (4m57s ago) 7d7h

- pod-demo 1/1 Running 0 14s

- test 1/1 Running 4 (4m56s ago) 13d

- test1 1/1 Running 4 (4m57s ago) 13d

- test2 1/1 Running 4 (4m57s ago) 13d

- root@k8s-deploy:/yaml#

提示:此pod只是在k8s上运行起来,它没有控制器的监视,对应pod删除,故障都不会自动恢复;

Job控制器,详细说明请参考https://www.cnblogs.com/qiuhom-1874/p/14157306.html;

job控制器配置清单示例

- apiVersion: batch/v1

- kind: Job

- metadata:

- name: job-demo

- namespace: default

- labels:

- app: job-demo

- spec:

- template:

- metadata:

- name: job-demo

- labels:

- app: job-demo

- spec:

- containers:

- - name: job-demo-container

- image: harbor.ik8s.cc/baseimages/centos7:2023

- command: ["/bin/sh"]

- args: ["-c", "echo data init job at `date +%Y-%m-%d_%H-%M-%S` >> /cache/data.log"]

- volumeMounts:

- - mountPath: /cache

- name: cache-volume

- - name: localtime

- mountPath: /etc/localtime

- volumes:

- - name: cache-volume

- hostPath:

- path: /tmp/jobdata

- - name: localtime

- hostPath:

- path: /usr/share/zoneinfo/Asia/Shanghai

- restartPolicy: Never

提示:定义job资源必须定义restartPolicy;

应用清单

- root@k8s-deploy:/yaml# kubectl get pods

- NAME READY STATUS RESTARTS AGE

- net-test1 1/1 Running 3 (48m ago) 7d10h

- pod-demo 1/1 Running 1 (48m ago) 3h32m

- test 1/1 Running 5 (48m ago) 14d

- test1 1/1 Running 5 (48m ago) 14d

- test2 1/1 Running 5 (48m ago) 14d

- root@k8s-deploy:/yaml# kubectl apply -f job-demo.yaml

- job.batch/job-demo created

- root@k8s-deploy:/yaml# kubectl get pods -o wide

- NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

- job-demo-z8gmb 0/1 Completed 0 26s 10.200.211.130 192.168.0.34 <none> <none>

- net-test1 1/1 Running 3 (49m ago) 7d10h 10.200.211.191 192.168.0.34 <none> <none>

- pod-demo 1/1 Running 1 (49m ago) 3h32m 10.200.155.138 192.168.0.36 <none> <none>

- test 1/1 Running 5 (49m ago) 14d 10.200.209.6 192.168.0.35 <none> <none>

- test1 1/1 Running 5 (49m ago) 14d 10.200.209.8 192.168.0.35 <none> <none>

- test2 1/1 Running 5 (49m ago) 14d 10.200.211.177 192.168.0.34 <none> <none>

- root@k8s-deploy:/yaml#

验证:查看192.168.0.34的/tmp/jobdata目录下是否有job执行的任务数据?

- root@k8s-deploy:/yaml# ssh 192.168.0.34 "ls /tmp/jobdata"

- data.log

- root@k8s-deploy:/yaml# ssh 192.168.0.34 "cat /tmp/jobdata/data.log"

- data init job at 2023-05-06_23-31-32

- root@k8s-deploy:/yaml#

提示:可以看到对应job所在宿主机的/tmp/jobdata/目录下有job执行过后的数据,这说明我们定义的job任务顺利完成;

定义并行job

- apiVersion: batch/v1

- kind: Job

- metadata:

- name: job-multi-demo

- namespace: default

- labels:

- app: job-multi-demo

- spec:

- completions: 5

- template:

- metadata:

- name: job-multi-demo

- labels:

- app: job-multi-demo

- spec:

- containers:

- - name: job-multi-demo-container

- image: harbor.ik8s.cc/baseimages/centos7:2023

- command: ["/bin/sh"]

- args: ["-c", "echo data init job at `date +%Y-%m-%d_%H-%M-%S` >> /cache/data.log"]

- volumeMounts:

- - mountPath: /cache

- name: cache-volume

- - name: localtime

- mountPath: /etc/localtime

- volumes:

- - name: cache-volume

- hostPath:

- path: /tmp/jobdata

- - name: localtime

- hostPath:

- path: /usr/share/zoneinfo/Asia/Shanghai

- restartPolicy: Never

提示:spec字段下使用completions来指定执行任务需要的对应pod的数量;

应用清单

- root@k8s-deploy:/yaml# kubectl get pods

- NAME READY STATUS RESTARTS AGE

- job-demo-z8gmb 0/1 Completed 0 24m

- net-test1 1/1 Running 3 (73m ago) 7d11h

- pod-demo 1/1 Running 1 (73m ago) 3h56m

- test 1/1 Running 5 (73m ago) 14d

- test1 1/1 Running 5 (73m ago) 14d

- test2 1/1 Running 5 (73m ago) 14d

- root@k8s-deploy:/yaml# kubectl apply -f job-multi-demo.yaml

- job.batch/job-multi-demo created

- root@k8s-deploy:/yaml# kubectl get job

- NAME COMPLETIONS DURATION AGE

- job-demo 1/1 5s 24m

- job-multi-demo 1/5 10s 10s

- root@k8s-deploy:/yaml# kubectl get pods -o wide

- NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

- job-demo-z8gmb 0/1 Completed 0 24m 10.200.211.130 192.168.0.34 <none> <none>

- job-multi-demo-5vp9w 0/1 Completed 0 12s 10.200.211.144 192.168.0.34 <none> <none>

- job-multi-demo-frstg 0/1 Completed 0 22s 10.200.211.186 192.168.0.34 <none> <none>

- job-multi-demo-gd44s 0/1 Completed 0 17s 10.200.211.184 192.168.0.34 <none> <none>

- job-multi-demo-kfm79 0/1 ContainerCreating 0 2s <none> 192.168.0.34 <none> <none>

- job-multi-demo-nsmpg 0/1 Completed 0 7s 10.200.211.135 192.168.0.34 <none> <none>

- net-test1 1/1 Running 3 (73m ago) 7d11h 10.200.211.191 192.168.0.34 <none> <none>

- pod-demo 1/1 Running 1 (73m ago) 3h56m 10.200.155.138 192.168.0.36 <none> <none>

- test 1/1 Running 5 (73m ago) 14d 10.200.209.6 192.168.0.35 <none> <none>

- test1 1/1 Running 5 (73m ago) 14d 10.200.209.8 192.168.0.35 <none> <none>

- test2 1/1 Running 5 (73m ago) 14d 10.200.211.177 192.168.0.34 <none> <none>

- root@k8s-deploy:/yaml# kubectl get pods -o wide

- NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

- job-demo-z8gmb 0/1 Completed 0 24m 10.200.211.130 192.168.0.34 <none> <none>

- job-multi-demo-5vp9w 0/1 Completed 0 33s 10.200.211.144 192.168.0.34 <none> <none>

- job-multi-demo-frstg 0/1 Completed 0 43s 10.200.211.186 192.168.0.34 <none> <none>

- job-multi-demo-gd44s 0/1 Completed 0 38s 10.200.211.184 192.168.0.34 <none> <none>

- job-multi-demo-kfm79 0/1 Completed 0 23s 10.200.211.140 192.168.0.34 <none> <none>

- job-multi-demo-nsmpg 0/1 Completed 0 28s 10.200.211.135 192.168.0.34 <none> <none>

- net-test1 1/1 Running 3 (73m ago) 7d11h 10.200.211.191 192.168.0.34 <none> <none>

- pod-demo 1/1 Running 1 (73m ago) 3h57m 10.200.155.138 192.168.0.36 <none> <none>

- test 1/1 Running 5 (73m ago) 14d 10.200.209.6 192.168.0.35 <none> <none>

- test1 1/1 Running 5 (73m ago) 14d 10.200.209.8 192.168.0.35 <none> <none>

- test2 1/1 Running 5 (73m ago) 14d 10.200.211.177 192.168.0.34 <none> <none>

- root@k8s-deploy:/yaml#

验证:查看192.168.0.34的/tmp/jobdata/目录下是否有job数据产生?

- root@k8s-deploy:/yaml# ssh 192.168.0.34 "ls /tmp/jobdata"

- data.log

- root@k8s-deploy:/yaml# ssh 192.168.0.34 "cat /tmp/jobdata/data.log"

- data init job at 2023-05-06_23-31-32

- data init job at 2023-05-06_23-55-44

- data init job at 2023-05-06_23-55-49

- data init job at 2023-05-06_23-55-54

- data init job at 2023-05-06_23-55-59

- data init job at 2023-05-06_23-56-04

- root@k8s-deploy:/yaml#

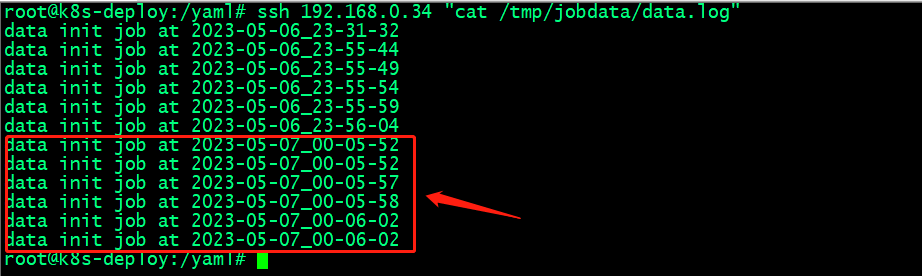

定义并行度

- apiVersion: batch/v1

- kind: Job

- metadata:

- name: job-multi-demo2

- namespace: default

- labels:

- app: job-multi-demo2

- spec:

- completions: 6

- parallelism: 2

- template:

- metadata:

- name: job-multi-demo2

- labels:

- app: job-multi-demo2

- spec:

- containers:

- - name: job-multi-demo2-container

- image: harbor.ik8s.cc/baseimages/centos7:2023

- command: ["/bin/sh"]

- args: ["-c", "echo data init job at `date +%Y-%m-%d_%H-%M-%S` >> /cache/data.log"]

- volumeMounts:

- - mountPath: /cache

- name: cache-volume

- - name: localtime

- mountPath: /etc/localtime

- volumes:

- - name: cache-volume

- hostPath:

- path: /tmp/jobdata

- - name: localtime

- hostPath:

- path: /usr/share/zoneinfo/Asia/Shanghai

- restartPolicy: Never

提示:在spec字段下使用parallelism字段来指定并行度,即一次几个pod同时运行;上述清单表示,一次2个pod同时运行,总共需要6个pod;

应用清单

- root@k8s-deploy:/yaml# kubectl get jobs

- NAME COMPLETIONS DURATION AGE

- job-demo 1/1 5s 34m

- job-multi-demo 5/5 25s 9m56s

- root@k8s-deploy:/yaml# kubectl apply -f job-multi-demo2.yaml

- job.batch/job-multi-demo2 created

- root@k8s-deploy:/yaml# kubectl get jobs

- NAME COMPLETIONS DURATION AGE

- job-demo 1/1 5s 34m

- job-multi-demo 5/5 25s 10m

- job-multi-demo2 0/6 2s 3s

- root@k8s-deploy:/yaml# kubectl get pods

- NAME READY STATUS RESTARTS AGE

- job-demo-z8gmb 0/1 Completed 0 34m

- job-multi-demo-5vp9w 0/1 Completed 0 10m

- job-multi-demo-frstg 0/1 Completed 0 10m

- job-multi-demo-gd44s 0/1 Completed 0 10m

- job-multi-demo-kfm79 0/1 Completed 0 9m59s

- job-multi-demo-nsmpg 0/1 Completed 0 10m

- job-multi-demo2-7ppxc 0/1 Completed 0 10s

- job-multi-demo2-mxbtq 0/1 Completed 0 5s

- job-multi-demo2-rhgh7 0/1 Completed 0 4s

- job-multi-demo2-th6ff 0/1 Completed 0 11s

- net-test1 1/1 Running 3 (83m ago) 7d11h

- pod-demo 1/1 Running 1 (83m ago) 4h6m

- test 1/1 Running 5 (83m ago) 14d

- test1 1/1 Running 5 (83m ago) 14d

- test2 1/1 Running 5 (83m ago) 14d

- root@k8s-deploy:/yaml# kubectl get pods

- NAME READY STATUS RESTARTS AGE

- job-demo-z8gmb 0/1 Completed 0 34m

- job-multi-demo-5vp9w 0/1 Completed 0 10m

- job-multi-demo-frstg 0/1 Completed 0 10m

- job-multi-demo-gd44s 0/1 Completed 0 10m

- job-multi-demo-kfm79 0/1 Completed 0 10m

- job-multi-demo-nsmpg 0/1 Completed 0 10m

- job-multi-demo2-7ppxc 0/1 Completed 0 16s

- job-multi-demo2-8bh22 0/1 Completed 0 6s

- job-multi-demo2-dbjqw 0/1 Completed 0 6s

- job-multi-demo2-mxbtq 0/1 Completed 0 11s

- job-multi-demo2-rhgh7 0/1 Completed 0 10s

- job-multi-demo2-th6ff 0/1 Completed 0 17s

- net-test1 1/1 Running 3 (83m ago) 7d11h

- pod-demo 1/1 Running 1 (83m ago) 4h6m

- test 1/1 Running 5 (83m ago) 14d

- test1 1/1 Running 5 (83m ago) 14d

- test2 1/1 Running 5 (83m ago) 14d

- root@k8s-deploy:/yaml# kubectl get pods -o wide

- NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

- job-demo-z8gmb 0/1 Completed 0 35m 10.200.211.130 192.168.0.34 <none> <none>

- job-multi-demo-5vp9w 0/1 Completed 0 10m 10.200.211.144 192.168.0.34 <none> <none>

- job-multi-demo-frstg 0/1 Completed 0 11m 10.200.211.186 192.168.0.34 <none> <none>

- job-multi-demo-gd44s 0/1 Completed 0 11m 10.200.211.184 192.168.0.34 <none> <none>

- job-multi-demo-kfm79 0/1 Completed 0 10m 10.200.211.140 192.168.0.34 <none> <none>

- job-multi-demo-nsmpg 0/1 Completed 0 10m 10.200.211.135 192.168.0.34 <none> <none>

- job-multi-demo2-7ppxc 0/1 Completed 0 57s 10.200.211.145 192.168.0.34 <none> <none>

- job-multi-demo2-8bh22 0/1 Completed 0 47s 10.200.211.148 192.168.0.34 <none> <none>

- job-multi-demo2-dbjqw 0/1 Completed 0 47s 10.200.211.141 192.168.0.34 <none> <none>

- job-multi-demo2-mxbtq 0/1 Completed 0 52s 10.200.211.152 192.168.0.34 <none> <none>

- job-multi-demo2-rhgh7 0/1 Completed 0 51s 10.200.211.143 192.168.0.34 <none> <none>

- job-multi-demo2-th6ff 0/1 Completed 0 58s 10.200.211.136 192.168.0.34 <none> <none>

- net-test1 1/1 Running 3 (84m ago) 7d11h 10.200.211.191 192.168.0.34 <none> <none>

- pod-demo 1/1 Running 1 (84m ago) 4h7m 10.200.155.138 192.168.0.36 <none> <none>

- test 1/1 Running 5 (84m ago) 14d 10.200.209.6 192.168.0.35 <none> <none>

- test1 1/1 Running 5 (84m ago) 14d 10.200.209.8 192.168.0.35 <none> <none>

- test2 1/1 Running 5 (84m ago) 14d 10.200.211.177 192.168.0.34 <none> <none>

- root@k8s-deploy:/yaml#

验证job数据

提示:可以看到后面job追加的时间几乎都是两个重复的,这说明两个pod同时执行了job里的任务;

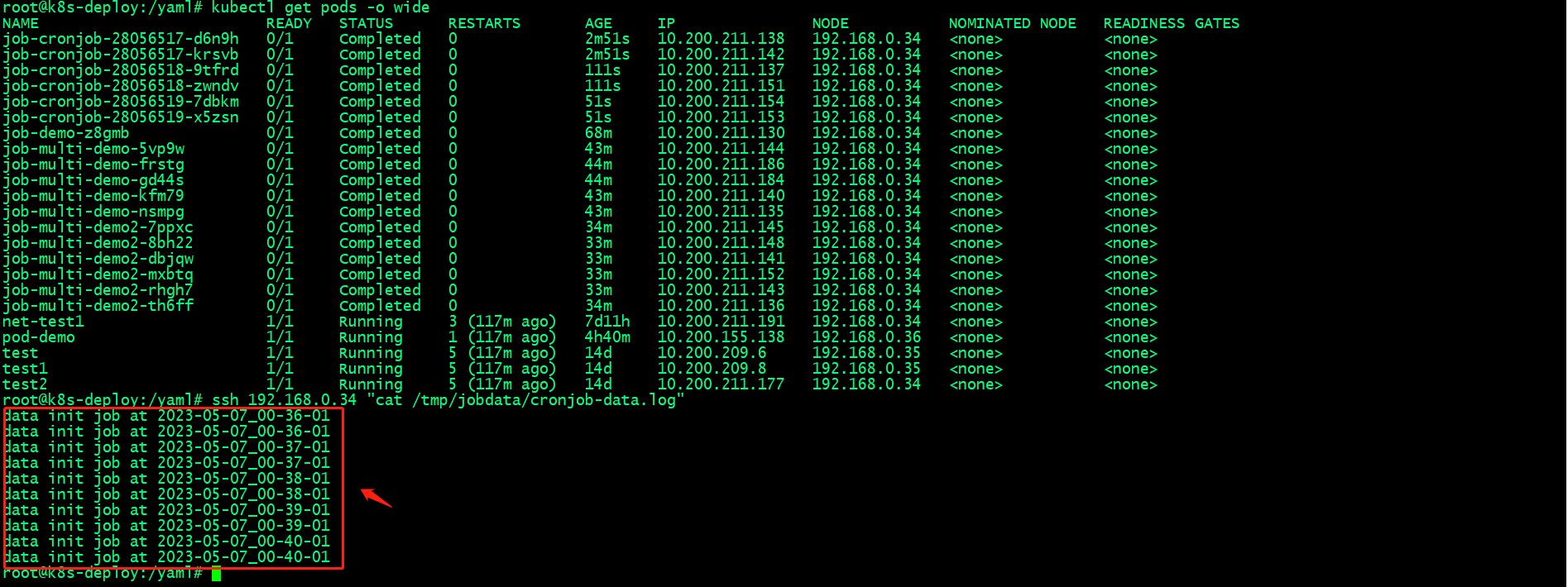

Cronjob控制器,详细说明请参考https://www.cnblogs.com/qiuhom-1874/p/14157306.html;

示例:定义cronjob

- apiVersion: batch/v1

- kind: CronJob

- metadata:

- name: job-cronjob

- namespace: default

- spec:

- schedule: "*/1 * * * *"

- jobTemplate:

- spec:

- parallelism: 2

- template:

- spec:

- containers:

- - name: job-cronjob-container

- image: harbor.ik8s.cc/baseimages/centos7:2023

- command: ["/bin/sh"]

- args: ["-c", "echo data init job at `date +%Y-%m-%d_%H-%M-%S` >> /cache/cronjob-data.log"]

- volumeMounts:

- - mountPath: /cache

- name: cache-volume

- - name: localtime

- mountPath: /etc/localtime

- volumes:

- - name: cache-volume

- hostPath:

- path: /tmp/jobdata

- - name: localtime

- hostPath:

- path: /usr/share/zoneinfo/Asia/Shanghai

- restartPolicy: OnFailure

应用清单

- root@k8s-deploy:/yaml# kubectl apply -f cronjob-demo.yaml

- cronjob.batch/job-cronjob created

- root@k8s-deploy:/yaml# kubectl get cronjob

- NAME SCHEDULE SUSPEND ACTIVE LAST SCHEDULE AGE

- job-cronjob */1 * * * * False 0 <none> 6s

- root@k8s-deploy:/yaml# kubectl get pods

- NAME READY STATUS RESTARTS AGE

- job-cronjob-28056516-njddz 0/1 Completed 0 12s

- job-cronjob-28056516-wgbns 0/1 Completed 0 12s

- job-demo-z8gmb 0/1 Completed 0 64m

- job-multi-demo-5vp9w 0/1 Completed 0 40m

- job-multi-demo-frstg 0/1 Completed 0 40m

- job-multi-demo-gd44s 0/1 Completed 0 40m

- job-multi-demo-kfm79 0/1 Completed 0 40m

- job-multi-demo-nsmpg 0/1 Completed 0 40m

- job-multi-demo2-7ppxc 0/1 Completed 0 30m

- job-multi-demo2-8bh22 0/1 Completed 0 30m

- job-multi-demo2-dbjqw 0/1 Completed 0 30m

- job-multi-demo2-mxbtq 0/1 Completed 0 30m

- job-multi-demo2-rhgh7 0/1 Completed 0 30m

- job-multi-demo2-th6ff 0/1 Completed 0 30m

- net-test1 1/1 Running 3 (113m ago) 7d11h

- pod-demo 1/1 Running 1 (113m ago) 4h36m

- test 1/1 Running 5 (113m ago) 14d

- test1 1/1 Running 5 (113m ago) 14d

- test2 1/1 Running 5 (113m ago) 14d

- root@k8s-deploy:/yaml# kubectl get cronjob

- NAME SCHEDULE SUSPEND ACTIVE LAST SCHEDULE AGE

- job-cronjob */1 * * * * False 0 12s 108s

- root@k8s-deploy:/yaml# kubectl get pods

- NAME READY STATUS RESTARTS AGE

- job-cronjob-28056516-njddz 0/1 Completed 0 77s

- job-cronjob-28056516-wgbns 0/1 Completed 0 77s

- job-cronjob-28056517-d6n9h 0/1 Completed 0 17s

- job-cronjob-28056517-krsvb 0/1 Completed 0 17s

- job-demo-z8gmb 0/1 Completed 0 65m

- job-multi-demo-5vp9w 0/1 Completed 0 41m

- job-multi-demo-frstg 0/1 Completed 0 41m

- job-multi-demo-gd44s 0/1 Completed 0 41m

- job-multi-demo-kfm79 0/1 Completed 0 41m

- job-multi-demo-nsmpg 0/1 Completed 0 41m

- job-multi-demo2-7ppxc 0/1 Completed 0 31m

- job-multi-demo2-8bh22 0/1 Completed 0 31m

- job-multi-demo2-dbjqw 0/1 Completed 0 31m

- job-multi-demo2-mxbtq 0/1 Completed 0 31m

- job-multi-demo2-rhgh7 0/1 Completed 0 31m

- job-multi-demo2-th6ff 0/1 Completed 0 31m

- net-test1 1/1 Running 3 (114m ago) 7d11h

- pod-demo 1/1 Running 1 (114m ago) 4h38m

- test 1/1 Running 5 (114m ago) 14d

- test1 1/1 Running 5 (114m ago) 14d

- test2 1/1 Running 5 (114m ago) 14d

- root@k8s-deploy:/yaml#

提示:cronjob 默认保留最近3个历史记录;

验证:查看周期执行任务的数据

提示:从上面的时间就可以看到每过一分钟就有两个pod执行一次任务;

RC/RS 副本控制器

RC(Replication Controller),副本控制器,该控制器主要负责控制pod副本数量始终满足用户期望的副本数量,该副本控制器是第一代pod副本控制器,仅支持selector = !=;

rc控制器示例

- apiVersion: v1

- kind: ReplicationController

- metadata:

- name: ng-rc

- spec:

- replicas: 2

- selector:

- app: ng-rc-80

- template:

- metadata:

- labels:

- app: ng-rc-80

- spec:

- containers:

- - name: pod-demo

- image: "harbor.ik8s.cc/baseimages/nginx:v1"

- ports:

- - containerPort: 80

- name: http

- volumeMounts:

- - name: localtime

- mountPath: /etc/localtime

- volumes:

- - name: localtime

- hostPath:

- path: /usr/share/zoneinfo/Asia/Shanghai

应用配置清单

- root@k8s-deploy:/yaml# kubectl get pods

- NAME READY STATUS RESTARTS AGE

- test 1/1 Running 6 (11m ago) 16d

- test1 1/1 Running 6 (11m ago) 16d

- test2 1/1 Running 6 (11m ago) 16d

- root@k8s-deploy:/yaml# kubectl apply -f rc-demo.yaml

- replicationcontroller/ng-rc created

- root@k8s-deploy:/yaml# kubectl get pods -o wide

- NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

- ng-rc-l7xmp 1/1 Running 0 10s 10.200.211.136 192.168.0.34 <none> <none>

- ng-rc-wl5d6 1/1 Running 0 9s 10.200.155.185 192.168.0.36 <none> <none>

- test 1/1 Running 6 (11m ago) 16d 10.200.209.24 192.168.0.35 <none> <none>

- test1 1/1 Running 6 (11m ago) 16d 10.200.209.31 192.168.0.35 <none> <none>

- test2 1/1 Running 6 (11m ago) 16d 10.200.211.186 192.168.0.34 <none> <none>

- root@k8s-deploy:/yaml# kubectl get rc

- NAME DESIRED CURRENT READY AGE

- ng-rc 2 2 2 25s

- root@k8s-deploy:/yaml#

验证:修改pod标签,看看对应pod是否会重新创建?

- root@k8s-deploy:/yaml# kubectl get pods --show-labels

- NAME READY STATUS RESTARTS AGE LABELS

- ng-rc-l7xmp 1/1 Running 0 2m32s app=ng-rc-80

- ng-rc-wl5d6 1/1 Running 0 2m31s app=ng-rc-80

- test 1/1 Running 6 (13m ago) 16d run=test

- test1 1/1 Running 6 (13m ago) 16d run=test1

- test2 1/1 Running 6 (13m ago) 16d run=test2

- root@k8s-deploy:/yaml# kubectl label pod/ng-rc-l7xmp app=nginx-demo --overwrite

- pod/ng-rc-l7xmp labeled

- root@k8s-deploy:/yaml# kubectl get pods --show-labels

- NAME READY STATUS RESTARTS AGE LABELS

- ng-rc-l7xmp 1/1 Running 0 4m42s app=nginx-demo

- ng-rc-rxvd4 0/1 ContainerCreating 0 3s app=ng-rc-80

- ng-rc-wl5d6 1/1 Running 0 4m41s app=ng-rc-80

- test 1/1 Running 6 (15m ago) 16d run=test

- test1 1/1 Running 6 (15m ago) 16d run=test1

- test2 1/1 Running 6 (15m ago) 16d run=test2

- root@k8s-deploy:/yaml# kubectl get pods --show-labels

- NAME READY STATUS RESTARTS AGE LABELS

- ng-rc-l7xmp 1/1 Running 0 4m52s app=nginx-demo

- ng-rc-rxvd4 1/1 Running 0 13s app=ng-rc-80

- ng-rc-wl5d6 1/1 Running 0 4m51s app=ng-rc-80

- test 1/1 Running 6 (16m ago) 16d run=test

- test1 1/1 Running 6 (16m ago) 16d run=test1

- test2 1/1 Running 6 (16m ago) 16d run=test2

- root@k8s-deploy:/yaml# kubectl label pod/ng-rc-l7xmp app=ng-rc-80 --overwrite

- pod/ng-rc-l7xmp labeled

- root@k8s-deploy:/yaml# kubectl get pods --show-labels

- NAME READY STATUS RESTARTS AGE LABELS

- ng-rc-l7xmp 1/1 Running 0 5m27s app=ng-rc-80

- ng-rc-wl5d6 1/1 Running 0 5m26s app=ng-rc-80

- test 1/1 Running 6 (16m ago) 16d run=test

- test1 1/1 Running 6 (16m ago) 16d run=test1

- test2 1/1 Running 6 (16m ago) 16d run=test2

- root@k8s-deploy:/yaml#

提示:rc控制器是通过标签选择器来识别对应pod是否归属对应rc控制器管控,如果发现对应pod的标签发生改变,那么rc控制器会通过新建或删除的方法将对应pod数量始终和用户定义的数量保持一致;

RS(ReplicaSet),副本控制器,该副本控制器和rc类似,都是通过标签选择器来匹配归属自己管控的pod数量,如果标签或对应pod数量少于或多余用户期望的数量,该控制器会通过新建或删除pod的方式将对应pod数量始终和用户期望的pod数量保持一致;rs控制器和rc控制器唯一区别就是rs控制器支持selector = !=精确匹配外,还支持模糊匹配in notin;是k8s之上的第二代pod副本控制器;

rs控制器示例

- apiVersion: apps/v1

- kind: ReplicaSet

- metadata:

- name: rs-demo

- labels:

- app: rs-demo

- spec:

- replicas: 3

- selector:

- matchLabels:

- app: rs-demo

- template:

- metadata:

- labels:

- app: rs-demo

- spec:

- containers:

- - name: rs-demo

- image: "harbor.ik8s.cc/baseimages/nginx:v1"

- ports:

- - name: web

- containerPort: 80

- protocol: TCP

- env:

- - name: NGX_VERSION

- value: 1.16.1

- volumeMounts:

- - name: localtime

- mountPath: /etc/localtime

- volumes:

- - name: localtime

- hostPath:

- path: /usr/share/zoneinfo/Asia/Shanghai

应用配置清单

验证:修改pod标签,看看对应pod是否会发生变化?

- root@k8s-deploy:/yaml# kubectl get pods --show-labels

- NAME READY STATUS RESTARTS AGE LABELS

- ng-rc-l7xmp 1/1 Running 0 18m app=ng-rc-80

- ng-rc-wl5d6 1/1 Running 0 18m app=ng-rc-80

- rs-demo-nzmqs 1/1 Running 0 71s app=rs-demo

- rs-demo-v2vb6 1/1 Running 0 71s app=rs-demo

- rs-demo-x27fv 1/1 Running 0 71s app=rs-demo

- test 1/1 Running 6 (29m ago) 16d run=test

- test1 1/1 Running 6 (29m ago) 16d run=test1

- test2 1/1 Running 6 (29m ago) 16d run=test2

- root@k8s-deploy:/yaml# kubectl label pod/rs-demo-nzmqs app=nginx --overwrite

- pod/rs-demo-nzmqs labeled

- root@k8s-deploy:/yaml# kubectl get pods --show-labels

- NAME READY STATUS RESTARTS AGE LABELS

- ng-rc-l7xmp 1/1 Running 0 19m app=ng-rc-80

- ng-rc-wl5d6 1/1 Running 0 19m app=ng-rc-80

- rs-demo-bdfdd 1/1 Running 0 4s app=rs-demo

- rs-demo-nzmqs 1/1 Running 0 103s app=nginx

- rs-demo-v2vb6 1/1 Running 0 103s app=rs-demo

- rs-demo-x27fv 1/1 Running 0 103s app=rs-demo

- test 1/1 Running 6 (30m ago) 16d run=test

- test1 1/1 Running 6 (30m ago) 16d run=test1

- test2 1/1 Running 6 (30m ago) 16d run=test2

- root@k8s-deploy:/yaml# kubectl label pod/rs-demo-nzmqs app=rs-demo --overwrite

- pod/rs-demo-nzmqs labeled

- root@k8s-deploy:/yaml# kubectl get pods --show-labels

- NAME READY STATUS RESTARTS AGE LABELS

- ng-rc-l7xmp 1/1 Running 0 19m app=ng-rc-80

- ng-rc-wl5d6 1/1 Running 0 19m app=ng-rc-80

- rs-demo-nzmqs 1/1 Running 0 119s app=rs-demo

- rs-demo-v2vb6 1/1 Running 0 119s app=rs-demo

- rs-demo-x27fv 1/1 Running 0 119s app=rs-demo

- test 1/1 Running 6 (30m ago) 16d run=test

- test1 1/1 Running 6 (30m ago) 16d run=test1

- test2 1/1 Running 6 (30m ago) 16d run=test2

- root@k8s-deploy:/yaml#

提示:可以看到当我们修改pod标签为其他标签以后,对应rs控制器会新建一个pod,其标签为app=rs-demo,这是因为当我们修改pod标签以后,rs控制器发现标签选择器匹配的pod数量少于用户定义的数量,所以rs控制器会新建一个标签为app=rs-demo的pod;当我们把pod标签修改为rs-demo时,rs控制器发现对应标签选择器匹配pod数量多余用户期望的pod数量,此时rs控制器会通过删除pod方法,让app=rs-demo标签的pod和用户期望的pod数量保持一致;

Deployment 副本控制器,详细说明请参考https://www.cnblogs.com/qiuhom-1874/p/14149042.html;

Deployment副本控制器时k8s第三代pod副本控制器,该控制器比rs控制器更高级,除了有rs的功能之外,还有很多高级功能,,比如说最重要的滚动升级、回滚等;

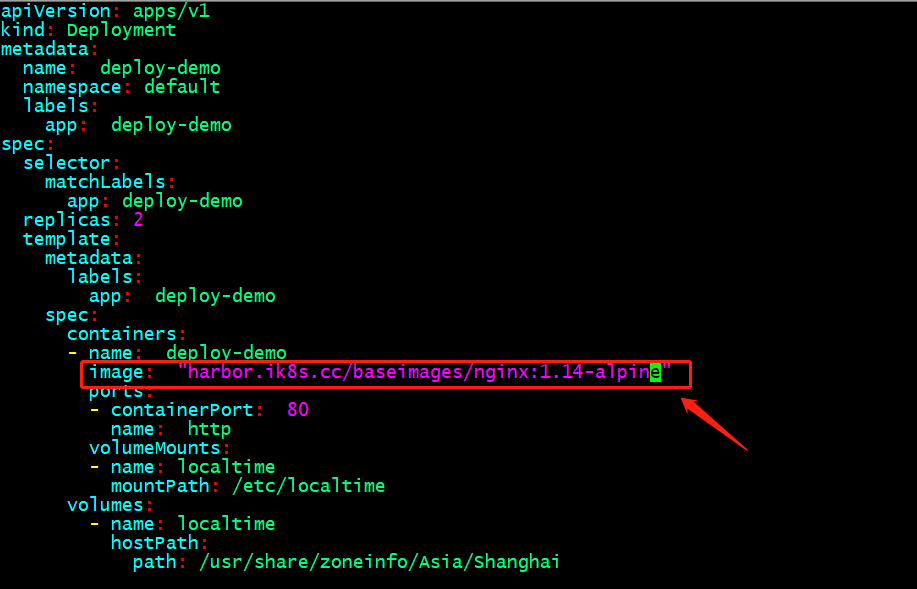

deploy控制器示例

- apiVersion: apps/v1

- kind: Deployment

- metadata:

- name: deploy-demo

- namespace: default

- labels:

- app: deploy-demo

- spec:

- selector:

- matchLabels:

- app: deploy-demo

- replicas: 2

- template:

- metadata:

- labels:

- app: deploy-demo

- spec:

- containers:

- - name: deploy-demo

- image: "harbor.ik8s.cc/baseimages/nginx:v1"

- ports:

- - containerPort: 80

- name: http

- volumeMounts:

- - name: localtime

- mountPath: /etc/localtime

- volumes:

- - name: localtime

- hostPath:

- path: /usr/share/zoneinfo/Asia/Shanghai

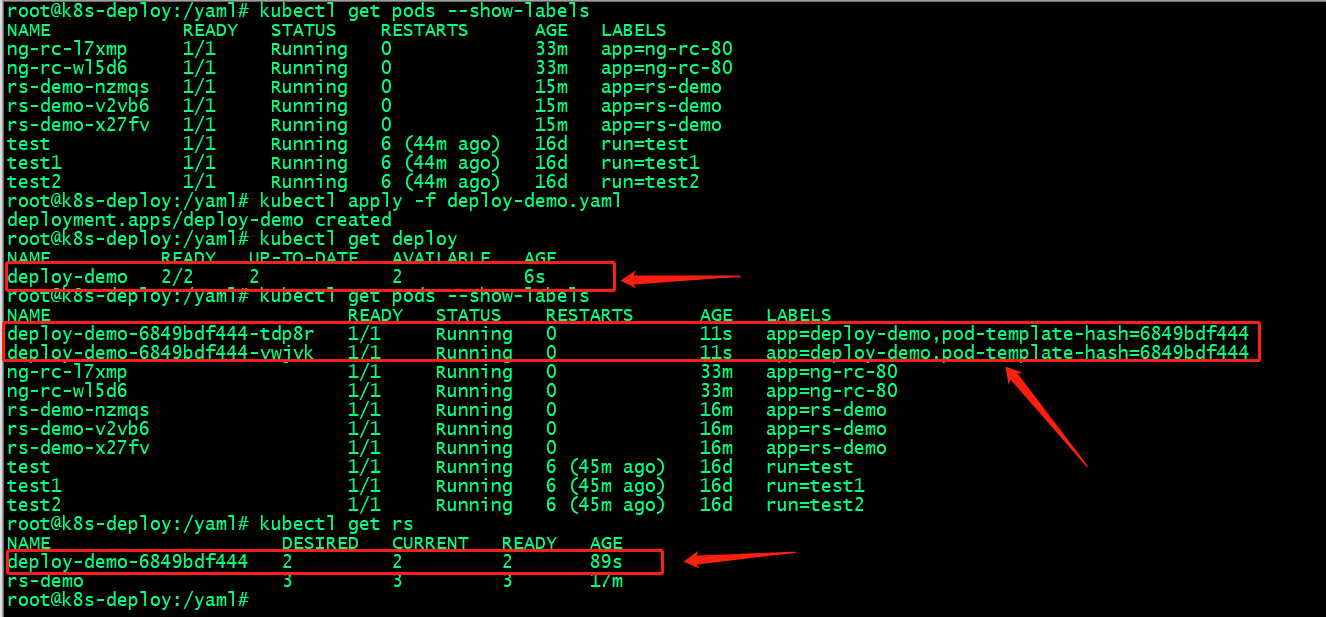

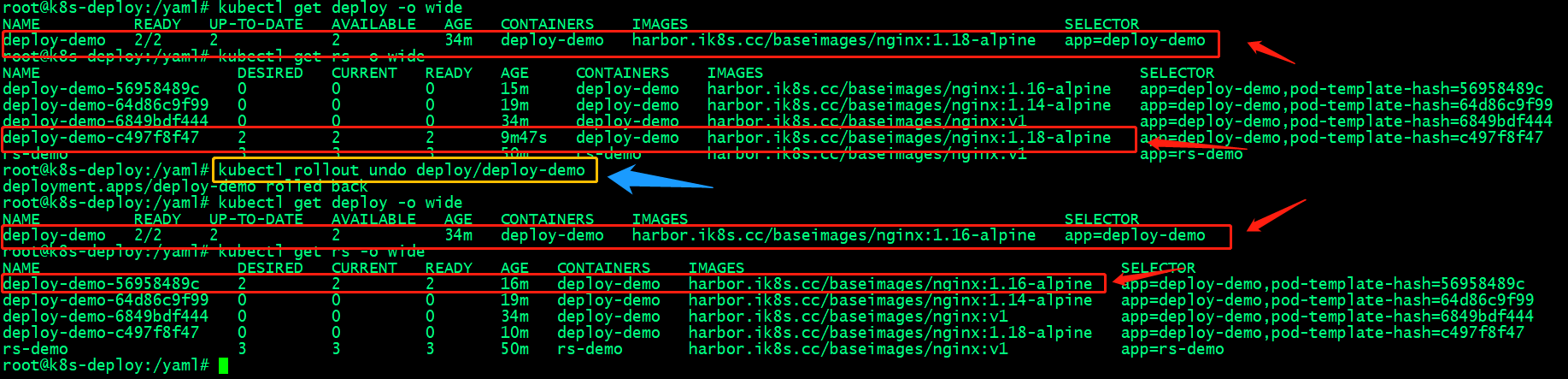

应用配置清单

提示:deploy控制器是通过创建rs控制器来实现管控对应pod数量;

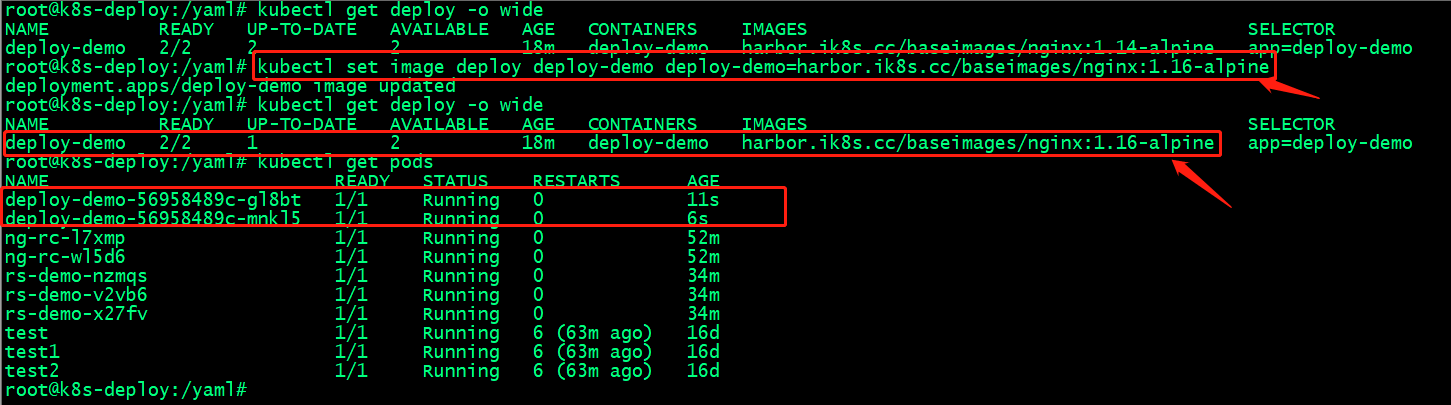

通过修改镜像版本来更新pod版本

应用配置清单

使用命令更新pod版本

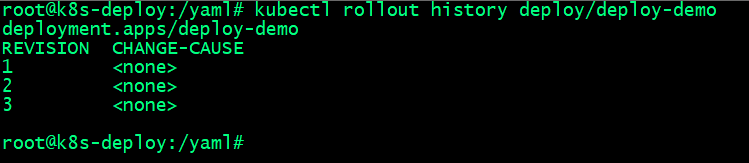

查看rs更新历史版本

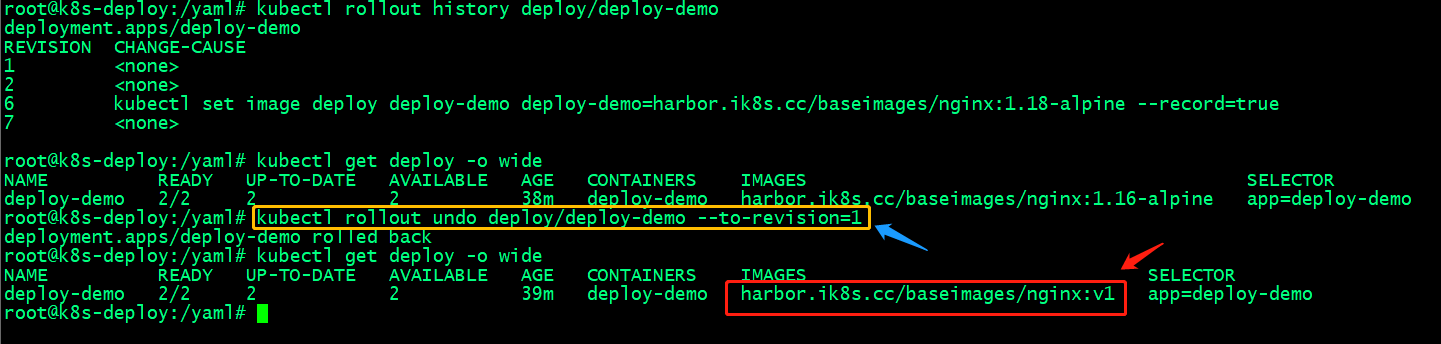

查看更新历史记录

提示:这里历史记录中没有记录版本信息,原因是默认不记录,需要记录历史版本,可以手动使用--record选项来记录版本信息;如下所示

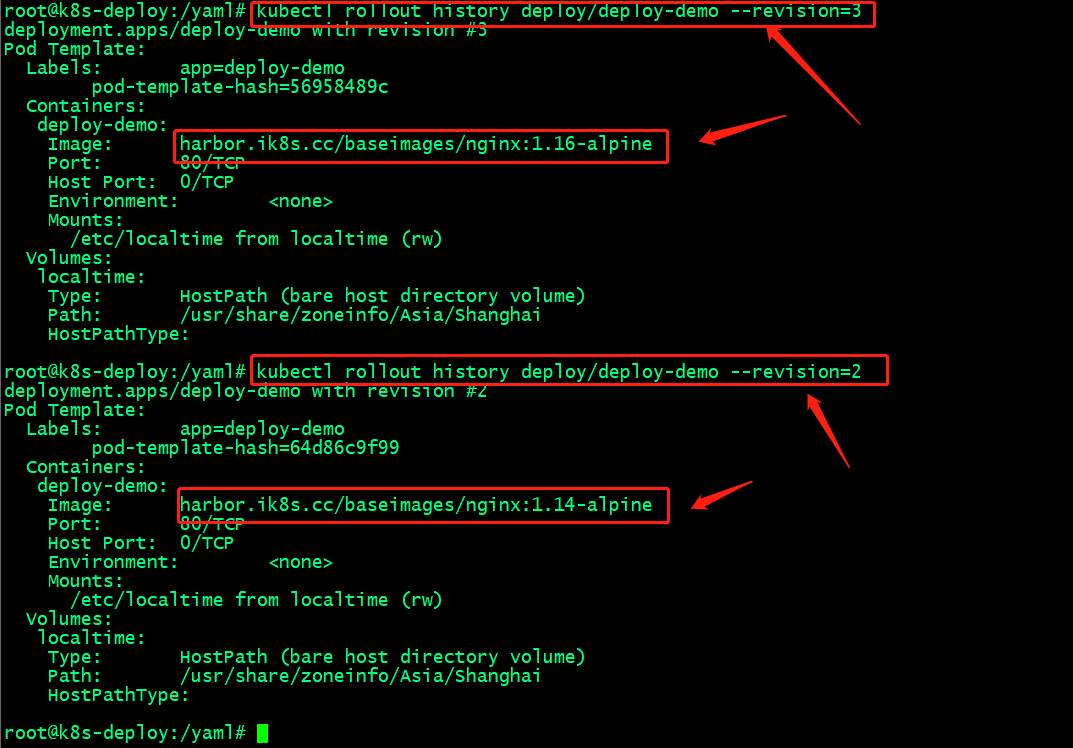

查看某个历史版本的详细信息

提示:查看某个历史版本的详细信息,加上--revision=对应历史版本的编号即可;

回滚到上一个版本

提示:使用kubectl rollout undo 命令可以将对应deploy回滚到上一个版本;

回滚指定编号的历史版本

提示:使用--to-revision选项来指定对应历史版本编号,即可回滚到对应编号的历史版本;

Service资源,详细说明请参考https://www.cnblogs.com/qiuhom-1874/p/14161950.html;

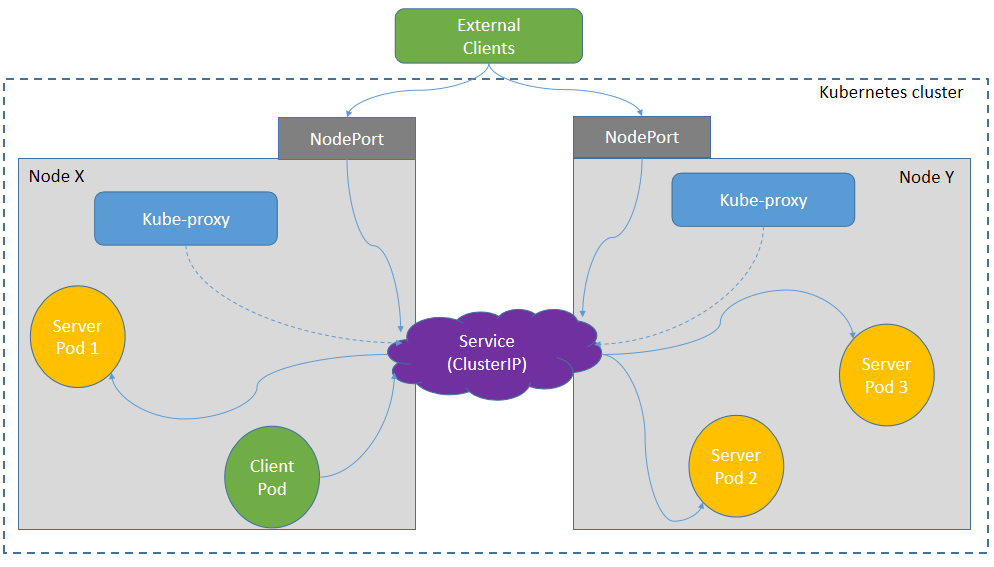

nodeport类型的service访问流程

nodeport类型service主要解决了k8s集群外部客户端访问pod,其流程是外部客户端访问k8s集群任意node节点的对应暴露的端口,被访问的node或通过本机的iptables或ipvs规则将外部客户端流量转发给对应pod之上,从而实现外部客户端访问k8s集群pod的目的;通常使用nodeport类型service为了方便外部客户端访问,都会在集群外部部署一个负载均衡器,即外部客户端访问对应负载均衡器的对应端口,通过负载均衡器将外部客户端流量引入k8s集群,从而完成对pod的访问;

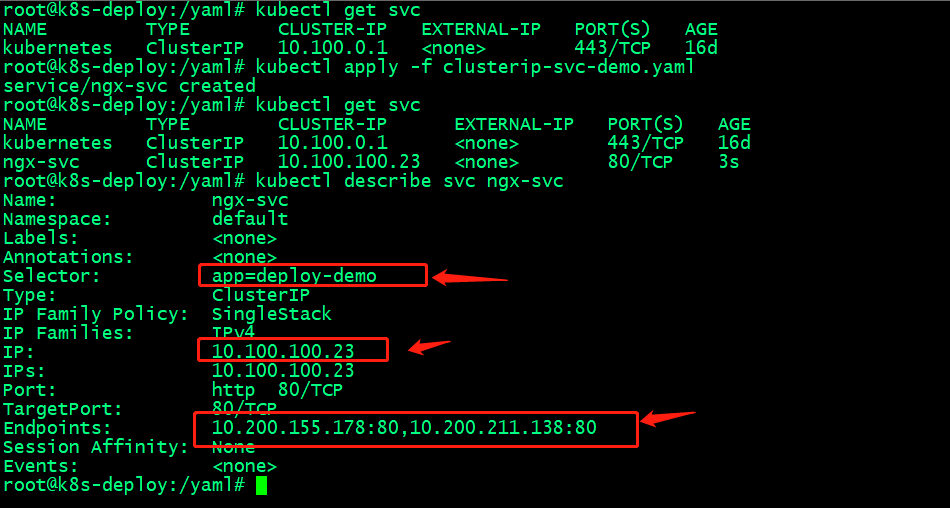

ClusterIP类型svc示例

- apiVersion: v1

- kind: Service

- metadata:

- name: ngx-svc

- namespace: default

- spec:

- selector:

- app: deploy-demo

- type: ClusterIP

- ports:

- - name: http

- protocol: TCP

- port: 80

- targetPort: 80

应用配置清单

提示:可以看到创建clusterip类型service以后,对应svc会有一个clusterip,后端endpoints会通过标签选择器去关联对应pod,即我们访问对应svc的clusterip,对应流量会被转发至后端endpoint pod之上进行响应;不过这种clusterip类型svc只能在k8s集群内部客户端访问,集群外部客户端是访问不到的,原因是这个clusterip是k8s内部网络IP地址;

验证,访问10.100.100.23的80端口,看看对应后端nginxpod是否可以正常被访问呢?

- root@k8s-node01:~# curl 10.100.100.23

- <!DOCTYPE html>

- <html>

- <head>

- <title>Welcome to nginx!</title>

- <style>

- html { color-scheme: light dark; }

- body { width: 35em; margin: 0 auto;

- font-family: Tahoma, Verdana, Arial, sans-serif; }

- </style>

- </head>

- <body>

- <h1>Welcome to nginx!</h1>

- <p>If you see this page, the nginx web server is successfully installed and

- working. Further configuration is required.</p>

- <p>For online documentation and support please refer to

- <a href="http://nginx.org/">nginx.org</a>.<br/>

- Commercial support is available at

- <a href="http://nginx.com/">nginx.com</a>.</p>

- <p><em>Thank you for using nginx.</em></p>

- </body>

- </html>

- root@k8s-node01:~#

nodeport类型service示例

- apiVersion: v1

- kind: Service

- metadata:

- name: ngx-nodeport-svc

- namespace: default

- spec:

- selector:

- app: deploy-demo

- type: NodePort

- ports:

- - name: http

- protocol: TCP

- port: 80

- targetPort: 80

- nodePort: 30012

提示:nodeport类型service只需要在clusterip类型的svc之上修改type为NodePort,然后再ports字段下用nodePort指定对应node端口即可;

应用配置清单

- root@k8s-deploy:/yaml# kubectl apply -f nodeport-svc-demo.yaml

- service/ngx-nodeport-svc created

- root@k8s-deploy:/yaml# kubectl get svc

- NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

- kubernetes ClusterIP 10.100.0.1 <none> 443/TCP 16d

- ngx-nodeport-svc NodePort 10.100.209.225 <none> 80:30012/TCP 11s

- root@k8s-deploy:/yaml# kubectl describe svc ngx-nodeport-svc

- Name: ngx-nodeport-svc

- Namespace: default

- Labels: <none>

- Annotations: <none>

- Selector: app=deploy-demo

- Type: NodePort

- IP Family Policy: SingleStack

- IP Families: IPv4

- IP: 10.100.209.225

- IPs: 10.100.209.225

- Port: http 80/TCP

- TargetPort: 80/TCP

- NodePort: http 30012/TCP

- Endpoints: 10.200.155.178:80,10.200.211.138:80

- Session Affinity: None

- External Traffic Policy: Cluster

- Events: <none>

- root@k8s-deploy:/yaml#

验证:访问k8s集群任意node的30012端口,看看对应nginxpod是否能够被访问到?

- root@k8s-deploy:/yaml# curl 192.168.0.34:30012

- <!DOCTYPE html>

- <html>

- <head>

- <title>Welcome to nginx!</title>

- <style>

- html { color-scheme: light dark; }

- body { width: 35em; margin: 0 auto;

- font-family: Tahoma, Verdana, Arial, sans-serif; }

- </style>

- </head>

- <body>

- <h1>Welcome to nginx!</h1>

- <p>If you see this page, the nginx web server is successfully installed and

- working. Further configuration is required.</p>

- <p>For online documentation and support please refer to

- <a href="http://nginx.org/">nginx.org</a>.<br/>

- Commercial support is available at

- <a href="http://nginx.com/">nginx.com</a>.</p>

- <p><em>Thank you for using nginx.</em></p>

- </body>

- </html>

- root@k8s-deploy:/yaml#

提示:可以看到k8s外部客户端访问k8snode节点的30012端口是能够正常访问到nginxpod;当然集群内部的客户端是可以通过对应生成的clusterip进行访问的;

- root@k8s-node01:~# curl 10.100.209.225:30012

- curl: (7) Failed to connect to 10.100.209.225 port 30012 after 0 ms: Connection refused

- root@k8s-node01:~# curl 127.0.0.1:30012

- curl: (7) Failed to connect to 127.0.0.1 port 30012 after 0 ms: Connection refused

- root@k8s-node01:~# curl 192.168.0.34:30012

- <!DOCTYPE html>

- <html>

- <head>

- <title>Welcome to nginx!</title>

- <style>

- html { color-scheme: light dark; }

- body { width: 35em; margin: 0 auto;

- font-family: Tahoma, Verdana, Arial, sans-serif; }

- </style>

- </head>

- <body>

- <h1>Welcome to nginx!</h1>

- <p>If you see this page, the nginx web server is successfully installed and

- working. Further configuration is required.</p>

- <p>For online documentation and support please refer to

- <a href="http://nginx.org/">nginx.org</a>.<br/>

- Commercial support is available at

- <a href="http://nginx.com/">nginx.com</a>.</p>

- <p><em>Thank you for using nginx.</em></p>

- </body>

- </html>

- root@k8s-node01:~#

提示:集群内部客户端只能访问clusterip的80端口,或者访问node的对外IP的30012端口;

Volume资源,详细说明请参考https://www.cnblogs.com/qiuhom-1874/p/14180752.html;

pod挂载nfs的使用

在nfs服务器上准备数据目录

- root@harbor:~# cat /etc/exports

- # /etc/exports: the access control list for filesystems which may be exported

- # to NFS clients. See exports(5).

- #

- # Example for NFSv2 and NFSv3:

- # /srv/homes hostname1(rw,sync,no_subtree_check) hostname2(ro,sync,no_subtree_check)

- #

- # Example for NFSv4:

- # /srv/nfs4 gss/krb5i(rw,sync,fsid=0,crossmnt,no_subtree_check)

- # /srv/nfs4/homes gss/krb5i(rw,sync,no_subtree_check)

- #

- /data/k8sdata/kuboard *(rw,no_root_squash)

- /data/volumes *(rw,no_root_squash)

- /pod-vol *(rw,no_root_squash)

- root@harbor:~# mkdir -p /pod-vol

- root@harbor:~# ls /pod-vol -d

- /pod-vol

- root@harbor:~# exportfs -av

- exportfs: /etc/exports [1]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/kuboard".

- Assuming default behaviour ('no_subtree_check').

- NOTE: this default has changed since nfs-utils version 1.0.x

- exportfs: /etc/exports [2]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/volumes".

- Assuming default behaviour ('no_subtree_check').

- NOTE: this default has changed since nfs-utils version 1.0.x

- exportfs: /etc/exports [3]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/pod-vol".

- Assuming default behaviour ('no_subtree_check').

- NOTE: this default has changed since nfs-utils version 1.0.x

- exporting *:/pod-vol

- exporting *:/data/volumes

- exporting *:/data/k8sdata/kuboard

- root@harbor:~#

在pod上挂载nfs目录

- apiVersion: apps/v1

- kind: Deployment

- metadata:

- name: ngx-nfs-80

- namespace: default

- labels:

- app: ngx-nfs-80

- spec:

- selector:

- matchLabels:

- app: ngx-nfs-80

- replicas: 1

- template:

- metadata:

- labels:

- app: ngx-nfs-80

- spec:

- containers:

- - name: ngx-nfs-80

- image: "harbor.ik8s.cc/baseimages/nginx:v1"

- resources:

- requests:

- cpu: 100m

- memory: 100Mi

- limits:

- cpu: 100m

- memory: 100Mi

- ports:

- - containerPort: 80

- name: ngx-nfs-80

- volumeMounts:

- - name: localtime

- mountPath: /etc/localtime

- - name: nfs-vol

- mountPath: /usr/share/nginx/html/

- volumes:

- - name: localtime

- hostPath:

- path: /usr/share/zoneinfo/Asia/Shanghai

- - name: nfs-vol

- nfs:

- server: 192.168.0.42

- path: /pod-vol

- restartPolicy: Always

- ---

- apiVersion: v1

- kind: Service

- metadata:

- name: ngx-nfs-svc

- namespace: default

- spec:

- selector:

- app: ngx-nfs-80

- type: NodePort

- ports:

- - name: ngx-nfs-svc

- protocol: TCP

- port: 80

- targetPort: 80

- nodePort: 30013

应用配置清单

- root@k8s-deploy:/yaml# kubectl apply -f nfs-vol.yaml

- deployment.apps/ngx-nfs-80 created

- service/ngx-nfs-svc created

- root@k8s-deploy:/yaml# kubectl get pods

- NAME READY STATUS RESTARTS AGE

- deploy-demo-6849bdf444-pvsc9 1/1 Running 1 (57m ago) 46h

- deploy-demo-6849bdf444-sg8fz 1/1 Running 1 (57m ago) 46h

- ng-rc-l7xmp 1/1 Running 1 (57m ago) 47h

- ng-rc-wl5d6 1/1 Running 1 (57m ago) 47h

- ngx-nfs-80-66c9697cf4-8pm9k 1/1 Running 0 7s

- rs-demo-nzmqs 1/1 Running 1 (57m ago) 47h

- rs-demo-v2vb6 1/1 Running 1 (57m ago) 47h

- rs-demo-x27fv 1/1 Running 1 (57m ago) 47h

- test 1/1 Running 7 (57m ago) 17d

- test1 1/1 Running 7 (57m ago) 17d

- test2 1/1 Running 7 (57m ago) 17d

- root@k8s-deploy:/yaml# kubectl get svc

- NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

- kubernetes ClusterIP 10.100.0.1 <none> 443/TCP 18d

- ngx-nfs-svc NodePort 10.100.16.14 <none> 80:30013/TCP 15s

- ngx-nodeport-svc NodePort 10.100.209.225 <none> 80:30012/TCP 45h

- root@k8s-deploy:/yaml#

在nfs服务器上/pod-vol目录下提供index.html文件

- root@harbor:~# echo "this page from nfs server.." >> /pod-vol/index.html

- root@harbor:~# cat /pod-vol/index.html

- this page from nfs server..

- root@harbor:~#

访问pod,看看nfs服务器上的inde.html是否能够正常访问到?

- root@k8s-deploy:/yaml# curl 192.168.0.35:30013

- this page from nfs server..

- root@k8s-deploy:/yaml#

提示:能够看到访问pod对应返回的页面就是刚才在nfs服务器上创建的页面,说明pod正常挂载了nfs提供的目录;

PV、PVC资源,详细说明请参考https://www.cnblogs.com/qiuhom-1874/p/14188621.html;

nfs实现静态pvc的使用

在nfs服务器上准备目录

- root@harbor:~# cat /etc/exports

- # /etc/exports: the access control list for filesystems which may be exported

- # to NFS clients. See exports(5).

- #

- # Example for NFSv2 and NFSv3:

- # /srv/homes hostname1(rw,sync,no_subtree_check) hostname2(ro,sync,no_subtree_check)

- #

- # Example for NFSv4:

- # /srv/nfs4 gss/krb5i(rw,sync,fsid=0,crossmnt,no_subtree_check)

- # /srv/nfs4/homes gss/krb5i(rw,sync,no_subtree_check)

- #

- /data/k8sdata/kuboard *(rw,no_root_squash)

- /data/volumes *(rw,no_root_squash)

- /pod-vol *(rw,no_root_squash)

- /data/k8sdata/myserver/myappdata *(rw,no_root_squash)

- root@harbor:~# mkdir -p /data/k8sdata/myserver/myappdata

- root@harbor:~# exportfs -av

- exportfs: /etc/exports [1]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/kuboard".

- Assuming default behaviour ('no_subtree_check').

- NOTE: this default has changed since nfs-utils version 1.0.x

- exportfs: /etc/exports [2]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/volumes".

- Assuming default behaviour ('no_subtree_check').

- NOTE: this default has changed since nfs-utils version 1.0.x

- exportfs: /etc/exports [3]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/pod-vol".

- Assuming default behaviour ('no_subtree_check').

- NOTE: this default has changed since nfs-utils version 1.0.x

- exportfs: /etc/exports [4]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/myserver/myappdata".

- Assuming default behaviour ('no_subtree_check').

- NOTE: this default has changed since nfs-utils version 1.0.x

- exporting *:/data/k8sdata/myserver/myappdata

- exporting *:/pod-vol

- exporting *:/data/volumes

- exporting *:/data/k8sdata/kuboard

- root@harbor:~#

创建pv

- apiVersion: v1

- kind: PersistentVolume

- metadata:

- name: myapp-static-pv

- namespace: default

spec:- capacity:

- storage: 2Gi

- accessModes:

- - ReadWriteOnce

- nfs:

- path: /data/k8sdata/myserver/myappdata

- server: 192.168.0.42

创建pvc关联pv

- apiVersion: v1

- kind: PersistentVolumeClaim

- metadata:

- name: myapp-static-pvc

- namespace: default

- spec:

- volumeName: myapp-static-pv

- accessModes:

- - ReadWriteOnce

- resources:

- requests:

- storage: 2Gi

创建pod使用pvc

- apiVersion: apps/v1

- kind: Deployment

- metadata:

- name: ngx-nfs-pvc-80

- namespace: default

- labels:

- app: ngx-pvc-80

- spec:

- selector:

- matchLabels:

- app: ngx-pvc-80

- replicas: 1

- template:

- metadata:

- labels:

- app: ngx-pvc-80

- spec:

- containers:

- - name: ngx-pvc-80

- image: "harbor.ik8s.cc/baseimages/nginx:v1"

- resources:

- requests:

- cpu: 100m

- memory: 100Mi

- limits:

- cpu: 100m

- memory: 100Mi

- ports:

- - containerPort: 80

- name: ngx-pvc-80

- volumeMounts:

- - name: localtime

- mountPath: /etc/localtime

- - name: data-pvc

- mountPath: /usr/share/nginx/html/

- volumes:

- - name: localtime

- hostPath:

- path: /usr/share/zoneinfo/Asia/Shanghai

- - name: data-pvc

- persistentVolumeClaim:

- claimName: myapp-static-pvc

- ---

- apiVersion: v1

- kind: Service

- metadata:

- name: ngx-pvc-svc

- namespace: default

- spec:

- selector:

- app: ngx-pvc-80

- type: NodePort

- ports:

- - name: ngx-nfs-svc

- protocol: TCP

- port: 80

- targetPort: 80

- nodePort: 30014

应用上述配置清单

- root@k8s-deploy:/yaml# kubectl apply -f nfs-static-pvc-demo.yaml

- persistentvolume/myapp-static-pv created

- persistentvolumeclaim/myapp-static-pvc created

- deployment.apps/ngx-nfs-pvc-80 created

- service/ngx-pvc-svc created

- root@k8s-deploy:/yaml# kubectl get pv

- NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

- myapp-static-pv 2Gi RWO Retain Bound default/myapp-static-pvc 4s

- root@k8s-deploy:/yaml# kubectl get pvc

- NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

- myapp-static-pvc Pending myapp-static-pv 0 7s

- root@k8s-deploy:/yaml# kubectl get pods

- NAME READY STATUS RESTARTS AGE

- deploy-demo-6849bdf444-pvsc9 1/1 Running 1 (151m ago) 47h

- deploy-demo-6849bdf444-sg8fz 1/1 Running 1 (151m ago) 47h

- ng-rc-l7xmp 1/1 Running 1 (151m ago) 2d1h

- ng-rc-wl5d6 1/1 Running 1 (151m ago) 2d1h

- ngx-nfs-pvc-80-f776bb6d-nwwwq 0/1 Pending 0 10s

- rs-demo-nzmqs 1/1 Running 1 (151m ago) 2d

- rs-demo-v2vb6 1/1 Running 1 (151m ago) 2d

- rs-demo-x27fv 1/1 Running 1 (151m ago) 2d

- test 1/1 Running 7 (151m ago) 18d

- test1 1/1 Running 7 (151m ago) 18d

- test2 1/1 Running 7 (151m ago) 18d

- root@k8s-deploy:/yaml#

在nfs服务器上/data/k8sdata/myserver/myappdata创建index.html,看看对应主页是否能够被访问?

- root@harbor:~# echo "this page from nfs-server /data/k8sdata/myserver/myappdata/index.html" >> /data/k8sdata/myserver/myappdata/index.html

- root@harbor:~# cat /data/k8sdata/myserver/myappdata/index.html

- this page from nfs-server /data/k8sdata/myserver/myappdata/index.html

- root@harbor:~#

访问pod

- root@harbor:~# curl 192.168.0.36:30014

- this page from nfs-server /data/k8sdata/myserver/myappdata/index.html

- root@harbor:~#

nfs实现动态pvc的使用

创建名称空间、服务账号、clusterrole、clusterrolebindding、role、rolebinding

- apiVersion: v1

- kind: Namespace

- metadata:

- name: nfs

- ---

- apiVersion: v1

- kind: ServiceAccount

- metadata:

- name: nfs-client-provisioner

- # replace with namespace where provisioner is deployed

- namespace: nfs

- ---

- kind: ClusterRole

- apiVersion: rbac.authorization.k8s.io/v1

- metadata:

- name: nfs-client-provisioner-runner

- rules:

- - apiGroups: [""]

- resources: ["nodes"]

- verbs: ["get", "list", "watch"]

- - apiGroups: [""]

- resources: ["persistentvolumes"]

- verbs: ["get", "list", "watch", "create", "delete"]

- - apiGroups: [""]

- resources: ["persistentvolumeclaims"]

- verbs: ["get", "list", "watch", "update"]

- - apiGroups: ["storage.k8s.io"]

- resources: ["storageclasses"]

- verbs: ["get", "list", "watch"]

- - apiGroups: [""]

- resources: ["events"]

- verbs: ["create", "update", "patch"]

- ---

- kind: ClusterRoleBinding

- apiVersion: rbac.authorization.k8s.io/v1

- metadata:

- name: run-nfs-client-provisioner

- subjects:

- - kind: ServiceAccount

- name: nfs-client-provisioner

- # replace with namespace where provisioner is deployed

- namespace: nfs

- roleRef:

- kind: ClusterRole

- name: nfs-client-provisioner-runner

- apiGroup: rbac.authorization.k8s.io

- ---

- kind: Role

- apiVersion: rbac.authorization.k8s.io/v1

- metadata:

- name: leader-locking-nfs-client-provisioner

- # replace with namespace where provisioner is deployed

- namespace: nfs

- rules:

- - apiGroups: [""]

- resources: ["endpoints"]

- verbs: ["get", "list", "watch", "create", "update", "patch"]

- ---

- kind: RoleBinding

- apiVersion: rbac.authorization.k8s.io/v1

- metadata:

- name: leader-locking-nfs-client-provisioner

- # replace with namespace where provisioner is deployed

- namespace: nfs

- subjects:

- - kind: ServiceAccount

- name: nfs-client-provisioner

- # replace with namespace where provisioner is deployed

- namespace: nfs

- roleRef:

- kind: Role

- name: leader-locking-nfs-client-provisioner

- apiGroup: rbac.authorization.k8s.io

创建sc

- apiVersion: storage.k8s.io/v1

- kind: StorageClass

- metadata:

- name: managed-nfs-storage

- provisioner: k8s-sigs.io/nfs-subdir-external-provisioner # or choose another name, must match deployment's env PROVISIONER_NAME'

- reclaimPolicy: Retain #PV的删除策略,默认为delete,删除PV后立即删除NFS server的数据

- mountOptions:

- #- vers=4.1 #containerd有部分参数异常

- #- noresvport #告知NFS客户端在重新建立网络连接时,使用新的传输控制协议源端口

- - noatime #访问文件时不更新文件inode中的时间戳,高并发环境可提高性能

- parameters:

- #mountOptions: "vers=4.1,noresvport,noatime"

- archiveOnDelete: "true" #删除pod时保留pod数据,默认为false时为不保留数据

创建provision

- apiVersion: apps/v1

- kind: Deployment

- metadata:

- name: nfs-client-provisioner

- labels:

- app: nfs-client-provisioner

- # replace with namespace where provisioner is deployed

- namespace: nfs

- spec:

- replicas: 1

- strategy: #部署策略

- type: Recreate

- selector:

- matchLabels:

- app: nfs-client-provisioner

- template:

- metadata:

- labels:

- app: nfs-client-provisioner

- spec:

- serviceAccountName: nfs-client-provisioner

- containers:

- - name: nfs-client-provisioner

- #image: k8s.gcr.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2

- image: registry.cn-qingdao.aliyuncs.com/zhangshijie/nfs-subdir-external-provisioner:v4.0.2

- volumeMounts:

- - name: nfs-client-root

- mountPath: /persistentvolumes

- env:

- - name: PROVISIONER_NAME

- value: k8s-sigs.io/nfs-subdir-external-provisioner

- - name: NFS_SERVER

- value: 192.168.0.42

- - name: NFS_PATH

- value: /data/volumes

- volumes:

- - name: nfs-client-root

- nfs:

- server: 192.168.0.42

- path: /data/volumes

调用sc创建pvc

- apiVersion: v1

- kind: Namespace

- metadata:

- name: myserver

- ---

- # Test PVC

- kind: PersistentVolumeClaim

- apiVersion: v1

- metadata:

- name: myserver-myapp-dynamic-pvc

- namespace: myserver

- spec:

- storageClassName: managed-nfs-storage #调用的storageclass 名称

- accessModes:

- - ReadWriteMany #访问权限

- resources:

- requests:

- storage: 500Mi #空间大小

创建app使用pvc

- kind: Deployment

- #apiVersion: extensions/v1beta1

- apiVersion: apps/v1

- metadata:

- labels:

- app: myserver-myapp

- name: myserver-myapp-deployment-name

- namespace: myserver

- spec:

- replicas: 1

- selector:

- matchLabels:

- app: myserver-myapp-frontend

- template:

- metadata:

- labels:

- app: myserver-myapp-frontend

- spec:

- containers:

- - name: myserver-myapp-container

- image: nginx:1.20.0

- #imagePullPolicy: Always

- volumeMounts:

- - mountPath: "/usr/share/nginx/html/statics"

- name: statics-datadir

- volumes:

- - name: statics-datadir

- persistentVolumeClaim:

- claimName: myserver-myapp-dynamic-pvc

- ---

- kind: Service

- apiVersion: v1

- metadata:

- labels:

- app: myserver-myapp-service

- name: myserver-myapp-service-name

- namespace: myserver

- spec:

- type: NodePort

- ports:

- - name: http

- port: 80

- targetPort: 80

- nodePort: 30015

- selector:

- app: myserver-myapp-frontend

应用上述配置清单

- root@k8s-deploy:/yaml/myapp# kubectl apply -f .

- namespace/nfs created

- serviceaccount/nfs-client-provisioner created

- clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created

- clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created

- role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

- rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

- storageclass.storage.k8s.io/managed-nfs-storage created

- deployment.apps/nfs-client-provisioner created

- namespace/myserver created

- persistentvolumeclaim/myserver-myapp-dynamic-pvc created

- deployment.apps/myserver-myapp-deployment-name created

- service/myserver-myapp-service-name created

- root@k8s-deploy:

验证:查看sc、pv、pvc是否创建?pod是否正常运行?

- root@k8s-deploy:/yaml/myapp# kubectl get sc

- NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

- managed-nfs-storage k8s-sigs.io/nfs-subdir-external-provisioner Retain Immediate false 105s

- root@k8s-deploy:/yaml/myapp# kubectl get pv

- NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

- pvc-01709c7f-0cf9-4554-9ae9-72db89e7308c 500Mi RWX Retain Bound myserver/myserver-myapp-dynamic-pvc managed-nfs-storage 107s

- root@k8s-deploy:/yaml/myapp# kubectl get pvc -n myserver

- NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

- myserver-myapp-dynamic-pvc Bound pvc-01709c7f-0cf9-4554-9ae9-72db89e7308c 500Mi RWX managed-nfs-storage 117s

- root@k8s-deploy:/yaml/myapp# kubectl get pods -n myserver

- NAME READY STATUS RESTARTS AGE

- myserver-myapp-deployment-name-65ff65446f-xpd5p 1/1 Running 0 2m8s

- root@k8s-deploy:/yaml/myapp#

提示:可以看到pv自动由sc创建,pvc自动和pv关联;

验证:在nfs服务器上的/data/volumes/下创建index.html文件,访问pod service,看看对应文件是否能够正常被访问到?

- root@harbor:/data/volumes# ls

- myserver-myserver-myapp-dynamic-pvc-pvc-01709c7f-0cf9-4554-9ae9-72db89e7308c

- root@harbor:/data/volumes# cd myserver-myserver-myapp-dynamic-pvc-pvc-01709c7f-0cf9-4554-9ae9-72db89e7308c/

- root@harbor:/data/volumes/myserver-myserver-myapp-dynamic-pvc-pvc-01709c7f-0cf9-4554-9ae9-72db89e7308c# ls

- root@harbor:/data/volumes/myserver-myserver-myapp-dynamic-pvc-pvc-01709c7f-0cf9-4554-9ae9-72db89e7308c# echo "this page from nfs-server /data/volumes" >> index.html

- root@harbor:/data/volumes/myserver-myserver-myapp-dynamic-pvc-pvc-01709c7f-0cf9-4554-9ae9-72db89e7308c# cat index.html

- this page from nfs-server /data/volumes

- root@harbor:/data/volumes/myserver-myserver-myapp-dynamic-pvc-pvc-01709c7f-0cf9-4554-9ae9-72db89e7308c#

提示:在nfs服务器上的/data/volumes目录下会自动生成一个使用pvcpod所在名称空间+pvc名字+pv名字的一个目录,这个目录就是由provision创建;

访问pod

- root@harbor:~# curl 192.168.0.36:30015/statics/index.html

- this page from nfs-server /data/volumes

- root@harbor:~#

提示:能够访问到我们刚才创建的文件,说明pod正常挂载nfs服务器对应目录;

PV/PVC总结

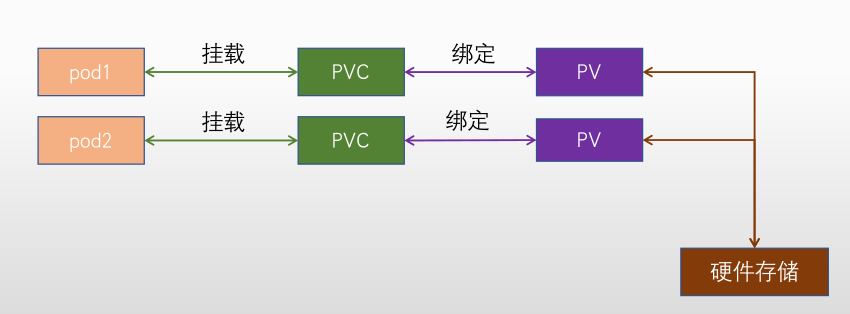

PV是对底层网络存储的抽象,即将网络存储定义为一种存储资源,将一个整体的存储资源拆分成多份后给不同的业务使用。

PVC是对PV资源的申请调用,pod是通过PVC将数据保存至PV,PV再把数据保存至真正的硬件存储。

PersistentVolume参数

Capacity: #当前PV空间大小,kubectl explain PersistentVolume.spec.capacity

accessModes :访问模式,#kubectl explain PersistentVolume.spec.accessModes

ReadWriteOnce – PV只能被单个节点以读写权限挂载,RWO

ReadOnlyMany – PV以可以被多个节点挂载但是权限是只读的,ROX

ReadWriteMany – PV可以被多个节点是读写方式挂载使用,RWX

persistentVolumeReclaimPolicy #删除机制即删除存储卷卷时候,已经创建好的存储卷由以下删除操作:

Retain – 删除PV后保持原装,最后需要管理员手动删除

Recycle – 空间回收,及删除存储卷上的所有数据(包括目录和隐藏文件),目前仅支持NFS和hostPath

Delete – 自动删除存储卷

volumeMode #卷类型,kubectl explain PersistentVolume.spec.volumeMode;定义存储卷使用的文件系统是块设备还是文件系统,默认为文件系统

mountOptions #附加的挂载选项列表,实现更精细的权限控制;

官方文档:持久卷 | Kubernetes;

PersistentVolumeClaim创建参数

accessModes :PVC 访问模式,#kubectl explain PersistentVolumeClaim.spec.volumeMode

ReadWriteOnce – PVC只能被单个节点以读写权限挂载,RWO

ReadOnlyMany – PVC以可以被多个节点挂载但是权限是只读的,ROX

ReadWriteMany – PVC可以被多个节点是读写方式挂载使用,RWX

resources: #定义PVC创建存储卷的空间大小

selector: #标签选择器,选择要绑定的PV

matchLabels #匹配标签名称

matchExpressions #基于正则表达式匹配

volumeName #要绑定的PV名称

volumeMode #卷类型,定义PVC使用的文件系统是块设备还是文件系统,默认为文件系统

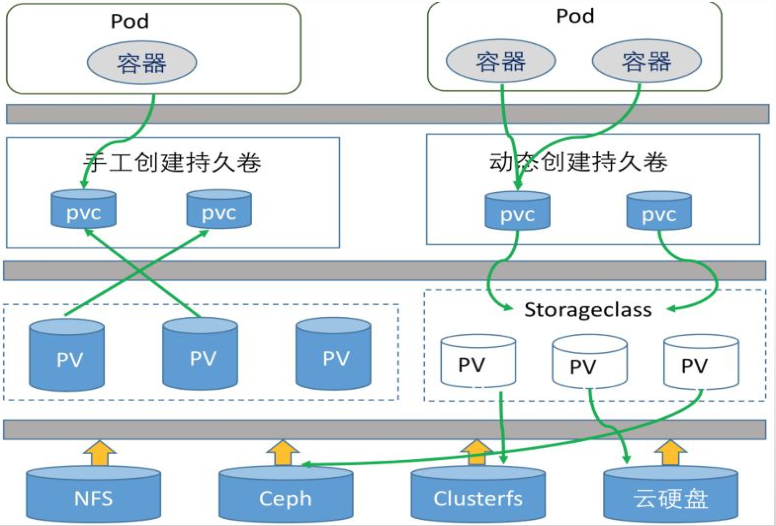

Volume- 存储卷类型

static:静态存储卷 ,需要在使用前手动创建PV、然后创建PVC并绑定到PV然后挂载至pod使用,适用于PV和PVC相对比较固定的业务场景。

dynamin:动态存储卷,先创建一个存储类storageclass,后期pod在使用PVC的时候可以通过存储类动态创建PVC,适用于有状态服务集群如MySQL一主多从、zookeeper集群等。

存储类官方文档:存储类 | Kubernetes

k8s资源对象的更多相关文章

- k8s资源对象及API群组

REST是representational state transfer的缩写,意为“表征状态转移”,它是一种程序架构风格,基本元素为资源(resource).表征(representation)和行 ...

- Prometheus 监控K8S 资源状态对象

Prometheus 监控K8S 资源状态对象 官方文档:https://github.com/kubernetes/kube-state-metrics kube-state-metrics是一个简 ...

- k8s管理pod资源对象(下)

一.标签与标签选择器 1.标签是k8s极具特色的功能之一,它能够附加于k8s的任何资源对象之上.简单来说,标签就是键值类型的数据,它们可于资源创建时直接指定,也可随时按需添加于活动对象中,而后即可由标 ...

- k8s管理pod资源对象(上)

一.容器于pod资源对象 现代的容器技术被设计用来运行单个进程时,该进程在容器中pid名称空间中的进程号为1,可直接接收并处理信号,于是,在此进程终止时,容器即终止退出.若要在一个容器中运行多个进程, ...

- 6.K8s集群升级、etcd备份和恢复、资源对象及其yaml文件使用总结、常用维护命令

1.K8s集群升级 集群升级有一定的风险,需充分测试验证后实施 集群升级需要停止服务,可以采用逐个节点滚动升级的方式 1.1 准备新版本二进制文件 查看现在的版本 root@k8-master1:~# ...

- k8s基本概念,资源对象

kubernetes里的master指的是集群控制节点 master负责是整个集群的管理和控制 kubernetes3大进程 API server 增删改查操作的关键入口 controller man ...

- [置顶]

kubernetes--优雅删除资源对象

当用户请求删除含有pod的资源对象时(如RC.deployment等),K8S为了让应用程序优雅关闭(即让应用程序完成正在处理的请求后,再关闭软件),K8S提供两种信息通知: 1).默认:K8S通知n ...

- [置顶]

kubernetes资源对象--Label

概念 Label机制是K8S中一个重要设计,通过Label进行对象弱关联,灵活地分类和选择不同服务或业务,让用户根据自己特定的组织结构以松耦合方式进行服务部署. Label是一对KV,对用户而言非常有 ...

- [置顶]

kubernetes资源对象--ResourceQuotas

概念 Resource Quotas(资源配额,简称quota)是对namespace进行资源配额,限制资源使用的一种策略. K8S是一个多用户架构,当多用户或者团队共享一个K8S系统时,SA使用qu ...

- [置顶]

kubernetes资源对象--ConfigMap

原理 很多生产环境中的应用程序配置较为复杂,可能需要多个config文件.命令行参数和环境变量的组合.使用容器部署时,把配置应该从应用程序镜像中解耦出来,以保证镜像的可移植性.尽管Secret允许类似 ...

随机推荐

- ZooKeeper启动报错,未成功开启服务

1.问题示例 (1)启动ZooKeeper服务报错 [Hadoop@master ~]$ zkServer.sh statusZooKeeper JMX enabled by defaultUsing ...

- Codeforces Round #803 (Div. 2) A-D 刚vp完还没补题

Codeforces Round #803 (Div. 2) 2022/7/24 上午VP 传送门:https://codeforces.com/contest/1698 A. XOR Mixup 随 ...

- 关于Electron环境配置与一些相关的错误解决

安装步骤: 1.安装NVM: 这个是nodejs的版本管理器,github上有一个nvm for windows,由于不能的nodejs的版本问题,可以自由进行切换. 下载地址:https://git ...

- ARFoundation在2019.2之后无法打包的问题

打包提示错误gradle无法完成打包.解决方案 转到首选项>外部工具> Android> Gradle ,然后将自定义Gradle设置为Gradle 5.6.4或更高版本.请参阅Gr ...

- 2021SWPUCTF-WEB(三)

error 双引号没有提示的注入,,那就是报错注入了,肯定是个恶心的东西呜呜呜 ?id=1' and updatexml(1,concat(0x7e,(select right(flag,30) ...

- CentOS7.6 单用户模式下修改root密码

第一种方法: 1.启动时用上下键选择要进入的内核,输入'e'进入编辑 2.可以使用上下键移动找到linux16这行编辑ro 为 rw init=/sysroot/bin/sh 并使用ctrl + x进 ...

- Android studio的基本使用--基础篇

一.新建项目 其实跟IDEA新建项目的流程基本一致,File->New->New project,这样就能够新建出来一个项目啦! 一般情况下,我们都会选择Empty Activity,之后 ...

- 【LeetCode贪心#12】图解监控二叉树(正宗hard题,涉及贪心分析、二叉树遍历以及状态转移)

监控二叉树 力扣题目链接(opens new window) 给定一个二叉树,我们在树的节点上安装摄像头. 节点上的每个摄影头都可以监视其父对象.自身及其直接子对象. 计算监控树的所有节点所需的最小摄 ...

- 深入理解 python 虚拟机:字节码灵魂——Code obejct

深入理解 python 虚拟机:字节码灵魂--Code obejct 在本篇文章当中主要给大家深入介绍在 cpython 当中非常重要的一个数据结构 code object! 在上一篇文章 深入理解 ...

- 聊一聊如何使用Crank给我们的类库做基准测试

目录 背景 什么是 Crank 入门示例 Pull Request 总结 参考资料 背景 当我们写了一个类库提供给别人使用时,我们可能会对它做一些基准测试来测试一下它的性能指标,好比内存分配等. 在 ...