Paper Reading_Computer Architecture

Lately (and, I suspect, for the coming year) I will be reading a great many papers, so I might as well start a post to record my notes on them.

Computer Architecture

Last Level Cache (LLC) Performance of Data Mining Workloads on a CMP: A Case Study of Parallel Bioinformatics Workloads

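This is an HPCA 2006 paper, but its methodology is still a useful reference today.

By analyzing how bioinformatics workloads perform on the last-level cache, the paper finds that many cache lines in these workloads are shared by multiple cores, so strategies that suit other workloads (such as partitioning the LLC into multiple private caches) do not work here.

The paper first introduces its workloads (I skipped this part) and its simulator (a Pin-based plug-in called SimCMPcache, which unfortunately is not open source).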

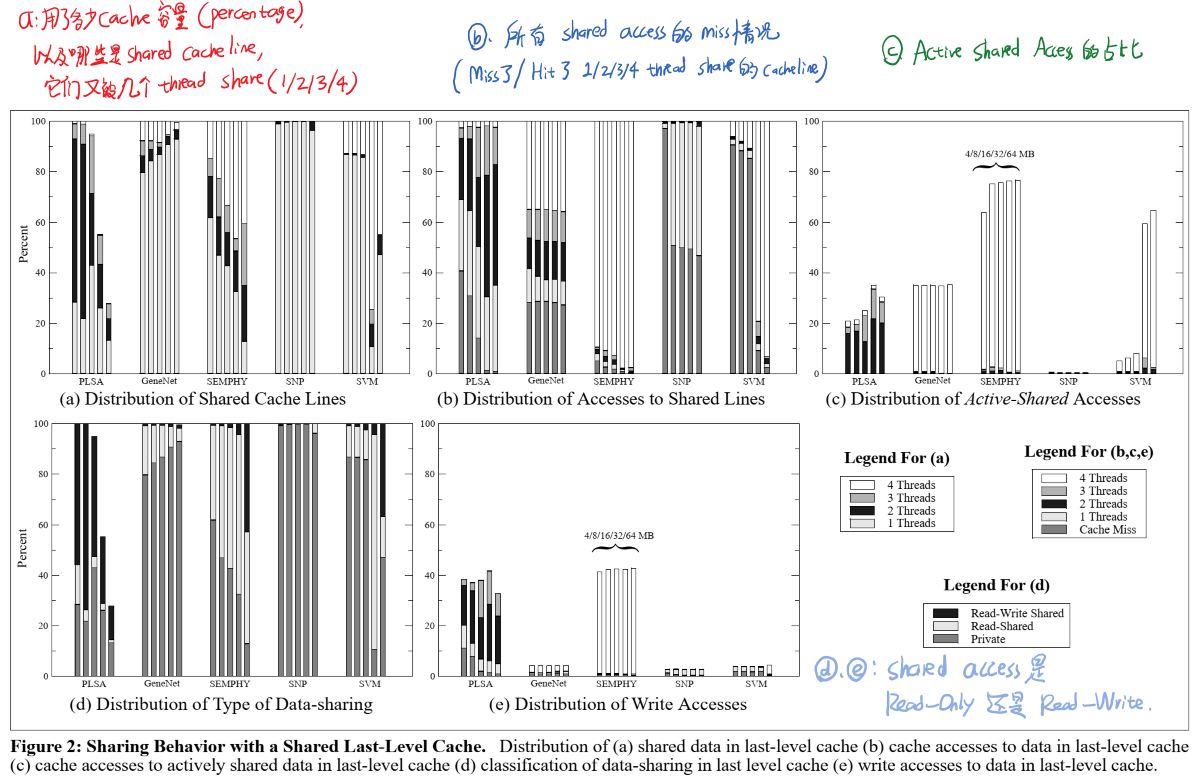

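To analyze how the LLC is used (the sharing behavior of parallel workloads), the paper defines the following three metrics:

- Shared Cache Line: a cache line shared by multiple threads (cores). These are further divided into read-only shared cache lines (only read, never written) and read-write shared cache lines (both read and written, commonly used for inter-thread communication). The goal is to find out how many cores share each cache line and whether it is read-only or read-write.

- Shared Access: a cache access that touches a shared cache line.

- Active-Shared Access: accesses to cache lines that several different cores access in turn.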
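The three metrics can be sketched on a toy access trace. This is only an illustration, not the paper's SimCMPcache tool: the trace format `(core_id, address, is_write)`, the function name `classify_sharing`, and the 64-byte line size are all assumptions, and "active-shared" is interpreted here as an access to a shared line whose previous access came from a different core.

```python
from collections import defaultdict


def classify_sharing(trace, line_size=64):
    """Compute sharing metrics from a list of (core_id, address, is_write).

    Hypothetical helper for illustration only.
    """
    cores_per_line = defaultdict(set)   # line -> set of cores that touched it
    written = defaultdict(bool)         # line -> True if any access wrote it

    # Pass 1: find out which lines are shared and whether they are written.
    for core, addr, is_write in trace:
        line = addr // line_size
        cores_per_line[line].add(core)
        written[line] = written[line] or is_write

    shared = {line for line, cores in cores_per_line.items() if len(cores) > 1}

    # Pass 2: count accesses to shared lines, and those where the previous
    # access to the same line came from a different core (assumed meaning
    # of "active-shared").
    last_core = {}
    shared_accesses = 0
    active_shared_accesses = 0
    for core, addr, _ in trace:
        line = addr // line_size
        if line in shared:
            shared_accesses += 1
            if line in last_core and last_core[line] != core:
                active_shared_accesses += 1
        last_core[line] = core

    return {
        "read_only_shared": {l for l in shared if not written[l]},
        "read_write_shared": {l for l in shared if written[l]},
        "shared_accesses": shared_accesses,
        "active_shared_accesses": active_shared_accesses,
        "total_accesses": len(trace),
    }


if __name__ == "__main__":
    demo = [(0, 0, False), (1, 0, False), (0, 64, True),
            (1, 64, False), (1, 64, False), (0, 128, True)]
    print(classify_sharing(demo))
```

In the demo trace, line 0 is read by two cores, line 1 is written by one core and read by another, and line 2 is private to core 0.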

Next come the experimental results. Let's first look at Fig. 2 (the five bars in each case correspond to the five LLC capacities tested: 4 MB, 8 MB, 16 MB, 32 MB, and 64 MB):

Managing shared last-level cache in a heterogeneous multicore processor

When a GPU and a CPU are integrated on the same die, they can share the same last-level cache (LLC). Because the GPU runs far more threads, it may dominate accesses to the shared LLC. In many scenarios, however, CPU applications are more sensitive to the LLC than GPU applications, which can often tolerate relatively high memory access latency. For CPU cores, a change in cache miss rate is a direct indicator of cache sensitivity. For GPU cores, an increase in cache miss rate does not necessarily degrade performance, because a GPU kernel can hide memory access latency by switching among a large number of concurrently active threads. The paper proposes a new cache management policy, HeLM, to address this.

Under HeLM, GPU memory accesses selectively bypass the LLC, so that cache-sensitive CPU applications can use a larger portion of the cache. GPU traffic bypasses the LLC when the GPU cores exhibit enough thread-level parallelism (TLP) to tolerate memory access latency, or when the GPU application is not sensitive to LLC performance.

The authors implement three techniques: (1) measuring the LLC sensitivity of the GPU and CPU applications; (2) determining the effective TLP threshold; and (3) a Threshold Selection Algorithm (TSA) that continuously monitors workload characteristics, re-evaluates the TLP threshold at the end of every sampling period, and then enforces this threshold on all GPU cores.
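The bypass condition described above can be written as a small predicate. This is a minimal sketch, not the paper's hardware design: `GpuCoreState`, `ready_threads` (used as a stand-in for available TLP), and both function names are hypothetical, and the TSA itself is not reproduced; the threshold is simply passed in.

```python
from dataclasses import dataclass


@dataclass
class GpuCoreState:
    """Hypothetical per-core state sampled during one period."""
    ready_threads: int      # assumed proxy for available TLP
    cache_sensitive: bool   # assumed outcome of the sensitivity measurement


def should_bypass_llc(core: GpuCoreState, tlp_threshold: int) -> bool:
    """Bypass when the core has enough TLP to hide latency,
    or when the GPU application is not sensitive to the LLC."""
    return (not core.cache_sensitive) or core.ready_threads >= tlp_threshold


def apply_threshold(cores, tlp_threshold):
    """Enforce one threshold on all GPU cores, as the notes describe."""
    return [should_bypass_llc(core, tlp_threshold) for core in cores]


if __name__ == "__main__":
    cores = [
        GpuCoreState(ready_threads=32, cache_sensitive=True),
        GpuCoreState(ready_threads=4, cache_sensitive=True),
        GpuCoreState(ready_threads=4, cache_sensitive=False),
    ]
    print(apply_threshold(cores, tlp_threshold=16))
```

Only the second core keeps using the LLC here: it is cache-sensitive and has too few ready threads to hide the extra latency.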

The authors evaluate HeLM against DRRIP, MAT, SDBP, and TAP-RRIP, with all results normalized to LRU. HeLM outperforms all of these mechanisms in overall system performance.

Adaptive Insertion Policies for High Performance Caching

The traditional LRU policy evicts the line at the LRU position and inserts the incoming line at the MRU position, which can cause thrashing when a memory-intensive workload has a working set larger than the available cache. The authors introduce a new mechanism, the Dynamic Insertion Policy, that protects the cache from thrashing with trivial overhead and improves the average cache hit rate.

1. The authors propose the LRU Insertion Policy (LIP), which inserts all incoming lines at the LRU position. The rationale is that a fraction of the working set can then stay in the cache and produce hits even when the working set exceeds the available cache size.

2. Building on LIP, the authors propose the Bimodal Insertion Policy (BIP), which behaves like LIP but occasionally inserts an incoming line at the MRU position, as traditional LRU does. Its bimodal throttle parameter e is a very small number that controls the fraction of incoming lines inserted at the MRU position.

3. They run experiments on 16 benchmarks to measure how much LIP and BIP (with e = 1/64, 1/32, and 1/16) reduce L2 MPKI. In general, BIP outperforms LIP, and the value of e has little effect on the result. LIP and BIP reduce MPKI by 10% or more for nine benchmarks. The other benchmarks are either LRU-friendly or have the knee of their MPKI curve below the cache size.

4. The authors further propose the Dynamic Insertion Policy (DIP), which dynamically chooses between BIP and LRU, whichever incurs fewer misses. It uses the main tag directory (MTD) of the cache, an auxiliary tag directory that follows traditional LRU (ATD-LRU), and one that follows BIP (ATD-BIP). The Policy Selector (PSEL) is a saturating counter that tracks which policy is winning. Tracking every set this way requires extra hardware, so Dynamic Set Sampling (DSS) is used.

The key idea of DSS is that cache behavior can be estimated with high probability by sampling a few sets. Building on it, Set Dueling keeps only the MTD and eliminates the need to store separate ATD entries. A complement-select policy identifies each set as either dedicated to one of the competing policies or a follower.

5. The experiments show that DIP reduces the MPKI of a 1MB 16-way L2 cache by 21% on average.
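The insertion policies above can be compared on a single cache set with a toy simulator. This is a sketch under stated assumptions, not the paper's evaluation setup: `simulate`, `PolicySelector`, the 4-way set, the cyclic trace, and the counter's starting value are all illustrative choices. The selector follows the PSEL idea: misses in LRU-dedicated sets push the counter up, misses in BIP-dedicated sets push it down, and follower sets use BIP when the most significant bit is set.

```python
import random


def simulate(trace, ways, policy, epsilon=1 / 32, seed=0):
    """Count hits for one cache set; policy is "lru", "lip", or "bip"."""
    rng = random.Random(seed)
    stack = []  # index 0 is the MRU position, the last index is LRU
    hits = 0
    for line in trace:
        if line in stack:
            hits += 1
            stack.remove(line)
            stack.insert(0, line)      # promote to MRU on a hit
            continue
        if len(stack) == ways:
            stack.pop()                # evict the line at the LRU position
        insert_at_mru = policy == "lru" or (
            policy == "bip" and rng.random() < epsilon)
        if insert_at_mru:
            stack.insert(0, line)
        else:
            stack.append(line)         # LIP-style insertion at LRU
    return hits


class PolicySelector:
    """Saturating counter in the spirit of PSEL (illustrative)."""

    def __init__(self, bits=10):
        self.max_value = (1 << bits) - 1
        self.msb = 1 << (bits - 1)
        self.value = self.msb - 1      # assumed start: just below midpoint

    def record_miss(self, dedicated_to):
        if dedicated_to == "lru":
            self.value = min(self.value + 1, self.max_value)
        elif dedicated_to == "bip":
            self.value = max(self.value - 1, 0)

    def follower_policy(self):
        return "bip" if self.value & self.msb else "lru"


if __name__ == "__main__":
    # Cyclic working set of 5 lines on a 4-way set: the thrashing case.
    trace = [0, 1, 2, 3, 4] * 100
    for name in ("lru", "lip", "bip"):
        print(name, simulate(trace, ways=4, policy=name))
```

On this cyclic trace LRU never hits, because every line is evicted just before it is reused, while LIP keeps three of the five lines resident.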

BEAR: Techniques for Mitigating Bandwidth Bloat in Gigascale DRAM Caches

The bandwidth of a DRAM cache is not used only for transferring data. Some of it is consumed by secondary operations, such as cache miss detection, fills on cache misses, and writeback lookups and content updates on dirty evictions from the last-level on-chip cache. Even a prior design such as Alloy Cache still consumes 3.8x the bandwidth of an idealized DRAM cache that spends no bandwidth on secondary operations. In this work, the authors propose the Bandwidth Efficient Architecture (BEAR), which includes three components that reduce the bandwidth consumed by miss detection, miss fills, and writeback probes respectively.

The authors find that DRAM cache bandwidth bloat comes from six different cache operations: Hit Probe, Miss Probe, Miss Fill, Writeback Probe, Writeback Update, and Writeback Fill. Only the Hit Probe spends bandwidth usefully, to service an LLC miss request. The authors therefore concentrate on three sources of bandwidth bloat:

- Bandwidth-Efficient Miss Fill. The authors propose a Bandwidth Aware Bypass (BAB) scheme that tries to free up the bandwidth consumed by Miss Fills while limiting the degradation in hit rate to a predetermined amount. It is motivated by the observation that not all inserted cache lines are re-referenced soon, so some Miss Fills can be bypassed without significantly affecting the hit rate.

- Bandwidth-Efficient Writeback Probe. A Writeback Probe is wasteful if the dirty line evicted from the on-chip LLC already exists in the DRAM cache. Since the DRAM cache is generally much larger than the on-chip LLC, a writeback request is unlikely to miss in the DRAM cache, so the majority of Writeback Probes are useless. To avoid them, the cache architecture needs to be able to guarantee whether a dirty line evicted from the on-chip LLC is present in the DRAM cache.

- Bandwidth-Efficient Miss Probe. If the cache architecture can guarantee whether a line is present in the DRAM cache, Miss Probe bandwidth bloat can be minimized. The authors survey previous designs such as Alloy Cache and Loh-Hill, which place tag and data together in the same DRAM row buffer, so accessing one cache line also reads the tags of adjacent lines and makes additional information available.
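To make the accounting concrete, the six operations can be tallied into a single ratio of total traffic to useful traffic. This is a sketch with made-up numbers, not the paper's measurement: `bloat_factor`, `with_miss_fill_bypass`, the uniform 64-byte cost per operation, and the operation counts are all assumptions, and the bypass helper ignores the hit-rate loss that BAB is designed to bound.

```python
OPERATIONS = (
    "hit_probe", "miss_probe", "miss_fill",
    "writeback_probe", "writeback_update", "writeback_fill",
)


def bloat_factor(op_counts, bytes_per_op=64):
    """Total DRAM-cache traffic divided by the useful (Hit Probe) traffic.

    Assumes every operation moves the same number of bytes.
    """
    total = sum(op_counts.get(op, 0) for op in OPERATIONS) * bytes_per_op
    useful = op_counts.get("hit_probe", 0) * bytes_per_op
    if useful == 0:
        raise ValueError("no hit probes: bloat factor is undefined")
    return total / useful


def with_miss_fill_bypass(op_counts, bypass_fraction):
    """Return new counts with a fraction of Miss Fills skipped.

    Simplification: the hit rate is assumed to stay unchanged.
    """
    reduced = dict(op_counts)
    reduced["miss_fill"] = op_counts.get("miss_fill", 0) * (1 - bypass_fraction)
    return reduced


if __name__ == "__main__":
    counts = {  # hypothetical counts, not taken from the paper
        "hit_probe": 100, "miss_probe": 40, "miss_fill": 40,
        "writeback_probe": 30, "writeback_update": 20, "writeback_fill": 10,
    }
    print(bloat_factor(counts))
    print(bloat_factor(with_miss_fill_bypass(counts, 0.5)))
```

An idealized cache with only Hit Probes would score 1.0, which is the baseline the 3.8x figure is measured against.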

Finally, the authors integrate these components into BEAR. The experiments show that BEAR improves performance over Alloy Cache by 10.1% and reduces the bandwidth consumption of the DRAM cache by 32%.

BATMAN: Techniques for Maximizing System Bandwidth of Memory Systems with Stacked-DRAM

A tiered memory system has two components: 3D-DRAM with high bandwidth, and commodity DRAM with high capacity. Previous work tries to maximize the use of 3D-DRAM bandwidth, but the bandwidth of commodity DRAM is actually a significant fraction of the overall system bandwidth, so those approaches use the total bandwidth of the tiered system inefficiently.

For a tiered-memory system in which the Far Memory (DDR-based DRAM) accounts for a significant fraction of the overall bandwidth, distributing memory accesses across both memories can improve performance. To do so, the authors propose Bandwidth-Aware Tiered-Memory Management (BATMAN), a runtime mechanism that explicitly controls data placement so that memory accesses are distributed in proportion to the bandwidth ratio of the Near Memory and the Far Memory. The main idea is that when the share of accesses going to 3D-DRAM exceeds a threshold, BATMAN moves data from 3D-DRAM to commodity DRAM.
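The proportional target can be worked out with a few lines of arithmetic. This is a minimal sketch under the assumption that the threshold is simply the Near Memory's share of total bandwidth; `target_near_fraction`, `rebalance_action`, the returned labels, and the 4:1 bandwidth ratio are illustrative, not taken from the paper.

```python
def target_near_fraction(near_bw, far_bw):
    """Fraction of accesses the Near Memory should serve so that traffic
    is split in proportion to the two bandwidths."""
    return near_bw / (near_bw + far_bw)


def rebalance_action(near_accesses, far_accesses, near_bw, far_bw):
    """Decide whether data should move out of the Near Memory.

    Returns "move_to_far" when the Near Memory serves more than its
    bandwidth-proportional share of accesses, otherwise "keep".
    """
    total = near_accesses + far_accesses
    if total == 0:
        return "keep"
    observed = near_accesses / total
    if observed > target_near_fraction(near_bw, far_bw):
        return "move_to_far"
    return "keep"


if __name__ == "__main__":
    # Hypothetical system where the Near Memory has 4x the Far Memory bandwidth.
    print(target_near_fraction(4, 1))
    print(rebalance_action(950, 50, near_bw=4, far_bw=1))
    print(rebalance_action(700, 300, near_bw=4, far_bw=1))
```

With a 4:1 bandwidth ratio the target is 80% of accesses to the Near Memory, so a 95% share leaves the Far Memory bandwidth idle and triggers data movement.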

The evaluation shows that BATMAN improves performance by 10% and energy-delay product by 13%. It also incurs only an eight-byte hardware overhead and requires negligible software modification.
