GreenPlum big data platform -- recovering from segment failure

1, Check the problem

[gpadmin@greenplum01 conf]$ psql -c "select * from gp_segment_configuration where status='d'"

dbid | content | role | preferred_role | mode | status | port | hostname | address | replication_port

------+---------+------+----------------+------+--------+-------+-------------+-------------+------------------

12 | 2 | m | m | s | d | 43002 | greenplum03 | greenplum03 | 44002

7 | 5 | m | p | s | d | 6001 | greenplum03 | greenplum03 | 34001

(2 rows)

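Two segments on greenplum03 are reported as down (status 'd'): the mirror of segment 2 (gpseg2) and the preferred primary of segment 5 (gpseg5). Check the mirror status with gpstate -m: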
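The query above can be wrapped into a small check, for example one that is run periodically from cron. This is a minimal sketch, not part of the original procedure: the function names `count_down_segments` and `check_down_segments` are hypothetical, and it assumes `psql` on the master connects as gpadmin through the default PG* environment variables.

```shell
# Hypothetical helpers; assume psql reaches the master as gpadmin
# through the default PG* environment variables.

# Print the number of segments the master marks as down (status = 'd').
count_down_segments() {
    # -A (unaligned) and -t (tuples only) make psql print just the number
    psql -At -c "select count(*) from gp_segment_configuration where status='d'"
}

# Exit status: 0 = all up, 1 = some segment down, 2 = query failed.
check_down_segments() {
    down=$(count_down_segments) || return 2
    if [ "$down" -gt 0 ]; then
        echo "WARNING: $down segment(s) down"
        return 1
    fi
    echo "OK: no segments down"
}
```

In the situation above it would print `WARNING: 2 segment(s) down` and return 1, so a caller could follow up with `gpstate -m` for details.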

[gpadmin@greenplum01 conf]$ gpstate -m

20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:-Starting gpstate with args: -m

20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:-local Greenplum Version: 'postgres (Greenplum Database) 5.16.0 build commit:23cec7df0406d69d6552a4bbb77035dba4d7dd44'

20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:-master Greenplum Version: 'PostgreSQL 8.3.23 (Greenplum Database 5.16.0 build commit:23cec7df0406d69d6552a4bbb77035dba4d7dd44) on x86_64-pc-linux-gnu, compiled by GCC gcc (GCC) 6.2.0, 64-bit compiled on Jan 16 2019 02:32:15'

20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:-Obtaining Segment details from master...

20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:--------------------------------------------------------------

20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:--Current GPDB mirror list and status

20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:--Type = Group

20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:--------------------------------------------------------------

20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:- Mirror Datadir Port Status Data Status

20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:- greenplum03 /greenplum/data/mirror/gpseg0 43000 Passive Synchronized

20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:- greenplum03 /greenplum/data/mirror/gpseg1 43001 Passive Synchronized

20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[WARNING]:-greenplum03 /greenplum/data2/mirror/gpseg2 43002 Failed <<<<<<<< this one has failed

20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:- greenplum03 /greenplum/data2/mirror/gpseg3 43003 Passive Synchronized

20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:- greenplum02 /greenplum/data/mirror/gpseg4 43000 Passive Synchronized

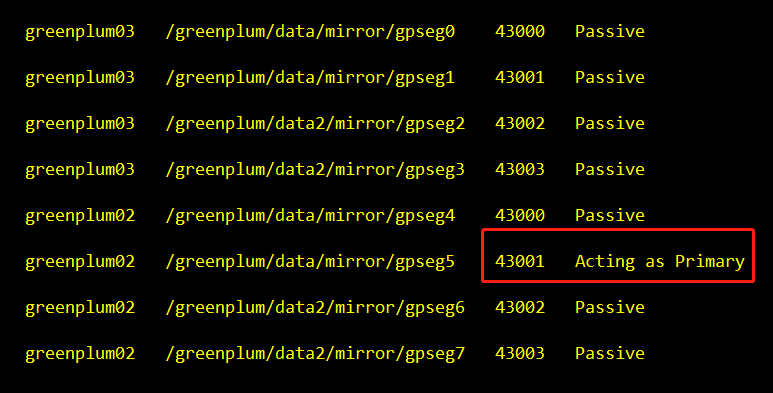

20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:- greenplum02 /greenplum/data/mirror/gpseg5 43001 Acting as Primary Change Tracking

20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:- greenplum02 /greenplum/data2/mirror/gpseg6 43002 Passive Synchronized

20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:- greenplum02 /greenplum/data2/mirror/gpseg7 43003 Passive Synchronized

20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:--------------------------------------------------------------

20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[WARNING]:-1 segment(s) configured as mirror(s) are acting as primaries

20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[WARNING]:-1 segment(s) configured as mirror(s) have failed ------------ look here

20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[WARNING]:-1 mirror segment(s) acting as primaries are in change tracking
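Reading the mirror list by eye works for a handful of segments; on a larger cluster the same output could be filtered. This is a rough sketch that assumes the row layout shown above (host, a data directory ending in `gpsegN`, port, then the status words). `unhealthy_mirrors` is a hypothetical name, and gpstate's human-readable output is not a stable interface, so the parsing may need adjusting for other versions.

```shell
# Hypothetical helper: read `gpstate -m` output on stdin and print every mirror
# whose status is not "Passive Synchronized" (failed, acting as primary, ...).
# Assumes rows look like "<log prefix> <host> <datadir>/gpsegN <port> <status...>".
unhealthy_mirrors() {
    awk '{
        for (i = 1; i <= NF; i++) {
            if ($i ~ /\/gpseg[0-9]+$/) {            # the data directory column
                host = $(i - 1)
                sub(/.*:-/, "", host)               # strip a glued "gpstate:...:-" prefix
                status = ""
                for (j = i + 2; j <= NF; j++)       # everything after the port
                    status = status (status == "" ? "" : " ") $j
                if (status != "Passive Synchronized")
                    print host, $i, $(i + 1), status
                break
            }
        }
    }'
}
```

Used as `gpstate -m | unhealthy_mirrors`, it would print only the gpseg2 (Failed) and gpseg5 (Acting as Primary Change Tracking) rows from the output above.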

01, Check connectivity

ping greenplum03
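When several segment hosts are involved, the same check can be looped. This is a minimal sketch: `check_hosts` is a hypothetical helper, and `-c`/`-W` are the Linux iputils ping options (number of requests, reply timeout in seconds). A successful ping only shows that the host is reachable; gprecoverseg additionally relies on the usual passwordless SSH from the master.

```shell
# Hypothetical helper: ping each host given as an argument.
# -c 3: send three echo requests; -W 2: wait at most 2 s for a reply
# (Linux iputils ping options). Returns 1 if any host did not answer.
check_hosts() {
    hosts_rc=0
    for h in "$@"; do
        if ping -c 3 -W 2 "$h" >/dev/null 2>&1; then
            echo "$h reachable"
        else
            echo "$h UNREACHABLE"
            hosts_rc=1
        fi
    done
    return "$hosts_rc"
}
```

For this cluster it could be called as `check_hosts greenplum02 greenplum03`.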

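02, Reactivate the failed segments

gprecoverseg starts the failed segments and determines which changed files need to be synchronized.

After gprecoverseg completes, the system enters Resynchronizing mode and begins copying the changed files. This runs in the background while the system stays online and can accept database requests.

When resynchronization finishes, the mirrors are in the Synchronized state. In this case two segments need to be recovered; the gprecoverseg log follows: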

[gpadmin@greenplum01 conf]$ gprecoverseg

20190711:17:10:44:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Starting gprecoverseg with args:

20190711:17:10:44:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-local Greenplum Version: 'postgres (Greenplum Database) 5.16.0 build commit:23cec7df0406d69d6552a4bbb77035dba4d7dd44'

20190711:17:10:44:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-master Greenplum Version: 'PostgreSQL 8.3.23 (Greenplum Database 5.16.0 build commit:23cec7df0406d69d6552a4bbb77035dba4d7dd44) on x86_64-pc-linux-gnu, compiled by GCC gcc (GCC) 6.2.0, 64-bit compiled on Jan 16 2019 02:32:15'

20190711:17:10:44:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Checking if segments are ready to connect

20190711:17:10:44:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Obtaining Segment details from master...

20190711:17:10:44:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Obtaining Segment details from master...

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Heap checksum setting is consistent between master and the segments that are candidates for recoverseg

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Greenplum instance recovery parameters

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:----------------------------------------------------------

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Recovery type = Standard

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:----------------------------------------------------------

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Recovery 1 of 2

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:----------------------------------------------------------

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Synchronization mode = Incremental

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Failed instance host = greenplum03

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Failed instance address = greenplum03

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Failed instance directory = /greenplum/data2/mirror/gpseg2

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Failed instance port = 43002

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Failed instance replication port = 44002

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Recovery Source instance host = greenplum02

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Recovery Source instance address = greenplum02

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Recovery Source instance directory = /greenplum/data2/primary/gpseg2

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Recovery Source instance port = 6002

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Recovery Source instance replication port = 34002

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Recovery Target = in-place

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:----------------------------------------------------------

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Recovery 2 of 2

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:----------------------------------------------------------

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Synchronization mode = Incremental

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Failed instance host = greenplum03

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Failed instance address = greenplum03

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Failed instance directory = /greenplum/data/primary/gpseg5

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Failed instance port = 6001

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Failed instance replication port = 34001

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Recovery Source instance host = greenplum02

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Recovery Source instance address = greenplum02

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Recovery Source instance directory = /greenplum/data/mirror/gpseg5

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Recovery Source instance port = 43001

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Recovery Source instance replication port = 44001

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Recovery Target = in-place

20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:----------------------------------------------------------

Continue with segment recovery procedure Yy|Nn (default=N):

> Y

20190711:17:11:31:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-2 segment(s) to recover

20190711:17:11:31:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Ensuring 2 failed segment(s) are stopped

20190711:17:11:32:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Ensuring that shared memory is cleaned up for stopped segments

updating flat files

20190711:17:11:32:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Updating configuration with new mirrors

20190711:17:11:33:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Updating mirrors

.

20190711:17:11:34:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Starting mirrors

20190711:17:11:34:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-era is 24a58010f9c5a05a_190711113124

20190711:17:11:34:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Commencing parallel primary and mirror segment instance startup, please wait...

..

20190711:17:11:36:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Process results...

20190711:17:11:36:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Updating configuration to mark mirrors up

20190711:17:11:36:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Updating primaries

20190711:17:11:36:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Commencing parallel primary conversion of 2 segments, please wait...

.

20190711:17:11:37:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Process results...

20190711:17:11:37:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Done updating primaries

20190711:17:11:37:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-******************************************************************

20190711:17:11:37:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Updating segments for resynchronization is completed.

20190711:17:11:37:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-For segments updated successfully, resynchronization will continue in the background.

20190711:17:11:37:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-

20190711:17:11:37:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Use gpstate -s to check the resynchronization progress.

20190711:17:11:37:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-******************************************************************
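The run above stops at the `Yy|Nn` prompt. gprecoverseg's `-a` option skips that confirmation, which may be convenient when recovery is scripted. This is a sketch, not the procedure used above; `recover_failed_segments` is a hypothetical name, and it assumes it runs as gpadmin on the master with the Greenplum environment (greenplum_path.sh, MASTER_DATA_DIRECTORY) already loaded.

```shell
# Hypothetical wrapper; assumes it runs as gpadmin on the master with
# greenplum_path.sh sourced and MASTER_DATA_DIRECTORY set.
recover_failed_segments() {
    # -a: do not prompt for confirmation (the "Yy|Nn" question)
    if ! gprecoverseg -a; then
        echo "gprecoverseg failed, check its output" >&2
        return 1
    fi
    # Resynchronization continues in the background; show the current mirror state
    gpstate -m
}
```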

03, Check synchronization

Run gpstate -m again:

[gpadmin@greenplum01 conf]$ gpstate -m

20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:-Starting gpstate with args: -m

20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:-local Greenplum Version: 'postgres (Greenplum Database) 5.16.0 build commit:23cec7df0406d69d6552a4bbb77035dba4d7dd44'

20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:-master Greenplum Version: 'PostgreSQL 8.3.23 (Greenplum Database 5.16.0 build commit:23cec7df0406d69d6552a4bbb77035dba4d7dd44) on x86_64-pc-linux-gnu, compiled by GCC gcc (GCC) 6.2.0, 64-bit compiled on Jan 16 2019 02:32:15'

20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:-Obtaining Segment details from master...

20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:--------------------------------------------------------------

20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:--Current GPDB mirror list and status

20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:--Type = Group

20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:--------------------------------------------------------------

20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:- Mirror Datadir Port Status Data Status

20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:- greenplum03 /greenplum/data/mirror/gpseg0 43000 Passive Synchronized

20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:- greenplum03 /greenplum/data/mirror/gpseg1 43001 Passive Synchronized

20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:- greenplum03 /greenplum/data2/mirror/gpseg2 43002 Passive Synchronized

20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:- greenplum03 /greenplum/data2/mirror/gpseg3 43003 Passive Synchronized

20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:- greenplum02 /greenplum/data/mirror/gpseg4 43000 Passive Synchronized

20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:- greenplum02 /greenplum/data/mirror/gpseg5 43001 Acting as Primary Synchronized

20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:- greenplum02 /greenplum/data2/mirror/gpseg6 43002 Passive Synchronized

20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:- greenplum02 /greenplum/data2/mirror/gpseg7 43003 Passive Synchronized

20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:--------------------------------------------------------------

20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[WARNING]:-1 segment(s) configured as mirror(s) are acting as primaries

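The failed mirror has recovered: gpseg2 is Passive Synchronized again. gpseg5, however, is still served by its mirror on greenplum02 (Acting as Primary), as the warning shows.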

04, Restore the original segment roles

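Because a primary segment went down, its mirror was activated and took over as the primary. Running gprecoverseg brings the failed segment back, but only as a mirror: the primary stays where it moved to, so the recovered segment has not fully returned to its original place. The segments therefore need to be put back into the roles they had at initialization, so that the system is balanced again.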
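The swapped roles are also visible in the catalog: a segment whose `role` differs from its `preferred_role` is one that `gprecoverseg -r` would move back. This is a sketch with hypothetical function names, assuming `psql` reaches the master; `-A -t -F ' '` gives unaligned, tuples-only output with space-separated columns.

```shell
# Hypothetical helpers; assume psql reaches the master as gpadmin.

# List segments whose current role differs from the preferred role.
# -A -t: unaligned, tuples-only output; -F ' ': separate columns with a space.
segments_out_of_role() {
    psql -At -F ' ' -c "select content, dbid, hostname, port, role, preferred_role
                        from gp_segment_configuration
                        where role <> preferred_role
                        order by content, dbid"
}

# Exit status: 0 = rebalance needed, 1 = all in preferred roles, 2 = query failed.
needs_rebalance() {
    rows=$(segments_out_of_role) || return 2
    [ -n "$rows" ]
}
```

Run after step 02, it would be expected to list the two members of segment 5: dbid 7 on greenplum03 (role m, preferred p) and the mirror on greenplum02 that is acting as primary. After `gprecoverseg -r` it should return no rows.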

Check the segment status:

gpstate -e

Run gpstate -m to make sure all mirrors are Synchronized:

gpstate -m

All mirrors are running.

If any mirror is still in Resynchronizing mode, wait until resynchronization finishes.
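Instead of re-running gpstate -m by hand, the wait can be scripted. This is a minimal sketch: `wait_for_resync` is hypothetical, the default 60-second interval is an arbitrary choice, and matching the word `Resynchronizing` in gpstate's text output is an assumption rather than a documented interface (`gpstate -s` shows more detailed progress).

```shell
# Hypothetical helper: poll `gpstate -m` until no mirror reports "Resynchronizing".
# Matching gpstate's human-readable output is an assumption, not a stable API.
wait_for_resync() {
    interval=${1:-60}    # seconds between checks (arbitrary default)
    while gpstate -m | grep -q 'Resynchronizing'; do
        echo "resynchronization in progress, checking again in ${interval}s"
        sleep "$interval"
    done
    echo "no mirrors resynchronizing"
}
```

Only once it reports that no mirrors are resynchronizing is it time to move on to the rebalance.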

Run gprecoverseg with the -r option to return the segments to their preferred roles:

gprecoverseg -r

After rebalancing, run gpstate -e to confirm that all segments are in their preferred roles:

gpstate -e

With that, the problem is resolved.


摘要: 体验神奇的GraphQL! 原文:GraphQL 入门详解 作者:MudOnTire Fundebug经授权转载,版权归原作者所有. GraphQL简介 定义 一种用于API调用的数据查询语言 ...