nltk_29_pickle: Saving and Loading a Classifier

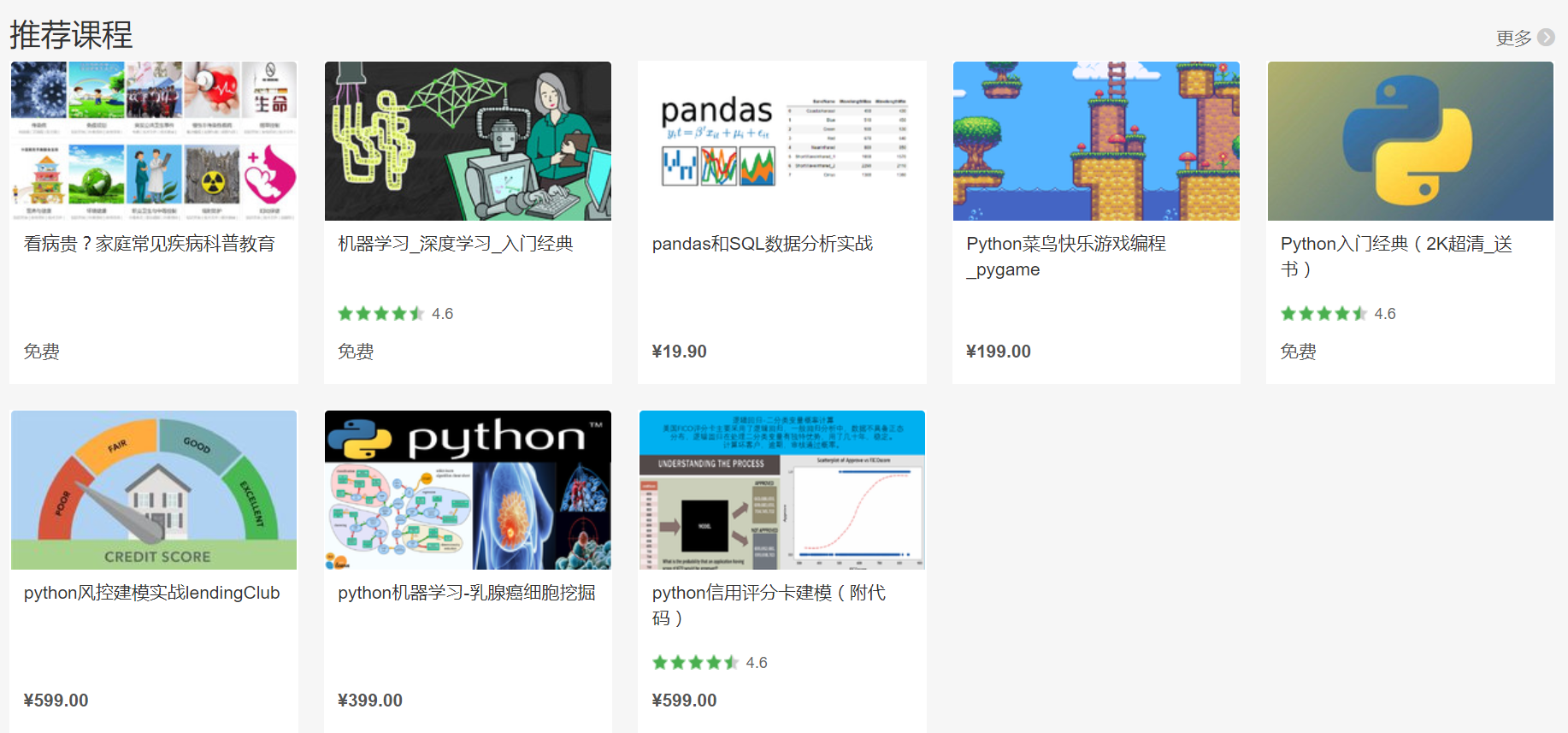


pickle is used to persist data to disk. Building a classifier takes a lot of time, so saving it once avoids paying that cost again later.

import pickle

# Save the classifier
save_classifier = open("naivebayes.pickle","wb")
pickle.dump(classifier, save_classifier)
save_classifier.close()

# Load the classifier
classifier_f = open("naivebayes.pickle", "rb")
classifier = pickle.load(classifier_f)
classifier_f.close()

Training classifiers and machine learning algorithms can take a very long time, especially if you're training against a larger data set. Ours is actually pretty small. Can you imagine having to train the classifier every time you wanted to fire it up and use it? What horror! Instead, what we can do is use the Pickle module to go ahead and serialize our classifier object, so that all we need to do is load that file in real quick.

So, how do we do this? The first step is to save the object. To do this, first import pickle at the top of your script. Then, after you have trained the classifier with .train(), call the following lines:

save_classifier = open("naivebayes.pickle","wb")
pickle.dump(classifier, save_classifier)
save_classifier.close()

This opens a pickle file in binary write mode ("wb"). Then, we use pickle.dump() to write the data. The first argument to pickle.dump() is the object you are dumping; the second is the open file you are dumping it into.

After that, we close the file as we're supposed to, and that is that, we now have a pickled, or serialized, object saved in our script's directory!
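The save step can also be written with a `with` block, which closes the file even if `pickle.dump()` raises. The following is only a minimal sketch: `save_pickle` is a hypothetical helper name, the dictionary is a stand-in for a trained classifier, and the file is written to a temporary directory rather than the script's directory.

```python
import os
import pickle
import tempfile


def save_pickle(obj, path):
    """Serialize obj to path; the with-block closes the file even on error."""
    with open(path, "wb") as f:
        pickle.dump(obj, f)


# Stand-in for a trained classifier (assumption: any picklable object
# is saved the same way).
classifier = {"labels": ["pos", "neg"], "weights": {"great": 1.5, "awful": -2.0}}

pickle_path = os.path.join(tempfile.mkdtemp(), "naivebayes.pickle")
save_pickle(classifier, pickle_path)
print("saved", os.path.getsize(pickle_path), "bytes to", pickle_path)
```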

Next, how would we go about opening and using this classifier? The .pickle file is a serialized object. All we need to do now is read it into memory, which is about as quick as reading any other ordinary file. To do this:

classifier_f = open("naivebayes.pickle", "rb")
classifier = pickle.load(classifier_f)
classifier_f.close()

Here, we do a very similar process. We open the file to read as bytes. Then, we use pickle.load() to load the file, and we save the data to the classifier variable. Then we close the file, and that is that. We now have the same classifier object as before!
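Strictly speaking, "the same classifier object" means an equal copy: unpickling builds a new object with the same state. The sketch below shows this with an in-memory round trip; the dictionary is a stand-in for the trained classifier, not an NLTK object.

```python
import pickle

# Stand-in for a trained classifier (assumption: a plain dict of label counts).
original = {"pos": 7, "neg": 3}

# dumps/loads work on bytes in memory; dump/load do the same through a file.
restored = pickle.loads(pickle.dumps(original))

print(restored is original)   # identity: this is a newly built object
print(restored == original)   # equality: it carries the same state
```

One practical consequence: to load a pickled NLTK classifier, the loading environment needs nltk installed, because pickle stores a reference to the class and imports it again on load.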

Now we can use this object, and we no longer need to train our classifier every time we want to use it to classify.
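A common way to get this behaviour automatically is a "load if present, otherwise train and save" wrapper. This is a sketch under assumptions, not part of the tutorial: `load_or_train` and `slow_train` are hypothetical names, and `slow_train` stands in for a call such as `nltk.NaiveBayesClassifier.train(training_set)`.

```python
import os
import pickle
import tempfile


def load_or_train(path, train_fn):
    """Return the pickled object at path, or train, save, and return a new one."""
    if os.path.exists(path):
        with open(path, "rb") as f:
            return pickle.load(f)
    model = train_fn()
    with open(path, "wb") as f:
        pickle.dump(model, f)
    return model


train_calls = []


def slow_train():
    # Stand-in for the expensive training step.
    train_calls.append(1)
    return {"most_informative": ["great", "awful"]}


cache_path = os.path.join(tempfile.mkdtemp(), "naivebayes.pickle")
first = load_or_train(cache_path, slow_train)   # trains, then saves
second = load_or_train(cache_path, slow_train)  # loads from disk
print("training ran", len(train_calls), "time(s)")
```

If the training data or feature list changes, the cached file has to be deleted by hand; the sketch makes no attempt to detect a stale pickle.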

While this is all fine and dandy, we're probably not too content with the 60-75% accuracy we're getting. What about other classifiers? Turns out, there are many classifiers, but we need the scikit-learn (sklearn) module. Luckily for us, the people at NLTK recognized the value of incorporating the sklearn module into NLTK, and they have built us a little API to do it. That's what we'll be doing in the next tutorial.

Save the three objects documents, word_features, and classifier, so that the time-consuming work does not have to be repeated later.

# -*- coding: utf-8 -*-
"""
Created on Thu Jan 12 10:44:19 2017

@author: Administrator

Short-review sentiment analysis (Twitter).
After saving "positive.txt" and "negative.txt", convert them to UTF-8.
Online conversion tool:
http://www.esk365.com/tools/GB2312-UTF8.asp

features=5000: accuracy above 60 percent
features=10000: accuracy above ... percent
Running time may be as long as one hour.
"""
import nltk
import random
import pickle
from nltk.tokenize import word_tokenize
import time

time1 = time.time()

# The docstring above says both files are converted to UTF-8 first.
with open("positive.txt", "r", encoding="utf-8") as f:
    short_pos = f.read()
with open("negative.txt", "r", encoding="utf-8") as f:
    short_neg = f.read()

documents = []
all_words = []

# j is adjective, r is adverb, and v is verb
# allowed_word_types = ["J", "R", "V"]
# Only adjectives are allowed here.
allowed_word_types = ["J"]

# Add each review to documents exactly once; duplicated entries would let
# the same review land in both the training set and the testing set.
for p in short_pos.split('\n'):
    documents.append((p, "pos"))
    words = word_tokenize(p)
    pos = nltk.pos_tag(words)
    for w in pos:
        if w[1][0] in allowed_word_types:
            all_words.append(w[0].lower())

for p in short_neg.split('\n'):
    documents.append((p, "neg"))
    words = word_tokenize(p)
    pos = nltk.pos_tag(words)
    for w in pos:
        if w[1][0] in allowed_word_types:
            all_words.append(w[0].lower())

# Save the documents
save_documents = open("documents.pickle", "wb")
pickle.dump(documents, save_documents)
save_documents.close()

# Timing check
time2 = time.time()
print("time1 consuming:", time2 - time1)

# Save the features
all_words = nltk.FreqDist(all_words)
# Better to raise this to 20,000 or more.
# keys() is not ordered by frequency, so take the most common words explicitly.
word_features = [w for (w, _count) in all_words.most_common(5000)]

save_word_features = open("word_features5k.pickle", "wb")
pickle.dump(word_features, save_word_features)
save_word_features.close()


def find_features(document):
    # Map every feature word to True/False depending on whether it occurs.
    words = word_tokenize(document)
    features = {}
    for w in word_features:
        features[w] = (w in words)
    return features


featuresets = [(find_features(rev), category) for (rev, category) in documents]
random.shuffle(featuresets)
print(len(featuresets))

testing_set = featuresets[10000:]
training_set = featuresets[:10000]

classifier = nltk.NaiveBayesClassifier.train(training_set)
print("Original Naive Bayes Algo accuracy percent:", (nltk.classify.accuracy(classifier, testing_set))*100)
classifier.show_most_informative_features(15)

# Save the classifier
save_classifier = open("originalnaivebayes5k.pickle", "wb")
pickle.dump(classifier, save_classifier)
save_classifier.close()

time3 = time.time()
print("time2 consuming:", time3 - time2)
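In a later session the saved files can be read back instead of rebuilt. The sketch below is illustrative only: it writes small stand-in versions of documents.pickle and word_features5k.pickle to a temporary directory so that it runs on its own, `load_pickle` is a hypothetical helper, and `str.split` replaces `word_tokenize` to avoid the NLTK dependency. Loading originalnaivebayes5k.pickle works the same way but requires nltk to be installed.

```python
import os
import pickle
import tempfile


def load_pickle(path):
    """Read one pickled object back from disk."""
    with open(path, "rb") as f:
        return pickle.load(f)


def find_features(document, word_features, tokenize=str.split):
    # A set makes each membership test O(1) instead of scanning a list.
    words = set(tokenize(document))
    return {w: (w in words) for w in word_features}


# Stand-in artifacts (assumption: tiny samples, not the real review data).
workdir = tempfile.mkdtemp()
samples = {
    "documents.pickle": [("a great movie", "pos"), ("an awful plot", "neg")],
    "word_features5k.pickle": ["great", "awful", "boring"],
}
for name, obj in samples.items():
    with open(os.path.join(workdir, name), "wb") as f:
        pickle.dump(obj, f)

documents = load_pickle(os.path.join(workdir, "documents.pickle"))
word_features = load_pickle(os.path.join(workdir, "word_features5k.pickle"))
features = find_features("a great but boring film", word_features)
print(features)
```

The resulting dictionary has the same shape as the feature sets built in the script, so it could be passed to a loaded classifier's classify() method.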

