Linear Decoders

Sparse Autoencoder Recap

In the sparse autoencoder, we had 3 layers of neurons: an input layer, a hidden layer and an output layer. In our previous description of autoencoders (and of neural networks), every neuron in the neural network used the same activation function. In these notes, we describe a modified version of the autoencoder in which some of the neurons use a different activation function. This will result in a model that is sometimes simpler to apply, and can also be more robust to variations in the parameters.

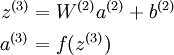

Recall that each neuron (in the output layer) computed the following:

where a(3) is the output. In the autoencoder, a(3) is our approximate reconstruction of the input x = a(1).

Because we used a sigmoid activation function for f(z(3)), we needed to constrain or scale the inputs to be in the range[0,1], since the sigmoid function outputs numbers in the range [0,1].

引入 —— 相同的activation function ,非线性映射会导致输入和输出不等

Linear Decoder

One easy fix for this problem is to set a(3) = z(3). Formally, this is achieved by having the output nodes use an activation function that's the identity function f(z) = z, so that a(3) = f(z(3)) = z(3). 输出结点不适用sigmoid 函数

This particular activation function

is called the linear activation function。Note however that in the hidden layer of the network, we still use a sigmoid (or tanh) activation function, so that the hidden unit activations are given by (say)

, where

is the sigmoid function, x is the input, and W(1) andb(1) are the weight and bias terms for the hidden units. It is only in the output layer that we use the linear activation function.

An autoencoder in this configuration--with a sigmoid (or tanh) hidden layer and a linear output layer--is called a linear decoder.

In this model, we have

. Because the output

is a now linear function of the hidden unit activations, by varying W(2), each output unit a(3) can be made to produce values greater than 1 or less than 0 as well. This allows us to train the sparse autoencoder real-valued inputs without needing to pre-scale every example to a specific range.

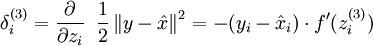

Since we have changed the activation function of the output units, the gradients of the output units also change. Recall that for each output unit, we had set set the error terms as follows:

where y = x is the desired output,

is the output of our autoencoder, and

is our activation function. Because in the output layer we now have f(z) = z, that implies f'(z) = 1 and thus the above now simplifies to:

output结点

Of course, when using backpropagation to compute the error terms for the hidden layer:

hidden结点

Because the hidden layer is using a sigmoid (or tanh) activation f, in the equation above

should still be the derivative of the sigmoid (or tanh) function.

Linear Decoders的更多相关文章

- (六)6.16 Neurons Networks linear decoders and its implements

Sparse AutoEncoder是一个三层结构的网络,分别为输入输出与隐层,前边自编码器的描述可知,神经网络中的神经元都采用相同的激励函数,Linear Decoders 修改了自编码器的定义,对 ...

- CS229 6.16 Neurons Networks linear decoders and its implements

Sparse AutoEncoder是一个三层结构的网络,分别为输入输出与隐层,前边自编码器的描述可知,神经网络中的神经元都采用相同的激励函数,Linear Decoders 修改了自编码器的定义,对 ...

- Unsupervised Feature Learning and Deep Learning(UFLDL) Exercise 总结

7.27 暑假开始后,稍有时间,“搞完”金融项目,便开始跑跑 Deep Learning的程序 Hinton 在Nature上文章的代码 跑了3天 也没跑完 后来Debug 把batch 从200改到 ...

- Deep learning:三十八(Stacked CNN简单介绍)

http://www.cnblogs.com/tornadomeet/archive/2013/05/05/3061457.html 前言: 本节主要是来简单介绍下stacked CNN(深度卷积网络 ...

- [UFLDL] Basic Concept

博客内容取材于:http://www.cnblogs.com/tornadomeet/archive/2012/06/24/2560261.html 参考资料: UFLDL wiki UFLDL St ...

- [UFLDL] Dimensionality Reduction

博客内容取材于:http://www.cnblogs.com/tornadomeet/archive/2012/06/24/2560261.html Deep learning:三十五(用NN实现数据 ...

- Deep Learning 教程翻译

Deep Learning 教程翻译 非常激动地宣告,Stanford 教授 Andrew Ng 的 Deep Learning 教程,于今日,2013年4月8日,全部翻译成中文.这是中国屌丝军团,从 ...

- ICLR 2013 International Conference on Learning Representations深度学习论文papers

ICLR 2013 International Conference on Learning Representations May 02 - 04, 2013, Scottsdale, Arizon ...

- Deep Learning基础--线性解码器、卷积、池化

本文主要是学习下Linear Decoder已经在大图片中经常采用的技术convolution和pooling,分别参考网页http://deeplearning.stanford.edu/wiki/ ...

随机推荐

- 关于Fragment的setUserVisibleHint() 方法和onCreateView()的执行顺序

1:setUserVisibleHint(boolean isVisibleToUser)的方法就很重要,根据方法名来看当前页面是否可见, 里面的boolean值就是判断当前页面是否可见的变量,所以大 ...

- 请教如何用 peewee 实现类似 django ORM 的这种查询效果。

本人新入坑的小白,如有不对的地方请包涵~~~! 在 django 中代码如下:模型定义: class Friends(models.Model): first_id = models.IntegerF ...

- python制造模块

制造模块: 方法一: 1.mkdir /xxcd /xx 2.文件包含: 模块名.py setup.py setup.py内容如下:#!/usr/bin/env pythonfrom distutil ...

- CodeForcesEducationalRound40-D Fight Against Traffic 最短路

题目链接:http://codeforces.com/contest/954/problem/D 题意 给出n个顶点,m条边,一个起点编号s,一个终点编号t 现准备在这n个顶点中多加一条边,使得st之 ...

- ubutun lunix 64安装neo4j 图形数据库

借鉴链接: https://my.oschina.net/zlb1992/blog/915038

- 恐怖的奴隶主(bob)

题目 试题3:恐怖的奴隶主(bob) 源代码:bob.cpp 输入文件:bob.in 输出文件:bob.out 时间限制:1s 空间限制:512MB 题目描述 小L热衷于undercards. 在un ...

- 洛谷 P1443 马的遍历

P1443 马的遍历 题目描述 有一个n*m的棋盘(1<n,m<=400),在某个点上有一个马,要求你计算出马到达棋盘上任意一个点最少要走几步 输入输出格式 输入格式: 一行四个数据,棋盘 ...

- C语言函数--E

函数名: ecvt 功 能: 把一个浮点数转换为字符串 用 法: char ecvt(double value, int ndigit, int *decpt, int *sign); 程序例: #i ...

- ImageLoader的简单分析(二)

在<ImageLoader的简单分析>这篇博客中对IImageLoader三大组件的创建过程以及三者之间的关系做了说明.同一时候文章的最后也简单的说明了一下ImageLoader是怎么通过 ...

- openssl之BIO系列之6---BIO的IO操作函数

BIO的IO操作函数 ---依据openssl doc/crypto/bio/bio_read.pod翻译和自己的理解写成 (作者:DragonKing Mail:wzhah ...