Kafka.net Programming: Getting Started (Part 3)

This world belongs neither to the rich nor to the powerful; it belongs to those who set their minds to things.

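Some useful commands

1. List topics: kafka-topics.bat --list --zookeeper localhost:2181
2. Describe a topic: kafka-topics.bat --describe --zookeeper localhost:2181 --topic [Topic Name]
3. Read messages from the beginning: kafka-console-consumer.bat --zookeeper localhost:2181 --topic [Topic Name] --from-beginning
4. Delete a topic (on older brokers this only marks the topic for deletion unless delete.topic.enable=true is set in server.properties): E:\WorkSoftWare\kafka2.11\bin\windows>kafka-run-class.bat kafka.admin.TopicCommand --delete --topic test2016 --zookeeper localhost:2181

Entity classes: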

KafkaProducerMessage.cs:

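using System;
using System.ComponentModel.DataAnnotations;
using System.ComponentModel.DataAnnotations.Schema;

[Table("KafkaProducerMessage")]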

public partial class KafkaProducerMessage

{

public int KafkaProducerMessageID { get; set; }

[Required]

[StringLength(1000)]

public string Topic { get; set; }

[Required]

public string MessageContent { get; set; }

public DateTime CreatedAt { get; set; }

}

KafkaConsumerMessage.cs:

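using System;
using System.ComponentModel.DataAnnotations;
using System.ComponentModel.DataAnnotations.Schema;

[Table("KafkaConsumerMessage")]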

public partial class KafkaConsumerMessage

{

public int KafkaConsumerMessageID { get; set; }

[Required]

[StringLength(1000)]

public string Topic { get; set; }

public int Partition { get; set; }

public long Offset { get; set; }

[Required]

public string MessageContent { get; set; }

public DateTime CreatedAt { get; set; }

}

KafkaProducerMessageArchive.cs:

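using System;
using System.ComponentModel.DataAnnotations;
using System.ComponentModel.DataAnnotations.Schema;

[Table("KafkaProducerMessageArchive")]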

public partial class KafkaProducerMessageArchive

{

public int KafkaProducerMessageArchiveID { get; set; }

public int KafkaProducerMessageID { get; set; }

[Required]

[StringLength(1000)]

public string Topic { get; set; }

[Required]

public string MessageContent { get; set; }

public DateTime CreatedAt { get; set; }

public DateTime ArchivedAt { get; set; }

}

vwMaxOffsetByPartitionAndTopic.cs (entity mapped to a database view):

using System.ComponentModel.DataAnnotations;
using System.ComponentModel.DataAnnotations.Schema;

[Table("vwMaxOffsetByPartitionAndTopic")]

public partial class vwMaxOffsetByPartitionAndTopic

{

[Key]

[Column(Order = 0)]

[StringLength(1000)]

public string Topic { get; set; }

[Key]

[Column(Order = 1)]

[DatabaseGenerated(DatabaseGeneratedOption.None)]

public int Partition { get; set; }

public long? MaxOffset { get; set; }

}

KafkaConsumerRepository.cs:

using System;
using System.Collections.Generic;
using System.Linq;

public class KafkaConsumerRepository : IDisposable

{

private KafkaModel context;

public KafkaConsumerRepository()

{

this.context = new KafkaModel();

}

public List<KafkaConsumerMessage> GetKafkaConsumerMessages()

{

return context.KafkaConsumerMessage.ToList();

}

public KafkaConsumerMessage GetKafkaConsumerMessageByID(int MessageID)

{

return context.KafkaConsumerMessage.Find(MessageID);

}

public List<KafkaConsumerMessage> GetKafkaConsumerMessageByTopic(string TopicName)

{

return context.KafkaConsumerMessage

.Where(a => a.Topic == TopicName)

.ToList();

}

public List<vwMaxOffsetByPartitionAndTopic> GetOffsetPositionByTopic(string TopicName)

{

return context.vwMaxOffsetByPartitionAndTopic

.Where(a => a.Topic == TopicName)

.ToList();

}

public void InsertKafkaConsumerMessage(KafkaConsumerMessage Message)

{

Console.WriteLine(String.Format("Insert {0}: {1}", Message.KafkaConsumerMessageID.ToString(), Message.MessageContent));

context.KafkaConsumerMessage.Add(Message);

context.SaveChanges();

Console.WriteLine(String.Format("Saved {0}: {1}", Message.KafkaConsumerMessageID.ToString(), Message.MessageContent));

}

public void Save()

{

context.SaveChanges();

}

public void Dispose()

{

context.Dispose();

}

}

KafkaProducerRepository.cs:

using System;
using System.Collections.Generic;
using System.Linq;

public class KafkaProducerRepository : IDisposable

{

private KafkaModel context;

public KafkaProducerRepository()

{

this.context = new KafkaModel();

}

public List<KafkaProducerMessage> GetKafkaProducerMessages()

{

return context.KafkaProducerMessage.ToList();

}

public List<string> GetDistinctTopics()

{

return context.KafkaProducerMessage.Select(a => a.Topic)

.Distinct()

.ToList();

}

public KafkaProducerMessage GetKafkaProducerMessageByMessageID(int MessageID)

{

return context.KafkaProducerMessage.Find(MessageID);

}

public List<KafkaProducerMessage> GetKafkaProducerMessageByTopic(string TopicName)

{

return context.KafkaProducerMessage

.Where(a => a.Topic == TopicName)

.OrderBy(a => a.KafkaProducerMessageID)

.ToList<KafkaProducerMessage>();

}

public void InsertKafkaProducerMessage(KafkaProducerMessage Message)

{

context.KafkaProducerMessage.Add(Message);

context.SaveChanges();

}

public void ArchiveKafkaProducerMessage(int MessageID)

{

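KafkaProducerMessage m = context.KafkaProducerMessage.Find(MessageID);
if (m == null)
{
return; // nothing to archive (already archived or unknown ID)
}
KafkaProducerMessageArchive archivedMessage = KafkaProducerMessageToKafkaProducerMessageArchive(m);
using (var dbContextTransaction = context.Database.BeginTransaction())
{
try
{
context.KafkaProducerMessageArchive.Add(archivedMessage);
context.KafkaProducerMessage.Remove(m);
// Save the changes inside the transaction first, then commit it.
context.SaveChanges();
dbContextTransaction.Commit();
}
catch (Exception)
{
// Roll back and rethrow; calling SaveChanges here would retry the
// same pending changes outside the transaction.
dbContextTransaction.Rollback();
throw;
}
}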

}

public void ArchiveKafkaProducerMessageList(List<KafkaProducerMessage> Messages)

{

foreach (KafkaProducerMessage Message in Messages)

{

ArchiveKafkaProducerMessage(Message.KafkaProducerMessageID);

}

}

public static KafkaProducerMessageArchive KafkaProducerMessageToKafkaProducerMessageArchive(KafkaProducerMessage Message)

{

return new KafkaProducerMessageArchive

{

KafkaProducerMessageID = Message.KafkaProducerMessageID,

MessageContent = Message.MessageContent,

Topic = Message.Topic,

CreatedAt = Message.CreatedAt,

ArchivedAt = DateTime.UtcNow

};

}

public void Save()

{

context.SaveChanges();

}

public void Dispose()

{

context.Dispose();

}

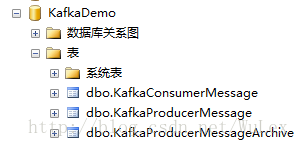

}数据库上下文KafkaModel.cs代码:

using System.Data.Entity;

public partial class KafkaModel : DbContext

{

public KafkaModel()

: base("name=KafkaModel")

{

}

public virtual DbSet<KafkaConsumerMessage> KafkaConsumerMessage { get; set; }

public virtual DbSet<KafkaProducerMessage> KafkaProducerMessage { get; set; }

public virtual DbSet<KafkaProducerMessageArchive> KafkaProducerMessageArchive { get; set; }

public virtual DbSet<vwMaxOffsetByPartitionAndTopic> vwMaxOffsetByPartitionAndTopic { get; set; }

protected override void OnModelCreating(DbModelBuilder modelBuilder)

{

modelBuilder.Entity<KafkaConsumerMessage>()

.Property(e => e.Topic)

.IsUnicode(false);

modelBuilder.Entity<KafkaProducerMessage>()

.Property(e => e.Topic)

.IsUnicode(false);

modelBuilder.Entity<KafkaProducerMessageArchive>()

.Property(e => e.Topic)

.IsUnicode(false);

modelBuilder.Entity<vwMaxOffsetByPartitionAndTopic>()

.Property(e => e.Topic)

.IsUnicode(false);

}

}

Program.cs:

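using System;
using System.Collections.Generic;
using System.Configuration; // requires a reference to System.Configuration.dll
using System.Text;
using KafkaNet;
using KafkaNet.Model;
using KafkaNet.Protocol;

class Program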

{

private static string Topic;

static void Main(string[] args)

{

string invalidArgErrorMessage = "Valid args are: produce or consume";

if (args.Length < 1)

{

throw (new Exception(invalidArgErrorMessage));

}

string intent = args[0]; // the mode is the first (and only) argument; args[1] would throw with one argument

Topic = ConfigurationManager.AppSettings["Topic"];

if (String.Equals(intent, "consume", StringComparison.OrdinalIgnoreCase))

{

Console.WriteLine("Starting consumer service");

Consume();

}

else if (String.Equals(intent, "produce", StringComparison.OrdinalIgnoreCase))

{

Console.WriteLine("Starting producer service");

Produce();

}

else

{

throw (new Exception(invalidArgErrorMessage));

}

}

private static BrokerRouter InitDefaultConfig()

{

// These are Kafka broker addresses (BrokerList), not ZooKeeper addresses.
List<Uri> BrokerUriList = new List<Uri>();
foreach (string s in ConfigurationManager.AppSettings["BrokerList"].Split(','))
{
BrokerUriList.Add(new Uri(s.Trim()));
}
var Options = new KafkaOptions(BrokerUriList.ToArray());

var Router = new BrokerRouter(Options);

return Router;

}

private static void Consume()

{

KafkaConsumerRepository KafkaRepo = new KafkaConsumerRepository();

bool FromBeginning = Boolean.Parse(ConfigurationManager.AppSettings["FromBeginning"]);

var Router = InitDefaultConfig();

var Consumer = new Consumer(new ConsumerOptions(Topic, Router));

// If we don't want to start from the beginning, resume from the latest stored offsets.

if (!FromBeginning)

{

var MaxOffsetByPartition = KafkaRepo.GetOffsetPositionByTopic(Topic);

// If stored offsets exist, resume from them; otherwise fall back to the default position.

if (MaxOffsetByPartition.Count != 0)

{

List<OffsetPosition> offsets = new List<OffsetPosition>();

foreach (var m in MaxOffsetByPartition)

{

OffsetPosition o = new OffsetPosition(m.Partition, (long)m.MaxOffset + 1);

offsets.Add(o);

}

Consumer.SetOffsetPosition(offsets.ToArray());

}

else

{

Consumer.SetOffsetPosition(new OffsetPosition());

}

}

// Consume() returns a blocking IEnumerable, i.e. a stream that never ends.

foreach (var message in Consumer.Consume())

{

string MessageContent = Encoding.UTF8.GetString(message.Value);

Console.WriteLine(String.Format("Processing message with content: {0}", MessageContent));

KafkaRepo = new KafkaConsumerRepository();

KafkaConsumerMessage ConsumerMessage = new KafkaConsumerMessage()

{

Topic = Topic,

Offset = message.Meta.Offset, // both sides are long; an (int) cast would truncate large offsets

Partition = message.Meta.PartitionId,

MessageContent = MessageContent,

CreatedAt = DateTime.UtcNow

};

KafkaRepo.InsertKafkaConsumerMessage(ConsumerMessage);

KafkaRepo.Dispose();

}

}

private static void Produce()

{

KafkaProducerRepository KafkaRepo = new KafkaProducerRepository();

var Router = InitDefaultConfig();

var Client = new Producer(Router);

List<Message> Messages = new List<Message>();

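List<KafkaProducerMessage> pending = KafkaRepo.GetKafkaProducerMessageByTopic(Topic);
foreach (KafkaProducerMessage message in pending)
{
Messages.Add(new Message(message.MessageContent));
}
// Send first, then archive: if the send throws, the rows stay in
// KafkaProducerMessage and are picked up again on the next run.
if (Messages.Count > 0)
{
Client.SendMessageAsync(Topic, Messages).Wait();
KafkaRepo.ArchiveKafkaProducerMessageList(pending);
}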

KafkaRepo.Dispose();

}

}

App.config:

<?xml version="1.0" encoding="utf-8"?>

<configuration>

<configSections>

<section name="entityFramework" type="System.Data.Entity.Internal.ConfigFile.EntityFrameworkSection, EntityFramework, Version=6.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089" requirePermission="false"/>

</configSections>

<startup>

<supportedRuntime version="v4.0" sku=".NETFramework,Version=v4.5"/>

</startup>

<appSettings>

<add key="BrokerList" value="http://localhost:9092"/>

<add key="Topic" value="test2017"/>

<add key="FromBeginning" value="true"/>

</appSettings>

<entityFramework>

<defaultConnectionFactory type="System.Data.Entity.Infrastructure.LocalDbConnectionFactory, EntityFramework">

<parameters>

<parameter value="mssqllocaldb"/>

</parameters>

</defaultConnectionFactory>

<providers>

<provider invariantName="System.Data.SqlClient" type="System.Data.Entity.SqlServer.SqlProviderServices, EntityFramework.SqlServer"/>

</providers>

</entityFramework>

<connectionStrings>

<add name="KafkaModel" connectionString="Data Source=.;Initial Catalog=KafkaDemo;User ID=sa;Password=wu199010;multipleactiveresultsets=True;application name=EntityFramework" providerName="System.Data.SqlClient"/>

</connectionStrings>

<system.web>

<membership defaultProvider="ClientAuthenticationMembershipProvider">

<providers>

<add name="ClientAuthenticationMembershipProvider" type="System.Web.ClientServices.Providers.ClientFormsAuthenticationMembershipProvider, System.Web.Extensions, Version=4.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" serviceUri=""/>

</providers>

</membership>

<roleManager defaultProvider="ClientRoleProvider" enabled="true">

<providers>

<add name="ClientRoleProvider" type="System.Web.ClientServices.Providers.ClientRoleProvider, System.Web.Extensions, Version=4.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" serviceUri="" cacheTimeout="86400"/>

</providers>

</roleManager>

</system.web>

</configuration>

SQL script:

IF NOT EXISTS(SELECT 1 FROM sys.tables WHERE name = 'KafkaConsumerMessage')

BEGIN

CREATE TABLE [dbo].[KafkaConsumerMessage](

[KafkaConsumerMessageID] [int] IDENTITY(1,1) NOT NULL,

[Topic] [varchar](1000) NOT NULL,

[Partition] [int] NOT NULL,

[Offset] [bigint] NOT NULL,

[MessageContent] [nvarchar](max) NOT NULL,

[CreatedAt] [datetime] NOT NULL,

CONSTRAINT [PK_Kafka_Consumer_Message_KafkaConsumerMessageID] PRIMARY KEY CLUSTERED ([KafkaConsumerMessageID])

)

END

GO

IF NOT EXISTS(SELECT 1 FROM sys.tables WHERE name = 'KafkaProducerMessage')

BEGIN

CREATE TABLE [dbo].[KafkaProducerMessage](

[KafkaProducerMessageID] [int] IDENTITY(1,1) NOT NULL,

[Topic] [varchar](1000) NOT NULL,

[MessageContent] [nvarchar](max) NOT NULL,

[CreatedAt] [datetime] NOT NULL,

CONSTRAINT [PK_KafkaProducerMessage_KafkaProducerMessageID] PRIMARY KEY CLUSTERED ([KafkaProducerMessageID])

)

END

GO

IF NOT EXISTS(SELECT 1 FROM sys.tables WHERE name = 'KafkaProducerMessageArchive')

BEGIN

CREATE TABLE [dbo].[KafkaProducerMessageArchive](

[KafkaProducerMessageArchiveID] [int] IDENTITY(1,1) NOT NULL,

[KafkaProducerMessageID] [int] NOT NULL,

[Topic] [varchar](1000) NOT NULL,

[MessageContent] [nvarchar](max) NOT NULL,

[CreatedAt] [datetime] NOT NULL,

[ArchivedAt] [datetime] NOT NULL,

CONSTRAINT [PK_KafkaProducerMessageArchive_KafkaProducerMessageArchiveID] PRIMARY KEY CLUSTERED ([KafkaProducerMessageArchiveID])

)

END

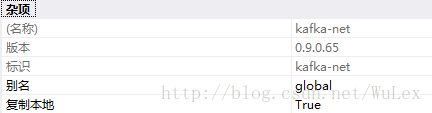

GO引用.net kafka 组件:

Start the ZooKeeper server and the Kafka server.

Open a DOS (cmd) window and run:

zkServer

Open a second DOS window and run:

kafka-server-start D:xxxxxxxxx\config\server.properties

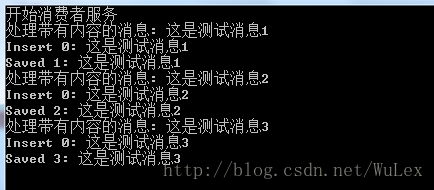
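Once the broker is listening on localhost:9092, the program reaches it through the BrokerList setting in App.config, which InitDefaultConfig splits on commas into Uri objects for KafkaOptions. The sketch below isolates that parsing so it can be checked without a running broker. BrokerListParser is a hypothetical name, and trimming spaces and skipping empty entries are additions of this sketch, not behaviour of the original code.

```csharp
using System;
using System.Linq;

// Hypothetical helper (not part of kafka-net): turns the comma-separated
// BrokerList appSetting into the Uri[] that InitDefaultConfig passes to KafkaOptions.
public static class BrokerListParser
{
    public static Uri[] Parse(string brokerList)
    {
        if (string.IsNullOrWhiteSpace(brokerList))
            throw new ArgumentException("BrokerList is empty", nameof(brokerList));

        return brokerList
            .Split(',')
            .Select(s => s.Trim())                      // tolerate "a, b" spacing
            .Where(s => s.Length > 0)                   // ignore a trailing comma
            .Select(s => new Uri(s, UriKind.Absolute))  // throws UriFormatException on a malformed entry
            .ToArray();
    }
}
```

With more than one broker the setting would simply list them, e.g. `http://host1:9092,http://host2:9092` (hypothetical hosts).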

The result is shown below:

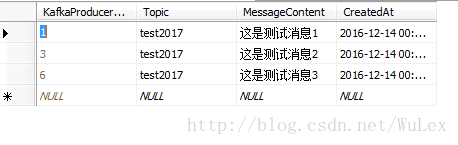

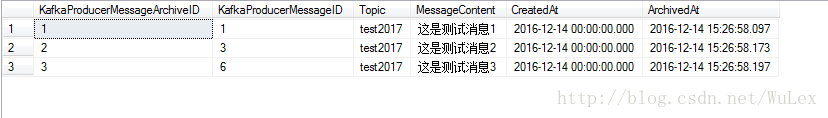
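The program is started with one command-line argument, produce or consume, and that first argument (args[0]) selects the mode. A minimal, testable version of the dispatch in Main is sketched below. ArgsParser and RunMode are hypothetical names, and throwing ArgumentException instead of a plain Exception is a choice made for this sketch.

```csharp
using System;

// Hypothetical mode enum and parser mirroring the checks in Main.
public enum RunMode { Produce, Consume }

public static class ArgsParser
{
    private const string InvalidArgMessage = "Valid args are: produce or consume";

    public static RunMode ParseMode(string[] args)
    {
        // The mode is the FIRST argument, compared case-insensitively.
        if (args == null || args.Length < 1)
            throw new ArgumentException(InvalidArgMessage);

        string intent = args[0];
        if (string.Equals(intent, "consume", StringComparison.OrdinalIgnoreCase))
            return RunMode.Consume;
        if (string.Equals(intent, "produce", StringComparison.OrdinalIgnoreCase))
            return RunMode.Produce;

        throw new ArgumentException(InvalidArgMessage);
    }
}
```

Main could then switch on `ArgsParser.ParseMode(args)` and call Produce() or Consume().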

Manually add three records to the producer table:

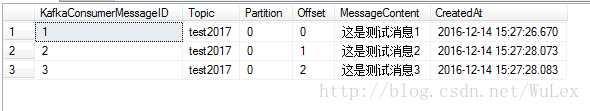
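Running the program with produce reads these rows through GetKafkaProducerMessageByTopic, sends them to the topic in one batch and moves them to KafkaProducerMessageArchive. The order of the last two steps matters: archiving only after the send has succeeded means a failed send leaves the rows in place for the next run. The sketch below shows that ordering with delegates standing in for kafka-net's Producer and for the repository; ProduceStep and SendThenArchive are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical sketch of the send-then-archive ordering.
// `send` stands in for Producer.SendMessageAsync(topic, messages).Wait();
// `archive` stands in for KafkaProducerRepository.ArchiveKafkaProducerMessage.
public static class ProduceStep
{
    public static int SendThenArchive(
        IList<KeyValuePair<int, string>> pendingRows,   // (KafkaProducerMessageID, MessageContent)
        Action<IList<string>> send,
        Action<int> archive)
    {
        if (pendingRows.Count == 0)
            return 0;                                   // nothing to send

        // If send throws, no row has been archived yet, so nothing is lost.
        send(pendingRows.Select(r => r.Value).ToList());

        foreach (var row in pendingRows)
            archive(row.Key);

        return pendingRows.Count;
    }
}
```

The trade-off is at-least-once delivery: if the send succeeds but archiving fails, the same rows are sent again on the next run, so consumers should tolerate duplicates.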

Consumer table:
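Each consumed message is stored with its Partition and Offset, and with FromBeginning set to false, Consume resumes from vwMaxOffsetByPartitionAndTopic. The SQL script above does not create that view; judging from its name and columns it presumably returns MAX(Offset) per Topic and Partition from KafkaConsumerMessage, which is an assumption. The sketch below reproduces that computation in memory together with the + 1 that Consume applies, because the stored offset belongs to the last message already processed and fetching should start at the next one. StoredConsumerMessage and ResumeOffsets are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for the KafkaConsumerMessage entity (only the fields needed here).
public sealed class StoredConsumerMessage
{
    public string Topic { get; set; }
    public int Partition { get; set; }
    public long Offset { get; set; }
}

// Assumed view definition (not in the original script):
//   SELECT Topic, [Partition], MAX([Offset]) AS MaxOffset
//   FROM dbo.KafkaConsumerMessage GROUP BY Topic, [Partition]
public static class ResumeOffsets
{
    // Returns partition -> next offset to fetch (highest stored offset + 1).
    // In Consume, each pair would become new OffsetPosition(partition, nextOffset).
    public static Dictionary<int, long> Compute(IEnumerable<StoredConsumerMessage> stored, string topic)
    {
        return stored
            .Where(m => m.Topic == topic)
            .GroupBy(m => m.Partition)
            .ToDictionary(g => g.Key, g => g.Max(m => m.Offset) + 1);
    }
}
```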
