HADOOP/HDFS Essay

HDFS Architecture

The core of Hadoop as a distributed system is storage (HDFS) and a resource manager (YARN) for the computing engines built on top of it.

Master/Slave: the distributed system follows the master/slave pattern.

Name Node

The NN is the master and the single active node. It manages the namespace of files and directories (the so-called metadata), keeps track of block locations, and allocates data nodes.

The metadata is much like that of a Linux file system: file name, size, owner/user, group, and permissions, plus HDFS-specific elements such as block IDs, replication factor, block size, and so on. The metadata is kept both in memory (for fast access) and on disk (for recovery). The NN also holds the block locations in memory, but it does not persist them; they come from the DNs. When a client accesses HDFS, it talks to the NN first, and the NN checks permissions and whether the file exists, just like the normal operation on a non-distributed Linux system.
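The split between persisted metadata and in-memory block locations can be sketched as below. This is a minimal illustration, not the actual NameNode data structures: the class names, field names, and the simplified owner/other permission check are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class FileMeta:
    """Per-file metadata the NN keeps (hypothetical field names)."""
    name: str
    size: int
    owner: str
    group: str
    permission: int                      # POSIX-style mode bits, e.g. 0o640
    replication: int = 3
    block_size: int = 128 * 1024 * 1024
    block_ids: list = field(default_factory=list)


class NameNodeState:
    def __init__(self):
        self.namespace = {}        # path -> FileMeta; in memory and on disk
        self.block_locations = {}  # block id -> set of DN ids; memory only

    def can_read(self, path, user):
        """Existence and permission check done before any data is touched.

        Simplification: only the owner and 'other' read bits are checked.
        """
        meta = self.namespace.get(path)
        if meta is None:
            raise FileNotFoundError(path)
        if user == meta.owner:
            return bool(meta.permission & 0o400)
        return bool(meta.permission & 0o004)
```

Only `namespace` would need to survive a restart in this sketch; `block_locations` starts empty and is filled in as DNs report their blocks.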

Data Node

The node that actually stores files, in the form of blocks.

Once a DN starts up, it sends its block report to the NN, like "I (DN-1) have blocks #1, #2, ...", and it keeps sending it periodically. The NN can therefore build a mapping of which block lives on which DNs in order to serve file access requests. A DN also sends a heartbeat every 3 seconds (by default) to report its status along with actual storage information (total, free, and used space, data transfers currently in progress, ...), which the NN uses for block allocation and load balancing. A DN is considered dead if the NN does not receive its heartbeat within about 10 minutes. What about replication? It is there for fault tolerance. If a block is lost or a DN goes down, there are other nodes holding the same blocks. Based on the replication factor, the system also automatically re-replicates blocks when the number of copies falls below the configured level.

A rack is nothing but a cabinet of machines with a dedicated power supply and network switch.
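As a rough sketch of how block reports and heartbeats could be turned into a block map and a liveness check, consider the following. The class and method names are hypothetical, and the 3-second and 10-minute values are simply the figures quoted above, not values read from a real configuration.

```python
HEARTBEAT_INTERVAL_S = 3      # interval quoted in the text
DEAD_AFTER_S = 10 * 60        # timeout quoted in the text


class BlockMap:
    def __init__(self):
        self.locations = {}   # block id -> set of DN ids
        self.last_seen = {}   # DN id -> time of last heartbeat (seconds)

    def block_report(self, dn, block_ids):
        # A report replaces everything previously known about this DN.
        for holders in self.locations.values():
            holders.discard(dn)
        for block in block_ids:
            self.locations.setdefault(block, set()).add(dn)

    def heartbeat(self, dn, now):
        self.last_seen[dn] = now

    def dead_nodes(self, now):
        return sorted(dn for dn, seen in self.last_seen.items()
                      if now - seen > DEAD_AFTER_S)

    def under_replicated(self, replication, now):
        # Blocks whose live replica count is below the requested factor.
        dead = set(self.dead_nodes(now))
        return sorted(block for block, holders in self.locations.items()
                      if len(holders - dead) < replication)
```

Time is passed in explicitly (`now`) so that the behavior can be exercised without waiting for real timeouts.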

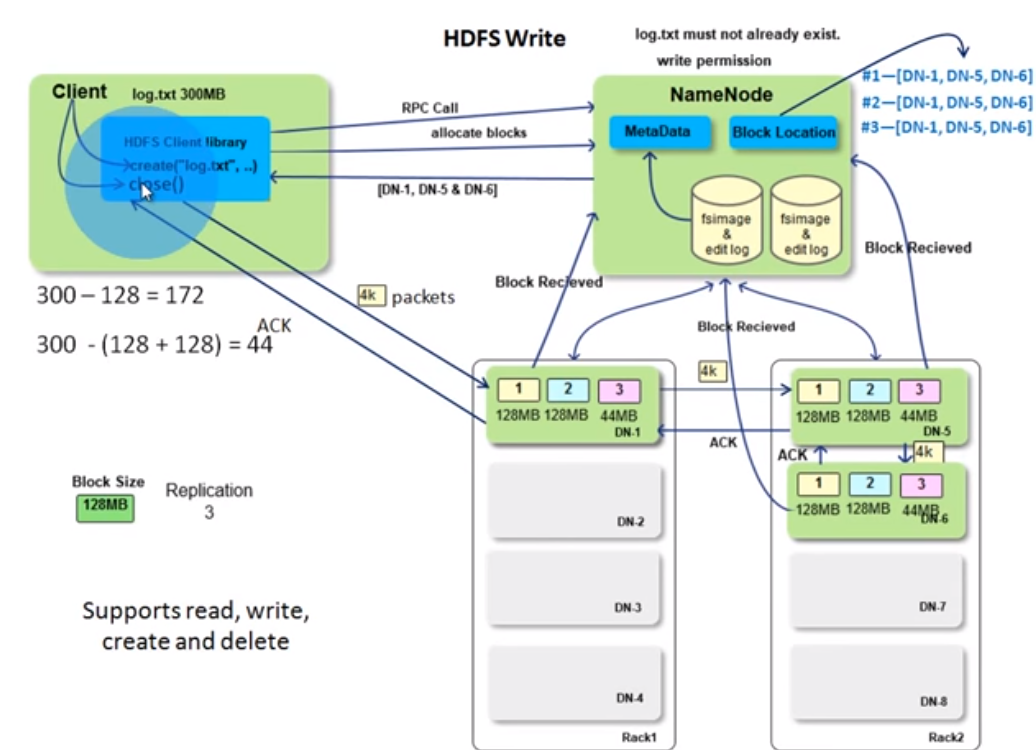

HDFS Write

This should be easy to understand if you know the HDFS architecture. The client uses the HDFS client library to manipulate files, and communication is done through RPC calls. As mentioned before, the NN checks the metadata to see whether the client is allowed to write the file. The client then asks the NN to allocate a block, and the NN returns a list of DNs (the NN knows the status of each DN) to which the client writes the first 128 MB block, which is buffered by the client library. This follows a leader-follower pattern: the write to the DNs is a pipeline in which the client sends data in small packets (64 KB by default) to the first DN, each DN forwards them to the next, and ACKs flow back along the pipeline, so the write is effectively synchronous. Each DN also reports to the NN once it has received a block, so the NN can build the block locations during the write as well. The client then continues with the next 128 MB block and loops until it reaches the end of the file. Finally, the client calls close() to indicate that the operation is complete.
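The block-by-block loop and the pipelined forwarding can be illustrated with a small simulation. This is a sketch under simplifying assumptions: no real networking, no failures, and the function names are made up for the example rather than taken from the HDFS client library.

```python
BLOCK_SIZE = 128 * 1024 * 1024  # 128 MB, the block size used in the text


def split_into_blocks(file_size, block_size=BLOCK_SIZE):
    """Return the size of each block for a file of file_size bytes."""
    sizes = []
    remaining = file_size
    while remaining > 0:
        sizes.append(min(block_size, remaining))
        remaining -= sizes[-1]
    return sizes


def pipeline_write(packet, pipeline, storage):
    """Store the packet on the head DN, forward it downstream, and
    return True only after every downstream DN has acknowledged."""
    if not pipeline:
        return True                      # end of pipeline: nothing left to ACK
    head, rest = pipeline[0], pipeline[1:]
    storage.setdefault(head, []).append(packet)
    return pipeline_write(packet, rest, storage)
```

For a 300 MB file, `split_into_blocks` yields two full 128 MB blocks and one 44 MB block; each block would get its own DN list from the NN before its packets are pushed through `pipeline_write`.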

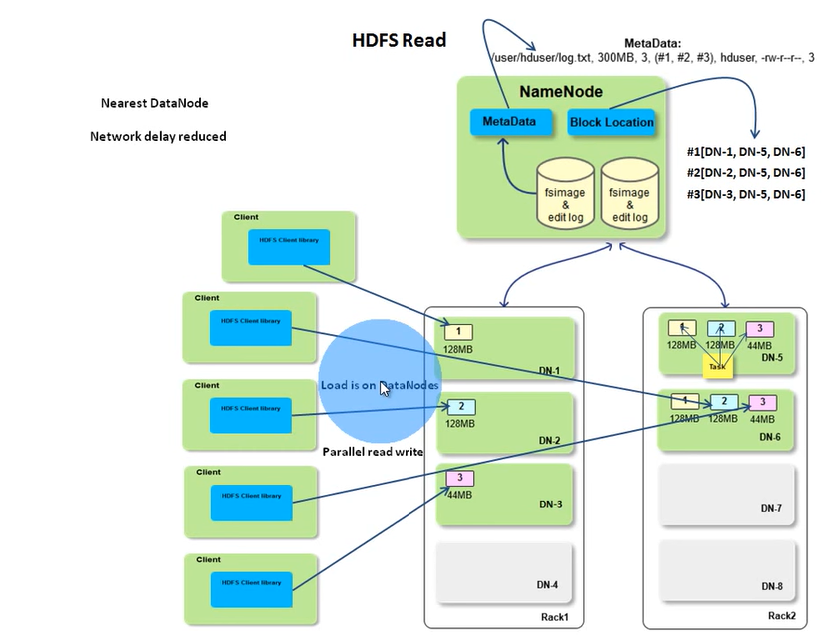

HDFS Read

As mentioned before, you need the HDFS Java client library to perform read operations such as opening a file and reading the stream. The client calls the NN through RPC to get the block IDs and block locations. The NN metadata holds the block IDs for a file, and the block location map holds the block-to-DN mapping. Both are in memory, so the lookup should be fast. The actual read happens between the client and the DNs. If the client runs on a DN, like a map task, the NN returns the block locations sorted by network distance from the client, which reduces network delay between racks. If the DN on which the map task is running holds the blocks it needs, the task reads them locally. If a read fails, the client switches to the next node in the location list. Because data transfer happens between clients (you may have many concurrent reads) and data nodes, and the name node only provides block locations, the load is distributed across the cluster. That is why HDFS is scalable. It may not be a good fit for storing a very large number of small files, as the name node may respond poorly when it has to manage too much metadata.
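Replica selection and failover on the read path can be sketched as follows. The distance values (0 for the same host, 2 for the same rack, 4 for a different rack) follow the convention commonly used to describe Hadoop's network topology; the function names and the `(rack, host)` tuple representation are assumptions for this example.

```python
def network_distance(a, b):
    """Distance between two (rack, host) locations."""
    if a == b:
        return 0          # same host: local read
    if a[0] == b[0]:
        return 2          # same rack
    return 4              # different rack


def read_block(client, replicas, fetch):
    """Try replicas from nearest to farthest; fall back on failure.

    fetch is a caller-supplied function that returns the block bytes
    for a DN or raises IOError.
    """
    for dn in sorted(replicas, key=lambda dn: network_distance(client, dn)):
        try:
            return fetch(dn)
        except IOError:
            continue      # switch to the next node in the location list
    raise IOError("no live replica for block")
```

In this sketch the sorting happens on the client side for brevity; in the description above the NN returns the list already sorted.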

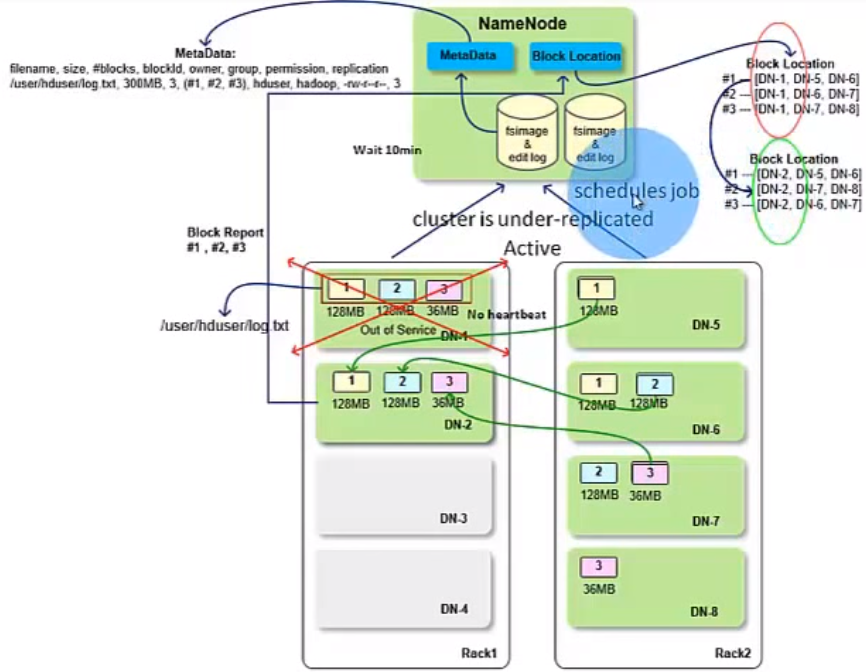

When a DN is down

As we know, HDFS is reliable. If a data node goes down (the NN does not receive its heartbeat within about 10 minutes), other data nodes still hold copies of the blocks that were on the failed node, depending on the replication level, so the system remains available. In this situation, however, the cluster is under-replicated, and the NN will instruct the remaining data nodes to copy the affected blocks to other available data nodes until the replication level is met again. When those data nodes send their block reports to the NN, the block locations are updated accordingly.

Trade-off between reliability and performance/network bandwidth

If you want more reliability, for example by setting the replication level to a large value (5?), writes become very expensive: they involve not only writing data to the disks of multiple data nodes but also transferring data across data nodes, so performance drops. The reverse also holds.
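One way to picture the re-replication step is as a planning function that, given the block map and the set of live DNs, lists which block should be copied from which surviving holder to which target. This is a simplified sketch: the function name is hypothetical, and target choice is purely alphabetical, whereas real placement also considers racks and free space.

```python
def plan_rereplication(locations, live_nodes, replication):
    """Return (block, source DN, target DN) copies needed to restore
    the replication level.

    locations:  dict of block id -> set of DN ids holding it
    live_nodes: set of DN ids currently alive
    """
    plan = []
    for block in sorted(locations):
        holders = sorted(locations[block] & live_nodes)
        missing = replication - len(holders)
        if missing <= 0 or not holders:
            continue      # fully replicated, or no surviving copy to read from
        candidates = sorted(live_nodes - set(holders))
        for target in candidates[:missing]:
            plan.append((block, holders[0], target))
    return plan
```

With blocks 1 and 2 each replicated three times and `dn3` dead, the plan contains one copy per block, sourced from a surviving holder.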
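A back-of-the-envelope estimate makes the cost of a higher replication level concrete. The model below is an assumption made for illustration: every replica is written to disk once, and every pipeline hop moves the full file over the network, with the first hop being free when the client itself runs on the first DN.

```python
def write_cost(file_bytes, replication, client_on_datanode=False):
    """Estimate bytes written to disk and bytes sent over the network
    for one file under a simple pipeline model."""
    disk_bytes = file_bytes * replication
    hops = replication - 1 if client_on_datanode else replication
    return {"disk_bytes": disk_bytes, "network_bytes": file_bytes * hops}
```

Under this model, going from a replication level of 3 to 5 raises both disk writes and network traffic for a remote client by two thirds.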
