Paper Reading - Show, Attend and Tell: Neural Image Caption Generation with Visual Attention ( ICML 2015 )

Link of the Paper: https://arxiv.org/pdf/1502.03044.pdf

Main Points:

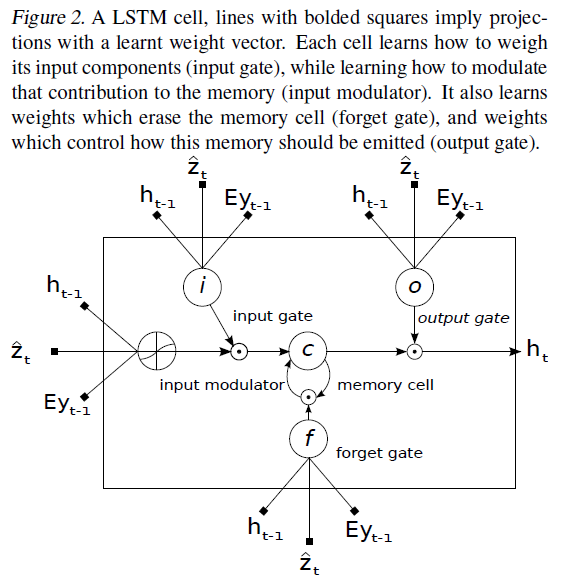

- Encoder-Decoder Framework: Encoder uses a convolutional neural network to extract a set of feature vectors which the authors refer to as annotation vectors. The extractor produces L vectors, each of which is a D-dimensional representation corresponding to a part of the image. a = { a1, ..., aL }, ai ∈ RD. In order to obtain a correspondence between the feature vectors and portions of the 2-D image, they extract features from a lower convolutional layer unlike previous work which instead used a fully connected layer. This allows the decoder to selectively focus on certain parts of an image by weighting a subset of all the feature vectors. Decoder uses a LSTM network to produce a caption by generating one word at every time step conditioned on a context vector, the previous hidden state and the previously generated words.

- Two attention-based image caption generators under a common framework: a "soft" deterministic attention mechanism trainable by standard back-propagation methods; and a "hard" stochastic attention mechanism trainable by maximizing an approximate variational lower bound or equivalently by Reinforce.

Other Key Points:

- Rather than compress an entire image into a static representation, attention allows for salient features to dynamically come to the forefront as needed. This is especially important when there is a lot of clutter in an image. Using representations ( such as those from the very top layer of a conv net ) that distill information in image down to the most salient objects is one effective solution that has been widely adopted in previous work. Unfortunately, this has one potential drawback of losing information which could be useful for richer, more descriptive captions. Using lower-level representation can help preserve this information.

Paper Reading - Show, Attend and Tell: Neural Image Caption Generation with Visual Attention ( ICML 2015 )的更多相关文章

- [Paper Reading] Show, Attend and Tell: Neural Image Caption Generation with Visual Attention

论文链接:https://arxiv.org/pdf/1502.03044.pdf 代码链接:https://github.com/kelvinxu/arctic-captions & htt ...

- 论文笔记:Show, Attend and Tell: Neural Image Caption Generation with Visual Attention

Show, Attend and Tell: Neural Image Caption Generation with Visual Attention 2018-08-10 10:15:06 Pap ...

- 论文:Show, Attend and Tell: Neural Image Caption Generation with Visual Attention-阅读总结

Show, Attend and Tell: Neural Image Caption Generation with Visual Attention-阅读总结 笔记不能简单的抄写文中的内容,得有自 ...

- Paper Reading - Show and Tell: A Neural Image Caption Generator ( CVPR 2015 )

Link of the Paper: https://arxiv.org/abs/1411.4555 Main Points: A generative model ( NIC, GoogLeNet ...

- [Paper Reading] Show and Tell: A Neural Image Caption Generator

论文链接:https://arxiv.org/pdf/1411.4555.pdf 代码链接:https://github.com/karpathy/neuraltalk & https://g ...

- [Paper Reading] Image Captioning using Deep Neural Architectures (arXiv: 1801.05568v1)

Main Contributions: A brief introduction about two different methods (retrieval based method and gen ...

- Paper Reading - CNN+CNN: Convolutional Decoders for Image Captioning

Link of the Paper: https://arxiv.org/abs/1805.09019 Innovations: The authors propose a CNN + CNN fra ...

- Paper Reading: Stereo DSO

开篇第一篇就写一个paper reading吧,用markdown+vim写东西切换中英文挺麻烦的,有些就偷懒都用英文写了. Stereo DSO: Large-Scale Direct Sparse ...

- Paper Reading - Mind’s Eye: A Recurrent Visual Representation for Image Caption Generation ( CVPR 2015 )

Link of the Paper: https://ieeexplore.ieee.org/document/7298856/ A Correlative Paper: Learning a Rec ...

随机推荐

- python人工智能爬虫系列:怎么查看python版本_电脑计算机编程入门教程自学

首发于:python人工智能爬虫系列:怎么查看python版本_电脑计算机编程入门教程自学 http://jianma123.com/viewthread.aardio?threadid=431 本文 ...

- 使用 jTessBoxEditor 生成 tesseract-orc 的字典

本文使用图片方式记录使用 jTessBoxEditor 一站式生成自动文件的方式 首先感谢 Tesseract OCR 讨论群 389402579 的管理员[创世倾城 QQ:457606663] 的帮 ...

- HTML+css3 图片放大效果

<div class="enlarge"> <img src="xx" alt="图片"/> </div> ...

- MySQL5.7主从同步--点位方式及GTID方式

MySQL5.6加入了GTID的新特性,其全称是Global Transaction Identifier,可简化MySQL的主从切换以及Failover.GTID用于在binlog中唯一标识一个事务 ...

- thinkphp5访问sql2000数据库

大家都知道php跟mysql是绝配,但是因为有时候工作需要,要求php访问操作sql2000,怎么办呢? 一般来说有两种方式: 1. sqlsrv驱动方式 2. odbc方式 sqlsrv驱动方式,因 ...

- UEditor代码实现高亮显示

在公司开发一个论坛系统,由于用的是UEditor(百度编辑器),单独使用的话,里面的代码不会高亮,网上找了很多,最后决定使用 highlight.js 实现代码高亮显示.效果如下: 这个是我修改其他的 ...

- linux popen 获取 ip test ok

任务:unix,linux通过c程序获取本机IP. 1. 标准I/O库函数相对于系统调用的函数多了个缓冲区(,buf),安全性上通过buf 防溢出. 2.printf 这类输出函数中“ ”若包含“记得 ...

- java getter和setter的方法及内部类的调用

class Test{ public static void main(String[]args){ Person person=new Person(); person.age=22; person ...

- JavaScript’s “this”: how it works, where it can trip you up

JavaScript’s “this”: how it works, where it can trip you up http://speakingjs.com/es5/ch23.html#_ind ...

- Luogu P3120 [USACO15FEB]牛跳房子(金)Cow Hopscotch (Gold)

题目传送门 这是一道典型的记忆化搜索题. f[x][y]表示以x,y为右下角的方案数. code: #include <cstdio> #define mod 1000000007 usi ...