Porting TensorFlow Lite to the ARM Platform (i.MX6)

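I. Steps on the Linux host:

1. Install the cross-compilation SDK (this applies only to the i.MX6; other chips need their own matching cross-compilation SDK)

For how to build it, see: "Manually building the cross-compilation SDK for the i.MX6 series"

2. Download TensorFlow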

git clone https://github.com/tensorflow/tensorflow.git

cd tensorflow

git checkout r1.10

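The TensorFlow version must match the Bazel version (and the CUDA/cuDNN versions); otherwise you will run into compatibility problems:

TensorFlow r1.10: Python 2.7, 3.6; Bazel 0.18.0 to 0.19.2

TensorFlow r1.12: Python 2.7, 3.6; Bazel 0.18.0 to 0.19.2

TensorFlow r1.14: Python 2.7, 3.6; Bazel 0.24.0 to 0.25.2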
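Before building, it can help to confirm that the installed Bazel lies inside the range listed for your branch. The following is a minimal sketch. It assumes GNU sort -V is available, as it is on Ubuntu. The helper names version_in_range and bazel_label are hypothetical. The parser also assumes that bazel version prints a line of the form "Build label: 0.18.1".

```bash
#!/usr/bin/env bash
# Sketch: check whether a version lies inside an inclusive [min, max] range.
# version_in_range and bazel_label are hypothetical helpers; GNU sort -V is assumed.

# Usage: version_in_range VERSION MIN MAX  -> exit status 0 if MIN <= VERSION <= MAX
version_in_range() {
  local ver="$1" min="$2" max="$3"
  # The smaller of (min, ver) must be min, and the larger of (ver, max) must be max.
  [[ "$(printf '%s\n%s\n' "$min" "$ver" | sort -V | head -n 1)" == "$min" ]] &&
    [[ "$(printf '%s\n%s\n' "$ver" "$max" | sort -V | tail -n 1)" == "$max" ]]
}

# Reads `bazel version` output on stdin and prints X from the "Build label: X" line.
bazel_label() {
  sed -n 's/^Build label: \([0-9.]*\).*$/\1/p'
}
```

For r1.10, for example, you could run: version_in_range "$(bazel version | bazel_label)" 0.18.0 0.19.2 && echo "Bazel OK for r1.10"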

3. Download and install the Bazel build tool

Install the dependencies:

sudo apt-get install pkg-config zip g++ zlib1g-dev unzip

Download the Bazel installer:

wget https://github.com/bazelbuild/bazel/releases/download/0.18.1/bazel-0.18.1-installer-linux-x86_64.sh

Install Bazel:

chmod +x bazel-0.18.1-installer-linux-x86_64.sh

./bazel-0.18.1-installer-linux-x86_64.sh --user

Set the environment variable:

Run vi ~/.bashrc and append this line at the end: export PATH="$PATH:$HOME/bin"

source ~/.bashrc
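A tilde inside double quotes (as in ":~/bin") is not expanded by the shell, so $HOME is the safer spelling in the PATH line. If the setup is run more than once, the line also gets appended again. The following minimal sketch appends it only when an identical line is not already present. The helper name append_once is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: append a line to a file only if that exact line is not already there.
# append_once is a hypothetical helper name.
append_once() {
  local line="$1" file="$2"
  touch "$file"
  # -q: quiet, -x: whole-line match, -F: fixed string (no regex), --: end of options
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Single quotes keep $PATH and $HOME literal inside ~/.bashrc, so they are
# expanded each time the file is sourced:
# append_once 'export PATH="$PATH:$HOME/bin"' ~/.bashrc && source ~/.bashrc
```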

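(If you only want to test the demo on the ARM board, you can skip steps 4 to 8 and go straight to step 9.)

4. Configure the build:

In the TensorFlow source root, run:

./configure (for a Linux host build keep the default optimization flag, -march=native; for an ARM build set it to the matching value, e.g. -march=armv7-a)

5. Build the pip package tool: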

bazel build --config=opt //tensorflow/tools/pip_package:build_pip_package

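7. Build the package:

./bazel-bin/tensorflow/tools/pip_package/build_pip_package /tmp/tensorflow_pkg

8. Install the package:

pip install /tmp/tensorflow_pkg/tensorflow-version-tags.whl (replace version-tags with the version and tags of the wheel that was actually generated)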
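The real wheel name encodes the version, the Python tag and the platform, so it differs from machine to machine. The following minimal sketch picks it up with a glob instead of typing it. It refuses to continue when the directory holds zero wheels or more than one. The helper name find_single_wheel is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: print the single tensorflow-*.whl in a directory, or fail if there are
# zero or several. find_single_wheel is a hypothetical helper name.
find_single_wheel() {
  local dir="${1:-/tmp/tensorflow_pkg}"
  # Without nullglob, an unmatched glob stays as the literal pattern,
  # which the -e test below rejects.
  local wheels=( "$dir"/tensorflow-*.whl )
  if (( ${#wheels[@]} != 1 )) || [[ ! -e "${wheels[0]}" ]]; then
    echo "expected exactly one tensorflow-*.whl in $dir" >&2
    return 1
  fi
  printf '%s\n' "${wheels[0]}"
}
```

Usage: whl="$(find_single_wheel /tmp/tensorflow_pkg)" && pip install "$whl". This way pip is never called with an empty argument when the lookup fails.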

9. Download the dependencies:

./tensorflow/contrib/lite/tools/make/download_dependencies.sh (the location differs slightly between versions; the path here is for r1.10)

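10. Build TensorFlow Lite:

Method 1 (taken from online guides; I spent several days debugging it and it never produced the library, so it is still unverified!)

At present TensorFlow only provides a build script for the Raspberry Pi: ./tensorflow/contrib/lite/tools/make/build_rpi_lib.sh. That script targets ARMv7; even if your target platform is ARMv8, do not change it, because targeting ARMv7 lets the build be optimized and the result run faster.

So a build_imx6_lib.sh has to be written for the i.MX6: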

#!/bin/bash
set -e
SCRIPT_DIR="$(cd "$(dirname "${BASH_SOURCE[0]}")" && pwd)"
cd "$SCRIPT_DIR/../../.."
CC_PREFIX=arm-poky-linux-gnueabi- make -j 3 -f tensorflow/contrib/lite/Makefile TARGET=imx6 TARGET_ARCH=armv7-a

The static library is generated at:

./tensorflow/contrib/lite/tools/make/gen/rpi_arm7l/lib/libtensorflow-lite.a

Method 2 (uses the ARM (i.MX6) cross-compilation toolchain; the result can be used on the i.MX6):

1. Open tensorflow/contrib/lite/kernels/internal/BUILD and add a config_setting for armv7a:

config_setting(
    name = "armv7a",
    values = {"cpu": "armv7a"},
)

Add the following entry to NEON_FLAGS_IF_APPLICABLE:

"armv7a": [
    "-O3",
    "-mfpu=vfpv3",
    "-mfloat-abi=hard",
],

Add the following entries to the select options of the tensor_utils cc_library:

":armv6": [
    ":neon_tensor_utils",
],
":armv7a": [
    ":neon_tensor_utils",
],

2. In the TensorFlow root directory, create a file named build_armv7_tflite.sh:

#!/bin/bash
bazel build --copt="-fPIC" --copt="-march=armv6" \
--copt="-mfloat-abi=hard" \
--copt="-mfpu=vfpv3" \
--copt="-funsafe-math-optimizations" \
--copt="-Wno-unused-function" \
--copt="-Wno-sign-compare" \
--copt="-ftree-vectorize" \
--copt="-fomit-frame-pointer" \
--cxxopt='--std=c++11' \
--cxxopt="-Wno-maybe-uninitialized" \
--cxxopt="-Wno-narrowing" \
--cxxopt="-Wno-unused" \
--cxxopt="-Wno-comment" \
--cxxopt="-Wno-unused-function" \
--cxxopt="-Wno-sign-compare" \
--cxxopt="-funsafe-math-optimizations" \
--linkopt="-lstdc++" \
--linkopt="-mfloat-abi=hard" \
--linkopt="-mfpu=vfpv3" \
--linkopt="-funsafe-math-optimizations" \
--verbose_failures \
--strip=always \
--crosstool_top=//armv6-compiler:toolchain --cpu=armv7 --config=opt \
tensorflow/contrib/lite/examples/label_image

3. Running build_armv7_tflite.sh fails with: .../.../real-ld: unrecognized option: --icf=all

Fix: open ./build_def.bzl and delete every --icf=all flag in it.

After the build, the label_image binary is generated under bazel-bin.

4. Generated headers and library files:

libbuiltin_ops.a libframework.a libneon_tensor_utils.a libquantization_util.a libtensor_utils.a

libcontext.a libfarmhash.a libgemm_support.a libportable_tensor_utils.a libstring_util.a

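11. Build the example program (label_image):

label_image is not built by default, so the Makefile has to be modified, using the existing minimal example as a template. The main additions are these three variables:

LABEL_IMAGE_SRCS

LABEL_IMAGE_PATH

LABEL_IMAGE_OBJS

The modified Makefile: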

# Find where we're running from, so we can store generated files here.
ifeq ($(origin MAKEFILE_DIR), undefined)
MAKEFILE_DIR := $(shell dirname $(realpath $(lastword $(MAKEFILE_LIST))))
endif

# Try to figure out the host system
HOST_OS :=
ifeq ($(OS),Windows_NT)
HOST_OS = WINDOWS
else
UNAME_S := $(shell uname -s)
ifeq ($(UNAME_S),Linux)
HOST_OS := LINUX
endif
ifeq ($(UNAME_S),Darwin)
HOST_OS := OSX
endif
endif

#HOST_ARCH := $(shell if [[ $(shell uname -m) =~ i[345678]86 ]]; then echo x86_32; else echo $(shell uname -m); fi)

# Self-hosting
TARGET_ARCH := ${HOST_ARCH}
CROSS := imx6
$(warning "CROSS :$(CROSS) HOST_ARCH:$(HOST_ARCH) TARGET_ARCH:$(TARGET_ARCH) TARGET_TOOLCHAIN_PREFIX:$(TARGET_TOOLCHAIN_PREFIX)")

# Cross compiling
ifeq ($(CROSS),imx6)
TARGET_ARCH := armv7-a
TARGET_TOOLCHAIN_PREFIX := arm-poky-linux-gnueabi-
endif

ifeq ($(CROSS),rpi)
TARGET_ARCH := armv7l
TARGET_TOOLCHAIN_PREFIX := arm-linux-gnueabihf-
endif

ifeq ($(CROSS),riscv)
TARGET_ARCH := riscv
TARGET_TOOLCHAIN_PREFIX := riscv32-unknown-elf-
endif
ifeq ($(CROSS),stm32f7)
TARGET_ARCH := armf7
TARGET_TOOLCHAIN_PREFIX := arm-none-eabi-
endif
ifeq ($(CROSS),stm32f1)
TARGET_ARCH := armm1
TARGET_TOOLCHAIN_PREFIX := arm-none-eabi-
endif

# Where compiled objects are stored.
OBJDIR := $(MAKEFILE_DIR)/gen/obj/
BINDIR := $(MAKEFILE_DIR)/gen/bin/
LIBDIR := $(MAKEFILE_DIR)/gen/lib/
GENDIR := $(MAKEFILE_DIR)/gen/obj/

LIBS :=
ifeq ($(TARGET_ARCH),x86_64)
CXXFLAGS += -fPIC -DGEMMLOWP_ALLOW_SLOW_SCALAR_FALLBACK -pthread # -msse4.2
endif

ifeq ($(TARGET_ARCH),armv7-a)
CXXFLAGS += -pthread -fPIC
LIBS += -ldl
endif

ifeq ($(TARGET_ARCH),armv7l)
CXXFLAGS += -mfpu=neon -pthread -fPIC
LIBS += -ldl
endif

ifeq ($(TARGET_ARCH),riscv)
# CXXFLAGS += -march=gap8
CXXFLAGS += -DTFLITE_MCU
LIBS += -ldl
BUILD_TYPE := micro
endif

ifeq ($(TARGET_ARCH),armf7)
CXXFLAGS += -DGEMMLOWP_ALLOW_SLOW_SCALAR_FALLBACK -DTFLITE_MCU
CXXFLAGS += -fno-rtti -fmessage-length=0 -fno-exceptions -fno-builtin -ffunction-sections -fdata-sections
CXXFLAGS += -funsigned-char -MMD
CXXFLAGS += -mcpu=cortex-m7 -mthumb -mfpu=fpv5-sp-d16 -mfloat-abi=softfp
CXXFLAGS += '-std=gnu++11' '-fno-rtti' '-Wvla' '-c' '-Wall' '-Wextra' '-Wno-unused-parameter' '-Wno-missing-field-initializers' '-fmessage-length=0' '-fno-exceptions' '-fno-builtin' '-ffunction-sections' '-fdata-sections' '-funsigned-char' '-MMD' '-fno-delete-null-pointer-checks' '-fomit-frame-pointer' '-Os'
LIBS += -ldl
BUILD_TYPE := micro
endif
ifeq ($(TARGET_ARCH),armm1)
CXXFLAGS += -DGEMMLOWP_ALLOW_SLOW_SCALAR_FALLBACK -mcpu=cortex-m1 -mthumb -DTFLITE_MCU
CXXFLAGS += -fno-rtti -fmessage-length=0 -fno-exceptions -fno-builtin -ffunction-sections -fdata-sections
CXXFLAGS += -funsigned-char -MMD
LIBS += -ldl
endif

# Settings for the host compiler.
#CXX := $(CC_PREFIX) ${TARGET_TOOLCHAIN_PREFIX}g++
#CXX := ${TARGET_TOOLCHAIN_PREFIX}g++ -march=armv7-a -mfpu=neon -mfloat-abi=hard -mcpu=cortex-a9 --sysroot=/opt/fsl-imx-x11/4.1.15-2.1.0/sysroots/x86_64-pokysdk-linux/usr/bin/arm-poky-linux-gnueabi
CXXFLAGS += -O3 -DNDEBUG
CCFLAGS := ${CXXFLAGS}
#CXXFLAGS += --std=c++11
CXXFLAGS += --std=c++0x #wly
#CC := ${TARGET_TOOLCHAIN_PREFIX}gcc
#AR := ${TARGET_TOOLCHAIN_PREFIX}ar
CFLAGS :=
LDOPTS :=
LDOPTS += -L/usr/local/lib
ARFLAGS := -r

INCLUDES := \
-I. \
-I$(MAKEFILE_DIR)/../../../ \
-I$(MAKEFILE_DIR)/downloads/ \
-I$(MAKEFILE_DIR)/downloads/eigen \
-I$(MAKEFILE_DIR)/downloads/gemmlowp \
-I$(MAKEFILE_DIR)/downloads/neon_2_sse \
-I$(MAKEFILE_DIR)/downloads/farmhash/src \
-I$(MAKEFILE_DIR)/downloads/flatbuffers/include \
-I$(GENDIR)
# This is at the end so any globally-installed frameworks like protobuf don't
# override local versions in the source tree.
#INCLUDES += -I/usr/local/include
INCLUDES += -I/usr/include
LIBS += \
-lstdc++ \
-lpthread \
-lm \
-lz

# If we're on Linux, also link in the dl library.
ifeq ($(HOST_OS),LINUX)
LIBS += -ldl
endif

include $(MAKEFILE_DIR)/ios_makefile.inc
ifeq ($(CROSS),rpi)
#include $(MAKEFILE_DIR)/rpi_makefile.inc
endif
ifeq ($(CROSS),imx6)
include $(MAKEFILE_DIR)/imx6_makefile.inc
endif

# This library is the main target for this makefile. It will contain a minimal
# runtime that can be linked in to other programs.
LIB_NAME := libtensorflow-lite.a
LIB_PATH := $(LIBDIR)$(LIB_NAME)

# A small example program that shows how to link against the library.
MINIMAL_PATH := $(BINDIR)minimal
LABEL_IMAGE_PATH := $(BINDIR)label_image

MINIMAL_SRCS := \
tensorflow/contrib/lite/examples/minimal/minimal.cc
MINIMAL_OBJS := $(addprefix $(OBJDIR), \
$(patsubst %.cc,%.o,$(patsubst %.c,%.o,$(MINIMAL_SRCS))))

LABEL_IMAGE_SRCS := \
tensorflow/contrib/lite/examples/label_image/label_image.cc \
tensorflow/contrib/lite/examples/label_image/bitmap_helpers.cc
LABEL_IMAGE_OBJS := $(addprefix $(OBJDIR), \
$(patsubst %.cc,%.o,$(patsubst %.c,%.o,$(LABEL_IMAGE_SRCS))))

# What sources we want to compile, must be kept in sync with the main Bazel
# build files.

PROFILER_SRCS := \
tensorflow/contrib/lite/profiling/time.cc
PROFILE_SUMMARIZER_SRCS := \
tensorflow/contrib/lite/profiling/profile_summarizer.cc \
tensorflow/core/util/stats_calculator.cc

CORE_CC_ALL_SRCS := \
$(wildcard tensorflow/contrib/lite/*.cc) \
$(wildcard tensorflow/contrib/lite/*.c)
ifneq ($(BUILD_TYPE),micro)
CORE_CC_ALL_SRCS += \
$(wildcard tensorflow/contrib/lite/kernels/*.cc) \
$(wildcard tensorflow/contrib/lite/kernels/internal/*.cc) \
$(wildcard tensorflow/contrib/lite/kernels/internal/optimized/*.cc) \
$(wildcard tensorflow/contrib/lite/kernels/internal/reference/*.cc) \
$(PROFILER_SRCS) \
$(wildcard tensorflow/contrib/lite/kernels/*.c) \
$(wildcard tensorflow/contrib/lite/kernels/internal/*.c) \
$(wildcard tensorflow/contrib/lite/kernels/internal/optimized/*.c) \
$(wildcard tensorflow/contrib/lite/kernels/internal/reference/*.c) \
$(wildcard tensorflow/contrib/lite/downloads/farmhash/src/farmhash.cc) \
$(wildcard tensorflow/contrib/lite/downloads/fft2d/fftsg.c)
endif
# Remove any duplicates.
CORE_CC_ALL_SRCS := $(sort $(CORE_CC_ALL_SRCS))
CORE_CC_EXCLUDE_SRCS := \
$(wildcard tensorflow/contrib/lite/*test.cc) \
$(wildcard tensorflow/contrib/lite/*/*test.cc) \
$(wildcard tensorflow/contrib/lite/*/*/*test.cc) \
$(wildcard tensorflow/contrib/lite/*/*/*/*test.cc) \
$(wildcard tensorflow/contrib/lite/kernels/test_util.cc) \
$(MINIMAL_SRCS) \
$(LABEL_IMAGE_SRCS)
ifeq ($(BUILD_TYPE),micro)
CORE_CC_EXCLUDE_SRCS += \
tensorflow/contrib/lite/model.cc \
tensorflow/contrib/lite/nnapi_delegate.cc
endif
# Filter out all the excluded files.
TF_LITE_CC_SRCS := $(filter-out $(CORE_CC_EXCLUDE_SRCS), $(CORE_CC_ALL_SRCS))
# File names of the intermediate files target compilation generates.
TF_LITE_CC_OBJS := $(addprefix $(OBJDIR), \
$(patsubst %.cc,%.o,$(patsubst %.c,%.o,$(TF_LITE_CC_SRCS))))
LIB_OBJS := $(TF_LITE_CC_OBJS)

# Benchmark sources
BENCHMARK_SRCS_DIR := tensorflow/contrib/lite/tools/benchmark
BENCHMARK_ALL_SRCS := $(TFLITE_CC_SRCS) \
$(wildcard $(BENCHMARK_SRCS_DIR)/*.cc) \
$(PROFILE_SUMMARIZER_SRCS)

BENCHMARK_SRCS := $(filter-out \
$(wildcard $(BENCHMARK_SRCS_DIR)/*_test.cc), \
$(BENCHMARK_ALL_SRCS))

BENCHMARK_OBJS := $(addprefix $(OBJDIR), \
$(patsubst %.cc,%.o,$(patsubst %.c,%.o,$(BENCHMARK_SRCS))))

# For normal manually-created TensorFlow C++ source files.
$(OBJDIR)%.o: %.cc
	@mkdir -p $(dir $@)
	$(CXX) $(CXXFLAGS) $(INCLUDES) -c $< -o $@
# For normal manually-created TensorFlow C++ source files.
$(OBJDIR)%.o: %.c
	@mkdir -p $(dir $@)
	$(CC) $(CCFLAGS) $(INCLUDES) -c $< -o $@

# The target that's compiled if there's no command-line arguments.
all: $(LIB_PATH) $(MINIMAL_PATH) $(LABEL_IMAGE_PATH) $(BENCHMARK_BINARY)

# The target that's compiled for micro-controllers
micro: $(LIB_PATH)

# Gathers together all the objects we've compiled into a single '.a' archive.
$(LIB_PATH): $(LIB_OBJS)
	@mkdir -p $(dir $@)
	$(AR) $(ARFLAGS) $(LIB_PATH) $(LIB_OBJS)

$(MINIMAL_PATH): $(MINIMAL_OBJS) $(LIB_PATH)
	@mkdir -p $(dir $@)
	$(CXX) $(CXXFLAGS) $(INCLUDES) \
	-o $(MINIMAL_PATH) $(MINIMAL_OBJS) \
	$(LIBFLAGS) $(LIB_PATH) $(LDFLAGS) $(LIBS)

# $(LABEL_IMAGE_PATH): $(LABEL_IMAGE_OBJS) $(LIBS)
$(LABEL_IMAGE_PATH) : $(LABEL_IMAGE_OBJS) $(LIB_PATH)
	@mkdir -p $(dir $@)
	$(CXX) $(CXXFLAGS) $(INCLUDES) \
	-o $(LABEL_IMAGE_PATH) $(LABEL_IMAGE_OBJS) \
	$(LIBFLAGS) $(LIB_PATH) $(LDFLAGS) $(LIBS)

$(BENCHMARK_LIB) : $(LIB_PATH) $(BENCHMARK_OBJS)
	@mkdir -p $(dir $@)
	$(AR) $(ARFLAGS) $(BENCHMARK_LIB) $(LIB_OBJS) $(BENCHMARK_OBJS)

benchmark_lib: $(BENCHMARK_LIB)
$(info $(BENCHMARK_BINARY))
$(BENCHMARK_BINARY) : $(BENCHMARK_LIB)
	@mkdir -p $(dir $@)
	$(CXX) $(CXXFLAGS) $(INCLUDES) \
	-o $(BENCHMARK_BINARY) \
	$(LIBFLAGS) $(BENCHMARK_LIB) $(LDFLAGS) $(LIBS)

benchmark: $(BENCHMARK_BINARY)

# Gets rid of all generated files.
clean:
	rm -rf $(MAKEFILE_DIR)/gen

# Gets rid of target files only, leaving the host alone. Also leaves the lib
# directory untouched deliberately, so we can persist multiple architectures
# across builds for iOS and Android.
cleantarget:
	rm -rf $(OBJDIR)
	rm -rf $(BINDIR)

$(DEPDIR)/%.d: ;
.PRECIOUS: $(DEPDIR)/%.d

-include $(patsubst %,$(DEPDIR)/%.d,$(basename $(TF_CC_SRCS)))
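The Makefile above comments out its own CXX, CC and AR assignments. GNU make therefore takes these variables from the environment when they are set there. Otherwise it falls back to its built-in defaults: g++, cc and ar. On the PC those defaults produce x86 objects, unless imx6_makefile.inc (not shown here) sets the variables. Before running the build script, it is worth checking what make will pick up. The following is a minimal sketch. The helper name show_make_tools is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: show which C++ compiler, C compiler and archiver make will use, and
# check that each program exists. The fallbacks mirror GNU make's built-in
# defaults (CXX=g++, CC=cc, AR=ar). show_make_tools is a hypothetical helper.
show_make_tools() {
  local status=0 t prog
  for t in "${CXX:-g++}" "${CC:-cc}" "${AR:-ar}"; do
    # SDK-style variables may carry flags after the program name; keep the first word.
    prog="${t%% *}"
    if command -v "$prog" >/dev/null 2>&1; then
      echo "ok: $t"
    else
      echo "missing: $prog" >&2
      status=1
    fi
  done
  return "$status"
}
```

Run show_make_tools in the same shell that will run the build script. If it reports plain g++ rather than an arm-poky-linux-gnueabi- compiler, the resulting objects will target the PC, not the board.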

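Run: ./tensorflow/contrib/lite/tools/make/build_imx6_lib.sh

Some problems will probably come up during compilation; fix them one at a time.

When the build finishes, the executable label_image is generated in ./tensorflow/contrib/lite/tools/make/gen/rpi_arm7l/bin

Here are some of the problems I ran into:

1. ./tensorflow/contrib/lite/profiling/profile_summarizer.cc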

diff --git a/tensorflow/contrib/lite/profiling/profile_summarizer.cc b/tensorflow/contrib/lite/profiling/profile_summarizer.cc
old mode 100644
new mode 100755
index c37a096..590cd21
--- a/tensorflow/contrib/lite/profiling/profile_summarizer.cc
+++ b/tensorflow/contrib/lite/profiling/profile_summarizer.cc
@@ -83,7 +83,8 @@ OperatorDetails GetOperatorDetails(const tflite::Interpreter& interpreter,
   OperatorDetails details;
   details.name = op_name;
   if (profiling_string) {
-    details.name += ":" + string(profiling_string);
+    //wly
+    details.name += ":" + std::string(profiling_string);
   }
   details.inputs = GetTensorNames(interpreter, inputs);
   details.outputs = GetTensorNames(interpreter, outputs);

2. ./tensorflow/contrib/lite/tools/benchmark/benchmark_params.h

diff --git a/tensorflow/contrib/lite/tools/benchmark/benchmark_params.h b/tensorflow/contrib/lite/tools/benchmark/benchmark_params.h
old mode 100644
new mode 100755
index 33448dd..e7f63ff
--- a/tensorflow/contrib/lite/tools/benchmark/benchmark_params.h
+++ b/tensorflow/contrib/lite/tools/benchmark/benchmark_params.h
@@ -47,7 +47,8 @@ class BenchmarkParam {
   virtual ~BenchmarkParam() {}
   BenchmarkParam(ParamType type) : type_(type) {}
 
- private:
+ //private:
+ public: //wly
   static void AssertHasSameType(ParamType a, ParamType b);
   template <typename T>
   static ParamType GetValueType();

3. ./tensorflow/contrib/lite/kernels/internal/reference/reference_ops.h

diff --git a/tensorflow/contrib/lite/kernels/internal/reference/reference_ops.h b/tensorflow/contrib/lite/kernels/internal/reference/reference_ops.h
old mode 100644
new mode 100755
index 2d40f17..43c54dc
--- a/tensorflow/contrib/lite/kernels/internal/reference/reference_ops.h
+++ b/tensorflow/contrib/lite/kernels/internal/reference/reference_ops.h
@@ -24,8 +24,9 @@ limitations under the License.
 #include <type_traits>
 
-#include "third_party/eigen3/Eigen/Core"
+//#include "third_party/eigen3/Eigen/Core""
 #include "tensorflow/contrib/lite/kernels/internal/common.h"
 #include "tensorflow/contrib/lite/kernels/internal/quantization_util.h"
 #include "tensorflow/contrib/lite/kernels/internal/round.h"

4. The protobuf package has to be downloaded.

5. Change -std=c++11 to -std=c++0x.
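GCC only gained the -std=c++11 spelling in release 4.7, and older releases accept only -std=c++0x. That is one likely reason a toolchain rejects the newer flag. Rather than guess, you can probe the compiler directly. The following is a minimal sketch. The helper name cxx_accepts_std is hypothetical. It compiles a trivial program from stdin with -fsyntax-only, so no output file is written.

```bash
#!/usr/bin/env bash
# Sketch: does a C++ compiler accept a given -std= flag?
# cxx_accepts_std is a hypothetical helper; pass only the compiler program as $1.
cxx_accepts_std() {   # $1 = compiler, $2 = flag, e.g. -std=c++11
  "$1" "$2" -x c++ -fsyntax-only - >/dev/null 2>&1 <<'EOF'
int main() { return 0; }
EOF
}

# if ! cxx_accepts_std arm-poky-linux-gnueabi-g++ -std=c++11; then
#   echo "this compiler needs -std=c++0x"
# fi
```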

12. Test label_image on the PC

./label_image -v 1 -m ./mobilenet_v1_1.0_224.tflite -i ./grace_hopper.jpg -l ./imagenet_slim_labels.txt

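Error: bash: ./label_image: cannot execute binary file: Exec format error

There are two possible causes:

First, an extra -c was passed when compiling with GCC, so it produced an object file rather than a linked executable;

Fix: find where GCC is invoked and remove the -c option.

Second, the binary was built for a different platform (a different chip) than the one running it;

Fix: build it for the platform it will run on.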
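Both causes can be told apart from the ELF header. The e_machine field records the target CPU. The e_type field shows whether the file is a linked executable or only a relocatable object, which is what -c produces. The following minimal sketch reads those fields with od. The helper name elf_arch is hypothetical. If file or readelf -h is installed, either one reports the same information. Only little-endian ELF is handled, which covers x86, x86-64, 32-bit ARM and AArch64.

```bash
#!/usr/bin/env bash
# Sketch: report the CPU architecture and ELF type of a file from its header.
# elf_arch is a hypothetical helper; only little-endian ELF is handled.
elf_arch() {
  local -a b
  # First 20 header bytes as unsigned decimals (od prints numbers only, so the
  # unquoted expansion just splits them into array elements).
  b=( $(od -An -v -tu1 -N20 -- "$1" 2>/dev/null) )
  # The magic number is 0x7f 'E' 'L' 'F' = 127 69 76 70.
  if (( ${#b[@]} < 20 )) || [[ "${b[*]:0:4}" != "127 69 76 70" ]]; then
    echo "not ELF"; return 1
  fi
  # e_ident[EI_DATA] == 1 means little-endian.
  (( b[5] == 1 )) || { echo "big-endian ELF (not handled)"; return 1; }
  local type=$(( b[16] + 256 * b[17] ))     # e_type, 16-bit little-endian
  local machine=$(( b[18] + 256 * b[19] ))  # e_machine, 16-bit little-endian
  local arch kind
  case "$machine" in
    3) arch="x86" ;;  40) arch="ARM" ;;  62) arch="x86-64" ;;  183) arch="AArch64" ;;
    *) arch="machine-$machine" ;;
  esac
  case "$type" in
    1) kind="relocatable object (not linked, e.g. built with -c)" ;;
    2) kind="executable" ;;
    3) kind="shared object or PIE executable" ;;
    *) kind="type-$type" ;;
  esac
  echo "$arch $kind"
}

# elf_arch ./label_image   # prints the architecture and ELF type of the binary
```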

In the TensorFlow root directory, run: bazel build tensorflow/examples/label_image:label_image

The first build takes quite a long time;

When it finishes, the executable is bazel-bin/tensorflow/examples/label_image/label_image

Test it locally:

Copy label_image and libtensorflow_framework.so into tensorflow/examples/label_image

(In my first test I had not copied libtensorflow_framework.so and got: error while loading shared libraries: libtensorflow_framework.so: cannot open shared object file: No such file or directory)

Run ./tensorflow/examples/label_image/label_image again

Output: military uniform(653):0.834306

mortarboard(668):0.0218695

academic gown(401):0.0103581

pickelhaube(716):0.00800814

bulletproof vest(466):0.00535084

The test works!

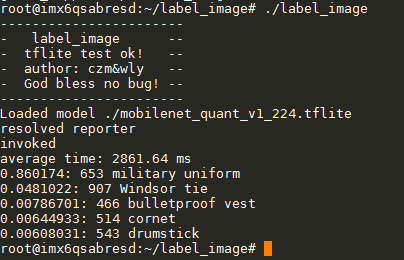

The following steps are done on the ARM board:

1. Copy the generated label_image (bazel-bin/tensorflow/contrib/lite/examples/label_image/, 1.7 MB) to the board

Copy the generated label_image (bazel-bin/tensorflow/examples/label_image, 52.2 MB) to the board

2. Copy the image ./tensorflow/examples/label_image/data/grace_hopper.jpg to the board

3. Download the model mobilenet_quant_v1_224.tflite

(For other models, see /tensorflow/contrib/lite/g3doc/models.md)

4. Download the files the model needs:

curl -L "https://storage.googleapis.com/download.tensorflow.org/models/inception_v3_2016_08_28_frozen.pb.tar.gz" | tar -C tensorflow/examples/label_image/data -xz

5. Copy libtensorflow_framework.so, located in bazel-bin/tensorflow/ (14 MB), to the board

6. Run label_image

Make sure all of the following files are in place:

(copied file 1: label_image)

(copied file 2: grace_hopper.jpg)

(copied file 3: mobilenet_quant_v1_224.tflite)

(copied file 4: imagenet_slim_labels.txt)

(copied file 5: libtensorflow_framework.so)

Common commands on the ARM board:

mount /dev/sda1 /mnt/usb

ls /mnt/usb

cp /mnt/usb/* .

Run:

./label_image
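The following minimal sketch checks that the five files listed above are present before running the program. It then adds the current directory to LD_LIBRARY_PATH, so the dynamic loader finds libtensorflow_framework.so without copying it into /usr/lib. It is written in POSIX sh so it also runs under the board's BusyBox shell. The helper names missing_files and run_label_image are hypothetical. The label_image flags are the ones used in step 12.

```sh
#!/bin/sh
# Sketch (POSIX sh): print every argument that does not exist as a file.
# missing_files and run_label_image are hypothetical helper names.
missing_files() {
  for f in "$@"; do
    [ -e "$f" ] || echo "$f"
  done
}

# Check the five required files, then run label_image with the current
# directory added to the shared-library search path.
run_label_image() {
  missing=$(missing_files label_image grace_hopper.jpg mobilenet_quant_v1_224.tflite \
    imagenet_slim_labels.txt libtensorflow_framework.so)
  if [ -n "$missing" ]; then
    printf 'missing files:\n%s\n' "$missing" >&2
    return 1
  fi
  chmod +x ./label_image
  LD_LIBRARY_PATH=".${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}" ./label_image -v 1 \
    -m ./mobilenet_quant_v1_224.tflite -i ./grace_hopper.jpg -l ./imagenet_slim_labels.txt
}
```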

If you get -sh: ./label_image: not found, the cause may be a mismatched compiler/toolchain.
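Sometimes the shell reports "not found" for a file that is clearly present. A common cause is that the dynamic loader (the ELF program interpreter) recorded in the binary does not exist on the board. That would also explain why the ln -s workaround below helps. On the PC, readelf -l prints this path in a line of the form "[Requesting program interpreter: ...]". The following minimal sketch extracts it so you can compare it with what exists under /lib on the board. The helper name elf_interp is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: print the program interpreter an ELF binary asks for, parsed from
# `readelf -l` output on stdin. elf_interp is a hypothetical helper.
elf_interp() {
  sed -n 's/.*\[Requesting program interpreter: \(.*\)]$/\1/p'
}

# On the PC:    readelf -l ./label_image | elf_interp
# On the board: ls -l <printed path>   (if it is missing, the loader name does not match)
```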

Attempt 1: create a symlink: ln -s ld-linux.so.3 ld-linux-armhf.so.3

New error: ./label_image: /lib/libm.so.6: version 'GLIBC_2.27' not found (required by ./label_image)
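This message means the binary was linked against a newer glibc than the one installed on the board. The usual way out is to build with the board's own SDK toolchain and sysroot. The following minimal sketch compares the newest GLIBC symbol version the binary needs with the version the board provides. The helper names required_glibc and glibc_satisfies are hypothetical. GNU sort -V is assumed, so run the comparison on the PC.

```bash
#!/usr/bin/env bash
# Sketch: compare the newest GLIBC symbol version a binary needs with the
# glibc version available on the board. required_glibc and glibc_satisfies
# are hypothetical helpers; GNU sort -V is assumed.

# Reads `objdump -T BINARY` output on stdin and prints the highest GLIBC_x.y found.
required_glibc() {
  grep -o 'GLIBC_[0-9][0-9.]*' | sed 's/^GLIBC_//' | sort -Vu | tail -n 1
}

# Usage: glibc_satisfies AVAILABLE REQUIRED  -> status 0 if AVAILABLE >= REQUIRED
glibc_satisfies() {
  [[ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" == "$2" ]]
}

# On the PC:    objdump -T ./label_image | required_glibc
# On the board: getconf GNU_LIBC_VERSION   (if getconf is available)
```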

If the following output appears, everything works. Congratulations!
