Research Guide: Pruning Techniques for Neural Networks

2019-11-15 20:16:54

Original: https://heartbeat.fritz.ai/research-guide-pruning-techniques-for-neural-networks-d9b8440ab10d

Pruning is a deep learning technique for building smaller, more efficient neural networks. It’s a model optimization technique that eliminates unnecessary values in the weight tensors. The result is a compressed network that runs faster and costs less to compute, which matters even more when deploying models to mobile phones or other edge devices. In this guide, we’ll look at some of the research papers in the field of pruning neural networks.

Pruning from Scratch (2019)

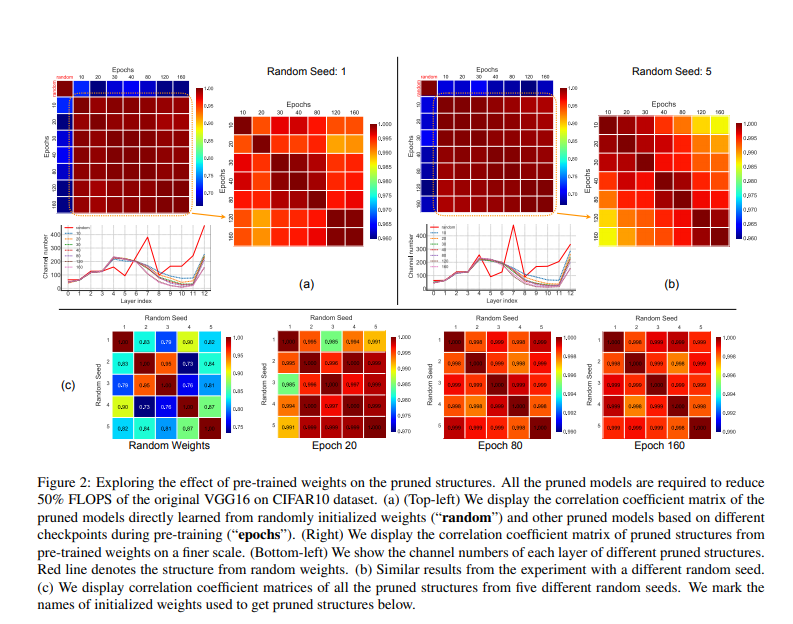

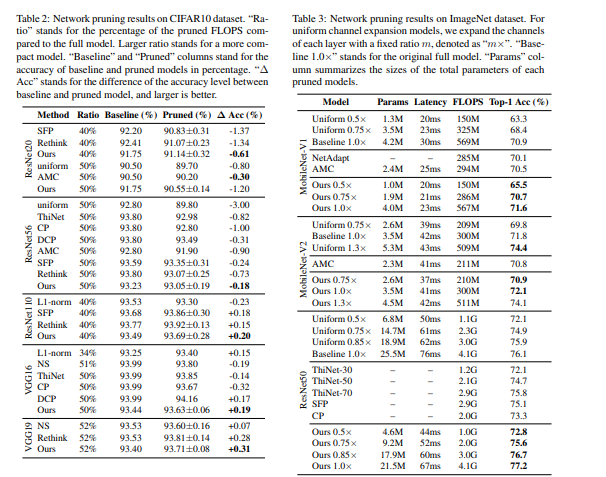

The authors of this paper propose a network pruning pipeline that allows for pruning from scratch. Based on experiments compressing classification models on the CIFAR10 and ImageNet datasets, the pipeline reduces the pre-training overhead incurred by conventional pruning methods and also increases the accuracy of the pruned networks.

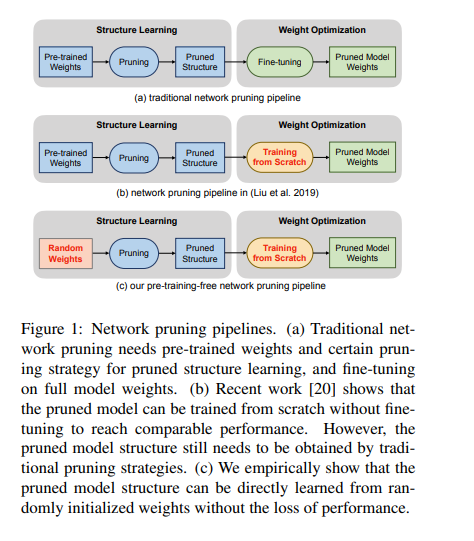

Below is an illustration of the three stages involved in the traditional pruning process. This process involves pre-training, pruning, and fine-tuning.

The pruning technique proposed in this paper involves building a pruning pipeline that can be learned from randomly initialized weights. Channel importance is learned by associating scalar gate values with each network layer.

The channel importance is optimized to improve the model performance under the sparsity regularization. During this process, the random weights are not updated. Afterward, a binary search strategy is used to determine the channel number configurations of the pruned model, given resource constraints.
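The binary search step can be sketched roughly as follows. This is a minimal illustration rather than the authors' code: it assumes the gate values have already been learned and lie in [0, 1], applies one global threshold to all layers, and uses a hypothetical `cost` function (products of adjacent layer widths) as a stand-in for a real FLOPs or parameter budget.

```python
def kept_channels(gates, threshold):
    """Per-layer channel counts kept when gates below `threshold` are pruned."""
    # Keep at least one channel per layer so the network stays connected.
    return [max(1, sum(g >= threshold for g in layer)) for layer in gates]


def cost(channels):
    """Hypothetical resource cost: sum of products of adjacent layer widths."""
    return sum(a * b for a, b in zip(channels, channels[1:]))


def search_threshold(gates, budget, steps=30):
    """Binary-search the smallest threshold whose pruned model fits the budget."""
    lo, hi = 0.0, 1.0  # assumption: gate values lie in [0, 1]
    for _ in range(steps):
        mid = (lo + hi) / 2
        if cost(kept_channels(gates, mid)) > budget:
            lo = mid  # too expensive: raise the threshold to prune more
        else:
            hi = mid  # fits the budget: try pruning less
    return hi


# Toy gate values for three layers with four channels each (made up).
gates = [
    [0.9, 0.1, 0.6, 0.3],
    [0.8, 0.7, 0.2, 0.05],
    [0.4, 0.95, 0.15, 0.5],
]
threshold = search_threshold(gates, budget=8)
config = kept_channels(gates, threshold)
print(config, cost(config))
```

The search works because the cost can only shrink as the threshold grows, so the set of thresholds that fit the budget forms a single interval.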

Here’s a look at model accuracy obtained on various datasets:

Adversarial Neural Pruning (2019)

This paper considers the distortion of a network’s latent features in the presence of adversarial perturbation. The proposed method learns a Bayesian pruning mask that suppresses the more highly distorted features in order to maximize the network’s robustness to adversarial perturbations.

The authors consider the vulnerability of latent features in deep neural networks. The method proposed prunes out vulnerable features while preserving robust ones. This is done by adversarially learning the pruning mask in a Bayesian framework.
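To make the idea of a vulnerable feature concrete, here is a small sketch. It assumes vulnerability is measured as the mean absolute difference between a feature's activation on clean inputs and on adversarial inputs, and it swaps the learned Bayesian mask for a plain threshold (`max_distortion`, a hypothetical parameter) purely for illustration. The activations are made-up numbers.

```python
def feature_vulnerability(clean, adversarial):
    """Mean absolute distortion of each latent feature over a batch."""
    n_samples = len(clean)
    n_features = len(clean[0])
    return [
        sum(abs(clean[i][j] - adversarial[i][j]) for i in range(n_samples)) / n_samples
        for j in range(n_features)
    ]


def threshold_mask(vulnerability, max_distortion):
    """Simplified stand-in for the learned mask: 1 keeps a feature, 0 prunes it."""
    return [1 if v <= max_distortion else 0 for v in vulnerability]


# Rows are samples, columns are latent features (toy values).
clean = [[1.0, 0.5, 2.0], [0.8, 0.4, 1.5]]
adversarial = [[1.1, 1.5, 2.0], [0.7, 1.2, 1.4]]

vuln = feature_vulnerability(clean, adversarial)
mask = threshold_mask(vuln, max_distortion=0.2)
print(vuln, mask)
```

In this toy batch the second feature shifts far more under attack than the other two, so it is the one the mask removes.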

Adversarial Neural Pruning (ANP) combines the concept of adversarial training with Bayesian pruning methods. The baselines and model variants compared are:

- a standard convolutional neural network

- an adversarially trained network

- adversarial neural pruning with beta-Bernoulli dropout

- an adversarially trained network regularized with vulnerability suppression loss

- an adversarial neural pruning network regularized with vulnerability suppression loss

Here’s a table showing the performance of the model.

Rethinking the Value of Network Pruning (ICLR 2019)

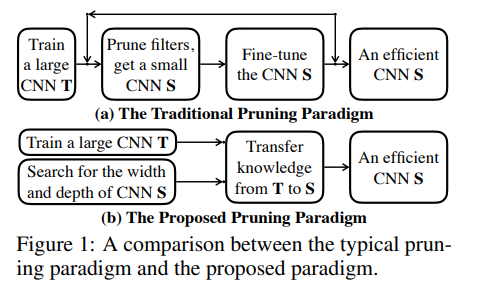

The network pruning methods examined in this paper fall into two categories: those where the target pruned model’s architecture is predefined by a human, and those where it is determined automatically by a pruning algorithm. In their experiments, the authors compare the results obtained by training pruned models from scratch with those obtained by fine-tuning from inherited weights, for both predefined and automatic methods.

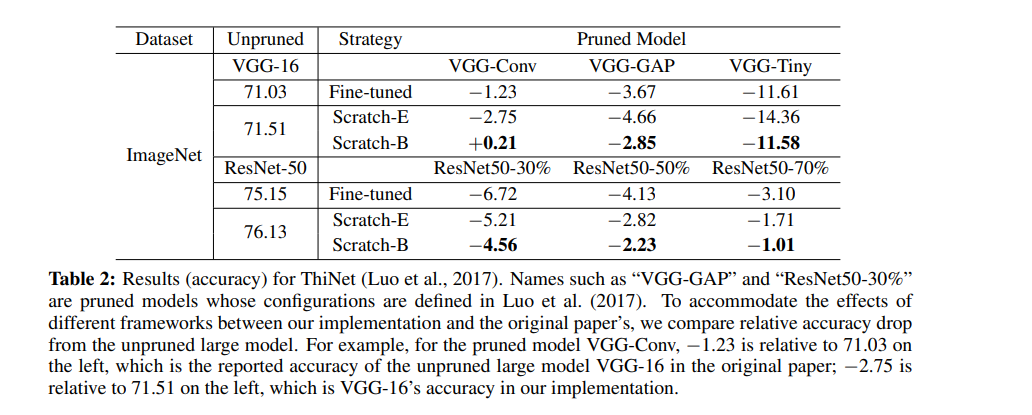

The figure below shows the results obtained for predefined structured pruning using L1-norm based filter pruning. In each layer, a certain percentage of the filters with the smallest L1-norm is pruned. The Pruned Model column lists the predefined target models used to configure each model. The observation is that in each row, scratch-trained models achieve at least the same level of accuracy as fine-tuned models.
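The L1-norm criterion itself is simple enough to sketch in a few lines. The example below is an illustration only: each filter is represented as a flat list of made-up weights, and `prune_ratio` is a hypothetical name for the per-layer percentage.

```python
def l1_norm(filter_weights):
    """Sum of absolute values over a flattened filter."""
    return sum(abs(w) for w in filter_weights)


def prune_filters(filters, prune_ratio):
    """Return indices of the filters kept after dropping the smallest-L1 fraction."""
    n_prune = int(len(filters) * prune_ratio)
    ranked = sorted(range(len(filters)), key=lambda i: l1_norm(filters[i]))
    return sorted(ranked[n_prune:])


# One layer with four filters (toy values).
filters = [
    [0.5, -0.4, 0.3],     # L1 = 1.2
    [0.01, 0.02, -0.01],  # L1 = 0.04
    [-0.9, 0.8, 0.7],     # L1 = 2.4
    [0.1, -0.1, 0.05],    # L1 = 0.25
]
kept = prune_filters(filters, prune_ratio=0.5)
print(kept)
```

Because the ratio is fixed per layer ahead of time, the pruned architecture is known before any weights are inspected, which is what makes this a predefined method.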

As shown below, ThiNet greedily prunes the channel that has the smallest effect on the next layer’s activation values.

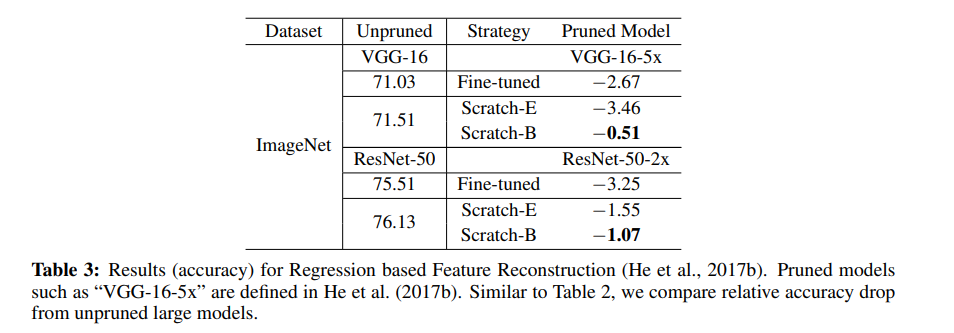

The next table shows the results obtained by Regression-based Feature Reconstruction. The method prunes channels by minimizing the feature map reconstruction error of the next layer. This optimization problem is solved by LASSO regression.

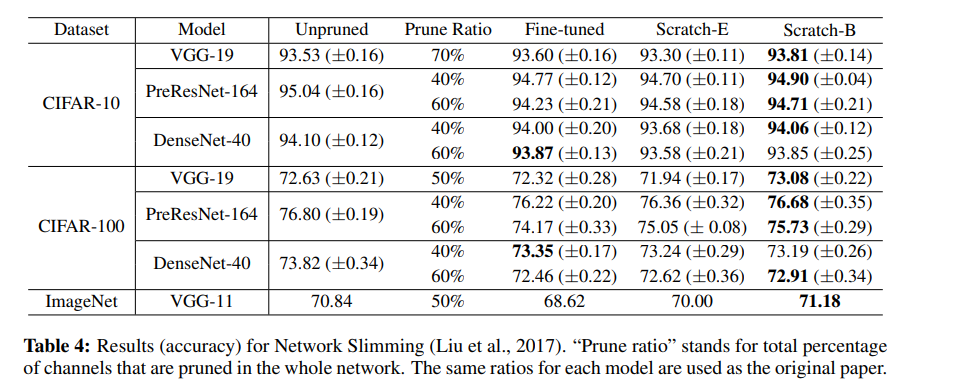

For Network Slimming, L1-sparsity is imposed on channel-wise scaling factors from Batch Normalization layers during training. It prunes channels with lower scaling factors afterward. This method produces automatically discovered target architectures since the channel scaling factors are compared across layers.
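The cross-layer comparison can be sketched as below. This is a simplified illustration, assuming the Batch Normalization scaling factors have already been trained with the sparsity penalty; the values and the `prune_ratio` parameter are made up for the example.

```python
def slim(bn_scales, prune_ratio):
    """Keep channels whose |scale| exceeds one threshold shared by all layers."""
    all_scales = sorted(abs(g) for layer in bn_scales for g in layer)
    n_prune = int(len(all_scales) * prune_ratio)
    # The threshold is the largest scaling factor among those to be pruned.
    threshold = all_scales[n_prune - 1] if n_prune > 0 else float("-inf")
    return [
        [i for i, g in enumerate(layer) if abs(g) > threshold]
        for layer in bn_scales
    ]


# Scaling factors for two layers with 4 and 6 channels (toy values).
bn_scales = [
    [0.9, 0.01, 0.5, 0.02],
    [0.03, 0.7, 0.04, 0.8, 0.6, 0.05],
]
kept = slim(bn_scales, prune_ratio=0.5)
print(kept, [len(layer) for layer in kept])
```

Half of all channels are removed, yet the two layers end up with different widths (2 and 3). That per-layer width is not specified anywhere in advance, which is the sense in which the target architecture is discovered automatically.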

Network Pruning via Transformable Architecture Search (NeurIPS 2019)

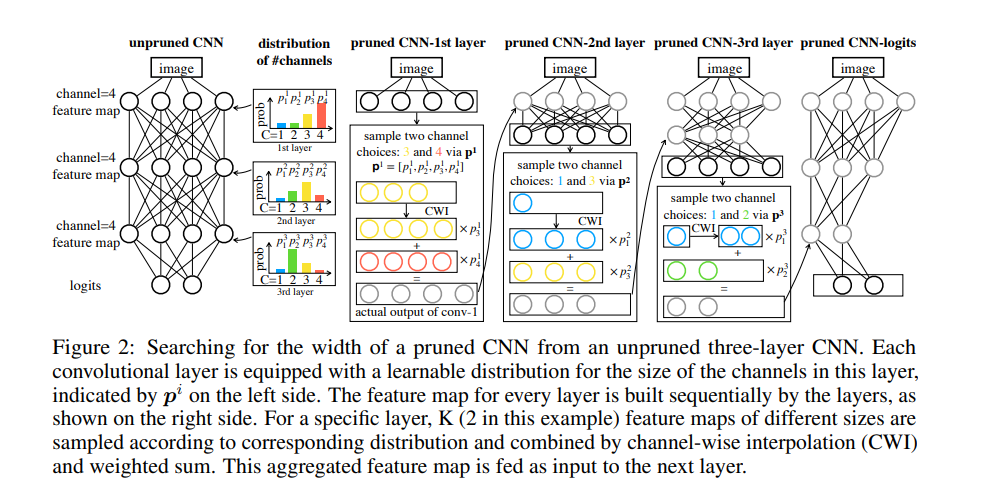

This paper proposes applying neural architecture search directly to a network with flexible channel and layer sizes. The number of channels is learned by minimizing the loss of the pruned networks. The feature map of the pruned network is made up of K feature map fragments that are sampled based on the probability distribution. The loss is back-propagated to the network weights and to the parameterized distribution.

The width and depth of the pruned network are obtained from the maximum probability for the size in each distribution. These parameters are learned by knowledge transfer from the original networks. Experiments on the model are done on CIFAR-10, CIFAR-100, and ImageNet.
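As a rough sketch of the final selection step: each layer holds a distribution over candidate channel counts, and the most probable candidate becomes the layer's width. The candidate sizes and logits below are hypothetical; in the paper these distributions are learned during the search.

```python
import math


def softmax(logits):
    """Turn raw scores into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def select_size(candidates, logits):
    """Pick the candidate size with the highest probability."""
    probs = softmax(logits)
    best = max(range(len(candidates)), key=lambda i: probs[i])
    return candidates[best]


# Candidate channel counts and (made-up) learned logits for two layers.
layer_candidates = [[16, 32, 48, 64], [32, 64, 96, 128]]
layer_logits = [[0.1, 1.2, 0.3, -0.5], [-0.2, 0.0, 0.9, 0.4]]

widths = [select_size(c, l) for c, l in zip(layer_candidates, layer_logits)]
print(widths)
```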

This approach of pruning consists of three stages:

- Training an unpruned large network with a standard classification training procedure.

- Searching for the depth and width of a small network via Transformable Architecture Search (TAS). TAS aims at searching for the best size of a network.

- Transferring the information from the unpruned network to the searched small network by a simple knowledge distillation (KD) approach.
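The third stage can be illustrated with a generic knowledge distillation loss. The sketch below uses the common temperature-softened formulation (a hard-label cross-entropy term plus a KL term against the teacher's softened outputs). The `temperature` and `alpha` values are assumptions for the example and may differ from the exact loss used in the paper.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax with an optional temperature that softens the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits, teacher_logits, label, temperature=4.0, alpha=0.9):
    """Weighted sum of hard-label cross-entropy and soft-target KL divergence."""
    hard = -math.log(softmax(student_logits)[label])
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    soft = sum(t * math.log(t / s) for t, s in zip(p_teacher, p_student))
    # The T^2 factor keeps the soft term's gradient scale comparable.
    return (1 - alpha) * hard + alpha * temperature ** 2 * soft


teacher = [2.0, 1.0, 0.1]
matched = kd_loss([2.0, 1.0, 0.1], teacher, label=0)
mismatched = kd_loss([0.1, 1.0, 2.0], teacher, label=0)
print(matched, mismatched)
```

A student that reproduces the teacher's logits pays only the hard-label term, while one that disagrees with the teacher is penalized by both terms.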

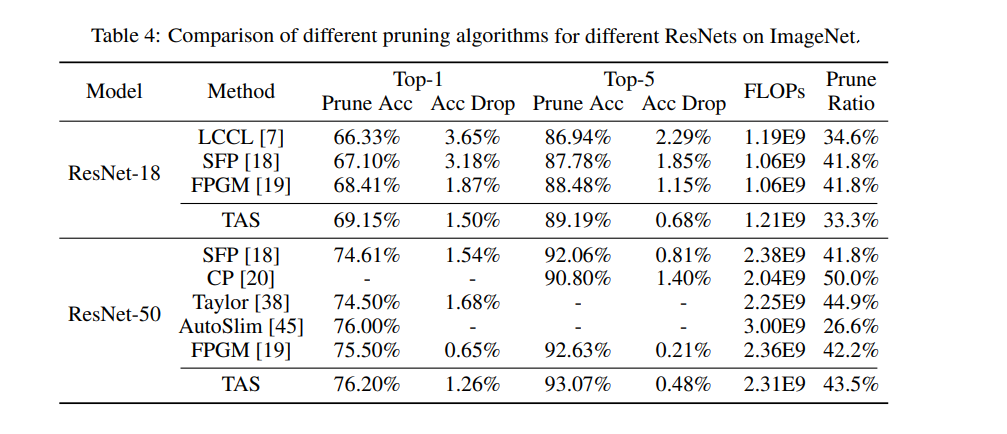

Here’s a comparison of different pruning algorithms for different ResNets on ImageNet:

Self-Adaptive Network Pruning (ICONIP 2019)

This paper proposes reducing the computational cost of CNNs via a self-adaptive network pruning method (SANP). The method does so by introducing a Saliency-and-Pruning Module (SPM) for each convolutional layer. This module learns to predict saliency scores and applies pruning to each channel. SANP determines the pruning strategy with respect to each layer and each sample.

As seen in the architecture diagram below, the Saliency-and-Pruning module is embedded in each layer of the convolutional network. The module predicts saliency scores for the channels. This is done based on input features. Pruning decisions for each channel are then generated.

The convolution operation is skipped for channels whose corresponding pruning decision is 0. The backbone network and the SPMs are then jointly trained with the classification and cost objectives. The computation costs are estimated depending on the pruning decision in each layer.
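The inference-time behavior described above can be sketched as follows. This is a simplified illustration, not the paper's module: it assumes the saliency predictor is a global average pool followed by a linear layer, binarizes the scores with a hard threshold at zero, and uses a 1x1 convolution as a stand-in for the real convolution. All weights are made up.

```python
def conv1x1(feature_map, kernel):
    """1x1 convolution for one output channel: weighted sum of input channels."""
    n_positions = len(feature_map[0])
    return [
        sum(k * channel[p] for k, channel in zip(kernel, feature_map))
        for p in range(n_positions)
    ]


def spm_layer(feature_map, kernels, spm_weights, spm_bias):
    """Predict per-channel saliency from the input, then skip pruned channels."""
    pooled = [sum(channel) / len(channel) for channel in feature_map]
    scores = [
        sum(w * x for w, x in zip(row, pooled)) + b
        for row, b in zip(spm_weights, spm_bias)
    ]
    decisions = [1 if s > 0 else 0 for s in scores]
    n_positions = len(feature_map[0])
    outputs = [
        conv1x1(feature_map, k) if keep else [0.0] * n_positions  # skipped
        for k, keep in zip(kernels, decisions)
    ]
    return outputs, decisions


# Two input channels with four spatial positions each (toy values).
feature_map = [[1.0, 2.0, 3.0, 2.0], [0.0, 1.0, 0.0, 1.0]]
kernels = [[1.0, 1.0], [2.0, -1.0], [0.5, 0.0]]        # three output channels
spm_weights = [[1.0, 0.0], [-1.0, 0.0], [0.5, 2.0]]    # hypothetical predictor
spm_bias = [0.0, 0.5, -1.0]

outputs, decisions = spm_layer(feature_map, kernels, spm_weights, spm_bias)
print(decisions, sum(decisions) / len(decisions))
```

Because the decisions depend on the pooled input features, a different input can switch off a different set of channels, which is what makes the pruning per-sample as well as per-layer.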

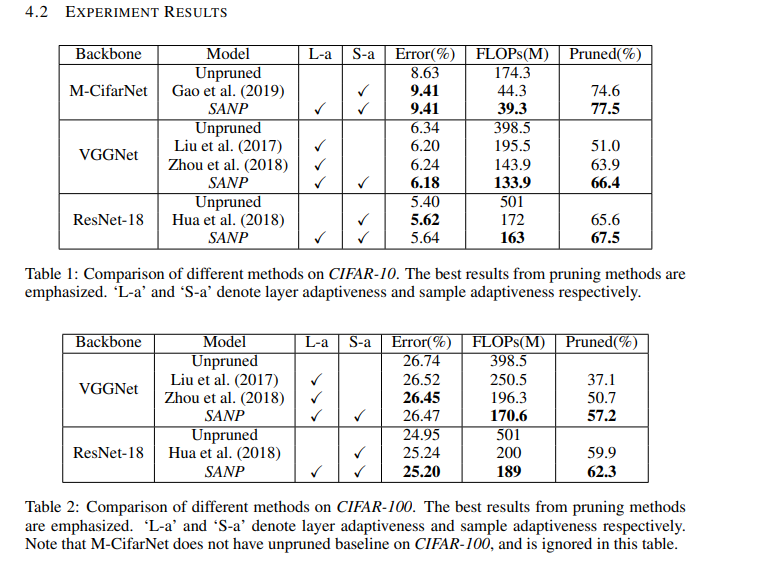

Some of the results obtained by this method are shown below:

Structured Pruning of Large Language Models (2019)

The pruning method proposed in this paper is based on low-rank factorization and augmented Lagrangian L0 norm regularization. L0 regularization relaxes the constraints imposed by structured pruning, while low-rank factorization retains the dense structure of the matrices.

Regularization enables the network to choose which weights to remove. The weight matrices are factorized into two smaller matrices. A diagonal mask between these two matrices is then set. The mask is pruned during training via L0 regularization. The augmented Lagrangian approach is used to control the final sparsity level of the model. The authors refer to their method as FLOP (Factorized L0 Pruning).
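A small sketch shows why a diagonal mask between the two factors keeps the matrices dense. It assumes the weight matrix is written as P · diag(z) · Q, with made-up values for `P`, `Q`, and the mask `z`. In the paper the mask is learned during training; here it is fixed by hand.

```python
def matmul(a, b):
    """Plain matrix product of two lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def masked_product(p, z, q):
    """Compute P @ diag(z) @ Q by scaling the rows of Q with the mask."""
    scaled_q = [[zi * v for v in row] for zi, row in zip(z, q)]
    return matmul(p, scaled_q)


def compact(p, z, q):
    """Drop the columns of P and rows of Q whose mask entry is zero."""
    keep = [i for i, zi in enumerate(z) if zi != 0]
    p_small = [[row[i] * z[i] for i in keep] for row in p]
    q_small = [q[i] for i in keep]
    return p_small, q_small


p = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]    # 2 x 3
q = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]  # 3 x 2
z = [1.0, 0.0, 1.0]                       # middle component pruned

dense = masked_product(p, z, q)
p_small, q_small = compact(p, z, q)
print(dense, matmul(p_small, q_small))
```

Each zero in the mask removes one column of the first factor and one row of the second, so the pruned layer is still a product of two smaller dense matrices rather than a sparse one.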

For character-level language modeling, the authors use the enwik8 dataset, which contains 100M bytes of data taken from Wikipedia. FLOP is evaluated on SRU and Transformer-XL. Some of the results obtained are shown below.

Conclusion

We should now be up to speed on some of the most common pruning techniques, along with a couple of very recent ones.

The papers/abstracts mentioned and linked to above also contain links to their code implementations. We’d be happy to see the results you obtain after testing them.
