Compiling Flink 1.9.0

With some free time on my hands, I decided to build Flink 1.9 for fun.

1. Download the flink and flink-shaded source code and extract it

[venn@venn release]$ ll

total

drwxrwxr-x. venn venn Sep : flink-release-1.9.

drwxrwxr-x. venn venn Sep : flink-shaded-release-7.0

-rw-rw-r--. venn venn Sep : release-1.9..tar.gz

-rw-rw-r--. venn venn Sep : release-7.0.tar.gz

At first I used Flink's release-1.9 branch directly, but all of its versions carried the SNAPSHOT suffix, so I dropped that approach.

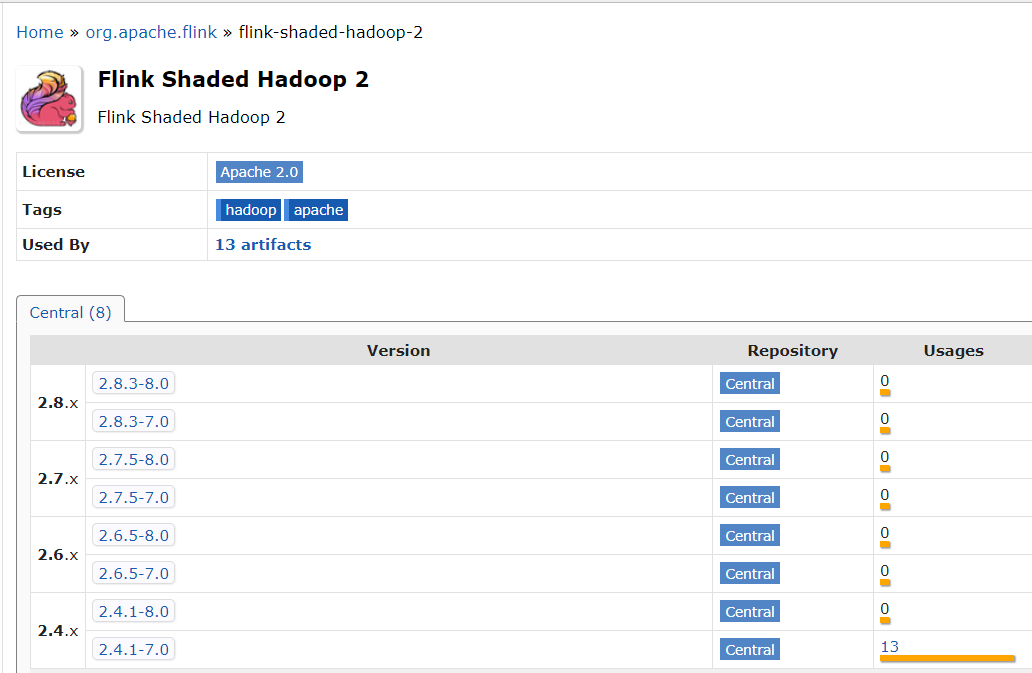

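flink-shaded contains many of Flink's dependencies, for example flink-shaded-hadoop-2. Maven Central only provides this package for a few Hadoop versions, so there may be no flink-shaded-hadoop-2 package that matches your own Hadoop version. The flink-shaded version that corresponds to Flink 1.9 is 7.0.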

<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-shaded-hadoop-2</artifactId>
    <version>${hadoop.version}-${flink.shaded.version}</version>
    <optional>true</optional>
</dependency>

My Hadoop version is 2.7.7, and no prebuilt package exists for it (the 2.7.5 package would probably work).

2. Build flink-shaded

Specify Hadoop version 2.7.7 and install the built packages into the local Maven repository; the Flink build uses them later.

[venn@venn flink-shaded-release-7.0]$ mvn clean install -DskipTests -Dhadoop.version=2.7.7

[INFO] Reactor Summary:

[INFO]

[INFO] flink-shaded 7.0 ................................... SUCCESS [ 3.483 s]

[INFO] flink-shaded-force-shading 7.0 ..................... SUCCESS [ 0.597 s]

[INFO] flink-shaded-asm- 6.2.-7.0 ....................... SUCCESS [ 0.627 s]

[INFO] flink-shaded-guava- 18.0-7.0 ..................... SUCCESS [ 0.994 s]

[INFO] flink-shaded-netty- 4.1..Final-7.0 .............. SUCCESS [ 3.469 s]

[INFO] flink-shaded-netty-tcnative-dynamic 2.0..Final-7.0 SUCCESS [ 0.572 s]

[INFO] flink-shaded-jackson-parent 2.9.-7.0 .............. SUCCESS [ 0.035 s]

[INFO] flink-shaded-jackson- 2.9.-7.0 ................... SUCCESS [ 1.479 s]

[INFO] flink-shaded-jackson-module-jsonSchema- 2.9.-7.0 . SUCCESS [ 0.857 s]

[INFO] flink-shaded-hadoop-2 2.7.7-7.0 .................... SUCCESS [ 9.132 s]

[INFO] flink-shaded-hadoop--uber 2.7.-7.0 ............... SUCCESS [ 17.173 s]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 38.612 s

[INFO] Finished at: --12T15::+:

[INFO] ------------------------------------------------------------------------
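Before building Flink, it can be worth confirming that step 2 really put the artifact Flink will look for into the local repository. The sketch below composes the version the same way as the pom snippet above (`${hadoop.version}-${flink.shaded.version}`) and builds the jar path from the standard Maven repository layout. It assumes the default local repository at `~/.m2/repository`; adjust it if your settings.xml sets `localRepository`. The class and method names are hypothetical.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

public class ShadedHadoopCheck {
    // Mirrors the pom's version pattern ${hadoop.version}-${flink.shaded.version}.
    static String shadedVersion(String hadoopVersion, String flinkShadedVersion) {
        return hadoopVersion + "-" + flinkShadedVersion;
    }

    // Standard Maven layout: <repo>/<groupId as dirs>/<artifactId>/<version>/<artifactId>-<version>.jar
    static Path jarPath(Path repo, String groupId, String artifactId, String version) {
        Path dir = repo;
        for (String part : groupId.split("\\.")) {
            dir = dir.resolve(part);
        }
        return dir.resolve(artifactId).resolve(version).resolve(artifactId + "-" + version + ".jar");
    }

    public static void main(String[] args) {
        // Assumption: default local repository location ~/.m2/repository.
        Path repo = Paths.get(System.getProperty("user.home"), ".m2", "repository");
        String version = shadedVersion("2.7.7", "7.0");
        Path jar = jarPath(repo, "org.apache.flink", "flink-shaded-hadoop-2", version);
        System.out.println(jar + (Files.exists(jar) ? " found" : " missing"));
    }
}
```

If it reports "missing", the Flink build will try to download flink-shaded-hadoop-2 2.7.7-7.0 from the remote repositories instead, which is exactly what fails when no such version is published.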

3. Build Flink

Again specify Hadoop version 2.7.7

[venn@venn flink-release-1.9.]$ mvn clean package -DskipTests -Dhadoop.version=2.7.7

[INFO] Reactor Summary for flink 1.9.:

[INFO]

[INFO] force-shading ...................................... SUCCESS [ 1.281 s]

[INFO] flink .............................................. SUCCESS [ 1.829 s]

[INFO] flink-annotations .................................. SUCCESS [ 1.280 s]

[INFO] flink-shaded-curator ............................... SUCCESS [ 1.501 s]

[INFO] flink-metrics ...................................... SUCCESS [ 0.188 s]

[INFO] flink-metrics-core ................................. SUCCESS [ 0.736 s]

[INFO] flink-test-utils-parent ............................ SUCCESS [ 0.157 s]

[INFO] flink-test-utils-junit ............................. SUCCESS [ 0.928 s]

[INFO] flink-core ......................................... SUCCESS [ 33.771 s]

[INFO] flink-java ......................................... SUCCESS [ 4.827 s]

[INFO] flink-queryable-state .............................. SUCCESS [ 0.100 s]

[INFO] flink-queryable-state-client-java .................. SUCCESS [ 0.643 s]

[INFO] flink-filesystems .................................. SUCCESS [ 0.082 s]

[INFO] flink-hadoop-fs .................................... SUCCESS [ 1.168 s]

[INFO] flink-runtime ...................................... SUCCESS [: min]

[INFO] flink-scala ........................................ SUCCESS [ 29.960 s]

[INFO] flink-mapr-fs ...................................... SUCCESS [ 0.394 s]

[INFO] flink-filesystems :: flink-fs-hadoop-shaded ........ SUCCESS [ 2.916 s]

[INFO] flink-s3-fs-base ................................... SUCCESS [ 5.740 s]

[INFO] flink-s3-fs-hadoop ................................. SUCCESS [ 8.235 s]

[INFO] flink-s3-fs-presto ................................. SUCCESS [ 12.510 s]

[INFO] flink-swift-fs-hadoop .............................. SUCCESS [ 13.618 s]

[INFO] flink-oss-fs-hadoop ................................ SUCCESS [ 4.483 s]

[INFO] flink-azure-fs-hadoop .............................. SUCCESS [ 5.729 s]

[INFO] flink-optimizer .................................... SUCCESS [ 11.589 s]

[INFO] flink-clients ...................................... SUCCESS [ 1.179 s]

[INFO] flink-streaming-java ............................... SUCCESS [ 8.882 s]

[INFO] flink-test-utils ................................... SUCCESS [ 1.453 s]

[INFO] flink-runtime-web .................................. SUCCESS [: min]

[INFO] flink-examples ..................................... SUCCESS [ 0.112 s]

[INFO] flink-examples-batch ............................... SUCCESS [ 10.577 s]

[INFO] flink-connectors ................................... SUCCESS [ 0.096 s]

[INFO] flink-hadoop-compatibility ......................... SUCCESS [ 4.225 s]

[INFO] flink-state-backends ............................... SUCCESS [ 0.070 s]

[INFO] flink-statebackend-rocksdb ......................... SUCCESS [ 1.102 s]

[INFO] flink-tests ........................................ SUCCESS [ 28.820 s]

[INFO] flink-streaming-scala .............................. SUCCESS [ 26.143 s]

[INFO] flink-table ........................................ SUCCESS [ 0.054 s]

[INFO] flink-table-common ................................. SUCCESS [ 1.771 s]

[INFO] flink-table-api-java ............................... SUCCESS [ 1.416 s]

[INFO] flink-table-api-java-bridge ........................ SUCCESS [ 0.570 s]

[INFO] flink-table-api-scala .............................. SUCCESS [ 5.054 s]

[INFO] flink-table-api-scala-bridge ....................... SUCCESS [ 7.626 s]

[INFO] flink-sql-parser ................................... SUCCESS [ 3.073 s]

[INFO] flink-libraries .................................... SUCCESS [ 0.056 s]

[INFO] flink-cep .......................................... SUCCESS [ 2.437 s]

[INFO] flink-table-planner ................................ SUCCESS [: min]

[INFO] flink-orc .......................................... SUCCESS [ 0.579 s]

[INFO] flink-jdbc ......................................... SUCCESS [ 0.546 s]

[INFO] flink-table-runtime-blink .......................... SUCCESS [ 3.868 s]

[INFO] flink-table-planner-blink .......................... SUCCESS [: min]

[INFO] flink-hbase ........................................ SUCCESS [ 1.725 s]

[INFO] flink-hcatalog ..................................... SUCCESS [ 4.158 s]

[INFO] flink-metrics-jmx .................................. SUCCESS [ 0.272 s]

[INFO] flink-connector-kafka-base ......................... SUCCESS [ 1.671 s]

[INFO] flink-connector-kafka-0.9 .......................... SUCCESS [ 0.768 s]

[INFO] flink-connector-kafka-0.10 ......................... SUCCESS [ 0.547 s]

[INFO] flink-connector-kafka-0.11 ......................... SUCCESS [ 0.692 s]

[INFO] flink-formats ...................................... SUCCESS [ 0.054 s]

[INFO] flink-json ......................................... SUCCESS [ 0.332 s]

[INFO] flink-connector-elasticsearch-base ................. SUCCESS [ 0.810 s]

[INFO] flink-connector-elasticsearch2 ..................... SUCCESS [ 8.239 s]

[INFO] flink-connector-elasticsearch5 ..................... SUCCESS [ 9.715 s]

[INFO] flink-connector-elasticsearch6 ..................... SUCCESS [ 0.747 s]

[INFO] flink-csv .......................................... SUCCESS [ 0.251 s]

[INFO] flink-connector-hive ............................... SUCCESS [ 1.942 s]

[INFO] flink-connector-rabbitmq ........................... SUCCESS [ 0.332 s]

[INFO] flink-connector-twitter ............................ SUCCESS [ 1.304 s]

[INFO] flink-connector-nifi ............................... SUCCESS [ 0.349 s]

[INFO] flink-connector-cassandra .......................... SUCCESS [ 2.205 s]

[INFO] flink-avro ......................................... SUCCESS [ 1.565 s]

[INFO] flink-connector-filesystem ......................... SUCCESS [ 0.861 s]

[INFO] flink-connector-kafka .............................. SUCCESS [ 0.973 s]

[INFO] flink-connector-gcp-pubsub ......................... SUCCESS [ 0.582 s]

[INFO] flink-sql-connector-elasticsearch6 ................. SUCCESS [ 4.583 s]

[INFO] flink-sql-connector-kafka-0.9 ...................... SUCCESS [ 0.256 s]

[INFO] flink-sql-connector-kafka-0.10 ..................... SUCCESS [ 0.312 s]

[INFO] flink-sql-connector-kafka-0.11 ..................... SUCCESS [ 0.413 s]

[INFO] flink-sql-connector-kafka .......................... SUCCESS [ 0.641 s]

[INFO] flink-connector-kafka-0.8 .......................... SUCCESS [ 0.700 s]

[INFO] flink-avro-confluent-registry ...................... SUCCESS [ 0.843 s]

[INFO] flink-parquet ...................................... SUCCESS [ 0.726 s]

[INFO] flink-sequence-file ................................ SUCCESS [ 0.247 s]

[INFO] flink-examples-streaming ........................... SUCCESS [ 10.970 s]

[INFO] flink-examples-table ............................... SUCCESS [ 5.554 s]

[INFO] flink-examples-build-helper ........................ SUCCESS [ 0.069 s]

[INFO] flink-examples-streaming-twitter ................... SUCCESS [ 0.464 s]

[INFO] flink-examples-streaming-state-machine ............. SUCCESS [ 0.298 s]

[INFO] flink-examples-streaming-gcp-pubsub ................ SUCCESS [ 3.640 s]

[INFO] flink-container .................................... SUCCESS [ 0.304 s]

[INFO] flink-queryable-state-runtime ...................... SUCCESS [ 0.555 s]

[INFO] flink-end-to-end-tests ............................. SUCCESS [ 0.056 s]

[INFO] flink-cli-test ..................................... SUCCESS [ 0.151 s]

[INFO] flink-parent-child-classloading-test-program ....... SUCCESS [ 0.154 s]

[INFO] flink-parent-child-classloading-test-lib-package ... SUCCESS [ 0.098 s]

[INFO] flink-dataset-allround-test ........................ SUCCESS [ 0.153 s]

[INFO] flink-dataset-fine-grained-recovery-test ........... SUCCESS [ 0.141 s]

[INFO] flink-datastream-allround-test ..................... SUCCESS [ 1.119 s]

[INFO] flink-batch-sql-test ............................... SUCCESS [ 0.136 s]

[INFO] flink-stream-sql-test .............................. SUCCESS [ 0.183 s]

[INFO] flink-bucketing-sink-test .......................... SUCCESS [ 0.233 s]

[INFO] flink-distributed-cache-via-blob ................... SUCCESS [ 0.138 s]

[INFO] flink-high-parallelism-iterations-test ............. SUCCESS [ 5.724 s]

[INFO] flink-stream-stateful-job-upgrade-test ............. SUCCESS [ 0.636 s]

[INFO] flink-queryable-state-test ......................... SUCCESS [ 1.688 s]

[INFO] flink-local-recovery-and-allocation-test ........... SUCCESS [ 0.221 s]

[INFO] flink-elasticsearch2-test .......................... SUCCESS [ 3.299 s]

[INFO] flink-elasticsearch5-test .......................... SUCCESS [ 3.969 s]

[INFO] flink-elasticsearch6-test .......................... SUCCESS [ 2.275 s]

[INFO] flink-quickstart ................................... SUCCESS [ 0.781 s]

[INFO] flink-quickstart-java .............................. SUCCESS [ 0.350 s]

[INFO] flink-quickstart-scala ............................. SUCCESS [ 0.145 s]

[INFO] flink-quickstart-test .............................. SUCCESS [ 0.323 s]

[INFO] flink-confluent-schema-registry .................... SUCCESS [ 1.202 s]

[INFO] flink-stream-state-ttl-test ........................ SUCCESS [ 3.172 s]

[INFO] flink-sql-client-test .............................. SUCCESS [ 0.671 s]

[INFO] flink-streaming-file-sink-test ..................... SUCCESS [ 0.221 s]

[INFO] flink-state-evolution-test ......................... SUCCESS [ 0.822 s]

[INFO] flink-e2e-test-utils ............................... SUCCESS [ 6.170 s]

[INFO] flink-mesos ........................................ SUCCESS [ 17.591 s]

[INFO] flink-yarn ......................................... SUCCESS [ 0.915 s]

[INFO] flink-gelly ........................................ SUCCESS [ 2.601 s]

[INFO] flink-gelly-scala .................................. SUCCESS [ 13.500 s]

[INFO] flink-gelly-examples ............................... SUCCESS [ 7.711 s]

[INFO] flink-metrics-dropwizard ........................... SUCCESS [ 0.193 s]

[INFO] flink-metrics-graphite ............................. SUCCESS [ 0.123 s]

[INFO] flink-metrics-influxdb ............................. SUCCESS [ 0.594 s]

[INFO] flink-metrics-prometheus ........................... SUCCESS [ 0.371 s]

[INFO] flink-metrics-statsd ............................... SUCCESS [ 0.179 s]

[INFO] flink-metrics-datadog .............................. SUCCESS [ 0.237 s]

[INFO] flink-metrics-slf4j ................................ SUCCESS [ 0.157 s]

[INFO] flink-cep-scala .................................... SUCCESS [ 9.399 s]

[INFO] flink-table-uber ................................... SUCCESS [ 1.794 s]

[INFO] flink-table-uber-blink ............................. SUCCESS [ 2.050 s]

[INFO] flink-sql-client ................................... SUCCESS [ 1.090 s]

[INFO] flink-state-processor-api .......................... SUCCESS [ 0.598 s]

[INFO] flink-python ....................................... SUCCESS [ 0.621 s]

[INFO] flink-scala-shell .................................. SUCCESS [ 8.821 s]

[INFO] flink-dist ......................................... SUCCESS [ 10.765 s]

[INFO] flink-end-to-end-tests-common ...................... SUCCESS [ 0.285 s]

[INFO] flink-metrics-availability-test .................... SUCCESS [ 0.153 s]

[INFO] flink-metrics-reporter-prometheus-test ............. SUCCESS [ 0.132 s]

[INFO] flink-heavy-deployment-stress-test ................. SUCCESS [ 6.045 s]

[INFO] flink-connector-gcp-pubsub-emulator-tests .......... SUCCESS [ 0.516 s]

[INFO] flink-streaming-kafka-test-base .................... SUCCESS [ 0.192 s]

[INFO] flink-streaming-kafka-test ......................... SUCCESS [ 5.923 s]

[INFO] flink-streaming-kafka011-test ...................... SUCCESS [ 5.786 s]

[INFO] flink-streaming-kafka010-test ...................... SUCCESS [ 5.000 s]

[INFO] flink-plugins-test ................................. SUCCESS [ 0.095 s]

[INFO] flink-tpch-test .................................... SUCCESS [ 1.210 s]

[INFO] flink-contrib ...................................... SUCCESS [ 0.052 s]

[INFO] flink-connector-wikiedits .......................... SUCCESS [ 0.258 s]

[INFO] flink-yarn-tests ................................... SUCCESS [ 2.919 s]

[INFO] flink-fs-tests ..................................... SUCCESS [ 0.330 s]

[INFO] flink-docs ......................................... SUCCESS [ 0.531 s]

[INFO] flink-ml-parent .................................... SUCCESS [ 0.052 s]

[INFO] flink-ml-api ....................................... SUCCESS [ 0.284 s]

[INFO] flink-ml-lib ....................................... SUCCESS [ 0.164 s]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: : min

[INFO] Finished at: --12T17::+:

[INFO] ------------------------------------------------------------------------

Normally the build takes about 15 minutes; when something goes wrong, it may get stuck on flink-runtime and flink-runtime-web.

4. Problems encountered

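1) Flink 1.9 removed the MapR packages, so there is no need to spend time on them. Even so, many packages failed to download during the build (the Aliyun mirror does have them all). I built inside a CentOS virtual machine, and the packages the VM could not download downloaded fine on my local machine, so I downloaded them locally and copied my local repository over to the VM.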

2) flink-runtime / flink-runtime-web sometimes take a very long time to compile.

3) flink-table-api-java fails to compile:

[ERROR] Failed to execute goal org.apache.maven.plugins:maven-compiler-plugin:3.8.:compile (default-compile) on project flink-table-api-java: Compilation failure

[ERROR] /home/venn/git/release/flink-release-1.9./flink-table/flink-table-api-java/src/main/java/org/apache/flink/table/operations/utils/factories/AggregateOperationFactory.java:[,] unreported exception X; must be caught or declared to be thrown

[ERROR]

[ERROR] -> [Help ]

[ERROR]

[ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.

[ERROR] Re-run Maven using the -X switch to enable full debug logging.

[ERROR]

[ERROR] For more information about the errors and possible solutions, please read the following articles:

[ERROR] [Help ] http://cwiki.apache.org/confluence/display/MAVEN/MojoFailureException

[ERROR]

[ERROR] After correcting the problems, you can resume the build with the command

[ERROR] mvn <args> -rf :flink-table-api-java

This is a bug in older JDK versions, which mishandle the following usage:

List<String> aliases = children.subList(1, children.size())
    .stream()
    .map(alias -> ExpressionUtils.extractValue(alias, String.class)
        .orElseThrow(() -> new ValidationException("Unexpected alias: " + alias)))
    .collect(toList());
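If changing the JDK is not an option, the same `orElseThrow`-inside-`map` pattern can be written in a self-contained form like the sketch below. It assumes the failure is javac failing to infer the exception type `X` of `orElseThrow` (as the error message suggests) and adds an explicit type witness, `.<IllegalArgumentException>orElseThrow(...)`, a commonly used workaround for this kind of inference error; I have not verified it against jdk1.8.0_91. `extractString` is a hypothetical stand-in for Flink's `ExpressionUtils.extractValue`, and `IllegalArgumentException` replaces Flink's `ValidationException` so the code compiles without Flink on the classpath.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Optional;
import java.util.stream.Collectors;

public class AliasExtraction {
    // Hypothetical stand-in for ExpressionUtils.extractValue(expr, String.class):
    // returns the value only if it is a String.
    static Optional<String> extractString(Object expr) {
        return expr instanceof String ? Optional.of((String) expr) : Optional.empty();
    }

    // Skips the first child (the aliased expression) and extracts the remaining alias names.
    static List<String> aliases(List<Object> children) {
        return children.subList(1, children.size())
                .stream()
                // Explicit type witness so javac does not have to infer X on its own.
                .map(alias -> extractString(alias)
                        .<IllegalArgumentException>orElseThrow(
                                () -> new IllegalArgumentException("Unexpected alias: " + alias)))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<Object> children = Arrays.asList(42, "a", "b");
        System.out.println(aliases(children)); // prints [a, b]
    }
}
```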

Solution: upgrade to a newer JDK 1.8 update release. Going from jdk1.8.0_91 to jdk1.8.0_111 fixed it.
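A small pre-build check can catch an old JDK 8 update before Maven spends minutes compiling. The sketch below parses the update number from the `java.version` system property and compares it with 111; that threshold is only the version that worked in this build, not an officially documented fix version. It checks the JVM it runs on, so run it with the same JDK that Maven uses (`mvn -version` shows which one that is). The class name is hypothetical.

```java
public class JdkUpdateCheck {
    // Parses the update number from a Java 8 version string such as "1.8.0_91".
    // Returns -1 if the string is not in the 1.8.0_NNN form (e.g. Java 9+).
    static int java8Update(String version) {
        String prefix = "1.8.0_";
        if (!version.startsWith(prefix)) {
            return -1;
        }
        String rest = version.substring(prefix.length());
        int end = 0;
        while (end < rest.length() && Character.isDigit(rest.charAt(end))) {
            end++;
        }
        return end == 0 ? -1 : Integer.parseInt(rest.substring(0, end));
    }

    public static void main(String[] args) {
        String version = System.getProperty("java.version");
        int update = java8Update(version);
        // 111 is the update that worked here, not a documented minimum.
        if (update >= 0 && update < 111) {
            System.out.println("JDK " + version + " is older than 1.8.0_111; consider upgrading before building");
        } else {
            System.out.println("JDK " + version + " passes this check");
        }
    }
}
```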

4) You may want to preinstall gcc and gcc-c++. Some errors show up during the build, but they should not affect the result.
