Flume in Practice: Operations Edition

Author: Yin Zhengjie

Copyright notice: This is an original work. Reproduction is not permitted; violations will be pursued legally.

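I. Flume Overview

1>. What is Flume

Flume is a distributed, reliable, and highly available system for aggregating large volumes of log data. It lets you plug in custom data senders to collect data, applies simple processing to the data in flight, and writes the result to a variety of data receivers.

Official site: http://flume.apache.org/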

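2>. Flume features

(1) High reliability

Flume provides an end-to-end data reliability mechanism.

(2) Easy to scale

Agents form a distributed architecture and can be scaled horizontally.

(3) Easy to recover

The channel holds the events received from the data source, so they are available for recovery after a failure.

(4) Feature-rich

Flume ships with many built-in components, covering a range of data sources and storage targets.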

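3>. Common Flume components

(1) Source:

The data source; put simply, the entry point through which an agent receives data.

(2) Channel:

The pipe through which data flows and in which it is buffered. A source must be attached to at least one channel.

(3) Sink:

Takes the data delivered by the channel and forwards it to the configured destination; once delivery succeeds, the events are removed from the channel.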

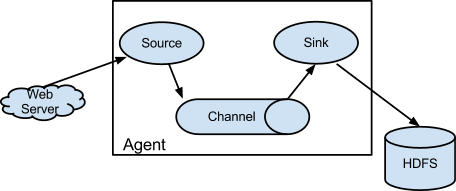

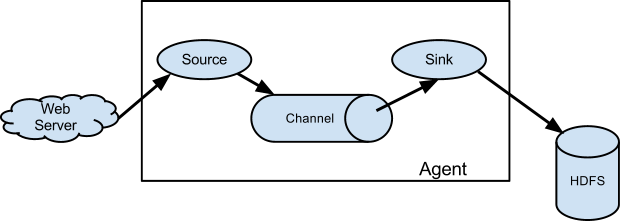

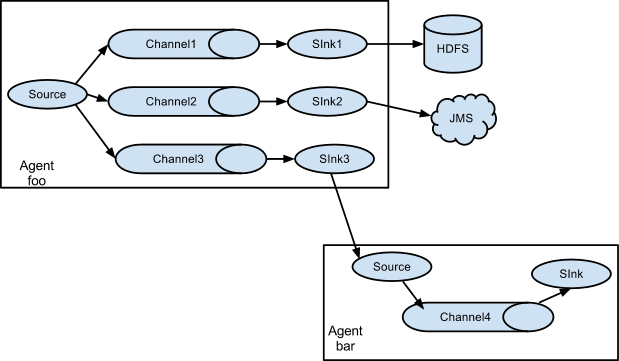

4>. Flume architecture

II. Deploying the Flume Environment

1>. Download Flume

[root@node105.yinzhengjie.org.cn ~]# yum -y install wget

Loaded plugins: fastestmirror

Determining fastest mirrors

* base: mirrors.tuna.tsinghua.edu.cn

* extras: mirrors.aliyun.com

* updates: mirror.bit.edu.cn

base | 3.6 kB ::

extras | 3.4 kB ::

updates | 3.4 kB ::

updates//x86_64/primary_db | 6.5 MB ::

Resolving Dependencies

--> Running transaction check

---> Package wget.x86_64 :1.14-.el7_6. will be installed

--> Finished Dependency Resolution Dependencies Resolved ==============================================================================================================================================================================================================================================================================

Package Arch Version Repository Size

==============================================================================================================================================================================================================================================================================

Installing:

wget x86_64 1.14-.el7_6. updates k

Transaction Summary

==============================================================================================================================================================================================================================================================================

Install Package

Total download size: k

Installed size: 2.0 M

Downloading packages:

wget-1.14-.el7_6..x86_64.rpm | kB ::

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : wget-1.14-.el7_6..x86_64 /

Verifying : wget-1.14-.el7_6..x86_64 /

Installed:

wget.x86_64 :1.14-.el7_6.

Complete!

[root@node105.yinzhengjie.org.cn ~]#


[root@node105.yinzhengjie.org.cn ~]# wget http://mirrors.tuna.tsinghua.edu.cn/apache/flume/1.9.0/apache-flume-1.9.0-bin.tar.gz

---- ::-- http://mirrors.tuna.tsinghua.edu.cn/apache/flume/1.9.0/apache-flume-1.9.0-bin.tar.gz

Resolving mirrors.tuna.tsinghua.edu.cn (mirrors.tuna.tsinghua.edu.cn)... 101.6.8.193, :f000:::::

Connecting to mirrors.tuna.tsinghua.edu.cn (mirrors.tuna.tsinghua.edu.cn)|101.6.8.193|:... connected.

HTTP request sent, awaiting response... Found

Location: http://103.238.48.8/mirrors.tuna.tsinghua.edu.cn/apache/flume/1.9.0/apache-flume-1.9.0-bin.tar.gz [following]

---- ::-- http://103.238.48.8/mirrors.tuna.tsinghua.edu.cn/apache/flume/1.9.0/apache-flume-1.9.0-bin.tar.gz

Connecting to 103.238.48.8:... connected.

HTTP request sent, awaiting response... OK

Length: (65M) [application/x-gzip]

Saving to: ‘apache-flume-1.9.-bin.tar.gz’ %[====================================================================================================================================================================================================================================>] ,, .87MB/s in 22s -- :: (2.95 MB/s) - ‘apache-flume-1.9.-bin.tar.gz’ saved [/] [root@node105.yinzhengjie.org.cn ~]#

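Before unpacking, it may be worth checking the downloaded archive against the SHA-512 digest that Apache publishes alongside each release. This step is not part of the original procedure. The sketch below assumes `sha512sum` is available; `verify_sha512` is a hypothetical helper, and the expected digest has to be copied by hand from the official download page.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare a file's SHA-512 digest with an expected value.
# Usage: verify_sha512 <file> <expected-hex-digest>
verify_sha512() {
    local file="$1" expected actual
    [ -r "$file" ] || { echo "cannot read $file" >&2; return 2; }
    # Normalise both digests to lower case, since published digests may be upper case.
    expected="$(printf '%s' "$2" | tr 'A-F' 'a-f')"
    actual="$(sha512sum "$file" | awk '{print tolower($1)}')"
    if [ "$actual" = "$expected" ]; then
        echo "OK: $file"
    else
        echo "MISMATCH: $file" >&2
        return 1
    fi
}

# Example (the digest argument is a placeholder to be filled in by the reader):
# verify_sha512 apache-flume-1.9.0-bin.tar.gz "<digest from the download page>"
```

A non-zero exit status means the archive should be downloaded again rather than extracted.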

2>. Extract Flume

[root@node105.yinzhengjie.org.cn ~]# ll

total

-rw-r--r-- root root Jan apache-flume-1.9.0-bin.tar.gz

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# tar -zxf apache-flume-1.9.0-bin.tar.gz -C /home/softwares/

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# ll /home/softwares/apache-flume-1.9.0-bin/

total

drwxr-xr-x mysql mysql Jul : bin

-rw-rw-r-- mysql mysql Nov CHANGELOG

drwxr-xr-x mysql mysql Jul : conf

-rw-r--r-- mysql mysql Nov DEVNOTES

-rw-r--r-- mysql mysql Nov doap_Flume.rdf

drwxrwxr-x mysql mysql Dec docs

drwxr-xr-x root root Jul : lib

-rw-rw-r-- mysql mysql Dec LICENSE

-rw-r--r-- mysql mysql Nov NOTICE

-rw-r--r-- mysql mysql Nov README.md

-rw-rw-r-- mysql mysql Dec RELEASE-NOTES

drwxr-xr-x root root Jul : tools

[root@node105.yinzhengjie.org.cn ~]#

3>. Configure the Flume environment variables

[root@node105.yinzhengjie.org.cn ~]# vi /etc/profile

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# tail -3 /etc/profile

#Add by yinzhengjie

FLUME_HOME=/home/softwares/apache-flume-1.9.0-bin

PATH=$PATH:$FLUME_HOME/bin

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# source /etc/profile

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# flume-ng version

Flume 1.9.0

Source code repository: https://git-wip-us.apache.org/repos/asf/flume.git

Revision: d4fcab4f501d41597bc616921329a4339f73585e

Compiled by fszabo on Mon Dec :: CET

From source with checksum 35db629a3bda49d23e9b3690c80737f9

[root@node105.yinzhengjie.org.cn ~]#
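On CentOS 7, `/etc/profile` also sources every `*.sh` file under `/etc/profile.d/`, so the same two variables can live in a separate drop-in file instead of being appended to `/etc/profile` itself. The sketch below shows that alternative under stated assumptions: `write_flume_profile` is a hypothetical helper, and the file name `flume.sh` is an arbitrary choice.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a profile snippet that exports FLUME_HOME and
# appends its bin directory to PATH.
# Usage: write_flume_profile <target-file> <flume-home>
write_flume_profile() {
    local target="$1" home="$2"
    # The heredoc expands $home now; \$PATH and \$FLUME_HOME stay literal so
    # that they are evaluated when the snippet is sourced at login.
    cat > "$target" <<EOF
# Flume environment (generated)
export FLUME_HOME=$home
export PATH=\$PATH:\$FLUME_HOME/bin
EOF
}

# Example (run as root; the file name flume.sh is an assumption):
# write_flume_profile /etc/profile.d/flume.sh /home/softwares/apache-flume-1.9.0-bin
```

Unlike the `/etc/profile` edit above, this version exports `FLUME_HOME` explicitly, which makes the variable visible to child processes as well as to the login shell.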

4>. Create custom directories for the Flume configuration files

[root@node105.yinzhengjie.org.cn ~]# mkdir -pv /home/data/flume/{log,job,shell}

mkdir: created directory ‘/home/data’

mkdir: created directory ‘/home/data/flume’

mkdir: created directory ‘/home/data/flume/log’

mkdir: created directory ‘/home/data/flume/job’

mkdir: created directory ‘/home/data/flume/shell’

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# ll /home/data/flume/

total

drwxr-xr-x root root Jul : job # holds the agent configuration files used to start Flume

drwxr-xr-x root root Jul : log # holds log files

drwxr-xr-x root root Jul : shell # holds startup scripts

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]#

III. Flume Examples

1>. Monitoring data on a port (netcat source, memory channel, logger sink)

[root@node105.yinzhengjie.org.cn ~]# yum -y install telnet net-tools

Loaded plugins: fastestmirror

Determining fastest mirrors

* base: mirror.bit.edu.cn

* extras: mirrors.aliyun.com

* updates: mirrors.aliyun.com

base | 3.6 kB ::

extras | 3.4 kB ::

updates | 3.4 kB ::

updates//x86_64/primary_db | 6.5 MB ::

Package net-tools-2.0-0.24.20131004git.el7.x86_64 already installed and latest version

Resolving Dependencies

--> Running transaction check

---> Package telnet.x86_64 :0.17-.el7 will be installed

--> Finished Dependency Resolution Dependencies Resolved ==============================================================================================================================================================================================================================================================================

Package Arch Version Repository Size

==============================================================================================================================================================================================================================================================================

Installing:

telnet x86_64 :0.17-.el7 base k

Transaction Summary

==============================================================================================================================================================================================================================================================================

Install Package

Total download size: k

Installed size: k

Downloading packages:

telnet-0.17-.el7.x86_64.rpm | kB ::

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : :telnet-0.17-.el7.x86_64 /

Verifying : :telnet-0.17-.el7.x86_64 /

Installed:

telnet.x86_64 :0.17-.el7

Complete!

[root@node105.yinzhengjie.org.cn ~]#


[root@node105.yinzhengjie.org.cn ~]# cat /home/data/flume/job/flume-netcat.conf

# "yinzhengjie" is the agent name, which we choose ourselves. Its sources, sinks, and channels are given the aliases r1, k1, and c1 respectively.

yinzhengjie.sources = r1

yinzhengjie.sinks = k1

yinzhengjie.channels = c1

yinzhengjie.sources.r1.type = netcat

yinzhengjie.sources.r1.bind = node105.yinzhengjie.org.cn

yinzhengjie.sources.r1.port = 8888

# Sink type: logger, which writes events to the console.

yinzhengjie.sinks.k1.type = logger

# Channel type: memory, with a capacity of 1000 and a per-transaction capacity of 100.

yinzhengjie.channels.c1.type = memory

yinzhengjie.channels.c1.capacity = 1000

yinzhengjie.channels.c1.transactionCapacity = 100

# Bind the source and the sink to the channel.

yinzhengjie.sources.r1.channels = c1

yinzhengjie.sinks.k1.channel = c1

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# cat /home/data/flume/job/flume-netcat.conf # write the Flume agent configuration file
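The last two properties of the configuration carry the wiring rule stated earlier: a source lists one or more channels under `channels`, while a sink reads from a single channel under `channel`. A mistyped channel name here is an easy mistake to make, so a quick check before starting the agent can help. The following is a minimal sketch, not a Flume tool; `check_flume_wiring` is a hypothetical helper that only understands simple `key = value` lines.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check that every source and sink declared for an agent
# is bound to a channel that the agent also declares.
# Usage: check_flume_wiring <conf-file> <agent-name>
check_flume_wiring() {
    awk -v agent="$2" '
        /^[ \t]*#/ || !/=/ { next }          # skip comments and non-property lines
        {
            eq  = index($0, "=")
            key = substr($0, 1, eq - 1)
            val = substr($0, eq + 1)
            gsub(/[ \t\r]/, "", key)
            gsub(/^[ \t]+|[ \t\r]+$/, "", val)
            prop[key] = val
        }
        END {
            bad = 0
            n = split(prop[agent ".channels"], ch, /[ \t]+/)
            for (i = 1; i <= n; i++) declared[ch[i]] = 1

            # A source needs at least one channel, and each must be declared.
            ns = split(prop[agent ".sources"], src, /[ \t]+/)
            for (i = 1; i <= ns; i++) {
                m = split(prop[agent ".sources." src[i] ".channels"], c, /[ \t]+/)
                if (m == 0) { print "source " src[i] ": no channel"; bad = 1 }
                for (j = 1; j <= m; j++)
                    if (!(c[j] in declared)) {
                        print "source " src[i] ": unknown channel " c[j]; bad = 1
                    }
            }

            # A sink needs exactly one declared channel.
            nk = split(prop[agent ".sinks"], snk, /[ \t]+/)
            for (i = 1; i <= nk; i++) {
                one = prop[agent ".sinks." snk[i] ".channel"]
                if (!(one in declared)) {
                    print "sink " snk[i] ": missing or unknown channel"; bad = 1
                }
            }
            exit bad
        }
    ' "$1"
}

# Example:
# check_flume_wiring /home/data/flume/job/flume-netcat.conf yinzhengjie && echo "wiring looks consistent"
```

The function prints one line per problem and returns a non-zero status if it finds any, so it can gate the start script.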

[root@node105.yinzhengjie.org.cn ~]# flume-ng agent --conf /home/softwares/apache-flume-1.9.0-bin/conf --name yinzhengjie --conf-file /home/data/flume/job/flume-netcat.conf -Dflume.monitoring.type=http -Dflume.monitoring.port= -Dflume.root.logger==INFO,console

Warning: JAVA_HOME is not set!

Info: Including Hive libraries found via () for Hive access

+ exec /home/softwares/jdk1..0_201/bin/java -Xmx20m -Dflume.monitoring.type=http -Dflume.monitoring.port= -Dflume.root.logger==INFO,console -cp '/home/softwares/apache-flume-1.9.0-bin/conf:/home/softwares/apache-flume-1.9.0-bin/lib/*:/lib/*' -Djava.library.path= org.apache.flume.node.Application --name yinzhengjie --conf-file /home/data/flume/job/flume-netcat.conf

2019-- ::, (main) [DEBUG - org.apache.flume.util.SSLUtil.initSysPropFromEnvVar(SSLUtil.java:)] No global SSL keystore path specified.

-- ::, (main) [DEBUG - org.apache.flume.util.SSLUtil.initSysPropFromEnvVar(SSLUtil.java:)] No global SSL keystore password specified.

-- ::, (main) [DEBUG - org.apache.flume.util.SSLUtil.initSysPropFromEnvVar(SSLUtil.java:)] No global SSL keystore type specified.

-- ::, (main) [DEBUG - org.apache.flume.util.SSLUtil.initSysPropFromEnvVar(SSLUtil.java:)] No global SSL truststore path specified.

-- ::, (main) [DEBUG - org.apache.flume.util.SSLUtil.initSysPropFromEnvVar(SSLUtil.java:)] No global SSL truststore password specified.

-- ::, (main) [DEBUG - org.apache.flume.util.SSLUtil.initSysPropFromEnvVar(SSLUtil.java:)] No global SSL truststore type specified.

-- ::, (main) [DEBUG - org.apache.flume.util.SSLUtil.initSysPropFromEnvVar(SSLUtil.java:)] No global SSL include protocols specified.

-- ::, (main) [DEBUG - org.apache.flume.util.SSLUtil.initSysPropFromEnvVar(SSLUtil.java:)] No global SSL exclude protocols specified.

-- ::, (main) [DEBUG - org.apache.flume.util.SSLUtil.initSysPropFromEnvVar(SSLUtil.java:)] No global SSL include cipher suites specified.

-- ::, (main) [DEBUG - org.apache.flume.util.SSLUtil.initSysPropFromEnvVar(SSLUtil.java:)] No global SSL exclude cipher suites specified.

-- ::, (lifecycleSupervisor--) [INFO - org.apache.flume.node.PollingPropertiesFileConfigurationProvider.start(PollingPropertiesFileConfigurationProvider.java:)] Configuration provider starting

-- ::, (lifecycleSupervisor--) [DEBUG - org.apache.flume.node.PollingPropertiesFileConfigurationProvider.start(PollingPropertiesFileConfigurationProvider.java:)] Configuration provider started

-- ::, (conf-file-poller-) [DEBUG - org.apache.flume.node.PollingPropertiesFileConfigurationProvider$FileWatcherRunnable.run(PollingPropertiesFileConfigurationProvider.java:)] Checking file:/home/data/flume/job/flume-netcat.conf for changes

-- ::, (conf-file-poller-) [INFO - org.apache.flume.node.PollingPropertiesFileConfigurationProvider$FileWatcherRunnable.run(PollingPropertiesFileConfigurationProvider.java:)] Reloading configuration file:/home/data/flume/job/flume-netcat.conf

-- ::, (conf-file-poller-) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addComponentConfig(FlumeConfiguration.java:)] Processing:r1

-- ::, (conf-file-poller-) [DEBUG - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addComponentConfig(FlumeConfiguration.java:)] Created context for r1: type

-- ::, (conf-file-poller-) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addComponentConfig(FlumeConfiguration.java:)] Processing:c1

-- ::, (conf-file-poller-) [DEBUG - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addComponentConfig(FlumeConfiguration.java:)] Created context for c1: type

-- ::, (conf-file-poller-) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addProperty(FlumeConfiguration.java:)] Added sinks: k1 Agent: yinzhengjie

-- ::, (conf-file-poller-) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addComponentConfig(FlumeConfiguration.java:)] Processing:c1

-- ::, (conf-file-poller-) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addComponentConfig(FlumeConfiguration.java:)] Processing:r1

-- ::, (conf-file-poller-) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addComponentConfig(FlumeConfiguration.java:)] Processing:k1

-- ::, (conf-file-poller-) [DEBUG - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addComponentConfig(FlumeConfiguration.java:)] Created context for k1: channel

-- ::, (conf-file-poller-) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addComponentConfig(FlumeConfiguration.java:)] Processing:r1

-- ::, (conf-file-poller-) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addComponentConfig(FlumeConfiguration.java:)] Processing:r1

-- ::, (conf-file-poller-) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addComponentConfig(FlumeConfiguration.java:)] Processing:k1

-- ::, (conf-file-poller-) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addComponentConfig(FlumeConfiguration.java:)] Processing:c1

-- ::, (conf-file-poller-) [DEBUG - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.isValid(FlumeConfiguration.java:)] Starting validation of configuration for agent: yinzhengjie

-- ::, (conf-file-poller-) [INFO - org.apache.flume.conf.LogPrivacyUtil.<clinit>(LogPrivacyUtil.java:)] Logging of configuration details is disabled. To see configuration details in the log run the agent with -Dorg.apache.flume.log.printconfig=true JVM argument. Please note that this is not recommended in production systems as it may leak private information to the logfile.

-- ::, (conf-file-poller-) [WARN - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.validateConfigFilterSet(FlumeConfiguration.java:)] Agent configuration for 'yinzhengjie' has no configfilters.

-- ::, (conf-file-poller-) [DEBUG - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.validateChannels(FlumeConfiguration.java:)] Created channel c1

-- ::, (conf-file-poller-) [DEBUG - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.validateSinks(FlumeConfiguration.java:)] Creating sink: k1 using LOGGER

-- ::, (conf-file-poller-) [DEBUG - org.apache.flume.conf.FlumeConfiguration.validateConfiguration(FlumeConfiguration.java:)] Channels:c1

-- ::, (conf-file-poller-) [DEBUG - org.apache.flume.conf.FlumeConfiguration.validateConfiguration(FlumeConfiguration.java:)] Sinks k1

-- ::, (conf-file-poller-) [DEBUG - org.apache.flume.conf.FlumeConfiguration.validateConfiguration(FlumeConfiguration.java:)] Sources r1

-- ::, (conf-file-poller-) [INFO - org.apache.flume.conf.FlumeConfiguration.validateConfiguration(FlumeConfiguration.java:)] Post-validation flume configuration contains configuration for agents: [yinzhengjie]

-- ::, (conf-file-poller-) [INFO - org.apache.flume.node.AbstractConfigurationProvider.loadChannels(AbstractConfigurationProvider.java:)] Creating channels

-- ::, (conf-file-poller-) [INFO - org.apache.flume.channel.DefaultChannelFactory.create(DefaultChannelFactory.java:)] Creating instance of channel c1 type memory

-- ::, (conf-file-poller-) [INFO - org.apache.flume.node.AbstractConfigurationProvider.loadChannels(AbstractConfigurationProvider.java:)] Created channel c1

-- ::, (conf-file-poller-) [INFO - org.apache.flume.source.DefaultSourceFactory.create(DefaultSourceFactory.java:)] Creating instance of source r1, type netcat

-- ::, (conf-file-poller-) [INFO - org.apache.flume.sink.DefaultSinkFactory.create(DefaultSinkFactory.java:)] Creating instance of sink: k1, type: logger

-- ::, (conf-file-poller-) [INFO - org.apache.flume.node.AbstractConfigurationProvider.getConfiguration(AbstractConfigurationProvider.java:)] Channel c1 connected to [r1, k1]

-- ::, (conf-file-poller-) [INFO - org.apache.flume.node.Application.startAllComponents(Application.java:)] Starting new configuration:{ sourceRunners:{r1=EventDrivenSourceRunner: { source:org.apache.flume.source.NetcatSource{name:r1,state:IDLE} }} sinkRunners:{k1=SinkRunner: { policy:org.apache.flume.sink.DefaultSinkProcessor@3344b1b counterGroup:{ name:null counters:{} } }} channels:{c1=org.apache.flume.channel.MemoryChannel{name: c1}} }

-- ::, (conf-file-poller-) [INFO - org.apache.flume.node.Application.startAllComponents(Application.java:)] Starting Channel c1

-- ::, (conf-file-poller-) [INFO - org.apache.flume.node.Application.startAllComponents(Application.java:)] Waiting for channel: c1 to start. Sleeping for ms

-- ::, (lifecycleSupervisor--) [INFO - org.apache.flume.instrumentation.MonitoredCounterGroup.register(MonitoredCounterGroup.java:)] Monitored counter group for type: CHANNEL, name: c1: Successfully registered new MBean.

-- ::, (lifecycleSupervisor--) [INFO - org.apache.flume.instrumentation.MonitoredCounterGroup.start(MonitoredCounterGroup.java:)] Component type: CHANNEL, name: c1 started

-- ::, (conf-file-poller-) [INFO - org.apache.flume.node.Application.startAllComponents(Application.java:)] Starting Sink k1

-- ::, (conf-file-poller-) [INFO - org.apache.flume.node.Application.startAllComponents(Application.java:)] Starting Source r1

-- ::, (lifecycleSupervisor--) [INFO - org.apache.flume.source.NetcatSource.start(NetcatSource.java:)] Source starting

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.log.Log.initialized(Log.java:)] Logging to org.slf4j.impl.Log4jLoggerAdapter(org.eclipse.jetty.util.log) via org.eclipse.jetty.util.log.Slf4jLog

-- ::, (conf-file-poller-) [INFO - org.eclipse.jetty.util.log.Log.initialized(Log.java:)] Logging initialized @1169ms to org.eclipse.jetty.util.log.Slf4jLog

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.ContainerLifeCycle.addBean(ContainerLifeCycle.java:)] org.eclipse.jetty.server.Server@346a3eed added {qtp1818551798{STOPPED,<=<=,i=,q=},AUTO}

-- ::, (SinkRunner-PollingRunner-DefaultSinkProcessor) [DEBUG - org.apache.flume.SinkRunner$PollingRunner.run(SinkRunner.java:)] Polling sink runner starting

-- ::, (lifecycleSupervisor--) [INFO - org.apache.flume.source.NetcatSource.start(NetcatSource.java:)] Created serverSocket:sun.nio.ch.ServerSocketChannelImpl[/172.30.1.105:]

-- ::, (lifecycleSupervisor--) [DEBUG - org.apache.flume.source.NetcatSource.start(NetcatSource.java:)] Source started

-- ::, (Thread-) [DEBUG - org.apache.flume.source.NetcatSource$AcceptHandler.run(NetcatSource.java:)] Starting accept handler

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.ContainerLifeCycle.addBean(ContainerLifeCycle.java:)] HttpConnectionFactory@5ac8a68a[HTTP/1.1] added {HttpConfiguration@7454628a{/,/,https://:0,[]},POJO}

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.ContainerLifeCycle.addBean(ContainerLifeCycle.java:)] ServerConnector@{null,[]}{0.0.0.0:} added {org.eclipse.jetty.server.Server@346a3eed,UNMANAGED}

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.ContainerLifeCycle.addBean(ContainerLifeCycle.java:)] ServerConnector@{null,[]}{0.0.0.0:} added {qtp1818551798{STOPPED,<=<=,i=,q=},AUTO}

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.ContainerLifeCycle.addBean(ContainerLifeCycle.java:)] ServerConnector@{null,[]}{0.0.0.0:} added {org.eclipse.jetty.util.thread.ScheduledExecutorScheduler@1da3d1e8,AUTO}

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.ContainerLifeCycle.addBean(ContainerLifeCycle.java:)] ServerConnector@{null,[]}{0.0.0.0:} added {org.eclipse.jetty.io.ArrayByteBufferPool@4fa9a485,POJO}

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.ContainerLifeCycle.addBean(ContainerLifeCycle.java:)] ServerConnector@{null,[http/1.1]}{0.0.0.0:} added {HttpConnectionFactory@5ac8a68a[HTTP/1.1],AUTO}

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.server.AbstractConnector.addConnectionFactory(AbstractConnector.java:)] ServerConnector@{HTTP/1.1,[http/1.1]}{0.0.0.0:} added HttpConnectionFactory@5ac8a68a[HTTP/1.1]

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.ContainerLifeCycle.addBean(ContainerLifeCycle.java:)] ServerConnector@{HTTP/1.1,[http/1.1]}{0.0.0.0:} added {org.eclipse.jetty.server.ServerConnector$ServerConnectorManager@4fc180ce,MANAGED}

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.ContainerLifeCycle.addBean(ContainerLifeCycle.java:)] org.eclipse.jetty.server.Server@346a3eed added {ServerConnector@{HTTP/1.1,[http/1.1]}{0.0.0.0:},AUTO}

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.ContainerLifeCycle.addBean(ContainerLifeCycle.java:)] org.eclipse.jetty.server.Server@346a3eed added {org.apache.flume.instrumentation.http.HTTPMetricsServer$HTTPMetricsHandler@52e879b7,MANAGED}

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.AbstractLifeCycle.setStarting(AbstractLifeCycle.java:)] starting org.eclipse.jetty.server.Server@346a3eed

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.ContainerLifeCycle.addBean(ContainerLifeCycle.java:)] org.eclipse.jetty.server.Server@346a3eed added {org.eclipse.jetty.server.handler.ErrorHandler@4ed57293,AUTO}

-- ::, (conf-file-poller-) [INFO - org.eclipse.jetty.server.Server.doStart(Server.java:)] jetty-9.4..v20170531

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.server.handler.AbstractHandler.doStart(AbstractHandler.java:)] starting org.eclipse.jetty.server.Server@346a3eed

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.AbstractLifeCycle.setStarting(AbstractLifeCycle.java:)] starting qtp1818551798{STOPPED,<=<=,i=,q=}

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.AbstractLifeCycle.setStarted(AbstractLifeCycle.java:)] STARTED @1380ms qtp1818551798{STARTED,<=<=,i=,q=}

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.AbstractLifeCycle.setStarting(AbstractLifeCycle.java:)] starting org.apache.flume.instrumentation.http.HTTPMetricsServer$HTTPMetricsHandler@52e879b7

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.server.handler.AbstractHandler.doStart(AbstractHandler.java:)] starting org.apache.flume.instrumentation.http.HTTPMetricsServer$HTTPMetricsHandler@52e879b7

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.AbstractLifeCycle.setStarted(AbstractLifeCycle.java:)] STARTED @1381ms org.apache.flume.instrumentation.http.HTTPMetricsServer$HTTPMetricsHandler@52e879b7

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.AbstractLifeCycle.setStarting(AbstractLifeCycle.java:)] starting org.eclipse.jetty.server.handler.ErrorHandler@4ed57293

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.server.handler.AbstractHandler.doStart(AbstractHandler.java:)] starting org.eclipse.jetty.server.handler.ErrorHandler@4ed57293

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.AbstractLifeCycle.setStarted(AbstractLifeCycle.java:)] STARTED @1382ms org.eclipse.jetty.server.handler.ErrorHandler@4ed57293

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.AbstractLifeCycle.setStarting(AbstractLifeCycle.java:)] starting ServerConnector@{HTTP/1.1,[http/1.1]}{0.0.0.0:}

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.ContainerLifeCycle.addBean(ContainerLifeCycle.java:)] ServerConnector@{HTTP/1.1,[http/1.1]}{0.0.0.0:} added {sun.nio.ch.ServerSocketChannelImpl[/0.0.0.0:],POJO}

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.AbstractLifeCycle.setStarting(AbstractLifeCycle.java:)] starting org.eclipse.jetty.util.thread.ScheduledExecutorScheduler@1da3d1e8

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.AbstractLifeCycle.setStarted(AbstractLifeCycle.java:)] STARTED @1384ms org.eclipse.jetty.util.thread.ScheduledExecutorScheduler@1da3d1e8

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.AbstractLifeCycle.setStarting(AbstractLifeCycle.java:)] starting HttpConnectionFactory@5ac8a68a[HTTP/1.1]

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.AbstractLifeCycle.setStarted(AbstractLifeCycle.java:)] STARTED @1384ms HttpConnectionFactory@5ac8a68a[HTTP/1.1]

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.AbstractLifeCycle.setStarting(AbstractLifeCycle.java:)] starting org.eclipse.jetty.server.ServerConnector$ServerConnectorManager@4fc180ce

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.ContainerLifeCycle.addBean(ContainerLifeCycle.java:)] org.eclipse.jetty.io.ManagedSelector@1fe6c0aa id= keys=- selected=- added {EatWhatYouKill@41905dc0/org.eclipse.jetty.io.ManagedSelector$SelectorProducer@7dcfdb71/IDLE//,AUTO}

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.ContainerLifeCycle.addBean(ContainerLifeCycle.java:)] org.eclipse.jetty.server.ServerConnector$ServerConnectorManager@4fc180ce added {org.eclipse.jetty.io.ManagedSelector@1fe6c0aa id= keys=- selected=-,AUTO}

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.AbstractLifeCycle.setStarting(AbstractLifeCycle.java:)] starting org.eclipse.jetty.io.ManagedSelector@1fe6c0aa id= keys=- selected=-

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.AbstractLifeCycle.setStarting(AbstractLifeCycle.java:)] starting EatWhatYouKill@41905dc0/org.eclipse.jetty.io.ManagedSelector$SelectorProducer@7dcfdb71/IDLE//

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.AbstractLifeCycle.setStarted(AbstractLifeCycle.java:)] STARTED @1389ms EatWhatYouKill@41905dc0/org.eclipse.jetty.io.ManagedSelector$SelectorProducer@7dcfdb71/IDLE//

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.thread.QueuedThreadPool.execute(QueuedThreadPool.java:)] queue org.eclipse.jetty.io.ManagedSelector$$Lambda$/@6d147c43

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.AbstractLifeCycle.setStarted(AbstractLifeCycle.java:)] STARTED @1477ms org.eclipse.jetty.io.ManagedSelector@1fe6c0aa id= keys= selected=

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.AbstractLifeCycle.setStarted(AbstractLifeCycle.java:)] STARTED @1477ms org.eclipse.jetty.server.ServerConnector$ServerConnectorManager@4fc180ce

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.ContainerLifeCycle.addBean(ContainerLifeCycle.java:)] ServerConnector@{HTTP/1.1,[http/1.1]}{0.0.0.0:} added {acceptor-@2a11399b,POJO}

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.thread.QueuedThreadPool.execute(QueuedThreadPool.java:)] queue acceptor-@2a11399b

-- ::, (conf-file-poller-) [INFO - org.eclipse.jetty.server.AbstractConnector.doStart(AbstractConnector.java:)] Started ServerConnector@{HTTP/1.1,[http/1.1]}{0.0.0.0:}

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.AbstractLifeCycle.setStarted(AbstractLifeCycle.java:)] STARTED @1479ms ServerConnector@{HTTP/1.1,[http/1.1]}{0.0.0.0:}

-- ::, (conf-file-poller-) [INFO - org.eclipse.jetty.server.Server.doStart(Server.java:)] Started @1479ms

-- ::, (conf-file-poller-) [DEBUG - org.eclipse.jetty.util.component.AbstractLifeCycle.setStarted(AbstractLifeCycle.java:)] STARTED @1479ms org.eclipse.jetty.server.Server@346a3eed

-- ::, (qtp1818551798-) [DEBUG - org.eclipse.jetty.util.thread.QueuedThreadPool$.run(QueuedThreadPool.java:)] run org.eclipse.jetty.io.ManagedSelector$$Lambda$/@6d147c43

-- ::, (qtp1818551798-) [DEBUG - org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.produce(EatWhatYouKill.java:)] EatWhatYouKill@41905dc0/org.eclipse.jetty.io.ManagedSelector$SelectorProducer@7dcfdb71/PRODUCING// execute true

-- ::, (qtp1818551798-) [DEBUG - org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.doProduce(EatWhatYouKill.java:)] EatWhatYouKill@41905dc0/org.eclipse.jetty.io.ManagedSelector$SelectorProducer@7dcfdb71/PRODUCING// produce non-blocking

-- ::, (qtp1818551798-) [DEBUG - org.eclipse.jetty.io.ManagedSelector$SelectorProducer.select(ManagedSelector.java:)] Selector loop waiting on select

-- ::, (qtp1818551798-) [DEBUG - org.eclipse.jetty.util.thread.QueuedThreadPool$.run(QueuedThreadPool.java:)] run acceptor-@2a11399b

-- ::, (conf-file-poller-) [DEBUG - org.apache.flume.node.PollingPropertiesFileConfigurationProvider$FileWatcherRunnable.run(PollingPropertiesFileConfigurationProvider.java:)] Checking file:/home/data/flume/job/flume-netcat.conf for changes

-- ::, (netcat-handler-) [DEBUG - org.apache.flume.source.NetcatSource$NetcatSocketHandler.run(NetcatSource.java:)] Starting connection handler

-- ::, (netcat-handler-) [DEBUG - org.apache.flume.source.NetcatSource$NetcatSocketHandler.run(NetcatSource.java:)] Chars read =

-- ::, (netcat-handler-) [DEBUG - org.apache.flume.source.NetcatSource$NetcatSocketHandler.run(NetcatSource.java:)] Events processed =

-- ::, (conf-file-poller-) [DEBUG - org.apache.flume.node.PollingPropertiesFileConfigurationProvider$FileWatcherRunnable.run(PollingPropertiesFileConfigurationProvider.java:)] Checking file:/home/data/flume/job/flume-netcat.conf for changes

-- ::, (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.LoggerSink.process(LoggerSink.java:)] Event: { headers:{} body: E5 B0 B9 E6 AD A3 E6 9D B0 E5 B0 E6 AD A4 E4 ................ }

-- ::, (conf-file-poller-) [DEBUG - org.apache.flume.node.PollingPropertiesFileConfigurationProvider$FileWatcherRunnable.run(PollingPropertiesFileConfigurationProvider.java:)] Checking file:/home/data/flume/job/flume-netcat.conf for changes

-- ::, (netcat-handler-) [DEBUG - org.apache.flume.source.NetcatSource$NetcatSocketHandler.run(NetcatSource.java:)] Chars read =

-- ::, (netcat-handler-) [DEBUG - org.apache.flume.source.NetcatSource$NetcatSocketHandler.run(NetcatSource.java:)] Events processed =

-- ::, (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.LoggerSink.process(LoggerSink.java:)] Event: { headers:{} body: 6E 7A 6E 6A 6F yinzhengjie dao }

-- ::, (netcat-handler-) [DEBUG - org.apache.flume.source.NetcatSource$NetcatSocketHandler.run(NetcatSource.java:)] Chars read =

-- ::, (netcat-handler-) [DEBUG - org.apache.flume.source.NetcatSource$NetcatSocketHandler.run(NetcatSource.java:)] Events processed =

-- ::, (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.LoggerSink.process(LoggerSink.java:)] Event: { headers:{} body: 6F 6C 6E 0D golang. }

-- ::, (netcat-handler-) [DEBUG - org.apache.flume.source.NetcatSource$NetcatSocketHandler.run(NetcatSource.java:)] Chars read =

-- ::, (netcat-handler-) [DEBUG - org.apache.flume.source.NetcatSource$NetcatSocketHandler.run(NetcatSource.java:)] Events processed =

-- ::, (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.LoggerSink.process(LoggerSink.java:)] Event: { headers:{} body: 6F 6E 0D python. }

-- ::, (netcat-handler-) [DEBUG - org.apache.flume.source.NetcatSource$NetcatSocketHandler.run(NetcatSource.java:)] Chars read =

-- ::, (netcat-handler-) [DEBUG - org.apache.flume.source.NetcatSource$NetcatSocketHandler.run(NetcatSource.java:)] Events processed =

-- ::, (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.LoggerSink.process(LoggerSink.java:)] Event: { headers:{} body: 6A 0D java. }

-- ::, (conf-file-poller-) [DEBUG - org.apache.flume.node.PollingPropertiesFileConfigurationProvider$FileWatcherRunnable.run(PollingPropertiesFileConfigurationProvider.java:)] Checking file:/home/data/flume/job/flume-netcat.conf for changes

[root@node105.yinzhengjie.org.cn ~]# flume-ng agent --conf /home/softwares/apache-flume-1.9.0-bin/conf --name # Start Flume
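The logger sink prints each event body as a hex dump followed by a printable rendering, so non-ASCII input and control characters are hard to read in the log. A minimal sketch, assuming the hex bytes are copied by hand from a log line (the helper name `decode_body` is illustrative and not part of Flume), of turning such a dump back into text:

```python
def decode_body(hex_dump: str) -> str:
    """Decode a space-separated hex dump (as printed by the logger sink) as UTF-8."""
    raw = bytes.fromhex(hex_dump.replace(" ", ""))
    # errors="replace" keeps the call from failing when the dump was cut off
    # in the middle of a multi-byte character.
    return raw.decode("utf-8", errors="replace")


if __name__ == "__main__":
    # Bytes of the ASCII input "golang" followed by a carriage return (0D).
    print(repr(decode_body("67 6F 6C 61 6E 67 0D")))
    # The first three bytes of the Chinese test message form one character.
    print(decode_body("E5 B0 B9"))
```

The trailing `0D` most likely explains the `.` printed after each word in the log: telnet ends a line with CR LF, the netcat source splits on the line feed, and the carriage return stays in the event body.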

[root@node105.yinzhengjie.org.cn ~]# ss -ntl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN *: *:*

LISTEN 172.30.1.105: *:*

LISTEN *: *:*

LISTEN ::: :::*

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# telnet node105.yinzhengjie.org.cn

Trying 172.30.1.105...

Connected to node105.yinzhengjie.org.cn.

Escape character is '^]'.

尹正杰到此一游!

OK

yinzhengjie dao ci yi you !

OK

golang

OK

python

OK

java

OK

[root@node105.yinzhengjie.org.cn ~]# telnet node105.yinzhengjie.org.cn 8888 # Test the connection to Flume
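The same test can be scripted instead of typed into telnet. The sketch below is an illustration only: it assumes the netcat source answers every accepted line with `OK`, as it does in the telnet session above, and the function name `send_lines` is hypothetical.

```python
import socket


def send_lines(host, port, lines, timeout=5.0):
    """Send each line to a netcat-style source and return the reply read after each one."""
    replies = []
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with sock.makefile("r", encoding="utf-8", newline="\n") as reader:
            for line in lines:
                sock.sendall((line + "\n").encode("utf-8"))
                # The source is expected to acknowledge every accepted line.
                replies.append(reader.readline().strip())
    return replies
```

Against the agent in this walkthrough the call would be `send_lines("node105.yinzhengjie.org.cn", 8888, ["golang", "python", "java"])`. Because the script sends a bare line feed, the events should not carry the carriage return that telnet adds.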

[root@node105.yinzhengjie.org.cn ~]# vi /home/data/flume/shell/start-netcat.sh

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# cat /home/data/flume/shell/start-netcat.sh

#!/bin/bash

#@author :yinzhengjie

#blog:http://www.cnblogs.com/yinzhengjie

#EMAIL:y1053419035@qq.com

#Date:Thu Oct :: CST

# Send monitoring data to Ganglia. This requires the Ganglia server address; make sure the Ganglia service is deployed before using it!

#nohup flume-ng agent -c /home/data/flume/job/ --conf-file=/home/data/flume/job/flume-netcat.conf --name yinzhengjie -Dflume.monitoring.type=ganglia -Dflume.monitoring.hosts=node105.yinzhengjie.org.cn: -Dflume.root.logger=INFO,console >> /home/data/flume/log/flume-ganglia-flume-netcat.log >& &

# Enable Flume's built-in HTTP monitoring; this is the command the script runs by default

nohup flume-ng agent -c /home/softwares/apache-flume-1.9.-bin/conf --conf-file=/home/data/flume/job/flume-netcat.conf --name yinzhengjie -Dflume.monitoring.type=http -Dflume.monitoring.port= -Dflume.root.logger=INFO,console >> /home/data/flume/log/flume-netcat.log >& &

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# chmod +x /home/data/flume/shell/start-netcat.sh

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# ll /home/data/flume/shell/start-netcat.sh

-rwxr-xr-x root root Jul : /home/data/flume/shell/start-netcat.sh

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# cat /home/data/flume/shell/start-netcat.sh # Write a startup script for Flume; this approach is recommended for production!

[root@node105.yinzhengjie.org.cn ~]# ss -ntl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN *: *:*

LISTEN ::: :::*

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# /home/data/flume/shell/start-netcat.sh

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# ss -ntl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN *: *:*

LISTEN 172.30.1.105: *:*

LISTEN *: *:*

LISTEN ::: :::*

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# tail -100f /home/data/flume/log/flume-netcat.log

Warning: JAVA_HOME is not set!

Info: Including Hive libraries found via () for Hive access

+ exec /home/softwares/jdk1..0_201/bin/java -Xmx20m -Dflume.monitoring.type=http -Dflume.monitoring.port= -Dflume.root.logger=INFO,console -cp '/home/data/flume/job:/home/softwares/apache-flume-1.9.0-bin/lib/*:/lib/*' -Djava.library.path= org.apache.flume.node.Application --conf-file=/home/data/flume/job/flume-netcat.conf --name yinzhengjie

log4j:WARN No appenders could be found for logger (org.apache.flume.util.SSLUtil).

log4j:WARN Please initialize the log4j system properly.

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

Warning: JAVA_HOME is not set!

Info: Including Hive libraries found via () for Hive access

+ exec /home/softwares/jdk1..0_201/bin/java -Xmx20m -Dflume.monitoring.type=http -Dflume.monitoring.port= -Dflume.root.logger=INFO,console -cp '/home/softwares/apache-flume-1.9.0-bin/conf:/home/softwares/apache-flume-1.9.0-bin/lib/*:/lib/*' -Djava.library.path= org.apache.flume.node.Application --conf-file=/home/data/flume/job/flume-netcat.conf --name yinzhengjie

2019-- ::, (lifecycleSupervisor--) [INFO - org.apache.flume.node.PollingPropertiesFileConfigurationProvider.start(PollingPropertiesFileConfigurationProvider.java:)] Configuration provider starting

-- ::, (conf-file-poller-) [INFO - org.apache.flume.node.PollingPropertiesFileConfigurationProvider$FileWatcherRunnable.run(PollingPropertiesFileConfigurationProvider.java:)] Reloading configuration file:/home/data/flume/job/flume-netcat.conf

-- ::, (conf-file-poller-) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addComponentConfig(FlumeConfiguration.java:)] Processing:r1

-- ::, (conf-file-poller-) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addComponentConfig(FlumeConfiguration.java:)] Processing:c1

-- ::, (conf-file-poller-) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addProperty(FlumeConfiguration.java:)] Added sinks: k1 Agent: yinzhengjie

-- ::, (conf-file-poller-) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addComponentConfig(FlumeConfiguration.java:)] Processing:c1

-- ::, (conf-file-poller-) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addComponentConfig(FlumeConfiguration.java:)] Processing:r1

-- ::, (conf-file-poller-) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addComponentConfig(FlumeConfiguration.java:)] Processing:k1

-- ::, (conf-file-poller-) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addComponentConfig(FlumeConfiguration.java:)] Processing:r1

-- ::, (conf-file-poller-) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addComponentConfig(FlumeConfiguration.java:)] Processing:r1

-- ::, (conf-file-poller-) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addComponentConfig(FlumeConfiguration.java:)] Processing:k1

-- ::, (conf-file-poller-) [INFO - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.addComponentConfig(FlumeConfiguration.java:)] Processing:c1

-- ::, (conf-file-poller-) [WARN - org.apache.flume.conf.FlumeConfiguration$AgentConfiguration.validateConfigFilterSet(FlumeConfiguration.java:)] Agent configuration for 'yinzhengjie' has no configfilters.

-- ::, (conf-file-poller-) [INFO - org.apache.flume.conf.FlumeConfiguration.validateConfiguration(FlumeConfiguration.java:)] Post-validation flume configuration contains configuration for agents: [yinzhengjie]

-- ::, (conf-file-poller-) [INFO - org.apache.flume.node.AbstractConfigurationProvider.loadChannels(AbstractConfigurationProvider.java:)] Creating channels

-- ::, (conf-file-poller-) [INFO - org.apache.flume.channel.DefaultChannelFactory.create(DefaultChannelFactory.java:)] Creating instance of channel c1 type memory

-- ::, (conf-file-poller-) [INFO - org.apache.flume.node.AbstractConfigurationProvider.loadChannels(AbstractConfigurationProvider.java:)] Created channel c1

-- ::, (conf-file-poller-) [INFO - org.apache.flume.source.DefaultSourceFactory.create(DefaultSourceFactory.java:)] Creating instance of source r1, type netcat

-- ::, (conf-file-poller-) [INFO - org.apache.flume.sink.DefaultSinkFactory.create(DefaultSinkFactory.java:)] Creating instance of sink: k1, type: logger

-- ::, (conf-file-poller-) [INFO - org.apache.flume.node.AbstractConfigurationProvider.getConfiguration(AbstractConfigurationProvider.java:)] Channel c1 connected to [r1, k1]

-- ::, (conf-file-poller-) [INFO - org.apache.flume.node.Application.startAllComponents(Application.java:)] Starting new configuration:{ sourceRunners:{r1=EventDrivenSourceRunner: { source:org.apache.flume.source.NetcatSource{name:r1,state:IDLE} }} sinkRunners:{k1=SinkRunner: { policy:org.apache.flume.sink.DefaultSinkProcessor@5b204d1f counterGroup:{ name:null counters:{} } }} channels:{c1=org.apache.flume.channel.MemoryChannel{name: c1}} }

-- ::, (conf-file-poller-) [INFO - org.apache.flume.node.Application.startAllComponents(Application.java:)] Starting Channel c1

-- ::, (conf-file-poller-) [INFO - org.apache.flume.node.Application.startAllComponents(Application.java:)] Waiting for channel: c1 to start. Sleeping for ms

-- ::, (lifecycleSupervisor--) [INFO - org.apache.flume.instrumentation.MonitoredCounterGroup.register(MonitoredCounterGroup.java:)] Monitored counter group for type: CHANNEL, name: c1: Successfully registered new MBean.

-- ::, (lifecycleSupervisor--) [INFO - org.apache.flume.instrumentation.MonitoredCounterGroup.start(MonitoredCounterGroup.java:)] Component type: CHANNEL, name: c1 started

-- ::, (conf-file-poller-) [INFO - org.apache.flume.node.Application.startAllComponents(Application.java:)] Starting Sink k1

-- ::, (conf-file-poller-) [INFO - org.apache.flume.node.Application.startAllComponents(Application.java:)] Starting Source r1

-- ::, (lifecycleSupervisor--) [INFO - org.apache.flume.source.NetcatSource.start(NetcatSource.java:)] Source starting

-- ::, (lifecycleSupervisor--) [INFO - org.apache.flume.source.NetcatSource.start(NetcatSource.java:)] Created serverSocket:sun.nio.ch.ServerSocketChannelImpl[/172.30.1.105:]

-- ::, (conf-file-poller-) [INFO - org.eclipse.jetty.util.log.Log.initialized(Log.java:)] Logging initialized @1117ms to org.eclipse.jetty.util.log.Slf4jLog

-- ::, (conf-file-poller-) [INFO - org.eclipse.jetty.server.Server.doStart(Server.java:)] jetty-9.4..v20170531

-- ::, (conf-file-poller-) [INFO - org.eclipse.jetty.server.AbstractConnector.doStart(AbstractConnector.java:)] Started ServerConnector@48970ee9{HTTP/1.1,[http/1.1]}{0.0.0.0:}

-- ::, (conf-file-poller-) [INFO - org.eclipse.jetty.server.Server.doStart(Server.java:)] Started @1358ms

-- ::, (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.LoggerSink.process(LoggerSink.java:)] Event: { headers:{} body: 6E 7A 6E 6A 6F yinzhengjie dao }

[root@node105.yinzhengjie.org.cn ~]# tail -100f /home/data/flume/log/flume-netcat.log # Start Flume and check the startup log

[root@node105.yinzhengjie.org.cn ~]# yum -y install epel-release

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* base: mirror.bit.edu.cn

* extras: mirrors.aliyun.com

* updates: mirrors.aliyun.com

Resolving Dependencies

--> Running transaction check

---> Package epel-release.noarch :- will be installed

--> Finished Dependency Resolution Dependencies Resolved ==============================================================================================================================================================================================================================================================================

Package Arch Version Repository Size

==============================================================================================================================================================================================================================================================================

Installing:

epel-release noarch - extras k

Transaction Summary

==============================================================================================================================================================================================================================================================================

Install Package

Total download size: k

Installed size: k

Downloading packages:

epel-release--.noarch.rpm | kB ::

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : epel-release--.noarch /

Verifying : epel-release--.noarch /

Installed:

epel-release.noarch :-

Complete!

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# yum -y install epel-release # Install the EPEL repository

[root@node105.yinzhengjie.org.cn ~]# yum list jq

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

epel/x86_64/metalink | 6.1 kB ::

* base: mirror.bit.edu.cn

* epel: mirrors.tuna.tsinghua.edu.cn

* extras: mirrors.aliyun.com

* updates: mirrors.aliyun.com

epel | 5.3 kB ::

(/): epel/x86_64/group_gz | kB ::

epel/x86_64/updateinfo FAILED

http://ftp.jaist.ac.jp/pub/Linux/Fedora/epel/7/x86_64/repodata/52f0298e60c86c08c5a90ffdff1f223a1166be2d7e011c9015ecfc8dc8bdf38b-updateinfo.xml.bz2: [Errno 14] HTTP Error 404 - Not Found

Trying other mirror.

To address this issue please refer to the below wiki article

https://wiki.centos.org/yum-errors

If above article doesn't help to resolve this issue please use https://bugs.centos.org/.

(/): epel/x86_64/updateinfo | kB ::

(/): epel/x86_64/primary_db | 6.8 MB ::

Available Packages

jq.x86_64 1.5-.el7 epel

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# yum list jq # Check whether the jq package is available

[root@node105.yinzhengjie.org.cn ~]# yum -y install jq

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* base: mirror.bit.edu.cn

* epel: mirrors.yun-idc.com

* extras: mirrors.aliyun.com

* updates: mirrors.aliyun.com

Resolving Dependencies

--> Running transaction check

---> Package jq.x86_64 :1.5-.el7 will be installed

--> Processing Dependency: libonig.so.()(64bit) for package: jq-1.5-.el7.x86_64

--> Running transaction check

---> Package oniguruma.x86_64 :5.9.-.el7 will be installed

--> Finished Dependency Resolution Dependencies Resolved ==============================================================================================================================================================================================================================================================================

Package Arch Version Repository Size

==============================================================================================================================================================================================================================================================================

Installing:

jq x86_64 1.5-.el7 epel k

Installing for dependencies:

oniguruma x86_64 5.9.-.el7 epel k

Transaction Summary

==============================================================================================================================================================================================================================================================================

Install Package (+ Dependent package)

Total download size: k

Installed size: k

Downloading packages:

warning: /var/cache/yum/x86_64//epel/packages/jq-1.5-.el7.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID 352c64e5: NOKEY

Public key for jq-1.5-.el7.x86_64.rpm is not installed

(/): jq-1.5-.el7.x86_64.rpm | kB ::

(/): oniguruma-5.9.-.el7.x86_64.rpm | kB ::

------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

Total kB/s | kB ::

Retrieving key from file:///etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7

Importing GPG key 0x352C64E5:

Userid : "Fedora EPEL (7) <epel@fedoraproject.org>"

Fingerprint: 91e9 7d7c 4a5e 96f1 7f3e 888f 6a2f aea2 352c 64e5

Package : epel-release--.noarch (@extras)

From : /etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : oniguruma-5.9.-.el7.x86_64 /

Installing : jq-1.5-.el7.x86_64 /

Verifying : oniguruma-5.9.-.el7.x86_64 /

Verifying : jq-1.5-.el7.x86_64 /

Installed:

jq.x86_64 :1.5-.el7

Dependency Installed:

oniguruma.x86_64 :5.9.-.el7

Complete!

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# yum -y install jq # Install jq so that JSON output is easier to read

[root@node105.yinzhengjie.org.cn ~]# curl http://node105.yinzhengjie.org.cn:10501/metrics | jq

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

--:--:-- --:--:-- --:--:--

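{

"CHANNEL.c1": { # Monitoring data for channel c1; the name c1 is defined in the flume-netcat.conf configuration file.

"ChannelCapacity": "", # Capacity of the channel; currently reported only for the File Channel and Memory Channel.

"ChannelFillPercentage": "0.0", # Percentage of the channel that is filled.

"Type": "CHANNEL", # Component type; this entry is a CHANNEL.

"ChannelSize": "", # Number of events currently in the channel; currently reported only for the File Channel and Memory Channel.

"EventTakeSuccessCount": "", # Total number of events that sinks have successfully taken from the channel.

"EventTakeAttemptCount": "", # Total number of times sinks have attempted to take events from the channel. Not every attempt returns an event, because the channel may be empty when the sink polls it.

"StartTime": "", # Time the channel started, in milliseconds since the epoch.

"EventPutAttemptCount": "", # Total number of events that sources have attempted to put into the channel.

"EventPutSuccessCount": "", # Total number of events successfully put into the channel and committed.

"StopTime": "" # Time the channel stopped, in milliseconds since the epoch; 0 means it is still running.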

}

}

[root@node105.yinzhengjie.org.cn ~]#

Tip:

For more metrics, see the official documentation: http://flume.apache.org/FlumeUserGuide.html#monitoring.
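The channel counters are easier to act on once they are reduced to a couple of numbers. The following is a rough sketch rather than an official tool: the function name `channel_summary` and the sample values are made up for illustration, and the backlog it computes only approximates `ChannelSize`.

```python
import json


def channel_summary(metrics_json: str) -> dict:
    """Summarise every CHANNEL entry of a Flume /metrics JSON document."""
    summary = {}
    for name, values in json.loads(metrics_json).items():
        if values.get("Type") != "CHANNEL":
            continue
        # Flume reports every metric as a string, so convert before doing arithmetic.
        put_ok = int(values["EventPutSuccessCount"])
        take_ok = int(values["EventTakeSuccessCount"])
        summary[name] = {
            # Events committed into the channel but not yet taken by a sink.
            "backlog": put_ok - take_ok,
            "fill_percent": float(values["ChannelFillPercentage"]),
        }
    return summary


if __name__ == "__main__":
    # Hypothetical sample values; real numbers come from the curl call above.
    sample = json.dumps({
        "CHANNEL.c1": {
            "Type": "CHANNEL",
            "EventPutSuccessCount": "12",
            "EventTakeSuccessCount": "10",
            "ChannelFillPercentage": "2.0",
        }
    })
    print(channel_summary(sample))
```

A backlog that keeps growing between two polls suggests the sink cannot keep up with the source.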

[root@node105.yinzhengjie.org.cn ~]# ss -ntl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN *: *:*

LISTEN 172.30.1.105: *:*

LISTEN *: *:*

LISTEN ::: :::*

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# netstat -untalp | grep

tcp 172.30.1.105: 0.0.0.0:* LISTEN /java

tcp 172.30.1.105: 172.30.1.105: TIME_WAIT -

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# jps

Application

Jps

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# kill

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# jps

Jps

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# netstat -untalp | grep

tcp 172.30.1.105: 172.30.1.105: TIME_WAIT -

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# ss -ntl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN *: *:*

LISTEN ::: :::*

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# netstat -untalp | grep 8888 # Kill the corresponding Flume process

2>. Read a local file in real time and write it to the HDFS cluster (the Flume node must have the Hadoop cluster environment configured; exec source - memory channel - hdfs sink)

[root@node105.yinzhengjie.org.cn ~]# cat /home/data/flume/job/flume-hdfs.conf

yinzhengjie2.sources = file_source

yinzhengjie2.sinks = hdfs_sink

yinzhengjie2.channels = memory_channel

yinzhengjie2.sources.file_source.type = exec

yinzhengjie2.sources.file_source.command = tail -F /var/log/messages

yinzhengjie2.sources.file_source.shell = /bin/bash -c

yinzhengjie2.sinks.hdfs_sink.type = hdfs

yinzhengjie2.sinks.hdfs_sink.hdfs.path = hdfs://node101.yinzhengjie.org.cn:8020/flume/%Y%m%d/%H

# Prefix for the uploaded files

yinzhengjie2.sinks.hdfs_sink.hdfs.filePrefix = 172.30.1.105-

# Whether to roll directories based on time

yinzhengjie2.sinks.hdfs_sink.hdfs.round = true

# Number of time units after which a new directory is created

yinzhengjie2.sinks.hdfs_sink.hdfs.roundValue =

# Time unit used for rounding

yinzhengjie2.sinks.hdfs_sink.hdfs.roundUnit = hour

# Whether to use the local timestamp

yinzhengjie2.sinks.hdfs_sink.hdfs.useLocalTimeStamp = true

# Number of events to accumulate before flushing to HDFS

yinzhengjie2.sinks.hdfs_sink.hdfs.batchSize =

# File type; compression is supported

yinzhengjie2.sinks.hdfs_sink.hdfs.fileType = DataStream

# How long to wait before rolling to a new file

yinzhengjie2.sinks.hdfs_sink.hdfs.rollInterval =

# File size that triggers a roll

yinzhengjie2.sinks.hdfs_sink.hdfs.rollSize =

# Make file rolling independent of the number of events

yinzhengjie2.sinks.hdfs_sink.hdfs.rollCount =

# Minimum number of block replicas

yinzhengjie2.sinks.hdfs_sink.hdfs.minBlockReplicas =

yinzhengjie2.channels.memory_channel.type = memory

yinzhengjie2.channels.memory_channel.capacity =

yinzhengjie2.channels.memory_channel.transactionCapacity =

yinzhengjie2.sources.file_source.channels = memory_channel

yinzhengjie2.sinks.hdfs_sink.channel = memory_channel

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# cat /home/data/flume/job/flume-hdfs.conf # Write the configuration file
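The three `hdfs.roll*` settings act as independent triggers, and according to the Flume user guide a value of 0 switches the corresponding trigger off. The sketch below is a simplified model of that decision, not the sink's actual implementation; the function name and the numbers in the usage example are hypothetical, since the values in the listing above are not shown.

```python
def should_roll(elapsed_seconds, bytes_written, events_written,
                roll_interval, roll_size, roll_count):
    """Return True when any enabled roll trigger has been reached (0 disables a trigger)."""
    by_time = roll_interval > 0 and elapsed_seconds >= roll_interval
    by_size = roll_size > 0 and bytes_written >= roll_size
    by_count = roll_count > 0 and events_written >= roll_count
    return by_time or by_size or by_count


if __name__ == "__main__":
    # Hypothetical settings: roll every 600 s or at 128 MiB, never by event count.
    settings = dict(roll_interval=600, roll_size=128 * 1024 * 1024, roll_count=0)
    print(should_roll(30, 1024, 5000, **settings))   # no trigger reached yet
    print(should_roll(601, 1024, 5000, **settings))  # time trigger reached
```

Setting `rollCount` to 0 is what the comment "independent of the number of events" refers to: with the count trigger disabled, only time and size decide when a new file starts.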

[root@node105.yinzhengjie.org.cn ~]# ss -ntl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN *: *:*

LISTEN ::: :::*

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# cat /home/data/flume/shell/start-hdfs.sh

#!/bin/bash

#@author :yinzhengjie

#blog:http://www.cnblogs.com/yinzhengjie

#EMAIL:y1053419035@qq.com

#Date:Thu Oct :: CST

# Send monitoring data to Ganglia. This requires the Ganglia server address; make sure the Ganglia service is deployed before using it!

#nohup flume-ng agent -c /home/softwares/apache-flume-1.9.-bin/conf --conf-file=/home/data/flume/job/flume-hdfs.conf --name yinzhengjie2 -Dflume.monitoring.type=ganglia -Dflume.monitoring.hosts=node105.yinzhengjie.org.cn: -Dflume.root.logger=INFO,console >> /home/data/flume/log/flume-ganglia-flume-hdfs.log >& &

# Enable Flume's built-in HTTP monitoring; this is the command the script runs by default

nohup flume-ng agent -c /home/data/flume/job --conf-file=/home/data/flume/job/flume-hdfs.conf --name yinzhengjie2 -Dflume.monitoring.type=http -Dflume.monitoring.port= -Dflume.root.logger=INFO,console >> /home/data/flume/log/flume-hdfs.log >& &

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# chmod +x /home/data/flume/shell/start-hdfs.sh

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# /home/data/flume/shell/start-hdfs.sh

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# ss -ntl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN *: *:*

LISTEN *: *:*

LISTEN ::: :::*

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# jps

Application

Jps

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# cat /home/data/flume/shell/start-hdfs.sh # Write the startup script and start Flume

[root@node105.yinzhengjie.org.cn ~]# tail -100f /home/data/flume/log/flume-hdfs.log

Warning: JAVA_HOME is not set!

Info: Including Hive libraries found via () for Hive access

+ exec /home/softwares/jdk1..0_201/bin/java -Xmx20m -Dflume.monitoring.type=http -Dflume.monitoring.port= -Dflume.root.logger=INFO,console -cp '/home/data/flume/job:/home/softwares/apache-flume-1.9.0-bin/lib/*:/lib/*' -Djava.library.path= org.apache.flume.node.Application --conf-file=/home/data/flume/job/flume-hdfs.conf --name yinzhengjie2

log4j:WARN No appenders could be found for logger (org.apache.flume.util.SSLUtil).

log4j:WARN Please initialize the log4j system properly.

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

Warning: JAVA_HOME is not set!

Info: Including Hadoop libraries found via (/home/softwares/hadoop-2.6./bin/hadoop) for HDFS access

Info: Including Hive libraries found via () for Hive access

+ exec /home/softwares/jdk1..0_201/bin/java -Xmx20m -Dflume.monitoring.type=http -Dflume.monitoring.port= -Dflume.root.logger=INFO,console -cp '/home/data/flume/job:/home/softwares/apache-flume-1.9.0-bin/lib/*:/home/softwares/hadoop-2.6./etc/hadoop:/home/softwares/hadoop-2.6./share/hadoop/common/lib/*:/home/softwares/hadoop-2.6.0/share/hadoop/common/*:/home/softwares/hadoop-2.6.0/share/hadoop/hdfs:/home/softwares/hadoop-2.6.0/share/hadoop/hdfs/lib/*:/home/softwares/hadoop-2.6.0/share/hadoop/hdfs/*:/home/softwares/hadoop-2.6.0/share/hadoop/yarn/lib/*:/home/softwares/hadoop-2.6.0/share/hadoop/yarn/*:/home/softwares/hadoop-2.6.0/share/hadoop/mapreduce/lib/*:/home/softwares/hadoop-2.6.0/share/hadoop/mapreduce/*:/contrib/capacity-scheduler/*.jar:/lib/*' -Djava.library.path=:/home/softwares/hadoop-2.6.0/lib/native org.apache.flume.node.Application --conf-file=/home/data/flume/job/flume-hdfs.conf --name yinzhengjie2

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/softwares/apache-flume-1.9.0-bin/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/softwares/hadoop-2.6.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

19/07/19 17:27:18 INFO node.PollingPropertiesFileConfigurationProvider: Configuration provider starting

19/07/19 17:27:18 INFO node.PollingPropertiesFileConfigurationProvider: Reloading configuration file:/home/data/flume/job/flume-hdfs.conf

19/07/19 17:27:18 INFO conf.FlumeConfiguration: Processing:memory_channel

19/07/19 17:27:18 INFO conf.FlumeConfiguration: Processing:file_source

19/07/19 17:27:18 INFO conf.FlumeConfiguration: Processing:hdfs_sink

19/07/19 17:27:18 INFO conf.FlumeConfiguration: Processing:hdfs_sink

19/07/19 17:27:18 INFO conf.FlumeConfiguration: Processing:hdfs_sink

19/07/19 17:27:18 INFO conf.FlumeConfiguration: Processing:hdfs_sink

19/07/19 17:27:18 INFO conf.FlumeConfiguration: Processing:hdfs_sink

19/07/19 17:27:18 INFO conf.FlumeConfiguration: Processing:memory_channel

19/07/19 17:27:18 INFO conf.FlumeConfiguration: Processing:hdfs_sink

19/07/19 17:27:18 INFO conf.FlumeConfiguration: Processing:file_source

19/07/19 17:27:18 INFO conf.FlumeConfiguration: Processing:hdfs_sink

19/07/19 17:27:18 INFO conf.FlumeConfiguration: Processing:hdfs_sink

19/07/19 17:27:18 INFO conf.FlumeConfiguration: Processing:hdfs_sink

19/07/19 17:27:18 INFO conf.FlumeConfiguration: Processing:file_source

19/07/19 17:27:18 INFO conf.FlumeConfiguration: Processing:file_source

19/07/19 17:27:18 INFO conf.FlumeConfiguration: Processing:memory_channel

19/07/19 17:27:18 INFO conf.FlumeConfiguration: Processing:hdfs_sink

19/07/19 17:27:18 INFO conf.FlumeConfiguration: Processing:hdfs_sink

19/07/19 17:27:18 INFO conf.FlumeConfiguration: Processing:hdfs_sink

19/07/19 17:27:18 INFO conf.FlumeConfiguration: Processing:hdfs_sink

19/07/19 17:27:18 INFO conf.FlumeConfiguration: Processing:hdfs_sink

19/07/19 17:27:18 INFO conf.FlumeConfiguration: Added sinks: hdfs_sink Agent: yinzhengjie2

19/07/19 17:27:18 WARN conf.FlumeConfiguration: Agent configuration for 'yinzhengjie2' has no configfilters.

19/07/19 17:27:18 INFO conf.FlumeConfiguration: Post-validation flume configuration contains configuration for agents: [yinzhengjie2]

19/07/19 17:27:18 INFO node.AbstractConfigurationProvider: Creating channels

19/07/19 17:27:18 INFO channel.DefaultChannelFactory: Creating instance of channel memory_channel type memory

19/07/19 17:27:18 INFO node.AbstractConfigurationProvider: Created channel memory_channel

19/07/19 17:27:18 INFO source.DefaultSourceFactory: Creating instance of source file_source, type exec

19/07/19 17:27:18 INFO sink.DefaultSinkFactory: Creating instance of sink: hdfs_sink, type: hdfs

19/07/19 17:27:18 INFO node.AbstractConfigurationProvider: Channel memory_channel connected to [file_source, hdfs_sink]

19/07/19 17:27:18 INFO node.Application: Starting new configuration:{ sourceRunners:{file_source=EventDrivenSourceRunner: { source:org.apache.flume.source.ExecSource{name:file_source,state:IDLE} }} sinkRunners:{hdfs_sink=SinkRunner: { policy:org.apache.flume.sink.DefaultSinkProcessor@331a821d counterGroup:{ name:null counters:{} } }} channels:{memory_channel=org.apache.flume.channel.MemoryChannel{name: memory_channel}} }

19/07/19 17:27:18 INFO node.Application: Starting Channel memory_channel

19/07/19 17:27:18 INFO node.Application: Waiting for channel: memory_channel to start. Sleeping for 500 ms

19/07/19 17:27:18 INFO instrumentation.MonitoredCounterGroup: Monitored counter group for type: CHANNEL, name: memory_channel: Successfully registered new MBean.

19/07/19 17:27:18 INFO instrumentation.MonitoredCounterGroup: Component type: CHANNEL, name: memory_channel started

19/07/19 17:27:19 INFO node.Application: Starting Sink hdfs_sink

19/07/19 17:27:19 INFO node.Application: Starting Source file_source

19/07/19 17:27:19 INFO source.ExecSource: Exec source starting with command: tail -F /var/log/messages

19/07/19 17:27:19 INFO instrumentation.MonitoredCounterGroup: Monitored counter group for type: SOURCE, name: file_source: Successfully registered new MBean.

19/07/19 17:27:19 INFO instrumentation.MonitoredCounterGroup: Component type: SOURCE, name: file_source started

19/07/19 17:27:19 INFO instrumentation.MonitoredCounterGroup: Monitored counter group for type: SINK, name: hdfs_sink: Successfully registered new MBean.

19/07/19 17:27:19 INFO instrumentation.MonitoredCounterGroup: Component type: SINK, name: hdfs_sink started

19/07/19 17:27:19 INFO util.log: Logging initialized @1347ms to org.eclipse.jetty.util.log.Slf4jLog

19/07/19 17:27:19 INFO server.Server: jetty-9.4.6.v20170531

19/07/19 17:27:19 INFO server.AbstractConnector: Started ServerConnector@3ab21218{HTTP/1.1,[http/1.1]}{0.0.0.0:10502}

19/07/19 17:27:19 INFO server.Server: Started @1695ms

19/07/19 17:27:23 INFO hdfs.HDFSDataStream: Serializer = TEXT, UseRawLocalFileSystem = false

19/07/19 17:27:23 INFO hdfs.BucketWriter: Creating hdfs://node101.yinzhengjie.org.cn:8020/flume/20190719/17/172.30.1.105-.1563528443487.tmp

^C

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# tail -100f /home/data/flume/log/flume-hdfs.log # Check the Flume log for the collected data

[root@node105.yinzhengjie.org.cn ~]# hdfs dfs -ls /flume//

Found items

-rw-r--r-- root supergroup -- : /flume///172.30.1.105-..tmp

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# hdfs dfs -ls /flume/20190719/17 # Check whether the log data has been written to the corresponding HDFS directory
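The directory `/flume/20190719/17` follows from the `%Y%m%d/%H` escapes in `hdfs.path` combined with the rounding settings. As a sketch under stated assumptions (the function name `bucket_dir` is hypothetical, the round value of 1 is a guess because the configured value is not shown, and UTC+8 is assumed from the `CST` label in the logs), the mapping from an event timestamp to its directory looks roughly like this:

```python
from datetime import datetime, timedelta, timezone


def bucket_dir(epoch_ms, round_hours, utc_offset_hours, pattern="/flume/%Y%m%d/%H"):
    """Expand the time escapes of an hdfs.path after rounding the hour down."""
    tz = timezone(timedelta(hours=utc_offset_hours))
    ts = datetime.fromtimestamp(epoch_ms / 1000, tz)
    # Round down to a multiple of round_hours and drop minutes and seconds;
    # this models hdfs.round = true with roundUnit = hour.
    rounded = ts.replace(hour=(ts.hour // round_hours) * round_hours,
                         minute=0, second=0, microsecond=0)
    return rounded.strftime(pattern)


if __name__ == "__main__":
    # 1563528443487 is the millisecond timestamp in the .tmp file name from the log.
    print(bucket_dir(1563528443487, round_hours=1, utc_offset_hours=8))
```

With a larger round value, several hours share one directory; for example, a value of 12 would place the same event under hour `12`.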

[root@node105.yinzhengjie.org.cn ~]# curl http://node105.yinzhengjie.org.cn:10502/metrics | jq

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

--:--:-- --:--:-- --:--:--

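{

"SOURCE.file_source": {

"AppendBatchAcceptedCount": "", # Total number of batches successfully committed to the channel.

"GenericProcessingFail": "", # Number of generic processing failures.

"EventAcceptedCount": "", # Total number of events successfully written to the channel.

"AppendReceivedCount": "", # Total number of events that arrived one per batch (equivalent to one RPC append call each).

"StartTime": "", # Time the source started, in milliseconds since the epoch.

"AppendBatchReceivedCount": "", # Total number of event batches received.

"ChannelWriteFail": "", # Number of failed writes to the channel.

"EventReceivedCount": "", # Total number of events the source has received so far.

"EventReadFail": "", # Number of failed event reads.

"Type": "SOURCE", # Component type; this entry is a SOURCE.

"AppendAcceptedCount": "", # Total number of events passed to the channel one at a time and acknowledged successfully.

"OpenConnectionCount": "", # Number of connections currently open with clients or sinks; currently only the Avro source reports this metric.

"StopTime": "" # Time the source stopped, in milliseconds since the epoch; 0 means it is still running.

},

"CHANNEL.memory_channel": {

"ChannelCapacity": "", # Capacity of the channel; currently reported only for the File Channel and Memory Channel.

"ChannelFillPercentage": "0.0", # Percentage of the channel that is filled.

"Type": "CHANNEL", # Component type; this entry is a CHANNEL.

"ChannelSize": "", # Number of events currently in the channel; currently reported only for the File Channel and Memory Channel.

"EventTakeSuccessCount": "", # Total number of events that sinks have successfully taken from the channel.

"EventTakeAttemptCount": "", # Total number of times sinks have attempted to take events from the channel. Not every attempt returns an event, because the channel may be empty when the sink polls it.

"StartTime": "", # Time the channel started, in milliseconds since the epoch.

"EventPutAttemptCount": "", # Total number of events that sources have attempted to put into the channel.

"EventPutSuccessCount": "", # Total number of events successfully put into the channel and committed.

"StopTime": "" # Time the channel stopped, in milliseconds since the epoch.

},

"SINK.hdfs_sink": {

"ConnectionCreatedCount": "", # Number of connections created with the next hop or storage system (for example, a file created on HDFS).

"BatchCompleteCount": "", # Number of batches whose event count equalled the configured batch size.

"EventWriteFail": "", # Number of failed event writes.

"BatchEmptyCount": "", # Number of empty batches (zero events). A high value means the source is writing data much more slowly than the sink can process it.

"EventDrainAttemptCount": "", # Total number of events the sink has attempted to write to storage.

"StartTime": "", # Time the sink started, in milliseconds since the epoch.

"BatchUnderflowCount": "", # Number of batches smaller than the sink's configured maximum batch size. A high value also indicates that the sink is faster than the source.

"ChannelReadFail": "", # Number of failed reads from the channel.

"ConnectionFailedCount": "", # Number of failed connections.

"ConnectionClosedCount": "", # Number of closed connections.

"Type": "SINK", # Component type; this entry is a SINK.

"EventDrainSuccessCount": "", # Total number of events the sink has successfully written to storage.

"StopTime": "" # Time the sink stopped, in milliseconds since the epoch.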

}

}

[root@node105.yinzhengjie.org.cn ~]#
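With source and sink counters available, one quick health check is to compare what each component attempted against what it confirmed. The sketch below is illustrative only; the function name `pipeline_gaps` and the sample numbers are hypothetical, and it expects the already parsed JSON document from the `/metrics` endpoint.

```python
def pipeline_gaps(metrics: dict) -> dict:
    """Report, per source and sink, how many events were attempted but not confirmed."""
    gaps = {}
    for name, values in metrics.items():
        kind = values.get("Type")
        if kind == "SOURCE":
            # Events received by the source but not accepted by the channel.
            gaps[name] = int(values["EventReceivedCount"]) - int(values["EventAcceptedCount"])
        elif kind == "SINK":
            # Events the sink tried to write but has not written successfully.
            gaps[name] = int(values["EventDrainAttemptCount"]) - int(values["EventDrainSuccessCount"])
    return gaps


if __name__ == "__main__":
    # Hypothetical counter values for illustration.
    sample = {
        "SOURCE.file_source": {"Type": "SOURCE", "EventReceivedCount": "100", "EventAcceptedCount": "100"},
        "SINK.hdfs_sink": {"Type": "SINK", "EventDrainAttemptCount": "100", "EventDrainSuccessCount": "90"},
        "CHANNEL.memory_channel": {"Type": "CHANNEL"},
    }
    print(pipeline_gaps(sample))
```

A sink gap that does not return to zero between polls is worth checking against `EventWriteFail` and `ConnectionFailedCount`.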

[root@node105.yinzhengjie.org.cn ~]#

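3>. Upload the contents of files in a watched directory to the HDFS cluster in real time (the Flume node must have the Hadoop cluster environment configured; spooldir source - memory channel - hdfs sink)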

[root@node105.yinzhengjie.org.cn ~]# cat /home/data/flume/job/flume-dir.conf

yinzhengjie3.sources = spooldir_source

yinzhengjie3.sinks = hdfs_sink

yinzhengjie3.channels = memory_channel # Describe/configure the source

yinzhengjie3.sources.spooldir_source.type = spooldir

yinzhengjie3.sources.spooldir_source.spoolDir = /yinzhengjie/data/flume/upload

yinzhengjie3.sources.spooldir_source.fileSuffix = .COMPLETED

yinzhengjie3.sources.spooldir_source.fileHeader = true

# Ignore all files ending in .tmp; they are not uploaded

yinzhengjie3.sources.spooldir_source.ignorePattern = ([^ ]*\.tmp)

# Store the source file's name in a header so that the sink below can reference it as the variable fileName

yinzhengjie3.sources.spooldir_source.basenameHeader = true

yinzhengjie3.sources.spooldir_source.basenameHeaderKey = fileName

# Describe the sink

yinzhengjie3.sinks.hdfs_sink.type = hdfs

yinzhengjie3.sinks.hdfs_sink.hdfs.path = hdfs://node101.yinzhengjie.org.cn:8020/flume

# Prefix for uploaded files (overridden by the second hdfs.filePrefix setting further down)

yinzhengjie3.sinks.hdfs_sink.hdfs.filePrefix = 172.30.1.105-upload-

# Whether to roll directories based on time

yinzhengjie3.sinks.hdfs_sink.hdfs.round = true

# How many time units pass before a new directory is created

yinzhengjie3.sinks.hdfs_sink.hdfs.roundValue =

# Redefine the time unit

yinzhengjie3.sinks.hdfs_sink.hdfs.roundUnit = hour

# Whether to use the local timestamp

yinzhengjie3.sinks.hdfs_sink.hdfs.useLocalTimeStamp = true

# Number of events to accumulate before each flush to HDFS

yinzhengjie3.sinks.hdfs_sink.hdfs.batchSize =

# File type; compressed types are also supported

yinzhengjie3.sinks.hdfs_sink.hdfs.fileType = DataStream

# How often a new file is generated

yinzhengjie3.sinks.hdfs_sink.hdfs.rollInterval =

# Roll each file at roughly 128 MB

yinzhengjie3.sinks.hdfs_sink.hdfs.rollSize =

# File rolling is independent of the number of events

yinzhengjie3.sinks.hdfs_sink.hdfs.rollCount =

# Minimum number of block replicas

yinzhengjie3.sinks.hdfs_sink.hdfs.minBlockReplicas =

# Used together with the source's basenameHeader and basenameHeaderKey properties so that files are uploaded under their original names

yinzhengjie3.sinks.hdfs_sink.hdfs.filePrefix = %{fileName}

# Use a channel which buffers events in memory

yinzhengjie3.channels.memory_channel.type = memory

yinzhengjie3.channels.memory_channel.capacity =

yinzhengjie3.channels.memory_channel.transactionCapacity =

# Bind the source and sink to the channel

yinzhengjie3.sources.spooldir_source.channels = memory_channel

yinzhengjie3.sinks.hdfs_sink.channel = memory_channel

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# cat /home/data/flume/job/flume-dir.conf #Write the Flume configuration file
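Flume agent files follow the Java properties format, so when a key appears twice the later value is the one that takes effect. The configuration above sets hdfs.filePrefix twice, and the BucketWriter line in the startup log further down shows the file being created with the %{fileName} prefix. The Python sketch below is a hypothetical helper, not part of Flume, that reports such repeated keys. It assumes simple `key = value` lines and does not implement the full properties syntax (line continuations, `:` separators and so on).

```python
def find_duplicate_keys(text):
    """Return {key: [values in file order]} for keys assigned more than once."""
    seen = {}
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blank lines and comment lines.
        if not line or line.startswith("#") or line.startswith("!"):
            continue
        if "=" not in line:
            continue
        key, value = line.split("=", 1)
        seen.setdefault(key.strip(), []).append(value.strip())
    return {key: values for key, values in seen.items() if len(values) > 1}


# Excerpt of the sink section from flume-dir.conf.
CONF = """
yinzhengjie3.sinks.hdfs_sink.type = hdfs
yinzhengjie3.sinks.hdfs_sink.hdfs.filePrefix = 172.30.1.105-upload-
yinzhengjie3.sinks.hdfs_sink.hdfs.round = true
yinzhengjie3.sinks.hdfs_sink.hdfs.filePrefix = %{fileName}
"""

if __name__ == "__main__":
    for key, values in find_duplicate_keys(CONF).items():
        print(f"{key}: set {len(values)} times, last value is {values[-1]}")
```

Running a check like this before starting an agent makes it easier to notice a setting that is silently overridden.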

[root@node105.yinzhengjie.org.cn ~]# cat /home/data/flume/shell/start-dir.sh

#!/bin/bash

#@author :yinzhengjie

#blog:http://www.cnblogs.com/yinzhengjie

#EMAIL:y1053419035@qq.com

#Data:Thu Oct :: CST

# Send monitoring data to Ganglia. The Ganglia server address must be specified; confirm that the Ganglia service is deployed before using this!

#nohup flume-ng agent -c /home/data/flume/job --conf-file=/home/data/flume/job/flume-dir.conf --name yinzhengjie3 -Dflume.monitoring.type=ganglia -Dflume.monitoring.hosts=node105.yinzhengjie.org.cn: -Dflume.root.logger=INFO,console >> /home/data/flume/log/flume-ganglia-flume-dir.log 2>&1 &

# Enable Flume's own HTTP monitoring; this is the command the script runs by default

nohup flume-ng agent -c /home/data/flume/job --conf-file=/home/data/flume/job/flume-dir.conf --name yinzhengjie3 -Dflume.monitoring.type=http -Dflume.monitoring.port= -Dflume.root.logger=INFO,console >> /home/data/flume/log/flume-dir.log 2>&1 &

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# cat /home/data/flume/shell/start-dir.sh #Write the startup script
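With -Dflume.monitoring.type=http the agent serves its counters as JSON over HTTP at the /metrics path. Here is a minimal Python sketch of reading them, assuming the agent is reachable from wherever the script runs. fetch_metrics and component_types are illustrative helper names, and the host and port arguments must match your own -Dflume.monitoring.port setting.

```python
import json
from urllib.request import urlopen


def fetch_metrics(host, port, timeout=5.0):
    """Fetch and decode the JSON document served at /metrics."""
    url = f"http://{host}:{port}/metrics"
    with urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def component_types(metrics):
    """Map each component name to its reported Type (SOURCE, CHANNEL or SINK)."""
    return {name: counters.get("Type") for name, counters in metrics.items()}
```

For the agent in this walkthrough the call would look like fetch_metrics("node105.yinzhengjie.org.cn", 10503), since the Jetty line in the startup log further down reports that it bound port 10503.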

[root@node105.yinzhengjie.org.cn ~]# mkdir -pv /yinzhengjie/data/flume/upload

mkdir: created directory ‘/yinzhengjie’

mkdir: created directory ‘/yinzhengjie/data’

mkdir: created directory ‘/yinzhengjie/data/flume’

mkdir: created directory ‘/yinzhengjie/data/flume/upload’

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# echo http://www.cnblogs.com/yinzhengjie>/yinzhengjie/data/flume/upload/yinzhengjie.blog

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# echo http://www.cnblogs.com/yinzhengjie>/yinzhengjie/data/flume/upload/yinzhengjie2.tmp

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# echo http://www.cnblogs.com/yinzhengjie>/yinzhengjie/data/flume/upload/yinzhengjie3.txt

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# mkdir -pv /yinzhengjie/data/flume/upload #Create test data
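To see which of the three test files the ignorePattern in flume-dir.conf would skip, the pattern can be tried outside Flume. This is a rough Python sketch: Flume evaluates the pattern with Java's regex engine, and the sketch assumes the whole file name has to match, so re.fullmatch stands in for that behaviour. is_ignored is a hypothetical helper name.

```python
import re

# Pattern copied from the ignorePattern line in flume-dir.conf.
IGNORE_PATTERN = re.compile(r"([^ ]*\.tmp)")

# File names created by the echo commands above.
TEST_FILES = ["yinzhengjie.blog", "yinzhengjie2.tmp", "yinzhengjie3.txt"]


def is_ignored(file_name):
    """True when the whole file name matches the ignore pattern."""
    return IGNORE_PATTERN.fullmatch(file_name) is not None


if __name__ == "__main__":
    for name in TEST_FILES:
        status = "ignored" if is_ignored(name) else "picked up"
        print(f"{name}: {status}")
```

One caveat: Java properties parsing may drop the backslash before the dot, in which case the dot matches any character. For these three file names the outcome is the same either way: only yinzhengjie2.tmp is skipped.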

[root@node105.yinzhengjie.org.cn ~]# ss -ntl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN *: *:*

LISTEN ::: :::*

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# /home/data/flume/shell/start-dir.sh

[root@node105.yinzhengjie.org.cn ~]#

[root@node105.yinzhengjie.org.cn ~]# ss -ntl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN *: *:*

LISTEN *: *:*

LISTEN ::: :::*

[root@node105.yinzhengjie.org.cn ~]#
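Comparing the two ss -ntl listings shows one additional listening socket after the script runs, which is the HTTP monitoring port. The same check can be scripted. Below is a small Python sketch, assuming a plain TCP connect is an acceptable probe; is_listening is a hypothetical helper, and the host and port are values you supply.

```python
import socket


def is_listening(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # Demonstrate against a throwaway local listener instead of a real agent.
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    server.listen(1)
    port = server.getsockname()[1]
    print("while listening:", is_listening("127.0.0.1", port))
    server.close()
    print("after close:", is_listening("127.0.0.1", port))
```

Against the agent started above, the probe would be pointed at node105.yinzhengjie.org.cn and the port passed in -Dflume.monitoring.port.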

[root@node105.yinzhengjie.org.cn ~]# tail -100f /home/data/flume/log/flume-dir.log

Warning: JAVA_HOME is not set!

Info: Including Hadoop libraries found via (/home/softwares/hadoop-2.6./bin/hadoop) for HDFS access

Info: Including Hive libraries found via () for Hive access

+ exec /home/softwares/jdk1..0_201/bin/java -Xmx20m -Dflume.monitoring.type=http -Dflume.monitoring.port= -Dflume.root.logger=INFO,console -cp '/home/data/flume/job:/home/softwares/apache-flume-1.9.0-bin/lib/*:/home/softwares/hadoop-2.6.0/etc/hadoop:/home/softwares/hadoop-2.6./share/hadoop/common/lib/*:/home/softwares/hadoop-2.6.0/share/hadoop/common/*:/home/softwares/hadoop-2.6.0/share/hadoop/hdfs:/home/softwares/hadoop-2.6.0/share/hadoop/hdfs/lib/*:/home/softwares/hadoop-2.6.0/share/hadoop/hdfs/*:/home/softwares/hadoop-2.6.0/share/hadoop/yarn/lib/*:/home/softwares/hadoop-2.6.0/share/hadoop/yarn/*:/home/softwares/hadoop-2.6.0/share/hadoop/mapreduce/lib/*:/home/softwares/hadoop-2.6.0/share/hadoop/mapreduce/*:/contrib/capacity-scheduler/*.jar:/lib/*' -Djava.library.path=:/home/softwares/hadoop-2.6.0/lib/native org.apache.flume.node.Application --conf-file=/home/data/flume/job/flume-dir.conf --name yinzhengjie3

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/softwares/apache-flume-1.9.0-bin/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/softwares/hadoop-2.6.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

19/07/19 18:28:43 INFO node.PollingPropertiesFileConfigurationProvider: Configuration provider starting

19/07/19 18:28:43 INFO node.PollingPropertiesFileConfigurationProvider: Reloading configuration file:/home/data/flume/job/flume-dir.conf

19/07/19 18:28:43 INFO conf.FlumeConfiguration: Processing:hdfs_sink

19/07/19 18:28:43 INFO conf.FlumeConfiguration: Processing:memory_channel

19/07/19 18:28:43 INFO conf.FlumeConfiguration: Processing:hdfs_sink

19/07/19 18:28:43 INFO conf.FlumeConfiguration: Processing:spooldir_source

19/07/19 18:28:43 INFO conf.FlumeConfiguration: Processing:spooldir_source

19/07/19 18:28:43 INFO conf.FlumeConfiguration: Processing:spooldir_source

19/07/19 18:28:43 INFO conf.FlumeConfiguration: Processing:memory_channel

19/07/19 18:28:43 INFO conf.FlumeConfiguration: Processing:hdfs_sink

19/07/19 18:28:43 INFO conf.FlumeConfiguration: Processing:hdfs_sink

19/07/19 18:28:43 INFO conf.FlumeConfiguration: Processing:spooldir_source

19/07/19 18:28:43 INFO conf.FlumeConfiguration: Processing:hdfs_sink

19/07/19 18:28:43 INFO conf.FlumeConfiguration: Processing:hdfs_sink

19/07/19 18:28:43 INFO conf.FlumeConfiguration: Processing:hdfs_sink

19/07/19 18:28:43 INFO conf.FlumeConfiguration: Processing:hdfs_sink

19/07/19 18:28:43 INFO conf.FlumeConfiguration: Added sinks: hdfs_sink Agent: yinzhengjie3

19/07/19 18:28:43 INFO conf.FlumeConfiguration: Processing:spooldir_source

19/07/19 18:28:43 INFO conf.FlumeConfiguration: Processing:spooldir_source

19/07/19 18:28:43 INFO conf.FlumeConfiguration: Processing:hdfs_sink

19/07/19 18:28:43 INFO conf.FlumeConfiguration: Processing:hdfs_sink

19/07/19 18:28:43 INFO conf.FlumeConfiguration: Processing:hdfs_sink

19/07/19 18:28:43 INFO conf.FlumeConfiguration: Processing:memory_channel

19/07/19 18:28:43 INFO conf.FlumeConfiguration: Processing:hdfs_sink

19/07/19 18:28:43 INFO conf.FlumeConfiguration: Processing:spooldir_source

19/07/19 18:28:43 INFO conf.FlumeConfiguration: Processing:spooldir_source

19/07/19 18:28:43 INFO conf.FlumeConfiguration: Processing:hdfs_sink

19/07/19 18:28:43 INFO conf.FlumeConfiguration: Processing:hdfs_sink

19/07/19 18:28:43 WARN conf.FlumeConfiguration: Agent configuration for 'yinzhengjie3' has no configfilters.

19/07/19 18:28:43 INFO conf.FlumeConfiguration: Post-validation flume configuration contains configuration for agents: [yinzhengjie3]

19/07/19 18:28:43 INFO node.AbstractConfigurationProvider: Creating channels

19/07/19 18:28:43 INFO channel.DefaultChannelFactory: Creating instance of channel memory_channel type memory

19/07/19 18:28:43 INFO node.AbstractConfigurationProvider: Created channel memory_channel

19/07/19 18:28:43 INFO source.DefaultSourceFactory: Creating instance of source spooldir_source, type spooldir

19/07/19 18:28:43 INFO sink.DefaultSinkFactory: Creating instance of sink: hdfs_sink, type: hdfs

19/07/19 18:28:43 INFO node.AbstractConfigurationProvider: Channel memory_channel connected to [spooldir_source, hdfs_sink]

19/07/19 18:28:43 INFO node.Application: Starting new configuration:{ sourceRunners:{spooldir_source=EventDrivenSourceRunner: { source:Spool Directory source spooldir_source: { spoolDir: /yinzhengjie/data/flume/upload } }} sinkRunners:{hdfs_sink=SinkRunner: { policy:org.apache.flume.sink.DefaultSinkProcessor@440e91df counterGroup:{ name:null counters:{} } }} channels:{memory_channel=org.apache.flume.channel.MemoryChannel{name: memory_channel}} }

19/07/19 18:28:43 INFO node.Application: Starting Channel memory_channel

19/07/19 18:28:43 INFO node.Application: Waiting for channel: memory_channel to start. Sleeping for 500 ms

19/07/19 18:28:43 INFO instrumentation.MonitoredCounterGroup: Monitored counter group for type: CHANNEL, name: memory_channel: Successfully registered new MBean.

19/07/19 18:28:43 INFO instrumentation.MonitoredCounterGroup: Component type: CHANNEL, name: memory_channel started

19/07/19 18:28:43 INFO node.Application: Starting Sink hdfs_sink

19/07/19 18:28:43 INFO node.Application: Starting Source spooldir_source

19/07/19 18:28:43 INFO source.SpoolDirectorySource: SpoolDirectorySource source starting with directory: /yinzhengjie/data/flume/upload

19/07/19 18:28:43 INFO instrumentation.MonitoredCounterGroup: Monitored counter group for type: SINK, name: hdfs_sink: Successfully registered new MBean.

19/07/19 18:28:43 INFO instrumentation.MonitoredCounterGroup: Component type: SINK, name: hdfs_sink started

19/07/19 18:28:43 INFO util.log: Logging initialized @1358ms to org.eclipse.jetty.util.log.Slf4jLog

19/07/19 18:28:43 INFO instrumentation.MonitoredCounterGroup: Monitored counter group for type: SOURCE, name: spooldir_source: Successfully registered new MBean.

19/07/19 18:28:43 INFO instrumentation.MonitoredCounterGroup: Component type: SOURCE, name: spooldir_source started

19/07/19 18:28:44 INFO server.Server: jetty-9.4.6.v20170531

19/07/19 18:28:44 INFO server.AbstractConnector: Started ServerConnector@1d367324{HTTP/1.1,[http/1.1]}{0.0.0.0:10503}

19/07/19 18:28:44 INFO server.Server: Started @1609ms

19/07/19 18:29:16 INFO avro.ReliableSpoolingFileEventReader: Last read took us just up to a file boundary. Rolling to the next file, if there is one.

19/07/19 18:29:16 INFO avro.ReliableSpoolingFileEventReader: Preparing to move file /yinzhengjie/data/flume/upload/yinzhengjie.blog to /yinzhengjie/data/flume/upload/yinzhengjie.blog.COMPLETED

19/07/19 18:29:16 INFO hdfs.HDFSDataStream: Serializer = TEXT, UseRawLocalFileSystem = false

19/07/19 18:29:16 INFO hdfs.BucketWriter: Creating hdfs://node101.yinzhengjie.org.cn:8020/flume/yinzhengjie.blog.1563532156286.tmp