who is the next one?

Turn-Taking: 参加会话的人参加整个会话的过程中轮流说话,end-of-utterance detection systems,是对说话转变的预测,既什么时候发生对话者之间的转变。

- Evaluation of Real-Time Deep Learning Turn-Taking Models for Multiple Dialogue Scenarios

- IMPROVING END-OF-TURN DETECTION IN SPOKEN DIALOGUES BY DETECTING SPEAKER INTENTIONS AS A SECONDARY TASK

- Dialog Prediction for a General Model of Turn-Taking

一个主要的挑战是能够产生实际的机制轮流,这将控制谁将发言和什么时候他们将这样做[23]。所有语言的轮流发言都有一个共同的目标,以避免重叠的讲话,并尽量减少之间的差距,说话轮流[20]。

One major challenge is to be able to generate realistic mechanisms for turn-taking, which regulates who will speak and when they will do so.

重点研究如何从声音线索来预测特定话语的转换。

Each utterance in the sequence represents a complete sentence, containing both acoustic and lexical cues, and varies in duration.

序列中的每个话语代表一个完整的句子,包含声音和词汇线索,并随持续时间变化。

PROBLEM SETUP

- Prediction of Who Will Be Next Speaker and When Using Mouth-Opening Pattern in Multi-Party Conversation:更偏向于实际场景下的对话,利用张嘴,眼瞥等多模态信息,进行预测。Face-to-face communication

We investigated the mouth-opening transition pattern (MOTP), which represents the change of mouth-opening degree during the end of an utterance, and used it to predict the next speaker and utterance interval between the start time of the next speaker’s utterance and the end time of the current speaker’s utterance in a multi-party conversation.

manually annotated in four-person conversation

As a multimodal-feature fusion, we also implemented models using eye-gaze behavior,

- Addressee and Response Selection in Multi-Party Conversations with Speaker Interaction RNNs

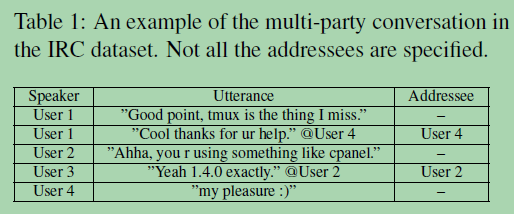

problem: addressee and response selection in multi-party conversations. 我们研究了多方对话中的受话者和回应选择问题。

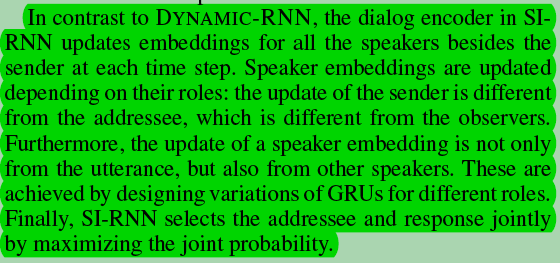

Understanding multi-party conversations is challenging because of complex speaker interactions: multiple speakers exchange messages with each other, playing different roles (sender, addressee, observer), and these roles vary across turns.

以前的先进系统只更新了发送方的Speaker 嵌入,而SI-RNN使用了一个新的对话编码器,以角色敏感的方式更新Speaker 嵌入。in a role-sensitive way.

Additionally, unlike the previous work that selected the addressee and response separately, SI-RNN selects them jointly by viewing the task as a sequence prediction problem.

此外,不同于以往的工作分别选择收件人和回应,SI-RNN通过将任务视为一个序列预测问题来联合选择他们。

In this paper, we study the problem of addressee and response selection in multi-party conversations: given a responding speaker and a dialog context, the task is to select an addressee and a response from a set of candidates for the responding speaker.

首先给定了回答者和上下文信息,任务是选一个这句话是说给谁的,还检索说话内容;该任务需要对多方对话进行建模,可以直接用于构建基于检索的对话系统。

Next Utterance Classification task for multi-turn two-party dialogs. Ouchi and Tsuboi (2016) extend NUC to multi-party conversations by integrating the addressee detection problem. Since the data is text-based, they use only textual information to predict addressees as opposed to relying on acoustic signals or gaze information in multimodal dialog systems.

1\首先,在每个时间步骤中,只有嵌入的发送者从话语中被更新。因此,其他说话者对所讲的内容视而不见,而该模型也无法捕捉到收件人的信息。

2\第二,收信人和回复是相互依赖的,DYNAMIC RNN是独立选择的。

Turn Taking是构建多机器人系统的一种重要问题,主要任务是预测多方对话中下一个最有可能进行互动的参与者,即说话人预测(speaker,区别于 addressee 预测)。(M. G. de Bayser et al. 2019[21])使用机器学习算法(MLE 和 SVM)来进行 Turn-Taking 研究。(G. De Bayser, Guerra, and Cavalin 2020[22])提出了一个集成 MLE、CNN 和 FSA 的系统在 MultiWOZ 数据集上进行 Turn-Taking 研究。

- Learning Multi-Party Turn-Taking Models from Dialogue Logs

- 背景:机器学习(ML)技术的应用,使智能系统能够从对话日志中学习多方轮流模式,learn multi-party turn-taking models from dialogue logs.

- 任务:判断下一个说话的是谁,determining who speaks next, after each utterance of a dialogue, given who has spoken and what was said in the previous utterances.

- Key idea: 利用了机器学习模型,this paper presents comparisons of the accuracy of different ML techniques such as Maximum Likelihood Estimation (MLE), Support Vector Machines (SVM), and Convolutional Neural Networks (CNN) architectures, with and without utterance data.

- 结果表明:(i)语料库的大小对基于内容的深度学习方法的准确性有非常积极的影响,并且这些模型在较大的数据集中表现最好;和(2)如果数据集对话小和面向主题——(但很少有主题),这是足够使用一个MLE或SVM模型,虽然可以实现更高的精度与使用话语的内容一个CNN模型。

- 使用ML方法定义一种建模轮流的方法,并设计数据集来训练和评估这类模型。defining a way to model turn-taking using an ML approach, and designing data sets to train and evaluate such models.

- Reddit or Ubuntu IRC:以往数据集存在的问题:forum-oriented and they do not have the structure of a conversation with clear speaker turns. The original training corpus is organized in days with no clear threads of conversation between the participants. Therefore, there are multiple conversations happening concurrently each day.

who spoke and what was said.

That interaction can be a reply to the last interaction, a reply to an interaction in the past in the dialogue, or even a new idea or an interruption.

if the dialogue dataset is small and topic-oriented (but with few topics), it might be sufficient to use an agent-only MLE or SVM models, although slightly higher accuracies can be achieved with the use of the content of the utterances with a CNN model.

if the dialogue dataset is bigger, our results indicate that an agent-and-content CNN model performs best, albeit almost at the level of a very simple, baseline model which simply uses the speaker before the last as its prediction.

- A Hybrid Solution to Learn Turn-Taking in Multi-Party Service-based Chat Groups

Addressee识别:根据数据集中是否存在 Addressee 标签,我们将 Addressee 识别分为隐式识别和显式识别两种任务。在隐式 Addressee 识别中,主要对 Utterance 进行建模,各种任务大多可以抽象为 Reply-to 关系结构识别问题。在显式 Addressee 识别中,主要通过对 Utterance 和 Speaker 进行建模,从而进行 Addressee 的预测。

- Who Is Speaking to Whom? Learning to Identify Utterance Addressee in Multi-Party Conversations

Conversation structure is useful for both understanding the nature of conversation dynamics and for providing features for many downstream applications such as summarization of conversations. In this work, we define the problem of conversation structure modeling as identifying the parent utterance(s) to which each utterance in the conversation responds to.

In multi-party conversations, identifying the relationship among users is also an important task.

It can be categorized into two topics, 1) predicting who will be the next speaker (Meng et al., 2017) and 2) who is the addressee (Ouchi and Tsuboi, 2016; Zhang et al., 2017). For the first topic, Meng et al. (2017) investigated a temporal-based and a content-based method to jointly model the users and context.For the second topic, which is closely related to ours, Ouchi and Tsuboi (2016) proposed to predict the addressee and utterance given a context with all available information. Later, Zhang et al. (2017) proposed a speaker-interactive model, which takes users’ role information into consideration and implements a role-sensitively state tracking process.

- 背景: In real multiparty conversations, we can observe who is speaking, but the addressee information is not always explicit.

Therefore, compared to two-party conversations, a unique issue of multi-party conversations is to understand who is speaking to whom.

- 任务: we aim to tackle the challenge of identifying all the missing addressees in a conversation session.

- Key idea: a novel who-to-whom (W2W) model which models users and utterances in the session jointly in an interactive way.

To capture the correlation within users and utterances in multi-party conversations, we propose an interactive representation learning approach to jointly learn the representations of users and utterances and enhance them mutually.

- 整体框架

- Who Did They Respond to? Conversation Structure Modeling Using Masked Hierarchical Transformer

Discovering the conversation structure allows us to segment the conversation by topics. It also permits easier analysis and summarization of the various threads and topics discussed in the conversation.

General Speaker Modeling

- Towards Neural Speaker Modeling in Multi-Party Conversation: The Task, Dataset, and Models (predicting who will be the next speaker )2017。 给一句话,找到是谁说的。

Recently, researchers have begun to realize the importance of speaker modeling in neural dialog systems, but there lacks established tasks and datasets.

- Motivation:

A simple setting in the research of dialog systems is context-free, i.e., only a single utterance is considered during reply generation (Shang et al., 2015). Other studies leverage context information by concatenating several utterances (Sordoni et al., 2015) or building hierarchical models (Yao et al., 2015; Serban et al., 2016). The above approaches do not distinguish different speakers, and thus speaker information would be lost during conversation modeling.

- Speaker modeling is in fact important to dialog systems and has been studied in traditional dialog research. However, existing methods are usually based on hand-crafted statistics and ad hoc to a certain application (Lin and Walker, 2011). Another research direction is speaker modeling in a multi-modal setting, e.g., acoustic and visual (Uthus and Aha, 2013), which is beyond the focus of this paper.

we propose speaker classification as a surrogate(代理 替代) task for general speaker modeling and collect massive data to facilitate research in this direction.

We further investigate the temporal-based and content-based models of speakers and propose several hybrids of them.

- The observation is that, what a speaker has said (called content) provides non-trivial background information of the speaker.

- Meanwhile, the relative order of a speaker (e.g., the i-th latest speaker) is a strong bias: nearer speakers are more likely to speak again; we call it temporal information.

- We investigate different strategies for combination, ranging from linear interpolation to complicated gating mechanisms inspired by Differentiable Neural Computers

In this paper, we propose a speaker classification task that predicts the speaker of an utterance.

根据说话者的说话内容以及说话的相对顺序来 建模speaker。

用说话人的话语来建模说话人是很自然的,这些话语提供了说话人的背景、立场等有启发性的信息。

- Using Respiration to Predict Who Will Speak Next and When in Multiparty Meetings

Techniques that use nonverbal behaviors to predict turn-changing situations—such as, in multiparty meetings, who the next speaker will be and when the next utterance will occur—have been receiving a lot of attention in recent research. To build a model for predicting these behaviors we conducted a research study to determine whether respiration could be effectively used as a basis for the prediction.

对话过程可以抽象为:Speaker(说话人), Addressee(回复人), Utterance(说话内容)

在多方对话中,存在多个Speakers, 每个人说的Utterance,对应历史对话中的一些Utterance(Addressee),Reply-to 关系。

不同的任务类型:

Addressee 识别方面:

1)隐式Addressee识别:只从Utterance层面,判断当前给定的Utterance,是回复历史对话记录中的那些Utterance?Utterances 之间 Reply-to 关系结构识别任务

- 第一个任务只需要从对话历史中找出一个 utterance 作为输入消息的 reply-to 目标;z

- 第二个任务可以看做从对话历史中找出一组 utterance 作为 reply-to 目标。

2)显示Addressee识别:每个Utterance存在对应的Addressee标签,相当于预测当前给定的Utterance是回复之前那个Speaker的?

- 当前说话人(Speaker)和回复(Response)均已知,求解 Addressee。该情况可以用于为多方对话的数据构建,如显式Addressee缺失补全问题。

- 当前说话人(Speaker)已知,但回复(Response)未知,求解 Addressee。该情况需要进行多任务学习,是多方对话机器人需要具备的基本功能。

Response回复生成方面(都是已知要生成的Utterance的说话者Speaker):

- 1)没有明确的Addressee标签信息,仅通过对话内容生成

- 2)有明确的Addressee信息作为辅助

- 3)同时判断Addressee信息和Response回复生成内容

next Speaker 识别:

1)给定一句话Utterance,判断这句话是谁说的,就是判断下一句话的说话者。Speaker Modeling

2)turn talking:

- 基于多模态信息(nonverbal)的turn talking 识别(更偏向于实际开会环境下的场景)

- 基于文本信息(text-based chat group)的turn talking识别(网络聊天室)determining who speaks next, after each utterance of a dialogue, given who has spoken and what was said in the previous utterances.

预测下一个Speaker和相应的Utterance。

Generally, a current speaker may select the next speaker, who in turn selects the next, and so forth. If the current speaker does not select a next speaker, then the next speaker may self-select, with the rights to the turn going to the first starter. If a new speaker does not self-select, then the current speaker may continue. This process recurs so as to provide for minimal gap and overlap between speakers.

Motivation:

- If a computer can understand and predict how such multifaceted conversations are carried out smoothly, it should be possible to develop a system that supports smooth communication and can dialogue with the participants.

如果一台计算机能够理解和预测这种多层面的对话是如何顺利进行的,那么就有可能开发出一个支持顺畅交流并能够与参与者对话的系统。

- If a computational model can predict the next speaker and the utterance interval between the start time of the next speaker’s utterance and the end time of the current speaker’s utterance, the model will be an indispensable technology for facilitating conversations between humans and conversation agents or robots.

如果一个计算模型能够预测下一个说话人,以及下一个说话人说话的开始时间和现在说话人说话的结束时间之间的说话间隔,那么这个模型将成为促进人类和对话代理或机器人之间的对话不可或缺的技术。

- For example, this technology will help conversation agents and robots start and end utterances at the right time when they participate in a multi-party conversation.

例如,这项技术将帮助对话代理和机器人在适当的时间开始和结束谈话,当他们参与多方谈话。

- Computers may also be able to use this technology to help or encourage participants to speak at the appropriate time in face-to-face conversations.

计算机也可以使用这项技术来帮助或鼓励参与者在适当的时间进行面对面的交谈。

- contributed to smooth turn-keeping and changing and the adjustment of the utterance interval.

一个主要的挑战是能够产生实际的机制轮流,这将控制谁将发言和什么时候他们将这样做[23]。所有语言的轮流发言都有一个共同的目标,以避免重叠的讲话,并尽量减少之间的差距,说话轮流[20]。

随机推荐

- PyTorch 广播机制

PyTorch 广播机制 定义 PyTorch的tensor参数可以自动扩展其大小.一般的是小一点的会变大,来满足运算需求. 规则 满足一下情况的tensor是可以广播的. 至少有一个维度 两个ten ...

- JS_简单的效果-鼠标移动、点击、定位元素、修改颜色等

1 <!DOCTYPE html> 2 <html lang="en"> 3 <head> 4 <meta charset="U ...

- Python学习笔记: 通过type annotation来伪指定变量类型

简介 通过annotation像强类型language那样指定变量类型,包括参数和返回值的类型 因为Python是弱类型语言,这种指定实际上无效的.所以这种写法叫annotation,就是个注释参考的 ...

- C++的三种继承方式详解以及区别

目录 目录 C++的三种继承方式详解以及区别 前言 一.public继承 二.protected继承 三.private继承 四.三者区别 五.总结 后话 C++的三种继承方式详解以及区别 前言 我发 ...

- stm32F103C8T6通过写寄存器点亮LED灯

因为我写寄存器的操作不太熟练,所以最近腾出时间学习了一下怎么写寄存器,现在把我的经验贴出来,如有不足请指正 我使用的板子是stm32F103C8T6(也就是最常用的板子),现在要通过写GPIO的寄存器 ...

- sqlx操作MySQL实战及其ORM原理

sqlx是Golang中的一个知名三方库,其为Go标准库database/sql提供了一组扩展支持.使用它可以方便的在数据行与Golang的结构体.映射和切片之间进行转换,从这个角度可以说它是一个OR ...

- CentOS 8及以上版本配置IP的方法,你 get 了吗

接上篇文章讲了 Ubuntu 18及以上版本的配置方法,本文再来讲讲 CentOS 8 及以上版本配置 IP 的方法. Centos/Redhat(8.x) 配置 IP 方法 说明:CentOS 8 ...

- uniapp中IOS安卓热更新和整包更新app更新

在App.vue中 onLaunch: function() { console.log('App Launch'); // #ifdef APP-PLUS this.getVersion(); // ...

- 精彩分享 | 欢乐游戏 Istio 云原生服务网格三年实践思考

作者 吴连火,腾讯游戏专家开发工程师,负责欢乐游戏大规模分布式服务器架构.有十余年微服务架构经验,擅长分布式系统领域,有丰富的高性能高可用实践经验,目前正带领团队完成云原生技术栈的全面转型. 导语 欢 ...

- 个人作业——体温上报app(二阶段)

Code.java package com.example.helloworld; import android.graphics.Bitmap; import android.graphics.Ca ...