吴裕雄 Python Deep Learning and Practice (15)

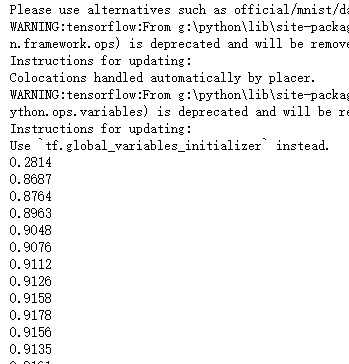

    import tensorflow as tf
    import tensorflow.examples.tutorials.mnist.input_data as input_data

    # Load MNIST with one-hot labels (TensorFlow 1.x tutorial helper).
    mnist = input_data.read_data_sets("D:\\F\\TensorFlow_deep_learn\\MNIST\\", one_hot=True)

    # Single-layer softmax classifier: 784 input pixels -> 10 classes.
    x_data = tf.placeholder("float32", [None, 784])
    weight = tf.Variable(tf.ones([784, 10]))
    bias = tf.Variable(tf.ones([10]))
    y_model = tf.nn.softmax(tf.matmul(x_data, weight) + bias)

    # Sum-of-squares loss between predicted probabilities and one-hot labels.
    y_data = tf.placeholder("float32", [None, 10])
    loss = tf.reduce_sum(tf.pow((y_model - y_data), 2))
    train_step = tf.train.GradientDescentOptimizer(0.01).minimize(loss)

    init = tf.initialize_all_variables()
    sess = tf.Session()
    sess.run(init)

    for _ in range(1000):
        batch_xs, batch_ys = mnist.train.next_batch(100)
        sess.run(train_step, feed_dict={x_data: batch_xs, y_data: batch_ys})
        # Report test-set accuracy every 50 steps.
        if _ % 50 == 0:
            correct_prediction = tf.equal(tf.argmax(y_model, 1), tf.argmax(y_data, 1))
            accuracy = tf.reduce_mean(tf.cast(correct_prediction, "float"))
            print(sess.run(accuracy, feed_dict={x_data: mnist.test.images, y_data: mnist.test.labels}))
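
To make the loss in the listing above concrete, here is a minimal pure-Python sketch of what `tf.nn.softmax` followed by `tf.reduce_sum(tf.pow(y_model - y_data, 2))` computes for a single image. The helper names, `pixel_sum`, and the chosen label are assumptions for illustration only; they do not come from the original post.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def squared_error(probs, one_hot):
    """Sum of squared differences between predictions and a one-hot label."""
    return sum((p - t) ** 2 for p, t in zip(probs, one_hot))


# With all-ones weights and biases, every class receives the same logit,
# so the prediction before any training step is uniform over 10 classes.
pixel_sum = 100.0  # hypothetical sum of pixel intensities for one image
logits = [pixel_sum * 1.0 + 1.0 for _ in range(10)]
probs = softmax(logits)

label = [0.0] * 10
label[3] = 1.0  # hypothetical true class

loss = squared_error(probs, label)
print(probs[0], loss)
```

Under these assumptions each class starts at probability 0.1, which is why the first reported accuracy of such a model is typically near chance level.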

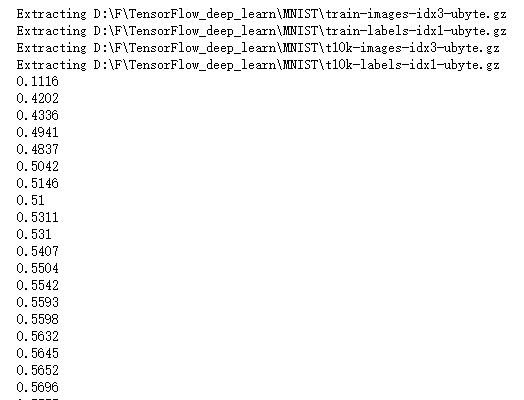

    import tensorflow as tf
    import tensorflow.examples.tutorials.mnist.input_data as input_data

    mnist = input_data.read_data_sets("D:\\F\\TensorFlow_deep_learn\\MNIST\\", one_hot=True)

    # Same single-layer model, but with a ReLU output instead of softmax.
    x_data = tf.placeholder("float32", [None, 784])
    weight = tf.Variable(tf.ones([784, 10]))
    bias = tf.Variable(tf.ones([10]))
    y_model = tf.nn.relu(tf.matmul(x_data, weight) + bias)

    # Cross-entropy-style loss. ReLU outputs are not normalized probabilities
    # and can be exactly 0, in which case tf.log returns -inf.
    y_data = tf.placeholder("float32", [None, 10])
    loss = -tf.reduce_sum(y_data*tf.log(y_model))
    train_step = tf.train.GradientDescentOptimizer(0.01).minimize(loss)

    init = tf.initialize_all_variables()
    sess = tf.Session()
    sess.run(init)

    for _ in range(1000):
        batch_xs, batch_ys = mnist.train.next_batch(50)
        sess.run(train_step, feed_dict={x_data: batch_xs, y_data: batch_ys})
        # Report test-set accuracy every 50 steps.
        if _ % 50 == 0:
            correct_prediction = tf.equal(tf.argmax(y_model, 1), tf.argmax(y_data, 1))
            accuracy = tf.reduce_mean(tf.cast(correct_prediction, "float"))
            print(sess.run(accuracy, feed_dict={x_data: mnist.test.images, y_data: mnist.test.labels}))
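
The loss `-tf.reduce_sum(y_data*tf.log(y_model))` in the listing above is evaluated on ReLU outputs, which may be exactly zero. The pure-Python sketch below illustrates what can happen in that case and one common guard (clipping, comparable in spirit to `tf.clip_by_value`). The helper names, the sample activations, and the `eps` value are assumptions for illustration, not part of the original post.

```python
import math


def relu(values):
    """Element-wise max(0, v)."""
    return [max(0.0, v) for v in values]


def cross_entropy(outputs, one_hot, eps=0.0):
    """Compute -sum(y * log(out)); eps > 0 clips outputs away from zero."""
    total = 0.0
    for out, target in zip(outputs, one_hot):
        clipped = max(out, eps)
        log_out = math.log(clipped) if clipped > 0.0 else float("-inf")
        total -= target * log_out  # 0 * -inf evaluates to NaN
    return total


outputs = relu([2.0, -1.0, 0.5])  # hypothetical pre-activations
label = [1.0, 0.0, 0.0]           # hypothetical one-hot label

raw_loss = cross_entropy(outputs, label)
clipped_loss = cross_entropy(outputs, label, eps=1e-10)
print(raw_loss, clipped_loss)
```

Two things stand out in this sketch: the unclipped loss becomes NaN as soon as any output is zero, and even the clipped loss is negative here because an output above 1 is not a probability.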

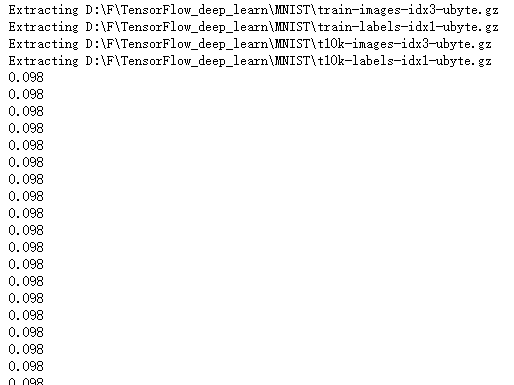

    import tensorflow as tf
    import tensorflow.examples.tutorials.mnist.input_data as input_data

    mnist = input_data.read_data_sets("D:\\F\\TensorFlow_deep_learn\\MNIST\\", one_hot=True)

    # Hidden layer: 784 -> 256. No activation is applied here,
    # so the two layers together still form a linear map before softmax.
    x_data = tf.placeholder("float32", [None, 784])
    weight1 = tf.Variable(tf.ones([784, 256]))
    bias1 = tf.Variable(tf.ones([256]))
    y1_model1 = tf.matmul(x_data, weight1) + bias1

    # Output layer: 256 -> 10, followed by softmax.
    weight2 = tf.Variable(tf.ones([256, 10]))
    bias2 = tf.Variable(tf.ones([10]))
    y_model = tf.nn.softmax(tf.matmul(y1_model1, weight2) + bias2)

    # Cross-entropy loss against one-hot labels.
    y_data = tf.placeholder("float32", [None, 10])
    loss = -tf.reduce_sum(y_data*tf.log(y_model))
    train_step = tf.train.GradientDescentOptimizer(0.01).minimize(loss)

    init = tf.initialize_all_variables()
    sess = tf.Session()
    sess.run(init)

    for _ in range(1000):
        batch_xs, batch_ys = mnist.train.next_batch(50)
        sess.run(train_step, feed_dict={x_data: batch_xs, y_data: batch_ys})
        # Report test-set accuracy every 50 steps.
        if _ % 50 == 0:
            correct_prediction = tf.equal(tf.argmax(y_model, 1), tf.argmax(y_data, 1))
            accuracy = tf.reduce_mean(tf.cast(correct_prediction, "float"))
            print(sess.run(accuracy, feed_dict={x_data: mnist.test.images, y_data: mnist.test.labels}))
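
Because the hidden layer in the listing above has no activation function, the two matrix multiplications can be folded into one. The small pure-Python sketch below checks this on toy matrices; all shapes, values, and helper names are made up for illustration and are much smaller than the 784x256 and 256x10 weights in the post.

```python
def matmul(a, b):
    """Plain nested-list matrix product of a (n x k) and b (k x m)."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def add_bias(matrix, bias):
    """Add a bias vector to every row of a matrix."""
    return [[v + b for v, b in zip(row, bias)] for row in matrix]


x = [[1.0, 2.0], [0.5, -1.0]]               # 2 samples, 2 features
w1 = [[1.0, 0.0, 2.0], [3.0, 1.0, -1.0]]    # 2 -> 3
b1 = [0.5, -0.5, 1.0]
w2 = [[1.0, 2.0], [0.0, 1.0], [-1.0, 0.5]]  # 3 -> 2
b2 = [0.1, 0.2]

# Two stacked linear layers, as in the listing (before the softmax).
two_layer = add_bias(matmul(add_bias(matmul(x, w1), b1), w2), b2)

# Equivalent single layer: W = w1 @ w2, b = b1 @ w2 + b2.
w_combined = matmul(w1, w2)
b_combined = [v + b for v, b in zip(matmul([b1], w2)[0], b2)]
one_layer = add_bias(matmul(x, w_combined), b_combined)

print(two_layer)
print(one_layer)
```

In other words, without a nonlinearity such as `tf.nn.relu` between the layers, the extra 256-unit layer adds parameters but no additional expressive power.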

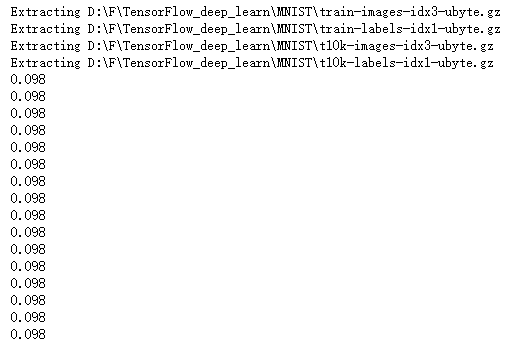

    import tensorflow as tf
    import tensorflow.examples.tutorials.mnist.input_data as input_data

    mnist = input_data.read_data_sets("D:\\F\\TensorFlow_deep_learn\\MNIST\\", one_hot=True)

    # Reshape flat 784-pixel vectors into 28x28 single-channel images.
    x_data = tf.placeholder("float32", [None, 784])
    x_image = tf.reshape(x_data, [-1, 28, 28, 1])

    # Convolution: 5x5 kernels, 1 input channel, 32 output channels (SAME keeps 28x28).
    w_conv = tf.Variable(tf.ones([5, 5, 1, 32]))
    b_conv = tf.Variable(tf.ones([32]))
    h_conv = tf.nn.relu(tf.nn.conv2d(x_image, w_conv, strides=[1, 1, 1, 1], padding='SAME') + b_conv)

    # 2x2 max pooling halves the spatial size to 14x14.
    h_pool = tf.nn.max_pool(h_conv, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

    # Fully connected layer on the flattened 14*14*32 feature map.
    w_fc = tf.Variable(tf.ones([14*14*32, 1024]))
    b_fc = tf.Variable(tf.ones([1024]))
    h_pool_flat = tf.reshape(h_pool, [-1, 14*14*32])
    h_fc = tf.nn.relu(tf.matmul(h_pool_flat, w_fc) + b_fc)

    # Output layer: 1024 -> 10 with softmax.
    W_fc2 = tf.Variable(tf.ones([1024, 10]))
    b_fc2 = tf.Variable(tf.ones([10]))
    y_model = tf.nn.softmax(tf.matmul(h_fc, W_fc2) + b_fc2)

    # Cross-entropy loss against one-hot labels.
    y_data = tf.placeholder("float32", [None, 10])
    loss = -tf.reduce_sum(y_data*tf.log(y_model))
    train_step = tf.train.GradientDescentOptimizer(0.01).minimize(loss)

    init = tf.initialize_all_variables()
    sess = tf.Session()
    sess.run(init)

    for _ in range(1000):
        batch_xs, batch_ys = mnist.train.next_batch(200)
        sess.run(train_step, feed_dict={x_data: batch_xs, y_data: batch_ys})
        # Report test-set accuracy every 50 steps.
        if _ % 50 == 0:
            correct_prediction = tf.equal(tf.argmax(y_model, 1), tf.argmax(y_data, 1))
            accuracy = tf.reduce_mean(tf.cast(correct_prediction, "float"))
            print(sess.run(accuracy, feed_dict={x_data: mnist.test.images, y_data: mnist.test.labels}))
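
The `14*14*32` in the listing above follows from how `'SAME'` padding and the strides determine the output size. A small sketch of that arithmetic, using TensorFlow's documented size rules; the function name `output_size` is a hypothetical helper, not a TensorFlow API.

```python
import math


def output_size(size, kernel, stride, padding):
    """Spatial output size under TensorFlow's SAME / VALID conventions."""
    if padding == "SAME":
        return math.ceil(size / stride)
    if padding == "VALID":
        return math.ceil((size - kernel + 1) / stride)
    raise ValueError("padding must be 'SAME' or 'VALID'")


side = 28                                                      # MNIST image side
side = output_size(side, kernel=5, stride=1, padding="SAME")   # after conv2d
after_conv = side
side = output_size(side, kernel=2, stride=2, padding="SAME")   # after max_pool
after_pool = side

channels = 32
flat_features = after_pool * after_pool * channels
print(after_conv, after_pool, flat_features)
```

The flattened size is what the first dimension of `w_fc` and the reshape of `h_pool` must agree on; changing the padding mode or stride changes this number.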

    import tensorflow as tf
    import tensorflow.examples.tutorials.mnist.input_data as input_data

    mnist = input_data.read_data_sets("D:\\F\\TensorFlow_deep_learn\\MNIST\\", one_hot=True)

    x_data = tf.placeholder("float", shape=[None, 784])
    y_data = tf.placeholder("float", shape=[None, 10])


    def weight_variable(shape):
        # Small random weights instead of all ones.
        initial = tf.truncated_normal(shape, stddev=0.1)
        return tf.Variable(initial)


    def bias_variable(shape):
        initial = tf.constant(0.1, shape=shape)
        return tf.Variable(initial)


    def conv2d(x, W):
        return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='VALID')


    def max_pool_2x2(x):
        return tf.nn.max_pool(x, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='VALID')


    # First conv layer: 28x28x1 -> 24x24x32 (VALID), pooled to 12x12x32.
    W_conv1 = weight_variable([5, 5, 1, 32])
    b_conv1 = bias_variable([32])
    x_image = tf.reshape(x_data, [-1, 28, 28, 1])
    h_conv1 = tf.nn.relu(conv2d(x_image, W_conv1) + b_conv1)
    h_pool1 = max_pool_2x2(h_conv1)

    # Second conv layer: 12x12x32 -> 8x8x64, pooled to 4x4x64.
    W_conv2 = weight_variable([5, 5, 32, 64])
    b_conv2 = bias_variable([64])
    h_conv2 = tf.nn.relu(conv2d(h_pool1, W_conv2) + b_conv2)
    h_pool2 = max_pool_2x2(h_conv2)

    # Fully connected layer on the flattened 4*4*64 feature map.
    W_fc1 = weight_variable([4 * 4 * 64, 1024])
    b_fc1 = bias_variable([1024])
    h_pool2_flat = tf.reshape(h_pool2, [-1, 4*4*64])
    h_fc1 = tf.nn.relu(tf.matmul(h_pool2_flat, W_fc1) + b_fc1)

    # Dropout; keep_prob is fed at run time (0.5 for training, 1.0 for evaluation).
    keep_prob = tf.placeholder("float")
    h_fc1_drop = tf.nn.dropout(h_fc1, keep_prob)

    # Output layer: 1024 -> 10 with softmax.
    W_fc2 = weight_variable([1024, 10])
    b_fc2 = bias_variable([10])
    y_conv = tf.nn.softmax(tf.matmul(h_fc1_drop, W_fc2) + b_fc2)

    cross_entropy = -tf.reduce_sum(y_data * tf.log(y_conv))
    train_step = tf.train.AdamOptimizer(1e-2).minimize(cross_entropy)
    correct_prediction = tf.equal(tf.argmax(y_conv, 1), tf.argmax(y_data, 1))
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, "float"))

    sess = tf.Session()
    sess.run(tf.initialize_all_variables())

    for i in range(1000):
        batch = mnist.train.next_batch(50)
        # Report accuracy on the current training batch every 5 steps (dropout disabled).
        if i % 5 == 0:
            train_accuracy = sess.run(accuracy, feed_dict={x_data: batch[0], y_data: batch[1], keep_prob: 1.0})
            print("step %d, training accuracy %g" % (i, train_accuracy))
        sess.run(train_step, feed_dict={x_data: batch[0], y_data: batch[1], keep_prob: 0.5})
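
The listing above feeds `keep_prob: 0.5` while training and `keep_prob: 1.0` while measuring accuracy. The pure-Python sketch below mimics the behaviour documented for `tf.nn.dropout` in TensorFlow 1.x (each element is kept with probability `keep_prob` and scaled by `1 / keep_prob`, otherwise set to 0). The function, the seed, and the toy activations are assumptions for illustration, not TensorFlow code.

```python
import random


def dropout(values, keep_prob, rng):
    """Inverted dropout: keep each value with probability keep_prob and rescale it."""
    return [v / keep_prob if rng.random() < keep_prob else 0.0 for v in values]


rng = random.Random(0)        # fixed seed so the sketch is repeatable
activations = [1.0] * 10000   # hypothetical layer outputs

# Training-time behaviour: about half of the units are zeroed, the rest doubled.
dropped = dropout(activations, 0.5, rng)
mean_train = sum(dropped) / len(dropped)

# Evaluation-time behaviour: keep_prob = 1.0 leaves the activations unchanged.
kept_all = dropout(activations, 1.0, rng)

print(mean_train, kept_all == activations)
```

The rescaling keeps the expected activation the same in both modes, which is why the same weights can be used for evaluation simply by feeding `keep_prob: 1.0`.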
