High scalability with Fanout and Fastly

Reposted from: http://blog.fanout.io/2017/11/15/high-scalability-fanout-fastly/

Fanout Cloud is for high scale data push. Fastly is for high scale data pull. Many realtime applications need to work with data that is both pushed and pulled, and thus can benefit from using both of these systems in the same application. Fanout and Fastly can even be connected together!

Using Fanout and Fastly in the same application, independently, is pretty straightforward. For example, at initialization time, past content could be retrieved from Fastly, and Fanout Cloud could provide future pushed updates. What does it mean to connect the two systems together though? Read on to find out.

Proxy chaining

Since Fanout and Fastly both work as reverse proxies, it is possible to have Fanout proxy traffic through Fastly rather than sending it directly to your origin server. This provides some unique benefits:

Cached initial data. Fanout lets you build API endpoints that serve both historical and future content, for example an HTTP streaming connection that returns some initial data before switching into push mode. Fastly can provide that initial data, reducing load on your origin server.

Cached Fanout instructions. Fanout’s behavior (e.g. transport mode, channels to subscribe to, etc.) is determined by instructions provided in origin server responses, usually in the form of special headers such as Grip-Hold and Grip-Channel. Fastly can cache these instructions/headers, again reducing load on your origin server.

High availability. If your origin server goes down, Fastly can serve cached data and instructions to Fanout. This means clients could connect to your API endpoint, receive historical data, and activate a streaming connection, all without needing access to the origin server.

Network flow

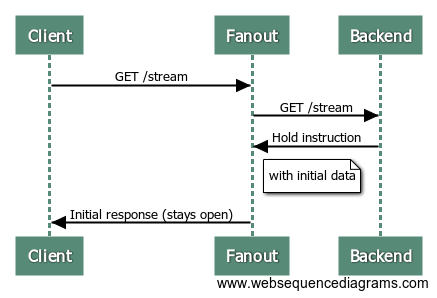

Suppose there’s an API endpoint /stream that returns some initial data and then stays open until there is an update to push. With Fanout, this can be implemented by having the origin server respond with instructions:

    HTTP/1.1 200 OK
    Content-Type: text/plain
    Content-Length: 29
    Grip-Hold: stream
    Grip-Channel: updates

    {"data": "current value"}
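As a rough illustration of what the origin server has to produce, here is a minimal, framework-agnostic sketch in Python. The function name `build_stream_response` and the trailing newline on the body are assumptions made for this example; only the `Grip-Hold` and `Grip-Channel` headers and their values come from the response shown above.

```python
def build_stream_response(initial_body: str, channel: str) -> tuple[int, dict[str, str], bytes]:
    """Return (status, headers, body) for a response that asks the proxy to hold the connection."""
    body = initial_body.encode("utf-8")
    headers = {
        "Content-Type": "text/plain",
        "Content-Length": str(len(body)),
        # Ask the proxy to keep the client connection open in streaming mode.
        "Grip-Hold": "stream",
        # Channel that the held connection should be subscribed to.
        "Grip-Channel": channel,
    }
    return 200, headers, body


# Hypothetical usage: the initial data for the /stream endpoint.
status, headers, body = build_stream_response('{"data": "current value"}\n', "updates")
```

In a real application the returned status, headers, and body would be handed to whatever web framework serves the endpoint.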

When Fanout Cloud receives this response from the origin server, it converts it into a streaming response to the client:

    HTTP/1.1 200 OK
    Content-Type: text/plain
    Transfer-Encoding: chunked
    Connection: Transfer-Encoding

    {"data": "current value"}

The request between Fanout Cloud and the origin server is now finished, but the request between the client and Fanout Cloud remains open. Here’s a sequence diagram of the process:

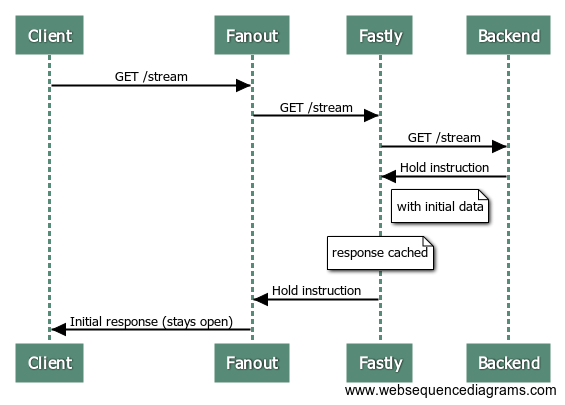

Since the request to the origin server is just a normal short-lived request/response interaction, it can alternatively be served through a caching server such as Fastly. Here’s what the process looks like with Fastly in the mix:

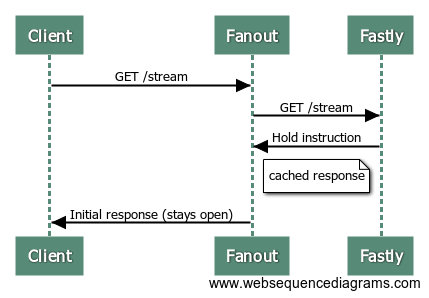

Now, guess what happens when the next client makes a request to the /stream endpoint?

That’s right, the origin server isn’t involved at all! Fastly serves the same response to Fanout Cloud, with those special HTTP headers and initial data, and Fanout Cloud sets up a streaming connection with the client.
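To make the effect concrete, here is a toy model of a cache sitting in front of an origin. It is not how Fastly works internally; `ToyCache` and `origin` are hypothetical names, and the model ignores expiry, headers like Cache-Control, and everything else a real CDN does. It only shows that the second request for /stream never reaches the origin.

```python
from typing import Callable

Response = tuple[dict[str, str], str]  # (headers, body)


class ToyCache:
    """In-memory stand-in for a caching proxy, keyed by request path."""

    def __init__(self, origin: Callable[[str], Response]) -> None:
        self._origin = origin
        self._store: dict[str, Response] = {}
        self.origin_hits = 0

    def get(self, path: str) -> Response:
        # Only call the origin when the path is not cached yet.
        if path not in self._store:
            self.origin_hits += 1
            self._store[path] = self._origin(path)
        return self._store[path]

    def purge(self, path: str) -> None:
        # Forget the cached response so the next request goes to the origin.
        self._store.pop(path, None)


def origin(path: str) -> Response:
    # The instructions and initial data are cached together as one response.
    return ({"Grip-Hold": "stream", "Grip-Channel": "updates"}, '{"data": "current value"}\n')


cache = ToyCache(origin)
first = cache.get("/stream")   # cache miss: the origin is called
second = cache.get("/stream")  # cache hit: the origin is not involved
```

Because the Grip headers are part of the cached response, the proxy in front of the cache receives the same instructions either way.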

Of course, this is only the connection setup. To send updates to connected clients, the data must be published to Fanout Cloud.

We may also need to purge the Fastly cache, if an event that triggers a publish causes the origin server response to change as well. For example, suppose the “value” that the /stream endpoint serves has been changed. The new value could be published to all current connections, but we’d also want any new connections that arrive afterwards to receive this latest value as well, rather than the older cached value. This can be solved by purging from Fastly and publishing to Fanout Cloud at the same time.
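A minimal sketch of that purge-and-publish step might look like the following. The `purge` and `publish` callables are placeholders for whatever HTTP calls your application makes to Fastly and Fanout Cloud; their names and signatures are assumptions, not part of either service's API. The item layout (a channel plus an `http-stream` format with a `content` string) is based on the GRIP publish format and should be checked against the current documentation.

```python
import json
from typing import Callable


def build_publish_item(channel: str, value: str) -> dict:
    """Build one publish item carrying the new value for streaming clients."""
    content = json.dumps({"data": value}) + "\n"
    return {"channel": channel, "formats": {"http-stream": {"content": content}}}


def purge_and_publish(
    path: str,
    channel: str,
    value: str,
    purge: Callable[[str], None],
    publish: Callable[[dict], None],
) -> None:
    # Purge first, so a client connecting from now on fetches the new value
    # from the origin instead of the stale cached one.
    purge(path)
    # Then push the new value to clients that are already connected.
    publish(build_publish_item(channel, value))


# Hypothetical usage with recording stubs instead of real network calls.
calls: list = []
purge_and_publish(
    "/stream",
    "updates",
    "new value",
    purge=lambda p: calls.append(("purge", p)),
    publish=lambda item: calls.append(("publish", item)),
)
```

Purging before publishing is a design choice in this sketch: a client that connects between the two steps may see the new value twice, which is usually preferable to missing it.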

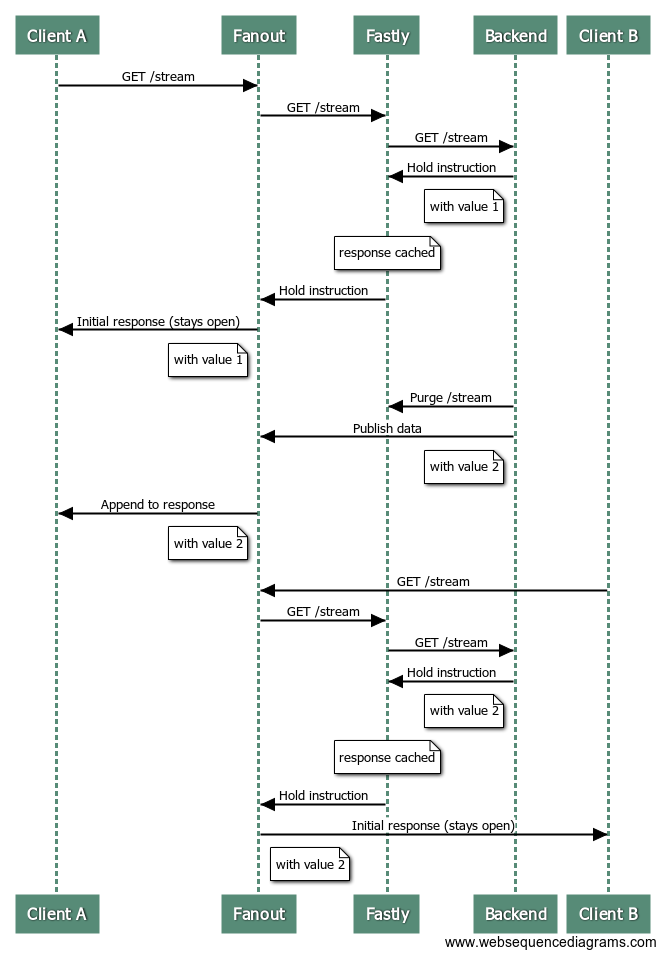

Here’s a (long) sequence diagram of a client connecting, receiving an update, and then another client connecting:

At the end of this sequence, the first and second clients have both received the latest data.

Rate-limiting

One gotcha with purging every time you publish: if your data changes at a high rate, the constant purging can negate the caching benefit of using Fastly.

The sweet spot is data that is accessed frequently (many new visitors per second), changes infrequently (on the order of minutes), and needs its changes delivered instantly (sub-second). An example could be a live blog. In that case, most requests can be served from cache.

If your data changes multiple times per second (or has the potential to change that fast during peak moments), and you expect frequent access, you really don’t want to be purging your cache multiple times per second. The workaround is to rate-limit your purges. For example, during periods of high throughput, you might purge and publish at a maximum rate of once per second or so. This way the majority of new visitors can be served from cache, and the data will be updated shortly after.
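One way to rate-limit could look like the sketch below. It is an illustration of the idea, not the demo's actual code: the `ThrottledNotifier` class, its method names, and the one-second interval are assumptions. It keeps only the latest value and runs the purge-and-publish action at most once per interval; something else (a timer or periodic task, not shown) would need to call `flush()` to deliver a value that was held back.

```python
import time
from typing import Callable, Optional


class ThrottledNotifier:
    """Run `action` at most once per `min_interval` seconds, keeping only the latest value."""

    def __init__(
        self,
        action: Callable[[str], None],
        min_interval: float = 1.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._action = action
        self._min_interval = min_interval
        self._clock = clock
        self._last_sent: Optional[float] = None
        self._pending: Optional[str] = None

    def update(self, value: str) -> bool:
        """Record a new value and send it now if allowed. Returns True if sent."""
        self._pending = value
        return self.flush()

    def flush(self) -> bool:
        """Send the pending value if there is one and the interval has elapsed."""
        if self._pending is None:
            return False
        now = self._clock()
        if self._last_sent is not None and now - self._last_sent < self._min_interval:
            return False
        value, self._pending = self._pending, None
        self._last_sent = now
        self._action(value)
        return True


# Hypothetical usage with a fake clock so the behavior is easy to follow.
now = [0.0]
sent: list = []
notifier = ThrottledNotifier(sent.append, min_interval=1.0, clock=lambda: now[0])
notifier.update("1")  # sent immediately
now[0] = 0.2
notifier.update("2")  # held back: less than one second since the last send
now[0] = 0.5
notifier.update("3")  # replaces "2" as the pending value
now[0] = 1.1
notifier.flush()      # interval elapsed: sends "3"
```

Here `sent.append` stands in for the combined purge-and-publish step; intermediate values are dropped, which is fine when clients only care about the latest state.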

An example

We created a Live Counter Demo to show off this combined Fanout + Fastly architecture. Requests first go to Fanout Cloud, then to Fastly, then to a Django backend server which manages the counter API logic. Whenever a counter is incremented, the Fastly cache is purged and the data is published through Fanout Cloud. The purge and publish process is also rate-limited to maximize caching benefit.

The code for the demo is on GitHub.
