如何在maven项目里面编写mapreduce程序以及一个maven项目里面管理多个mapreduce程序

我们平时创建普通的mapreduce项目,在遍代码当你需要导包使用一些工具类的时候,

你需要自己找到对应的架包,再导进项目里面其实这样做非常不方便,我建议我们还是用maven项目来得方便多了

话不多说了,我们就开始吧

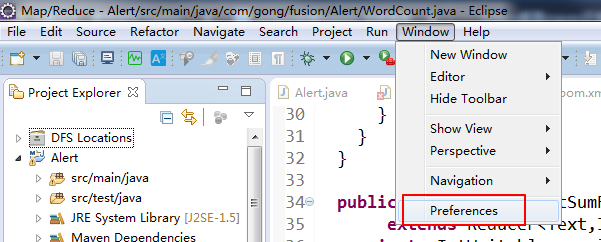

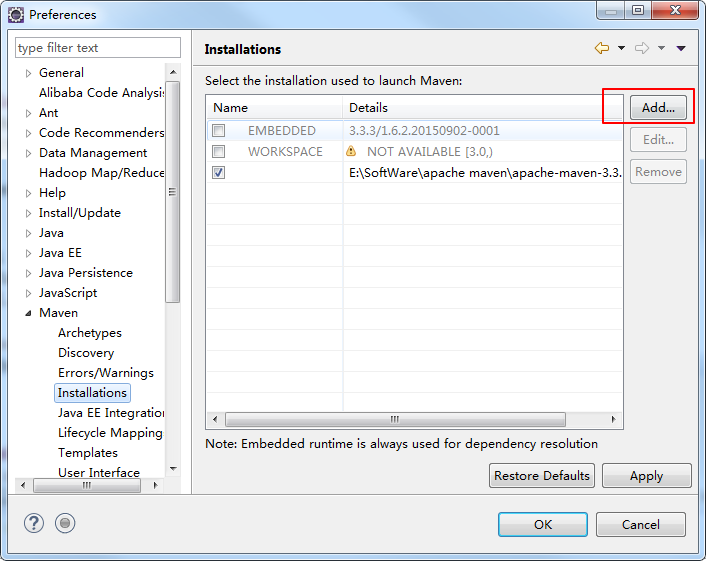

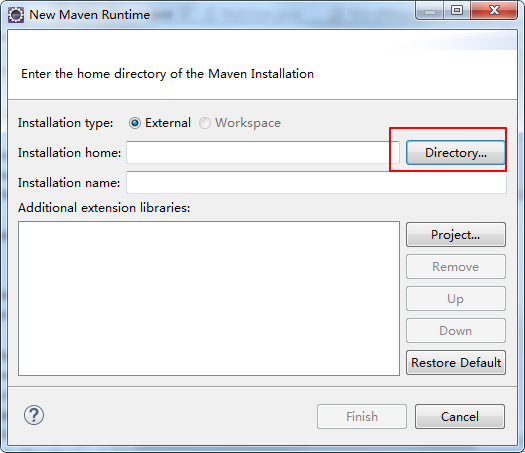

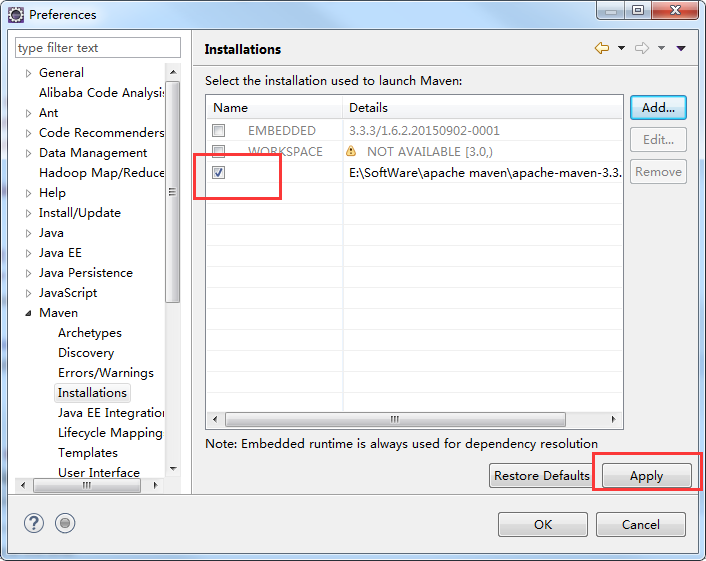

首先你在eclipse里把你本地安装的maven导进来

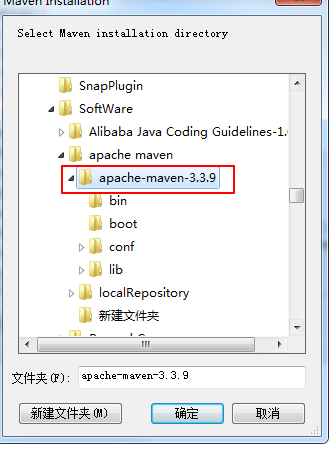

选择你本地安装的maven路径

勾选中你添加进来的maven

把本地安装的maven的setting文件添加进来

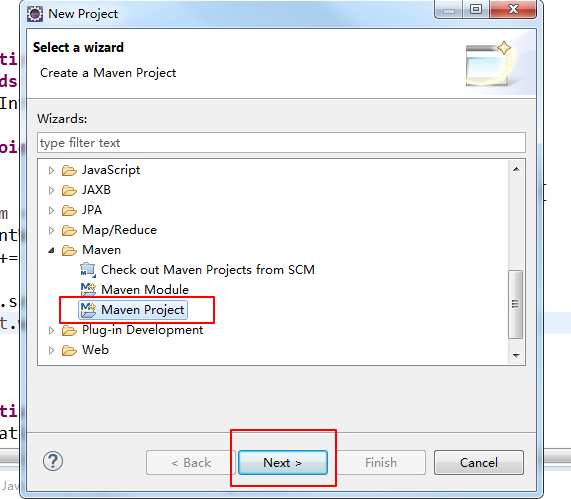

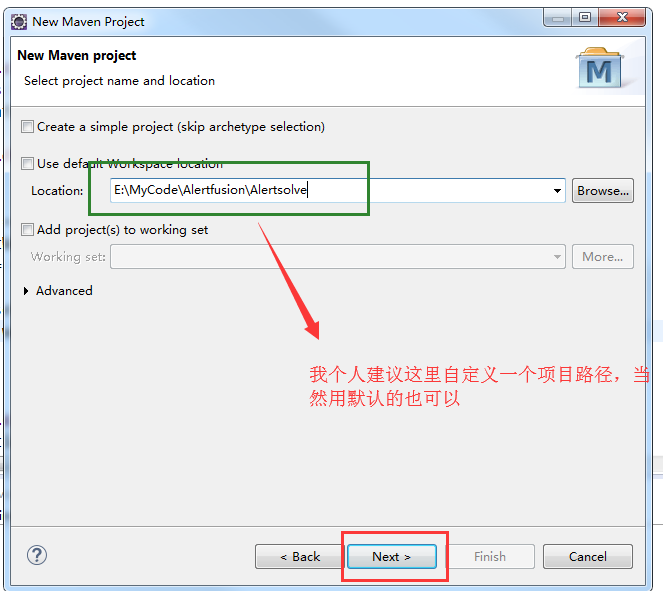

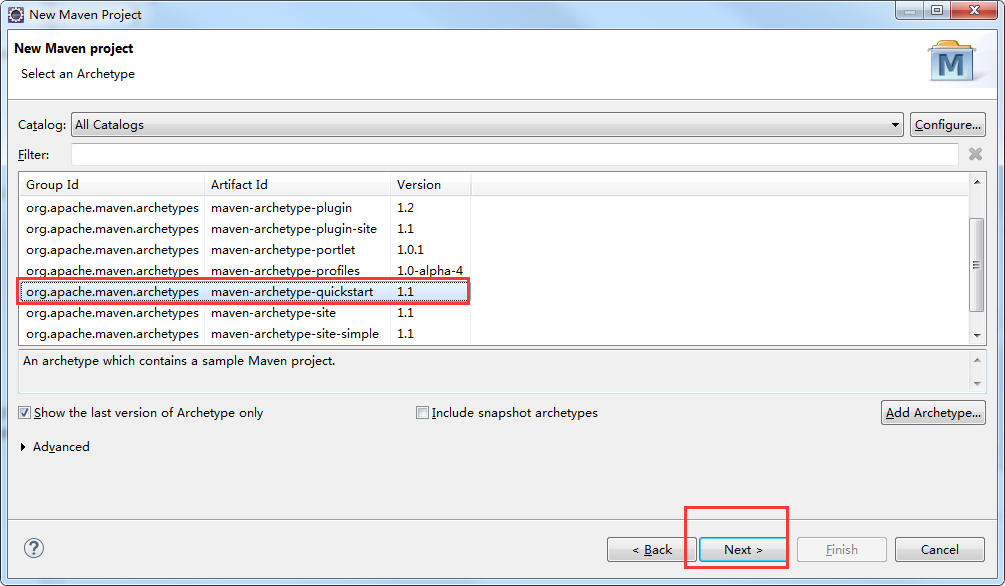

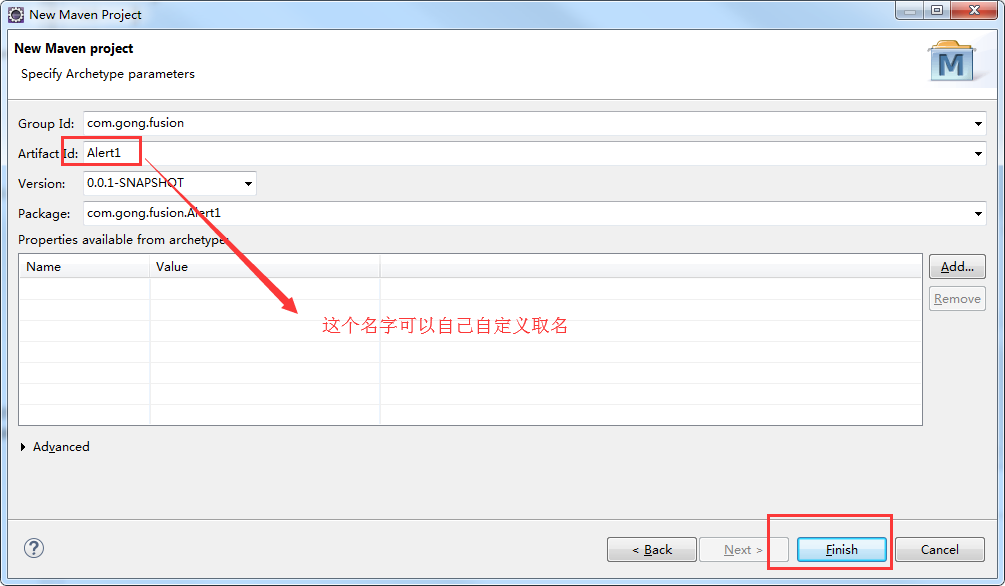

接下来创建一个maven项目

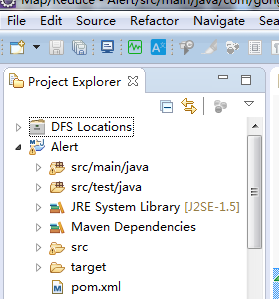

可以看到一个maven项目创建成功!!

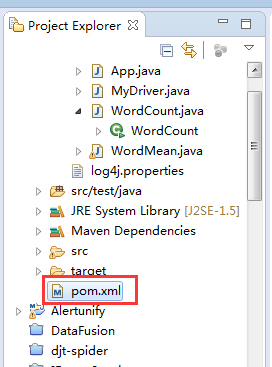

现在我们来配置pom.xml文件,把mapreduce程序运行的一些架包通过maven导进来

这个是我的项目文件可以给大家作参考

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion> <groupId>com.gong.fusion</groupId>

<artifactId>Alert</artifactId>

<version>0.0.1-SNAPSHOT</version>

<packaging>jar</packaging> <name>Alert</name>

<url>http://maven.apache.org</url> <properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

</properties> <dependencies>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>3.8.1</version>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.6.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>2.6.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>2.6.0</version>

</dependency>

<dependency>

<groupId>jdk.tools</groupId>

<artifactId>jdk.tools</artifactId>

<version>1.7</version>

<scope>system</scope>

<systemPath>${JAVA_HOME}/lib/tools.jar</systemPath>

</dependency>

<dependency>

<groupId>commons-lang</groupId>

<artifactId>commons-lang</artifactId>

<version>2.6</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-shade-plugin</artifactId>

<version>2.4.1</version>

<executions>

<!-- Run shade goal on package phase -->

<execution>

<phase>package</phase>

<goals>

<goal>shade</goal>

</goals>

<configuration>

<transformers>

<!-- add Main-Class to manifest file -->

<transformer implementation="org.apache.maven.plugins.shade.resource.ManifestResourceTransformer">

<mainClass>com.gong.fusion.Alert.MyDriver</mainClass> //这里是你自己项目的目录

</transformer>

</transformers>

<createDependencyReducedPom>false</createDependencyReducedPom>

</configuration>

</execution>

</executions>

</plugin>

</plugins>

</build>

</project>

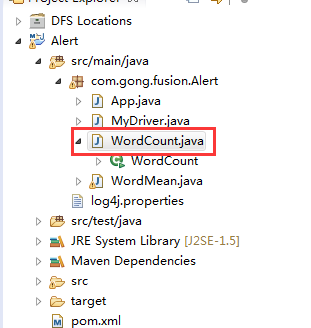

下面我们来写一个经典例子wordcount代码来实验一下

如何新建一个类来写我就不说了,我直接把代码放上来

package com.gong.fusion.Alert; import java.io.IOException;

import java.util.StringTokenizer; import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat; public class WordCount { public static class TokenizerMapper

extends Mapper<Object, Text, Text, IntWritable>{ private final static IntWritable one = new IntWritable(1);

private Text word = new Text(); public void map(Object key, Text value, Context context

) throws IOException, InterruptedException {

StringTokenizer itr = new StringTokenizer(value.toString());

while (itr.hasMoreTokens()) {

word.set(itr.nextToken());

context.write(word, one);

}

}

} public static class IntSumReducer

extends Reducer<Text,IntWritable,Text,IntWritable> {

private IntWritable result = new IntWritable(); public void reduce(Text key, Iterable<IntWritable> values,

Context context

) throws IOException, InterruptedException {

int sum = 0;

for (IntWritable val : values) {

sum += val.get();

}

result.set(sum);

context.write(key, result);

}

} public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();

Job job = Job.getInstance(conf, "word count");

job.setJarByClass(WordCount.class);

job.setMapperClass(TokenizerMapper.class);

job.setCombinerClass(IntSumReducer.class);

job.setReducerClass(IntSumReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

FileInputFormat.addInputPath(job, new Path("hdfs://cdh-master:9000/user/kfk/data/wc.input"));

FileOutputFormat.setOutputPath(job, new Path("hdfs://cdh-master:9000/data/user/gong/wordcount-out1"));

System.exit(job.waitForCompletion(true) ? 0 : 1);

}

}

我的eclipse是已经跟我的大数据集群HDFS连接的.

大家记得添加这个文件

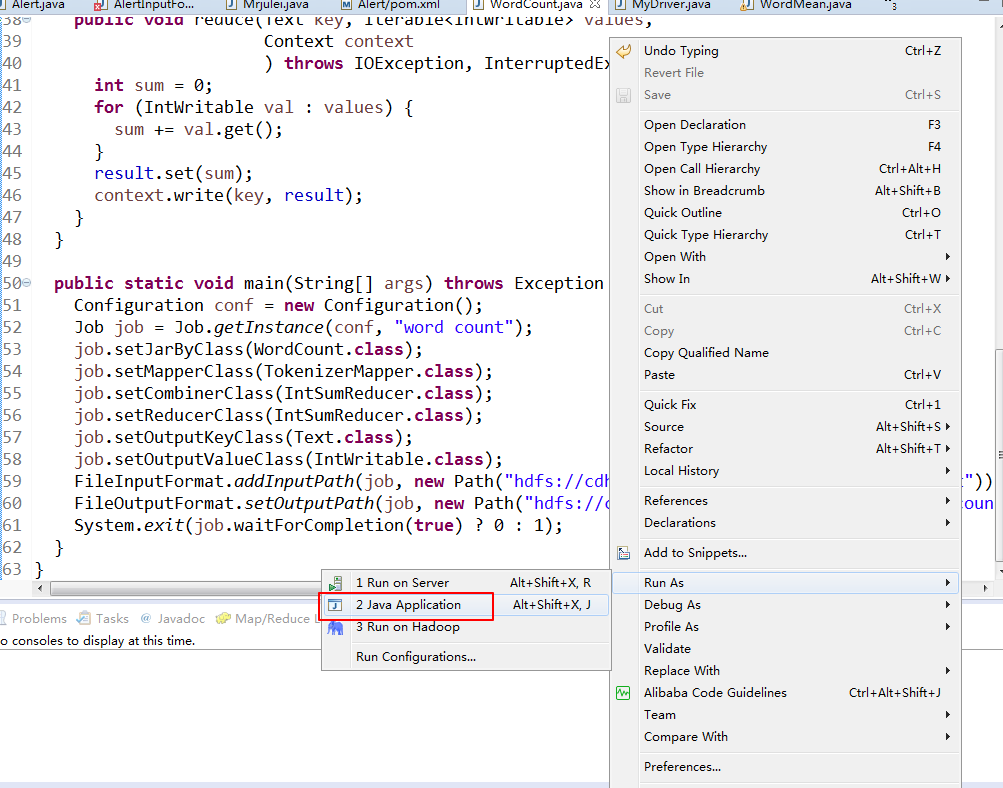

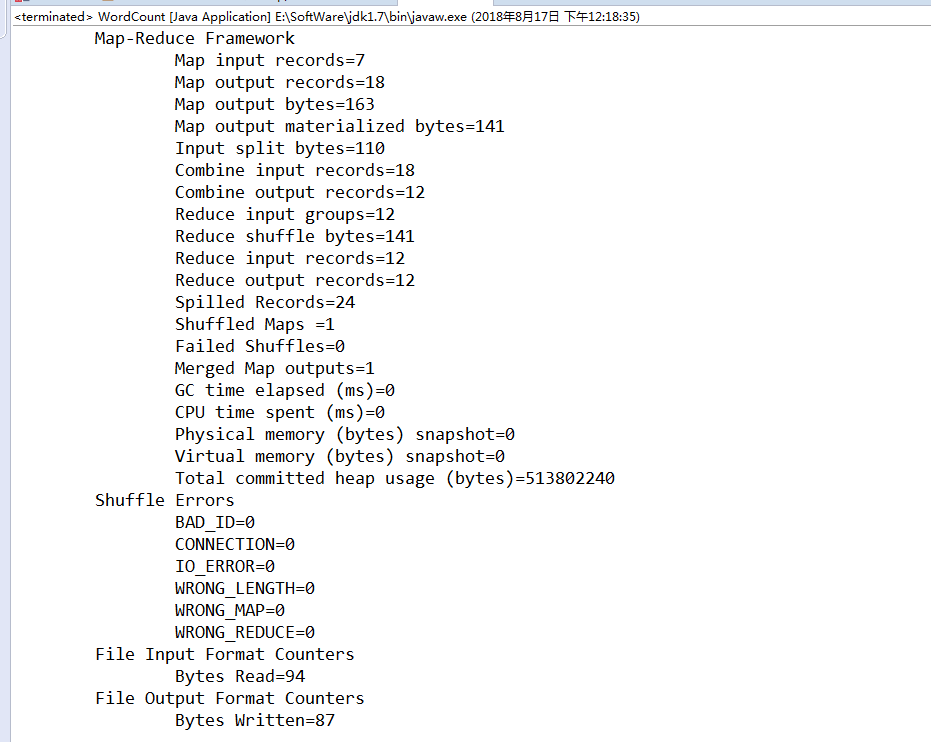

我们运行一下这个代码

运行成功!!!!!

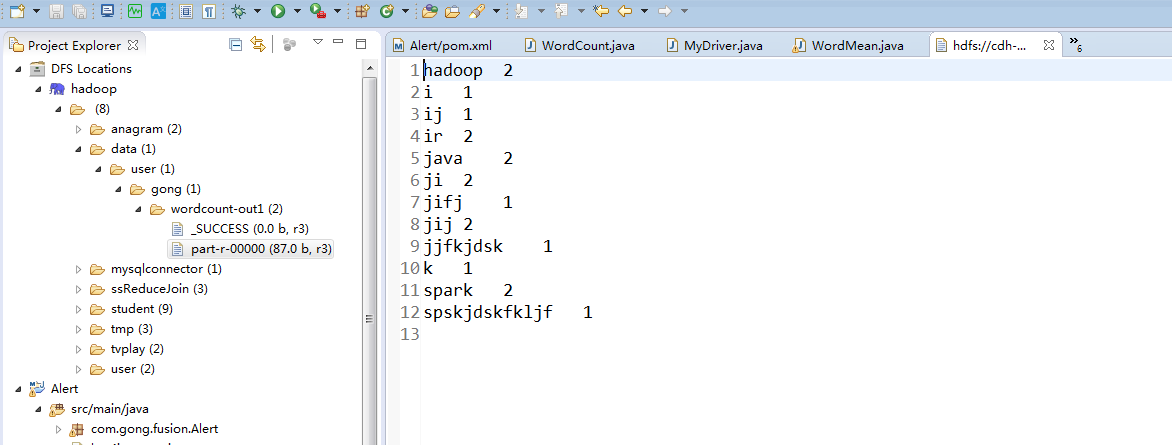

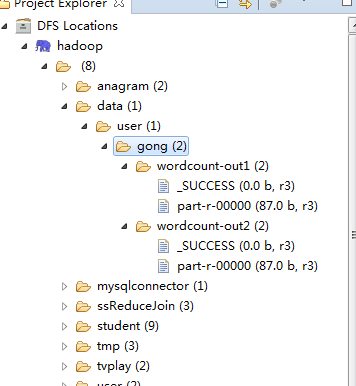

我们在hdfs上查看运行结果

这样们就实现了在maven 项目里面运行mapreduce程序了

接下来要讲的就是怎么管理多个mapreduce程序

我们新建一个MyDriver类用来管理多个mapreduce程序的类,和再创建另外一个mapreduce程序类wordmean

wordmean的内容跟wordcount是一样的,我就是把名字和输出路径改了一下!!!

当然在实际的开发中不会有这样的情况的,我是方便测试才这样做

package com.gong.fusion.Alert; import java.io.IOException;

import java.util.StringTokenizer; import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.Reducer.Context;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat; import com.gong.fusion.Alert.WordCount.IntSumReducer;

import com.gong.fusion.Alert.WordCount.TokenizerMapper; public class WordMean {

public static class TokenizerMapper

extends Mapper<Object, Text, Text, IntWritable>{ private final static IntWritable one = new IntWritable(1);

private Text word = new Text(); public void map(Object key, Text value, Context context

) throws IOException, InterruptedException {

StringTokenizer itr = new StringTokenizer(value.toString());

while (itr.hasMoreTokens()) {

word.set(itr.nextToken());

context.write(word, one);

}

}

} public static class IntSumReducer

extends Reducer<Text,IntWritable,Text,IntWritable> {

private IntWritable result = new IntWritable(); public void reduce(Text key, Iterable<IntWritable> values,

Context context

) throws IOException, InterruptedException {

int sum = 0;

for (IntWritable val : values) {

sum += val.get();

}

result.set(sum);

context.write(key, result);

}

} public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();

Job job = Job.getInstance(conf, "word count");

job.setJarByClass(WordCount.class);

job.setMapperClass(TokenizerMapper.class);

job.setCombinerClass(IntSumReducer.class);

job.setReducerClass(IntSumReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

FileInputFormat.addInputPath(job, new Path("hdfs://cdh-master:9000/user/kfk/data/wc.input"));

FileOutputFormat.setOutputPath(job, new Path("hdfs://cdh-master:9000/data/user/gong/wordcount-out2"));

System.exit(job.waitForCompletion(true) ? 0 : 1);

}

}

package com.gong.fusion.Alert;

import org.apache.hadoop.util.ProgramDriver;

public class MyDriver {

public static void main(String argv[]){

int exitCode = -1;

ProgramDriver pgd = new ProgramDriver();

try {

pgd.addClass("wordcount", WordCount.class,

"A map/reduce program that counts the words in the input files.");

pgd.addClass("wordmean", WordMean.class,

"A map/reduce program that counts the average length of the words in the input files."); exitCode = pgd.run(argv);

}

catch(Throwable e){

e.printStackTrace();

} System.exit(exitCode);

}

}

现在就通过Mydriver这个类来同时管理两个mapreduce代码了

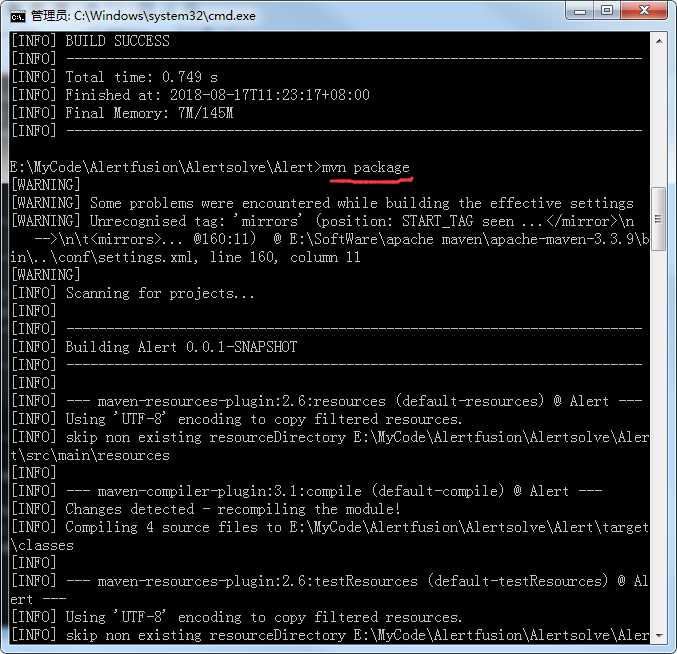

我们现在把程序通过maven打包放到大数据集群上面运行一下

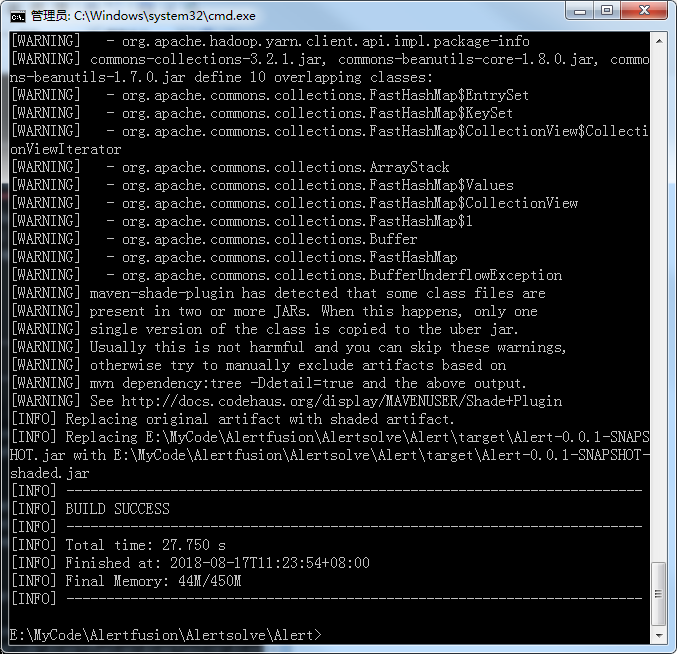

在我们的电脑打开cmd窗口,切换到你的项目路径下,用mvn clean清除一下

然后我们通过命令mvn package对项目进行打包

打包成功!!!

一般都会打包在target目录下的

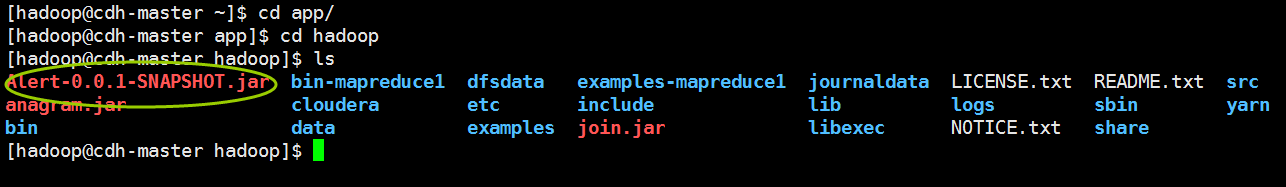

我们把这个包上传到我们的大数据集群上面去,怎么上传我就不多说了,用工具上传,或者用rz命令上传就可以了

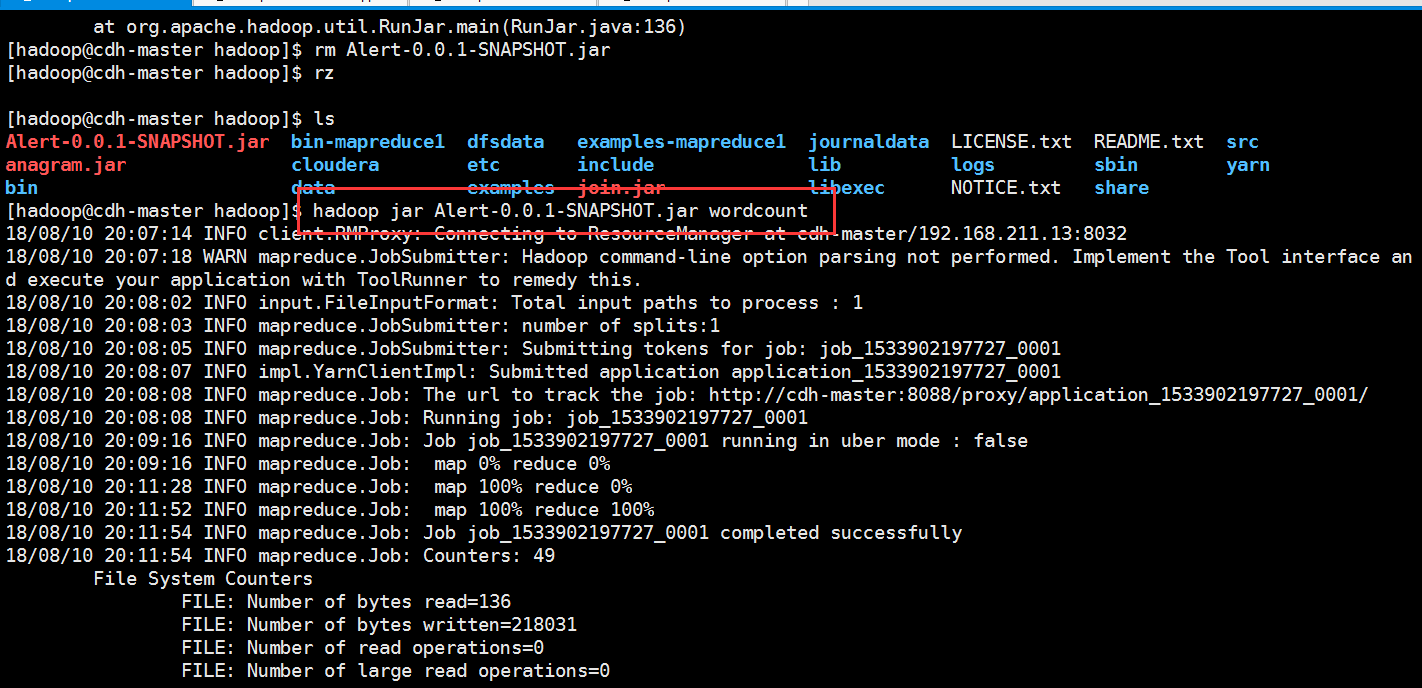

我们在集群上运行一下

我们直接在代码包后面加上其中一个mapreduce类的别名就可以了,这个别名在Mydiver类里面定义的

可以看到我们对两个不同的mapreduce都起了不同的别名

下面我们看看运行的结果

[hadoop@cdh-master hadoop]$ hadoop jar Alert-0.0.1-SNAPSHOT.jar wordcount

18/08/10 20:07:14 INFO client.RMProxy: Connecting to ResourceManager at cdh-master/192.168.211.13:8032

18/08/10 20:07:18 WARN mapreduce.JobSubmitter: Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.

18/08/10 20:08:02 INFO input.FileInputFormat: Total input paths to process : 1

18/08/10 20:08:03 INFO mapreduce.JobSubmitter: number of splits:1

18/08/10 20:08:05 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1533902197727_0001

18/08/10 20:08:07 INFO impl.YarnClientImpl: Submitted application application_1533902197727_0001

18/08/10 20:08:08 INFO mapreduce.Job: The url to track the job: http://cdh-master:8088/proxy/application_1533902197727_0001/

18/08/10 20:08:08 INFO mapreduce.Job: Running job: job_1533902197727_0001

18/08/10 20:09:16 INFO mapreduce.Job: Job job_1533902197727_0001 running in uber mode : false

18/08/10 20:09:16 INFO mapreduce.Job: map 0% reduce 0%

18/08/10 20:11:28 INFO mapreduce.Job: map 100% reduce 0%

18/08/10 20:11:52 INFO mapreduce.Job: map 100% reduce 100%

18/08/10 20:11:54 INFO mapreduce.Job: Job job_1533902197727_0001 completed successfully

18/08/10 20:11:54 INFO mapreduce.Job: Counters: 49

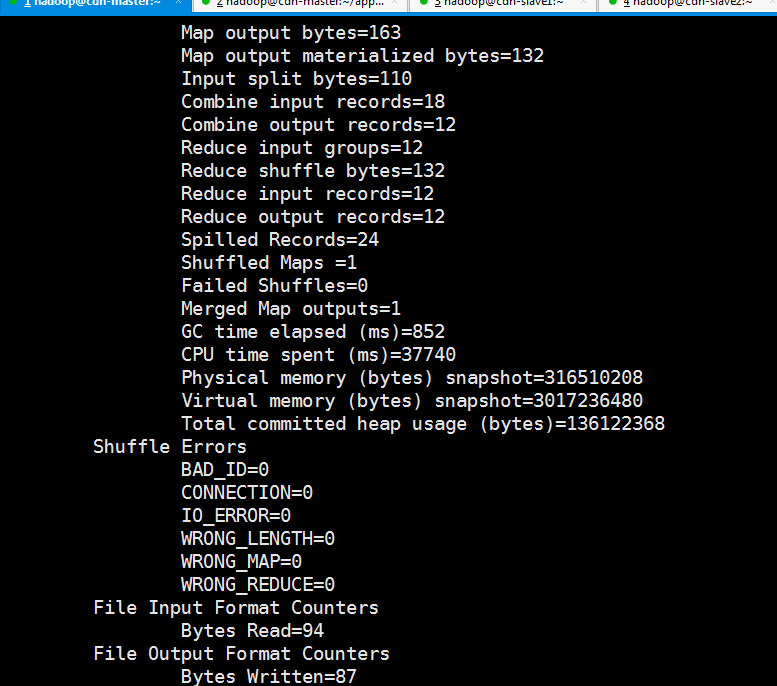

File System Counters

FILE: Number of bytes read=136

FILE: Number of bytes written=218031

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=204

HDFS: Number of bytes written=87

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=118978

Total time spent by all reduces in occupied slots (ms)=20993

Total time spent by all map tasks (ms)=118978

Total time spent by all reduce tasks (ms)=20993

Total vcore-seconds taken by all map tasks=118978

Total vcore-seconds taken by all reduce tasks=20993

Total megabyte-seconds taken by all map tasks=121833472

Total megabyte-seconds taken by all reduce tasks=21496832

Map-Reduce Framework

Map input records=7

Map output records=18

Map output bytes=163

Map output materialized bytes=132

Input split bytes=110

Combine input records=18

Combine output records=12

Reduce input groups=12

Reduce shuffle bytes=132

Reduce input records=12

Reduce output records=12

Spilled Records=24

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=852

CPU time spent (ms)=37740

Physical memory (bytes) snapshot=316510208

Virtual memory (bytes) snapshot=3017236480

Total committed heap usage (bytes)=136122368

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=94

File Output Format Counters

Bytes Written=87

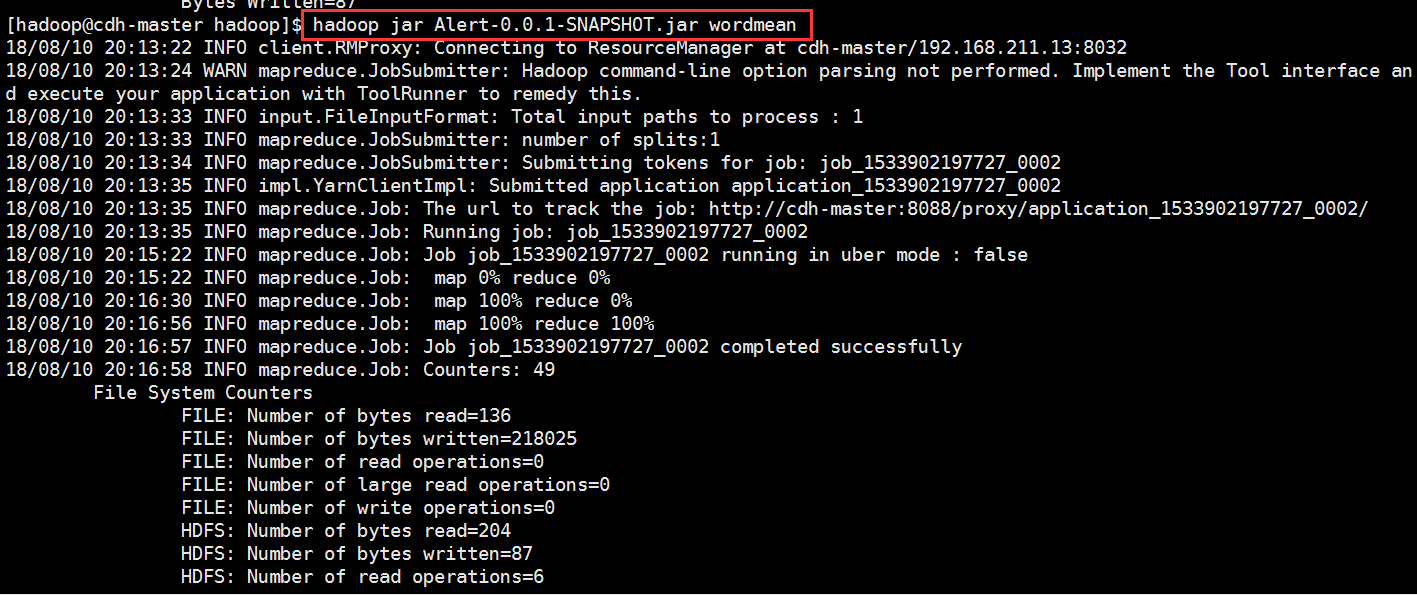

我们运行一下另外一个mapreduce程序

[hadoop@cdh-master hadoop]$ hadoop jar Alert-0.0.1-SNAPSHOT.jar wordmean

18/08/10 20:13:22 INFO client.RMProxy: Connecting to ResourceManager at cdh-master/192.168.211.13:8032

18/08/10 20:13:24 WARN mapreduce.JobSubmitter: Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.

18/08/10 20:13:33 INFO input.FileInputFormat: Total input paths to process : 1

18/08/10 20:13:33 INFO mapreduce.JobSubmitter: number of splits:1

18/08/10 20:13:34 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1533902197727_0002

18/08/10 20:13:35 INFO impl.YarnClientImpl: Submitted application application_1533902197727_0002

18/08/10 20:13:35 INFO mapreduce.Job: The url to track the job: http://cdh-master:8088/proxy/application_1533902197727_0002/

18/08/10 20:13:35 INFO mapreduce.Job: Running job: job_1533902197727_0002

18/08/10 20:15:22 INFO mapreduce.Job: Job job_1533902197727_0002 running in uber mode : false

18/08/10 20:15:22 INFO mapreduce.Job: map 0% reduce 0%

18/08/10 20:16:30 INFO mapreduce.Job: map 100% reduce 0%

18/08/10 20:16:56 INFO mapreduce.Job: map 100% reduce 100%

18/08/10 20:16:57 INFO mapreduce.Job: Job job_1533902197727_0002 completed successfully

18/08/10 20:16:58 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=136

FILE: Number of bytes written=218025

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=204

HDFS: Number of bytes written=87

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=65084

Total time spent by all reduces in occupied slots (ms)=23726

Total time spent by all map tasks (ms)=65084

Total time spent by all reduce tasks (ms)=23726

Total vcore-seconds taken by all map tasks=65084

Total vcore-seconds taken by all reduce tasks=23726

Total megabyte-seconds taken by all map tasks=66646016

Total megabyte-seconds taken by all reduce tasks=24295424

Map-Reduce Framework

Map input records=7

Map output records=18

Map output bytes=163

Map output materialized bytes=132

Input split bytes=110

Combine input records=18

Combine output records=12

Reduce input groups=12

Reduce shuffle bytes=132

Reduce input records=12

Reduce output records=12

Spilled Records=24

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=493

CPU time spent (ms)=8170

Physical memory (bytes) snapshot=312655872

Virtual memory (bytes) snapshot=3007705088

Total committed heap usage (bytes)=150081536

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=94

File Output Format Counters

Bytes Written=87

[hadoop@cdh-master hadoop]$

可以看到两个不同的输出路径上,是两个程序分别运行的结果

如何在maven项目里面编写mapreduce程序以及一个maven项目里面管理多个mapreduce程序的更多相关文章

- Maven(三)使用 IDEA 创建一个 Maven 项目

利用 IDEA 创建一个 Maven 项目 创建 Maven 项目 选择 File --> New --> Project 选中 Maven 填写项目信息 选择工作空间 目录结构 ├─sr ...

- Maven 系列 二 :Maven 常用命令,手动创建第一个 Maven 项目【转】

1.根据 Maven 的约定,我们在D盘根目录手动创建如下目录及文件结构: 2.打开 pom.xml 文件,添加如下内容: <project xmlns="http://maven.a ...

- 微信小程序创建一个新项目

1. 新建一个文件夹. 2. 打开微信小程序开发工具,导入新建文件夹:然后输入创建的appId:会自动生成一个project.config.json,打开这个文件,会看到appid这个字段. 3.可以 ...

- Maven 系列 二 :Maven 常用命令,手动创建第一个 Maven 项目

1.根据 Maven 的约定,我们在D盘根目录手动创建如下目录及文件结构: 2.打开 pom.xml 文件,添加如下内容: 1 <project xmlns="http://maven ...

- Maven入门指南② :Maven 常用命令,手动创建第一个 Maven 项目

1.根据 Maven 的约定,我们在D盘根目录手动创建如下目录及文件结构: 2.打开pom.xml文件,添加如下内容: <project xmlns="http://maven.apa ...

- Web —— java web 项目 Tomcat 的配置 与 第一个web 项目创建

目录: 0.前言 1.Tomcat的配置 2.第一个Web 项目 0.前言 刚刚开始接触web开发,了解的也不多,在这里记录一下我的第一个web项目启动的过程.网上教程很多,使用的java IDE 好 ...

- Maven(一)如何用Eclipse创建一个Maven项目

1.什么是Maven Apache Maven 是一个项目管理和整合工具.基于工程对象模型(POM)的概念,通过一个中央信息管理模块,Maven 能够管理项目的构建.报告和文档. Maven工程结构和 ...

- maven安装配置及使用maven创建一个web项目

今天开始学习使用maven,现在把学习过程中的资料整理在这边. 第一部分.maven安装和配置. http://jingyan.baidu.com/article/295430f136e8e00c7e ...

- eclipse中创建一个maven项目

1.什么是Maven Apache Maven 是一个项目管理和整合工具.基于工程对象模型(POM)的概念,通过一个中央信息管理模块,Maven 能够管理项目的构建.报告和文档. Maven工程结构和 ...

随机推荐

- C# Monitor的Wait和Pulse方法使用详解

[转载]http://blog.csdn.net/qqsttt/article/details/24777553 Monitor的Wait和Pulse方法在线程的同步锁使用中是比较复杂的,理解稍微困难 ...

- mysql之 误用SECONDS_BEHIND_MASTER衡量MYSQL主备的延迟时间

链接:http://www.woqutech.com/?p=1116 MySQL 本身通过 show slave status 提供了 Seconds_Behind_Master ,用于衡量主备之间的 ...

- href="javacript:;" href="javacript:void(0);" href="#"区别。。。

一.href="javacript:;" 这种用法不正确,这么用的话会出现浏览器访问“javascript:;”这个地址的现象: 二.href="javacript:v ...

- eclipse卡死在search for main types 20 files to index

run as application时,提示search for main types 20 files to index (*/*/*.jar)某个maven依赖jar出了问题,找不到main方法 ...

- C# 构造方法...

Class1.cs using System; using System.Collections.Generic; using System.Linq; using System.Text; usin ...

- ASP.NET AJAX入门系列(7):使用客户端脚本对UpdateProgress编程

在本篇文章中,我们将通过编写JavaScript来使用客户端行为扩展UpdateProgress控件,客户端代码将使用ASP.NET AJAX Library中的PageRequestManager, ...

- Gradle详细解析***

前言 对于Android工程师来说编译/打包等问题立即就成痛点了.一个APP有多个版本,Release版.Debug版.Test版.甚至针对不同APP Store都有不同的版本.在以前ROM的环境下, ...

- DS二叉树--叶子数量

题目描述 计算一颗二叉树包含的叶子结点数量. 提示:叶子是指它的左右孩子为空. 建树方法采用“先序遍历+空树用0表示”的方法,即给定一颗二叉树的先序遍历的结果为AB0C00D00,其中空节点用字符‘0 ...

- [蓝桥杯]ALGO-16.算法训练_进制转换

问题描述 我们可以用这样的方式来表示一个十进制数: 将每个阿拉伯数字乘以一个以该数字所处位置的(值减1)为指数,以10为底数的幂之和的形式.例如:123可表示为 1*102+2*101+3*100这样 ...

- 【AMQ】之JMS概念

1.JMS(Java Message Service)Java消息服务,是Java20几种技术其中之一 2.JMS规范定义了Java中访问消息中间件的接口,但是没有给实现,这个实现就是由第三方使用者来 ...