MapReduce Programming Series, Part 2: Computing Average Scores

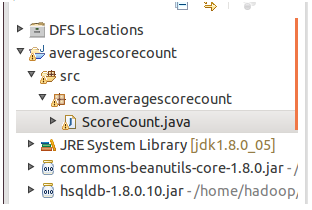

1. Project name:

2. Program code:

    package com.averagescorecount;

    import java.io.IOException;
    import java.util.Iterator;
    import java.util.StringTokenizer;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class ScoreCount {

        /*
         * The mapper's input has already been processed by the InputFormat.
         * The default, TextInputFormat, divides the input files into InputSplits
         * and uses LineRecordReader to turn each split into <key, value> pairs:
         * the key is the byte offset of the line within the file, and the value
         * is the text of that line.
         */
        public static class Map extends Mapper<LongWritable, Text, Text, IntWritable> {

            @Override
            public void map(LongWritable key, Text value, Context context)
                    throws IOException, InterruptedException {
                String line = value.toString();
                // Debug output; it shows up in the run log in section 4.
                System.out.println("line:" + line);
                System.out.println("TokenizerMapper.map...");
                System.out.println("Map key:" + key.toString() + " Map value:" + value.toString());

                // Split the input into lines. Each value is already a single line,
                // so this loop runs at most once, and not at all for an empty line.
                StringTokenizer tokenizerArticle = new StringTokenizer(line, "\n");

                // Process each line.
                while (tokenizerArticle.hasMoreTokens()) {
                    // Split the line on whitespace.
                    StringTokenizer tokenizerLine = new StringTokenizer(tokenizerArticle.nextToken());
                    if (tokenizerLine.countTokens() < 2) {
                        continue; // skip lines that lack a name or a score
                    }
                    String strName = tokenizerLine.nextToken();  // student name
                    String strScore = tokenizerLine.nextToken(); // score

                    Text name = new Text(strName);
                    int scoreInt = Integer.parseInt(strScore);
                    System.out.println("name:" + name + " scoreInt:" + scoreInt);
                    context.write(name, new IntWritable(scoreInt));
                    System.out.println("context_map:" + context.toString());
                }
                System.out.println("context_map_111:" + context.toString());
            }
        }

        public static class Reduce extends Reducer<Text, IntWritable, Text, IntWritable> {

            @Override
            public void reduce(Text key, Iterable<IntWritable> values, Context context)
                    throws IOException, InterruptedException {
                int sum = 0;
                int count = 0;
                int score = 0;
                System.out.println("reducer...");
                System.out.println("Reducer key:" + key.toString() + " Reducer values:" + values.toString());

                // Iterate over all scores recorded for this name.
                Iterator<IntWritable> iterator = values.iterator();
                while (iterator.hasNext()) {
                    score = iterator.next().get();
                    System.out.println("score:" + score);
                    sum += score;
                    count++;
                }

                // Integer division: the fractional part of the average is dropped.
                int average = sum / count;
                System.out.println("key" + key + " average:" + average);
                context.write(key, new IntWritable(average));
                System.out.println("context_reducer:" + context.toString());
            }
        }

        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            Job job = new Job(conf, "score count");
            job.setJarByClass(ScoreCount.class);
            job.setMapperClass(Map.class);
            // Caution: reusing the averaging reducer as a combiner is only correct
            // when every score for a name passes through a single combine call,
            // as in the one-split run logged in section 4. In general, an average
            // of partial averages is not the overall average.
            job.setCombinerClass(Reduce.class);
            job.setReducerClass(Reduce.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);
            FileInputFormat.addInputPath(job, new Path(args[0]));
            FileOutputFormat.setOutputPath(job, new Path(args[1]));
            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }
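The driver registers `Reduce` as the combiner through `job.setCombinerClass(Reduce.class)`. That is safe only when a single combine call sees every score for a name. If the scores for one student were spread over several map tasks, each combiner would emit a partial average and the reducer would average those averages. The sketch below illustrates the difference in plain Java, without Hadoop. The class `CombinerAverageDemo`, its helper `SumCount`, and the way the three scores are split into two batches are all assumptions made for illustration, not part of the original job.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical demo class; it only mimics what a combiner and reducer would compute.
final class CombinerAverageDemo {

    /** A partial aggregate that can be merged safely: a sum plus the number of values behind it. */
    static final class SumCount {
        final int sum;
        final int count;

        SumCount(int sum, int count) {
            this.sum = sum;
            this.count = count;
        }

        SumCount merge(SumCount other) {
            return new SumCount(sum + other.sum, count + other.count);
        }

        int average() {
            return sum / count; // integer division, like the reducer in this post
        }
    }

    /** What a combiner-safe step would emit for one batch of scores. */
    static SumCount combine(List<Integer> scores) {
        int sum = 0;
        for (int s : scores) {
            sum += s;
        }
        return new SumCount(sum, scores.size());
    }

    /** What an averaging reducer does when reused as a combiner: collapse a batch to its average. */
    static int averageOf(List<Integer> scores) {
        return combine(scores).average();
    }

    public static void main(String[] args) {
        // Assumed split: one student's three scores arrive in two separate map tasks.
        List<Integer> batchA = Arrays.asList(90, 96);
        List<Integer> batchB = Arrays.asList(48);

        int averageOfAverages = averageOf(Arrays.asList(averageOf(batchA), averageOf(batchB)));
        int mergedAverage = combine(batchA).merge(combine(batchB)).average();

        System.out.println("average of averages: " + averageOfAverages); // 70
        System.out.println("merged sum/count:    " + mergedAverage);     // 78
    }
}
```

In a real job, the same idea would mean having the mapper and combiner emit a value that carries both sum and count (for example a custom `Writable`), and dividing only in the reducer. That wiring is left out of this sketch.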

3. Input data:

    陈洲立 67
    陈东伟 90
    李宁 87
    杨森 86
    陈东奇 78
    谭果 94
    盖盖 83
    陈洲立 68
    陈东伟 96
    李宁 82
    杨森 85
    陈东奇 72
    谭果 97
    盖盖 82
    陈洲立 46
    陈东伟 48
    李宁 67
    杨森 33
    陈东奇 28
    谭果 78
    盖盖 87
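The key passed to each `map` call is the byte offset at which the line starts in the input file, which is why the run log shows keys such as 0, 13, 26 and 36 rather than line numbers. The following sketch reproduces those numbers for the first four lines seen in the log. It assumes the file is UTF-8 encoded with `\n` line endings; `LineOffsetDemo` and `startOffsets` are hypothetical names, not Hadoop APIs.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper; it mimics how line start offsets accumulate in a text file.
final class LineOffsetDemo {

    /** Returns the byte offset at which each line starts, assuming UTF-8 and "\n" line endings. */
    static List<Long> startOffsets(List<String> lines) {
        List<Long> offsets = new ArrayList<>();
        long position = 0;
        for (String line : lines) {
            offsets.add(position);
            // Each CJK character here takes 3 bytes in UTF-8; add 1 byte for the newline.
            position += line.getBytes(StandardCharsets.UTF_8).length + 1;
        }
        return offsets;
    }

    public static void main(String[] args) {
        // The first four lines handed to the mapper, as recorded in the run log.
        List<String> lines = Arrays.asList("陈洲立 67", "陈东伟 90", "李宁 87", "杨森 86");
        System.out.println(startOffsets(lines)); // [0, 13, 26, 36]
    }
}
```

A three-character name with a two-digit score occupies 9 + 1 + 2 + 1 = 13 bytes, and a two-character name occupies 10, which matches the gaps between consecutive keys in the log.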

4. Run log:

14/09/20 19:31:16 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

14/09/20 19:31:16 WARN mapred.JobClient: Use GenericOptionsParser for parsing the arguments. Applications should implement Tool for the same.

14/09/20 19:31:16 WARN mapred.JobClient: No job jar file set. User classes may not be found. See JobConf(Class) or JobConf#setJar(String).

14/09/20 19:31:16 INFO input.FileInputFormat: Total input paths to process : 1

14/09/20 19:31:16 WARN snappy.LoadSnappy: Snappy native library not loaded

14/09/20 19:31:16 INFO mapred.JobClient: Running job: job_local_0001

14/09/20 19:31:16 INFO util.ProcessTree: setsid exited with exit code 0

14/09/20 19:31:16 INFO mapred.Task: Using ResourceCalculatorPlugin : org.apache.hadoop.util.LinuxResourceCalculatorPlugin@4080b02f

14/09/20 19:31:16 INFO mapred.MapTask: io.sort.mb = 100

14/09/20 19:31:16 INFO mapred.MapTask: data buffer = 79691776/99614720

14/09/20 19:31:16 INFO mapred.MapTask: record buffer = 262144/327680

line:陈洲立 67

TokenizerMapper.map...

Map key:0 Map value:陈洲立 67

name:陈洲立 scoreInt:67

context_map:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

context_map_111:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

line:陈东伟 90

TokenizerMapper.map...

Map key:13 Map value:陈东伟 90

name:陈东伟 scoreInt:90

context_map:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

context_map_111:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

line:李宁 87

TokenizerMapper.map...

Map key:26 Map value:李宁 87

name:李宁 scoreInt:87

context_map:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

context_map_111:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

line:杨森 86

TokenizerMapper.map...

Map key:36 Map value:杨森 86

name:杨森 scoreInt:86

context_map:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

context_map_111:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

line:陈东奇 78

TokenizerMapper.map...

Map key:46 Map value:陈东奇 78

name:陈东奇 scoreInt:78

context_map:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

context_map_111:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

line:谭果 94

TokenizerMapper.map...

Map key:59 Map value:谭果 94

name:谭果 scoreInt:94

context_map:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

context_map_111:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

line:盖盖 83

TokenizerMapper.map...

Map key:69 Map value:盖盖 83

name:盖盖 scoreInt:83

context_map:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

context_map_111:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

line:陈洲立 68

TokenizerMapper.map...

Map key:79 Map value:陈洲立 68

name:陈洲立 scoreInt:68

context_map:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

context_map_111:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

line:陈东伟 96

TokenizerMapper.map...

Map key:92 Map value:陈东伟 96

name:陈东伟 scoreInt:96

context_map:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

context_map_111:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

line:李宁 82

TokenizerMapper.map...

Map key:105 Map value:李宁 82

name:李宁 scoreInt:82

context_map:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

context_map_111:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

line:杨森 85

TokenizerMapper.map...

Map key:115 Map value:杨森 85

name:杨森 scoreInt:85

context_map:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

context_map_111:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

line:陈东奇 72

TokenizerMapper.map...

Map key:125 Map value:陈东奇 72

name:陈东奇 scoreInt:72

context_map:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

context_map_111:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

line:谭果 97

TokenizerMapper.map...

Map key:138 Map value:谭果 97

name:谭果 scoreInt:97

context_map:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

context_map_111:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

line:盖盖 82

TokenizerMapper.map...

Map key:148 Map value:盖盖 82

name:盖盖 scoreInt:82

context_map:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

context_map_111:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

line:陈洲立 46

TokenizerMapper.map...

Map key:158 Map value:陈洲立 46

name:陈洲立 scoreInt:46

context_map:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

context_map_111:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

line:陈东伟 48

TokenizerMapper.map...

Map key:171 Map value:陈东伟 48

name:陈东伟 scoreInt:48

context_map:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

context_map_111:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

line:李宁 67

TokenizerMapper.map...

Map key:184 Map value:李宁 67

name:李宁 scoreInt:67

context_map:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

context_map_111:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

line:杨森 33

TokenizerMapper.map...

Map key:194 Map value:杨森 33

name:杨森 scoreInt:33

context_map:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

context_map_111:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

line:陈东奇 28

TokenizerMapper.map...

Map key:204 Map value:陈东奇 28

name:陈东奇 scoreInt:28

context_map:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

context_map_111:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

line:谭果 78

TokenizerMapper.map...

Map key:217 Map value:谭果 78

name:谭果 scoreInt:78

context_map:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

context_map_111:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

line:盖盖 87

TokenizerMapper.map...

Map key:227 Map value:盖盖 87

name:盖盖 scoreInt:87

context_map:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

context_map_111:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

line:

TokenizerMapper.map...

Map key:237 Map value:

context_map_111:org.apache.hadoop.mapreduce.Mapper$Context@d4cf771

14/09/20 19:31:16 INFO mapred.MapTask: Starting flush of map output

reducer...

Reducer key:李宁 Reducer values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@63dbbdf2

score:82

score:87

score:67

key李宁 average:78

context_reducer:org.apache.hadoop.mapreduce.Reducer$Context@3d32487

reducer...

Reducer key:杨森 Reducer values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@63dbbdf2

score:33

score:86

score:85

key杨森 average:68

context_reducer:org.apache.hadoop.mapreduce.Reducer$Context@3d32487

reducer...

Reducer key:盖盖 Reducer values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@63dbbdf2

score:87

score:83

score:82

key盖盖 average:84

context_reducer:org.apache.hadoop.mapreduce.Reducer$Context@3d32487

reducer...

Reducer key:谭果 Reducer values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@63dbbdf2

score:94

score:97

score:78

key谭果 average:89

context_reducer:org.apache.hadoop.mapreduce.Reducer$Context@3d32487

reducer...

Reducer key:陈东伟 Reducer values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@63dbbdf2

score:48

score:90

score:96

key陈东伟 average:78

context_reducer:org.apache.hadoop.mapreduce.Reducer$Context@3d32487

reducer...

Reducer key:陈东奇 Reducer values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@63dbbdf2

score:72

score:78

score:28

key陈东奇 average:59

context_reducer:org.apache.hadoop.mapreduce.Reducer$Context@3d32487

reducer...

Reducer key:陈洲立 Reducer values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@63dbbdf2

score:68

score:67

score:46

key陈洲立 average:60

context_reducer:org.apache.hadoop.mapreduce.Reducer$Context@3d32487

14/09/20 19:31:16 INFO mapred.MapTask: Finished spill 0

14/09/20 19:31:16 INFO mapred.Task: Task:attempt_local_0001_m_000000_0 is done. And is in the process of commiting

14/09/20 19:31:17 INFO mapred.JobClient: map 0% reduce 0%

14/09/20 19:31:19 INFO mapred.LocalJobRunner:

14/09/20 19:31:19 INFO mapred.Task: Task 'attempt_local_0001_m_000000_0' done.

14/09/20 19:31:19 INFO mapred.Task: Using ResourceCalculatorPlugin : org.apache.hadoop.util.LinuxResourceCalculatorPlugin@5fc24d33

14/09/20 19:31:19 INFO mapred.LocalJobRunner:

14/09/20 19:31:19 INFO mapred.Merger: Merging 1 sorted segments

14/09/20 19:31:19 INFO mapred.Merger: Down to the last merge-pass, with 1 segments left of total size: 102 bytes

14/09/20 19:31:19 INFO mapred.LocalJobRunner:

reducer...

Reducer key:李宁 Reducer values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@2407325d

score:78

key李宁 average:78

context_reducer:org.apache.hadoop.mapreduce.Reducer$Context@52403ee2

reducer...

Reducer key:杨森 Reducer values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@2407325d

score:68

key杨森 average:68

context_reducer:org.apache.hadoop.mapreduce.Reducer$Context@52403ee2

reducer...

Reducer key:盖盖 Reducer values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@2407325d

score:84

key盖盖 average:84

context_reducer:org.apache.hadoop.mapreduce.Reducer$Context@52403ee2

reducer...

Reducer key:谭果 Reducer values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@2407325d

score:89

key谭果 average:89

context_reducer:org.apache.hadoop.mapreduce.Reducer$Context@52403ee2

reducer...

Reducer key:陈东伟 Reducer values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@2407325d

score:78

key陈东伟 average:78

context_reducer:org.apache.hadoop.mapreduce.Reducer$Context@52403ee2

reducer...

Reducer key:陈东奇 Reducer values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@2407325d

score:59

key陈东奇 average:59

context_reducer:org.apache.hadoop.mapreduce.Reducer$Context@52403ee2

reducer...

Reducer key:陈洲立 Reducer values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@2407325d

score:60

key陈洲立 average:60

context_reducer:org.apache.hadoop.mapreduce.Reducer$Context@52403ee2

14/09/20 19:31:19 INFO mapred.Task: Task:attempt_local_0001_r_000000_0 is done. And is in the process of commiting

14/09/20 19:31:19 INFO mapred.LocalJobRunner:

14/09/20 19:31:19 INFO mapred.Task: Task attempt_local_0001_r_000000_0 is allowed to commit now

14/09/20 19:31:19 INFO output.FileOutputCommitter: Saved output of task 'attempt_local_0001_r_000000_0' to hdfs://localhost:9000/user/hadoop/score_output

14/09/20 19:31:20 INFO mapred.JobClient: map 100% reduce 0%

14/09/20 19:31:22 INFO mapred.LocalJobRunner: reduce > reduce

14/09/20 19:31:22 INFO mapred.Task: Task 'attempt_local_0001_r_000000_0' done.

14/09/20 19:31:23 INFO mapred.JobClient: map 100% reduce 100%

14/09/20 19:31:23 INFO mapred.JobClient: Job complete: job_local_0001

14/09/20 19:31:23 INFO mapred.JobClient: Counters: 22

14/09/20 19:31:23 INFO mapred.JobClient: Map-Reduce Framework

14/09/20 19:31:23 INFO mapred.JobClient: Spilled Records=14

14/09/20 19:31:23 INFO mapred.JobClient: Map output materialized bytes=106

14/09/20 19:31:23 INFO mapred.JobClient: Reduce input records=7

14/09/20 19:31:23 INFO mapred.JobClient: Virtual memory (bytes) snapshot=0

14/09/20 19:31:23 INFO mapred.JobClient: Map input records=22

14/09/20 19:31:23 INFO mapred.JobClient: SPLIT_RAW_BYTES=116

14/09/20 19:31:23 INFO mapred.JobClient: Map output bytes=258

14/09/20 19:31:23 INFO mapred.JobClient: Reduce shuffle bytes=0

14/09/20 19:31:23 INFO mapred.JobClient: Physical memory (bytes) snapshot=0

14/09/20 19:31:23 INFO mapred.JobClient: Reduce input groups=7

14/09/20 19:31:23 INFO mapred.JobClient: Combine output records=7

14/09/20 19:31:23 INFO mapred.JobClient: Reduce output records=7

14/09/20 19:31:23 INFO mapred.JobClient: Map output records=21

14/09/20 19:31:23 INFO mapred.JobClient: Combine input records=21

14/09/20 19:31:23 INFO mapred.JobClient: CPU time spent (ms)=0

14/09/20 19:31:23 INFO mapred.JobClient: Total committed heap usage (bytes)=408944640

14/09/20 19:31:23 INFO mapred.JobClient: File Input Format Counters

14/09/20 19:31:23 INFO mapred.JobClient: Bytes Read=238

14/09/20 19:31:23 INFO mapred.JobClient: FileSystemCounters

14/09/20 19:31:23 INFO mapred.JobClient: HDFS_BYTES_READ=476

14/09/20 19:31:23 INFO mapred.JobClient: FILE_BYTES_WRITTEN=81132

14/09/20 19:31:23 INFO mapred.JobClient: FILE_BYTES_READ=448

14/09/20 19:31:23 INFO mapred.JobClient: HDFS_BYTES_WRITTEN=79

14/09/20 19:31:23 INFO mapred.JobClient: File Output Format Counters

14/09/20 19:31:23 INFO mapred.JobClient: Bytes Written=79
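The averages in the log are whole numbers because the reducer divides one `int` by another, which discards the fractional part. The sketch below contrasts that with a floating-point average for the three scores logged for 李宁 (82, 87, 67). `AverageRoundingDemo` is a hypothetical class, and emitting a `double` in the real job would also require switching the output value type (for instance to `DoubleWritable`), which is not shown here.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Locale;

// Hypothetical demo; it compares integer and floating-point averaging.
final class AverageRoundingDemo {

    /** Integer average, as computed by the reducer in this post. */
    static int truncatedAverage(List<Integer> scores) {
        int sum = 0;
        for (int s : scores) {
            sum += s;
        }
        return sum / scores.size();
    }

    /** Floating-point average that keeps the fractional part. */
    static double exactAverage(List<Integer> scores) {
        int sum = 0;
        for (int s : scores) {
            sum += s;
        }
        return (double) sum / scores.size();
    }

    public static void main(String[] args) {
        List<Integer> scores = Arrays.asList(82, 87, 67); // sum is 236
        System.out.println(truncatedAverage(scores)); // 78
        System.out.println(String.format(Locale.ROOT, "%.2f", exactAverage(scores))); // 78.67
    }
}
```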

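5. Output:

Per the final reducer calls in the run log above, the job writes one name and its average per line, separated by a tab:

    李宁	78
    杨森	68
    盖盖	84
    谭果	89
    陈东伟	78
    陈东奇	59
    陈洲立	60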
