Spark Shuffle(二)Executor、Driver之间Shuffle结果消息传递、追踪(转载)

1. 前言

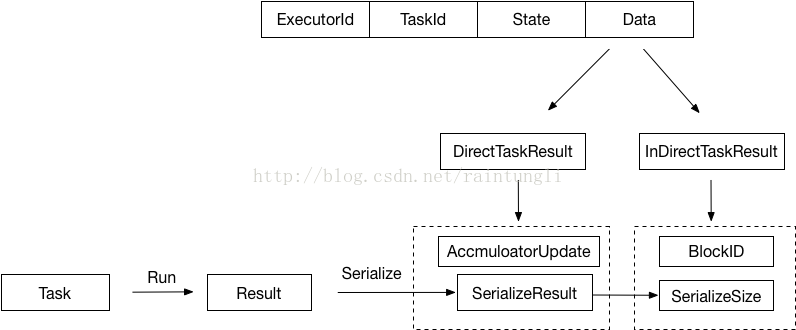

2. StatusUpdate消息

override def statusUpdate(taskId: Long, state: TaskState, data: ByteBuffer) {

val msg = StatusUpdate(executorId, taskId, state, data)

driver match {

case Some(driverRef) => driverRef.send(msg)

case None => logWarning(s"Drop $msg because has not yet connected to driver")

}

}

- ExecutorId: Executor自己的ID

- TaskId: task分配的ID

- State: Task的运行状态

LAUNCHING, RUNNING, FINISHED, FAILED, KILLED, LOST

- Data: 保存序列化的Result

2.1 Executor端发送

- 最大的返回结果大小,如果超过设定的最大返回结果时,返回的结果内容会被丢弃,只是返回序列化的InDirectTaskResult,里面包含着BlockID和序列化后的结果大小

spark.driver.maxResultSize

- 最大的直接返回结果大小:如果返回的结果大于最大的直接返回结果大小,小于最大的返回结果大小,采用了保存的折中的策略,将序列化DirectTaskResult保存到BlockManager中,关于BlockManager可以参考前面写的BlockManager系列,返回InDirectTaskResult,里面包含着BlockID和序列化的结果大小

spark.task.maxDirectResultSize

- 直接返回:如果返回的结果小于等于最大的直接返回结果大小,将直接将序列化的DirectTaskResult返回给Driver端

val serializedResult: ByteBuffer = {

if (maxResultSize > && resultSize > maxResultSize) {

logWarning(s"Finished $taskName (TID $taskId). Result is larger than maxResultSize " +

s"(${Utils.bytesToString(resultSize)} > ${Utils.bytesToString(maxResultSize)}), " +

s"dropping it.")

ser.serialize(new IndirectTaskResult[Any](TaskResultBlockId(taskId), resultSize))

} else if (resultSize > maxDirectResultSize) {

val blockId = TaskResultBlockId(taskId)

env.blockManager.putBytes(

blockId,

new ChunkedByteBuffer(serializedDirectResult.duplicate()),

StorageLevel.MEMORY_AND_DISK_SER)

logInfo(

s"Finished $taskName (TID $taskId). $resultSize bytes result sent via BlockManager)")

ser.serialize(new IndirectTaskResult[Any](blockId, resultSize))

} else {

logInfo(s"Finished $taskName (TID $taskId). $resultSize bytes result sent to driver")

serializedDirectResult

}

}

2.2 Driver端接收

Driver端处理StatusUpdate的消息的代码如下:

case StatusUpdate(executorId, taskId, state, data) =>

scheduler.statusUpdate(taskId, state, data.value)

if (TaskState.isFinished(state)) {

executorDataMap.get(executorId) match {

case Some(executorInfo) =>

executorInfo.freeCores += scheduler.CPUS_PER_TASK

makeOffers(executorId)

case None =>

// Ignoring the update since we don't know about the executor.

logWarning(s"Ignored task status update ($taskId state $state) " +

s"from unknown executor with ID $executorId")

}

}

scheduler实例是TaskSchedulerImpl.scala

if (TaskState.isFinished(state)) {

cleanupTaskState(tid)

taskSet.removeRunningTask(tid)

if (state == TaskState.FINISHED) {

taskResultGetter.enqueueSuccessfulTask(taskSet, tid, serializedData)

} else if (Set(TaskState.FAILED, TaskState.KILLED, TaskState.LOST).contains(state)) {

taskResultGetter.enqueueFailedTask(taskSet, tid, state, serializedData)

}

}

statusUpdate函数调用了enqueueSuccessfulTask方法

def enqueueSuccessfulTask(

taskSetManager: TaskSetManager,

tid: Long,

serializedData: ByteBuffer): Unit = {

getTaskResultExecutor.execute(new Runnable {

override def run(): Unit = Utils.logUncaughtExceptions {

try {

val (result, size) = serializer.get().deserialize[TaskResult[_]](serializedData) match {

case directResult: DirectTaskResult[_] =>

if (!taskSetManager.canFetchMoreResults(serializedData.limit())) {

return

}

// deserialize "value" without holding any lock so that it won't block other threads.

// We should call it here, so that when it's called again in

// "TaskSetManager.handleSuccessfulTask", it does not need to deserialize the value.

directResult.value(taskResultSerializer.get())

(directResult, serializedData.limit())

case IndirectTaskResult(blockId, size) =>

if (!taskSetManager.canFetchMoreResults(size)) {

// dropped by executor if size is larger than maxResultSize

sparkEnv.blockManager.master.removeBlock(blockId)

return

}

logDebug("Fetching indirect task result for TID %s".format(tid))

scheduler.handleTaskGettingResult(taskSetManager, tid)

val serializedTaskResult = sparkEnv.blockManager.getRemoteBytes(blockId)

if (!serializedTaskResult.isDefined) {

/* We won't be able to get the task result if the machine that ran the task failed

* between when the task ended and when we tried to fetch the result, or if the

* block manager had to flush the result. */

scheduler.handleFailedTask(

taskSetManager, tid, TaskState.FINISHED, TaskResultLost)

return

}

val deserializedResult = serializer.get().deserialize[DirectTaskResult[_]](

serializedTaskResult.get.toByteBuffer)

// force deserialization of referenced value

deserializedResult.value(taskResultSerializer.get())

sparkEnv.blockManager.master.removeBlock(blockId)

(deserializedResult, size)

} // Set the task result size in the accumulator updates received from the executors.

// We need to do this here on the driver because if we did this on the executors then

// we would have to serialize the result again after updating the size.

result.accumUpdates = result.accumUpdates.map { a =>

if (a.name == Some(InternalAccumulator.RESULT_SIZE)) {

val acc = a.asInstanceOf[LongAccumulator]

assert(acc.sum == 0L, "task result size should not have been set on the executors")

acc.setValue(size.toLong)

acc

} else {

a

}

} scheduler.handleSuccessfulTask(taskSetManager, tid, result)

} catch {

case cnf: ClassNotFoundException =>

val loader = Thread.currentThread.getContextClassLoader

taskSetManager.abort("ClassNotFound with classloader: " + loader)

// Matching NonFatal so we don't catch the ControlThrowable from the "return" above.

case NonFatal(ex) =>

logError("Exception while getting task result", ex)

taskSetManager.abort("Exception while getting task result: %s".format(ex))

}

}

})

}

2.2.1 DirectTaskResult处理过程

- 直接反序列化成DirectTaskResult,反序列化后进行了整体返回内容的大小的判断,在前面的2.1中介绍参数:spark.driver.maxResultSize,这个参数是Driver端的参数控制的,在Spark中会启动多个Task,参数的控制是一个整体的控制所有的Tasks的返回结果的数量大小,当然单个task使用该筏值的控制也是没有问题,因为只要有一个任务返回的结果超过maxResultSize,整体返回的数据也会超过maxResultSize。

- 对DirectTaskResult里的result进行了反序列化。

2.2.2 InDirectTaskResult处理过程

- 通过size判断大小是否超过spark.driver.maxResultSize筏值控制

- 通过BlockManager来获取BlockID的内容反序列化成DirectTaskResult

- 对DirectTaskResult里的result进行了反序列化

最后调用handleSuccessfulTask方法

sched.dagScheduler.taskEnded(tasks(index), Success, result.value(), result.accumUpdates, info)

回到了Dag的调度,向eventProcessLoop的队列里提交了CompletionEvent的事件

def taskEnded(

task: Task[_],

reason: TaskEndReason,

result: Any,

accumUpdates: Seq[AccumulatorV2[_, _]],

taskInfo: TaskInfo): Unit = {

eventProcessLoop.post(

CompletionEvent(task, reason, result, accumUpdates, taskInfo))

}

2.3 MapOutputTracker

2.3.1 RegisterMapOutput

def compressSize(size: Long): Byte = {

if (size == ) {

} else if (size <= 1L) {

} else {

math.min(, math.ceil(math.log(size) / math.log(LOG_BASE)).toInt).toByte

}

}

private[scheduler] def handleTaskCompletion(event: CompletionEvent) {

............

case smt: ShuffleMapTask =>

val shuffleStage = stage.asInstanceOf[ShuffleMapStage]

updateAccumulators(event)

val status = event.result.asInstanceOf[MapStatus]

val execId = status.location.executorId

logDebug("ShuffleMapTask finished on " + execId)

if (failedEpoch.contains(execId) && smt.epoch <= failedEpoch(execId)) {

logInfo(s"Ignoring possibly bogus $smt completion from executor $execId")

} else {

shuffleStage.addOutputLoc(smt.partitionId, status)

}

if (runningStages.contains(shuffleStage) && shuffleStage.pendingPartitions.isEmpty) {

markStageAsFinished(shuffleStage)

logInfo("looking for newly runnable stages")

logInfo("running: " + runningStages)

logInfo("waiting: " + waitingStages)

logInfo("failed: " + failedStages)

// We supply true to increment the epoch number here in case this is a

// recomputation of the map outputs. In that case, some nodes may have cached

// locations with holes (from when we detected the error) and will need the

// epoch incremented to refetch them.

// TODO: Only increment the epoch number if this is not the first time

// we registered these map outputs.

mapOutputTracker.registerMapOutputs(

shuffleStage.shuffleDep.shuffleId,

shuffleStage.outputLocInMapOutputTrackerFormat(),

changeEpoch = true)

clearCacheLocs()

if (!shuffleStage.isAvailable) {

// Some tasks had failed; let's resubmit this shuffleStage

// TODO: Lower-level scheduler should also deal with this

logInfo("Resubmitting " + shuffleStage + " (" + shuffleStage.name +

") because some of its tasks had failed: " +

shuffleStage.findMissingPartitions().mkString(", "))

submitStage(shuffleStage)

} else {

// Mark any map-stage jobs waiting on this stage as finished

if (shuffleStage.mapStageJobs.nonEmpty) {

val stats = mapOutputTracker.getStatistics(shuffleStage.shuffleDep)

for (job <- shuffleStage.mapStageJobs) {

markMapStageJobAsFinished(job, stats)

}

}

submitWaitingChildStages(shuffleStage)

}

}

当处理shuffleMapTask的结果的时候,mapOutputTracker.registerMapOutputs进行了MapOutputs的注册

protected val mapStatuses = new ConcurrentHashMap[Int, Array[MapStatus]]().asScala

def registerMapOutputs(shuffleId: Int, statuses: Array[MapStatus], changeEpoch: Boolean = false) {

mapStatuses.put(shuffleId, statuses.clone())

if (changeEpoch) {

incrementEpoch()

}

}

2.3.2 获取MapStatus

val blockFetcherItr = new ShuffleBlockFetcherIterator(

context,

blockManager.shuffleClient,

blockManager,

mapOutputTracker.getMapSizesByExecutorId(handle.shuffleId, startPartition, endPartition),

// Note: we use getSizeAsMb when no suffix is provided for backwards compatibility

SparkEnv.get.conf.getSizeAsMb("spark.reducer.maxSizeInFlight", "48m") * * ,

SparkEnv.get.conf.getInt("spark.reducer.maxReqsInFlight", Int.MaxValue))

通过getMapSizesByExecutorId获取ShuffledId所对应的MapStatus

def getMapSizesByExecutorId(shuffleId: Int, startPartition: Int, endPartition: Int)

: Seq[(BlockManagerId, Seq[(BlockId, Long)])] = {

logDebug(s"Fetching outputs for shuffle $shuffleId, partitions $startPartition-$endPartition")

val statuses = getStatuses(shuffleId)

// Synchronize on the returned array because, on the driver, it gets mutated in place

statuses.synchronized {

return MapOutputTracker.convertMapStatuses(shuffleId, startPartition, endPartition, statuses)

}

}

在getStatuses方法中

private def getStatuses(shuffleId: Int): Array[MapStatus] = {

val statuses = mapStatuses.get(shuffleId).orNull

if (statuses == null) {

logInfo("Don't have map outputs for shuffle " + shuffleId + ", fetching them")

val startTime = System.currentTimeMillis

var fetchedStatuses: Array[MapStatus] = null

fetching.synchronized {

// Someone else is fetching it; wait for them to be done

while (fetching.contains(shuffleId)) {

try {

fetching.wait()

} catch {

case e: InterruptedException =>

}

}

// Either while we waited the fetch happened successfully, or

// someone fetched it in between the get and the fetching.synchronized.

fetchedStatuses = mapStatuses.get(shuffleId).orNull

if (fetchedStatuses == null) {

// We have to do the fetch, get others to wait for us.

fetching += shuffleId

}

}

if (fetchedStatuses == null) {

// We won the race to fetch the statuses; do so

logInfo("Doing the fetch; tracker endpoint = " + trackerEndpoint)

// This try-finally prevents hangs due to timeouts:

try {

val fetchedBytes = askTracker[Array[Byte]](GetMapOutputStatuses(shuffleId))

fetchedStatuses = MapOutputTracker.deserializeMapStatuses(fetchedBytes)

logInfo("Got the output locations")

mapStatuses.put(shuffleId, fetchedStatuses)

} finally {

fetching.synchronized {

fetching -= shuffleId

fetching.notifyAll()

}

}

}

logDebug(s"Fetching map output statuses for shuffle $shuffleId took " +

s"${System.currentTimeMillis - startTime} ms")

if (fetchedStatuses != null) {

return fetchedStatuses

} else {

logError("Missing all output locations for shuffle " + shuffleId)

throw new MetadataFetchFailedException(

shuffleId, -, "Missing all output locations for shuffle " + shuffleId)

}

} else {

return statuses

}

}

- 封装了一层缓存mapStatus,对同一个Executor来说,里面的线程都是运行同一个Driver的提交的任务,对相同的shuffeID,MapStatus是一样的

- 对同一个Executor、ShuffeID来说,通过Driver获取信息只需要一次,Driver里保存的Shuffle的结果是单点的,对同一个Executor来说获取同一个ShuffleID只需要请求一次,在Traker里面保存了一个队列fetching,里面保存的ShuffeID代表的是有线程正在从Driver端获取ShuffleID的MapStatus,如果发现有值,当前线程会等待,直到其他的线程获取ShuffleID状态并保存到缓存结束,当前线程直接从缓存中获取当前状态

- Executor 向Driver发送GetMapOutputStatuses(shuffleId)消息

- Driver收到GetMapOutputStatuses消息后保存到消息队列mapOutputRequests,Map-Output-Dispatcher-x多线程处理消息队列,返回序列化的MapStatus

- Executor反序列化成MapStatus

2.2.3 以BlockManagerId为key的Shuffle的序列

private def convertMapStatuses(

shuffleId: Int,

startPartition: Int,

endPartition: Int,

statuses: Array[MapStatus]): Seq[(BlockManagerId, Seq[(BlockId, Long)])] = {

assert (statuses != null)

val splitsByAddress = new HashMap[BlockManagerId, ArrayBuffer[(BlockId, Long)]]

for ((status, mapId) <- statuses.zipWithIndex) {

if (status == null) {

val errorMessage = s"Missing an output location for shuffle $shuffleId"

logError(errorMessage)

throw new MetadataFetchFailedException(shuffleId, startPartition, errorMessage)

} else {

for (part <- startPartition until endPartition) {

splitsByAddress.getOrElseUpdate(status.location, ArrayBuffer()) +=

((ShuffleBlockId(shuffleId, mapId, part), status.getSizeForBlock(part)))

}

}

} splitsByAddress.toSeq

}

Spark Shuffle(二)Executor、Driver之间Shuffle结果消息传递、追踪(转载)的更多相关文章

- [Spark性能调优] 第三章 : Spark 2.1.0 中 Sort-Based Shuffle 产生的内幕

本課主題 Sorted-Based Shuffle 的诞生和介绍 Shuffle 中六大令人费解的问题 Sorted-Based Shuffle 的排序和源码鉴赏 Shuffle 在运行时的内存管理 ...

- Spark Executor Driver资源调度小结【转】

一.引子 在Worker Actor中,每次LaunchExecutor会创建一个CoarseGrainedExecutorBackend进程,Executor和CoarseGrainedExecut ...

- Spark Executor Driver资源调度汇总

一.简介 于Worker Actor于,每次LaunchExecutor这将创建一个CoarseGrainedExecutorBackend流程.Executor和CoarseGrainedExecu ...

- 大数据:Spark Core(二)Driver上的Task的生成、分配、调度

1. 什么是Task? 在前面的章节里描写叙述过几个角色,Driver(Client),Master,Worker(Executor),Driver会提交Application到Master进行Wor ...

- Spark Core(二)Driver上的Task的生成、分配、调度(转载)

1. 什么是Task? 在前面的章节里描述过几个角色,Driver(Client),Master,Worker(Executor),Driver会提交Application到Master进行Worke ...

- Spark源码分析之Sort-Based Shuffle读写流程

一 .概述 我们知道Spark Shuffle机制总共有三种: 1.未优化的Hash Shuffle:每一个ShuffleMapTask都会为每一个ReducerTask创建一个单独的文件,总的文件数 ...

- Spark 调优之ShuffleManager、Shuffle

Shuffle 概述 影响Spark性能的大BOSS就是shuffle,因为该环节包含了大量的磁盘IO.序列化.网络数据传输等操作. 因此,如果要让作业的性能更上一层楼,就有必要对 shuffle 过 ...

- 大话Spark(4)-一文理解MapReduce Shuffle和Spark Shuffle

Shuffle本意是 混洗, 洗牌的意思, 在MapReduce过程中需要各节点上同一类数据汇集到某一节点进行计算,把这些分布在不同节点的数据按照一定的规则聚集到一起的过程成为Shuffle. 在Ha ...

- Spark Tungsten揭秘 Day2 Tungsten-sort Based Shuffle

Spark Tungsten揭秘 Day2 Tungsten-sort Based Shuffle 今天在对钨丝计划思考的基础上,讲解下基于Tungsten的shuffle. 首先解释下概念,Tung ...

随机推荐

- 扒一扒MathType不为人知的技巧

MathType作为一款编辑数学公式的神器,很多人在使用它时只是很简单地使用了一些最基本的模板,很多功能都没有使用.MathType功能比你想象中的大很多,今天我们就来扒一扒MathType那些不为人 ...

- linux系统中,查看当前系统中,都在监听哪些端口

需求描述: 查看当前系统中都监听着哪些的端口,用netstat命令,在此记录下 操作过程: 1.查看系统中都在监听哪些端口 [root@testvm home]# netstat -ntl Activ ...

- Ubuntu16.04下Mongodb(离线安装方式|非apt-get)安装部署步骤(图文详解)(博主推荐)

不多说,直接上干货! 说在前面的话 首先,查看下你的操作系统的版本. root@zhouls-virtual-machine:~# cat /etc/issue Ubuntu LTS \n \l r ...

- NHibernate初学四之关联一对一关系

1:数据库脚本,创建两张表T_Area.T_Unit,表示一个单位对应一个地区,在单位表中有个AreaID为T_Area表中的ID: CREATE TABLE [dbo].[T_Area]( [ID] ...

- swift--使用UserDefaults来进行本地数据存储

UserDefaults适合轻量级的本地客户端存储,存储一个值,新值可以覆盖旧值,可以重复存储,也可以存储一次,然后直接从UserDefaults里面读取上次存储的信息,很方便,用的时候,宏定义下,直 ...

- 查看磁盘读写:iotop

iotop命令用来动态地查看磁盘IO情况,用法如下: [root@localhost ~]$ yum install -y iotop # 安装iotop命令 [root@localhost ~]$ ...

- /var/log/spooler

/var/log/spooler 用来记录 Linux 新闻群组方面的日志,内容一般是空的,没什么用,了解即可

- HTML实体大全

HTML 4.01 支持 ISO 8859-1 (Latin-1) 字符集. ISO-8859-1 的较低部分(从 1 到 127 之间的代码)是最初的 7 比特 ASCII. ISO-8859-1 ...

- 基于Cocos2d-x学习OpenGL ES 2.0系列——纹理贴图(6)

在上一篇文章中,我们介绍了如何绘制一个立方体,里面涉及的知识点有VBO(Vertex Buffer Object).IBO(Index Buffer Object)和MVP(Modile-View-P ...

- LeetCode - Nth Highest Salary

题目大概意思是要求找出第n高的Salary,直接写一个Function.作为一个SQL新手我学到了1.SQL中Function的写法和变量的定义,取值.2.limit查询分 页的方法. 在这个题 ...