本地idea开发mapreduce程序提交到远程hadoop集群执行

https://www.codetd.com/article/664330

https://blog.csdn.net/dream_an/article/details/84342770

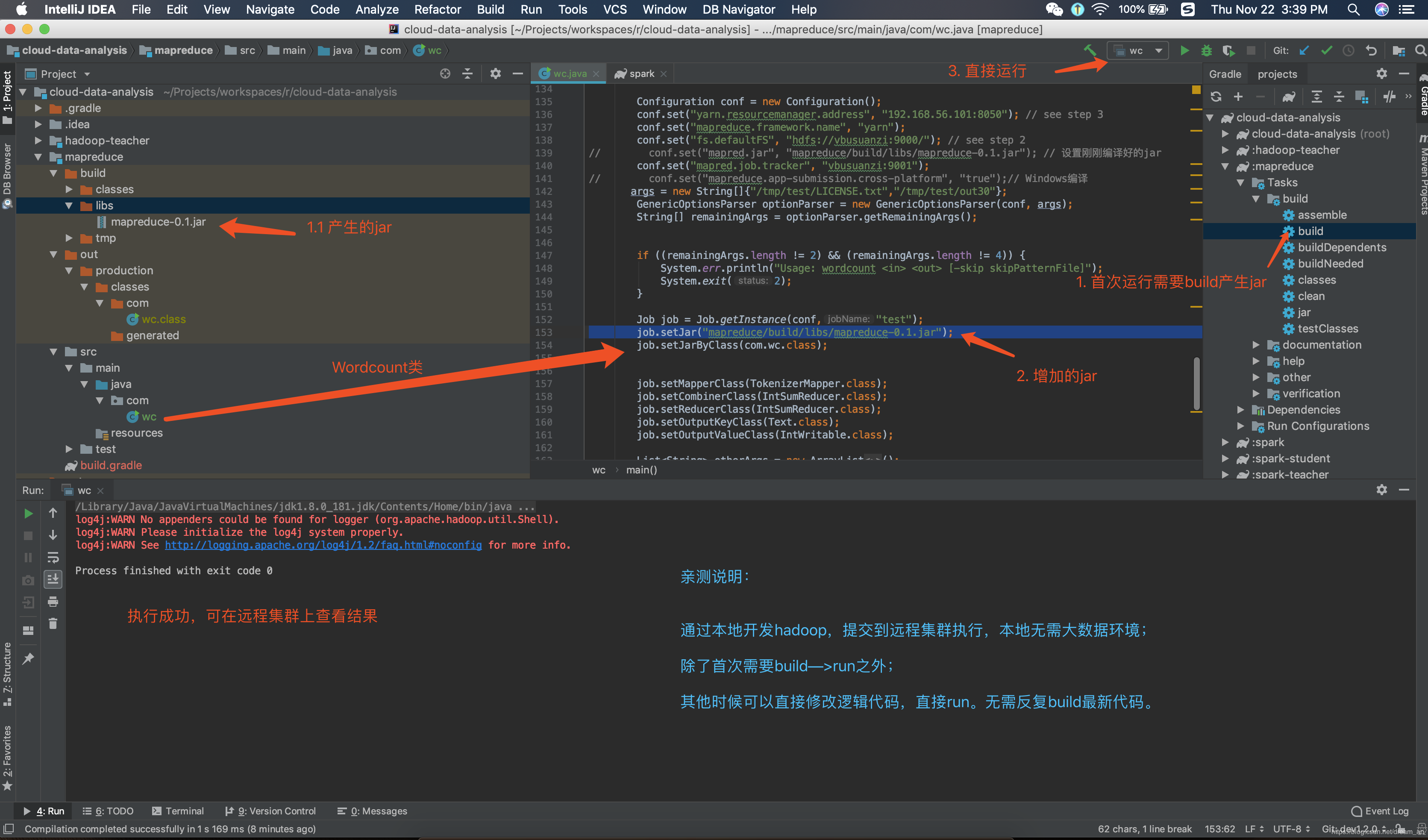

通过idea开发mapreduce程序并直接run,提交到远程hadoop集群执行mapreduce。

简要流程:本地开发mapreduce程序–>设置yarn 模式 --> 直接本地run–>远程集群执行mapreduce程序;

完整的流程:本地开发mapreduce程序——> 设置yarn模式——>初次编译产生jar文件——>增加 job.setJar("mapreduce/build/libs/mapreduce-0.1.jar");——>直接在Idea中run——>远程集群执行mapreduce程序;

一图说明问题:

源码

build.gradle

plugins {

id 'java'

}

group 'com.ruizhiedu'

version '0.1'

sourceCompatibility = 1.8

repositories {

mavenCentral()

}

dependencies {

compile group: 'org.apache.hadoop', name: 'hadoop-common', version: '3.1.0'

compile group: 'org.apache.hadoop', name: 'hadoop-mapreduce-client-core', version: '3.1.0'

compile group: 'org.apache.hadoop', name: 'hadoop-mapreduce-client-jobclient', version: '3.1.0'

testCompile group: 'junit', name: 'junit', version: '4.12'

}

java文件

输入、输出已经让我写死了,可以直接run。不需要再运行时候设置idea运行参数

wc.java

package com; import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.Counter;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.util.GenericOptionsParser;

import org.apache.hadoop.util.StringUtils; import java.io.BufferedReader; import java.io.FileReader;

import java.io.IOException;

import java.net.URI;

import java.util.*; /**

* @author wangxiaolei(王小雷)

* @since 2018/11/22

*/ public class wc {

public static class TokenizerMapper

extends Mapper<Object, Text, Text, IntWritable> { static enum CountersEnum { INPUT_WORDS } private final static IntWritable one = new IntWritable();

private Text word = new Text(); private boolean caseSensitive;

private Set<String> patternsToSkip = new HashSet<String>(); private Configuration conf;

private BufferedReader fis; @Override

public void setup(Context context) throws IOException,

InterruptedException {

conf = context.getConfiguration();

caseSensitive = conf.getBoolean("wordcount.case.sensitive", true);

if (conf.getBoolean("wordcount.skip.patterns", false)) {

URI[] patternsURIs = Job.getInstance(conf).getCacheFiles();

for (URI patternsURI : patternsURIs) {

Path patternsPath = new Path(patternsURI.getPath());

String patternsFileName = patternsPath.getName().toString();

parseSkipFile(patternsFileName);

}

}

} private void parseSkipFile(String fileName) {

try {

fis = new BufferedReader(new FileReader(fileName));

String pattern = null;

while ((pattern = fis.readLine()) != null) {

patternsToSkip.add(pattern);

}

} catch (IOException ioe) {

System.err.println("Caught exception while parsing the cached file '"

+ StringUtils.stringifyException(ioe));

}

} @Override

public void map(Object key, Text value, Context context

) throws IOException, InterruptedException {

String line = (caseSensitive) ?

value.toString() : value.toString().toLowerCase();

for (String pattern : patternsToSkip) {

line = line.replaceAll(pattern, "");

}

StringTokenizer itr = new StringTokenizer(line);

while (itr.hasMoreTokens()) {

word.set(itr.nextToken());

context.write(word, one);

Counter counter = context.getCounter(CountersEnum.class.getName(),

CountersEnum.INPUT_WORDS.toString());

counter.increment();

}

}

} public static class IntSumReducer

extends Reducer<Text,IntWritable,Text,IntWritable> {

private IntWritable result = new IntWritable(); public void reduce(Text key, Iterable<IntWritable> values,

Context context

) throws IOException, InterruptedException {

int sum = ;

for (IntWritable val : values) {

sum += val.get();

}

result.set(sum);

context.write(key, result);

}

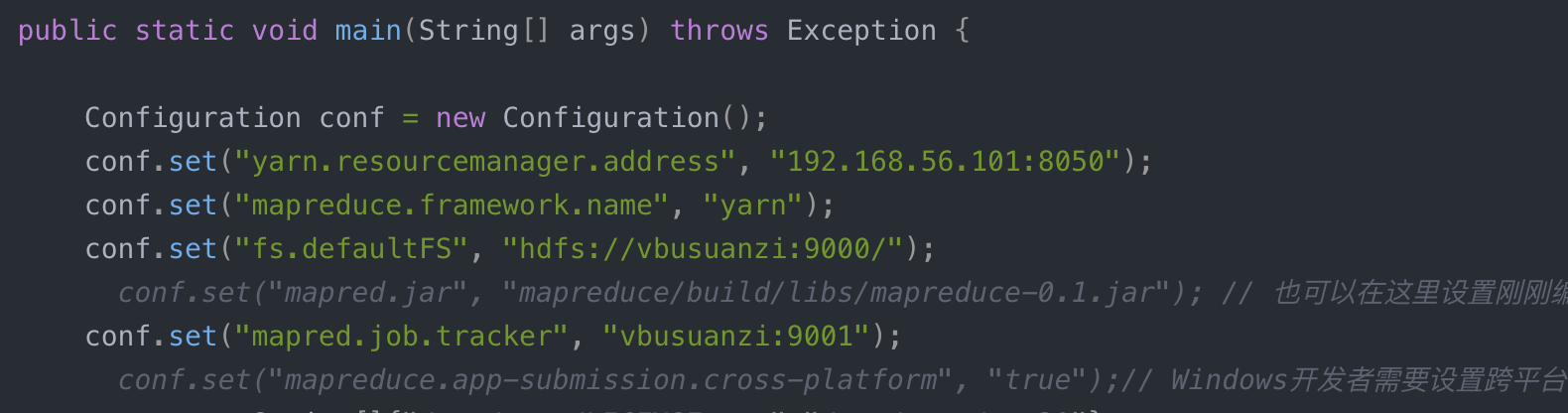

} public static void main(String[] args) throws Exception { Configuration conf = new Configuration();

conf.set("yarn.resourcemanager.address", "192.168.56.101:8050");

conf.set("mapreduce.framework.name", "yarn");

conf.set("fs.defaultFS", "hdfs://vbusuanzi:9000/");

// conf.set("mapred.jar", "mapreduce/build/libs/mapreduce-0.1.jar"); // 也可以在这里设置刚刚编译好的jar

conf.set("mapred.job.tracker", "vbusuanzi:9001");

// conf.set("mapreduce.app-submission.cross-platform", "true");// Windows开发者需要设置跨平台

args = new String[]{"/tmp/test/LICENSE.txt","/tmp/test/out30"};

GenericOptionsParser optionParser = new GenericOptionsParser(conf, args);

String[] remainingArgs = optionParser.getRemainingArgs(); if ((remainingArgs.length != ) && (remainingArgs.length != )) {

System.err.println("Usage: wordcount <in> <out> [-skip skipPatternFile]");

System.exit();

} Job job = Job.getInstance(conf,"test");

job.setJar("mapreduce/build/libs/mapreduce-0.1.jar");

job.setJarByClass(com.wc.class); job.setMapperClass(TokenizerMapper.class);

job.setCombinerClass(IntSumReducer.class);

job.setReducerClass(IntSumReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class); List<String> otherArgs = new ArrayList<String>();

for (int i=; i < remainingArgs.length; ++i) {

if ("-skip".equals(remainingArgs[i])) {

job.addCacheFile(new Path(remainingArgs[++i]).toUri());

job.getConfiguration().setBoolean("wordcount.skip.patterns", true);

} else {

otherArgs.add(remainingArgs[i]);

}

}

FileInputFormat.addInputPath(job, new Path(otherArgs.get()));

FileOutputFormat.setOutputPath(job, new Path(otherArgs.get())); job.waitForCompletion(true); System.exit(job.waitForCompletion(true) ? : );

}

}

本地idea开发mapreduce程序提交到远程hadoop集群执行的更多相关文章

- eclipse连接远程hadoop集群开发时权限不足问题解决方案

转自:http://blog.csdn.net/shan9liang/article/details/9734693 eclipse连接远程hadoop集群开发时报错 Exception in t ...

- eclipse连接远程hadoop集群开发时0700问题解决方案

eclipse连接远程hadoop集群开发时报错 错误信息: Exception in thread "main" java.io.IOException:Failed to se ...

- 在windows远程提交任务给Hadoop集群(Hadoop 2.6)

我使用3台Centos虚拟机搭建了一个Hadoop2.6的集群.希望在windows7上面使用IDEA开发mapreduce程序,然后提交的远程的Hadoop集群上执行.经过不懈的google终于搞定 ...

- 本地Pycharm将spark程序发送到远端spark集群进行处理

前言 最近在搞hadoop+spark+python,所以就搭建了一个本地的hadoop环境,基础环境搭建地址hadoop2.7.7 分布式集群安装与配置,spark集群安装并集成到hadoop集群, ...

- Eclipse提交任务至Hadoop集群遇到的问题

环境:Windows8.1,Eclipse 用Hadoop自带的wordcount示例 hadoop2.7.0 hadoop-eclipse-plugin-2.7.0.jar //Eclipse的插件 ...

- idea打jar包-MapReduce作业提交到hadoop集群执行

https://blog.csdn.net/jiaotangX/article/details/78661862 https://liushilang.iteye.com/blog/2093173

- Eclipse远程提交hadoop集群任务

文章概览: 1.前言 2.Eclipse查看远程hadoop集群文件 3.Eclipse提交远程hadoop集群任务 4.小结 1 前言 Hadoop高可用品台搭建完备后,参见<Hadoop ...

- IntelliJ IDEA编写的spark程序在远程spark集群上运行

准备工作 需要有三台主机,其中一台主机充当master,另外两台主机分别为slave01,slave02,并且要求三台主机处于同一个局域网下 通过命令:ifconfig 可以查看主机的IP地址,如下图 ...

- Hadoop集群(第7期)_Eclipse开发环境设置

1.Hadoop开发环境简介 1.1 Hadoop集群简介 Java版本:jdk-6u31-linux-i586.bin Linux系统:CentOS6.0 Hadoop版本:hadoop-1.0.0 ...

随机推荐

- 学习:record用法

详情请参考官网:http://www.erlang.org/doc/reference_manual/records.html http://www.erlang.org/doc/programmin ...

- 一条SQL语句查询两表中两个字段

首先描述问题,student表中有字段startID,endID.garde表中的ID需要对应student表中的startID或者student表中的endID才能查出grade表中的name字段, ...

- 如何过滤php中危险的HTML代码

用php过滤html里可能被利用来引入外部危险内容的代码.有些时候,需要让用户提交html内容,以便丰富用户发布的信息,当然,有些可能造成显示页面布局混乱的代码也在过滤范围内. 以下是引用片段: #用 ...

- ThinkPHP项目笔记之登录,注册,安全退出篇

1.先说注册 a.准备好注册页面,register.html,当然一般有,姓名,邮箱,地址等常用的. b."不要相信用户提交的一切数据",安全,安全是第一位的.所以要做判断,客户端 ...

- 《linux系统及其编程》实验课记录(四)

实验4:组织目录和文件 实验目标: 熟悉几个基本的操作系统文件和目录的命令的功能.语法和用法, 整理出一个更有条理的主目录,每个文件都位于恰当的子目录. 实验背景: 你的主目录中已经积压了一些文件,你 ...

- mathtype免费版下载及序列号获取地址

在编辑公式这个方面来说,MathType是使用最多的一个工具,因为它操作简单,不需要复杂的学习过程就可以很快地掌握操作技巧,并且功能也比Office自带的公式编辑器完善很多,可以对公式进行批量修改.编 ...

- SQL语句:语法错误(操作符丢失)在查询表达式中

所谓操作符丢失,应该是你在拼接SQL语句是少了关键词或者分隔符,导致系统无法识别SQL语句.建议:1.监控SQL语句,看看哪里出现问题:断点看下最后的sql到底是什么样子就知道了,另外你可以把这段sq ...

- @Override错误

导入一个项目,项目所有类报 @Override 有错误,去掉就不报错了,原因?在 Java Compiler 将 Enable project specific setting 选中 然后再选择1 ...

- C#三种字符串拼接方法的效率对比

C#字符串拼接的方法常用的有:StringBuilder.+.string.Format.List<string>.使用情况不同,效率不同. 1.+的方式 string sql = &qu ...

- 电力项目十三--js添加浮动框

修改page/menu/loading.jsp页面 首先,页面中引入浮动窗样式css <!-- 浮动窗口样式css begin --> <style type="text/ ...