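Crawler Series: The Bumpy Road of Scraping a .NET WebForm Site

Today I was handed a job: compile the employee-number information for our staff. For various reasons I couldn't query the database directly [sigh] [sigh] [sigh]. So I took a detour: write a tool that logs in to the website automatically and scrapes the user information.

This isn't my first time scraping an ASP.NET site. From past experience I know these sites are a hassle to scrape, and WebForm-based ASP.NET sites in particular are a real slog.

I much prefer the RESTful sites that are popular now; scraping a data API is a pleasure by comparison.

Enough chit-chat, let's get to work.

The workload is small, so the HTTP code is written by hand.

First, prepare a GET helper and a POST helper for later use.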

- public static string Get(string url, Action<string> SuccessCallback, Action<string> FailCallback) {

- HttpWebRequest req = WebRequest.Create(url) as HttpWebRequest;

- req.Method = "GET";

- req.UserAgent = "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/65.0.3325.181 Safari/537.36";

- req.Accept = "*/*";

- req.KeepAlive = true;

- req.ServicePoint.ConnectionLimit = int.MaxValue;

- req.ServicePoint.Expect100Continue = false;

- req.CookieContainer = sznyCookie; // static field, shared by every request so the session cookie is kept

- req.Credentials = System.Net.CredentialCache.DefaultCredentials;

- string msg = "";

- using (HttpWebResponse rsp = req.GetResponse() as HttpWebResponse)

- {

- using (StreamReader reader = new StreamReader(rsp.GetResponseStream()))

- {

- msg = reader.ReadToEnd();

- }

- }

- return msg;

- }

- public static string Post(string url, Dictionary<string, string> dicParms, Action<string> SuccessCallback, Action<string> FailCallback) {

- StringBuilder data = new StringBuilder();

- foreach (var kv in dicParms) {

- if (kv.Key.StartsWith("header"))

- continue;

- data.Append($"&{Common.UrlEncode( kv.Key,Encoding.UTF8)}={ Common.UrlEncode( kv.Value,Encoding.UTF8)}");

- }

- if (data.Length > 0)

- data.Remove(0, 1); // drop the leading '&'

- HttpWebRequest req = WebRequest.Create(url) as HttpWebRequest;

- req.Method = "POST";

- req.KeepAlive = true;

- req.CookieContainer = sznyCookie;

- req.Connection = "KeepAlive";

- req.KeepAlive = true;

- req.ContentType = "application/x-www-form-urlencoded";

- req.Accept = "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9";

- req.Referer = url;

- if (dicParms.ContainsKey("ScriptManager1"))

- {

- req.Headers.Add("X-MicrosoftAjax", "Delta=true");

- req.Headers.Add("X-Requested-With", "XMLHttpRequest");

- req.ContentType = "application/x-www-form-urlencoded; charset=UTF-8";

- req.Accept = "*/*";

- }

- req.Headers.Add("Cache-Control", "no-cache");

- req.UserAgent = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.163 Safari/537.36";

- req.ServicePoint.ConnectionLimit = int.MaxValue;

- req.ServicePoint.Expect100Continue = false;

- req.AllowAutoRedirect = true;

- req.Credentials = System.Net.CredentialCache.DefaultCredentials;

- byte[] buffer = Encoding.UTF8.GetBytes(data.ToString());

- using (Stream reqStream = req.GetRequestStream())

- {

- reqStream.Write(buffer, 0, buffer.Length);

- }

- string msg = "";

- using (HttpWebResponse rsp = req.GetResponse() as HttpWebResponse)

- {

- using (StreamReader reader = new StreamReader(rsp.GetResponseStream()))

- {

- msg = reader.ReadToEnd();

- if (msg.Contains("images/dl.jpg") || msg.Contains("pageRedirect||%2flogin.aspx"))

- {

- // Login failed

- if (FailCallback != null)

- FailCallback(msg);

- }

- else {

- if (SuccessCallback!=null)

- SuccessCallback(msg);

- }

- }

- }

- return msg;

- }
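The POST helper above builds its body by prefixing every pair with `&` and then trimming the first character. As a minimal sketch of the same idea, the pairs can simply be joined. `FormBody` and `Build` are hypothetical names, and `Uri.EscapeDataString` is only a stand-in for the article's `Common.UrlEncode`, whose implementation isn't shown; note that it encodes a space as `%20` rather than `+`.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper, not part of the original tool.
public static class FormBody
{
    // Joins key/value pairs into an application/x-www-form-urlencoded body.
    // Assumption: Uri.EscapeDataString replaces the article's Common.UrlEncode.
    public static string Build(IEnumerable<KeyValuePair<string, string>> fields)
    {
        return string.Join("&", fields.Select(kv =>
            Uri.EscapeDataString(kv.Key) + "=" + Uri.EscapeDataString(kv.Value ?? "")));
    }
}
```

A `Dictionary<string, string>` can be passed in directly, since it is already a sequence of key/value pairs.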

整个过程分为登陆、用户信息列表、用户信息详情,分三步走来完成这个项目

登陆

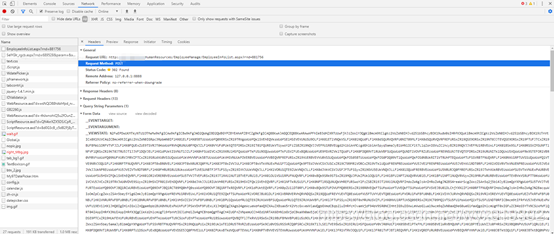

根据Chrome抓包结果编写Login,帐号密码没有任何加密,直接明文显示了,直接用了,根据是否跳转页面判断是否登陆成功。调试查看结果登陆成功了。

根据上面的抓包数据,可以调用下面的代码确定是否登陆成功。

- public static bool SznyLogin(string username, string password, Action<string> SuccessCallback, Action<string> FailCallback) {

- string url = "http://127.0.0.1/login.aspx";

- string msg = Get(url, SuccessCallback, FailCallback);

- if (msg.Trim().Length > 0) {

- Dictionary<string, string> dicParms = new Dictionary<string, string>();

- dicParms.Add("__VIEWSTATE", "");

- dicParms.Add("__EVENTVALIDATION", "");

- dicParms.Add("Text_Name", "");

- dicParms.Add("Text_Pass", "");

- dicParms.Add("btn_Login.x", new Random().Next().ToString());

- dicParms.Add("btn_Login.y", new Random().Next().ToString());

- MatchCollection mc = Regex.Matches(msg, @"<input[^<>]*?name=""(?<name>[^""]*?)""[^<>]*?value=""(?<val>[^""]*?)""[^<>]*?/?>|<input[^<>]*?name=""(?<name>[^""]*?)""[^<>]*?""[^<>]*?/?>|<select[^<>]*?name=""(?<name>[^""]*?)""[^<>]*?""[^<>]*?/?>", RegexOptions.IgnoreCase | RegexOptions.Singleline | RegexOptions.Multiline | RegexOptions.IgnorePatternWhitespace | RegexOptions.ExplicitCapture);

- foreach (Match mi in mc)

- {

- if (dicParms.ContainsKey(mi.Groups["name"].Value.Trim()))

- dicParms[mi.Groups["name"].Value.Trim()] = mi.Groups["val"].Value.Trim();

- }

- dicParms["Text_Name"] = username;

- dicParms["Text_Pass"] = password;

- msg=Post(url, dicParms, SuccessCallback, FailCallback);

- if (msg.Contains("images/dl.jpg") || msg.Contains("pageRedirect||%2flogin.aspx"))

- {

- return false;

- }

- else

- return true;

- }

- return false;

- }
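The same two-marker check appears in both `Post` and `SznyLogin`. One way to keep it in a single place is a small helper, sketched below. `LoginCheck.LooksLoggedOut` is a hypothetical name; the two marker strings are the ones used in the code above, and treating an empty body as "not logged in" is an assumption of this sketch.

```csharp
using System;

// Hypothetical helper, not part of the original tool.
public static class LoginCheck
{
    // Markers taken from the article's code: the login page image and the
    // partial-postback instruction that redirects back to login.aspx.
    private static readonly string[] Markers =
    {
        "images/dl.jpg",
        "pageRedirect||%2flogin.aspx"
    };

    // Returns true when the response looks like the login page.
    public static bool LooksLoggedOut(string body)
    {
        // Assumption: an empty response is treated as "no session".
        if (string.IsNullOrEmpty(body)) return true;
        foreach (string marker in Markers)
        {
            if (body.IndexOf(marker, StringComparison.Ordinal) >= 0)
                return true;
        }
        return false;
    }
}
```

With this in place, `SznyLogin` could end with `return !LoginCheck.LooksLoggedOut(msg);`.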

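Scraping the employee information

When I saw the page below I was disappointed: the list has no employee number. If it did, I could set the page size to show everything on one page and grab all the data in one go.

A different idea: if I show all the data on one page, can I then fetch each person's full details by employee ID?

Next I clicked a record to view its details, which produced the request shown below. There is no ID in the URL, so GET is a dead end. The POST data was even more disappointing: no ID there either; the row is bound by its position. The traditional WebForm submit model…

So: show all the data on one page, scrape the list data first, and then scrape the employee numbers one detail page at a time.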

- public static CookieContainer sznyCookie = new CookieContainer();

- /// <summary>

- /// Employee information, keyed by sequence number

- /// </summary>

- public static Dictionary<int, Dictionary<string,string>> dicSznyEmployees = new Dictionary<int, Dictionary<string, string>>();

- public static Dictionary<string, string> dicSznyEmployeeParms = new Dictionary<string, string>();

- /// <summary>

- /// Employee sequence numbers

- /// </summary>

- public static ConcurrentQueue<int> queueSznyEmployeeInfo = new ConcurrentQueue<int>();

- public static ConcurrentQueue<int> queueSuccessEmployeeInfo = new ConcurrentQueue<int>();

- public static bool SznyEmployeeList(Action<string> SuccessCallback, Action<string> FailCallback)

- {

- string url = $"http://127.0.0.1/HumanResources/EmployeeManage/EmployeeInfoList.aspx";

- string msg = Get(url, SuccessCallback, FailCallback);

- if (msg.Trim().Length > 0)

- {

- // Collect the postback parameters

- //__doPostBack\('(?<name>[^']*?)'

- //new Regex(@"__doPostBack\('(?<name>[^']*?)'", RegexOptions.IgnoreCase | RegexOptions.Singleline | RegexOptions.Multiline | RegexOptions.IgnorePatternWhitespace | RegexOptions.ExplicitCapture)

- string name = "";

- MatchCollection mc = Regex.Matches(msg, @"__doPostBack\('(?<name>[^']*?)'", RegexOptions.IgnoreCase | RegexOptions.Singleline | RegexOptions.Multiline | RegexOptions.IgnorePatternWhitespace | RegexOptions.ExplicitCapture);

- foreach (Match mi in mc)

- {

- name = mi.Groups["name"].Value.Trim();

- break;

- }

- //(?<=<a[^<>]*?href="javascript:__dopostback\()[^<>]*?(?=,[^<>]*?\)"[^<>]*?>条/页)

- //new Regex(@"(?<=<a[^<>]*?href=""javascript:__dopostback\()[^<>]*?(?=,[^<>]*?\)""[^<>]*?>条/页)", RegexOptions.IgnoreCase | RegexOptions.Singleline | RegexOptions.Multiline | RegexOptions.IgnorePatternWhitespace | RegexOptions.ExplicitCapture)

- string smname = "";

- Match m = Regex.Match(msg, @"(?<=<a[^<>]*?href=""javascript:__dopostback\()[^<>]*?(?=,[^<>]*?\)""[^<>]*?>条/页)", RegexOptions.IgnoreCase | RegexOptions.Singleline | RegexOptions.Multiline | RegexOptions.IgnorePatternWhitespace | RegexOptions.ExplicitCapture);

- if (m.Success)

- smname = m.Value.Trim().Replace("'", "").Replace("'", "");

- //<input[^<>]*?name="(?<name>[^"]*?)"[^<>]*?value="(?<val>[^"]*?)"[^<>]*?/?>|<input[^<>]*?name="(?<name>[^"]*?)"[^<>]*?"[^<>]*?/?>|<select[^<>]*?name="(?<name>[^"]*?)"[^<>]*?"[^<>]*?/?>

- //new Regex(@"<input[^<>]*?name=""(?<name>[^""]*?)""[^<>]*?value=""(?<val>[^""]*?)""[^<>]*?/?>|<input[^<>]*?name=""(?<name>[^""]*?)""[^<>]*?""[^<>]*?/?>|<select[^<>]*?name=""(?<name>[^""]*?)""[^<>]*?""[^<>]*?/?>", RegexOptions.IgnoreCase | RegexOptions.Singleline | RegexOptions.Multiline | RegexOptions.IgnorePatternWhitespace | RegexOptions.ExplicitCapture)

- Dictionary<string, string> dicParms = new Dictionary<string, string>();

- dicParms.Add("ScriptManager1", $"UpdatePanel1|{smname}");

- dicParms.Add("__EVENTTARGET", smname);

- dicParms.Add("__EVENTARGUMENT", "");

- dicParms.Add("__VIEWSTATE", "");

- dicParms.Add("__EVENTVALIDATION", "");

- dicParms.Add("MdGridView_t_unitemployees_dwyg_GridViewID", $"{name}");

- dicParms.Add("MdGridView_t_unitemployees_dwyg_iCurrentPage", "");

- dicParms.Add("MdGridView_t_unitemployees_dwyg_iTotalPage", "");

- dicParms.Add("MdGridView_t_unitemployees_dwyg_iTotalCount", "");

- dicParms.Add("MdGridView_t_unitemployees_dwyg_iPageSize", "");

- dicParms.Add("MdGridView_t_unitemployees_dwyg_iPageCount", "");

- dicParms.Add("MdGridView_t_unitemployees_dwyg_iCurrentNum", "");

- dicParms.Add("XM", "ZXMCHECK");

- List<string> lstParms = new List<string>() { "XM", "MdGridView_t_unitemployees_dwyg_iCurrentPage", "MdGridView_t_unitemployees_dwyg_GridViewID", "MdGridView_t_unitemployees_dwyg_iCurrentNum", "MdGridView_t_unitemployees_dwyg_iPageCount", "MdGridView_t_unitemployees_dwyg_iPageSize", "Button_Query", "__EVENTTARGET", "__EVENTARGUMENT", "Button_SelQuery", "Button_view", "Button_edit", "Button_out", "ImageButton_Tx", "ImageButton_xx1", "Button_qd", "MdGridView_t_unitemployees_dwyg_GridViewID", "__ASYNCPOST", "MdGridView_t_unitemployees_dwyg__PageSetText" };

- mc = Regex.Matches(msg, @"<input[^<>]*?name=""(?<name>[^""]*?)""[^<>]*?value=""(?<val>[^""]*?)""[^<>]*?/?>|<input[^<>]*?name=""(?<name>[^""]*?)""[^<>]*?""[^<>]*?/?>|<select[^<>]*?name=""(?<name>[^""]*?)""[^<>]*?""[^<>]*?/?>", RegexOptions.IgnoreCase | RegexOptions.Singleline | RegexOptions.Multiline | RegexOptions.IgnorePatternWhitespace | RegexOptions.ExplicitCapture);

- foreach (Match mi in mc)

- {

- if (lstParms.Contains(mi.Groups["name"].Value.Trim()))

- continue;

- if (dicParms.ContainsKey(mi.Groups["name"].Value.Trim()))

- dicParms[mi.Groups["name"].Value.Trim()] = mi.Groups["val"].Value.Trim();

- else

- dicParms.Add(mi.Groups["name"].Value.Trim(), mi.Groups["val"].Value.Trim());

- }

- if (dicParms.ContainsKey("MdGridView_t_unitemployees_dwyg$_PageSetText"))

- dicParms["MdGridView_t_unitemployees_dwyg$_PageSetText"] = "";

- else

- dicParms.Add("MdGridView_t_unitemployees_dwyg$_PageSetText", ""); // 1200 rows per page

- msg = Post(url, dicParms, SuccessCallback, FailCallback);

- dicSznyEmployees.Clear();

- dicSznyEmployeeParms.Clear();

- dicSznyEmployeeParms.Clear();

- dicSznyEmployeeParms.Add("__EVENTTARGET", "");

- dicSznyEmployeeParms.Add("__EVENTARGUMENT", "");

- dicSznyEmployeeParms.Add("__VIEWSTATE", dicParms["__VIEWSTATE"]);

- dicSznyEmployeeParms.Add("__EVENTVALIDATION", dicParms["__EVENTVALIDATION"]);

- dicSznyEmployeeParms.Add("MdGridView_t_unitemployees_dwyg_GridViewID", $"{name}");

- dicSznyEmployeeParms.Add("MdGridView_t_unitemployees_dwyg_iCurrentPage", "");

- dicSznyEmployeeParms.Add("MdGridView_t_unitemployees_dwyg_iTotalPage", "");

- dicSznyEmployeeParms.Add("MdGridView_t_unitemployees_dwyg_iTotalCount", "");

- dicSznyEmployeeParms.Add("MdGridView_t_unitemployees_dwyg_iPageSize", "");

- dicSznyEmployeeParms.Add("MdGridView_t_unitemployees_dwyg_iPageCount", "");

- dicSznyEmployeeParms.Add("MdGridView_t_unitemployees_dwyg_iCurrentNum", "");

- dicSznyEmployeeParms.Add("XM", "ZXMCHECK");

- lstParms.Clear();

- lstParms = new List<string>() { "XM", "__EVENTTARGET", "__EVENTARGUMENT", "Button_Query", "Button_SelQuery", };

- lstParms.Add("Button_edit");

- lstParms.Add("Button_out");

- lstParms.Add("ImageButton_Tx");

- lstParms.Add("ImageButton_xx1");

- lstParms.Add("Button_qd");

- lstParms.Add("MdGridView_t_unitemployees_dwyg_GridViewID");

- lstparms.add("mdgridview_t_unitemployees_dwyg_icurrentpage");

- lstparms.add("mdgridview_t_unitemployees_dwyg_itotalpage");

- lstparms.add("mdgridview_t_unitemployees_dwyg_itotalcount");

- lstparms.add("mdgridview_t_unitemployees_dwyg_ipagesize");

- lstparms.add("mdgridview_t_unitemployees_dwyg_ipagecount");

- lstParms.Add("MdGridView_t_unitemployees_dwyg_iCurrentNum");

- mc = Regex.Matches(msg, @"<input[^<>]*?name=[""'](?<name>[^""']*?)[""'][^<>]*?value=[""'](?<val>[^'""]*?)[""'][^<>]*?/?>|<input[^<>]*?name=[""'](?<name>[^'""]*?)[""'][^<>]*?[""'][^<>]*?/?>|<select[^<>]*?name=[""'](?<name>[^""']*?)[""'][^<>]*?[""'][^<>]*?/?>", RegexOptions.IgnoreCase | RegexOptions.Singleline | RegexOptions.Multiline | RegexOptions.IgnorePatternWhitespace | RegexOptions.ExplicitCapture);

- foreach (Match mi in mc)

- {

- if (lstParms.Contains(mi.Groups["name"].Value.Trim()))

- continue;

- if (dicSznyEmployeeParms.ContainsKey(mi.Groups["name"].Value.Trim()))

- dicSznyEmployeeParms[mi.Groups["name"].Value.Trim()] = mi.Groups["val"].Value.Trim();

- else

- dicSznyEmployeeParms.Add(mi.Groups["name"].Value.Trim(), mi.Groups["val"].Value.Trim());

- }

- int cnt = int.Parse(dicSznyEmployeeParms["MdGridView_t_unitemployees_dwyg_iTotalCount"]);

- for (int i = 1; i <= cnt; i++)

- queueSznyEmployeeInfo.Enqueue(i);

- // Get the <tr> rows

- //<tr[^<>]*?name="SelectTR"[^<>]*?>.*?</tr>

- //new Regex(@"<tr[^<>]*?name=""SelectTR""[^<>]*?>.*?</tr>", RegexOptions.IgnoreCase | RegexOptions.Singleline | RegexOptions.Multiline | RegexOptions.IgnorePatternWhitespace | RegexOptions.ExplicitCapture)

- mc = Regex.Matches(msg, @"<tr[^<>]*?name=""SelectTR""[^<>]*?>.*?</tr>", RegexOptions.IgnoreCase | RegexOptions.Singleline | RegexOptions.Multiline | RegexOptions.IgnorePatternWhitespace | RegexOptions.ExplicitCapture);

- foreach (Match mi in mc)

- {

- // Get the <td> cells

- //(?<=<td[^<>]*?>).*?(?=</td>)

- //new Regex("(?<=<td[^<>]*?>).*?(?=</td>)", RegexOptions.IgnoreCase | RegexOptions.Singleline | RegexOptions.Multiline | RegexOptions.IgnorePatternWhitespace | RegexOptions.ExplicitCapture)

- MatchCollection mic = Regex.Matches(mi.Value, "(?<=<td[^<>]*?>).*?(?=</td>)", RegexOptions.IgnoreCase | RegexOptions.Singleline | RegexOptions.Multiline | RegexOptions.IgnorePatternWhitespace | RegexOptions.ExplicitCapture);

- int ix = int.Parse(mic[].Value.Trim());

- if (!dicSznyEmployees.ContainsKey(ix))

- {

- dicSznyEmployees.Add(ix, new Dictionary<string, string>());

- }

- queueSznyEmployeeInfo.Enqueue(ix);

- dicSznyEmployees[ix].Add("UserName", mic[].Value.Trim().Replace(" ", ""));

- dicSznyEmployees[ix].Add("PersonID", mic[].Value.Trim().Replace(" ", ""));

- dicSznyEmployees[ix].Add("Birthday", mic[].Value.Trim().Replace(" ", ""));

- dicSznyEmployees[ix].Add("Sex", mic[].Value.Trim().Replace(" ", ""));

- dicSznyEmployees[ix].Add("HomePhone", mic[].Value.Trim().Replace(" ", ""));

- dicSznyEmployees[ix].Add("TelPhone", mic[].Value.Trim().Replace(" ", ""));

- dicSznyEmployees[ix].Add("Mail", mic[].Value.Trim().Replace(" ", ""));

- dicSznyEmployees[ix].Add("Address", mic[].Value.Trim().Replace(" ", ""));

- dicSznyEmployees[ix].Add("MinZu", mic[].Value.Trim().Replace(" ", ""));

- dicSznyEmployees[ix].Add("AddressJiGuan", mic[].Value.Trim().Replace(" ", ""));

- dicSznyEmployees[ix].Add("ZhengZhiMianmao", mic[].Value.Trim().Replace(" ", ""));

- dicSznyEmployees[ix].Add("Paiqianshijian", mic[].Value.Trim().Replace(" ", ""));

- dicSznyEmployees[ix].Add("Remark", mic[].Value.Trim().Replace(" ", ""));

- }

- }

- return true;

- }

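That gets all the employee information into a static dictionary in one pass; the one remaining field, the employee number, can be fetched at leisure.

That being the case, I dutifully analyzed the POST data and posted in the same format.

After analyzing the POST data I had a sudden idea: could I POST with the same __VIEWSTATE and __EVENTVALIDATION every time? No sooner said than done.

The code navigates to the employee list page, then POSTs to make one page show all the data.

All the POST parameters are saved in a static variable.

When submitting POSTs in bulk, the first 3 worked, and after that the site simply reported that I was not logged in.

I dropped that approach and tried another.

If that doesn't work, what about putting all the data on one page, fetching the page once per request, and then POSTing by sequence number?

The list data had all been scraped above and the sequence numbers were fixed, but when POSTing I found that some people were paired with the wrong employee number.

So why the mismatch? It turned out the regular expression was still wrong: when matching the page's input elements, attribute values may be quoted with double quotes or with single quotes.

The regular expression changed from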

- <input[^<>]*?name="(?<name>[^"]*?)"[^<>]*?value="(?<val>[^"]*?)"[^<>]*?/?>|<input[^<>]*?name="(?<name>[^"]*?)"[^<>]*?"[^<>]*?/?>|<select[^<>]*?name="(?<name>[^"]*?)"[^<>]*?"[^<>]*?/?>

to

- <input[^<>]*?name=["'](?<name>[^"']*?)["'][^<>]*?value=["'](?<val>[^'"]*?)["'][^<>]*?/?>|<input[^<>]*?name=["'](?<name>[^'"]*?)["'][^<>]*?["'][^<>]*?/?>|<select[^<>]*?name=["'](?<name>[^"']*?)["'][^<>]*?["'][^<>]*?/?>
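As a minimal sketch of the quote-tolerant matching described here, the pattern below captures the opening quote and requires the same character to close the value, so a value quoted with `'` may itself contain `"`. `InputFields.Extract` is a hypothetical name; like the article's first alternative, the pattern assumes `name` comes before `value` inside the tag, and it is no substitute for a real HTML parser.

```csharp
using System.Collections.Generic;
using System.Text.RegularExpressions;

// Hypothetical helper, not part of the original tool.
public static class InputFields
{
    // q1/q2 capture the opening quote; \k<q1> and \k<q2> require the same
    // character to close the attribute value.
    private static readonly Regex InputRegex = new Regex(
        @"<input[^<>]*?\bname\s*=\s*(?<q1>[""'])(?<name>[^<>]*?)\k<q1>" +
        @"[^<>]*?\bvalue\s*=\s*(?<q2>[""'])(?<val>[^<>]*?)\k<q2>",
        RegexOptions.IgnoreCase);

    // Returns name -> value for every <input> that has both attributes.
    // A later input with the same name overwrites an earlier one.
    public static Dictionary<string, string> Extract(string html)
    {
        var fields = new Dictionary<string, string>();
        foreach (Match m in InputRegex.Matches(html))
            fields[m.Groups["name"].Value.Trim()] = m.Groups["val"].Value;
        return fields;
    }
}
```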

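The site submits asynchronously, using the ScriptManager that WebForms offered back in the day, so the HTML returned from a submit is not a whole document. I didn't notice this and assumed no __VIEWSTATE was returned, so I kept using the __VIEWSTATE obtained from the GET when fetching employee numbers. Every employee number came back wrong; people and numbers didn't match.

I was stumped. After hesitating a bit I fired up Fiddler and compared the submitted parameters one by one. It turned out that on the real site, after GETting the page and changing the rows-per-page setting, the ViewState submitted next differs from the original. After much searching back and forth, I finally found that the asynchronously returned HTML does carry the ViewState, at the very end…

The order of the returned data also differs from request to request, which is another cause of the person/employee-number mismatch.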
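To make the "ViewState at the end of the async response" point concrete, here is a minimal sketch of reading the hidden fields out of a partial-postback body. It assumes the response is a sequence of `length|type|id|content|` blocks with the hidden fields carried in blocks of type `hiddenField`; that is my understanding of the ScriptManager delta format, so verify it against a real capture. `DeltaResponse.ParseHiddenFields` is a hypothetical name. Reading by the declared length, instead of splitting on `|`, keeps working when the content itself contains a `|`.

```csharp
using System.Collections.Generic;

// Hypothetical helper, not part of the original tool.
public static class DeltaResponse
{
    // Walks the "length|type|id|content|" blocks and keeps the hiddenField ones
    // (for example __VIEWSTATE and __EVENTVALIDATION).
    public static Dictionary<string, string> ParseHiddenFields(string delta)
    {
        var result = new Dictionary<string, string>();
        int pos = 0;
        while (pos < delta.Length)
        {
            int p1 = delta.IndexOf('|', pos);               // end of length
            if (p1 < 0) break;
            int len;
            if (!int.TryParse(delta.Substring(pos, p1 - pos), out len)) break;
            int p2 = delta.IndexOf('|', p1 + 1);            // end of type
            if (p2 < 0) break;
            int p3 = delta.IndexOf('|', p2 + 1);            // end of id
            if (p3 < 0) break;
            string type = delta.Substring(p1 + 1, p2 - p1 - 1);
            string id = delta.Substring(p2 + 1, p3 - p2 - 1);
            int start = p3 + 1;
            if (len < 0 || start + len > delta.Length) break; // truncated or malformed
            if (type == "hiddenField")
                result[id] = delta.Substring(start, len);
            pos = start + len + 1;                          // skip content and trailing '|'
        }
        return result;
    }
}
```

The values returned here are what the next POST should carry, rather than the `__VIEWSTATE` from the initial GET.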

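After that the submits worked, but with 1000-odd employee records every submit carried more than 2000 parameters. Looking at the endless POST data, I was speechless. The site is slow to begin with; would posting this much make it slower still, and my own system too?

What to do?

Could I set the paging to one record per page and scrape as I page through? Worth a quick try.

First, change the list-page step to set one record per page and stop scraping the list itself at that point. Instead, record the total page count and put the page numbers into a queue for a scheduled task to scrape page by page. The list fields are then collected from each paged response.

Because the site is an internal system, and to avoid disturbing its normal operation, only one record is scraped at a time: the next page is fetched only after the current record has finished.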
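The one-at-a-time approach can be sketched as a loop that takes one page number from the queue, fetches it, pauses, and only then moves on. `SequentialCrawler`, `fetchPage`, `delayMs` and `maxAttempts` are hypothetical names for illustration. The article's code re-enqueues a page when an exception occurs; the attempt limit is an addition of this sketch so that a permanently failing page cannot loop forever.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading;

// Hypothetical helper, not part of the original tool.
public static class SequentialCrawler
{
    // Processes pages strictly one after another and returns the pages that succeeded.
    public static List<int> Run(ConcurrentQueue<int> pages, Func<int, bool> fetchPage,
                                int delayMs, int maxAttempts)
    {
        var done = new List<int>();
        var attempts = new Dictionary<int, int>();
        int page;
        while (pages.TryDequeue(out page))
        {
            int tried;
            attempts.TryGetValue(page, out tried);
            attempts[page] = tried + 1;

            bool ok;
            try { ok = fetchPage(page); }
            catch (Exception) { ok = false; }    // treat any error as a failed attempt

            if (ok)
                done.Add(page);
            else if (attempts[page] < maxAttempts)
                pages.Enqueue(page);             // retry later, as the article's catch block does

            if (delayMs > 0)
                Thread.Sleep(delayMs);           // be gentle with the internal site
        }
        return done;
    }
}
```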

Scraping the list

- public static void ReqSznyEmployeeList(int ix,Action<string> SuccessCallback, Action<string> FailCallback)

- {

- string url = $"http://127.0.0.1/HumanResources/EmployeeManage/EmployeeInfoList.aspx";

- HttpWebRequest req = WebRequest.Create(url) as HttpWebRequest;

- req.Method = "GET";

- req.UserAgent = "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/65.0.3325.181 Safari/537.36";

- req.Accept = "*/*";

- req.KeepAlive = true;

- req.ServicePoint.ConnectionLimit = int.MaxValue;

- req.ServicePoint.Expect100Continue = false;

- req.CookieContainer = sznyCookie;

- req.Credentials = System.Net.CredentialCache.DefaultCredentials;

- req.BeginGetResponse(new AsyncCallback(RspSznyEmployeeList), new object[] { req, url,ix, SuccessCallback, FailCallback });

- }

- private static void RspSznyEmployeeList(IAsyncResult result)

- {

- object[] parms = result.AsyncState as object[];

- HttpWebRequest req = parms[0] as HttpWebRequest;

- string url = parms[1].ToString();

- int ix = int.Parse(parms[2].ToString());

- Action<string> SuccessCallback = parms[3] as Action<string>;

- Action<string> FailCallback = parms[4] as Action<string>;

- try

- {

- using (HttpWebResponse rsp = req.EndGetResponse(result) as HttpWebResponse)

- {

- using (StreamReader reader = new StreamReader(rsp.GetResponseStream()))

- {

- string msg = "";

- msg = reader.ReadToEnd();

- // Collect the postback parameters

- //__doPostBack\('(?<name>[^']*?)'

- //new Regex(@"__doPostBack\('(?<name>[^']*?)'", RegexOptions.IgnoreCase | RegexOptions.Singleline | RegexOptions.Multiline | RegexOptions.IgnorePatternWhitespace | RegexOptions.ExplicitCapture)

- string name = "";

- MatchCollection mc = Regex.Matches(msg, @"__doPostBack\('(?<name>[^']*?)'", RegexOptions.IgnoreCase | RegexOptions.Singleline | RegexOptions.Multiline | RegexOptions.IgnorePatternWhitespace | RegexOptions.ExplicitCapture);

- foreach (Match mi in mc)

- {

- name = mi.Groups["name"].Value.Trim();

- break;

- }

- //(?<=<a[^<>]*?href="javascript:__dopostback\()[^<>]*?(?=,[^<>]*?\)"[^<>]*?>条/页)

- //new Regex(@"(?<=<a[^<>]*?href=""javascript:__dopostback\()[^<>]*?(?=,[^<>]*?\)""[^<>]*?>条/页)", RegexOptions.IgnoreCase | RegexOptions.Singleline | RegexOptions.Multiline | RegexOptions.IgnorePatternWhitespace | RegexOptions.ExplicitCapture)

- string smname = "MdGridView_t_unitemployees_dwyg$_SearchGo";

- //Match m = Regex.Match(msg, @"(?<=<a[^<>]*?href=""javascript:__dopostback\()[^<>]*?(?=,[^<>]*?\)""[^<>]*?>条/页)", RegexOptions.IgnoreCase | RegexOptions.Singleline | RegexOptions.Multiline | RegexOptions.IgnorePatternWhitespace | RegexOptions.ExplicitCapture);

- //<input[^<>]*?name=["'](?<name>[^"']*?)["'][^<>]*?value=["'](?<val>[^'"]*?)["'][^<>]*?/?>|<input[^<>]*?name=["'](?<name>[^'"]*?)["'][^<>]*?["'][^<>]*?/?>|<select[^<>]*?name=["'](?<name>[^"']*?)["'][^<>]*?["'][^<>]*?/?>

- //new Regex(@"<input[^<>]*?name=[""'](?<name>[^""']*?)[""'][^<>]*?value=[""'](?<val>[^'""]*?)[""'][^<>]*?/?>|<input[^<>]*?name=[""'](?<name>[^'""]*?)[""'][^<>]*?[""'][^<>]*?/?>|<select[^<>]*?name=[""'](?<name>[^""']*?)[""'][^<>]*?[""'][^<>]*?/?>", RegexOptions.IgnoreCase | RegexOptions.Singleline | RegexOptions.Multiline | RegexOptions.IgnorePatternWhitespace | RegexOptions.ExplicitCapture)

- Dictionary<string, string> dicParms = new Dictionary<string, string>();

- dicParms.Add("ScriptManager1", $"UpdatePanel1|{smname}");

- dicParms.Add("__EVENTTARGET", smname);

- dicParms.Add("__EVENTARGUMENT", "");

- dicParms.Add("__VIEWSTATE", "");

- dicParms.Add("__EVENTVALIDATION", "");

- dicParms.Add("MdGridView_t_unitemployees_dwyg_GridViewID", $"{name}");

- dicParms.Add("MdGridView_t_unitemployees_dwyg_iCurrentPage", ix.ToString());

- dicParms.Add("MdGridView_t_unitemployees_dwyg_iTotalPage", "");

- dicParms.Add("MdGridView_t_unitemployees_dwyg_iTotalCount", "");

- dicParms.Add("MdGridView_t_unitemployees_dwyg_iPageSize", "");

- dicParms.Add("MdGridView_t_unitemployees_dwyg_iPageCount", "");

- dicParms.Add("MdGridView_t_unitemployees_dwyg_iCurrentNum", "");

- //dicParms.Add("MdGridView_t_unitemployees_dwyg$_SearchTextBox", ix.ToString());

- dicParms.Add("XM", "ZXMCHECK");

- List<string> lstParms = new List<string>() { "ScriptManager1", "XM", "MdGridView_t_unitemployees_dwyg_iCurrentNum", "Button_Query", "__EVENTTARGET", "__EVENTARGUMENT", "Button_SelQuery", "Button_view", "Button_edit", "Button_out", "ImageButton_Tx", "ImageButton_xx1", "Button_qd", "__ASYNCPOST", "MdGridView_t_unitemployees_dwyg__PageSetText" };

- mc = Regex.Matches(msg, @"<input[^<>]*?name=[""'](?<name>[^""']*?)[""'][^<>]*?value=[""'](?<val>[^'""]*?)[""'][^<>]*?/?>|<input[^<>]*?name=[""'](?<name>[^'""]*?)[""'][^<>]*?[""'][^<>]*?/?>|<select[^<>]*?name=[""'](?<name>[^""']*?)[""'][^<>]*?[""'][^<>]*?/?>", RegexOptions.IgnoreCase | RegexOptions.Singleline | RegexOptions.Multiline | RegexOptions.IgnorePatternWhitespace | RegexOptions.ExplicitCapture);

- foreach (Match mi in mc)

- {

- if (lstParms.Contains(mi.Groups["name"].Value.Trim()))

- continue;

- if (dicParms.ContainsKey(mi.Groups["name"].Value.Trim()))

- dicParms[mi.Groups["name"].Value.Trim()] = mi.Groups["val"].Value.Trim();

- else

- dicParms.Add(mi.Groups["name"].Value.Trim(), mi.Groups["val"].Value.Trim());

- }

- if (dicParms.ContainsKey("MdGridView_t_unitemployees_dwyg$_PageSetText"))

- dicParms["MdGridView_t_unitemployees_dwyg$_PageSetText"] = "";

- else

- dicParms.Add("MdGridView_t_unitemployees_dwyg$_PageSetText", ""); // 1200 rows per page

- if (dicParms.ContainsKey("MdGridView_t_unitemployees_dwyg_iPageCount"))

- dicParms["MdGridView_t_unitemployees_dwyg_iPageCount"] = "";

- else

- dicParms.Add("MdGridView_t_unitemployees_dwyg_iPageCount", "");

- if (dicParms.ContainsKey("MdGridView_t_unitemployees_dwyg_iPageSize"))

- dicParms["MdGridView_t_unitemployees_dwyg_iPageSize"] = "";

- else

- dicParms.Add("MdGridView_t_unitemployees_dwyg_iPageSize", "");

- if (dicParms.ContainsKey("MdGridView_t_unitemployees_dwyg$_SearchTextBox"))

- dicParms["MdGridView_t_unitemployees_dwyg$_SearchTextBox"] = $"{ix}";

- else

- dicParms.Add("MdGridView_t_unitemployees_dwyg$_SearchTextBox", $"{ix}"); /* page number */

- dicParms["MdGridView_t_unitemployees_dwyg_iTotalPage"] = dicParms["MdGridView_t_unitemployees_dwyg_iTotalCount"];

- msg = Post(url, dicParms, SuccessCallback, FailCallback);

- // Get the <tr> rows

- //<tr[^<>]*?name="SelectTR"[^<>]*?>.*?</tr>

- //new Regex(@"<tr[^<>]*?name=""SelectTR""[^<>]*?>.*?</tr>", RegexOptions.IgnoreCase | RegexOptions.Singleline | RegexOptions.Multiline | RegexOptions.IgnorePatternWhitespace | RegexOptions.ExplicitCapture)

- mc = Regex.Matches(msg, @"<tr[^<>]*?name=""SelectTR""[^<>]*?>.*?</tr>", RegexOptions.IgnoreCase | RegexOptions.Singleline | RegexOptions.Multiline | RegexOptions.IgnorePatternWhitespace | RegexOptions.ExplicitCapture);

- foreach (Match mi in mc)

- {

- // Get the <td> cells

- //(?<=<td[^<>]*?>).*?(?=</td>)

- //new Regex("(?<=<td[^<>]*?>).*?(?=</td>)", RegexOptions.IgnoreCase | RegexOptions.Singleline | RegexOptions.Multiline | RegexOptions.IgnorePatternWhitespace | RegexOptions.ExplicitCapture)

- MatchCollection mic = Regex.Matches(mi.Value, "(?<=<td[^<>]*?>).*?(?=</td>)", RegexOptions.IgnoreCase | RegexOptions.Singleline | RegexOptions.Multiline | RegexOptions.IgnorePatternWhitespace | RegexOptions.ExplicitCapture);

- if (!dicSznyEmployees.ContainsKey(ix))

- {

- dicSznyEmployees.Add(ix, new Dictionary<string, string>());

- }

- //queueSznyEmployeeInfo.Enqueue(ix);

- if (!dicSznyEmployees[ix].ContainsKey("UserName"))

- dicSznyEmployees[ix].Add("UserName", mic[].Value.Trim().Replace(" ", ""));

- else

- dicSznyEmployees[ix]["UserName"] = mic[].Value.Trim().Replace(" ", "");

- if (!dicSznyEmployees[ix].ContainsKey("PersonID"))

- dicSznyEmployees[ix].Add("PersonID", mic[].Value.Trim().Replace(" ", ""));

- else

- dicSznyEmployees[ix]["PersonID"] = mic[].Value.Trim().Replace(" ", "");

- if (!dicSznyEmployees[ix].ContainsKey("Birthday"))

- dicSznyEmployees[ix].Add("Birthday", mic[].Value.Trim().Replace(" ", ""));

- else

- dicSznyEmployees[ix]["Birthday"] = mic[].Value.Trim().Replace(" ", "");

- if (!dicSznyEmployees[ix].ContainsKey("Sex"))

- dicSznyEmployees[ix].Add("Sex", mic[].Value.Trim().Replace(" ", ""));

- else

- dicSznyEmployees[ix]["Sex"] = mic[].Value.Trim().Replace(" ", "");

- if (!dicSznyEmployees[ix].ContainsKey("HomePhone"))

- dicSznyEmployees[ix].Add("HomePhone", mic[].Value.Trim().Replace(" ", ""));

- else

- dicSznyEmployees[ix]["HomePhone"] = mic[].Value.Trim().Replace(" ", "");

- if (!dicSznyEmployees[ix].ContainsKey("TelPhone"))

- dicSznyEmployees[ix].Add("TelPhone", mic[].Value.Trim().Replace(" ", ""));

- else

- dicSznyEmployees[ix]["TelPhone"] = mic[].Value.Trim().Replace(" ", "");

- if (!dicSznyEmployees[ix].ContainsKey("Mail"))

- dicSznyEmployees[ix].Add("Mail", mic[].Value.Trim().Replace(" ", ""));

- else

- dicSznyEmployees[ix]["Mail"] = mic[].Value.Trim().Replace(" ", "");

- if (!dicSznyEmployees[ix].ContainsKey("Address"))

- dicSznyEmployees[ix].Add("Address", mic[].Value.Trim().Replace(" ", ""));

- else

- dicSznyEmployees[ix]["Address"] = mic[].Value.Trim().Replace(" ", "");

- if (!dicSznyEmployees[ix].ContainsKey("MinZu"))

- dicSznyEmployees[ix].Add("MinZu", mic[].Value.Trim().Replace(" ", ""));

- else

- dicSznyEmployees[ix]["MinZu"] = mic[].Value.Trim().Replace(" ", "");

- if (!dicSznyEmployees[ix].ContainsKey("AddressJiGuan"))

- dicSznyEmployees[ix].Add("AddressJiGuan", mic[].Value.Trim().Replace(" ", ""));

- else

- dicSznyEmployees[ix]["AddressJiGuan"] = mic[].Value.Trim().Replace(" ", "");

- if (!dicSznyEmployees[ix].ContainsKey("ZhengZhiMianmao"))

- dicSznyEmployees[ix].Add("ZhengZhiMianmao", mic[].Value.Trim().Replace(" ", ""));

- else

- dicSznyEmployees[ix]["ZhengZhiMianmao"] = mic[].Value.Trim().Replace(" ", "");

- if (!dicSznyEmployees[ix].ContainsKey("Paiqianshijian"))

- dicSznyEmployees[ix].Add("Paiqianshijian", mic[].Value.Trim().Replace(" ", ""));

- else

- dicSznyEmployees[ix]["Paiqianshijian"] = mic[].Value.Trim().Replace(" ", "");

- if (!dicSznyEmployees[ix].ContainsKey("Remark"))

- dicSznyEmployees[ix].Add("Remark", mic[].Value.Trim().Replace(" ", ""));

- else

- dicSznyEmployees[ix]["Remark"] = mic[].Value.Trim().Replace(" ", "");

- }

- dicParms.Clear();

- mc = Regex.Matches(msg, @"(?<name>__VIEWSTATE)\|(?<v>[^\|]+)|(?<name>__EVENTVALIDATION)\|(?<v>[^\|]+)", RegexOptions.IgnoreCase | RegexOptions.Singleline | RegexOptions.Multiline | RegexOptions.IgnorePatternWhitespace | RegexOptions.ExplicitCapture);

- foreach (Match mi in mc)

- {

- dicParms.Add(mi.Groups["name"].Value.Trim(), mi.Groups["v"].Value.Trim());

- }

- dicParms.Add("HiddenField_param", "");

- dicParms.Add("__EVENTTARGET", "");

- dicParms.Add("__EVENTARGUMENT", "");

- dicParms.Add("MdGridView_t_unitemployees_dwyg_GridViewID", $"{name}");

- dicParms.Add("MdGridView_t_unitemployees_dwyg_iCurrentPage", ix.ToString());

- dicParms.Add("MdGridView_t_unitemployees_dwyg_iTotalPage", "");

- dicParms.Add("MdGridView_t_unitemployees_dwyg_iTotalCount", "");

- dicParms.Add("MdGridView_t_unitemployees_dwyg_iPageSize", "");

- dicParms.Add("MdGridView_t_unitemployees_dwyg_iPageCount", "");

- dicParms.Add("MdGridView_t_unitemployees_dwyg_iCurrentNum", "");

- dicParms.Add("XM", "ZXMCHECK");

- lstParms.Clear();

- lstParms = new List<string>() { "XM", "__EVENTTARGET", "__EVENTARGUMENT", "Button_Query", "Button_SelQuery", };

- lstParms.Add("Button_edit");

- lstParms.Add("Button_out");

- lstParms.Add("ImageButton_Tx");

- lstParms.Add("ImageButton_xx1");

- lstParms.Add("Button_qd");

- mc = Regex.Matches(msg, @"<input[^<>]*?name=[""'](?<name>[^""']*?)[""'][^<>]*?value=[""'](?<val>[^'""]*?)[""'][^<>]*?/?>|<input[^<>]*?name=[""'](?<name>[^'""]*?)[""'][^<>]*?[""'][^<>]*?/?>|<select[^<>]*?name=[""'](?<name>[^""']*?)[""'][^<>]*?[""'][^<>]*?/?>", RegexOptions.IgnoreCase | RegexOptions.Singleline | RegexOptions.Multiline | RegexOptions.IgnorePatternWhitespace | RegexOptions.ExplicitCapture);

- foreach (Match mi in mc)

- {

- if (lstParms.Contains(mi.Groups["name"].Value.Trim()))

- continue;

- if (dicParms.ContainsKey(mi.Groups["name"].Value.Trim()))

- dicParms[mi.Groups["name"].Value.Trim()] = mi.Groups["val"].Value.Trim();

- else

- dicParms.Add(mi.Groups["name"].Value.Trim(), mi.Groups["val"].Value.Trim());

- }

- ReqSznyEmployeeInfo(ix, dicParms, SuccessCallback, FailCallback);

- }

- }

- }

- catch (Exception ex) {

- Business.queueSznyEmployeeInfo.Enqueue(ix);

- Business.queueMsg.Enqueue($"{DateTime.Now.ToString("yyyy-MM-dd HH:mm:ss")} {ex.Message}");

- }

- }

Getting the employee number

- public static void ReqSznyEmployeeInfo(int ix,Dictionary<string,string> dicParms, Action<string> SuccessCallback, Action<string> FailCallback) {

- StringBuilder data = new StringBuilder();

- foreach (var kv in dicParms)

- {

- if (kv.Key.StartsWith("header"))

- continue;

- data.Append($"&{Common.UrlEncode(kv.Key, Encoding.UTF8)}={ Common.UrlEncode(kv.Value, Encoding.UTF8)}");

- }

- if (data.Length > 0)

- data.Remove(0, 1); // drop the leading '&'

- HttpWebRequest req = WebRequest.Create("http://127.0.0.1/HumanResources/EmployeeManage/EmployeeInfoList.aspx") as HttpWebRequest;

- req.Method = "POST";

- req.KeepAlive = true;

- req.CookieContainer = sznyCookie;

- req.ContentType = "application/x-www-form-urlencoded";

- req.Accept = "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9";

- if (dicParms.ContainsKey("ScriptManager1"))

- {

- req.Headers.Add("X-MicrosoftAjax", "Delta=true");

- req.Headers.Add("X-Requested-With", "XMLHttpRequest");

- req.ContentType = "application/x-www-form-urlencoded; charset=UTF-8";

- req.Accept = "*/*";

- }

- req.Headers.Add("Cache-Control", "max-age=0");

- req.UserAgent = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.163 Safari/537.36";

- req.ServicePoint.ConnectionLimit = int.MaxValue;

- req.ServicePoint.Expect100Continue = false;

- req.AllowAutoRedirect = true;

- req.Credentials = System.Net.CredentialCache.DefaultCredentials;

- byte[] buffer = Encoding.UTF8.GetBytes(data.ToString());

- using (Stream reqStream = req.GetRequestStream())

- {

- reqStream.Write(buffer, 0, buffer.Length);

- }

- req.BeginGetResponse(new AsyncCallback(RspSznyEmployeeInfo), new object[] { req,ix, dicParms, SuccessCallback, FailCallback });

- }

- private static void RspSznyEmployeeInfo(IAsyncResult result)

- {

- object[] parms = result.AsyncState as object[];

- HttpWebRequest req = parms[0] as HttpWebRequest;

- int ix = int.Parse(parms[1].ToString());

- Dictionary<string, string> dicParms = parms[2] as Dictionary<string, string>;

- Action<string> SuccessCallback = parms[3] as Action<string>;

- Action<string> FailCallback = parms[4] as Action<string>;

- try

- {

- using (HttpWebResponse rsp = req.EndGetResponse(result) as HttpWebResponse)

- {

- using (StreamReader reader = new StreamReader(rsp.GetResponseStream()))

- {

- string msg = "";

- msg = reader.ReadToEnd();

- string code = "无";

- //<input[^<>]*?name\s*?=\s*?["']TextBox_YG_Code_str["'][^<>]*?value\s*?=\s*?["'](?<code>[^"']*?)["']|<input[^<>]*?value\s*?=\s*?["'](?<code>[^"']*?)["'][^<>]*?name\s*?=\s*?["']TextBox_YG_Code_str["']

- //new Regex(@"<input[^<>]*?name\s*?=\s*?[""']TextBox_YG_Code_str[""'][^<>]*?value\s*?=\s*?[""'](?<code>[^""']*?)[""']|<input[^<>]*?value\s*?=\s*?[""'](?<code>[^""']*?)[""'][^<>]*?name\s*?=\s*?[""']TextBox_YG_Code_str[""']", RegexOptions.IgnoreCase | RegexOptions.Singleline | RegexOptions.Multiline | RegexOptions.IgnorePatternWhitespace | RegexOptions.ExplicitCapture)

- Match m = Regex.Match(msg, @"<input[^<>]*?name\s*?=\s*?[""']TextBox_YG_Code_str[""'][^<>]*?value\s*?=\s*?[""'](?<code>[^""']*?)[""']|<input[^<>]*?value\s*?=\s*?[""'](?<code>[^""']*?)[""'][^<>]*?name\s*?=\s*?[""']TextBox_YG_Code_str[""']", RegexOptions.IgnoreCase | RegexOptions.Singleline | RegexOptions.Multiline | RegexOptions.IgnorePatternWhitespace | RegexOptions.ExplicitCapture);

- if (m.Success)

- code = m.Groups["code"].Value.Trim();

- dicSznyEmployees[ix]["Code"] = code; // the indexer adds the key or overwrites an existing value

- queueSuccessEmployeeInfo.Enqueue(ix);

- }

- }

- }

- catch (Exception ex) {

- Business.queueSznyEmployeeInfo.Enqueue(ix);

- Business.queueMsg.Enqueue($"{DateTime.Now:yyyy-MM-dd HH:mm:ss} {ex.Message}");

- }

- }
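The extraction step in the callback above can be tried in isolation. The following is a minimal sketch, not the author's exact code: the class name `EmployeeCodeParser` and the method `ExtractCode` are hypothetical, and the `Multiline`, `IgnorePatternWhitespace` and `ExplicitCapture` options are dropped because the pattern does not rely on them. The pattern itself is the one from the post and accepts `name` before `value` or the reverse.

```csharp
using System;
using System.Text.RegularExpressions;

// Hypothetical helper; the names are illustrative and not from the original post.
public static class EmployeeCodeParser
{
    // Same pattern as in the callback: finds the <input> named TextBox_YG_Code_str,
    // whether its name attribute appears before or after its value attribute.
    private static readonly Regex CodeRegex = new Regex(
        @"<input[^<>]*?name\s*?=\s*?[""']TextBox_YG_Code_str[""'][^<>]*?value\s*?=\s*?[""'](?<code>[^""']*?)[""']" +
        @"|<input[^<>]*?value\s*?=\s*?[""'](?<code>[^""']*?)[""'][^<>]*?name\s*?=\s*?[""']TextBox_YG_Code_str[""']",
        RegexOptions.IgnoreCase | RegexOptions.Singleline);

    // Returns the trimmed employee code, or the fallback when the field is absent.
    public static string ExtractCode(string html, string fallback = "无")
    {
        Match m = CodeRegex.Match(html ?? string.Empty);
        return m.Success ? m.Groups["code"].Value.Trim() : fallback;
    }
}
```

Calling `EmployeeCodeParser.ExtractCode(msg)` on the response body would then replace the inline `Regex.Match` call. Building the `Regex` once as a static field also avoids re-parsing the pattern for every response.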

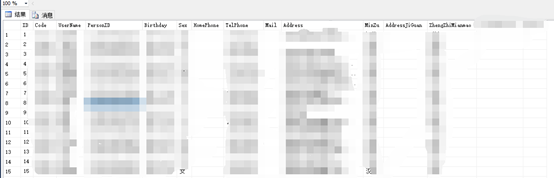

Saving to the database

A scheduled background task saves the collected information to the database.

- Task.Factory.StartNew(() => {

- while (true) {

- if (Business.queueSuccessEmployeeInfo.Count <= 0) {

- Thread.Sleep(1000); // idle wait; the original interval was lost in the source, 1000 ms is an assumed value

- continue;

- }

- List<Dictionary<string, string>> lst = new List<Dictionary<string, string>>();

- while (Business.queueSuccessEmployeeInfo.Count > 0) {

- if (!Business.queueSuccessEmployeeInfo.TryDequeue(out int ix)) break; // stop if nothing was dequeued, otherwise ix would default to 0

- lst.Add(Business.dicSznyEmployees[ix]);

- if (lst.Count >= 100) // batch size; the original value was lost in the source, 100 is an assumed value

- break;

- }

- DbAccess.AddTran(lst, "SznyEmployee",new List<string>() { "UserName", "PersonID" });

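- Thread.Sleep(1000); // pause between batches; the original interval was lost in the source, 1000 ms is an assumed value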

- }

- });
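The inner loop above drains the success queue in bounded batches. As a rough sketch, that step could be pulled out into a small helper. `QueueBatcher` and `DrainBatch` are hypothetical names, and the code assumes `queueSuccessEmployeeInfo` is a `ConcurrentQueue<int>`, which the post does not state explicitly.

```csharp
using System.Collections.Concurrent;
using System.Collections.Generic;

// Hypothetical helper; assumes the success queue is a ConcurrentQueue<T>.
public static class QueueBatcher
{
    // Dequeues at most maxBatch items and stops early once the queue is empty.
    public static List<T> DrainBatch<T>(ConcurrentQueue<T> queue, int maxBatch)
    {
        var batch = new List<T>();
        while (batch.Count < maxBatch && queue.TryDequeue(out T item))
        {
            batch.Add(item);
        }
        return batch;
    }
}
```

Relying on the return value of `TryDequeue` instead of checking `Count` first means an index is only added to the batch when an item was actually removed from the queue.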

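Summary

To reuse what had already been collected instead of fetching it again, the collector checks whether a record already exists in the database before requesting it. This is purely for efficiency: the WebForm site is painfully slow.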
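The post does not show how the existence check is done. One possible approach, sketched under assumptions, is to load the keys of the rows already stored once and skip those employees. `CollectionPlanner`, `PendingIndexes` and `KeyOf` are hypothetical names; the key columns `UserName` and `PersonID` are taken from the `DbAccess.AddTran` call in the save task.

```csharp
using System.Collections.Generic;

// Hypothetical helper; the in-memory key set is an assumption, not the author's method.
public static class CollectionPlanner
{
    // Builds a lookup key from the two columns passed to DbAccess.AddTran.
    public static string KeyOf(Dictionary<string, string> employee)
    {
        employee.TryGetValue("UserName", out string name);
        employee.TryGetValue("PersonID", out string personId);
        return $"{name}|{personId}";
    }

    // Returns the indexes of employees whose key is not among the stored keys.
    public static List<int> PendingIndexes(
        IReadOnlyList<Dictionary<string, string>> employees,
        ISet<string> storedKeys)
    {
        var pending = new List<int>();
        for (int i = 0; i < employees.Count; i++)
        {
            if (!storedKeys.Contains(KeyOf(employees[i])))
            {
                pending.Add(i);
            }
        }
        return pending;
    }
}
```

Only the returned indexes would then be queued for a request, so each slow WebForm page is fetched at most once per employee.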

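In the past I wrote asynchronous code purely to make better use of threads; in .NET it brought me no joy.

It is finally done, and the data has been saved to the database.

.NET's decline has its reasons: the site really is slow. .NET is now betting on the new experience of .NET Core.

I hate scraping WebForm sites. After writing crawlers for this long, I pray I never run into WebForm again.

Showing off the result to celebrate.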
