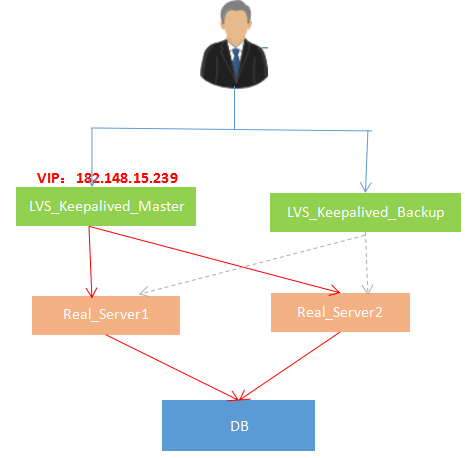

LVS + Keepalived: Master-Backup and Master-Master Architectures

The master-backup setup is configured first.

1) Disable SELinux and configure the firewall

vi /etc/sysconfig/selinux

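SELINUX=disabled

setenforce 0

Setting SELINUX=disabled in the file takes effect after a reboot. setenforce 0 switches SELinux to permissive mode immediately, for the current boot only.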
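The file change only applies after a reboot, so it can be worth reading the value back first. This is a minimal sketch. It assumes the usual KEY=value layout of /etc/sysconfig/selinux, and selinux_mode is a hypothetical helper name, not part of any SELinux tool. The live mode is reported separately by getenforce.

```shell
# Hypothetical helper: print the SELINUX= value set in a selinux config file.
# Defaults to /etc/sysconfig/selinux; pass another path to inspect a copy.
selinux_mode() {
    conf_file=${1:-/etc/sysconfig/selinux}
    # Only lines starting with SELINUX= match (SELINUXTYPE= does not); the last one wins.
    grep -E '^[[:space:]]*SELINUX=' "$conf_file" | tail -n 1 | cut -d= -f2 | tr -d '[:space:]'
}

# Usage: warn if the file does not disable SELinux
# [ "$(selinux_mode)" = "disabled" ] || echo "SELinux is not disabled in the config file"
```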

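182.148.15.0/24 is the servers' public network segment, and 192.168.1.0/24 is their private network segment.

Important: the multicast (VRRP) rules below are required. Without them the VIP will not fail over correctly when the MASTER or BACKUP fails, and will not move back once the failed node recovers.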

vim /etc/sysconfig/iptables

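# Allow multicast traffic to the VRRP group address 224.0.0.18

-A INPUT -s 182.148.15.0/24 -d 224.0.0.18 -j ACCEPT

-A INPUT -s 192.168.1.0/24 -d 224.0.0.18 -j ACCEPT

# Allow VRRP (Virtual Router Redundancy Protocol) traffic

-A INPUT -s 182.148.15.0/24 -p vrrp -j ACCEPT

-A INPUT -s 192.168.1.0/24 -p vrrp -j ACCEPT

# Allow new HTTP connections on port 80

-A INPUT -m state --state NEW -m tcp -p tcp --dport 80 -j ACCEPT

Then restart iptables:

/etc/init.d/iptables restart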
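The VRRP rules repeat the same pair of lines for each network segment, so they can be generated. This sketch assumes an iptables-save style file such as /etc/sysconfig/iptables, and vrrp_rules is a hypothetical function. VRRP advertisements are sent to the multicast group 224.0.0.18 using IP protocol number 112, which /etc/protocols normally maps to the name vrrp.

```shell
# Hypothetical helper: print iptables-save style rules that accept VRRP traffic
# (multicast group 224.0.0.18 and IP protocol "vrrp", number 112)
# from each network segment passed as an argument.
vrrp_rules() {
    for net in "$@"; do
        printf '%s\n' "-A INPUT -s $net -d 224.0.0.18 -j ACCEPT"
        printf '%s\n' "-A INPUT -s $net -p vrrp -j ACCEPT"
    done
}

# Example: the public and private segments used in this article
vrrp_rules 182.148.15.0/24 192.168.1.0/24
```

The generated lines still have to go above any catch-all REJECT rule in the file, because iptables evaluates INPUT rules in order.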

2) Install LVS (on both the master and the backup)

yum install -y libnl* popt*

modprobe -l |grep ipvs

cd /usr/local/src/

wget http://www.linuxvirtualserver.org/software/kernel-2.6/ipvsadm-1.26.tar.gz

ln -s /usr/src/kernels/$(uname -r)/ /usr/src/linux

tar -zxvf ipvsadm-1.26.tar.gz

cd ipvsadm-1.26

make && make install

Check the LVS cluster:

ipvsadm -L -n

IP Virtual Server version 1.2. (size=)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn
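Right after installation the table is empty. Once Keepalived has created the virtual services, the real servers behind one VIP can be pulled out of this listing, which helps with scripted checks. This sketch assumes the column layout shown above: one protocol line per virtual service, followed by its -> lines. real_servers_of is a hypothetical name.

```shell
# Hypothetical helper: read "ipvsadm -L -n" output on stdin and print the
# real servers (the "-> ADDR:PORT" lines) listed under one virtual service,
# given as "PROTO VIP:PORT", e.g. "TCP 182.148.15.239:80".
real_servers_of() {
    awk -v vs="$1" '
        # A TCP/UDP line starts a new virtual service; remember whether it is the one we want
        $1 == "TCP" || $1 == "UDP" { inside = (($1 " " $2) == vs); next }
        # Print real servers of the selected service, skipping the column header
        inside && $1 == "->" && $2 != "RemoteAddress:Port" { print $2 }
    '
}

# Usage on a director:
# ipvsadm -L -n | real_servers_of "TCP 182.148.15.239:80"
```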

3) Write the LVS real-server startup script (on both real servers)

vim /etc/init.d/realserver

#!/bin/sh

VIP=182.148.15.239

. /etc/rc.d/init.d/functions

case "$1" in

# start: suppress ARP replies/announcements for the VIP and bind the VIP to the loopback interface

start)

/sbin/ifconfig lo down

/sbin/ifconfig lo up

echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce

/sbin/sysctl -p >/dev/null 2>&1

/sbin/ifconfig lo:0 $VIP netmask 255.255.255.255 up #bind the VIP to the loopback alias with a host (/32) mask so this server accepts packets for the VIP forwarded by the Director Server

/sbin/route add -host $VIP dev lo:0

echo "LVS-DR real server starts successfully."

;;

stop)

/sbin/ifconfig lo:0 down

/sbin/route del $VIP >/dev/null 2>&1

echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce

echo "LVS-DR real server stopped."

;;

status)

isLoOn=`/sbin/ifconfig lo:0 | grep "$VIP"`

isRoOn=`/bin/netstat -rn | grep "$VIP"`

if [ "$isLoOn" = "" -a "$isRoOn" = "" ]; then

echo "LVS-DR real server is not running."

else

echo "LVS-DR real server is running."

fi

exit

;;

*)

echo "Usage: $0 {start|stop|status}"

exit

esac

exit
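The script relies on the legacy ifconfig/route tools. On systems that prefer iproute2, roughly equivalent commands can come from a dry-run helper that only prints them. Setting arp_ignore=1 makes the host answer ARP only for addresses on the receiving interface, and arp_announce=2 makes it use its best local address in ARP announcements, so the VIP held on lo is not advertised on the LAN. This is a sketch under assumptions:

- realserver_cmds is hypothetical.
- ip addr add with a label stands in for ifconfig lo:0.
- sysctl -w stands in for the echo into /proc.
- The explicit host route only mirrors the route add -host line of the script above.

```shell
# Hypothetical dry-run helper: print (do not run) iproute2/sysctl commands roughly
# equivalent to the realserver script. $1 = VIP, $2 = loopback label (e.g. lo:0),
# $3 = start|stop.
realserver_cmds() {
    vip=$1; label=$2; action=$3
    case "$action" in
    start)
        # arp_ignore=1 / arp_announce=2 keep the host from answering or announcing ARP for the VIP on lo
        for scope in lo all; do
            echo "sysctl -w net.ipv4.conf.$scope.arp_ignore=1"
            echo "sysctl -w net.ipv4.conf.$scope.arp_announce=2"
        done
        echo "ip addr add $vip/32 dev lo label $label"
        # Mirrors "route add -host $VIP dev lo:0" from the script above
        echo "ip route add $vip/32 dev lo"
        ;;
    stop)
        echo "ip route del $vip/32 dev lo"
        echo "ip addr del $vip/32 dev lo"
        for scope in lo all; do
            echo "sysctl -w net.ipv4.conf.$scope.arp_ignore=0"
            echo "sysctl -w net.ipv4.conf.$scope.arp_announce=0"
        done
        ;;
    *)
        echo "Usage: realserver_cmds VIP LABEL {start|stop}" >&2
        return 1
        ;;
    esac
}
```

Review the printed commands first. Piping them to sh as root, for example realserver_cmds 182.148.15.239 lo:0 start | sh, would then apply them.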

Add the LVS script to system startup.
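The >> redirection appends a new copy of the line every time the command runs, so repeating the setup leaves duplicate entries in rc.local. If the steps may be re-run, a hypothetical idempotent helper can guard the append. This is a minimal sketch, and add_line_once is not a system command.

```shell
# Hypothetical helper: append LINE to FILE only if FILE does not already
# contain exactly that line (fixed-string, whole-line match).
add_line_once() {
    line=$1; file=$2
    touch "$file"
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage:
# add_line_once "/etc/init.d/realserver start" /etc/rc.d/rc.local
```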

chmod +x /etc/init.d/realserver

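echo "/etc/init.d/realserver start" >> /etc/rc.d/rc.local

Start the LVS script. (Note: whenever these real servers reboot, make sure service realserver start has run, i.e. the VIP is bound to the loopback alias lo:0. Otherwise LVS forwarding fails.)

service realserver start

Check Real_Server1: the VIP is now bound to the loopback interface lo.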

ifconfig

eth0 Link encap:Ethernet HWaddr :::D1::

inet addr:182.148.15.233 Bcast:182.148.15.255 Mask:255.255.255.224

inet6 addr: fe80:::ff:fed1:/ Scope:Link

UP BROADCAST RUNNING MULTICAST MTU: Metric:

RX packets: errors: dropped: overruns: frame:

TX packets: errors: dropped: overruns: carrier:

collisions: txqueuelen:

RX bytes: (36.1 MiB) TX bytes: (21.8 GiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::/ Scope:Host

UP LOOPBACK RUNNING MTU: Metric:

RX packets: errors: dropped: overruns: frame:

TX packets: errors: dropped: overruns: carrier:

collisions: txqueuelen:

RX bytes: (0.0 b) TX bytes: (0.0 b)

lo:0 Link encap:Local Loopback

inet addr:182.148.15.239 Mask:255.255.255.255

UP LOOPBACK RUNNING MTU: Metric:

4) Install Keepalived (on both the master and the backup)

yum install -y openssl-devel

cd /usr/local/src/

wget http://www.keepalived.org/software/keepalived-1.3.5.tar.gz

tar -zvxf keepalived-1.3.5.tar.gz

cd keepalived-1.3.5

./configure --prefix=/usr/local/keepalived

make && make install

cp /usr/local/src/keepalived-1.3.5/keepalived/etc/init.d/keepalived /etc/rc.d/init.d/

cp /usr/local/keepalived/etc/sysconfig/keepalived /etc/sysconfig/

mkdir /etc/keepalived/

cp /usr/local/keepalived/etc/keepalived/keepalived.conf /etc/keepalived/

cp /usr/local/keepalived/sbin/keepalived /usr/sbin/

echo "/etc/init.d/keepalived start" >> /etc/rc.local

chmod +x /etc/rc.d/init.d/keepalived

chkconfig keepalived on

service keepalived start

5) Configuration

Enable IP forwarding (ip_forward) on both the master and the backup.
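Writing to /proc/sys/net/ipv4/ip_forward, as the command that follows does, only lasts until the next reboot. To make the setting persistent, put net.ipv4.ip_forward = 1 in /etc/sysctl.conf and load it with sysctl -p. Below is a minimal sketch of an idempotent edit. It assumes GNU sed (for -i) and a sysctl.conf-style KEY = VALUE file, and set_sysctl is a hypothetical helper.

```shell
# Hypothetical helper: set KEY = VALUE in a sysctl.conf-style FILE, replacing an
# existing uncommented "KEY = ..." line or appending one if the key is absent.
set_sysctl() {
    key=$1; value=$2; file=$3
    touch "$file"
    # Escape dots so "net.ipv4.ip_forward" matches literally in the regex
    key_re=$(printf '%s' "$key" | sed 's/\./\\./g')
    if grep -q "^[[:space:]]*$key_re[[:space:]]*=" "$file"; then
        sed -i "s|^[[:space:]]*$key_re[[:space:]]*=.*|$key = $value|" "$file"
    else
        printf '%s = %s\n' "$key" "$value" >> "$file"
    fi
}

# Usage (as root), then apply without rebooting:
# set_sysctl net.ipv4.ip_forward 1 /etc/sysctl.conf && sysctl -p
```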

echo "1" > /proc/sys/net/ipv4/ip_forward

keepalived.conf on the master:

vim /etc/keepalived/keepalived.conf

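! Configuration File for keepalived

global_defs {

router_id LVS_Master

}

vrrp_instance VI_1 {

state MASTER #initial state of this instance; the actual role is decided by priority. Use BACKUP on the backup node

interface eth0 #network interface that carries the VIP

virtual_router_id #VRID; nodes with the same VRID form one group, and the VRID determines the virtual MAC address

priority #priority; set to 90 on the other (backup) node

advert_int #VRRP advertisement interval in seconds

authentication {

auth_type PASS #authentication type: PASS or AH

auth_pass #authentication password

}

virtual_ipaddress {

182.148.15.239 #VIP

}

}

virtual_server 182.148.15.239 80 {

delay_loop #interval between health-check polls

lb_algo wrr #weighted round robin; LVS scheduling algorithms: rr|wrr|lc|wlc|lblc|sh|dh

lb_kind DR #LVS forwarding mode: NAT|DR|TUN. DR mode requires the load balancer to have a NIC on the same network segment as the real servers' physical NICs

#nat_mask 255.255.255.0

persistence_timeout #session persistence timeout

protocol TCP #protocol of the virtual service

## Real Server settings; 80 is the service port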

real_server 182.148.15.233 80 {

weight ##weight

TCP_CHECK {

connect_timeout

nb_get_retry

delay_before_retry

connect_port 80

}

}

real_server 182.148.15.238 80 {

weight

TCP_CHECK {

connect_timeout

nb_get_retry

delay_before_retry

connect_port 80

}

}

}

/etc/init.d/keepalived start
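The master and backup files share the same vrrp_instance and differ mainly in router_id, state and priority, so the block can be generated from a few parameters. This is a sketch, and render_vrrp_instance is hypothetical. The VIP, eth0 and the backup priority of 90 come from this article. The other example values (virtual_router_id 51, master priority 100, advert_int 1, password 1111) are placeholders, not settings taken from this article.

```shell
# Hypothetical generator: print a keepalived vrrp_instance block.
# $1=instance name, $2=state (MASTER|BACKUP), $3=virtual_router_id,
# $4=priority, $5=VIP, $6=auth_pass. "interface eth0" and "advert_int 1" are assumptions.
render_vrrp_instance() {
    cat <<EOF
vrrp_instance $1 {
    state $2
    interface eth0
    virtual_router_id $3
    priority $4
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass $6
    }
    virtual_ipaddress {
        $5
    }
}
EOF
}

# Example: master and backup blocks for this article's VIP (51 and 1111 are placeholders)
render_vrrp_instance VI_1 MASTER 51 100 182.148.15.239 1111
render_vrrp_instance VI_1 BACKUP 51 90 182.148.15.239 1111
```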

ip addr

: lo: <LOOPBACK,UP,LOWER_UP> mtu qdisc noqueue state UNKNOWN

link/loopback ::::: brd :::::

inet 127.0.0.1/ scope host lo

inet6 ::/ scope host

valid_lft forever preferred_lft forever

: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc pfifo_fast state UP qlen

link/ether ::::dc:b6 brd ff:ff:ff:ff:ff:ff

inet 182.148.15.237/ brd 182.148.15.255 scope global eth0

inet 182.148.15.239/ scope global eth0

inet6 fe80:::ff:fe68:dcb6/ scope link

valid_lft forever preferred_lft forever

ipvsadm -ln

IP Virtual Server version 1.2. (size=)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 182.148.15.239: wrr persistent

-> 182.148.15.233: Route

-> 182.148.15.238: Route

keepalived.conf on the backup:

vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id LVS_Backup

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id

priority 90

advert_int

authentication {

auth_type PASS

auth_pass

}

virtual_ipaddress {

182.148.15.239

}

}

virtual_server 182.148.15.239 80 {

delay_loop

lb_algo wrr

lb_kind DR

persistence_timeout

protocol TCP

real_server 182.148.15.233 80 {

weight

TCP_CHECK {

connect_timeout

nb_get_retry

delay_before_retry

connect_port 80

}

}

real_server 182.148.15.238 80 {

weight

TCP_CHECK {

connect_timeout

nb_get_retry

delay_before_retry

connect_port 80

}

}

}

/etc/init.d/keepalived start
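The two directors must agree on virtual_router_id, the authentication settings and the VIP, while the backup's priority must be lower than the master's. Below is a sketch for reading one directive out of a vrrp_instance so that two copies of keepalived.conf can be compared. vrrp_field is hypothetical, and it assumes one directive per line, as in the files above.

```shell
# Hypothetical helper: print the value of FIELD inside "vrrp_instance NAME { ... }"
# in a keepalived.conf file. $1 = file, $2 = instance name, $3 = directive.
vrrp_field() {
    awk -v inst="$2" -v field="$3" '
        $1 == "vrrp_instance" && $2 == inst { inside = 1; depth = 0 }
        inside {
            if ($1 == field) { print $2; exit }
            # Track braces so the search stops at the end of this instance
            depth += gsub(/[{]/, "{") - gsub(/[}]/, "}")
            if (depth <= 0) inside = 0
        }
    ' "$1"
}

# Usage with copies of both files on one machine:
# [ "$(vrrp_field master.conf VI_1 virtual_router_id)" = "$(vrrp_field backup.conf VI_1 virtual_router_id)" ] && echo "VRID matches"
```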

ip addr

On LVS_Keepalived_Backup, the VIP is held by LVS_Keepalived_Master by default. The VIP moves over to the backup only when LVS_Keepalived_Master fails.

: lo: <LOOPBACK,UP,LOWER_UP> mtu qdisc noqueue state UNKNOWN

link/loopback ::::: brd :::::

inet 127.0.0.1/ scope host lo

inet6 ::/ scope host

valid_lft forever preferred_lft forever

: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc pfifo_fast state UP qlen

link/ether :::7c:b8:f0 brd ff:ff:ff:ff:ff:ff

inet 182.148.15.236/ brd 182.148.15.255 scope global eth0

inet 182.148.15.239/ brd 182.148.15.255 scope global secondary eth0:

inet6 fe80:::ff:fe7c:b8f0/ scope link

valid_lft forever preferred_lft forever

ipvsadm -L -n

IP Virtual Server version 1.2. (size=)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 182.148.15.239: wrr persistent

-> 182.148.15.233: Route

-> 182.148.15.238: Route

6) Operations on the backend real servers

On both Real Servers, configure the two domains www.test1.com and www.test2.com. From both the master and the backup, both domains are accessible:

curl http://www.test1.com

this is page of Real_Server1:182.148.15.233 www.test1.com

curl http://www.test2.com

this is page of Real_Server2:182.148.15.238 www.test2.com

Stop nginx on Real Server 2. Requests for its domain are then sent to Real_Server1:

/usr/local/nginx/sbin/nginx -s stop

lsof -i:80

Access the two domains again from both LVS_Keepalived_Master and LVS_Keepalived_Backup. Both are now served by Real_Server1:

curl http://www.test1.com

this is page of Real_Server1:182.148.15.233 www.test1.com

curl http://www.test2.com

this is page of Real_Server1:182.148.15.233 www.test2.com

curl http://www.test1.com

this is page of Real_Server1:182.148.15.233 www.test1.com

curl http://www.test2.com

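this is page of Real_Server1:182.148.15.233 www.test2.com

In addition, configure iptables on both Real Servers so that port 80 only accepts traffic for the VIP. In DR mode, packets that reach a real server still carry the client's address as the source and the VIP as the destination. The rule therefore has to match the destination (-d), not the source. The directors' TCP_CHECK health checks connect to the real server's own address from the directors' addresses (182.148.15.237 and 182.148.15.236 here), so those must be allowed as well.

vim /etc/sysconfig/iptables

......

-A INPUT -d 182.148.15.239 -m state --state NEW -m tcp -p tcp --dport 80 -j ACCEPT

-A INPUT -s 182.148.15.237 -m state --state NEW -m tcp -p tcp --dport 80 -j ACCEPT

-A INPUT -s 182.148.15.236 -m state --state NEW -m tcp -p tcp --dport 80 -j ACCEPT

/etc/init.d/iptables restart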

7) Testing

Resolve the test domains www.test1.com and www.test2.com to the VIP 182.148.15.239. They can then be opened normally in a browser.

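1) Test the LVS function. The Keepalived LVS configuration above includes health checks: a failed backend server is automatically removed from the LVS cluster and added back once it recovers.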
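Health checks run every delay_loop seconds, so a removal or re-addition only shows up in ipvsadm after a short delay. Polling can replace watching the table by hand. This is a sketch: wait_for_output is a hypothetical helper, and the attempt count and interval in the usage line are arbitrary.

```shell
# Hypothetical helper: run COMMAND... repeatedly until its output contains PATTERN.
# $1 = pattern, $2 = max attempts, $3 = seconds between attempts, rest = command.
# Returns 0 on a match, 1 if every attempt failed.
wait_for_output() {
    pattern=$1; tries=$2; interval=$3
    shift 3
    attempt=1
    while :; do
        if "$@" 2>/dev/null | grep -q -- "$pattern"; then
            return 0
        fi
        [ "$attempt" -ge "$tries" ] && return 1
        attempt=$((attempt + 1))
        sleep "$interval"
    done
}

# Usage: wait up to about a minute for Real_Server2 to be re-added
# wait_for_output '182.148.15.238:80' 30 2 ipvsadm -L -n && echo "Real_Server2 is back"
```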

First, check the current LVS cluster. Port 80 on both backend Real Servers is running normally:

[root@LVS_Keepalived_Master ~]# ipvsadm -L -n

IP Virtual Server version 1.2. (size=)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 182.148.15.239: wrr persistent

-> 182.148.15.233: Route

-> 182.148.15.238: Route

Now shut down one Real Server, for example Real_Server2:

[root@Real_Server2 ~]# /usr/local/nginx/sbin/nginx -s stop

A moment later, check the LVS cluster again. Real_Server2 has been removed from it:

[root@LVS_Keepalived_Master ~]# ipvsadm -L -n

IP Virtual Server version 1.2. (size=)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 182.148.15.239: wrr persistent

-> 182.148.15.233: Route

Finally, start nginx (port 80) on Real_Server2 again, and LVS adds it back to the cluster:

[root@Real_Server2 ~]# /usr/local/nginx/sbin/nginx

[root@LVS_Keepalived_Master ~]# ipvsadm -L -n

IP Virtual Server version 1.2. (size=)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 182.148.15.239: wrr persistent

-> 182.148.15.233: Route

-> 182.148.15.238: Route

Throughout the tests above, access to www.test1.com and www.test2.com was not affected.

2) Test Keepalived heartbeat high availability

By default, the VIP is held by LVS_Keepalived_Master:

[root@LVS_Keepalived_Master ~]# ip addr

: lo: <LOOPBACK,UP,LOWER_UP> mtu qdisc noqueue state UNKNOWN

link/loopback ::::: brd :::::

inet 127.0.0.1/ scope host lo

inet6 ::/ scope host

valid_lft forever preferred_lft forever

: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc pfifo_fast state UP qlen

link/ether ::::dc:b6 brd ff:ff:ff:ff:ff:ff

inet 182.148.15.237/ brd 182.148.15.255 scope global eth0

inet 182.148.15.239/ scope global eth0

inet 182.148.15.239/ brd 182.148.15.255 scope global secondary eth0:

inet6 fe80:::ff:fe68:dcb6/ scope link

valid_lft forever preferred_lft forever

Then stop keepalived on LVS_Keepalived_Master. The VIP moves to LVS_Keepalived_Backup.

[root@LVS_Keepalived_Master ~]# /etc/init.d/keepalived stop

Stopping keepalived: [ OK ]

[root@LVS_Keepalived_Master ~]# ip addr

: lo: <LOOPBACK,UP,LOWER_UP> mtu qdisc noqueue state UNKNOWN

link/loopback ::::: brd :::::

inet 127.0.0.1/ scope host lo

inet6 ::/ scope host

valid_lft forever preferred_lft forever

: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc pfifo_fast state UP qlen

link/ether ::::dc:b6 brd ff:ff:ff:ff:ff:ff

inet 182.148.15.237/ brd 182.148.15.255 scope global eth0

inet 182.148.15.239/ brd 182.148.15.255 scope global secondary eth0:

inet6 fe80:::ff:fe68:dcb6/ scope link

valid_lft forever preferred_lft forever

Check the system log on LVS_Keepalived_Master for messages about the VIP moving:

[root@LVS_Keepalived_Master ~]# tail -f /var/log/messages

.............

May :: Haproxy_Keepalived_Master Keepalived_healthcheckers[]: TCP connection to [182.148.15.233]: failed.

May :: Haproxy_Keepalived_Master Keepalived_healthcheckers[]: TCP connection to [182.148.15.233]: failed.

May :: Haproxy_Keepalived_Master Keepalived_healthcheckers[]: Check on service [182.148.15.233]: failed after retry.

May :: Haproxy_Keepalived_Master Keepalived_healthcheckers[]: Removing service [182.148.15.233]: from VS [182.148.15.239]:

On LVS_Keepalived_Backup, the VIP is now present:

[root@LVS_Keepalived_Backup ~]# ip addr

: lo: <LOOPBACK,UP,LOWER_UP> mtu qdisc noqueue state UNKNOWN

link/loopback ::::: brd :::::

inet 127.0.0.1/ scope host lo

inet6 ::/ scope host

valid_lft forever preferred_lft forever

: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc pfifo_fast state UP qlen

link/ether :::7c:b8:f0 brd ff:ff:ff:ff:ff:ff

inet 182.148.15.236/ brd 182.148.15.255 scope global eth0

inet 182.148.15.239/ scope global eth0

inet 182.148.15.239/ brd 182.148.15.255 scope global secondary eth0:

inet6 fe80:::ff:fe7c:b8f0/ scope link

valid_lft forever preferred_lft forever

Next, start keepalived on LVS_Keepalived_Master again. The VIP moves back.

[root@LVS_Keepalived_Master ~]# /etc/init.d/keepalived start

Starting keepalived: [ OK ]

[root@LVS_Keepalived_Master ~]# ip addr

: lo: <LOOPBACK,UP,LOWER_UP> mtu qdisc noqueue state UNKNOWN

link/loopback ::::: brd :::::

inet 127.0.0.1/ scope host lo

inet6 ::/ scope host

valid_lft forever preferred_lft forever

: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc pfifo_fast state UP qlen

link/ether ::::dc:b6 brd ff:ff:ff:ff:ff:ff

inet 182.148.15.237/ brd 182.148.15.255 scope global eth0

inet 182.148.15.239/ scope global eth0

inet 182.148.15.239/ brd 182.148.15.255 scope global secondary eth0:

inet6 fe80:::ff:fe68:dcb6/ scope link

valid_lft forever preferred_lft forever

The system log on LVS_Keepalived_Master shows the VIP moving back:

[root@LVS_Keepalived_Master ~]# tail -f /var/log/messages

.............

May :: Haproxy_Keepalived_Master Keepalived_vrrp[]: Sending gratuitous ARP on eth0 for 182.148.15.239

May :: Haproxy_Keepalived_Master Keepalived_vrrp[]: VRRP_Instance(VI_1) Sending/queueing gratuitous ARPs on eth0 for 182.148.15.239

May :: Haproxy_Keepalived_Master Keepalived_vrrp[]: Sending gratuitous ARP on eth0 for 182.148.15.239

May :: Haproxy_Keepalived_Master Keepalived_vrrp[]: Sending gratuitous ARP on eth0 for 182.148.15.239

May :: Haproxy_Keepalived_Master Keepalived_vrrp[]: Sending gratuitous ARP on eth0 for 182.148.15.239

May :: Haproxy_Keepalived_Master Keepalived_vrrp[]: Sending gratuitous ARP on eth0 for 182.148.15.239
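A script can also check where the VIP currently lives, instead of reading ip addr output by eye. This sketch reads ip -4 addr show output on stdin and ignores the /prefix after each address when comparing. has_vip is hypothetical.

```shell
# Hypothetical helper: succeed if the given IPv4 address appears as an "inet"
# address in "ip -4 addr show" output read from stdin.
has_vip() {
    awk -v vip="$1" '
        $1 == "inet" { split($2, a, "/"); if (a[1] == vip) found = 1 }
        END { exit (found ? 0 : 1) }
    '
}

# Usage on either director:
# if ip -4 addr show dev eth0 | has_vip 182.148.15.239; then echo "VIP is here"; fi
```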

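Deploying an LVS + Keepalived master-master (dual-active) high-availability environment

Compared with the master-backup setup, the master-master setup differs only in the following:

1) The LVS load-balancing layer needs two VIPs, for example 182.148.15.239 and 182.148.15.235.

2) Both VIPs must be bound to the loopback interface lo on the backend real servers.

3) keepalived.conf also differs from the master-backup mode.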
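Item 2 means each real server carries one loopback alias per VIP. The mapping can be sketched as a dry run that prints the same ifconfig/route calls the realserver scripts use, with VIP number N placed on alias lo:N. bind_vips_cmds and the lo:N numbering rule are assumptions for illustration.

```shell
# Hypothetical dry-run helper: print the ifconfig/route commands that bind the
# N-th VIP given (counting from 0) to the loopback alias lo:N.
bind_vips_cmds() {
    n=0
    for vip in "$@"; do
        echo "/sbin/ifconfig lo:$n $vip netmask 255.255.255.255 up"
        echo "/sbin/route add -host $vip dev lo:$n"
        n=$((n + 1))
    done
}

# Example for the two VIPs of the master-master setup
bind_vips_cmds 182.148.15.239 182.148.15.235
```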

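1) Write the LVS startup scripts on both Real_Server1 and Real_Server2. The realserver scripts are identical on both machines. Each real server binds two VIPs to the loopback interface (on lo:0 and lo:1 respectively), so two startup scripts are needed:

[root@Real_Server1 ~]# vim /etc/init.d/realserver1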

#!/bin/sh

VIP=182.148.15.239

. /etc/rc.d/init.d/functions

case "$1" in

start)

/sbin/ifconfig lo down

/sbin/ifconfig lo up

echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce

/sbin/sysctl -p >/dev/null 2>&1

/sbin/ifconfig lo:0 $VIP netmask 255.255.255.255 up

/sbin/route add -host $VIP dev lo:0

echo "LVS-DR real server starts successfully."

;;

stop)

/sbin/ifconfig lo:0 down

/sbin/route del $VIP >/dev/null 2>&1

echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce

echo "LVS-DR real server stopped."

;;

status)

isLoOn=`/sbin/ifconfig lo:0 | grep "$VIP"`

isRoOn=`/bin/netstat -rn | grep "$VIP"`

if [ "$isLoOn" = "" -a "$isRoOn" = "" ]; then

echo "LVS-DR real server is not running."

else

echo "LVS-DR real server is running."

fi

exit

;;

*)

echo "Usage: $0 {start|stop|status}"

exit

esac

exit

[root@Real_Server1 ~]# vim /etc/init.d/realserver2

#!/bin/sh

VIP=182.148.15.235

. /etc/rc.d/init.d/functions

case "$1" in

start)

/sbin/ifconfig lo down

/sbin/ifconfig lo up

echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce

/sbin/sysctl -p >/dev/null 2>&1

/sbin/ifconfig lo:1 $VIP netmask 255.255.255.255 up

/sbin/route add -host $VIP dev lo:1

echo "LVS-DR real server starts successfully."

;;

stop)

/sbin/ifconfig lo:1 down

/sbin/route del $VIP >/dev/null 2>&1

echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce

echo "LVS-DR real server stopped."

;;

status)

isLoOn=`/sbin/ifconfig lo:1 | grep "$VIP"`

isRoOn=`/bin/netstat -rn | grep "$VIP"`

if [ "$isLoOn" = "" -a "$isRoOn" = "" ]; then

echo "LVS-DR real server is not running."

else

echo "LVS-DR real server is running."

fi

exit

;;

*)

echo "Usage: $0 {start|stop|status}"

exit

esac

exit

Add the LVS scripts to system startup:

[root@Real_Server1 ~]# chmod +x /etc/init.d/realserver1

[root@Real_Server1 ~]# chmod +x /etc/init.d/realserver2

[root@Real_Server1 ~]# echo "/etc/init.d/realserver1 start" >> /etc/rc.d/rc.local

[root@Real_Server1 ~]# echo "/etc/init.d/realserver2 start" >> /etc/rc.d/rc.local

Start the LVS scripts:

[root@Real_Server1 ~]# service realserver1 start

LVS-DR real server starts successfully.

[root@Real_Server1 ~]# service realserver2 start

LVS-DR real server starts successfully.

Check Real_Server1: both VIPs are now bound to the loopback interface lo.

[root@Real_Server1 ~]# ifconfig

eth0 Link encap:Ethernet HWaddr :::D1::

inet addr:182.148.15.233 Bcast:182.148.15.255 Mask:255.255.255.224

inet6 addr: fe80:::ff:fed1:/ Scope:Link

UP BROADCAST RUNNING MULTICAST MTU: Metric:

RX packets: errors: dropped: overruns: frame:

TX packets: errors: dropped: overruns: carrier:

collisions: txqueuelen:

RX bytes: (36.1 MiB) TX bytes: (21.8 GiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::/ Scope:Host

UP LOOPBACK RUNNING MTU: Metric:

RX packets: errors: dropped: overruns: frame:

TX packets: errors: dropped: overruns: carrier:

collisions: txqueuelen:

RX bytes: (0.0 b) TX bytes: (0.0 b)

lo:0 Link encap:Local Loopback

inet addr:182.148.15.239 Mask:255.255.255.255

UP LOOPBACK RUNNING MTU: Metric:

lo:1 Link encap:Local Loopback

inet addr:182.148.15.235 Mask:255.255.255.255

UP LOOPBACK RUNNING MTU: Metric:

2) keepalived.conf configuration

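keepalived.conf on LVS_Keepalived_Master. Here VI_1 (VIP 182.148.15.239) starts as MASTER and VI_2 (VIP 182.148.15.235) starts as BACKUP. The backup director's file mirrors this, so in normal operation each director holds one VIP. The two instances need different virtual_router_id values.

First enable ip_forward routing:

[root@LVS_Keepalived_Master ~]# echo "1" > /proc/sys/net/ipv4/ip_forward

[root@LVS_Keepalived_Master ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id LVS_Master

}

vrrp_instance VI_1 {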

state MASTER

interface eth0

virtual_router_id

priority

advert_int

authentication {

auth_type PASS

auth_pass

}

virtual_ipaddress {

182.148.15.239

}

}

vrrp_instance VI_2 {

state BACKUP

interface eth0

virtual_router_id

priority

advert_int

authentication {

auth_type PASS

auth_pass

}

virtual_ipaddress {

182.148.15.235

}

}

virtual_server 182.148.15.239 80 {

delay_loop

lb_algo wrr

lb_kind DR

#nat_mask 255.255.255.0

persistence_timeout

protocol TCP

real_server 182.148.15.233 80 {

weight

TCP_CHECK {

connect_timeout

nb_get_retry

delay_before_retry

connect_port 80

}

}

real_server 182.148.15.238 80 {

weight

TCP_CHECK {

connect_timeout

nb_get_retry

delay_before_retry

connect_port 80

}

}

}

virtual_server 182.148.15.235 80 {

delay_loop

lb_algo wrr

lb_kind DR

#nat_mask 255.255.255.0

persistence_timeout

protocol TCP

real_server 182.148.15.233 80 {

weight

TCP_CHECK {

connect_timeout

nb_get_retry

delay_before_retry

connect_port 80

}

}

real_server 182.148.15.238 80 {

weight

TCP_CHECK {

connect_timeout

nb_get_retry

delay_before_retry

connect_port 80

}

}

}

keepalived.conf on LVS_Keepalived_Backup:

[root@LVS_Keepalived_Backup ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id LVS_Backup

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id

priority

advert_int

authentication {

auth_type PASS

auth_pass

}

virtual_ipaddress {

182.148.15.239

}

}

vrrp_instance VI_2 {

state MASTER

interface eth0

virtual_router_id

priority

advert_int

authentication {

auth_type PASS

auth_pass

}

virtual_ipaddress {

182.148.15.235

}

}

virtual_server 182.148.15.239 80 {

delay_loop

lb_algo wrr

lb_kind DR

#nat_mask 255.255.255.0

persistence_timeout

protocol TCP

real_server 182.148.15.233 80 {

weight

TCP_CHECK {

connect_timeout

nb_get_retry

delay_before_retry

connect_port 80

}

}

real_server 182.148.15.238 80 {

weight

TCP_CHECK {

connect_timeout

nb_get_retry

delay_before_retry

connect_port 80

}

}

}

virtual_server 182.148.15.235 80 {

delay_loop

lb_algo wrr

lb_kind DR

#nat_mask 255.255.255.0

persistence_timeout

protocol TCP

real_server 182.148.15.233 80 {

weight

TCP_CHECK {

connect_timeout

nb_get_retry

delay_before_retry

connect_port 80

}

}

real_server 182.148.15.238 80 {

weight

TCP_CHECK {

connect_timeout

nb_get_retry

delay_before_retry

connect_port 80

}

}

}

The remaining verification steps are the same as in the master-backup mode above.
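In the master-master layout, each vrrp_instance should start as MASTER on one director and as BACKUP on the other. This sketch flags any instance declared with the same state in both files. It assumes local copies of the two keepalived.conf files, and instance_states and check_mirrored are hypothetical names.

```shell
# Hypothetical helper: print "NAME STATE" for each vrrp_instance in a keepalived.conf.
instance_states() {
    awk '
        $1 == "vrrp_instance" { name = $2 }
        $1 == "state" && name != "" { print name, $2; name = "" }
    ' "$1"
}

# Hypothetical check: print instances whose state is the same in both files.
# No output means no instance has the same state on both directors.
check_mirrored() {
    { instance_states "$1"; instance_states "$2"; } | sort | uniq -d
}

# Usage with copies of both files:
# check_mirrored master.conf backup.conf
```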
