LVS+Keepalived+Nginx+Tomcat高可用负载均衡集群配置(DR模式,一个VIP,多个端口)

一.概述

LVS作用:实现负载均衡

Keepalived作用:监控集群系统中各个服务节点的状态,HA cluster。

配置LVS有两种方式:

1. 通过ipvsadm命令行方式配置

2. 通过Redhat提供的工具piranha来配置LVS

软件下载:

ipvsadm下载地址:

http://www.linuxvirtualserver.org/software/kernel-2.6/

Keepalived下载地址:

http://www.keepalived.org/software/

安装包版本:

ipvsadm-1.24.tar.gz

keepalived-1.2.2.tar.gz

操作系统版本:

Red Hat Enterprise Linux Server release 6.4 (Santiago)

二.服务器规划:

|

服务器 |

IP地址 |

网关 |

虚拟设备名 |

虚拟IP(VIP) |

部署应用 |

|

Master Director Server |

10.50.13.34 |

10.50.13.1 |

eth0:0 |

10.50.13.11 |

LVS+keepalived |

|

Backup Director Server |

10.50.13.35 |

10.50.13.1 |

eth0:0 |

10.50.13.11 |

LVS+keepalived |

|

Real server1 |

10.50.13.36 |

10.50.13.1 |

lo:0 |

10.50.13.11 |

Nginx+tomcat |

|

Real server2 |

10.50.13.37 |

10.50.13.1 |

lo:0 |

10.50.13.11 |

Nginx+tomcat |

三.LVS安装配置

1.通过ipvsadm软件配置LVS,在Director Serve上安装IPVS管理软件

通过如下命令检查kernel是否已经支持LVS的ipvs模块:

$ modprobe -l |grep ipvs

kernel/net/netfilter/ipvs/ip_vs.ko

kernel/net/netfilter/ipvs/ip_vs_rr.ko

kernel/net/netfilter/ipvs/ip_vs_wrr.ko

kernel/net/netfilter/ipvs/ip_vs_lc.ko

kernel/net/netfilter/ipvs/ip_vs_wlc.ko

kernel/net/netfilter/ipvs/ip_vs_lblc.ko

kernel/net/netfilter/ipvs/ip_vs_lblcr.ko

kernel/net/netfilter/ipvs/ip_vs_dh.ko

kernel/net/netfilter/ipvs/ip_vs_sh.ko

kernel/net/netfilter/ipvs/ip_vs_sed.ko

kernel/net/netfilter/ipvs/ip_vs_nq.ko

kernel/net/netfilter/ipvs/ip_vs_ftp.ko

如果有类似上面的输出,表明系统内核已经默认支持了IPVS模块。接着就可以安装IPVS管理软件了。

2. 安装ipvsadm

$ tar zxf ipvsadm-1.24.tar.gz

$ cd ipvsadm-1.24

$ sudo make

$ sudo make install

make时可能会遇到报错,由于编译程序找不到对应内核的原因,执行以下命令后继续编译

$ ln -s /usr/src/kernels/2.6.18-164.el5-x86_64/ /usr/src/linux

3. ipvsadm配置

为了管理和配置的方便,将ipvsadm配置写成启停脚本:

$ cat lvsdr

#!/bin/bash

# description: Start LVS of Director server

VIP=10.50.13.11

RIP=`echo 10.50.13.{36..37}`

. /etc/rc.d/init.d/functions

case "$1" in

start)

echo " start LVS of Director Server"

# set the Virtual IP Address and sysctl parameter

/sbin/ifconfig eth0:0 ${VIP} broadcast ${VIP} netmask 255.255.255.255 up

/sbin/route add -host ${VIP} dev eth0:0

#echo "1" >/proc/sys/net/ipv4/ip_forward

#Clear IPVS table

/sbin/ipvsadm -C

#set LVS

/sbin/ipvsadm -A -t ${VIP}:80 -s rr -p 600

/sbin/ipvsadm -A -t ${VIP}:90 -s rr -p 600

for rip in ${RIP};do

/sbin/ipvsadm -a -t ${VIP}:80 -r ${rip}:80 -g

/sbin/ipvsadm -a -t ${VIP}:90 -r ${rip}:90 -g

done

#Run LVS

/sbin/ipvsadm

;;

stop)

echo "close LVS Directorserver"

#echo "0" >/proc/sys/net/ipv4/ip_forward

/sbin/ipvsadm -C

/sbin/ifconfig eth0:0 down

;;

*)

echo "Usage: $0 {start|stop}"

exit 1

esac

然后把文件放到/etc/init.d下,执行:

$ chomd +x /etc/init.d/lvsdr

$ service lvsdr start

4. Real server 配置

在lvs的DR和TUN模式下,用户的访问请求到达真实服务器后,是直接返回给用户的,而不再经过前端的Director Server,因此,就需要在每个Real server节点上增加虚拟的VIP地址,这样数据才能直接返回给用户,增加VIP地址的操作可以通过创建脚本的方式来实现,创建文件/etc /init.d/lvsrs,脚本内容如下:

$ cat /etc/init.d/lvsrs

#!/bin/bash

VIP=10.50.13.11

/sbin/ifconfig lo:0 ${VIP} broadcast ${VIP} netmask 255.255.255.255 up

/sbin/route add -host ${VIP} dev lo:0

echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce

sysctl -p

四.Keepalived安装配置

以下是通过具有sudo权限的普通用户安装的。

tar zxf keepalived-1.2.2.tar.gz

cd keepalived-1.2.2

./configure --sysconf=/etc --with-kernel-dir=/usr/src/kernels/2.6.32-358.el6.x86_64

sudo make

sudo make install

sudo cp /usr/local/sbin/keepalived /sbin/keepalived

配置keepalived

Keepavlived的配置文件在/etc/keepalived/目录下,编辑后的keepavlived的MASTER配置文件如下:

$ cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

Eivll0m@xxx.com

}

notification_email_from keepalived@localhost

smtp_server 10.50.13.34

smtp_connect_timeout 30

router_id LVS_MASTER

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.50.13.11

}

notify_master "/etc/init.d/lvsdr start"

notify_backup "/etc/init.d/lvsdr stop"

}

virtual_server 10.50.13.11 80 {

delay_loop 2

lb_algo rr

lb_kind DR

#nat_mask 255.255.255.0

persistence_timeout 50

protocol TCP

real_server 10.50.13.36 80 {

weight 1

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 10.50.13.37 80 {

weight 1

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

virtual_server 10.50.13.11 90 {

delay_loop 2

lb_algo rr

lb_kind DR

#nat_mask 255.255.255.0

persistence_timeout 50

protocol TCP

real_server 10.50.13.36 90 {

weight 1

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 10.50.13.37 90 {

weight 1

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

BACKUP配置文件如下:

$ cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

Eivll0m@xxx.com

}

notification_email_from keepalived@localhost

smtp_server 10.50.13.35

smtp_connect_timeout 30

router_id LVS_BACKUP

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 51

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.50.13.11

}

notify_master "/etc/init.d/lvsdr start"

notify_backup "/etc/init.d/lvsdr stop"

}

virtual_server 10.50.13.11 80 {

delay_loop 2

lb_algo rr

lb_kind DR

#nat_mask 255.255.255.0

persistence_timeout 50

protocol TCP

real_server 10.50.13.36 80 {

weight 1

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 10.50.13.37 80 {

weight 1

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

virtual_server 10.50.13.11 90 {

delay_loop 2

lb_algo rr

lb_kind DR

#nat_mask 255.255.255.0

persistence_timeout 50

protocol TCP

real_server 10.50.13.36 90 {

weight 1

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 10.50.13.37 90 {

weight 1

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

keepalived启动方法:

/etc/init.d/keepalived start

五.nginx、tomcat安装配置

1.安装nginx

$ tar zxf nginx-1.6.1.tar.gz

$ cd nginx-1.6.1

$ ./configure --prefix=/app/nginx --with-http_ssl_module --with-http_realip_module --add-module=/app/soft/nginx-sticky

$ make

$ make install

2.nginx配置:

upstream test_80 {

sticky;

server 10.50.13.36:8080;

server 10.50.13.37:8080;

}

upstream test_90 {

sticky;

server 10.50.13.36:8090;

server 10.50.13.37:8090;

}

server {

listen 80;

server_name localhost;

.....

location ^~ /test/ {

proxy_pass http://test_80;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}

server {

listen 90;

server_name localhost;

.....

location ^~ /test/ {

proxy_pass http://test_80;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}

3.安装tomcat(简单,过程略)

10.50.13.36/37每台机器各安装两个tomcat,端口分别启用8080和8090

安装路径:/app/tomcat、/app/tomcat2

4.给tomcat准备测试文件

10.50.13.36:

$ mkdir /app/tomcat/webapps/test

$ cd /app/tomcat/webapps/test

$ vi index.jsp #添加如下内容

<%@ page language="java" %>

<html>

<head><title>10.50.13.36_tomcat1</title></head>

<body>

<h1><font color="blue">10.50.13.36_tomcat1 </font></h1>

<table align="centre" border="1">

<tr>

<td>Session ID</td>

<% session.setAttribute("abc","abc"); %>

<td><%= session.getId() %></td>

</tr>

<tr>

<td>Created on</td>

<td><%= session.getCreationTime() %></td>

</tr>

</table>

</body>

</html>

$ mkdir /app/tomcat/webapps/test

$ cd /app/tomcat/webapps/test

$ vi index.jsp #添加如下内容

<%@ page language="java" %>

<html>

<head><title>10.50.13.36_tomcat2</title></head>

<body>

<h1><font color="blue">10.50.13.36_tomcat2 </font></h1>

<table align="centre" border="1">

<tr>

<td>Session ID</td>

<% session.setAttribute("abc","abc"); %>

<td><%= session.getId() %></td>

</tr>

<tr>

<td>Created on</td>

<td><%= session.getCreationTime() %></td>

</tr>

</table>

</body>

</html>

10.50.13.37:

$ mkdir /app/tomcat/webapps/test

$ cd /app/tomcat/webapps/test

$ vi index.jsp #添加如下内容

<%@ page language="java" %>

<html>

<head><title>10.50.13.37_tomcat1</title></head>

<body>

<h1><font color="blue">10.50.13.37_tomcat1 </font></h1>

<table align="centre" border="1">

<tr>

<td>Session ID</td>

<% session.setAttribute("abc","abc"); %>

<td><%= session.getId() %></td>

</tr>

<tr>

<td>Created on</td>

<td><%= session.getCreationTime() %></td>

</tr>

</table>

</body>

</html>

$ mkdir /app/tomcat/webapps/test

$ cd /app/tomcat/webapps/test

$ vi index.jsp #添加如下内容

<%@ page language="java" %>

<html>

<head><title>10.50.13.37_tomcat2</title></head>

<body>

<h1><font color="blue">10.50.13.37_tomcat7 </font></h1>

<table align="centre" border="1">

<tr>

<td>Session ID</td>

<% session.setAttribute("abc","abc"); %>

<td><%= session.getId() %></td>

</tr>

<tr>

<td>Created on</td>

<td><%= session.getCreationTime() %></td>

</tr>

</table>

</body>

</html>

六.测试

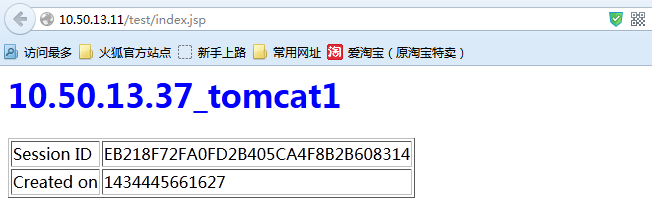

通过浏览器访问http://10.50.13.11/test/index.jsp

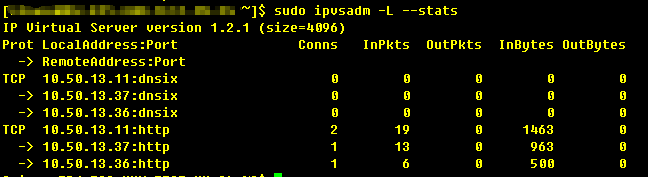

查看LVS统计信息:

通过curl访问http://10.50.13.11/test/index.jsp

查看LVS统计信息:

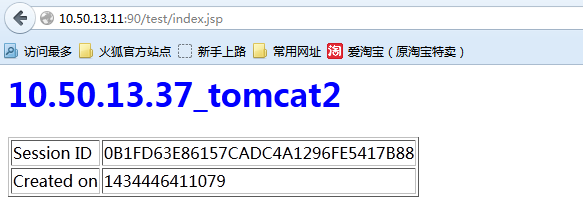

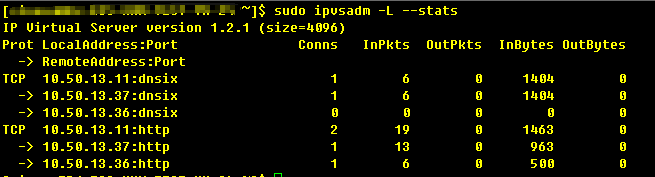

通过浏览器访问http://10.50.13.11:90/test/index.jsp

查看LVS统计信息:可以看到10.50.13.11:dnsix有变化了。

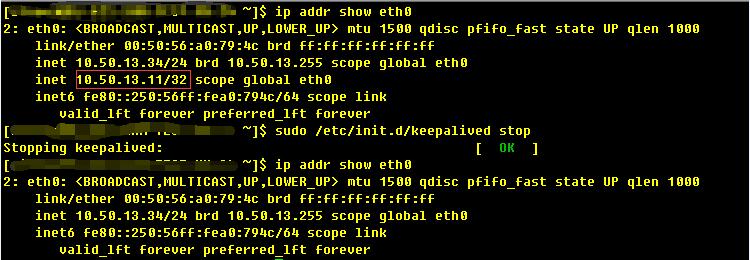

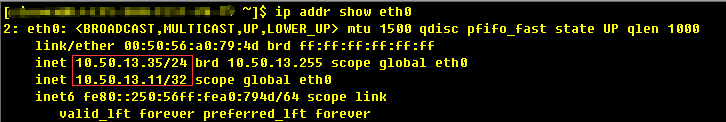

keepalived测试,停掉master上的keepalived服务,看看VIP是否会漂移到BACKUP上面

通过以上测试发现,BACKUP已正常接管服务了。

LVS+Keepalived+Nginx+Tomcat高可用负载均衡集群配置(DR模式,一个VIP,多个端口)的更多相关文章

- LVS+Keepalived搭建MyCAT高可用负载均衡集群

LVS+Keepalived 介绍 LVS LVS是Linux Virtual Server的简写,意即Linux虚拟服务器,是一个虚拟的服务器集群系统.本项目在1998年5月由章文嵩博士成立,是中国 ...

- Keepalived+Nginx实现高可用负载均衡集群

一 环境介绍 1.操作系统CentOS Linux release 7.2.1511 (Core) 2.服务keepalived+nginx双主高可用负载均衡集群及LAMP应用keepalived-1 ...

- Haproxy+Keepalived搭建Weblogic高可用负载均衡集群

配置环境说明: KVM虚拟机配置 用途 数量 IP地址 机器名 虚拟IP地址 硬件 内存3G 系统盘20G cpu 4核 Haproxy keepalived 2台 192.168.1.10 192 ...

- [转]搭建Keepalived+Nginx+Tomcat高可用负载均衡架构

[原文]https://www.toutiao.com/i6591714650205716996/ 一.概述 初期的互联网企业由于业务量较小,所以一般单机部署,实现单点访问即可满足业务的需求,这也是最 ...

- Keepalived + Nginx + Tomcat 高可用负载均衡架构

环境: 1.centos7.3 2.虚拟ip:192.168.217.200 3.192.168.217.11.192.168.217.12上分别部署Nginx Keepalived Tomcat并进 ...

- lvs + keepalived + nginx + tomcat高可用负载反向代理服务器配置(一) 简介

一. 为什么这样构架 1. 系统高可用性 2. 系统可扩展性 3. 负载均衡能力 LVS+keepalived能很好的实现以上的要求,keepalived提供健康检查,故障转移,提高系统的可用性!采用 ...

- lvs + keepalived + nginx + tomcat高可用负载反向代理服务器配置(二) LVS+Keepalived

一.安装ipvs sudo apt-get install ipvsadm 二.安装keepalived sudo apt-get install keepalived 三.创建keepalived. ...

- lvs + keepalived + nginx + tomcat高可用负载反向代理服务器配置(三) Nginx

1. 安装 sudo apt-get install nginx 2. 配置nginx sudo gedit /etc/nginx/nginx.conf user www-data; worker_ ...

- docker下用keepalived+Haproxy实现高可用负载均衡集群

启动keepalived后宿主机无法ping通用keepalived,报错: [root@localhost ~]# ping 172.18.0.15 PING () bytes of data. F ...

随机推荐

- iOS获取健康步数从加速计到healthkit

计步模块接触了一年多,最近又改需求了,所以又换了全新的统计步数的方法,整理一下吧. 在iPhone5s以前机型因为没有陀螺仪的存在,所以需要用加速度传感器来采集加速度值信息,然后根据震动幅度让其加入踩 ...

- STL的基本使用之关联容器:map和multiMap的基本使用

STL的基本使用之关联容器:map和multiMap的基本使用 简介 map 和 multimap 内部也都是使用红黑树来实现,他们存储的是键值对,并且会自动将元素的key进行排序.两者不同在于map ...

- 关于EF查询表里的部分字段

这个在项目中用到了,在网上找了一下才找到,留下来以后自已使用. List<UniversalInfo> list =new List<UniversalInfo>(); lis ...

- C# Dll动态链接库

新建一个类库. 2 编写一个简单的类库实例,例如:DllTest在默认名为:calss1.cs里编写代码一下是一个简单的:在控制台显示 “你以成功调用了动态连接!”sing System;us ...

- X-Plane飞行模拟器购买安装

要玩起X-Plane第一个步骤当然是购买了,要购买其实非常简单,只需要一张能够支持MasterCard或者其他外币结算的信用卡,在http://www.x-plane.com/官网上购买即可,比逛淘宝 ...

- JS判断浏览器是否支持某一个CSS3属性的方法

var div = document.createElement('div'); console.log(div.style.transition); //如果支持的话, 会输出 "&quo ...

- 『重构--改善既有代码的设计』读书笔记----Move Method

明确函数所在类的位置是很重要的.这样可以避免你的类与别的类有太多耦合.也会让你的类的内聚性变得更加牢固,让你的整个系统变得更加整洁.简单来说,如果在你的程序中,某个类的函数在使用的过程中,更多的是在和 ...

- Java compile时,提示 DeadCode的原因

在工程编译时,编译器发现部分代码是无用代码,则会提示:某一行代码是DeadCode.今天compile工程的时候发现某一个循环出现这个问题,如下: public void mouseOver(fina ...

- Array.prototype.map()

mdn上解释的特别详细 概述 map() 方法返回一个由原数组中的每个元素调用一个指定方法后的返回值组成的新数组. 语法 array.map(callback[, thisArg]) 参数 callb ...

- ubantu下重启apache

启动apache服务 sudo /etc/init.d/apache2 start重启apache服务sudo /etc/init.d/apache2 restart停止apache服务 sudo / ...