ipv4 ipv6 求字符串和整数一一映射的算法 AmazonOrderId

字符串和整数一一映射的算法

公司每人的英文名不同,现在给每个英文名一个不同的数字编号,怎么设计?

走ipv4/6 2/32 2/128就够了,把“网段”概念对应到“表或库”,ip有a_e5类,这概念都可以引过来 和 时间 年月日 店铺 sellid marketplaceid amazon 平台参数对应

w

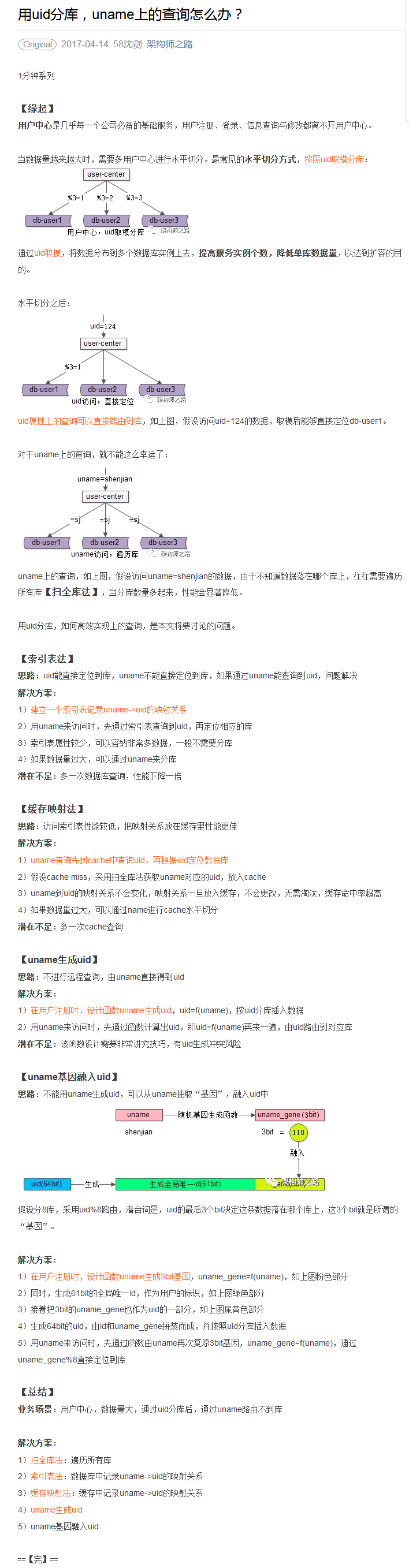

用uid分库,uname上的查询怎么办? http://mp.weixin.qq.com/s/_HB7Iq9chDLk2z_m_btq9w

将以下解决了uid-uname单表的分库,但是如果tab0-uname,tab1-uname,-----,tabn-uname?

具体场景:

ListOrders

返回您在指定时间段内所创建或更新的订单。

GetOrder

根据您指定的 AmazonOrderId 值返回订单。

ListOrderItems

根据您指定的 AmazonOrderId 返回订单商品。

0-AmazonOrderId 为非整数

1- sql WHERE INTAmazonOrderId=123

比 WHERE AmazonOrderId='asad434fsdfdsf-rewrs'

效率高

解决方法:

将AmazonOrderId整数化

0-不设计算法,由mysql的自增主键

1-设计算法,这样不必查数据库AmazonOrderId整数化后的值,即“将互异字符串转化为互异整数”的算法

“求字符串和整数一一映射的算法”

您应了解的“订单 API”部分的相关内容 http://docs.developer.amazonservices.com/zh_CN/orders/2013-09-01/Orders_Overview.html

2017年4月14日 18:41:40

Sharding Pattern

w碎片有相同的模型数据是子集、竖直切分是权宜之计、设计模式、碎片设计逻辑指挥应用去某个碎片读写数据

碎片策略-碎片承租者数据基于承租者ID-针对范围式查询的策略,如同地区用户通过时区碎片化-避免热点的HASH策略-

https://msdn.microsoft.com/en-us/library/dn589797.aspx

Divide a data store into a set of horizontal partitions or shards. This pattern can improve scalability when storing and accessing large volumes of data.

Context and Problem

A data store hosted by a single server may be subject to the following limitations:

- Storage space. A data store for a large-scale cloud application may be expected to contain a huge volume of data that could increase significantly over time. A server typically provides only a finite amount of disk storage, but it may be possible to replace existing disks with larger ones, or add further disks to a machine as data volumes grow. However, the system will eventually reach a hard limit whereby it is not possible to easily increase the storage capacity on a given server.

- Computing resources. A cloud application may be required to support a large number of concurrent users, each of which run queries that retrieve information from the data store. A single server hosting the data store may not be able to provide the necessary computing power to support this load, resulting in extended response times for users and frequent failures as applications attempting to store and retrieve data time out. It may be possible to add memory or upgrade processors, but the system will reach a limit when it is not possible to increase the compute resources any further.

- Network bandwidth. Ultimately, the performance of a data store running on a single server is governed by the rate at which the server can receive requests and send replies. It is possible that the volume of network traffic might exceed the capacity of the network used to connect to the server, resulting in failed requests.

- Geography. It may be necessary to store data generated by specific users in the same region as those users for legal, compliance, or performance reasons, or to reduce latency of data access. If the users are dispersed across different countries or regions, it may not be possible to store the entire data for the application in a single data store.

Scaling vertically by adding more disk capacity, processing power, memory, and network connections may postpone the effects of some of these limitations, but it is likely to be only a temporary solution. A commercial cloud application capable of supporting large numbers of users and high volumes of data must be able to scale almost indefinitely, so vertical scaling is not necessarily the best solution.

Solution

Divide the data store into horizontal partitions or shards. Each shard has the same schema, but holds its own distinct subset of the data. A shard is a data store in its own right (it can contain the data for many entities of different types), running on a server acting as a storage node.

This pattern offers the following benefits:

- You can scale the system out by adding further shards running on additional storage nodes.

- A system can use off the shelf commodity hardware rather than specialized (and expensive) computers for each storage node.

- You can reduce contention and improved performance by balancing the workload across shards.

- In the cloud, shards can be located physically close to the users that will access the data.

When dividing a data store up into shards, decide which data should be placed in each shard. A shard typically contains items that fall within a specified range determined by one or more attributes of the data. These attributes form the shard key (sometimes referred to as the partition key). The shard key should be static. It should not be based on data that might change.

Sharding physically organizes the data. When an application stores and retrieves data, the sharding logic directs the application to the appropriate shard. This sharding logic may be implemented as part of the data access code in the application, or it could be implemented by the data storage system if it transparently supports sharding.

Abstracting the physical location of the data in the sharding logic provides a high level of control over which shards contain which data, and enables data to migrate between shards without reworking the business logic of an application should the data in the shards need to be redistributed later (for example, if the shards become unbalanced). The tradeoff is the additional data access overhead required in determining the location of each data item as it is retrieved.

To ensure optimal performance and scalability, it is important to split the data in a way that is appropriate for the types of queries the application performs. In many cases, it is unlikely that the sharding scheme will exactly match the requirements of every query. For example, in a multi-tenant system an application may need to retrieve tenant data by using the tenant ID, but it may also need to look up this data based on some other attribute such as the tenant’s name or location. To handle these situations, implement a sharding strategy with a shard key that supports the most commonly performed queries.

If queries regularly retrieve data by using a combination of attribute values, it may be possible to define a composite shard key by concatenating attributes together. Alternatively, use a pattern such as Index Table to provide fast lookup to data based on attributes that are not covered by the shard key.

Sharding Strategies

Three strategies are commonly used when selecting the shard key and deciding how to distribute data across shards. Note that there does not have to be a one-to-one correspondence between shards and the servers that host them—a single server can host multiple shards. The strategies are:

The Lookup strategy. In this strategy the sharding logic implements a map that routes a request for data to the shard that contains that data by using the shard key. In a multi-tenant application all the data for a tenant might be stored together in a shard by using the tenant ID as the shard key. Multiple tenants might share the same shard, but the data for a single tenant will not be spread across multiple shards. Figure 1 shows an example of this strategy.

The mapping between the shard key and the physical storage may be based on physical shards where each shard key maps to a physical partition. Alternatively, a technique that provides more flexibility when rebalancing shards is to use a virtual partitioning approach where shard keys map to the same number of virtual shards, which in turn map to fewer physical partitions. In this approach, an application locates data by using a shard key that refers to a virtual shard, and the system transparently maps virtual shards to physical partitions. The mapping between a virtual shard and a physical partition can change without requiring the application code to be modified to use a different set of shard keys.

The Range strategy. This strategy groups related items together in the same shard, and orders them by shard key—the shard keys are sequential. It is useful for applications that frequently retrieve sets of items by using range queries (queries that return a set of data items for a shard key that falls within a given range). For example, if an application regularly needs to find all orders placed in a given month, this data can be retrieved more quickly if all orders for a month are stored in date and time order in the same shard. If each order was stored in a different shard, they would have to be fetched individually by performing a large number of point queries (queries that return a single data item). Figure 2 shows an example of this strategy.

In this example, the shard key is a composite key comprising the order month as the most significant element, followed by the order day and the time. The data for orders is naturally sorted when new orders are created and appended to a shard. Some data stores support two-part shard keys comprising a partition key element that identifies the shard and a row key that uniquely identifies an item within the shard. Data is usually held in row key order within the shard. Items that are subject to range queries and need to be grouped together can use a shard key that has the same value for the partition key but a unique value for the row key.

The Hash strategy. The purpose of this strategy is to reduce the chance of hotspots in the data. It aims to distribute the data across the shards in a way that achieves a balance between the size of each shard and the average load that each shard will encounter. The sharding logic computes the shard in which to store an item based on a hash of one or more attributes of the data. The chosen hashing function should distribute data evenly across the shards, possibly by introducing some random element into the computation. Figure 2 shows an example of this strategy.

- Figure 3 - Sharding tenant data based on a hash of tenant IDs

- To understand the advantage of the Hash strategy over other sharding strategies, consider how a multi-tenant application that enrolls new tenants sequentially might assign the tenants to shards in the data store. When using the Range strategy, the data for tenants 1 to n will all be stored in shard A, the data for tenants n+1 to m will all be stored in shard B, and so on. If the most recently registered tenants are also the most active, most data activity will occur in a small number of shards—which could cause hotspots. In contrast, the Hash strategy allocates tenants to shards based on a hash of their tenant ID. This means that sequential tenants are most likely to be allocated to different shards, as shown in Figure 3 for tenants 55 and 56, which will distribute the load across these shards.

The following table lists the main advantages and considerations for these three sharding strategies.

|

Strategy |

Advantages |

Considerations |

|---|---|---|

|

Lookup |

More control over the way that shards are configured and used. Using virtual shards reduces the impact when rebalancing data because new physical partitions can be added to even out the workload. The mapping between a virtual shard and the physical partitions that implement the shard can be modified without affecting application code that uses a shard key to store and retrieve data. |

Looking up shard locations can impose an additional overhead. |

|

Range |

Easy to implement and works well with range queries because they can often fetch multiple data items from a single shard in a single operation. Easier data management. For example, if users in the same region are in the same shard, updates can be scheduled in each time zone based on the local load and demand pattern. |

May not provide optimal balancing between shards. Rebalancing shards is difficult and may not resolve the problem of uneven load if the majority of activity is for adjacent shard keys. |

|

Hash |

Better chance of a more even data and load distribution. Request routing can be accomplished directly by using the hash function. There is no need to maintain a map. |

Computing the hash may impose an additional overhead. Rebalancing shards is difficult. |

Most common sharding schemes implement one of the approaches described above, but you should also consider the business requirements of your applications and their patterns of data usage. For example, in a multi-tenant application:

- You can shard data based on workload. You could segregate the data for highly volatile tenants in separate shards. The speed of data access for other tenants may be improved as a result.

- You can shard data based on the location of tenants. It may be possible to take the data for tenants in a specific geographic region offline for backup and maintenance during off-peak hours in that region, while the data for tenants in other regions remains online and accessible during their business hours.

- High-value tenants could be assigned their own private high-performing, lightly loaded shards, whereas lower-value tenants might be expected to share more densely-packed, busy shards.

- The data for tenants that require a high degree of data isolation and privacy could be stored on a completely separate server.

Scaling and Data Movement Operations

Each of the sharding strategies implies different capabilities and levels of complexity for managing scale in, scale out, data movement, and maintaining state.

The Lookup strategy permits scaling and data movement operations to be carried out at the user level, either online or offline. The technique is to suspend some or all user activity (perhaps during off-peak periods), move the data to the new virtual partition or physical shard, change the mappings, invalidate or refresh any caches that hold this data, and then allow user activity to resume. Often this type of operation can be centrally managed. The Lookup strategy requires state to be highly cacheable and replica friendly.

The Range strategy imposes some limitations on scaling and data movement operations, which must typically be carried out when a part or all of the data store is offline because the data must be split and merged across the shards. Moving the data to rebalance shards may not resolve the problem of uneven load if the majority of activity is for adjacent shard keys or data identifiers that are within the same range. The Range strategy may also require some state to be maintained in order to map ranges to the physical partitions.

The Hash strategy makes scaling and data movement operations more complex because the partition keys are hashes of the shard keys or data identifiers. The new location of each shard must be determined from the hash function, or the function modified to provide the correct mappings. However, the Hash strategy does not require maintenance of state.

ipv4 ipv6 求字符串和整数一一映射的算法 AmazonOrderId的更多相关文章

- poj2406(求字符串的周期,kmp算法next数组的应用)

题目链接:https://vjudge.net/problem/POJ-2406 题意:求出给定字符串的周期,和poj1961类似. 思路:直接利用next数组的定义即可,当没有周期时,周期即为1. ...

- hdu 4333"Revolving Digits"(KMP求字符串最小循环节+拓展KMP)

传送门 题意: 此题意很好理解,便不在此赘述: 题解: 解题思路:KMP求字符串最小循环节+拓展KMP ①首先,根据KMP求字符串最小循环节的算法求出字符串s的最小循环节的长度,记为 k: ②根据拓展 ...

- java标签(label)求16进制字符串的整数和 把一个整数转为4个16进制字符表示

p.p1 { margin: 0.0px 0.0px 0.0px 0.0px; font: 18.0px Menlo; color: #4f76cb } p.p2 { margin: 0.0px 0. ...

- c++实现atoi()和itoa()函数(字符串和整数转化)

(0) c++类型所占的字节和表示范围 c 语言里 类型转换那些事儿(补码 反码) 应届生面试准备之道 最值得学习阅读的10个C语言开源项目代码 一:起因 (1)字符串类型转化为整数型(Integer ...

- 自己实现字符串转整数(不使用JDK的字符串转整数的方法)

[需求]: (1)如果输入的字符串为null,为空,或者不是数字,抛出异常: (2)如果输入的数字超出int的最大值或最小值,抛出异常: (3)输入的数字允许以+或-号开头,但如果输入的字符串只有&q ...

- ipv4的ip字符串转化为int型

要求: 将现有一个ipv4的ip字符串(仅包含数字,点,空格), 其中数字和点之间的空格(至多一个)是合法的,比如“12 .3. 4 .62”,其他情况均为非法地址.写一个函数将ipv4地址字符串转化 ...

- IP协议/地址(IPv4&IPv6)概要

IP协议/地址(IPv4&IPv6)概要 IP协议 什么是IP协议 IP是Internet Protocol(网际互连协议)的缩写,是TCP/IP体系中的网络层协议. [1] 协议的特征 无连 ...

- [LeetCode] String to Integer (atoi) 字符串转为整数

Implement atoi to convert a string to an integer. Hint: Carefully consider all possible input cases. ...

- 55. 2种方法求字符串的组合[string combination]

[本文链接] http://www.cnblogs.com/hellogiser/p/string-combination.html [题目] 题目:输入一个字符串,输出该字符串中字符的所有组合.举个 ...

随机推荐

- 《设计模式之美》 <01>为什么需要学习掌握设计模式?

1. 应对面试中的设计模式相关问 题学习设计模式和算法一样,最功利.最直接的目的,可能就是应对面试了.不管你是前端工程师.后端工程师,还是全栈工程师,在求职面试中,设计模式问题是被问得频率比较高的一类 ...

- XML基础综合案例【三】

实现简单的学生管理系统 使用xml当做数据,存储学生信息 ** 创建一个xml文件,写一些学生信息 ** 增加操作1.创建解析器2.得到document 3.获取到根节点4.在根节点上面创建stu标签 ...

- deep_learning_Function_tf.equal(tf.argmax(y, 1),tf.argmax(y_, 1))用法

[Tensorflow] tf.equal(tf.argmax(y, 1),tf.argmax(y_, 1))用法 作用:输出正确的预测结果利用tf.argmax()按行求出真实值y_.预测值y最大值 ...

- python文件操作:文件处理与操作模式

一,文件处理的模式基本概念 #coding:utf-8 # 一: 文件处理的三个步骤 # 1. 打开文件拿到文件对象(文件对象====>操作系统打开文件====>硬盘) # f=open( ...

- WebClient HttpWebRequest 下载文件到本地

处理方式: 第一种: 我们需要获取文件,但是我们不需要保存源文件的名称 public void DownFile(string uRLAddress, string localPath, str ...

- 正则表达式 Regular expression为学习助跑

这是一个Regular expression的铁路图(至少我现在是这么称呼的)的图形化界面帮助检验和理解我们所写的Regular expression是否正确. 1.官网 :https://regex ...

- D - Beautiful Graph CodeForces - 1093D (二分图染色+方案数)

D - Beautiful Graph CodeForces - 1093D You are given an undirected unweighted graph consisting of nn ...

- 二叉树的相关定义及BST的实现

一.树的一些概念 树,子树,节点,叶子(终端节点),分支节点(分终端节点): 节点的度表示该节点拥有的子树个数,树的度是树内各节点度的最大值: 子节点(孩子),父节点(双亲),兄弟节点,祖先,子孙,堂 ...

- (一)数据库系统概述和ER图

1.数据库系统 数据库系统有数据库.数据库管理系统.应用系统和数据库管理员组成.数据库呢就是数据的集合,应用系统和管理员就不说了,数据库管理系统即常说的DBMS,比如我们用的mysql,oracle, ...

- TETP服务和PXE功能

PXE PXE:Preboot Excution Environment, Intel公司研发,没有任何操作系统的主机,能够基于网络完成系统的安装工作.