[Original][openstack-pike][compute node][issue-1] openstack-nova-compute.service holdoff time over, scheduling restart.

While installing a Pike compute node, nova-compute failed to start. The output was as follows (the key lines were highlighted in red in the original post):

[root@compute1 nova]# systemctl start openstack-nova-compute.service

Job for openstack-nova-compute.service failed because the control process exited with error code.

See "systemctl status openstack-nova-compute.service" and "journalctl -xe" for details.

[root@compute1 nova]# systemctl status openstack-nova-compute.service

● openstack-nova-compute.service - OpenStack Nova Compute Server

Loaded: loaded (/usr/lib/systemd/system/openstack-nova-compute.service; enabled; vendor preset: disabled)

Active: activating (start) since Tue -- :: CST; 2s ago

Main PID: (nova-compute)

Tasks:

CGroup: /system.slice/openstack-nova-compute.service

└─ /usr/bin/python2 /usr/bin/nova-compute

Sep :: compute1 systemd[]: openstack-nova-compute.service holdoff time over, scheduling restart.

Sep :: compute1 systemd[]: Starting OpenStack Nova Compute Server...

[root@compute1 nova]# date

Tue Sep :: CST

[root@compute1 nova]# journalctl -xe

Sep :: compute1 avahi-daemon[]: Withdrawing workstation service for lo.

Sep :: compute1 avahi-daemon[]: Withdrawing workstation service for virbr0-nic.

Sep :: compute1 avahi-daemon[]: Withdrawing address record for 192.168.122.1 on virbr0.

Sep :: compute1 avahi-daemon[]: Withdrawing workstation service for virbr0.

Sep :: compute1 avahi-daemon[]: Withdrawing address record for fe80::fc81:46e3:49a7:d1da on ens35.

Sep :: compute1 avahi-daemon[]: Withdrawing address record for fe80::8fb9:::d538 on ens35.

Sep :: compute1 avahi-daemon[]: Withdrawing address record for 192.168.70.73 on ens35.

Sep :: compute1 avahi-daemon[]: Withdrawing workstation service for ens35.

Sep :: compute1 avahi-daemon[]: Withdrawing address record for fe80::fe24:180a:91a7:8bdf on ens34.

Sep :: compute1 avahi-daemon[]: Host name conflict, retrying with compute1-

Sep :: compute1 avahi-daemon[]: Registering new address record for 192.168.122.1 on virbr0.IPv4.

Sep :: compute1 avahi-daemon[]: Registering new address record for fe80::fc81:46e3:49a7:d1da on ens35.*.

Sep :: compute1 avahi-daemon[]: Registering new address record for fe80::8fb9:::d538 on ens35.*.

Sep :: compute1 avahi-daemon[]: Registering new address record for 192.168.70.73 on ens35.IPv4.

Sep :: compute1 avahi-daemon[]: Registering new address record for fe80::fe24:180a:91a7:8bdf on ens34.*.

Sep :: compute1 avahi-daemon[]: Registering new address record for fe80:::9aae::6a4a on ens34.*.

Sep :: compute1 avahi-daemon[]: Registering new address record for 10.50.70.73 on ens34.IPv4.

Sep :: compute1 avahi-daemon[]: Registering new address record for 192.168.184.135 on ens33.IPv4.

Sep :: compute1 avahi-daemon[]: Registering HINFO record with values 'X86_64'/'LINUX'.

Sep :: compute1 systemd[]: openstack-nova-compute.service: main process exited, code=exited, status=/FAILURE

Sep :: compute1 systemd[]: Failed to start OpenStack Nova Compute Server.

-- Subject: Unit openstack-nova-compute.service has failed

-- Defined-By: systemd

-- Support: http://lists.freedesktop.org/mailman/listinfo/systemd-devel

--

-- Unit openstack-nova-compute.service has failed.

--

-- The result is failed.

Sep :: compute1 systemd[]: Unit openstack-nova-compute.service entered failed state.

Sep :: compute1 systemd[]: openstack-nova-compute.service failed.

Sep :: compute1 systemd[]: openstack-nova-compute.service holdoff time over, scheduling restart.

Sep :: compute1 systemd[]: Starting OpenStack Nova Compute Server...

[root@compute1 nova]# vim /var/log/nova/nova-compute.log

... ERROR nova AccessRefused: (, ): () ACCESS_REFUSED - Login was refused using authentication mechanism AMQPLAIN.

For details see the broker logfile.

From the log above, the problem is most likely in the message queue: nova-compute cannot log in to the broker.
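The systemd and journalctl output only shows that the service keeps restarting. The actual cause is in nova-compute.log. As a minimal sketch, a log can be filtered for refused AMQP logins like this. The function name `find_auth_errors`, the pattern list, and the second sample line are made up for illustration.

```python
import re

# Markers that suggest a refused RabbitMQ login.
# This pattern is an assumption for illustration, not an exhaustive list.
AUTH_ERROR = re.compile(r"ACCESS_REFUSED|AccessRefused")


def find_auth_errors(log_text):
    """Return the log lines that mention a refused AMQP login."""
    return [line for line in log_text.splitlines() if AUTH_ERROR.search(line)]


if __name__ == "__main__":
    # First line is taken from the log in this post; the second is invented.
    sample = (
        "... ERROR nova AccessRefused: (, ): () ACCESS_REFUSED - Login was "
        "refused using authentication mechanism AMQPLAIN.\n"
        "... INFO nova an unrelated line\n"
    )
    for line in find_auth_errors(sample):
        print(line)
```

In practice the text would come from reading /var/log/nova/nova-compute.log on the compute node.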

Logging in to RabbitMQ requires a username and password, so those are the next thing to check.

Check the users and passwords on the RabbitMQ server [on controller node]:

[root@controller ~]# rabbitmq-plugins enable rabbitmq_management  # enable the RabbitMQ web management UI

[root@controller ~]# lsof | grep rabbit  # find the port of the RabbitMQ web service (15672)

async_63 1089 2034 rabbitmq 49u IPv4 32616 0t0 TCP *: (LISTEN)

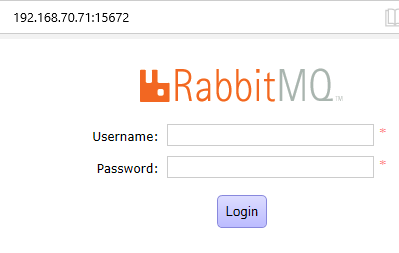

Open controller:15672 in a browser, as shown below:

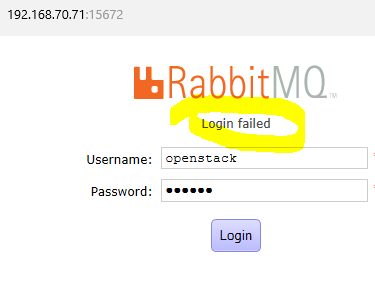

Next, look up the corresponding username and password in nova.conf on the compute node:

[DEFAULT]

...

enabled_apis = osapi_compute,metadata

transport_url = rabbit://openstack:rabbit@controller

The username is openstack.

The password is rabbit.

Logging in with these credentials fails.
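The credentials are embedded in `transport_url` in the form `rabbit://user:password@host`. A minimal sketch of pulling them apart with the Python standard library follows. The helper name `rabbit_credentials` is hypothetical, and the sketch assumes a single-host URL like the one in this post. It does not handle comma-separated host lists.

```python
from urllib.parse import urlsplit, unquote


def rabbit_credentials(transport_url):
    """Split a single-host rabbit:// URL into (user, password, host)."""
    parts = urlsplit(transport_url)
    if parts.scheme != "rabbit":
        raise ValueError("not a rabbit:// URL: %r" % transport_url)
    # Credentials may be percent-encoded inside a URL, so decode them.
    user = unquote(parts.username or "")
    password = unquote(parts.password or "")
    return user, password, parts.hostname


if __name__ == "__main__":
    # The value is the transport_url from the nova.conf shown in this post.
    print(rabbit_credentials("rabbit://openstack:rabbit@controller"))
```

The extracted user and password are what nova-compute presents to the broker, so they must match an existing RabbitMQ user.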

Listing the users on the RabbitMQ server shows that there is no openstack user:

[root@controller ~]# rabbitmqctl list_users

Listing users ...

admin [administrator]

guest [administrator]
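The check made by eye here can also be scripted: compare the user named in `transport_url` with the names that `rabbitmqctl list_users` prints. This is only a sketch. The parser name `parse_list_users` is hypothetical, and it assumes the output format shown in this post (one `name [tags]` entry per line after the banner).

```python
def parse_list_users(output):
    """Map user name -> list of tags from `rabbitmqctl list_users` text."""
    users = {}
    for line in output.splitlines():
        line = line.strip()
        # Skip blank lines, the banner, and anything without a tag list.
        if not line or line.startswith("Listing users") or "[" not in line:
            continue
        name, _, tags = line.partition("[")
        users[name.strip()] = [
            tag.strip() for tag in tags.rstrip("]").split(",") if tag.strip()
        ]
    return users


if __name__ == "__main__":
    # Output copied from the controller node in this post.
    listing = "Listing users ...\nadmin [administrator]\nguest [administrator]\n"
    users = parse_list_users(listing)
    print("openstack" in users)
```

When the user is missing, the official OpenStack installation guide creates it with `rabbitmqctl add_user openstack RABBIT_PASS` and `rabbitmqctl set_permissions openstack ".*" ".*" ".*"`. This post takes a different route and reuses the existing admin account.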

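[Key point] Use the admin user instead. I had forgotten its password, and the plaintext password cannot be viewed directly, so I reset the admin user's password on the RabbitMQ server [controller node]: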

[root@controller nova]# rabbitmqctl change_password admin admin

Log in again with the new username and password:

Username: admin

Password: admin

Finally, update nova.conf [on compute node or controller node]:

transport_url = rabbit://admin:admin@controller

Start nova-compute.service:

[root@compute1 nova]# systemctl start openstack-nova-compute.service

[root@compute1 nova]# systemctl status openstack-nova-compute.service

● openstack-nova-compute.service - OpenStack Nova Compute Server

Loaded: loaded (/usr/lib/systemd/system/openstack-nova-compute.service; enabled; vendor preset: disabled)

Active: active (running) since Tue -- :: CST; 49s ago

Main PID: (nova-compute)

Tasks:

CGroup: /system.slice/openstack-nova-compute.service

└─ /usr/bin/python2 /usr/bin/nova-compute

Sep :: compute1 systemd[]: Starting OpenStack Nova Compute Server...

Sep :: compute1 systemd[]: Started OpenStack Nova Compute Server.

Problem solved.
