吴裕雄--天生自然 PYTHON data analysis: historical daily prices and volumes of all US stocks and ETFs

```python
# This Python 3 environment comes with many helpful analytics libraries installed.
# It is defined by the kaggle/python docker image: https://github.com/kaggle/docker-python
import os
import random

import matplotlib.pyplot as plt
import numpy as np  # linear algebra
import pandas as pd  # data processing, CSV file I/O (e.g. pd.read_csv)
import plotly.graph_objs
import plotly.offline
from sklearn.metrics import mean_absolute_error

# Import the relevant Keras modules
from keras.models import Sequential
from keras.layers import Activation, Dense, Dropout, LSTM

# The input data files live in the Stocks directory of the price-volume dataset
os.chdir('F:\\kaggleDataSet\\price-volume\\Stocks')
```

```python
# Read data
# Kaggle kernels let us navigate through the zip file as if it were a directory.
# Trying to read a file of size zero throws an error, so skip those files:
# filenames = [x for x in os.listdir() if x.endswith('.txt') and os.path.getsize(x) > 0]
# filenames = random.sample(filenames, 1)
filenames = ['prk.us.txt', 'bgr.us.txt', 'jci.us.txt', 'aa.us.txt', 'fr.us.txt',
             'star.us.txt', 'sons.us.txt', 'ipl_d.us.txt', 'sna.us.txt', 'utg.us.txt']
filenames = [filenames[1]]  # analyse a single ticker
print(filenames)

data = []
for filename in filenames:
    df = pd.read_csv(filename, sep=',')
    label, _, _ = filename.split(sep='.')
    df['Label'] = label  # ticker symbol, e.g. 'bgr'
    df['Date'] = pd.to_datetime(df['Date'])
    data.append(df)
```
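The commented-out lines pick a random non-empty `.txt` file from the Stocks directory. As a reusable helper, the same selection logic might look like the sketch below; the function name `pick_stock_files` and its `seed` parameter are hypothetical, and the usage example runs on a temporary directory with made-up file names rather than the real dataset.

```python
import os
import random
import tempfile


def pick_stock_files(directory, k=1, seed=None):
    """Return k randomly chosen non-empty .txt files from directory (hypothetical helper).

    Zero-byte files are skipped because pd.read_csv raises EmptyDataError on them.
    """
    candidates = sorted(
        name for name in os.listdir(directory)
        if name.endswith('.txt')
        and os.path.getsize(os.path.join(directory, name)) > 0
    )
    return random.Random(seed).sample(candidates, k)


# Usage on a throwaway directory with made-up file names
with tempfile.TemporaryDirectory() as tmp:
    for name, content in [('aaa.us.txt', 'Date,Close\n'), ('bbb.us.txt', ''),
                          ('ccc.us.txt', 'Date,Close\n'), ('notes.csv', 'x\n')]:
        with open(os.path.join(tmp, name), 'w') as f:
            f.write(content)
    picked = pick_stock_files(tmp, k=2, seed=0)

print(picked)
```

Sorting the candidates before sampling keeps the choice reproducible for a given seed, regardless of the order in which `os.listdir` returns names.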

```python
# Plot the closing price of every loaded ticker
traces = []
for df in data:
    df = df.sort_values('Date')
    label = df['Label'].iloc[0]
    trace = plotly.graph_objs.Scattergl(x=df['Date'], y=df['Close'], name=label)
    traces.append(trace)

layout = plotly.graph_objs.Layout(title='Plot')
fig = plotly.graph_objs.Figure(data=traces, layout=layout)
plotly.offline.init_notebook_mode(connected=True)
plotly.offline.iplot(fig, filename='dataplot')
```

```python
df = data[0]
window_len = 10

# Create a data point (i.e. a date) which splits the training and test sets:
# assuming the rows are sorted by date, the last 2 * window_len + 1 rows form the test set
split_date = df['Date'].iloc[-(2 * window_len + 1)]

# Split the training and test sets, keeping only the numeric feature columns
training_set, test_set = df[df['Date'] < split_date], df[df['Date'] >= split_date]
training_set = training_set.drop(['Date', 'Label', 'OpenInt'], axis=1)
test_set = test_set.drop(['Date', 'Label', 'OpenInt'], axis=1)
```
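To see what this split produces, here is a small check on a synthetic frame. The data is hypothetical (50 consecutive business days with made-up prices, not values from the dataset); it only illustrates that with `window_len = 10` the test set holds the last 21 rows, which yields 11 test windows.

```python
import numpy as np
import pandas as pd

# Hypothetical data: 50 consecutive business days with made-up prices
window_len = 10
frame = pd.DataFrame({
    'Date': pd.bdate_range('2017-01-02', periods=50),
    'Close': np.linspace(10.0, 15.0, 50),
})

# Same rule as above: the (2 * window_len + 1)-th row from the end is the split point
split_date = frame['Date'].iloc[-(2 * window_len + 1)]
train = frame[frame['Date'] < split_date]
test = frame[frame['Date'] >= split_date]

n_test_windows = len(test) - window_len  # number of windows built from the test set
print(len(train), len(test), n_test_windows)  # 29 21 11
```

So the evaluation below is based on only 11 test points, which is worth keeping in mind when reading the MAE values.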

```python
def make_windows(frame, window_len):
    """Split frame into overlapping windows of window_len rows.

    Every column in a window is normalised to its first value (x / x0 - 1); the
    target is the Close price window_len rows ahead, normalised the same way.
    """
    inputs = []
    for i in range(len(frame) - window_len):
        temp_set = frame.iloc[i:i + window_len].copy()
        for col in temp_set.columns:
            temp_set[col] = temp_set[col] / temp_set[col].iloc[0] - 1
        inputs.append(temp_set.values)
    close = frame['Close'].values
    outputs = close[window_len:] / close[:-window_len] - 1
    return np.array(inputs), outputs


# Create windows for training and testing
LSTM_training_inputs, LSTM_training_outputs = make_windows(training_set, window_len)
LSTM_test_inputs, LSTM_test_outputs = make_windows(test_set, window_len)
```
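The normalisation is easiest to follow on a toy series. The sketch below uses a hypothetical Close-only frame with `window_len = 3` (made-up numbers, not dataset values) and repeats the same arithmetic as the window loop: each input row becomes x / x0 - 1 relative to the first row of its window, and the target is the close `window_len` rows later divided by the window's first close, minus one.

```python
import numpy as np
import pandas as pd

# Hypothetical toy data: a single Close column and a short window
window_len = 3
frame = pd.DataFrame({'Close': [10.0, 11.0, 12.0, 15.0, 9.0]})

inputs = []
for i in range(len(frame) - window_len):
    window = frame.iloc[i:i + window_len].copy()
    for col in window.columns:
        # normalise each column to its first value in the window
        window[col] = window[col] / window[col].iloc[0] - 1
    inputs.append(window.values)
inputs = np.array(inputs)  # shape: (samples, timesteps, features)

close = frame['Close'].values
targets = close[window_len:] / close[:-window_len] - 1

print(inputs.shape)  # (2, 3, 1)
print(targets)       # 15/10 - 1 = 0.5 and 9/11 - 1, roughly -0.18
```

The first window [10, 11, 12] becomes [0, 0.1, 0.2], and its target is 15 / 10 - 1 = 0.5, so every input and output is a relative change rather than a raw price.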

```python
def build_model(inputs, output_size, neurons, activ_func="linear",
                dropout=0.10, loss="mae", optimizer="adam"):
    model = Sequential()
    # input_shape = (timesteps, features) = (window_len, number of feature columns)
    model.add(LSTM(neurons, input_shape=(inputs.shape[1], inputs.shape[2])))
    model.add(Dropout(dropout))
    model.add(Dense(units=output_size))
    model.add(Activation(activ_func))
    model.compile(loss=loss, optimizer=optimizer)
    return model


# Initialise the model architecture
nn_model = build_model(LSTM_training_inputs, output_size=1, neurons=32)

# The model output is the next closing price normalised to the closing price
# window_len (10) steps earlier.
# Train the model; nn_history contains the training error per epoch.
nn_history = nn_model.fit(LSTM_training_inputs, LSTM_training_outputs,
                          epochs=5, batch_size=1, verbose=2, shuffle=True)
```

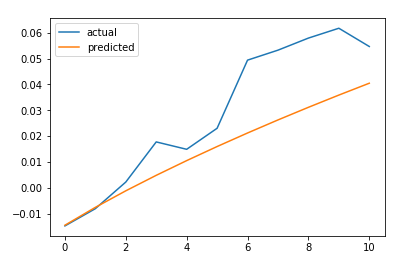

```python
# One-step-ahead predictions on the test windows
test_predictions = nn_model.predict(LSTM_test_inputs)

plt.plot(LSTM_test_outputs, label="actual")
plt.plot(test_predictions, label="predicted")
plt.legend()
plt.show()

MAE = mean_absolute_error(LSTM_test_outputs, test_predictions)
print('The Mean Absolute Error is: {}'.format(MAE))
```

```python
# Source: https://github.com/llSourcell/How-to-Predict-Stock-Prices-Easily-Demo/blob/master/lstm.py
def predict_sequence_full(model, data, window_size):
    # Shift the window by one new prediction each time and re-run the prediction on the new window
    curr_frame = data[0]
    predicted = []
    for _ in range(len(data)):
        predicted.append(model.predict(curr_frame[np.newaxis, :, :], verbose=0)[0, 0])
        curr_frame = curr_frame[1:]
        curr_frame = np.insert(curr_frame, [window_size - 1], predicted[-1], axis=0)
    return predicted


predictions = predict_sequence_full(nn_model, LSTM_test_inputs, window_len)
plt.plot(LSTM_test_outputs, label="actual")
plt.plot(predictions, label="predicted")
plt.legend()
plt.show()

MAE = mean_absolute_error(LSTM_test_outputs, predictions)
print('The Mean Absolute Error is: {}'.format(MAE))
```
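`predict_sequence_full` feeds each prediction back into the window instead of the true next values, so errors compound. Note that only `data[0]` is ever read; the remaining windows only set the number of steps. The sketch below isolates the frame update with a hypothetical stand-in model (`MeanModel`, not a Keras class) whose `predict` returns the mean of the window's last row, and a copy of the loop (`rolling_predict`, also hypothetical) that additionally returns the final window. Because `np.insert` receives a scalar, the new row repeats the predicted value in every feature column.

```python
import numpy as np


class MeanModel:
    """Hypothetical stand-in for the trained Keras model (not a Keras class)."""

    def predict(self, batch):
        # batch has shape (1, timesteps, features); return shape (1, 1) like the LSTM
        return np.array([[batch[0, -1, :].mean()]])


def rolling_predict(model, data, window_size):
    """Same loop as predict_sequence_full, but also returns the final window."""
    curr_frame = data[0]  # only the first test window is ever read
    predicted = []
    for _ in range(len(data)):
        predicted.append(model.predict(curr_frame[np.newaxis, :, :])[0, 0])
        curr_frame = curr_frame[1:]  # drop the oldest timestep
        # append the prediction as the newest timestep, repeated in every feature column
        curr_frame = np.insert(curr_frame, [window_size - 1], predicted[-1], axis=0)
    return predicted, curr_frame


# Hypothetical input: 3 windows of 4 timesteps x 2 features
data = np.arange(24, dtype=float).reshape(3, 4, 2)
preds, last_frame = rolling_predict(MeanModel(), data, window_size=4)
print(preds)       # every step predicts 6.5: the mean of [6, 7], then of the fed-back rows
print(last_frame)  # [[6, 7], [6.5, 6.5], [6.5, 6.5], [6.5, 6.5]]
```

After `window_size` steps the window would consist entirely of the model's own outputs, which is why the multi-step error grows much faster than the one-step error.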

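Conclusion

The LSTM does not solve the time-series forecasting problem. Its one-step-ahead prediction is not much better than a simple lag model. When we increase the number of predicted time steps, performance does not degrade as quickly as with other, more traditional methods. In this case, however, the error still grew by a factor of about 4.5 (the full-sequence MAE compared with the one-step MAE), and it grows super-linearly with the number of time steps we try to predict.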
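The comparison with a lag model can be made concrete in the same normalised space. Assuming "lag model" means predicting that the next close equals the last observed close, the forecast for each window is simply its last normalised Close value, close[i + w - 1] / close[i] - 1. The helper names `lag_baseline` and `mae` are hypothetical, and the column index of Close is an assumption: with the feature order Open, High, Low, Close, Volume left after dropping Date, Label and OpenInt it would be 3. The toy arrays below are made up.

```python
import numpy as np


def lag_baseline(windows, close_col):
    """Persistence forecast: the last normalised Close of each window.

    windows has shape (samples, timesteps, features) and is normalised per window
    (x / x0 - 1), so the last Close entry equals close[i + w - 1] / close[i] - 1.
    """
    return windows[:, -1, close_col]


def mae(y_true, y_pred):
    """Mean absolute error, computed directly with NumPy."""
    return float(np.mean(np.abs(np.asarray(y_true) - np.asarray(y_pred))))


# Hypothetical toy data: 2 windows, 3 timesteps, a single (Close) feature
windows = np.array([[[0.0], [0.1], [0.2]],
                    [[0.0], [0.05], [0.3]]])
targets = np.array([0.25, 0.3])

baseline = lag_baseline(windows, close_col=0)
print(mae(targets, baseline))  # approximately 0.025: mean of |0.25 - 0.2| and |0.3 - 0.3|
```

On the real data, `mean_absolute_error(LSTM_test_outputs, lag_baseline(LSTM_test_inputs, 3))` could then be compared with the LSTM's one-step MAE, provided the Close column really sits at index 3.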
