Paper Reading - Sequence to Sequence Learning with Neural Networks ( NIPS 2014 )

Link of the Paper: https://arxiv.org/pdf/1409.3215.pdf

Main Points:

- Encoder-Decoder Model: Input sequence -> A vector of a fixed dimensionality -> Target sequence.

- A multilayered LSTM: The LSTM did not have difficulty on long sentences. Deep LSTMs significantly outperformed shallow LSTMs.

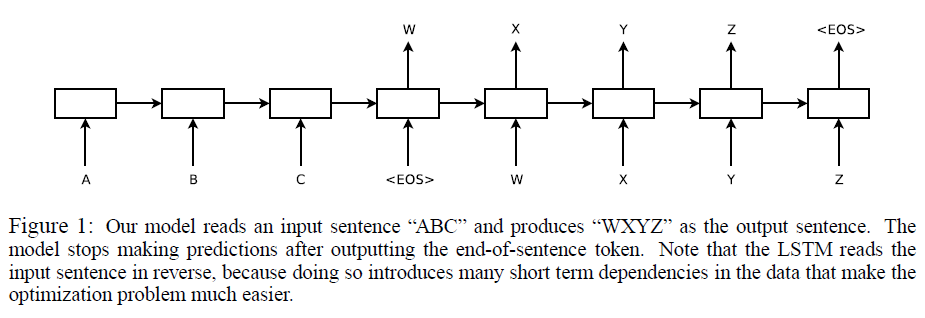

- Reverse Input: Better performance. While the authors do not have a complete explanation to this phenomenon, they believe that it is caused by the introduction of many short term dependencies to the dataset. LSTMs trained on reversed source sentences did much better on long sentences than LSTMs trained on the raw source sentences, which suggests that reversing the input sentences results in LSTMs with better memory utilization.

Other Key Points:

- A significant limitation: Despite their flexibility and power, DNNs can only be applied to problems whose inputs and targets can be sensibly encoded with vectors of fixed dimensionality.

Paper Reading - Sequence to Sequence Learning with Neural Networks ( NIPS 2014 )的更多相关文章

- Paper Reading - Deep Captioning with Multimodal Recurrent Neural Networks ( m-RNN ) ( ICLR 2015 ) ★

Link of the Paper: https://arxiv.org/pdf/1412.6632.pdf Main Points: The authors propose a multimodal ...

- 【论文笔记】Learning Convolutional Neural Networks for Graphs

Learning Convolutional Neural Networks for Graphs 2018-01-17 21:41:57 [Introduction] 这篇 paper 是发表在 ...

- PP: Sequence to sequence learning with neural networks

From google institution; 1. Before this, DNN cannot be used to map sequences to sequences. In this p ...

- 《MATLAB Deep Learning:With Machine Learning,Neural Networks and Artificial Intelligence》选记

一.Training of a Single-Layer Neural Network 1 Delta Rule Consider a single-layer neural network, as ...

- [C1W4] Neural Networks and Deep Learning - Deep Neural Networks

第四周:深层神经网络(Deep Neural Networks) 深层神经网络(Deep L-layer neural network) 目前为止我们学习了只有一个单独隐藏层的神经网络的正向传播和反向 ...

- [C1W3] Neural Networks and Deep Learning - Shallow neural networks

第三周:浅层神经网络(Shallow neural networks) 神经网络概述(Neural Network Overview) 本周你将学习如何实现一个神经网络.在我们深入学习具体技术之前,我 ...

- 目标检测--Scalable Object Detection using Deep Neural Networks(CVPR 2014)

Scalable Object Detection using Deep Neural Networks 作者: Dumitru Erhan, Christian Szegedy, Alexander ...

- Sequence to Sequence Learning with Neural Networks论文阅读

论文下载 作者(三位Google大佬)一开始提出DNN的缺点,DNN不能用于将序列映射到序列.此论文以机器翻译为例,核心模型是长短期记忆神经网络(LSTM),首先通过一个多层的LSTM将输入的语言序列 ...

- Paper Reading——LEMNA:Explaining Deep Learning based Security Applications

Motivation: The lack of transparency of the deep learning models creates key barriers to establishi ...

随机推荐

- Caused by: java.lang.ClassNotFoundException: org.springframework.boot.bind.RelaxedPropertyResolver

Caused by: java.lang.ClassNotFoundException: org.springframework.boot.bind.RelaxedPropertyResolver 这 ...

- Oracle 表空间、段、区和块简述

数据块(Block) 数据块Block是Oracle存储数据信息的最小单位.注意,这里说的是Oracle环境下的最小单位.Oracle也就是通过数据块来屏蔽不同操作系统存储结构的差异.无论是Windo ...

- Java 遍历方法总结

package com.zlh; import java.util.ArrayList; import java.util.HashMap; import java.util.Iterator; im ...

- [译]C语言实现一个简易的Hash table(3)

上一章,我们讲了hash表的数据结构,并简单实现了hash表的初始化与删除操作,这一章我们会讲解Hash函数和实现算法,并手动实现一个Hash函数. Hash函数 本教程中我们实现的Hash函数将会实 ...

- PHP中的递增/递减运算符

看这段代码 <?php $a=10; $b=++$a; //此语句等同于 ; $a=$a+1 ; $b=$a echo $a."<br>"; echo $b; ? ...

- linux-2.6.22.6内核启动分析之head.S引导段代码

学习目标: 了解arch/arm/kernel/head.S作为内核启动的第一个文件所实现的功能! 前面通过对内核Makefile的分析,可以知道arch/arm/kernel/head.S是内核启动 ...

- 单片机中不带字库LCD液晶屏显示少量汉字

单片机中不带字库LCD液晶屏如何显示少量汉字,一般显示汉字的方法有1.使用带字库的LCD屏,2.通过SD 卡或者外挂spi flash存中文字库,3.直接将需要的汉字取模存入mcu的flash中. 第 ...

- pycharm社区版新建django文件不友好操作

一.cmd操作 1.django-admin startproject (新建project名称) 2.在pycharm打开project,运行终端输入:python manage.py starta ...

- cgywin下 hadoop运行 问题

1 cgywin下安装hadoop需要配置JAVA_home变量 , 此时使用 window下安装的jdk就可以 ,但是安装路径不要带有空格.否则会不识别. 2 在Window下启动Hadoop ...

- 20155337祁家伟 2016-2017-2 《Java程序设计》第2周学习总结

20155337 2016-2017-2 <Java程序设计>第2周学习总结 教材学习内容总结 这周我学习了从JDK到IDE的学习内容,简单来说分为以下几个部分 使用命令行和IDE两种方式 ...