2、k8s 基础环境安装

3 k8s 环境配置

3.1 基础环境准备

所有机器执行

#各个机器设置自己的域名 我的设置为 hostnamectl set-hostname ks8-master、hostnamectl set-hostname ks8-node1、hostnamectl set-hostname ks8-node2

hostnamectl set-hostname xxxx

# 将 SELinux 设置为 permissive 模式(相当于将其禁用)

sudo setenforce 0

sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

#关闭swap

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab

#允许 iptables 检查桥接流量

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

br_netfilter

EOF

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sudo sysctl --system

3.2 安装kubelet、kubeadm、kubectl

所有机器执行

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

exclude=kubelet kubeadm kubectl

EOF

sudo yum install -y kubelet-1.20.9 kubeadm-1.20.9 kubectl-1.20.9 --disableexcludes=kubernetes

sudo systemctl enable --now kubelet

kubelet 现在每隔几秒就会重启,因为它陷入了一个等待 kubeadm 指令的死循环

4 使用kubeadm引导集群

4.1 下载各个机器需要的镜像

所有机器执行

sudo tee ./images.sh <<-'EOF'

#!/bin/bash

images=(

kube-apiserver:v1.20.9

kube-proxy:v1.20.9

kube-controller-manager:v1.20.9

kube-scheduler:v1.20.9

coredns:1.7.0

etcd:3.4.13-0

pause:3.2

)

for imageName in ${images[@]} ; do

docker pull registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/$imageName

done

EOF

chmod +x ./images.sh && ./images.sh

4.2 所有机器添加master域名映射,192.168.x.x需要改为自己的master机器ip

所有机器都执行

echo "192.168.x.x cluster-endpoint" >> /etc/hosts

4.3 初始化主节点

只有master才执行,192.168.x.x 修改为自己的master机器ip

#主节点初始化

kubeadm init \

--apiserver-advertise-address=192.168.x.x \

--control-plane-endpoint=cluster-endpoint \

--image-repository registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images \

--kubernetes-version v1.20.9 \

--service-cidr=10.96.0.0/16 \

--pod-network-cidr=192.168.0.0/16

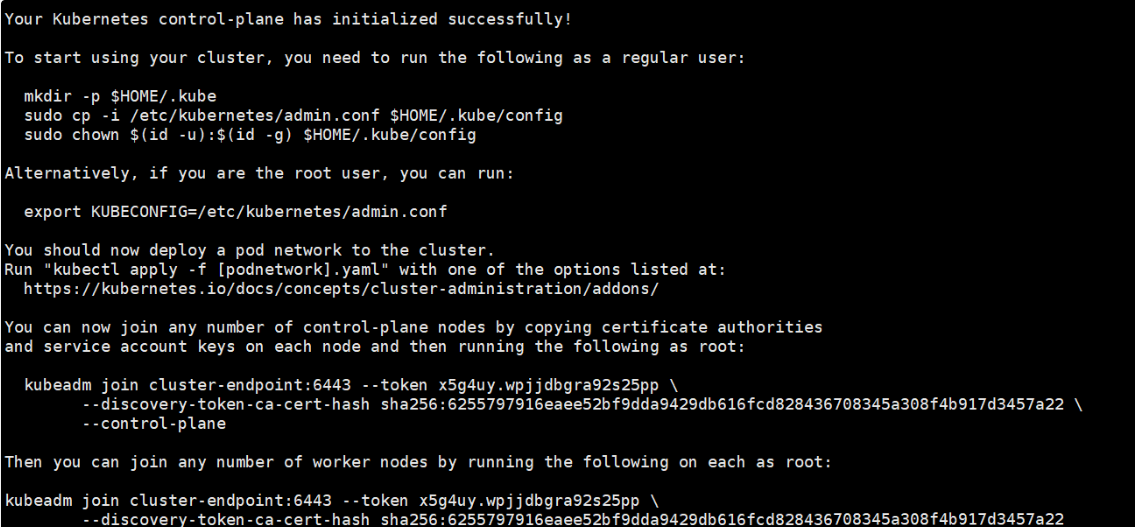

执行后,等待一段时间会输出信息,将输出的信息先保存,以下是用例,实际使用自己的

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join cluster-endpoint:6443 --token hums8f.vyx71prsg74ofce7 \

--discovery-token-ca-cert-hash sha256:a394d059dd51d68bb007a532a037d0a477131480ae95f75840c461e85e2c6ae3 \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join cluster-endpoint:6443 --token hums8f.vyx71prsg74ofce7 \

--discovery-token-ca-cert-hash sha256:a394d059dd51d68bb007a532a037d0a477131480ae95f75840c461e85e2c6ae3

4.4 当前可以使用的命令

#查看集群所有节点

kubectl get nodes

#查看集群部署了哪些应用?

kubectl get pods -A

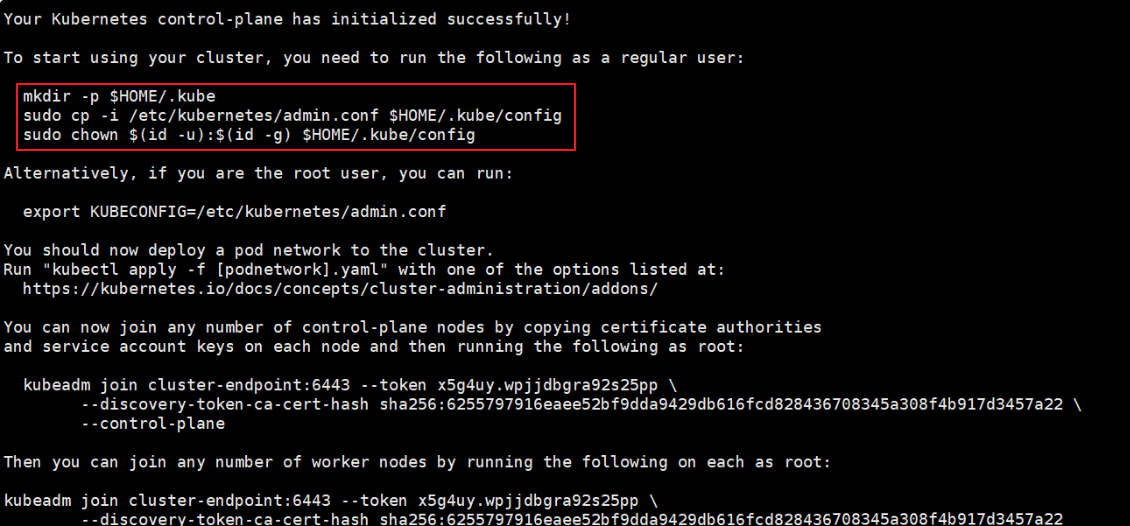

4.5 根据提示新建文件夹

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

4.6 安装网络组件

curl https://docs.projectcalico.org/archive/v3.21/manifests/calico.yaml -O

kubectl apply -f calico.yaml

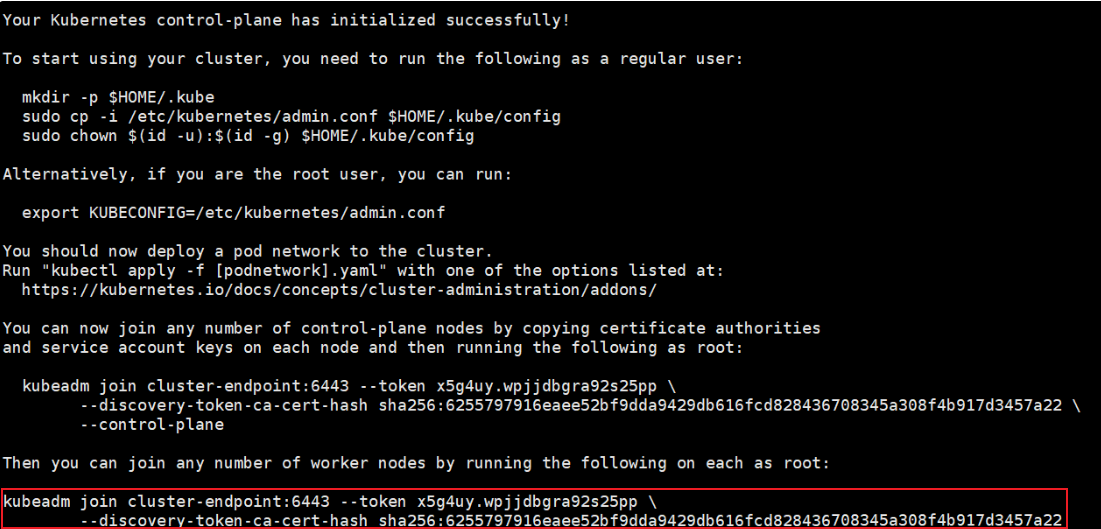

4.7 加入node节点

其他两台node服务器,执行红方框处的命令,记得使用自己的

kubeadm join cluster-endpoint:6443 --token x5g4uy.wpjjdbgra92s25pp \

--discovery-token-ca-cert-hash sha256:6255797916eaee52bf9dda9429db616fcd828436708345a308f4b917d3457a22

因为令牌有有效期,一般24h有效,过期后,如果想新加node,可以使用以下命令

kubeadm token create --print-join-command

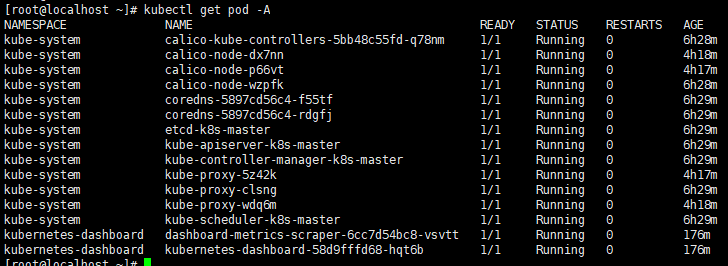

4.8 查看pod状态

kubectl get pod -A

当所有的服务ready好后,进行下一步操作,如果网络慢的问题,需要等待时间较久,耐心等待

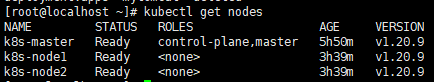

5 验证集群状态

kubectl get nodes

6 部署dashboard

全部在master上操作

6.1 部署

6.1.1 下载recommended.yaml文件

curl -O https://raw.githubusercontent.com/kubernetes/dashboard/v2.3.1/aio/deploy/recommended.yaml

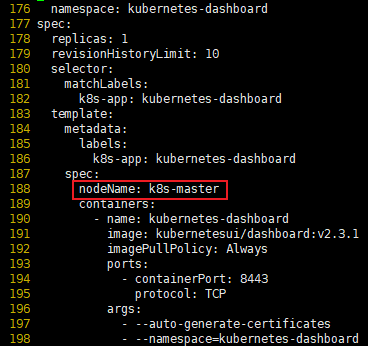

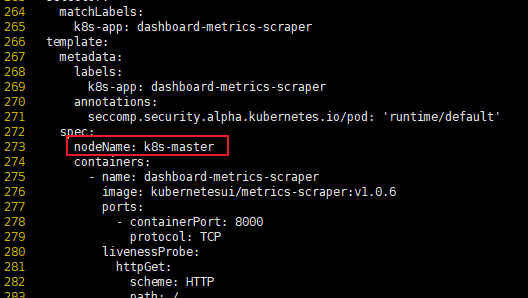

6.1.2 修改recommended.yaml文件

vi recommended.yaml

修改的地方和内容

nodeName: k8s-master

以下是修改好的,可以直接使用

# Copyright 2017 The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

apiVersion: v1

kind: Namespace

metadata:

name: kubernetes-dashboard

---

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

ports:

- port: 443

targetPort: 8443

selector:

k8s-app: kubernetes-dashboard

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-certs

namespace: kubernetes-dashboard

type: Opaque

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-csrf

namespace: kubernetes-dashboard

type: Opaque

data:

csrf: ""

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-key-holder

namespace: kubernetes-dashboard

type: Opaque

---

kind: ConfigMap

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-settings

namespace: kubernetes-dashboard

---

kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

rules:

# Allow Dashboard to get, update and delete Dashboard exclusive secrets.

- apiGroups: [""]

resources: ["secrets"]

resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]

verbs: ["get", "update", "delete"]

# Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.

- apiGroups: [""]

resources: ["configmaps"]

resourceNames: ["kubernetes-dashboard-settings"]

verbs: ["get", "update"]

# Allow Dashboard to get metrics.

- apiGroups: [""]

resources: ["services"]

resourceNames: ["heapster", "dashboard-metrics-scraper"]

verbs: ["proxy"]

- apiGroups: [""]

resources: ["services/proxy"]

resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]

verbs: ["get"]

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

rules:

# Allow Metrics Scraper to get metrics from the Metrics server

- apiGroups: ["metrics.k8s.io"]

resources: ["pods", "nodes"]

verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: kubernetes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: kubernetes-dashboard

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: kubernetes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

k8s-app: kubernetes-dashboard

template:

metadata:

labels:

k8s-app: kubernetes-dashboard

spec:

nodeName: k8s-master

containers:

- name: kubernetes-dashboard

image: kubernetesui/dashboard:v2.3.1

imagePullPolicy: Always

ports:

- containerPort: 8443

protocol: TCP

args:

- --auto-generate-certificates

- --namespace=kubernetes-dashboard

# Uncomment the following line to manually specify Kubernetes API server Host

# If not specified, Dashboard will attempt to auto discover the API server and connect

# to it. Uncomment only if the default does not work.

# - --apiserver-host=http://my-address:port

volumeMounts:

- name: kubernetes-dashboard-certs

mountPath: /certs

# Create on-disk volume to store exec logs

- mountPath: /tmp

name: tmp-volume

livenessProbe:

httpGet:

scheme: HTTPS

path: /

port: 8443

initialDelaySeconds: 30

timeoutSeconds: 30

securityContext:

allowPrivilegeEscalation: false

readOnlyRootFilesystem: true

runAsUser: 1001

runAsGroup: 2001

volumes:

- name: kubernetes-dashboard-certs

secret:

secretName: kubernetes-dashboard-certs

- name: tmp-volume

emptyDir: {}

serviceAccountName: kubernetes-dashboard

nodeSelector:

"kubernetes.io/os": linux

# Comment the following tolerations if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: dashboard-metrics-scraper

name: dashboard-metrics-scraper

namespace: kubernetes-dashboard

spec:

ports:

- port: 8000

targetPort: 8000

selector:

k8s-app: dashboard-metrics-scraper

---

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: dashboard-metrics-scraper

name: dashboard-metrics-scraper

namespace: kubernetes-dashboard

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

k8s-app: dashboard-metrics-scraper

template:

metadata:

labels:

k8s-app: dashboard-metrics-scraper

annotations:

seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'

spec:

nodeName: k8s-master

containers:

- name: dashboard-metrics-scraper

image: kubernetesui/metrics-scraper:v1.0.6

ports:

- containerPort: 8000

protocol: TCP

livenessProbe:

httpGet:

scheme: HTTP

path: /

port: 8000

initialDelaySeconds: 30

timeoutSeconds: 30

volumeMounts:

- mountPath: /tmp

name: tmp-volume

securityContext:

allowPrivilegeEscalation: false

readOnlyRootFilesystem: true

runAsUser: 1001

runAsGroup: 2001

serviceAccountName: kubernetes-dashboard

nodeSelector:

"kubernetes.io/os": linux

# Comment the following tolerations if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

volumes:

- name: tmp-volume

emptyDir: {}

6.1.3 执行部署

kubectl apply -f recommended.yaml

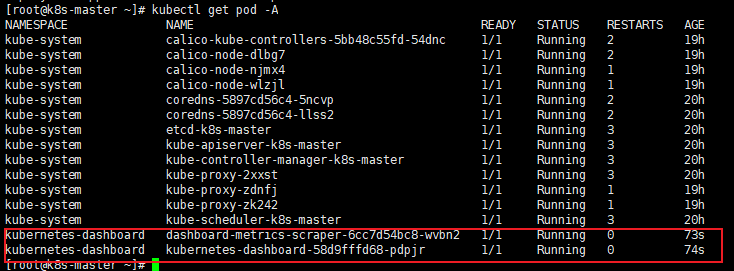

如果使用 kubectl get pod -A 查看多了两个服务,状态是ready状态,进行后续操作

6.2 设置访问端口

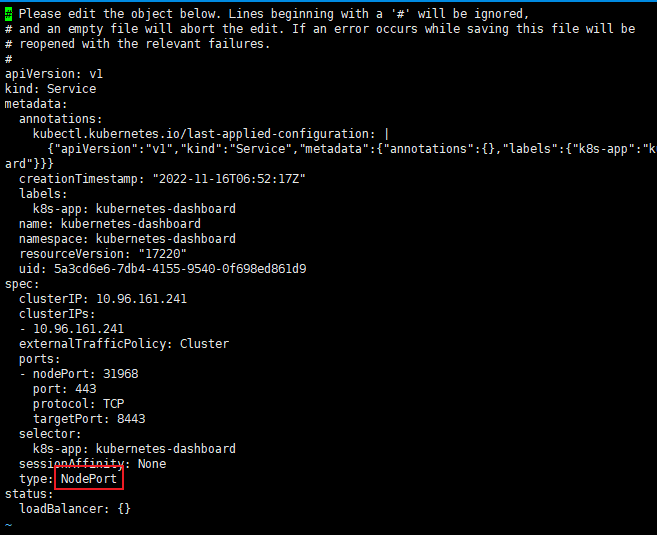

## 6.2.1 设置

kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard

type: ClusterIP 改为 type: NodePort

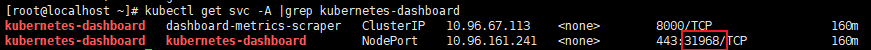

6.2.2 查看端口

kubectl get svc -A |grep kubernetes-dashboard

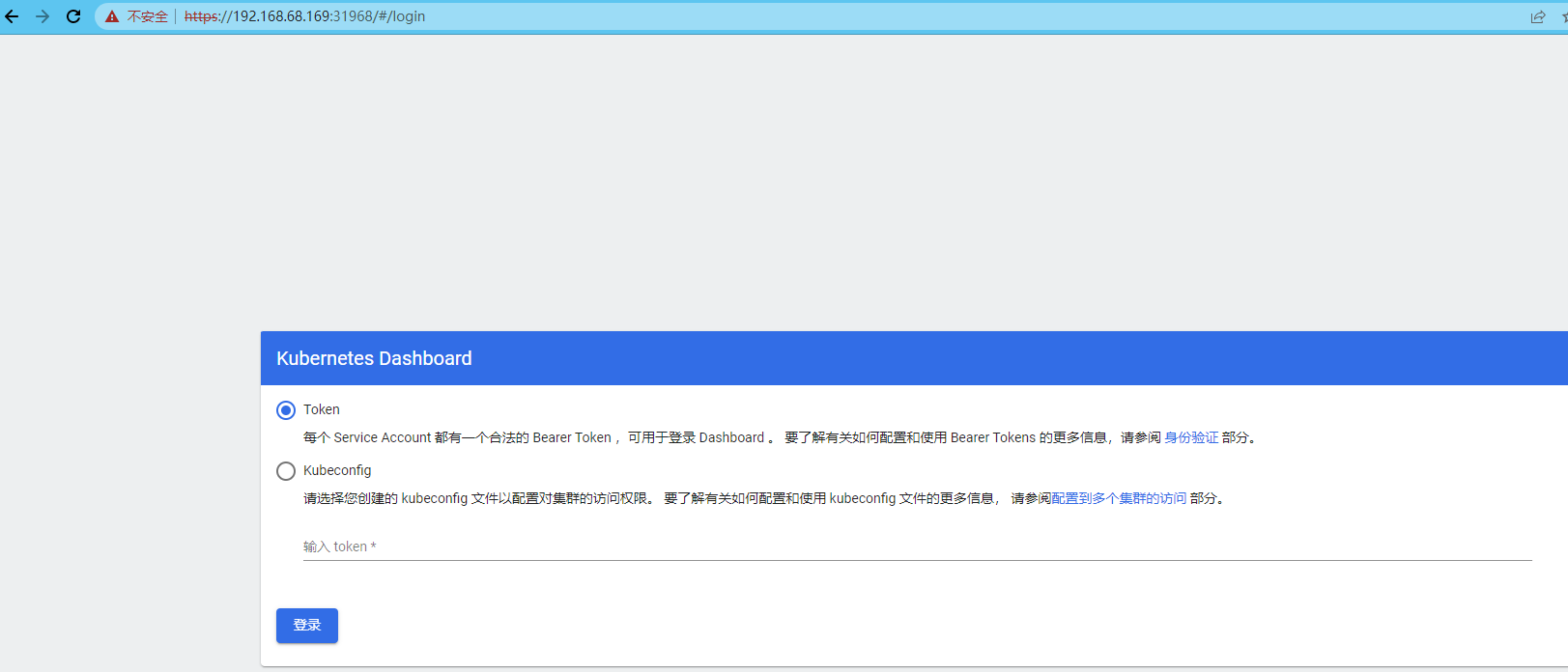

6.2.3 访问

https://集群任意IP:端口

注意:

如果出现如下提示,请先在当前页面,用鼠标点击空白处,然后用键盘输入 thisisunsafe 即可,一定要有完整的thisisunsafe,输错了重新输入即可

输入后即可访问

6.3 创建访问账号

6.3.1 创建访问账号,准备一个yaml文件; vi dash.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: admin-user

namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: admin-user

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-admin

subjects:

- kind: ServiceAccount

name: admin-user

namespace: kubernetes-dashboard

6.3.2 执行创建命令

kubectl apply -f dash.yaml

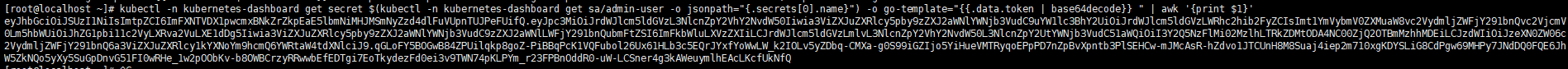

6.4 获取令牌

kubectl -n kubernetes-dashboard get secret $(kubectl -n kubernetes-dashboard get sa/admin-user -o jsonpath="{.secrets[0].name}") -o go-template="{{.data.token | base64decode}} " | awk '{print $1}'

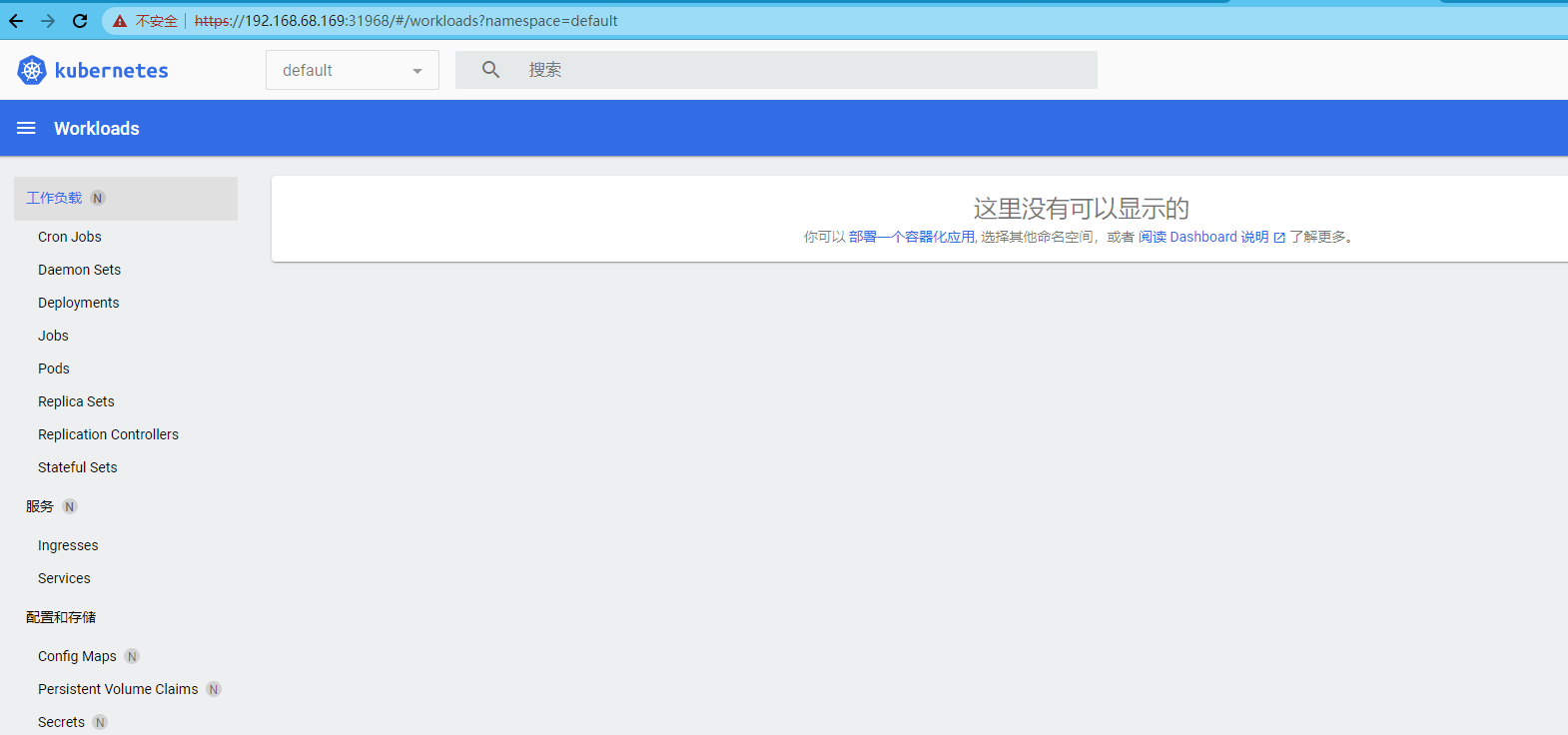

6.5 界面

填入token,即可登录成功

2、k8s 基础环境安装的更多相关文章

- k8s基础环境配置:基于CentOS7.9

k8s基础环境配置:基于CentOS7.9 wmware15安装centos7.9:https://www.cnblogs.com/uncleyong/p/15261742.html 1.配置静态ip ...

- 【SpringCloud之pigx框架学习之路 】1.基础环境安装

[SpringCloud之pigx框架学习之路 ]1.基础环境安装 [SpringCloud之pigx框架学习之路 ]2.部署环境 1.Cmder.exe安装 (1) windows常用命令行工具 下 ...

- ELK-6.5.3学习笔记–elk基础环境安装

本文预计阅读时间 13 分钟 文章目录[隐藏] 1,准备工作. 2,安装elasticsearch. 3,安装logstash. 4,安装kibana 以往都是纸上谈兵,毕竟事情也都由部门其他小伙伴承 ...

- CentOS 8.2 对k8s基础环境配置

一.基础环境配置 1 IP 修改 机器克隆后 IP 修改,使Xshell连接上 [root@localhost ~]# vi /etc/sysconfig/network-scripts/ifcfg- ...

- Docker容器学习梳理 - 基础环境安装

以下是centos系统安装docker的操作记录 1)第一种方法:采用系统自带的docker安装,但是这一般都不是最新版的docker安装epel源[root@docker-server ~]# wg ...

- k8s基础环境搭建

环境准备 服务器之间时间同步 1. 关闭防火墙 systemctl stop firewalld setenforce 0 2. 设置yum源 三台机器都要设置一个master两个node节点 下 ...

- Linux下部署docker记录(0)-基础环境安装

以下是centos系统安装docker的操作记录 1)第一种方法:采用系统自带的docker安装,但是这一般都不是最新版的docker安装epel源[root@docker-server ~]# wg ...

- Linux系统最小化安装之后的系统基础环境安装以及内核优化脚本

#!/bin/bash #添加epel和rpmforge的外部yum扩展源 cd /usr/local/src wget http://mirrors.ustc.edu.cn/fedora/epel/ ...

- ansible playbook实践(一)-基础环境安装

1 介绍 Ansible 是一个系统自动化工具,用来做系统配管理,批量对远程主机执行操作指令. 2 实验环境 ip 角色 192.168.40.71 ansible管控端 192.168.40.72 ...

- 离线安装Cloudera Manager 5和CDH5(最新版5.9.3) 完全教程(二)基础环境安装

一.安装CentOS 6.5 x64 具体安装过程自行百度 1.1 修改IP地址 [root@master ~]# vi /etc/sysconfig/network DEVICE=eth0 TYPE ...

随机推荐

- 结构型模式 - 代理模式Proxy

学习而来,代码是自己敲的.也有些自己的理解在里边,有问题希望大家指出. 代理模式的定义与特点 代理模式的定义:由于某些原因需要给某对象提供一个代理以控制对该对象的访问.这时,访问对象不 ...

- 数据采集之刷cnblog评论

python代码如下: import random import time import requests cookies = { '__gads': 'ID=3c504aa17c4a7048:T=1 ...

- Mybatis Plus整合PageHelper分页的实现示例

1.依赖引入 <dependency> <groupId>com.github.pagehelper</groupId> <artifactId>pag ...

- java反序列化基础

前言:最近开始学习java的序列化与反序列化,现在从原生的序列化与反序列化开始,小小的记录一下 参考文章:https://blog.csdn.net/mocas_wang/article/detail ...

- JavaScript 、三个点、 ...、点点点 是什么语法

笔者在学习ts函数式的时候见到这样的写法,这个语法是es6的扩展运算符,可以在函数调用/数组构造时, 将数组表达式或者string在语法层面展开:还可以在构造字面量对象时, 将对象表达式按key-va ...

- zookeeper06-watcher四字命令

zookeeper四字监控命令 zooKeeper支持某些特定的四字命令与其的交互.它们大多是查询命令,用来获取 zooKeeper服务的当前状态及相关信息.用户在客户端可以通过 telnet 或 ...

- C# File、FileInfo、Directory、DirectoryInfo

本文主要介绍文件类.文件信息类.目录类.目录信息类的常用属性和方法 1.File(文件类) // 1.判断文件是否存在 bool isFileExist = File.Exists(@"D: ...

- go 神奇的错误 time.Now().Format("2006-01-02 13:04:05") 比北京时间大8小时

困倦的时候写了个个获取本地时间,打印总比当前时间大8小时,找了很久原因 package main import ( "fmt" "time" ) func ma ...

- PPMM

代码 #include<cstdio> using namespace std; const int N = 1000000 , INF = 2e9; int n , m , x , he ...

- Centos7部署rsync配合inotify进行系统文件实时备份

转载csdn: Centos7部署rsync配合inotify进行系统文件实时备份_xixixilalalahaha的博客-CSDN博客