Deep learning: Part 44 (The Quick-start example in Pylearn2)

Preface:

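I've heard that Pylearn2 is a library well suited to deep learning. It is built on top of Theano and supports GPU computation (which I'll probably only get to play with once I'm working; right now I don't have the hardware). It was created by the group of DL heavyweight Bengio, and it already integrates a number of common DL algorithms. Since I'd really like to read the source code and details of these algorithms, I decided to learn how to use Pylearn2. There is very little Chinese material on this online, so although this post doesn't have much real substance, I'm posting it anyway in case it helps some beginners.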

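The paper from Bengio's group, "Pylearn2: a machine learning research library", shows that Pylearn2 is designed mainly for machine learning developers, so users need some background in machine learning. With Pylearn2 you can flexibly design your own machine learning models and algorithms, and it is fairly extensible (though how exactly to do that, I don't know yet). According to the feature list on the Pylearn2 website, pylearn2 provides modules for common datasets, models and training algorithms:

- The dataset modules include MNIST, CIFAR10, CIFAR100, STL10, NORB and others.
- The DL model modules include the RBM family, the autoencoder family, LCC, maxout and others.
- The training-algorithm modules are mainly the SGD family.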

A brief introduction to installing Pylearn2:

OK, on to the main topic. The first step is installing the library. I'm running 64-bit Ubuntu 13.10.

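1. Before anything else you need to install Theano, a Python library for symbolic computation. It is similar to NumPy but more powerful for multidimensional array processing. For installation instructions, see Installing Theano (Bleeding-edge install instruction). That page links to Ubuntu instructions; just follow the steps one by one, and google any problems you run into along the way. Note that after a successful install you need to upgrade Theano to the development (bleeding-edge) version, because Pylearn2 uses new features of the development version. The upgrade procedure is described in the "Bleeding-edge install instructions" section of that page.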

2. For installing Pylearn2 itself, see the blog post "Pylearn2 installation and testing" (lucktroy's CSDN blog). There are 3 main steps:

a. Open a terminal in the directory where you want to install Pylearn2 and run:

git clone https://github.com/lisa-lab/pylearn2.git

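b. Set the environment variable for Pylearn2's data directory. Data for standard experiments goes into this directory.

1. In the terminal, run vim ~/.bashrc.
2. At the end of the file, add the line export PYLEARN2_DATA_PATH=YourPath/data, then save and exit. Here YourPath is the full path of the directory where you want to keep the data.
3. Run source ~/.bashrc in the terminal so the change takes effect in the current shell.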
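Before running anything, it can help to confirm that the variable is actually visible to Python. This matters because the appendix below describes a case where it was not. The check is only a sketch: check_data_path is a hypothetical helper, not part of pylearn2. It just reads PYLEARN2_DATA_PATH through os.environ and tests whether the directory exists.

```python
import os


def check_data_path(var="PYLEARN2_DATA_PATH", environ=None):
    """Hypothetical helper: report whether the data-path variable is usable.

    Returns (ok, message). `environ` defaults to os.environ, but a plain dict
    can be passed instead (useful for testing).
    """
    env = os.environ if environ is None else environ
    path = env.get(var)
    if not path:
        return False, "%s is not set in this process's environment" % var
    if not os.path.isdir(path):
        return False, "%s=%s does not point to an existing directory" % (var, path)
    return True, "%s=%s" % (var, path)


if __name__ == "__main__":
    ok, msg = check_data_path()
    print(msg)
```

Run it from the same shell where you will run make_dataset.py, after source ~/.bashrc. A command started with sudo may not see the variable even when this check passes.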

c. Change into the pylearn2 directory (created by the git clone above) and run: python setup.py build.

Running the Quick-start example:

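After installing Pylearn2 I wanted to run a sample right away. I chose the GRBM example; see the Quick-start example tutorial on the official website. The example has 3 main steps. If you run into problems along the way, check the appendix of this post to see whether it helps.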

Step 1: create the dataset.

In the YourPath/pylearn2/scripts/tutorials/grbm_smd/ directory, run: python make_dataset.py

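The source of make_dataset.py shows that it uses the CIFAR10 image dataset (http://www.cs.toronto.edu/~kriz/cifar.html). CIFAR10 consists of 32*32 color images, with 50,000 training samples and 10,000 test samples. The GRBM is trained on 150,000 patches of size 8*8, and the data is also preprocessed, for example with ZCA whitening. Finally, the preprocessed result is saved as the pickle file cifar10_preprocessed_train.pkl. (pickle is Python's serialization module: it writes data to disk in .pkl format so it can be reloaded the next time it is needed.)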
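To make the numbers concrete: an 8*8 patch of an RGB image holds 8*8*3 = 192 values, which is exactly the nvis : 192 in the yaml file below. The sketch below is not make_dataset.py. It skips ZCA whitening and uses plain nested lists instead of pylearn2's dataset classes. Under those simplifying assumptions, it only illustrates random patch extraction from 32*32*3 images and a pickle round trip. The names extract_random_patch and make_toy_image, and the file name demo_patches.pkl, are made up for illustration.

```python
import os
import pickle
import random
import tempfile

IMG_SIZE, PATCH_SIZE, CHANNELS = 32, 8, 3


def extract_random_patch(image, rng, patch_size=PATCH_SIZE):
    """Cut a random patch_size x patch_size window out of an H x W x C image
    (nested lists) and flatten it row by row, channels last, into one vector."""
    h, w = len(image), len(image[0])
    top = rng.randrange(h - patch_size + 1)
    left = rng.randrange(w - patch_size + 1)
    return [value
            for row in image[top:top + patch_size]
            for pixel in row[left:left + patch_size]
            for value in pixel]


def make_toy_image(rng):
    """Random 32x32 RGB image with values in [0, 1), standing in for CIFAR10."""
    return [[[rng.random() for _ in range(CHANNELS)] for _ in range(IMG_SIZE)]
            for _ in range(IMG_SIZE)]


rng = random.Random(0)
images = [make_toy_image(rng) for _ in range(4)]
# make_dataset.py draws 150,000 patches; 10 are enough to show the shapes
patches = [extract_random_patch(rng.choice(images), rng) for _ in range(10)]
print(len(patches[0]))  # prints 192 (= 8 * 8 * 3)

# Save and reload with pickle, as make_dataset.py does for its .pkl file
path = os.path.join(tempfile.mkdtemp(), "demo_patches.pkl")
with open(path, "wb") as f:
    pickle.dump(patches, f)
with open(path, "rb") as f:
    reloaded = pickle.load(f)
print(reloaded == patches)  # prints True
```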

Step 2: train the GRBM model parameters.

The command, still run in the same directory, is: python ../../train.py cifar_grbm_smd.yaml

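Here cifar_grbm_smd.yaml is the configuration file for this experiment. It sets the parameters of three modules: the data, the model and the algorithm. The yaml file is how we interact with pylearn2. If you are experimenting with common deep learning models and common optimization algorithms, configuring this .yaml file is all you need to do, which saves a lot of work. Below is the code of cifar_grbm_smd.yaml with its comments. For a short introduction to the yaml syntax, see YAML for Pylearn2. If you want to learn about GRBMs, the fellow blogger's post "Deep Learning: Principles and Implementation (Part 4)" is very well written.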

# pylearn2 tutorial example: cifar_grbm_smd.yaml by Ian Goodfellow
#
# Read the README file before reading this file
#
# This is an example of yaml file, which is the main way that an experimenter
# interacts with pylearn2.
#
# A yaml file is very similar to a python dictionary, with a bit of extra
# syntax.

# The !obj tag allows us to create a specific class of object. The text after
# the : indicates what class should be loaded. This is followed by a pair of
# braces containing the arguments to that class's __init__ method.
#
# Here, we allocate a Train object, which represents the main loop of the
# training script. The train script will run this loop repeatedly. Each time
# through the loop, the model is trained on data from a training dataset, then
# saved to file.

!obj:pylearn2.train.Train {
    # The !pkl tag is used to create an object from a pkl file. Here we retrieve
    # the dataset made by make_dataset.py and use it as our training dataset.
    dataset: !pkl: "cifar10_preprocessed_train.pkl",

    # Next we make the model to be trained. It is a Binary Gaussian RBM
    model: !obj:pylearn2.models.rbm.GaussianBinaryRBM {

        # The RBM needs 192 visible units (its inputs are 8x8 patches with 3
        # color channels)
        nvis : 192,

        # We'll use 400 hidden units for this RBM. That's a small number but we
        # want this example script to train quickly.
        nhid : 400,

        # The elements of the weight matrices of the RBM will be drawn
        # independently from U(-0.05, 0.05)
        irange : 0.05,

        # There are many ways to parameterize a GRBM. Here we use a
        # parameterization that makes the correspondence to denoising
        # autoencoders more clear.
        energy_function_class : !obj:pylearn2.energy_functions.rbm_energy.grbm_type_1 {},

        # Some learning algorithms are capable of estimating the standard
        # deviation of the visible units of a GRBM successfully, others are not
        # and just fix the standard deviation to 1. We're going to show off
        # and learn the standard deviation.
        learn_sigma : True,

        # Learning works better if we provide a smart initialization for the
        # parameters. Here we start sigma at .4 , which is about the same
        # standard deviation as the training data. We start the biases on the
        # hidden units at -2, which will make them have fairly sparse
        # activations.
        init_sigma : .4,
        init_bias_hid : -2.,

        # Some GRBM training algorithms can't handle the visible units being
        # noisy and just use their mean for all computations. We will show off
        # and not use that hack here.
        mean_vis : False,

        # One hack we will make is we will scale back the gradient steps on the
        # sigma parameter. This way we don't need to worry about sigma getting
        # too small prematurely (if it gets too small too fast the learning
        # signal gets weak).
        sigma_lr_scale : 1e-3
    },

    # Next we need to specify the training algorithm that will be used to train
    # the model. Here we use stochastic gradient descent.
    algorithm: !obj:pylearn2.training_algorithms.sgd.SGD {
        # The learning rate determines how big of steps the learning algorithm
        # takes. Here we use fairly big steps initially because we have a
        # learning rate adjustment scheme that will scale them down if
        # necessary.
        learning_rate : 1e-1,

        # Each gradient step will be based on this many examples
        batch_size : 5,

        # We'll monitor our progress by looking at the first 20 batches of the
        # training dataset. This is an estimate of the training error. To be
        # really exhaustive, we could use the entire training set instead,
        # or to avoid overfitting, we could use held out data instead.
        monitoring_batches : 20,
        monitoring_dataset : !pkl: "cifar10_preprocessed_train.pkl",

        # Here we specify the objective function that stochastic gradient
        # descent should minimize. In this case we use denoising score
        # matching, which makes this RBM behave as a denoising autoencoder.
        # See
        # Pascal Vincent. "A Connection Between Score Matching and Denoising
        # Autoencoders." Neural Computation, 2011
        # for details.
        cost : !obj:pylearn2.costs.ebm_estimation.SMD {
            # Denoising score matching uses a corruption process to transform
            # the raw data. Here we use additive gaussian noise.
            corruptor : !obj:pylearn2.corruption.GaussianCorruptor {
                stdev : 0.4
            },
        },

        # We'll use the monitoring dataset to figure out when to stop training.
        #
        # In this case, we stop if there is less than a 1% decrease in the
        # training error in the last epoch. You'll notice that the learned
        # features are a bit noisy. If you'd like nice smooth features you can
        # make this criterion stricter so that the model will train for longer.
        # (setting N to 10 should make the weights prettier, but will make it
        # run a lot longer)
        termination_criterion : !obj:pylearn2.termination_criteria.MonitorBased {
            prop_decrease : 0.01,
            N : 1,
        },

        # Let's throw a learning rate adjuster into the training algorithm.
        # To do this we'll use an "extension," which is basically an event
        # handler that can be registered with the Train object.
        # This particular one is triggered on each epoch.
        # It will shrink the learning rate if the objective goes up and increase
        # the learning rate if the objective decreases too slowly. This makes
        # our learning rate hyperparameter less important to get right.
        # This is not a very mathematically principled approach, but it works
        # well in practice.
    },

    extensions : [!obj:pylearn2.training_algorithms.sgd.MonitorBasedLRAdjuster {}],

    # Finally, request that the model be saved after each epoch
    save_freq : 1
}
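The two epoch-level rules configured at the end of this file, the MonitorBased termination criterion and the MonitorBasedLRAdjuster extension, can be mimicked in a few lines of plain Python. This is a reading of the comments above, not pylearn2's source. Under that assumption:

- MonitorBasedSketch keeps training until N consecutive epochs fail to improve the best objective by more than the fraction prop_decrease.
- adjust_lr shrinks the learning rate when the objective goes up and grows it when the objective falls by less than about 1%.

The class and function names, the shrink/grow factors (0.99, 1.01) and the trigger ratios are assumptions chosen for illustration.

```python
import math


class MonitorBasedSketch:
    """Keep training until N epochs in a row fail to beat the best objective
    by more than a fraction prop_decrease."""

    def __init__(self, prop_decrease=0.01, N=1):
        self.prop_decrease = prop_decrease
        self.N = N
        self.countdown = N
        self.best_value = math.inf

    def continue_learning(self, objective_history):
        latest = objective_history[-1]
        if latest < (1.0 - self.prop_decrease) * self.best_value:
            self.countdown = self.N   # enough improvement: reset the patience
        else:
            self.countdown -= 1       # too little progress this epoch
        self.best_value = min(self.best_value, latest)
        return self.countdown > 0


def adjust_lr(lr, prev_obj, cur_obj, high_trigger=1.0, shrink_amt=0.99,
              low_trigger=0.99, grow_amt=1.01):
    """Shrink lr if the objective rose; grow it if it fell by less than 1%."""
    ratio = cur_obj / prev_obj
    if ratio > high_trigger:
        return lr * shrink_amt
    if ratio > low_trigger:
        return lr * grow_amt
    return lr


criterion = MonitorBasedSketch(prop_decrease=0.01, N=1)
history = []
for objective in [10.0, 8.0, 7.5, 7.49]:
    history.append(objective)
    if not criterion.continue_learning(history):
        print("stop after epoch", len(history))   # prints: stop after epoch 4
        break
```

With N : 1 as in the yaml file, a single epoch that improves by less than 1% (7.5 to 7.49 here) is enough to stop. A larger N tolerates that many slow epochs in a row, which matches the comment that N = 10 trains much longer.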

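As the yaml file above shows, yaml content resembles a Python dictionary: each key maps to a value. The keys are the parameters of the corresponding class's __init__() constructor. In other words, the values are passed into these constructors, and the resulting objects hold them. In the yaml code above:

- dataset comes from cifar10_preprocessed_train.pkl, the file produced in Step 1.
- model is an instance of pylearn2's pylearn2.models.rbm.GaussianBinaryRBM class.
- algorithm is an instance of pylearn2's pylearn2.training_algorithms.sgd.SGD class.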
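The mechanism described here can be illustrated without pylearn2 or PyYAML: each !obj:module.Class { ... } becomes a call Class(**arguments), and nested objects are built first. The sketch below is a simplified stand-in, not pylearn2.config.yaml_parse. It assumes the YAML has already been parsed into a nested dict in which a hypothetical "__obj__" key plays the role of the !obj tag. It imports the named class with importlib and passes the remaining keys as keyword arguments to its constructor. Standard-library classes replace the pylearn2 classes so that it runs anywhere.

```python
import importlib


def build(node):
    """Recursively turn {"__obj__": "pkg.mod.Class", **kwargs} into Class(**kwargs)."""
    if isinstance(node, dict):
        # Build children first so nested objects are passed to the constructor
        children = {key: build(value) for key, value in node.items() if key != "__obj__"}
        if "__obj__" not in node:
            return children
        module_name, _, class_name = node["__obj__"].rpartition(".")
        cls = getattr(importlib.import_module(module_name), class_name)
        return cls(**children)
    if isinstance(node, list):
        return [build(item) for item in node]
    return node


# Rough analogue of "!obj:argparse.Namespace { interval: !obj:datetime.timedelta {...}, ... }"
config = {
    "__obj__": "argparse.Namespace",   # plays the role of pylearn2.train.Train
    "interval": {"__obj__": "datetime.timedelta", "days": 1, "hours": 2},
    "ratio": {"__obj__": "fractions.Fraction", "numerator": 3, "denominator": 4},
    "save_freq": 1,
}
train_like = build(config)
print(train_like.interval, train_like.ratio, train_like.save_freq)  # prints: 1 day, 2:00:00 3/4 1
```

A consequence of this design is that the yaml keys must match the constructor's parameter names exactly. A misspelled key would surface as a TypeError from the constructor, not as a yaml syntax error.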

Once the .yaml file is configured, we need to launch the program that trains the parameters. That is what train.py does. Its code is:

#!/usr/bin/env python
"""
Script implementing the logic for training pylearn2 models.

This is intended to be a "driver" for most training experiments. A user
specifies an object hierarchy in a configuration file using a dictionary-like
syntax and this script takes care of the rest.

For example configuration files that are consumable by this script, see

    pylearn2/scripts/train_example
    pylearn2/scripts/autoencoder_example
"""
__authors__ = "Ian Goodfellow"
__copyright__ = "Copyright 2010-2012, Universite de Montreal"
__credits__ = ["Ian Goodfellow", "David Warde-Farley"]
__license__ = "3-clause BSD"
__maintainer__ = "Ian Goodfellow"
__email__ = "goodfeli@iro"

# Standard library imports
import argparse
import gc
import logging
import os

# Third-party imports
import numpy as np

# Local imports
from pylearn2.utils import serial
from pylearn2.utils.logger import (
    CustomStreamHandler, CustomFormatter, restore_defaults
)


class FeatureDump(object):
    def __init__(self, encoder, dataset, path, batch_size=None, topo=False):
        self.encoder = encoder
        self.dataset = dataset
        self.path = path
        self.batch_size = batch_size
        self.topo = topo

    def main_loop(self):
        if self.batch_size is None:
            if self.topo:
                data = self.dataset.get_topological_view()
            else:
                data = self.dataset.get_design_matrix()
            output = self.encoder.perform(data)
        else:
            myiterator = self.dataset.iterator(mode='sequential',
                                               batch_size=self.batch_size,
                                               topo=self.topo)
            chunks = []
            for data in myiterator:
                chunks.append(self.encoder.perform(data))
            output = np.concatenate(chunks)
        np.save(self.path, output)


def make_argument_parser():
    # The parser receives the command-line arguments
    parser = argparse.ArgumentParser(
        description="Launch an experiment from a YAML configuration file.",
        epilog='\n'.join(__doc__.strip().split('\n')[1:]).strip(),
        formatter_class=argparse.RawTextHelpFormatter
    )
    parser.add_argument('--level-name', '-L',
                        action='store_true',
                        help='Display the log level (e.g. DEBUG, INFO) '
                             'for each logged message')
    parser.add_argument('--timestamp', '-T',
                        action='store_true',
                        help='Display human-readable timestamps for '
                             'each logged message')
    parser.add_argument('--verbose-logging', '-V',
                        action='store_true',
                        help='Display timestamp, log level and source '
                             'logger for every logged message '
                             '(implies -T).')
    parser.add_argument('--debug', '-D',
                        action='store_true',
                        help='Display any DEBUG-level log messages, '
                             'suppressed by default.')
    # Positional argument: the YAML file given on the command line is stored in args.config
    parser.add_argument('config', action='store',
                        choices=None,
                        help='A YAML configuration file specifying the '
                             'training procedure')
    return parser


if __name__ == "__main__":
    parser = make_argument_parser()
    # Parse the command line; the path of the YAML file ends up in args.config
    args = parser.parse_args()
    # serial.load_train_file() ends with
    #     return yaml_parse.load_path(config_file_path)
    # i.e. it delegates to load_path() in pylearn2.config.yaml_parse and returns
    # the object built from the YAML file (here a Train object).
    train_obj = serial.load_train_file(args.config)
    try:
        iter(train_obj)  # iter() raises TypeError if train_obj is not iterable
        iterable = True
    except TypeError as e:
        iterable = False

    # Undo our custom logging setup.
    restore_defaults()
    # Set up the root logger with a custom handler that logs stdout for INFO
    # and DEBUG and stderr for WARNING, ERROR, CRITICAL.
    # logging is Python's logging module; getLogger() with no name returns the root logger.
    root_logger = logging.getLogger()
    if args.verbose_logging:
        formatter = logging.Formatter(fmt="%(asctime)s %(name)s %(levelname)s "
                                          "%(message)s")
        handler = CustomStreamHandler(formatter=formatter)
    else:
        if args.timestamp:
            prefix = '%(asctime)s '
        else:
            prefix = ''  # no prefix unless -T is given
        formatter = CustomFormatter(prefix=prefix, only_from='pylearn2')
        handler = CustomStreamHandler(formatter=formatter)
    root_logger.addHandler(handler)  # attach the handler that processes log records
    # Set the root logger level.
    if args.debug:
        root_logger.setLevel(logging.DEBUG)
    else:
        # Every log message carries a level; messages below INFO are dropped
        root_logger.setLevel(logging.INFO)

    if iterable:
        # train_obj is an iterable of training objects, one per phase;
        # enumerate() yields (index, element) pairs
        for number, subobj in enumerate(iter(train_obj)):
            # Publish a variable indicating the training phase.
            phase_variable = 'PYLEARN2_TRAIN_PHASE'
            phase_value = 'phase%d' % (number + 1)
            os.environ[phase_variable] = phase_value
            os.putenv(phase_variable, phase_value)
            # Execute this training phase.
            subobj.main_loop()
            # Clean up, in case there's a lot of memory used that's
            # necessary for the next phase.
            del subobj
            gc.collect()
    else:
        # train_obj already holds the dataset, model and algorithm, so main_loop()
        # trains the model on the data with that algorithm until the
        # termination criterion is met
        train_obj.main_loop()
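The tail of the script follows one rule: if train_obj is iterable, run each element as a separate phase and publish PYLEARN2_TRAIN_PHASE; otherwise call main_loop() once. That rule can be exercised with toy objects. In this sketch, ToyTrain and run_training are hypothetical stand-ins for pylearn2's Train object and the __main__ block. Only the control flow is reproduced, not logging or argument parsing.

```python
import gc
import os


class ToyTrain:
    """Hypothetical stand-in for a Train object: records the phase it ran in."""

    def __init__(self, name, log):
        self.name = name
        self.log = log

    def main_loop(self):
        phase = os.environ.get("PYLEARN2_TRAIN_PHASE", "<none>")
        self.log.append((self.name, phase))


def run_training(train_obj):
    try:
        iter(train_obj)          # raises TypeError for a single Train-like object
        iterable = True
    except TypeError:
        iterable = False

    if iterable:
        for number, subobj in enumerate(train_obj):
            # Assigning to os.environ also calls putenv() in CPython
            os.environ["PYLEARN2_TRAIN_PHASE"] = "phase%d" % (number + 1)
            subobj.main_loop()
            del subobj
            gc.collect()         # free memory before the next phase
    else:
        train_obj.main_loop()


log = []
run_training([ToyTrain("pretrain", log), ToyTrain("finetune", log)])
print(log)  # prints: [('pretrain', 'phase1'), ('finetune', 'phase2')]
```

Assignments to os.environ are passed on to putenv() automatically, so the explicit os.putenv call in train.py is redundant but harmless.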

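The heart of this is the main_loop() function. Once main_loop() is called, the program automatically uses the algorithm object to train the parameters of the model object on the data. So how exactly does this function tie the data, model and algorithm together? Let's try reading the source: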

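First, it is train_obj.main_loop() that ties the data, model and algorithm together. The name train_obj suggests it is an object of some class. My guess is that it's an instance of Pylearn2's Train class: the Pylearn2 package contains a module train.py that defines a Train class, and that class has a main_loop() method. Everything fits the guess, but is it really true?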

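Let's first see where train_obj comes from, since main_loop() is called on it. The program above shows train_obj = serial.load_train_file(args.config), so we follow serial to the source of serial.load_train_file().

- Its last statement is return yaml_parse.load_path(config_file_path), where config_file_path is the args.config passed in.
- Tracing further, load_path() calls load(), which in turn ultimately calls yaml.load().
- According to the comments in the source, this converts the .yaml configuration file into a graph of objects, and that graph should be exactly the Train object...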

All right, now it's time to look at main_loop():

def main_loop(self):
    """
    Repeatedly runs an epoch of the training algorithm, runs any
    epoch-level callbacks, and saves the model.
    """
    if self.algorithm is None:
        self.model.monitor = Monitor.get_monitor(self.model)
        self.setup_extensions()
        self.run_callbacks_and_monitoring()
        while True:
            rval = self.model.train_all(dataset=self.dataset)
            if rval is not None:
                raise ValueError("Model.train_all should not return anything. Use Model.continue_learning to control whether learning continues.")
            self.model.monitor.report_epoch()
            if self.save_freq > 0 and self.model.monitor.epochs_seen % self.save_freq == 0:
                self.save()
            continue_learning = self.model.continue_learning()
            assert continue_learning in [True, False, 0, 1]
            if not continue_learning:
                break
    else:
        # Hand the model and dataset to the algorithm: this is where the
        # algorithm, model and dataset get tied together
        self.algorithm.setup(model=self.model, dataset=self.dataset)
        # Set up the extensions listed under "extensions" in the .yaml file
        self.setup_extensions()
        if not hasattr(self.model, 'monitor'):
            # TODO: is this really necessary? I just put this error here
            # to prevent an AttributeError later, but I think we could
            # rewrite to avoid the AttributeError
            raise RuntimeError("The algorithm is responsible for setting"
                               " up the Monitor, but failed to.")
        if len(self.model.monitor._datasets) > 0:
            # This monitoring channel keeps track of a shared variable,
            # which does not need inputs nor data.
            self.model.monitor.add_channel(name="monitor_seconds_per_epoch",
                                           ipt=None,
                                           val=self.monitor_time,
                                           data_specs=(NullSpace(), ''),
                                           dataset=self.model.monitor._datasets[0])
        self.run_callbacks_and_monitoring()
        # Loop until the termination test says to stop
        while True:
            with log_timing(log, None, final_msg='Time this epoch:',
                            callbacks=[self.monitor_time.set_value]):
                # The core call: run one epoch of the training algorithm
                rval = self.algorithm.train(dataset=self.dataset)
            if rval is not None:
                raise ValueError("TrainingAlgorithm.train should not return anything. Use TrainingAlgorithm.continue_learning to control whether learning continues.")
            self.model.monitor.report_epoch()
            self.run_callbacks_and_monitoring()
            if self.save_freq > 0 and self.model.monitor._epochs_seen % self.save_freq == 0:
                self.save()
            # Termination test
            continue_learning = self.algorithm.continue_learning(self.model)
            assert continue_learning in [True, False, 0, 1]
            if not continue_learning:
                break

    self.model.monitor.training_succeeded = True

    if self.save_freq > 0:
        self.save()
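Stripped of monitoring channels and extensions, the algorithm branch of main_loop() boils down to this: call setup() once, then repeat train / report / maybe save / ask whether to continue. The toy classes below (ToyModel, ToyAlgorithm and ToyTrainLoop, all hypothetical and using no pylearn2 API) replay that control flow, so you can see how save_freq and continue_learning() interact.

```python
class ToyModel:
    def __init__(self):
        self.epochs_seen = 0


class ToyAlgorithm:
    """Runs a fixed number of epochs, then asks to stop."""

    def __init__(self, max_epochs):
        self.max_epochs = max_epochs

    def setup(self, model, dataset):
        self.dataset = dataset

    def train(self, dataset):
        pass  # a real algorithm would update the model parameters here

    def continue_learning(self, model):
        return model.epochs_seen < self.max_epochs


class ToyTrainLoop:
    def __init__(self, dataset, model, algorithm, save_freq):
        self.dataset, self.model, self.algorithm = dataset, model, algorithm
        self.save_freq = save_freq
        self.saves = []  # epoch numbers at which save() was called

    def save(self):
        self.saves.append(self.model.epochs_seen)

    def main_loop(self):
        self.algorithm.setup(model=self.model, dataset=self.dataset)
        while True:
            rval = self.algorithm.train(dataset=self.dataset)
            if rval is not None:
                raise ValueError("train() should not return anything")
            self.model.epochs_seen += 1      # stands in for monitor.report_epoch()
            if self.save_freq > 0 and self.model.epochs_seen % self.save_freq == 0:
                self.save()
            if not self.algorithm.continue_learning(self.model):
                break
        if self.save_freq > 0:
            self.save()                      # final save after training ends


loop = ToyTrainLoop(dataset=[0.0], model=ToyModel(), algorithm=ToyAlgorithm(3), save_freq=1)
loop.main_loop()
print(loop.saves)  # prints: [1, 2, 3, 3]
```

With save_freq : 1, as in the yaml file, the last epoch is saved twice: once inside the loop and once after it.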

Step 3: display the results.

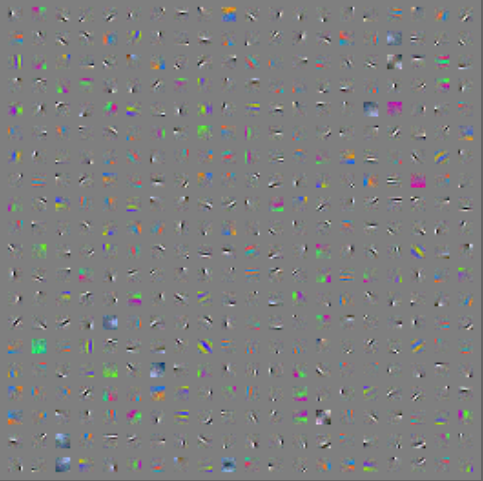

这部分就是看结果显示了,执行命令:python ../../show_weights.py cifar_grbm_smd.pkl 比如我这里执行后的结果显示如下:

You can also use plot_monitor.py to look at other results.
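Displaying GRBM weights roughly means three things: turning each hidden unit's 192 incoming weights back into an 8*8*3 patch, rescaling it to the 0-255 pixel range, and tiling the 400 patches into a grid. The helpers below sketch the reshaping, rescaling and grid-size steps in pure Python. The function names, the row-major/channels-last ordering and the per-filter min-max rescaling are assumptions; pylearn2's show_weights.py may order channels and normalize differently.

```python
def weights_to_patch(weights, size=8, channels=3):
    """Reshape a flat weight vector (row-major, channels last) into a
    size x size grid of color tuples scaled to 0..255."""
    if len(weights) != size * size * channels:
        raise ValueError("expected %d weights, got %d" % (size * size * channels, len(weights)))
    lo, hi = min(weights), max(weights)
    span = (hi - lo) or 1.0              # avoid dividing by zero for a flat filter
    scaled = [int(round(255 * (w - lo) / span)) for w in weights]
    pixels = [tuple(scaled[i:i + channels]) for i in range(0, len(scaled), channels)]
    return [pixels[r * size:(r + 1) * size] for r in range(size)]


def grid_shape(n_filters):
    """Smallest near-square grid (rows, cols) that holds n_filters tiles."""
    cols = 1
    while cols * cols < n_filters:
        cols += 1
    rows = -(-n_filters // cols)         # ceiling division
    return rows, cols


patch = weights_to_patch([i / 191.0 - 0.5 for i in range(192)])
print(len(patch), len(patch[0]))         # prints: 8 8
print(grid_shape(400))                   # prints: (20, 20) for nhid : 400
```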

Summary:

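When you use DL models and optimization algorithms that already exist in Pylearn2, you only need to configure the experiment's .yaml file, and tuning amounts to repeatedly editing that configuration. If you want to use a new DL model of your own, or your own objective function and optimization method, you have to write the corresponding classes yourself. I don't have any experience with that part yet: how to implement such a class, how to design its interface, or what has to change in the .yaml file. If you know about this, please share your ideas. There is a good online tutorial that uses Pylearn2 as an ordinary Python library to implement an XOR network: Neural network example using Pylearn2.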

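In addition, analyzing the Pylearn2 source shows that every algorithm must provide the following 4 methods:

- __init__(): the constructor.
- setup(): sets the algorithm up for the given model and dataset (building what is needed to train that model).
- train(): trains the model parameters, one epoch per call.
- continue_learning(): decides whether training should go on or terminate. This is the name main_loop() calls above.

The model module presumably also has some common interface methods.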
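The four-method contract can be written down as a small abstract base class. The sketch below is hypothetical: SketchAlgorithm, ToyModel and MinibatchSGD are not pylearn2 classes, and the real training algorithms work on Theano models and pylearn2 datasets. It only shows how the methods are used by a main_loop-style driver: setup() once, train() once per epoch, and continue_learning(model) after each epoch. The "model" is a single parameter w, fitted by minibatch gradient descent on the toy objective mean((w - x)^2).

```python
from abc import ABC, abstractmethod


class SketchAlgorithm(ABC):
    """Hypothetical mirror of the methods a pylearn2 training algorithm provides."""

    @abstractmethod
    def setup(self, model, dataset):
        """Prepare to train the given model on the given dataset."""

    @abstractmethod
    def train(self, dataset):
        """Run one epoch; must return None."""

    @abstractmethod
    def continue_learning(self, model):
        """Return True to keep training, False to stop."""


class ToyModel:
    def __init__(self, w=0.0):
        self.w = w            # the single trainable parameter
        self.epochs = 0


class MinibatchSGD(SketchAlgorithm):
    """Fits w to minimise the mean of (w - x)^2 over the dataset."""

    def __init__(self, learning_rate=0.1, batch_size=2, max_epochs=50):
        self.learning_rate = learning_rate
        self.batch_size = batch_size
        self.max_epochs = max_epochs
        self.model = None

    def setup(self, model, dataset):
        self.model = model

    def train(self, dataset):
        for start in range(0, len(dataset), self.batch_size):
            batch = dataset[start:start + self.batch_size]
            # d/dw mean((w - x)^2) = 2 * mean(w - x)
            grad = 2.0 * sum(self.model.w - x for x in batch) / len(batch)
            self.model.w -= self.learning_rate * grad
        self.model.epochs += 1

    def continue_learning(self, model):
        return model.epochs < self.max_epochs


data = [1.0, 2.0, 3.0, 4.0]
model = ToyModel()
algo = MinibatchSGD(learning_rate=0.1, batch_size=2, max_epochs=200)
algo.setup(model=model, dataset=data)
while True:
    algo.train(dataset=data)
    if not algo.continue_learning(model):
        break
print(round(model.w, 3))  # prints 2.611: near, but not exactly at, the data mean 2.5
```

With a constant learning rate and a fixed batch order, w settles slightly off the mean because each epoch ends on the [3, 4] batch. Using batch_size=4 (full-batch gradient descent) converges to 2.5.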

Appendix:

How I handled some errors that may come up during the experiment:

A:

If running python make_dataset.py gives the error:

raise IOError("permission error creating %s" % filepath)
IOError: permission error creating cifar10_preprocessed_train.pkl

The message suggests a permissions problem, so I changed the command to:

sudo python make_dataset.py

If you then get the error:

pylearn2.datasets.exc.NoDataPathError: You need to define your PYLEARN2_DATA_PATH environment variable. If you are using a computer at LISA, this should be set to /data/lisa/data.

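This means the PYLEARN2_DATA_PATH environment variable is not set, even though we set it earlier! Why? Perhaps the variable was set with root privileges while the command was run as an ordinary user. Another likely cause is that sudo resets most environment variables by default, so a variable exported in your own ~/.bashrc is not passed to a command run with sudo. Switching to root and running root#: python make_dataset.py succeeded and produced cifar10_preprocessed_train.pkl.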

But then running ../../train.py cifar_grbm_smd.yaml gave the error: ImportError: Could not import pylearn2.models but could import pylearn2. Original exception: No module named compat.python2x

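At this point I was fairly sure it was a permissions problem. My fix was to reinstall pylearn2 as an ordinary user, set the environment variables again and put the downloaded data in place. Then I ran, as an ordinary user: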

python make_dataset.py

This successfully generated cifar10_preprocessed_train.pkl. Annoyingly, the subsequent ../../train.py cifar_grbm_smd.yaml still failed with the same error.

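As it turned out, the problem was mainly the wrong Theano version. pylearn2 should be used with the development version of Theano, so updating Theano as described earlier in this post fixes it.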

B:

In the weight-display stage, running sudo python ../../show_weights.py cifar_grbm_smd.pkl may print the following message:

You need to choose an image viewer program that pylearn2 should use. Then tell pylearn2 to use that image viewer program by defining your PYLEARN2_VIEWER_COMMAND environment variable. You need to choose PYLEARN_VIEWER_COMMAND such that running ${PYLEARN2_VIEWER_COMMAND} image.png
in a command prompt on your machine will do the following:
-open an image viewer in a new process.
-not return until you have closed the image.
Acceptable commands include:
gwenview
eog --new-instance
This is assuming that you have gwenview or a version of eog that supports --new-instance
......

This means pylearn2 has no image viewer configured. First install gwenview: sudo apt-get install gwenview.

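Then set the PYLEARN2_VIEWER_COMMAND environment variable. Run vim ~/.bashrc and add a line at the end that points to the gwenview executable. With the default install location, I added: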

export PYLEARN2_VIEWER_COMMAND=/usr/bin/gwenview

After saving, run source ~/.bashrc.
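According to the message above, pylearn2 runs ${PYLEARN2_VIEWER_COMMAND} image.png and waits until the viewer exits. A rough Python equivalent of that contract is sketched below. viewer_argv and show_image are hypothetical helper names, not pylearn2 functions. The sketch splits the variable with shlex and runs it with subprocess.call, which blocks until the viewer is closed.

```python
import os
import shlex
import subprocess


def viewer_argv(command, image_path):
    """Build the argument list for '${PYLEARN2_VIEWER_COMMAND} image.png'."""
    return shlex.split(command) + [image_path]


def show_image(image_path, environ=None):
    """Open image_path with the configured viewer and wait for it to close."""
    env = os.environ if environ is None else environ
    command = env.get("PYLEARN2_VIEWER_COMMAND")
    if not command:
        raise RuntimeError("PYLEARN2_VIEWER_COMMAND is not set")
    # subprocess.call blocks until the viewer process exits
    return subprocess.call(viewer_argv(command, image_path))


print(viewer_argv("eog --new-instance", "weights.png"))  # prints: ['eog', '--new-instance', 'weights.png']
```

Splitting with shlex lets the variable carry options such as --new-instance, while a file name containing spaces is still passed as a single argument.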

References:

Pylearn2: a machine learning research library

Installing Theano(Bleeding-edge install instruction)

Pylearn2 installation and testing (lucktroy's CSDN blog)
