anisotropy texture filtering

http://www.extremetech.com/computing/51994-the-naked-truth-about-anisotropic-filtering

1、 为什么在纹理采样时需要texture filter(纹理过滤)。

我们的纹理是要贴到三维图形表面的,而三维图形上的pixel中心和纹理上的texel中心并不一至(pixel不一定对应texture上的采样中心texel),大小也不一定一至。当纹理大于三维图形表面时,导至一个像素被映射到许多纹理像素上;当维理小于三维图形表面时,许多个象素都映射到同一纹理。

当这些情况发生时,贴图就会变得模糊或发生错位,马赛克。要解决此类问题,必须通过技术平滑texel和pixel之间的对应。这种技术就是纹理滤波。

不同的过滤模式,计算复杂度不一样,会得到不同的效果。过滤模式由简单到复杂包括:Nearest Point Sampling(最近点采样),Bilinear(双线性过滤)、Trilinear(三线性过滤)、Anisotropic Filtering(各向异性过滤)。

在了解这些之前,有必要了解什么是MipMap和什么时各向同性,各向异性。

2、 什么是MipMap?

Mipmap由Lance Williams 在1983的一篇文章“Pyramidal parametrics”中提出。Wiki中有很详细的介绍( http://en.wikipedia.org/wiki/Mipmap ) . 比如一张256X256的图,在长和宽方向每次减少一倍,生成:128X128,64X64,32X32,16X16,8X8,4X4,2X2,1X1,八张图,组成MipMap,如下图示。

Mipmap早已被硬件支持,硬件会自动为创建的Texture生成mipmap的各级。在D3D的API:CreateTexture中有一个参数levels,就是用于指定生成mipmap到哪个级别,当不指定时就一直生成到1X1。

3、 什么是各向同性和各向异性?

当需要贴图的三维表面平行于屏幕(viewport),则是各向同性的。当要贴图的三维表面与屏幕有一定角度的倾斜,则是各向异性的。

也可以这样理解,当一个texture贴到三维表面上从Camera看来没有变形,投射到屏幕空间中后U方向和V方向比例仍然是一样的,便可以理解成各向同性。反之则认为是各向异性。

4、 Nearest Point Sampling(最近点采样)

这个最简单,每个像素的纹理坐标,并不是刚好对应Texture上的一个采样点texel,怎么办呢?最近点采样取最接近的texel进行采样。

当纹理的大小与贴图的三维图形的大小差不多时,这种方法非常有效和快捷。如果大小不同,纹理就需要进行放大或缩小,这样,结果就会变得矮胖、变形或模糊。

5、 Bilinear(双线性过滤)

双线性过滤以pixel对应的纹理坐标为中心,采该纹理坐标周围4个texel的像素,再取平均,以平均值作为采样值。

双线性过滤像素之间的过渡更加平滑,但是它只作用于一个MipMap Level,它选取texel和pixel之间大小最接近的那一层MipMap进行采样。当和pixel大小匹配的texel大小在两层Mipmap level之间时,双线性过滤在有些情况效果就不太好。于是就有了三线性过滤。

6、 Trilinear(三线性过滤)

三线性过滤以双线性过滤为基础。会对pixel大小与texel大小最接近的两层Mipmap level分别进行双线性过滤,然后再对两层得到的结果进生线性插值。

三线性过滤在一般情况下效果非常理想了。但是到目前为止,我们均是假设是texture投射到屏幕空间是各向同性的。但是当各向异性的情况时,效果仍然不理想,于是产生了Anisotropic Filtering(各向异性过滤)。

7、 Anisotropic Filtering(各向异性过滤)

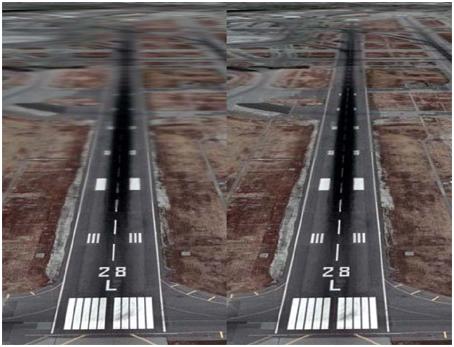

先看效果,左边的图采用三线性过滤,右边的图采用各向异性过滤。

各向同性的过滤在采样的时候,是对正方形区域里行采样。各向异性过滤把纹理与屏幕空间的角度这个因素考虑时去。简单地说,它会考滤一个pixel(x:y=1:1)对应到纹理空间中在u和v方向上u和v的比例关系,当u:v不是1:1时,将会按比例在各方向上采样不同数量的点来计算最终的结果(这时采样就有可能是长方形区域)。

我们一般指的Anisotropic Filtering(AF)均是基于三线过滤的Anisotropic Filtering,因此当u:v不为1:1时,则Anisotropic Filtering比Trilinear需要采样更多的点,具体要采多少,取决于是多少X的AF,现在的显卡最多技持到16X AF。

当开启16X AF的时候,硬件并不是对所有的texture采样都用16X AF,而是需要先计算屏幕空间与纹理空间的夹角(量化后便是上面所说的u:v),只有当夹角大到需要16X时,才会真正使用16X.

如果想了解AF的实现原理,可以查阅此篇Paper: “Implementing an anisotropic texture filter”. 现在AF都是硬件实现,因此只有少数人才清楚AF就尽是怎样实现了(其实细节我也没搞清楚),其实完全可以由Pixel Shader来实现AF,当然性能和由硬件做是没得比的。

8、 各过滤模式性能比较。

下表是各种过滤模式采一个pixel需要sample的次数:

|

Sample Number |

|

|

Nearest Point Sampling |

1 |

|

Bilinear |

4 |

|

Trilinear |

8 |

|

Anisotropic Filtering 4X |

32 |

|

Anisotropic Filtering 16X |

128 |

Anisotropic Filtering 16X效果最好,但是显卡Performance会下降很多,当然也是测试你手中显卡Texture Unit的好方法。如果你觉得你的显卡够牛,那么就把AA和AF都打到最高再试试吧:)

In 3D computer graphics, anisotropic filtering (abbreviated AF) is a method of enhancing the image quality of textures on surfaces of computer graphics that are atoblique viewing angles with respect to the camera where the projection of the texture (not the polygon or other primitive on which it is rendered) appears to be non-orthogonal (thus the origin of the word: "an" for not, "iso" for same, and "tropic" from tropism, relating to direction; anisotropic filtering does not filter the same in every direction).

Like bilinear and trilinear filtering, anisotropic filtering eliminates aliasing effects, but improves on these other techniques by reducing blur and preserving detail at extreme viewing angles.

Anisotropic compression is relatively intensive (primarily memory bandwidth and to some degree computationally, though the standard space-time tradeoff rules apply) and only became a standard feature of consumer-level graphics cards in the late 1990s. Anisotropic filtering is now common in modern graphics hardware (and video driver software) and is enabled either by users through driver settings or by graphics applications and video games through programming interfaces.

Contents

[hide]

An improvement on isotropic MIP mapping[edit]

An example of anisotropic mipmap image storage: the principal image on the top left is accompanied by filtered, linearly transformed copies of reduced size. (click to compare to previous, isotropic mipmaps of the same image)

From this point forth, it is assumed the reader is familiar with MIP mapping.

If we were to explore a more approximate anisotropic algorithm, RIP mapping, as an extension from MIP mapping, we can understand how anisotropic filtering gains so much texture mapping quality. If we need to texture a horizontal plane which is at an oblique angle to the camera, traditional MIP map minification would give us insufficient horizontal resolution due to the reduction of image frequency in the vertical axis. This is because in MIP mapping each MIP level is isotropic, so a 256 × 256 texture is downsized to a 128 × 128 image, then a 64 × 64 image and so on, so resolution halves on each axis simultaneously, so a MIP map texture probe to an image will always sample an image that is of equal frequency in each axis. Thus, when sampling to avoid aliasing on a high-frequency axis, the other texture axes will be similarly downsampled and therefore potentially blurred.

With RIP map anisotropic filtering, in addition to downsampling to 128 × 128, images are also sampled to 256 × 128 and 32 × 128 etc. These anisotropically downsampled images can be probed when the texture-mapped image frequency is different for each texture axis. Therefore, one axis need not blur due to the screen frequency of another axis, and aliasing is still avoided. Unlike more general anisotropic filtering, the RIP mapping described for illustration is limited by only supporting anisotropic probes that are axis-aligned in texture space, so diagonal anisotropy still presents a problem, even though real-use cases of anisotropic texture commonly have such screenspace mappings.

In layman's terms, anisotropic filtering retains the "sharpness" of a texture normally lost by MIP map texture's attempts to avoid aliasing. Anisotropic filtering can therefore be said to maintain crisp texture detail at all viewing orientations while providing fast anti-aliased texture filtering.

Degree of anisotropy supported[edit]

Different degrees or ratios of anisotropic filtering can be applied during rendering and current hardware rendering implementations set an upper bound on this ratio. This degree refers to the maximum ratio of anisotropy supported by the filtering process. So, for example 4:1 (pronounced “4-to-1”) anisotropic filtering will continue to sharpen more oblique textures beyond the range sharpened by 2:1.

In practice what this means is that in highly oblique texturing situations a 4:1 filter will be twice as sharp as a 2:1 filter (it will display frequencies double that of the 2:1 filter). However, most of the scene will not require the 4:1 filter; only the more oblique and usually more distant pixels will require the sharper filtering. This means that as the degree of anisotropic filtering continues to double there are diminishing returns in terms of visible quality with fewer and fewer rendered pixels affected, and the results become less obvious to the viewer.

When one compares the rendered results of an 8:1 anisotropically filtered scene to a 16:1 filtered scene, only a relatively few highly oblique pixels, mostly on more distant geometry, will display visibly sharper textures in the scene with the higher degree of anisotropic filtering, and the frequency information on these few 16:1 filtered pixels will only be double that of the 8:1 filter. The performance penalty also diminishes because fewer pixels require the data fetches of greater anisotropy.

In the end it is the additional hardware complexity vs. these diminishing returns, which causes an upper bound to be set on the anisotropic quality in a hardware design. Applications and users are then free to adjust this trade-off through driver and software settings up to this threshold.

Implementation[edit]

True anisotropic filtering probes the texture anisotropically on the fly on a per-pixel basis for any orientation of anisotropy.

In graphics hardware, typically when the texture is sampled anisotropically, several probes (texel samples) of the texture around the center point are taken, but on a sample pattern mapped according to the projected shape of the texture at that pixel.

Each anisotropic filtering probe is often in itself a filtered MIP map sample, which adds more sampling to the process. Sixteen trilinear anisotropic samples might require 128 samples from the stored texture, as trilinear MIP map filtering needs to take four samples times two MIP levels and then anisotropic sampling (at 16-tap) needs to take sixteen of these trilinear filtered probes.

However, this level of filtering complexity is not required all the time. There are commonly available methods to reduce the amount of work the video rendering hardware must do.

The anisotropic filtering method most commonly implemented on graphics hardware is the composition of the filtered pixel values from only one line of MIP map samples, which is referred to as "footprint assembly".[1][2]

Performance and optimization[edit]

The sample count required can make anisotropic filtering extremely bandwidth-intensive. Multiple textures are common; each texture sample could be four bytes or more, so each anisotropic pixel could require 512 bytes from texture memory, although texture compression is commonly used to reduce this.

A video display device can easily contain over two million pixels, and desired application framerates are often upwards of 30 frames per second. As a result, the required texture memory bandwidth may grow to large values. Ranges of hundreds of gigabytes per second of pipeline bandwidth for texture rendering operations is not unusual where anisotropic filtering operations are involved.[citation needed]

Fortunately, several factors mitigate in favor of better performance:

- The probes themselves share cached texture samples, both inter-pixel and intra-pixel.

- Even with 16-tap anisotropic filtering, not all 16 taps are always needed because only distant highly oblique pixel fills tend to be highly anisotropic.

- Highly Anisotropic pixel fill tends to cover small regions of the screen (i.e. generally under 10%)

- Texture magnification filters (as a general rule) require no anisotropic filtering.

anisotropy texture filtering的更多相关文章

- [ZZ] The Naked Truth About Anisotropic Filtering

http://www.extremetech.com/computing/51994-the-naked-truth-about-anisotropic-filtering In the seemin ...

- OpenGL: 纹理采样 texture sample

Sampler (GLSL) Sampler通常是在Fragment shader(片元着色器)内定义的,这是一个uniform类型的变量,即处理不同的片元时这个变量是一致不变的.一个sampler和 ...

- OpenGL之纹理贴图(Texture)

学习自: https://learnopengl-cn.github.io/01%20Getting%20started/06%20Textures/ 先上一波效果图: 实际上就是:画了一个矩形,然后 ...

- OpenGL ES: 纹理采样 texture sample

Sampler (GLSL) Sampler通常是在Fragment shader(片元着色器)内定义的,这是一个uniform类型的变量,即处理不同的片元时这个变量是一致不变的.一个sampler和 ...

- 【OpenGL】纹理(Texture)

纹理是一个2D图片(也有1D和3D),它用来添加物体的细节:这就像有一张绘有砖块的图片贴到你的3D的房子上,你的房子看起来就有了一个砖墙.因为我们可以在一张图片上插入足够多的细节,这样物体就会拥有很多 ...

- OpenGL入门1.4:纹理/贴图Texture

每一个小步骤的源码都放在了Github 的内容为插入注释,可以先跳过 前言 游戏玩家对Texture这个词应该不陌生,我们已经知道了怎么为每个顶点添加颜色来增加图形的细节,但,如果想让图形看起来更真实 ...

- Mipmap与纹理过滤

为了加快渲染速度和减少纹理锯齿,贴图被处理成由一系列被预先计算和优化过的图片组成的文件,这样的贴图被称为Mipmap. 使用DirectX Texture Tool(DX自带工具)预生成Mipmap ...

- Computer Graphics Research Software

Computer Graphics Research Software Helping you avoid re-inventing the wheel since 2009! Last update ...

- HTML5 3D 粒子波浪动画特效DEMO演示

需要thress.js插件: http://github.com/mrdoob/three.js // three.js - http://github.com/mrdoob/three.js ...

随机推荐

- Session入门

Session是运行在服务器的,不可造假,例如:医生需要一个私人账本,记录病人编号和身份的对应关系.由于身份证无法造假,所以能够保证信息不被假冒.两点:身份证无法造假,这个身份证就可以唯一标识这个用户 ...

- C++实现大数据乘法

结构体定义与封装 struct bigdatacom { private : ]; ]; public : void init(const char *str1,const char *str2) { ...

- Fresco 源码分析(二) Fresco客户端与服务端交互(2) Fresco.initializeDrawee()分析 续

4.2.1.2 Fresco.initializeDrawee()的过程 续 继续上篇博客的分析Fresco.initializeDrawee() sDraweeControllerBuilderSu ...

- laravel框架中注册信息验证

.路由配置 <?php Route::. 控制器分配页面及验证表单提交内容 <?php .form 表单验证 {{ Form::open(array().slideUp(); < ...

- android国外网站

转载来自 http://www.23apk.com/?p=305 http://www.androidboards.com/ http://www.androidev.com/ http://andr ...

- hdu 1430+hdu 3567(预处理)

题目链接:http://acm.hdu.edu.cn/showproblem.php?pid=1430 思路:由于只是8种颜色,所以标号就无所谓了,对起始状态重新修改标号为 12345678,对目标状 ...

- 小甲鱼PE详解之基址重定位详解(PE详解10)

今天有一个朋友发短消息问我说“老师,为什么PE的格式要讲的这么这么细,这可不是一般的系哦”.其实之所以将PE结构放在解密系列继基础篇之后讲并且尽可能细致的讲,不是因为小甲鱼没事找事做,主要原因是因为P ...

- kinect学习笔记(二)—— Sdk平台的搭建~、

一.资源下载 由于我们使用的kinect v1.0,所以我们只需要使用1.8版本的sdk就好了,然后资源包,在QQ群的共享里面已经有啦,所以大家可以直接下载. 二.软件安装 ...

- web应用配置

tomcat 的 server.html 配置文件 加在</Host>之上 <Context path=”/itcast” docBase=”c:\news” /> path虚 ...

- DP/最短路 URAL 1741 Communication Fiend

题目传送门 /* 题意:程序从1到n版本升级,正版+正版->正版,正版+盗版->盗版,盗版+盗版->盗版 正版+破解版->正版,盗版+破解版->盗版 DP:每种情况考虑一 ...