Load Balancing for Coherence Proxies

In Coherence*Extend mode, the official documentation describes proxy load balancing as follows:

Extend client connections are load balanced across proxy service members. By default, a proxy-based strategy is used that distributes client connections to proxy service members that are being utilized the least. Custom proxy-based strategies can be created or the default strategy can be modified as required. As an alternative, a client-based load balance strategy can be implemented by creating a client-side address provider or by relying on randomized client connections to proxy service members. The random approach provides minimal balancing as compared to proxy-based load balancing.

Proxy-based load balancing is the default strategy that is used to balance client connections between two or more members of the same proxy service. The strategy is weighted by a proxy's existing connection count, then by its daemon pool utilization, and lastly by its message backlog.
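The ordering described in the paragraph above (connection count first, then daemon pool utilization, then message backlog) can be sketched as a chained comparator. This is only a simplified model for illustration, not Coherence's actual implementation: the `ProxyLoad` class, its field names, and `pickLeastUtilized` are hypothetical and do not belong to the Coherence API.

```java
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

// Hypothetical snapshot of one proxy service member's load (not a Coherence class).
final class ProxyLoad {
    final String name;
    final int connectionCount;          // existing client connections
    final double daemonPoolUtilization; // assumed: fraction of busy worker threads, 0.0 to 1.0
    final int messageBacklog;           // messages waiting to be processed

    ProxyLoad(String name, int connectionCount, double daemonPoolUtilization, int messageBacklog) {
        this.name = name;
        this.connectionCount = connectionCount;
        this.daemonPoolUtilization = daemonPoolUtilization;
        this.messageBacklog = messageBacklog;
    }
}

final class ProxyLoadOrderingSketch {
    // Compare by connection count, break ties by pool utilization, then by backlog.
    static final Comparator<ProxyLoad> LEAST_UTILIZED_FIRST =
            Comparator.comparingInt((ProxyLoad p) -> p.connectionCount)
                    .thenComparingDouble(p -> p.daemonPoolUtilization)
                    .thenComparingInt(p -> p.messageBacklog);

    // Returns the member that a new client connection would be sent to in this model.
    static ProxyLoad pickLeastUtilized(List<ProxyLoad> members) {
        return members.stream()
                .min(LEAST_UTILIZED_FIRST)
                .orElseThrow(() -> new IllegalArgumentException("no proxy members"));
    }

    public static void main(String[] args) {
        ProxyLoad busy = new ProxyLoad("proxy-9099", 1, 1.0, 6);
        ProxyLoad idle = new ProxyLoad("proxy-9100", 0, 0.0, 0);
        System.out.println(pickLeastUtilized(Arrays.asList(busy, idle)).name);
    }
}
```

Note that in this model the comparison only runs when a new connection arrives; an already established connection is not moved, however busy its proxy becomes.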

The proxy-based load balancing strategy is configured within a <proxy-scheme> definition using a <load-balancer> element that is set to proxy. For clarity, the following example explicitly specifies the strategy. However, the strategy is used by default if no strategy is specified and is not required in a proxy scheme definition.

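This is rather vague. With WebLogic as the front end connecting to a back-end Coherence cluster, we simulate a real production environment and look at how it actually behaves.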

proxy-override.xml for the proxy nodes:

<?xml version="1.0"?> <caching-schemes> </backing-map-scheme> <proxy-scheme> </caching-schemes>

storage-override.xml for the storage node:

<?xml version="1.0"?> <cache-config> <caching-scheme-mapping> <listener/>

client.xml for the client:

<?xml version="1.0"?> <caching-schemes>

client-2.xml, the configuration file for a second client:

<?xml version="1.0"?> <caching-schemes>

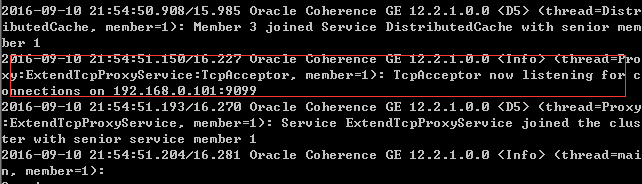

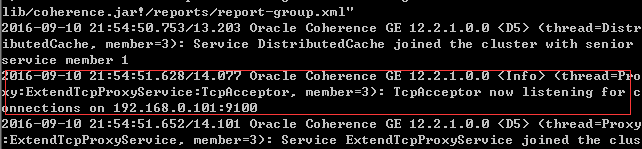

Start two proxy nodes with proxy-server.cmd; they listen on ports 9099 and 9100:

"%java_exec%" -server -showversion -Dtangosol.coherence.mode=prod -Dtangosol.coherence.management.remote=true -Dtangosol.coherence.cacheconfig=E:\wls12c\coherence\bin\proxy-override.xml %java_opts% -cp "%coherence_home%\lib\coherence.jar" com.tangosol.net.DefaultCacheServer %*

Start one storage node with storage-cmd:

"%java_exec%" -server -showversion -Dtangosol.coherence.mode=prod -Dtangosol.coherence.management.remote=true -Dtangosol.coherence.management=all %java_opts% -Dtangosol.coherence.cacheconfig=E:\wls12c\coherence\bin\storage-override.xml -cp "%coherence_home%\lib\coherence.jar" com.tangosol.net.DefaultCacheServer %*

Add the following to WebLogic's setDomainEnv.cmd file:

set JAVA_OPTIONS=%JAVA_OPTIONS% -Dtangosol.coherence.cacheconfig="E:\wls12c\coherence\bin\client.xml" -Dtangosol.coherence.tcmp.enabled=false

set CLASSPATH=E:\wls1212\coherence\lib\coherence.jar;%CLASSPATH%

Then deploy a web application. Its core is a single JSP file, coput.jsp, which puts 10,000 objects into the cache in one batch:

<!DOCTYPE HTML PUBLIC "-//W3C//DTD HTML 4.01 Transitional//EN" <%@ page contentType="text/html;charset=windows-1252"%> <% for (int i=0;i<10000;i++) { %> </body>

Start WebLogic and access the page. The connection goes mainly to the proxy server on port 9099.

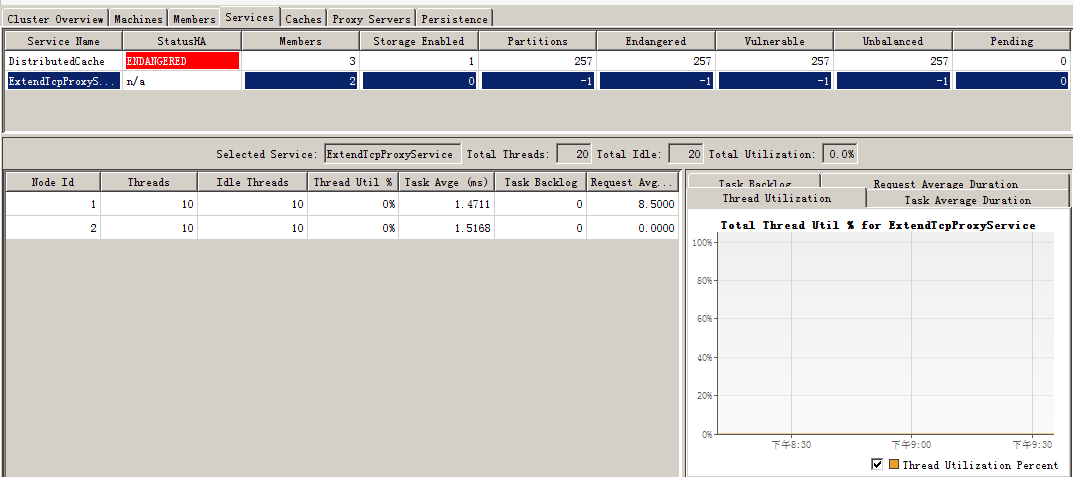

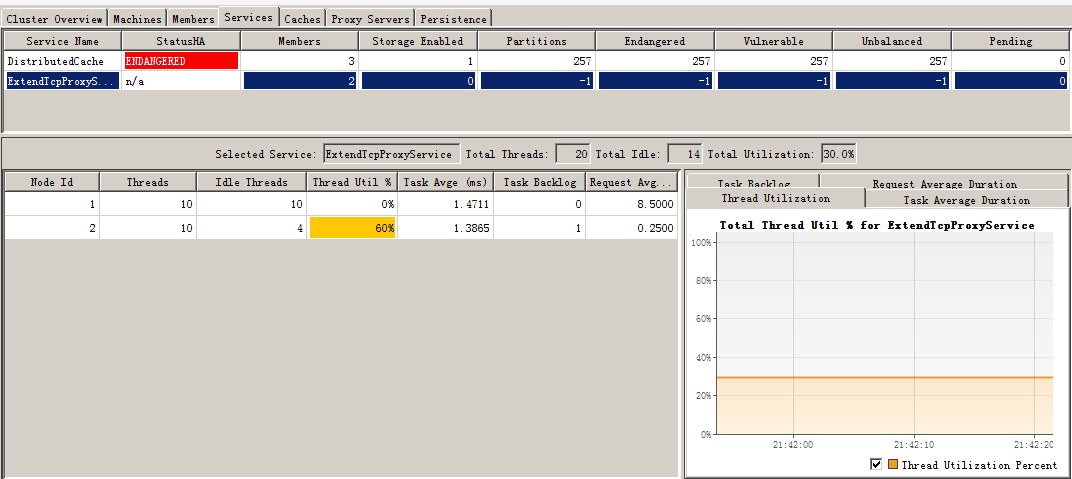

With no load, the proxy threads as monitored in jvisualvm look like this; each proxy has 10 idle threads:

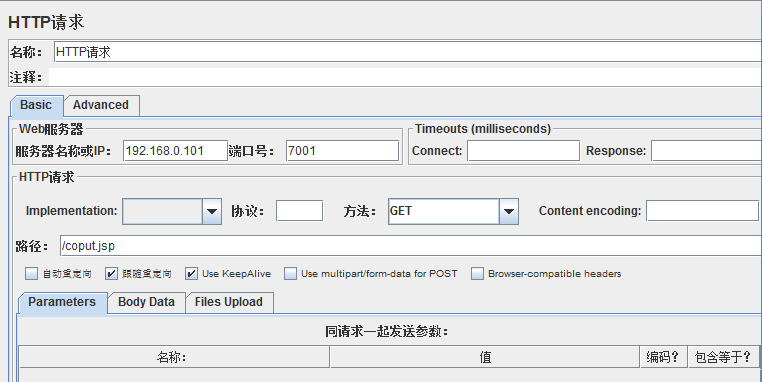

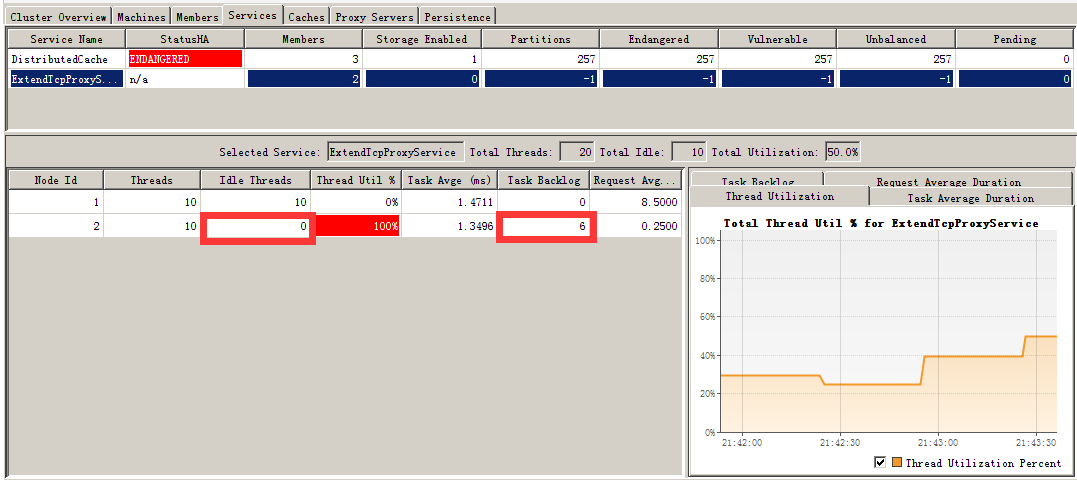

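Run a 20-thread load test with JMeter. The load lands mainly on the WebLogic server.

Once the load builds up, the threads look like this:

Even when the number of idle threads drops to 0 and 6 requests are waiting in the backlog, nothing is routed to the other proxy.

The conclusions are:

- Coherence treats one WebLogic Server instance as a single client. It does not load balance based on the threads inside that client (this is not a bug but how the product is designed).

- WebLogic Server keeps a long-lived connection to Coherence, which is dropped only if no thread uses it within the timeout. Under heavy load WebLogic Server keeps using that one connection, regardless of whether the proxy behind it is already saturated.

- The number of threads configured for a proxy is limited (512 at most), and so are the resources a proxy can use. Under heavy load the proxy may slow down or even crash, so the best approach is still to spread the load across different proxies.

- When several WebLogic instances connect, however, they are load balanced. Strictly speaking, the Coherence cluster balances by the client's NIC and port.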
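The conclusions above come down to one point: balancing happens per connection, not per request. The toy simulation below illustrates that under stated assumptions. It is not Coherence code; the class and method names are hypothetical, and the only balancing rule modelled is "fewest existing connections wins, first proxy wins a tie".

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Toy model: a proxy is chosen once per client connection, never per request.
final class ConnectionLevelBalancingSketch {
    private final Map<Integer, Integer> connectionsByProxy = new LinkedHashMap<>();
    private final Map<Integer, Integer> requestsByProxy = new LinkedHashMap<>();

    ConnectionLevelBalancingSketch(int... proxyPorts) {
        if (proxyPorts.length == 0) {
            throw new IllegalArgumentException("at least one proxy port is required");
        }
        for (int port : proxyPorts) {
            connectionsByProxy.put(port, 0);
            requestsByProxy.put(port, 0);
        }
    }

    // One call per client (for example one WebLogic instance): pick the proxy with the fewest connections.
    int connect() {
        int best = -1;
        for (Map.Entry<Integer, Integer> e : connectionsByProxy.entrySet()) {
            if (best == -1 || e.getValue() < connectionsByProxy.get(best)) {
                best = e.getKey();
            }
        }
        connectionsByProxy.merge(best, 1, Integer::sum);
        return best;
    }

    // Every request from a client travels over the connection it already holds.
    void sendRequests(int proxyPort, int count) {
        requestsByProxy.merge(proxyPort, count, Integer::sum);
    }

    int requestsHandledBy(int proxyPort) {
        return requestsByProxy.get(proxyPort);
    }

    public static void main(String[] args) {
        ConnectionLevelBalancingSketch cluster = new ConnectionLevelBalancingSketch(9099, 9100);
        int proxy = cluster.connect();     // a single WebLogic instance connects once
        cluster.sendRequests(proxy, 2000); // 20 threads x 100 requests share that connection
        System.out.println("9099 handled " + cluster.requestsHandledBy(9099));
        System.out.println("9100 handled " + cluster.requestsHandledBy(9100));
    }
}
```

In this model a second call to `connect()` (a second client instance) would land on the other proxy, which matches the last conclusion in the list.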

Modify coput.jsp as follows:

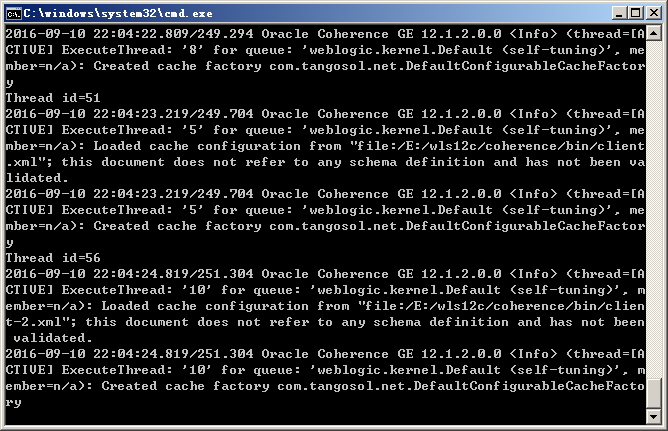

That is, a different XML configuration file is loaded depending on the thread ID, so the load is split evenly.

<!DOCTYPE HTML PUBLIC "-//W3C//DTD HTML 4.01 Transitional//EN" <%@ page contentType="text/html;charset=windows-1252"%> <% for (int i=0;i<10000;i++) { %> </body>
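The selection step can be sketched as follows. The JSP body did not survive in the listing above, so this is only an assumption about its shape: the helper `configFor` is hypothetical, and mapping even thread IDs to client.xml and odd ones to client-2.xml is an arbitrary choice for illustration. How the chosen file is then handed to Coherence is left out on purpose.

```java
// Sketch: map a thread ID onto one of the client configuration files.
final class ClientConfigSelectorSketch {
    // File names taken from the article; the order is an assumption.
    static final String[] CLIENT_CONFIGS = {"client.xml", "client-2.xml"};

    // floorMod keeps the index non-negative for any long value.
    static String configFor(long threadId) {
        int index = (int) Math.floorMod(threadId, (long) CLIENT_CONFIGS.length);
        return CLIENT_CONFIGS[index];
    }

    public static void main(String[] args) {
        long id = Thread.currentThread().getId();
        System.out.println("thread " + id + " uses " + configFor(id));
    }
}
```

The split is only as even as the distribution of thread IDs in the server's worker pool, so it should be checked against the proxy connection counts rather than assumed.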

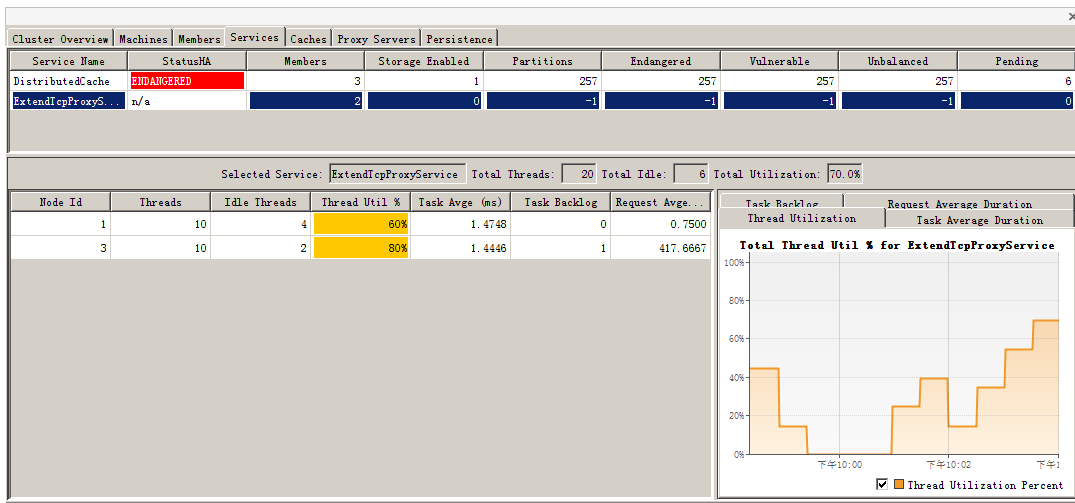

Run the load test again:

The load is now spread across both proxies.

The WebLogic server log shows the calls being made according to the thread ID.
