Rumor Detection (PLAN): "Interpretable Rumor Detection in Microblogs by Attending to User Interactions"

Paper information

Title: Interpretable Rumor Detection in Microblogs by Attending to User Interactions

Authors: Ling Min Serena Khoo, Hai Leong Chieu, Zhong Qian, Jing Jiang

Source: 2020

Paper: download

Code: download

Background

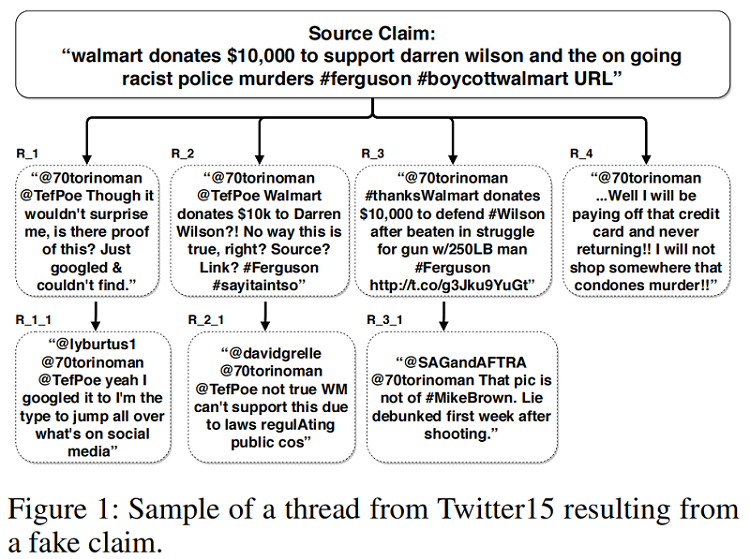

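Rumor detection based on crowd intelligence: Figure 1

Key point of the paper: tree-structured rumor detection models tend to ignore the interactions between branches.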

1 Introduction

Motivation: a user posting a reply might be replying to the entire thread rather than to a specific user.

Method: We propose a post-level attention model (PLAN) to model long distance interactions between tweets with the multi-head attention mechanism in a transformer network.

We investigated variants of this model:

- a structure aware self-attention model (StA-PLAN) that incorporates tree structure information in the transformer network;

- a hierarchical token and post-level attention model (StA-HiTPLAN) that learns a sentence representation with token-level self-attention.

Contributions:

- We utilize the attention weights from our model to provide both token-level and post-level explanations behind the model's prediction. To the best of our knowledge, we are the first paper that has done this.

- We compare against previous works on two data sets: PHEME 5 events, and Twitter15 and Twitter16. Previous works only evaluated on one of the two data sets.

- Our proposed models could outperform current state-of-the-art models for both data sets.

Existing approaches to rumor detection rely on:

(i) the content of the claim.

(ii) the bias and social network of the source of the claim.

(iii) fact checking with trustworthy sources.

(iv) community response to the claims.

2 Approaches

2.1 Recursive Neural Networks

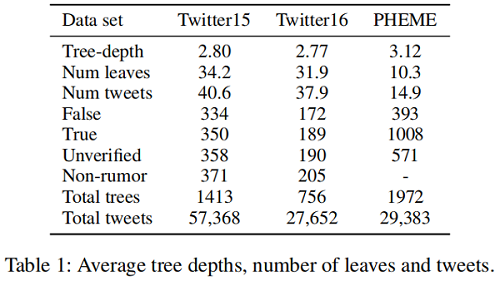

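Observation: rumor propagation trees are usually shallow. A user typically replies to the source post only once and then takes part in the early conversation.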

| Dataset | Twitter15 | Twitter16 | PHEME |
|---|---|---|---|
| Tree-depth | 2.80 | 2.77 | 3.12 |

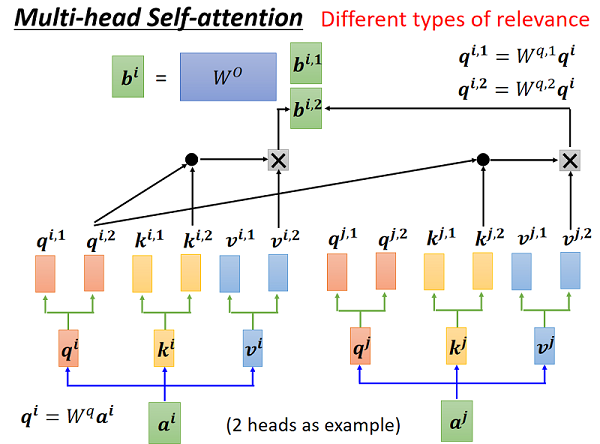

2.2 Transformer Networks

The attention mechanism in the Transformer makes it possible to model long-range dependencies effectively.

The attention mechanism in the Transformer:

$\alpha_{i j}=\operatorname{Compatibility}\left(q_{i}, k_{j}\right)=\operatorname{softmax}\left(\frac{q_{i} k_{j}^{T}}{\sqrt{d_{k}}}\right)\quad\quad\quad(1)$

$z_{i}=\sum_{j=1}^{n} \alpha_{i j} v_{j}\quad\quad\quad(2)$
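As a rough illustration of Eq. (1) and Eq. (2), the sketch below computes single-head scaled dot-product attention in plain Python. The function names (`softmax`, `attention`) and the toy vectors are made up for this example and are not taken from the paper's code; the learned projections that produce $q$, $k$ and $v$ are assumed to have been applied already.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Apply Eq. (1) and Eq. (2) to every query; returns (Z, alpha)."""
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Eq. (1): compatibility of q_i with every key k_j, scaled by sqrt(d_k)
        scores = [sum(qc * kc for qc, kc in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        alpha = softmax(scores)
        # Eq. (2): z_i is the alpha-weighted sum of the value vectors
        z = [sum(a * v[c] for a, v in zip(alpha, values))
             for c in range(len(values[0]))]
        outputs.append(z)
        weights.append(alpha)
    return outputs, weights

# Toy example: three posts with 2-dimensional q/k/v vectors (made-up numbers).
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
Z, A = attention(Q, K, V)
```

Each row of `A` is a probability distribution over all posts, so every post can attend to every other post regardless of where it sits in the reply tree.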

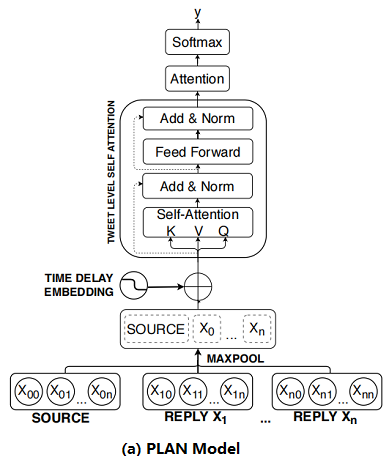

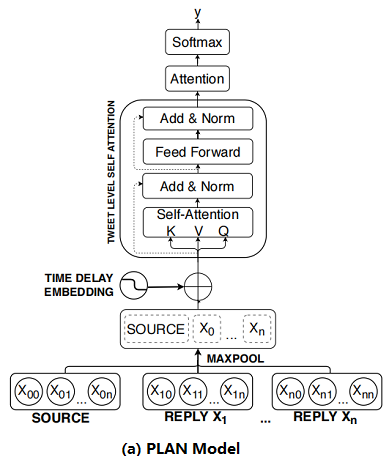

2.3 Post-Level Attention Network (PLAN)

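The framework is as follows:

First: arrange the posts in chronological order;

Next: apply max-pooling to each post to obtain its sentence embedding;

Then: pass the sentence embeddings $X^{\prime}=\left(x_{1}^{\prime}, x_{2}^{\prime}, \ldots, x_{n}^{\prime}\right)$ through $s$ multi-head attention (MHA) layers to obtain $U=\left(u_{1}, u_{2}, \ldots, u_{n}\right)$;

Finally: aggregate these outputs with an attention mechanism and make the prediction with a fully connected layer: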

$\begin{array}{ll}\alpha_{k}=\operatorname{softmax}\left(\gamma^{T} u_{k}\right) &\quad\quad\quad(3)\\v=\sum\limits _{k=0}^{m} \alpha_{k} u_{k} &\quad\quad\quad(4)\\p=\operatorname{softmax}\left(W_{p}^{T} v+b_{p}\right) &\quad\quad\quad(5)\end{array}$

where $\gamma \in \mathbb{R}^{d_{\text{model}}}$, $\alpha_{k} \in \mathbb{R}$, $W_{p} \in \mathbb{R}^{d_{\text{model}} \times K}$, $b_{p} \in \mathbb{R}^{K}$ (with $K$ the number of classes), $u_{k}$ is the output after passing through $s$ MHA layers, and $v$ and $p$ are the representation vector and prediction vector for $X$.
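The pooling and prediction steps can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the helper names (`max_pool`, `attention_pool`, `predict`) and all numbers are hypothetical, the MHA layers are skipped (`U` is simply given), and `b_p` is taken to have one entry per class so that it can be added to $W_{p}^{T} v$.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def max_pool(token_vectors):
    """Element-wise max over a post's token embeddings -> sentence embedding."""
    return [max(column) for column in zip(*token_vectors)]

def attention_pool(U, gamma):
    """Eq. (3)-(4): alpha = softmax(gamma^T u_k), v = sum_k alpha_k u_k."""
    alpha = softmax([sum(g * x for g, x in zip(gamma, u)) for u in U])
    v = [sum(a * u[c] for a, u in zip(alpha, U)) for c in range(len(gamma))]
    return v, alpha

def predict(v, W_p, b_p):
    """Eq. (5): p = softmax(W_p^T v + b_p); W_p has shape (d_model, K)."""
    num_classes = len(b_p)
    logits = [sum(W_p[d][k] * v[d] for d in range(len(v))) + b_p[k]
              for k in range(num_classes)]
    return softmax(logits)

# Made-up example: d_model = 2, K = 2 classes, three posts.
post_tokens = [[0.1, 0.9], [0.7, 0.2]]      # two tokens of one post
x = max_pool(post_tokens)                   # sentence embedding of that post
U = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]    # stand-in for the MHA outputs
gamma = [0.5, -0.5]
v, alpha = attention_pool(U, gamma)
p = predict(v, W_p=[[1.0, 0.0], [0.0, 1.0]], b_p=[0.0, 0.0])
```

The weights `alpha` are the same quantities that are later reused to pick the most important tweet for post-level explanations.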


2.4 Structure Aware Post-Level Attention Network (StA-PLAN)

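Problem with the model above: organizing the tweets as a linear sequence tends to lose structural information.

To combine the advantages of an explicit tree structure with the self-attention mechanism, the paper extends the PLAN model to incorporate structural information.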

$\begin{array}{l}\alpha_{i j}=\operatorname{softmax}\left(\frac{q_{i} k_{j}^{T}+a_{i j}^{K}}{\sqrt{d_{k}}}\right)\\z_{i}=\sum\limits _{j=1}^{n} \alpha_{i j}\left(v_{j}+a_{i j}^{V}\right)\end{array}$

where $a_{i j}^{V}$ and $a_{i j}^{K}$ are vectors representing one of five structural relations between a pair of tweets (i.e. parent, child, before, after and self).
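To make the five relations concrete, the sketch below labels every pair of tweets in a small thread. It is only an illustration: the `parent` list representation, the function names, and the direction convention (the label describes tweet $j$ relative to tweet $i$) are assumptions made here, and tweets are assumed to be indexed in chronological order. In a trained model each label would index learned vectors $a_{i j}^{K}$ and $a_{i j}^{V}$; that lookup is not shown.

```python
def relation(i, j, parent):
    """Structural relation of tweet j with respect to tweet i.

    parent[k] is the index of the tweet that k replies to (None for the
    source tweet). Tweets are assumed to be indexed in chronological order.
    """
    if i == j:
        return "self"
    if parent[i] == j:
        return "parent"   # j is the tweet that i replies to
    if parent[j] == i:
        return "child"    # j is a direct reply to i
    # No direct reply link: fall back to temporal order.
    return "before" if j < i else "after"

def relation_matrix(parent):
    """n x n matrix of relation labels for a whole thread."""
    n = len(parent)
    return [[relation(i, j, parent) for j in range(n)] for i in range(n)]

# Hypothetical thread: 0 is the source; 1 and 2 reply to 0; 3 replies to 1.
parent = [None, 0, 0, 1]
R = relation_matrix(parent)
for row in R:
    print(row)
```

Pairs without a direct reply link still receive a label (before or after), which is how tweets in different branches can interact while the tree structure is kept.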

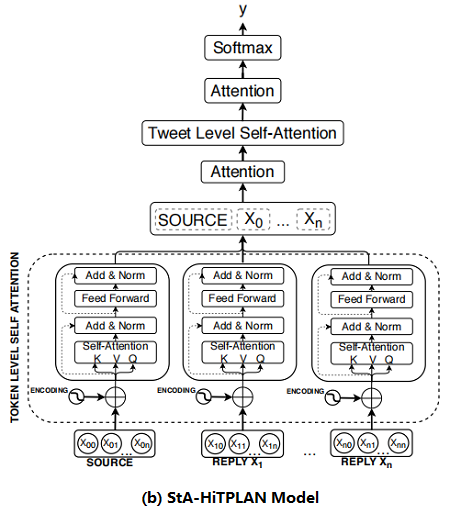

2.5 Structure Aware Hierarchical Token and Post-Level Attention Network (StA-HiTPLAN)

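The PLAN model uses max-pooling to obtain the sentence representation of each tweet, but a better approach is to let the model learn the importance of each word vector. The paper therefore proposes a hierarchical attention model, with attention at a token-level then at a post-level. An overview of the hierarchical model is shown in Figure 2b.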

2.6 Time Delay Embedding

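When a source post has just been created, replies tend to express doubt; once the source post has been up for some time, replies are more likely to indicate that the post is false. The paper therefore studies the effect of time delay information on the three models above.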

To include time delay information for each tweet, we bin the tweets based on their latency from the time the source tweet was created. We set the total number of time bins to be 100 and each bin represents a 10-minute interval. Tweets with a latency of more than 1,000 minutes would fall into the last time bin. We used the positional encoding formula introduced in the transformer network to encode each time bin. The time delay embedding would be added to the sentence embedding of the tweet. The time delay embedding, TDE, for each tweet is:

$\begin{array}{lll}\mathrm{TDE}_{\text{pos}, 2 i} &=&\sin \frac{\text{pos}}{10000^{2 i / d_{\text{model}}}} \\\mathrm{TDE}_{\text{pos}, 2 i+1} &=&\cos \frac{\text{pos}}{10000^{2 i / d_{\text{model}}}}\end{array}$

where $\text{pos}$ represents the time bin each tweet falls into, with $\text{pos} \in[0,100)$, $i$ refers to the dimension and $d_{\text{model}}$ refers to the total number of dimensions of the model.
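The binning and encoding described above can be sketched as follows. The function names and constants are chosen for this example only; the sketch assumes a non-negative latency given in minutes and an even $d_{\text{model}}$.

```python
import math

NUM_BINS = 100      # total number of time bins
BIN_MINUTES = 10    # each bin covers a 10-minute interval

def time_bin(latency_minutes):
    """Map a reply's latency (minutes since the source tweet) to a bin index."""
    # Anything at or beyond the 1,000-minute mark is clamped to the last bin.
    return min(int(latency_minutes // BIN_MINUTES), NUM_BINS - 1)

def time_delay_embedding(pos, d_model):
    """Sinusoidal encoding of time bin `pos`; d_model is assumed to be even."""
    tde = [0.0] * d_model
    for i in range(d_model // 2):
        angle = pos / (10000 ** (2 * i / d_model))
        tde[2 * i] = math.sin(angle)        # even dimensions use sine
        tde[2 * i + 1] = math.cos(angle)    # odd dimensions use cosine
    return tde

def add_time_delay(sentence_embedding, latency_minutes):
    """Add the time delay embedding to a tweet's sentence embedding."""
    d_model = len(sentence_embedding)
    tde = time_delay_embedding(time_bin(latency_minutes), d_model)
    return [x + t for x, t in zip(sentence_embedding, tde)]

# A reply posted 25 minutes after the source tweet falls into bin 2.
print(time_bin(25))
```

Because the encoding depends only on the bin index, two replies posted within the same 10-minute window receive the same time delay embedding.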

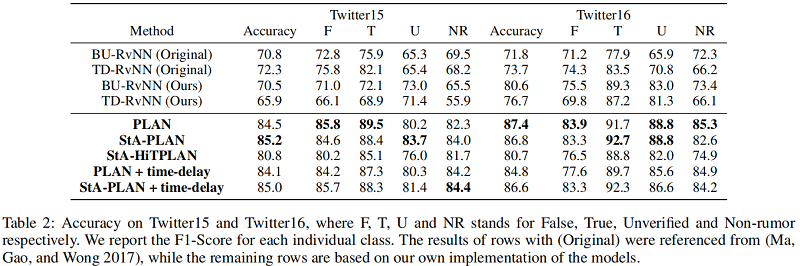

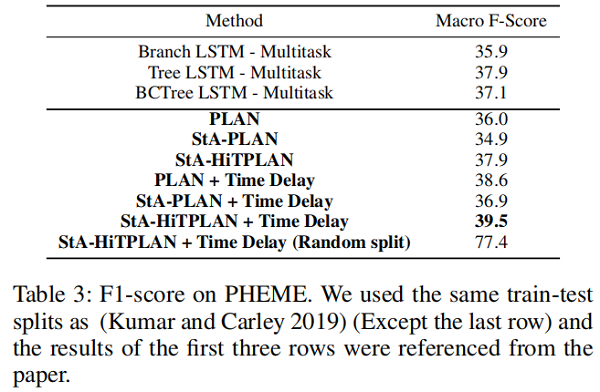

3 Experiments and Results

dataset

Result

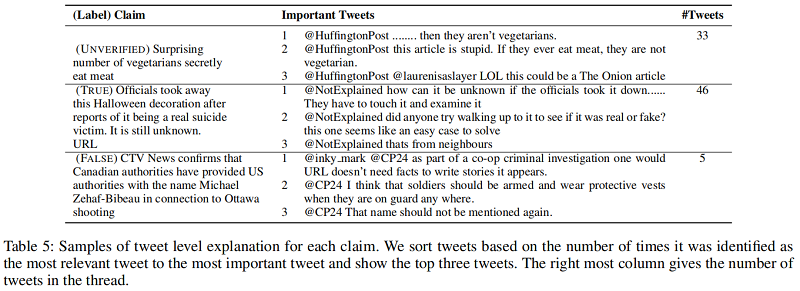

Post-Level Explanations

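First, the most important tweet, $tweet_{impt}$, is obtained from the final attention layer. Then, from the $i$-th MHA layer, the tweet most relevant to $tweet_{impt}$ in that layer, $tweet_{rel,i}$, is obtained. A tweet may be identified as the most relevant tweet more than once. Finally, the tweets are ranked by the number of times they were identified, and the top three are taken as the explanation for the source tweet. An example: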

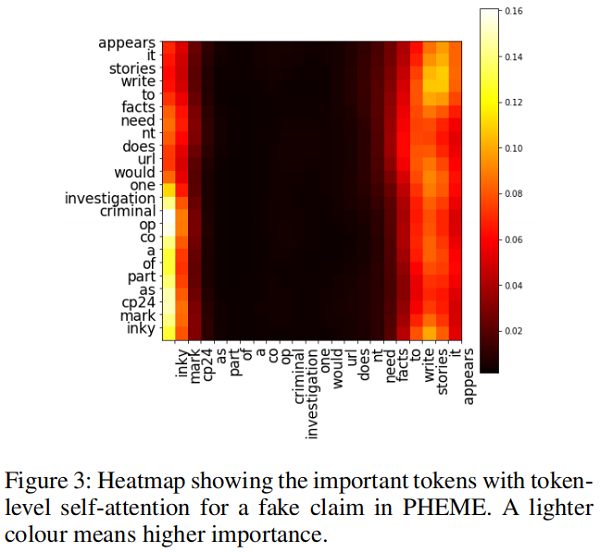
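The ranking procedure for post-level explanations can be sketched roughly as below. The function name and toy weights are hypothetical. Two further assumptions are made: each MHA layer is represented by a single attention matrix (for example, already aggregated over heads), and "most relevant to $tweet_{impt}$" is read as the tweet receiving the largest weight in the row of $tweet_{impt}$.

```python
from collections import Counter

def post_level_explanation(final_weights, layer_attentions, top_k=3):
    """Rank tweets by how often they are the most relevant to tweet_impt.

    final_weights: attention weights alpha_k from the final attention layer.
    layer_attentions: one n x n attention matrix per MHA layer
        (assumed here to be already aggregated over heads).
    """
    # Most important tweet according to the final attention layer.
    impt = max(range(len(final_weights)), key=lambda k: final_weights[k])
    counts = Counter()
    for attn in layer_attentions:
        row = attn[impt]                               # weights from tweet_impt
        rel = max(range(len(row)), key=lambda j: row[j])
        counts[rel] += 1                               # tweet_rel,i of this layer
    ranked = [idx for idx, _ in counts.most_common(top_k)]
    return impt, ranked

# Made-up weights for a thread of three tweets and three MHA layers.
final_weights = [0.1, 0.6, 0.3]
layers = [
    [[0.3, 0.3, 0.4], [0.2, 0.1, 0.7], [0.5, 0.2, 0.3]],
    [[0.6, 0.2, 0.2], [0.5, 0.3, 0.2], [0.1, 0.1, 0.8]],
    [[0.2, 0.5, 0.3], [0.1, 0.2, 0.7], [0.3, 0.3, 0.4]],
]
impt, ranked = post_level_explanation(final_weights, layers)
```

In this toy thread tweet 1 is the most important; tweet 2 is its most relevant tweet in two layers and tweet 0 in one, so the explanation lists tweet 2 first.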

Token-Level Explanation

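The token-level self-attention weights can be used to produce token-level explanations. For example, in the reply “@inky mark @CP24 as part of a co-op criminal investigation one would URL doesn’t need facts to write stories it appears.”, the phrase “facts to write stories it appears” expresses doubt about the source tweet. The self-attention weight map for this reply shows that a large share of the weight is concentrated on this part, which suggests that the phrase can serve as an explanation.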
