An easily overlooked problem when running a script inside a Docker container from Airflow's BashOperator

DAG template

    from airflow import DAG
    from airflow.operators.bash_operator import BashOperator
    from airflow.operators import ExternalTaskSensor
    from airflow.operators import EmailOperator
    from datetime import datetime, timedelta

    default_args = {
        'owner': 'airflow',
        'depends_on_past': False,
        'start_date': datetime(, , ,,,),
        'retries': ,
        # Airflow reads the key retry_delay; retryDelay is silently ignored
        'retry_delay': timedelta(seconds=),
        'end_date': datetime(, , )
    }

    dag = DAG('time_my',
              default_args=default_args,
              schedule_interval='0 0 * * *')

    # Runs the script inside the running container "testsuan"
    time_my_task_1 = BashOperator(
        task_id='time_my_task_1',
        dag=dag,
        bash_command='set -e;docker exec -it testsuan /bin/bash -c "cd /algorithm-platform/algorithm_model_code/ && python time_my_task_1.py " '
    )

    time_my_task_1

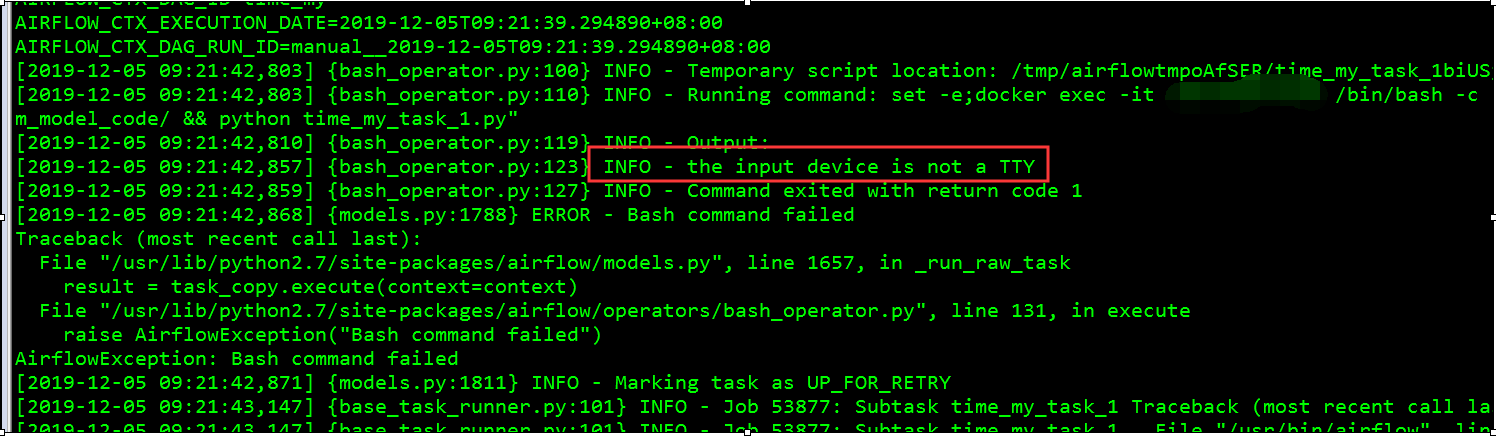

When the DAG is scheduled, the task log reports the following error:

[-- ::,] {models.py:} INFO - Dependencies all met for <TaskInstance: time_my.time_my_task_1 --05T09::39.294890+: [queued]>

[-- ::,] {models.py:} INFO - Dependencies all met for <TaskInstance: time_my.time_my_task_1 --05T09::39.294890+: [queued]>

[-- ::,] {models.py:} INFO -

--------------------------------------------------------------------------------

Starting attempt of

--------------------------------------------------------------------------------

[-- ::,] {models.py:} INFO - Executing <Task(BashOperator): time_my_task_1> on --05T09::39.294890+:

[-- ::,] {base_task_runner.py:} INFO - Running: ['bash', '-c', u'airflow run time_my time_my_task_1 2019-12-05T09:21:39.294890+08:00 --job_id 53877 --raw -sd DAGS_FOLDER/time_my.py --cfg_path /tmp/tmprAeiWr']

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 /usr/lib/python2./site-packages/requests/__init__.py:: RequestsDependencyWarning: urllib3 (1.25.) or chardet (3.0.) doesn't match a supported version!

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 RequestsDependencyWarning)

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 [-- ::,] {settings.py:} INFO - settings.configure_orm(): Using pool settings. pool_size=, pool_recycle=, pid=

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 [-- ::,] {__init__.py:} INFO - Using executor LocalExecutor

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 [-- ::,] {configuration.py:} WARNING - section/key [rest_api_plugin/log_loading] not found in config

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 [-- ::,] {rest_api_plugin.py:} WARNING - Initializing [rest_api_plugin/LOG_LOADING] with default value = False

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 [-- ::,] {configuration.py:} WARNING - section/key [rest_api_plugin/filter_loading_messages_in_cli_response] not found in config

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 [-- ::,] {rest_api_plugin.py:} WARNING - Initializing [rest_api_plugin/FILTER_LOADING_MESSAGES_IN_CLI_RESPONSE] with default value = True

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 [-- ::,] {configuration.py:} WARNING - section/key [rest_api_plugin/rest_api_plugin_http_token_header_name] not found in config

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 [-- ::,] {rest_api_plugin.py:} WARNING - Initializing [rest_api_plugin/REST_API_PLUGIN_HTTP_TOKEN_HEADER_NAME] with default value = rest_api_plugin_http_token

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 [-- ::,] {configuration.py:} WARNING - section/key [rest_api_plugin/rest_api_plugin_expected_http_token] not found in config

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 [-- ::,] {rest_api_plugin.py:} WARNING - Initializing [rest_api_plugin/REST_API_PLUGIN_EXPECTED_HTTP_TOKEN] with default value = None

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 [-- ::,] {models.py:} INFO - Filling up the DagBag from /usr/local/airflow/dags/time_my.py

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 /usr/lib/python2./site-packages/airflow/utils/helpers.py:: DeprecationWarning: Importing 'ExternalTaskSensor' directly from 'airflow.operators' has been deprecated. Please import from 'airflow.operators.[operator_module]' instead. Support for direct imports will be dropped entirely in Airflow 2.0.

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 DeprecationWarning)

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 /usr/lib/python2./site-packages/airflow/utils/helpers.py:: DeprecationWarning: Importing 'EmailOperator' directly from 'airflow.operators' has been deprecated. Please import from 'airflow.operators.[operator_module]' instead. Support for direct imports will be dropped entirely in Airflow 2.0.

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 DeprecationWarning)

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 [-- ::,] {cli.py:} INFO - Running <TaskInstance: time_my.time_my_task_1 --05T09::39.294890+: [running]> on host node5

[-- ::,] {bash_operator.py:} INFO - Tmp dir root location:

/tmp

[-- ::,] {bash_operator.py:} INFO - Exporting the following env vars:

AIRFLOW_CTX_TASK_ID=time_my_task_1

AIRFLOW_CTX_DAG_ID=time_my

AIRFLOW_CTX_EXECUTION_DATE=--05T09::39.294890+:

AIRFLOW_CTX_DAG_RUN_ID=manual__2019--05T09::39.294890+:

[-- ::,] {bash_operator.py:} INFO - Temporary script location: /tmp/airflowtmpoAfSER/time_my_task_1biUSjF

[-- ::,] {bash_operator.py:} INFO - Running command: set -e;docker exec -it testsuan /bin/bash -c "cd /algorithm-platform/algorithm_model_code/ && python time_my_task_1.py"

[-- ::,] {bash_operator.py:} INFO - Output:

[-- ::,] {bash_operator.py:} INFO - the input device is not a TTY

[-- ::,] {bash_operator.py:} INFO - Command exited with return code

[-- ::,] {models.py:} ERROR - Bash command failed

Traceback (most recent call last):

File "/usr/lib/python2.7/site-packages/airflow/models.py", line , in _run_raw_task

result = task_copy.execute(context=context)

File "/usr/lib/python2.7/site-packages/airflow/operators/bash_operator.py", line , in execute

raise AirflowException("Bash command failed")

AirflowException: Bash command failed

[-- ::,] {models.py:} INFO - Marking task as UP_FOR_RETRY

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 Traceback (most recent call last):

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 File "/usr/bin/airflow", line , in <module>

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 args.func(args)

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 File "/usr/lib/python2.7/site-packages/airflow/utils/cli.py", line , in wrapper

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 return f(*args, **kwargs)

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 File "/usr/lib/python2.7/site-packages/airflow/bin/cli.py", line , in run

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 _run(args, dag, ti)

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 File "/usr/lib/python2.7/site-packages/airflow/bin/cli.py", line , in _run

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 pool=args.pool,

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 File "/usr/lib/python2.7/site-packages/airflow/utils/db.py", line , in wrapper

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 return func(*args, **kwargs)

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 File "/usr/lib/python2.7/site-packages/airflow/models.py", line , in _run_raw_task

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 result = task_copy.execute(context=context)

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 File "/usr/lib/python2.7/site-packages/airflow/operators/bash_operator.py", line , in execute

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 raise AirflowException("Bash command failed")

[-- ::,] {base_task_runner.py:} INFO - Job : Subtask time_my_task_1 airflow.exceptions.AirflowException: Bash command failed

[-- ::,] {logging_mixin.py:} INFO - [-- ::,] {jobs.py:} INFO - Task exited with return code

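The real problem is a single line in the output above: the input device is not a TTY.

This error appears when a scheduled job runs a script that calls docker commands. TTY is the general term for terminal devices; the word comes from Teletypes, or teletypewriters.

The message means that when Linux runs the command in the background, no terminal device is attached. When we run commands inside a Docker container by hand, we tend to add the -it flags out of habit. Here -i keeps stdin open and -t asks docker to allocate a pseudo-terminal, and it is -t that needs a real terminal on the calling side.

So for background tasks or programs that docker runs without a terminal, drop the -t flag (keeping -i is harmless, and it can go too if the script never reads stdin) and the error no longer appears.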
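The missing terminal can be reproduced without Docker or Airflow. The following is only a minimal sketch, assuming Python 3 and the standard library; the function name `child_stdin_is_tty` is made up for illustration. It starts a child process with stdin detached, roughly the way a scheduler starts a task, and asks the child whether its stdin is a TTY.

```python
import subprocess
import sys


def child_stdin_is_tty():
    """Run a child Python process with stdin detached and report what it sees."""
    result = subprocess.run(
        [sys.executable, "-c", "import sys; print(sys.stdin.isatty())"],
        stdin=subprocess.DEVNULL,   # no terminal attached, as under a scheduler
        stdout=subprocess.PIPE,
        universal_newlines=True,
        check=True,
    )
    # The child prints "True" or "False"; convert that back to a bool.
    return result.stdout.strip() == "True"


if __name__ == "__main__":
    print(child_stdin_is_tty())
```

The child reports that its stdin is not a TTY, which is the same condition docker complains about when -t is requested from a BashOperator task.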

Modified DAG template

    from airflow import DAG
    from airflow.operators.bash_operator import BashOperator
    from airflow.operators import ExternalTaskSensor
    from airflow.operators import EmailOperator
    from datetime import datetime, timedelta

    default_args = {
        'owner': 'airflow',
        'depends_on_past': False,
        'start_date': datetime(, , ,,,),
        'retries': ,
        # Airflow reads the key retry_delay; retryDelay is silently ignored
        'retry_delay': timedelta(seconds=),
        'end_date': datetime(, , )
    }

    dag = DAG('time_my',
              default_args=default_args,
              schedule_interval='0 0 * * *')

    # Same task as before, but with -i only: no pseudo-terminal is requested
    time_my_task_1 = BashOperator(
        task_id='time_my_task_1',
        dag=dag,
        bash_command='set -e;docker exec -i testsuan /bin/bash -c "cd /algorithm-platform/algorithm_model_code/ && python time_my_task_1.py " '
    )

    time_my_task_1

With this change, the task runs without the error the next time it is scheduled.
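One way to keep -t from creeping back into scheduled tasks is to build the command string in one place. The helper below is a hypothetical sketch, not part of Airflow or Docker: the name `docker_exec_command` and its parameters are assumptions made for illustration. It returns a string suitable for a BashOperator's bash_command and only adds -t when explicitly asked.

```python
import shlex


def docker_exec_command(container, workdir, script, allocate_tty=False):
    """Build a `docker exec` command line (hypothetical helper).

    Leave allocate_tty False for scheduled runs: there is no terminal
    for docker to attach to, so -t would fail.
    """
    flags = "-it" if allocate_tty else "-i"
    # Command executed by bash inside the container.
    inner = "cd {} && python {}".format(shlex.quote(workdir), shlex.quote(script))
    return "set -e; docker exec {} {} /bin/bash -c {}".format(
        flags, shlex.quote(container), shlex.quote(inner)
    )


if __name__ == "__main__":
    print(docker_exec_command(
        "testsuan",
        "/algorithm-platform/algorithm_model_code/",
        "time_my_task_1.py",
    ))
```

The returned string can be passed as bash_command in the DAG above. Quoting with shlex.quote is a design choice of this sketch so that container names or paths with spaces do not break the command.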
