Model Representation and Cost Function

Model Representation

To establish notation for future use, we’ll use x(i) to denote the “input” variables (living area in this example), also called input features, and y(i) to denote the “output” or target variable that we are trying to predict (price). A pair (x(i),y(i)) is called a training example, and the dataset that we’ll be using to learn—a list of m training examples (x(i),y(i));i=1,...,m—is called a training set. Note that the superscript “(i)” in the notation is simply an index into the training set, and has nothing to do with exponentiation. We will also use X to denote the space of input values, and Y to denote the space of output values. In this example, X = Y = ℝ.

To describe the supervised learning problem slightly more formally, our goal is, given a training set, to learn a function h : X → Y so that h(x) is a “good” predictor for the corresponding value of y. For historical reasons, this function h is called a hypothesis. Seen pictorially, the process is therefore like this:

When the target variable that we’re trying to predict is continuous, such as in our housing example, we call the learning problem a regression problem. When y can take on only a small number of discrete values (such as if, given the living area, we wanted to predict if a dwelling is a house or an apartment, say), we call it a classification problem.

Cost Function

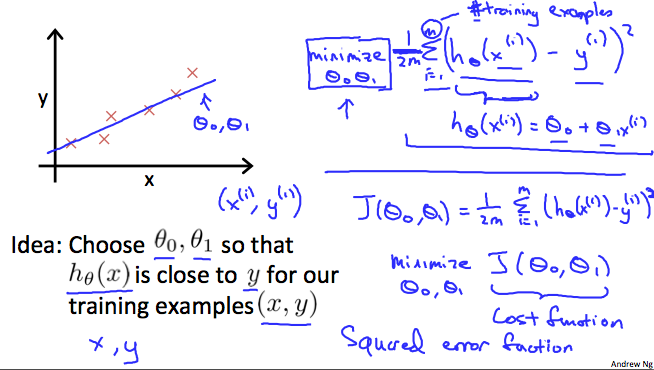

We can measure the accuracy of our hypothesis function by using a cost function. This takes an average difference (actually a fancier version of an average) of all the results of the hypothesis with inputs from x's and the actual output y's.

J(θ0,θ1)=12m∑i=1m(y^i−yi)2=12m∑i=1m(hθ(xi)−yi)2

To break it apart, it is 12 x¯ where x¯ is the mean of the squares of hθ(xi)−yi , or the difference between the predicted value and the actual value.

This function is otherwise called the "Squared error function", or "Mean squared error". The mean is halved (12)as a convenience for the computation of the gradient descent, as the derivative term of the square function will cancel out the 12 term. The following image summarizes what the cost function does:

Cost Function - Intuition I

If we try to think of it in visual terms, our training data set is scattered on the x-y plane. We are trying to make a straight line (defined by hθ(x)) which passes through these scattered data points.

Our objective is to get the best possible line. The best possible line will be such so that the average squared vertical distances of the scattered points from the line will be the least. Ideally, the line should pass through all the points of our training data set. In such a case, the value of J(θ0,θ1) will be 0. The following example shows the ideal situation where we have a cost function of 0.

When θ1=1, we get a slope of 1 which goes through every single data point in our model. Conversely, when θ1=0.5, we see the vertical distance from our fit to the data points increase.

This increases our cost function to 0.58. Plotting several other points yields to the following graph:

Thus as a goal, we should try to minimize the cost function. In this case, θ1=1 is our global minimum.

Cost Function - Intuition II

A contour plot is a graph that contains many contour lines. A contour line of a two variable function has a constant value at all points of the same line. An example of such a graph is the one to the right below.

Taking any color and going along the 'circle', one would expect to get the same value of the cost function. For example, the three green points found on the green line above have the same value for J(θ0,θ1) and as a result, they are found along the same line. The circled x displays the value of the cost function for the graph on the left when θ0 = 800 and θ1= -0.15. Taking another h(x) and plotting its contour plot, one gets the following graphs:

When θ0 = 360 and θ1 = 0, the value of J(θ0,θ1) in the contour plot gets closer to the center thus reducing the cost function error. Now giving our hypothesis function a slightly positive slope results in a better fit of the data.

The graph above minimizes the cost function as much as possible and consequently, the result of θ1 and θ0 tend to be around 0.12 and 250 respectively. Plotting those values on our graph to the right seems to put our point in the center of the inner most 'circle'.

Model Representation and Cost Function的更多相关文章

- Linear regression with one variable - Cost function

摘要: 本文是吴恩达 (Andrew Ng)老师<机器学习>课程,第二章<单变量线性回归>中第7课时<代价函数>的视频原文字幕.为本人在视频学习过程中逐字逐句记录下 ...

- loss function与cost function

实际上,代价函数(cost function)和损失函数(loss function 亦称为 error function)是同义的.它们都是事先定义一个假设函数(hypothesis),通过训练集由 ...

- 【caffe】loss function、cost function和error

@tags: caffe 机器学习 在机器学习(暂时限定有监督学习)中,常见的算法大都可以划分为两个部分来理解它 一个是它的Hypothesis function,也就是你用一个函数f,来拟合任意一个 ...

- 逻辑回归损失函数(cost function)

逻辑回归模型预估的是样本属于某个分类的概率,其损失函数(Cost Function)可以像线型回归那样,以均方差来表示:也可以用对数.概率等方法.损失函数本质上是衡量”模型预估值“到“实际值”的距离, ...

- [Machine Learning] 浅谈LR算法的Cost Function

了解LR的同学们都知道,LR采用了最小化交叉熵或者最大化似然估计函数来作为Cost Function,那有个很有意思的问题来了,为什么我们不用更加简单熟悉的最小化平方误差函数(MSE)呢? 我个人理解 ...

- logistic回归具体解释(二):损失函数(cost function)具体解释

有监督学习 机器学习分为有监督学习,无监督学习,半监督学习.强化学习.对于逻辑回归来说,就是一种典型的有监督学习. 既然是有监督学习,训练集自然能够用例如以下方式表述: {(x1,y1),(x2,y2 ...

- 机器学习 损失函数(Loss/Error Function)、代价函数(Cost Function)和目标函数(Objective function)

损失函数(Loss/Error Function): 计算单个训练集的误差,例如:欧氏距离,交叉熵,对比损失,合页损失 代价函数(Cost Function): 计算整个训练集所有损失之和的平均值 至 ...

- 损失函数(Loss function) 和 代价函数(Cost function)

1损失函数和代价函数的区别: 损失函数(Loss function):指单个训练样本进行预测的结果与实际结果的误差. 代价函数(Cost function):整个训练集,所有样本误差总和(所有损失函数 ...

- 手势跟踪论文学习:Realtime and Robust Hand Tracking from Depth(三)Cost Function

iker原创.转载请标明出处:http://blog.csdn.net/ikerpeng/article/details/39050619 Realtime and Robust Hand Track ...

随机推荐

- 转 Java输入输出流详解(非常详尽)

转 http://blog.csdn.net/zsw12013/article/details/6534619 通过数据流.序列化和文件系统提供系统输入和输出. Java把这些不同来源和目标的数据都 ...

- Dstl Satellite Imagery Feature Detection-Data Processing Tutorial

如何读取WKT格式文件 我们找到了这些有用的包: Python - shapely.loads() R - rgeos 如何读取geojson格式文件 我们找到了这些有用的包: Python - j ...

- Android性能优化xml之<include>、<merge>、<ViewStub>标签的使用

一.使用<include>标签对"重复代码"进行复用 <include>标签是我们进行Android开发中经常用到的标签,比如多个界面都同样用到了一个左侧筛 ...

- HCatalog

HCatalog HCatalog是Hadoop中的表和存储管理层,能够支持用户用不同的工具(Pig.MapReduce)更容易地表格化读写数据. HCatalog从Apache孵化器毕业,并于201 ...

- JavaWeb(二)会话管理之细说cookie与session

前言 前面花了几篇博客介绍了Servlet,讲的非常的详细.这一篇给大家介绍一下cookie和session. 一.会话概述 1.1.什么是会话? 会话可简单理解为:用户开一个浏览器,点击多个超链接, ...

- Python s12 Day2 笔记及作业

1. 元组的元素不可修改,但元组的元素的元素可以被修改. 2. name="eric" print(name.center(20, "*") 3. list=[ ...

- Java历程-初学篇 Day06 循环结构

前记:永远不要写死循环 一,while循环 先判断,再执行 while(条件){ //代码块; 迭代; } 示例: 二,do while语句 先执行一次,再判断 do{ //代码块; 迭代; }whi ...

- C-一行或多行文章垂直居中

1 样式效果 2 table布局 li span

- Oracle Database 10g Express Edition系统文件损坏的解决办法

1.检查错误代码:ora-10010 亦或是ora-10003,上网找响应的解决办法: 异常状态:登陆不上 常用的方法解决 (1)进入Oracle命令行模式 (2)Shutdown immedaite ...

- struts2-学习笔记(一)

Struts2学习笔记(一) 一.Struts2概述 1. 是什么? Struts2 是一个非常优秀的MVC框架,基于Model2 设计模型 Struts2是一个M(模型---域--范围模型)V ...