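Container Orchestration: Kubernetes Basics

I. Introduction to Kubernetes

1. What is Kubernetes and what is it used for?

Kubernetes, abbreviated k8s, is a container orchestration system written in Go and originally developed at Google. Container orchestration can be understood simply as managing containers. It is somewhat similar to OpenStack, except that OpenStack manages virtual machines while k8s manages Pods. A Pod is a wrapper around containers: it can run one or more containers, so you can think of it as a logical grouping of one or more containers. Besides managing the full lifecycle of Pods, k8s also provides automated deployment, self-healing, and dynamic scaling (out and in) of Pods.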
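To make the idea of a Pod as a wrapper around containers more concrete, here is a minimal sketch of a Pod that groups two containers. The names `demo-pod`, `web`, and `sidecar` and the `busybox:1.32` image are illustrative assumptions, not part of the setup in this post.

```shell
# Print a minimal two-container Pod manifest (all names are illustrative).
pod_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: demo-pod            # hypothetical name
spec:
  containers:
  - name: web               # main container
    image: nginx:1.14-alpine
  - name: sidecar           # helper container in the same Pod
    image: busybox:1.32
    command: ["sh", "-c", "sleep 3600"]
EOF
}
```

Once a cluster is available, the output could be piped into `kubectl apply -f -`. Both containers share the Pod's network namespace and are scheduled onto the same node.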

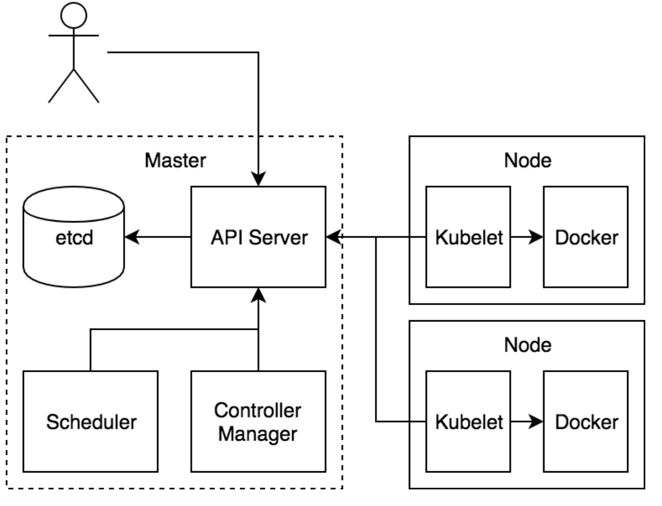

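2. k8s architecture

Note: k8s follows a master/node model. The master is the management side of the whole cluster and mainly runs etcd, the API server, the scheduler, the controller manager, and network-related add-ons. etcd is a key-value store that holds all k8s configuration and Pod state information; once etcd is down, cluster state can no longer be read or written and the cluster can no longer be managed. The API server receives client requests and is the only entry point to k8s: every management operation is a request sent to the API server. The scheduler decides placement: when a user wants to run a Pod on k8s, the scheduler chooses which node that Pod should run on. The controller manager manages and monitors Pod state: for whatever has been scheduled, it is responsible for driving the Pods on the nodes toward the desired state. Nodes are the worker machines of k8s and mainly run Pods. The main applications on a node are docker, kubelet, and kube-proxy. docker runs the containers; kubelet watches the API server for Pods assigned to its node and runs them locally; kube-proxy generates the iptables or ipvs rules that carry Service traffic to Pods.

3. How k8s works

Note: the workflow is shown in the figure above. The user first sends a request to the API server over HTTPS. The API server authenticates the client (for example by its client certificate). If authentication succeeds, it checks whether the requested resource is valid for the corresponding API, and if so, it stores the resource and its state in etcd. The scheduler, the controller manager, and the kubelets all watch the API server for resource changes. When a valid request comes in, the controller manager creates the objects the resource needs (for example the Pods behind a Deployment) through the API server; the scheduler evaluates which node each new Pod should be created on and writes that scheduling decision back to the API server; finally, the kubelet on each node uses the scheduling information to decide whether the Pod belongs to it, and if so, it starts the Pod's containers locally. From then on the resource is monitored continuously: if it becomes unhealthy, the kubelet tries to restart its containers and the controller manager recreates missing Pods, so that the resource stays in the state we defined.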
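The idea of keeping a resource in the state we defined can be illustrated with a toy reconciliation step. This is only a sketch of the concept: the function name `reconcile` is hypothetical, and real controllers work on API objects rather than on two integers.

```shell
# Toy reconciliation step: compare desired and actual replica counts
# and print the action a controller would take. Purely illustrative.
reconcile() {
  desired=$1
  actual=$2
  if [ "$actual" -lt "$desired" ]; then
    echo "create $((desired - actual))"
  elif [ "$actual" -gt "$desired" ]; then
    echo "delete $((actual - desired))"
  else
    echo "in-sync"
  fi
}
```

For example, `reconcile 3 1` reports that two more replicas are needed. A real controller repeats this comparison every time it observes a change.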

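II. Building a k8s cluster

1. Deployment overview

There are two ways to deploy a k8s cluster: run the components as containers on each node, or run the components as daemons. The layout also differs by environment. For a test environment, a single master node and a single etcd instance are enough, with as many nodes as needed. In production, etcd must be highly available, so we build an etcd cluster, typically with 3, 5, or 7 members. The master must be highly available as well. Note that the API server is stateless and can run as multiple instances, with nginx or haproxy in front as a load balancer. The scheduler and the controller manager can each have only one active instance; if several instances run, the others act as standbys.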
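The preference for 3, 5, or 7 etcd members follows from majority quorum arithmetic. The sketch below computes the quorum size and the number of member failures a cluster of a given size can tolerate. The function names are illustrative.

```shell
# Quorum: floor(n/2) + 1 members must agree on every write.
etcd_quorum() { echo $(( $1 / 2 + 1 )); }

# Number of members that can fail while the cluster still has quorum.
etcd_fault_tolerance() { echo $(( ($1 - 1) / 2 )); }

for n in 3 4 5 7; do
  echo "members=$n quorum=$(etcd_quorum "$n") tolerated_failures=$(etcd_fault_tolerance "$n")"
done
```

A 4-member cluster tolerates only one failure, the same as a 3-member cluster, which is why even sizes add cost without adding resilience.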

Deploying k8s in a test environment, running the components as containers

Environment

| Hostname | IP address | Role |
| --- | --- | --- |
| master01.k8s.org | 192.168.0.41 | master |
| node01.k8s.org | 192.168.0.44 | node01 |
| node02.k8s.org | 192.168.0.45 | node02 |
| node03.k8s.org | 192.168.0.46 | node03 |

Hostname resolution on each node

[root@master01 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.0.99 time.test.org time-node

192.168.0.41 master01 master01.k8s.org

192.168.0.42 master02 master02.k8s.org

192.168.0.43 master03 master03.k8s.org

192.168.0.44 node01 node01.k8s.org

192.168.0.45 node02 node02.k8s.org

192.168.0.46 node03 node03.k8s.org

[root@master01 ~]#

Time synchronization on each node

[root@master01 ~]# grep server /etc/chrony.conf

# Use public servers from the pool.ntp.org project.

server time.test.org iburst

# Serve time even if not synchronized to any NTP server.

[root@master01 ~]# chronyc sources

210 Number of sources = 1

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^~ time.test.org 3 6 377 56 -6275m[ -6275m] +/- 20ms

[root@master01 ~]# ssh node01 'chronyc sources'

210 Number of sources = 1

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^~ time.test.org 3 6 377 6 -6275m[ -6275m] +/- 20ms

[root@master01 ~]# ssh node02 'chronyc sources'

210 Number of sources = 1

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^~ time.test.org 3 6 377 41 -6275m[ -6275m] +/- 20ms

[root@master01 ~]# ssh node03 'chronyc sources'

210 Number of sources = 1

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^~ time.test.org 3 6 377 35 -6275m[ -6275m] +/- 20ms

[root@master01 ~]#

Note: for setting up the time synchronization server, see https://www.cnblogs.com/qiuhom-1874/p/12079927.html; for passwordless ssh trust between hosts, see https://www.cnblogs.com/qiuhom-1874/p/11783371.html.
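Many steps in this walkthrough repeat the same command on node01, node02, and node03 over ssh. A small helper can express that loop once. This is a sketch that assumes the ssh trust mentioned above is already in place; `SSH_CMD` is a hypothetical override that allows a dry run with `echo`.

```shell
# Hosts from the environment table; adjust for your own cluster.
NODES="node01 node02 node03"
# SSH_CMD is a hypothetical override so the loop can be dry-run with "echo".
SSH_CMD=${SSH_CMD:-ssh}

# Run one command on every node and print a header with the node name first.
run_on_nodes() {
  for node in $NODES; do
    echo "== $node =="
    $SSH_CMD "$node" "$1"
  done
}
```

For example, `run_on_nodes 'chronyc sources'` would check time synchronization on all three nodes in one go.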

Disable SELinux on each node

[root@master01 ~]# cat /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled

# SELINUXTYPE= can take one of three two values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

SELINUXTYPE=targeted

[root@master01 ~]# getenforce

Disabled

[root@master01 ~]#

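Note: change SELINUX=enforcing to SELINUX=disabled in /etc/selinux/config and reboot the host. Running setenforce 0 switches SELinux to permissive mode immediately without a reboot; the Disabled state reported by getenforce above only appears after a reboot.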
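The edit to /etc/selinux/config can be scripted. The following is a minimal sketch; the function name is hypothetical, and it writes through a temporary file rather than using `sed -i` so that it behaves the same with GNU and BSD sed.

```shell
# Rewrite the SELINUX= line in a config file (default: /etc/selinux/config).
# SELINUXTYPE= and comment lines are left untouched because the pattern
# only matches lines that start with exactly "SELINUX=".
set_selinux_disabled() {
  cfg=${1:-/etc/selinux/config}
  tmp=$(mktemp) || return 1
  if sed 's/^SELINUX=.*/SELINUX=disabled/' "$cfg" > "$tmp"; then
    cat "$tmp" > "$cfg"   # overwrite in place to keep owner and mode
    rc=$?
  else
    rc=1
  fi
  rm -f "$tmp"
  return $rc
}
```

Run as root, `set_selinux_disabled` with no argument edits the real file; passing a path lets you try it on a copy first.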

Disable the iptables service or the firewalld service

[root@master01 ~]# systemctl status firewalld

● firewalld.service - firewalld - dynamic firewall daemon

Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; vendor preset: enabled)

Active: inactive (dead)

Docs: man:firewalld(1)

[root@master01 ~]# iptables -nvL

Chain INPUT (policy ACCEPT 650 packets, 59783 bytes)

pkts bytes target prot opt in out source destination

Chain FORWARD (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

Chain OUTPUT (policy ACCEPT 503 packets, 65293 bytes)

pkts bytes target prot opt in out source destination

[root@master01 ~]# ssh node01 'systemctl status firewalld'

● firewalld.service - firewalld - dynamic firewall daemon

Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; vendor preset: enabled)

Active: inactive (dead)

Docs: man:firewalld(1)

[root@master01 ~]# ssh node02 'systemctl status firewalld'

● firewalld.service - firewalld - dynamic firewall daemon

Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; vendor preset: enabled)

Active: inactive (dead)

Docs: man:firewalld(1)

[root@master01 ~]# ssh node03 'systemctl status firewalld'

● firewalld.service - firewalld - dynamic firewall daemon

Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; vendor preset: enabled)

Active: inactive (dead)

Docs: man:firewalld(1)

[root@master01 ~]#

Note: stop the firewalld service and disable it at boot, and make sure the iptables rule tables contain no rules.

Download the docker repository configuration file on each node

[root@master01 ~]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

--2020-12-08 14:04:29-- https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Resolving mirrors.aliyun.com (mirrors.aliyun.com)... 182.140.140.242, 110.188.26.241, 125.64.1.228, ...

Connecting to mirrors.aliyun.com (mirrors.aliyun.com)|182.140.140.242|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 2640 (2.6K) [application/octet-stream]

Saving to: ‘/etc/yum.repos.d/docker-ce.repo’

100%[======================================================================>] 2,640 --.-K/s in 0s

2020-12-08 14:04:30 (265 MB/s) - ‘/etc/yum.repos.d/docker-ce.repo’ saved [2640/2640]

[root@master01 ~]# ssh node01 'wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo'

--2020-12-08 14:04:42-- https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Resolving mirrors.aliyun.com (mirrors.aliyun.com)... 182.140.139.60, 125.64.1.228, 118.123.2.185, ...

Connecting to mirrors.aliyun.com (mirrors.aliyun.com)|182.140.139.60|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 2640 (2.6K) [application/octet-stream]

Saving to: ‘/etc/yum.repos.d/docker-ce.repo’

0K .. 100% 297M=0s

2020-12-08 14:04:42 (297 MB/s) - ‘/etc/yum.repos.d/docker-ce.repo’ saved [2640/2640]

[root@master01 ~]# ssh node02 'wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo'

--2020-12-08 14:04:38-- https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Resolving mirrors.aliyun.com (mirrors.aliyun.com)... 182.140.139.59, 118.123.2.183, 182.140.140.238, ...

Connecting to mirrors.aliyun.com (mirrors.aliyun.com)|182.140.139.59|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 2640 (2.6K) [application/octet-stream]

Saving to: ‘/etc/yum.repos.d/docker-ce.repo’

0K .. 100% 363M=0s

2020-12-08 14:04:38 (363 MB/s) - ‘/etc/yum.repos.d/docker-ce.repo’ saved [2640/2640]

[root@master01 ~]# ssh node03 'wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo'

--2020-12-08 14:04:43-- https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Resolving mirrors.aliyun.com (mirrors.aliyun.com)... 118.123.2.184, 182.140.140.240, 182.140.139.63, ...

Connecting to mirrors.aliyun.com (mirrors.aliyun.com)|118.123.2.184|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 2640 (2.6K) [application/octet-stream]

Saving to: ‘/etc/yum.repos.d/docker-ce.repo’

0K .. 100% 218M=0s

2020-12-08 14:04:43 (218 MB/s) - ‘/etc/yum.repos.d/docker-ce.repo’ saved [2640/2640]

[root@master01 ~]#

Create the kubernetes repository configuration file

[root@master01 yum.repos.d]# cat kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

[root@master01 yum.repos.d]#
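The transcript above only displays the finished file. One way to create it non-interactively is a here-document, sketched below. The function name `write_k8s_repo` and the optional destination argument are assumptions added for illustration; the file content is the one shown above.

```shell
# Write the kubernetes yum repository file shown above.
# dest defaults to the real location; pass another path to try it out.
write_k8s_repo() {
  dest=${1:-/etc/yum.repos.d/kubernetes.repo}
  cat > "$dest" <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
}
```

The quoted `'EOF'` delimiter keeps the shell from expanding anything inside the file body.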

Copy the kubernetes repository configuration file to each node

[root@master01 yum.repos.d]# scp kubernetes.repo node01:/etc/yum.repos.d/

kubernetes.repo 100% 276 87.7KB/s 00:00

[root@master01 yum.repos.d]# scp kubernetes.repo node02:/etc/yum.repos.d/

kubernetes.repo 100% 276 13.6KB/s 00:00

[root@master01 yum.repos.d]# scp kubernetes.repo node03:/etc/yum.repos.d/

kubernetes.repo 100% 276 104.6KB/s 00:00

[root@master01 yum.repos.d]#

Install docker-ce, kubectl, kubelet, and kubeadm on each node

yum install -y docker-ce kubectl kubeadm kubelet

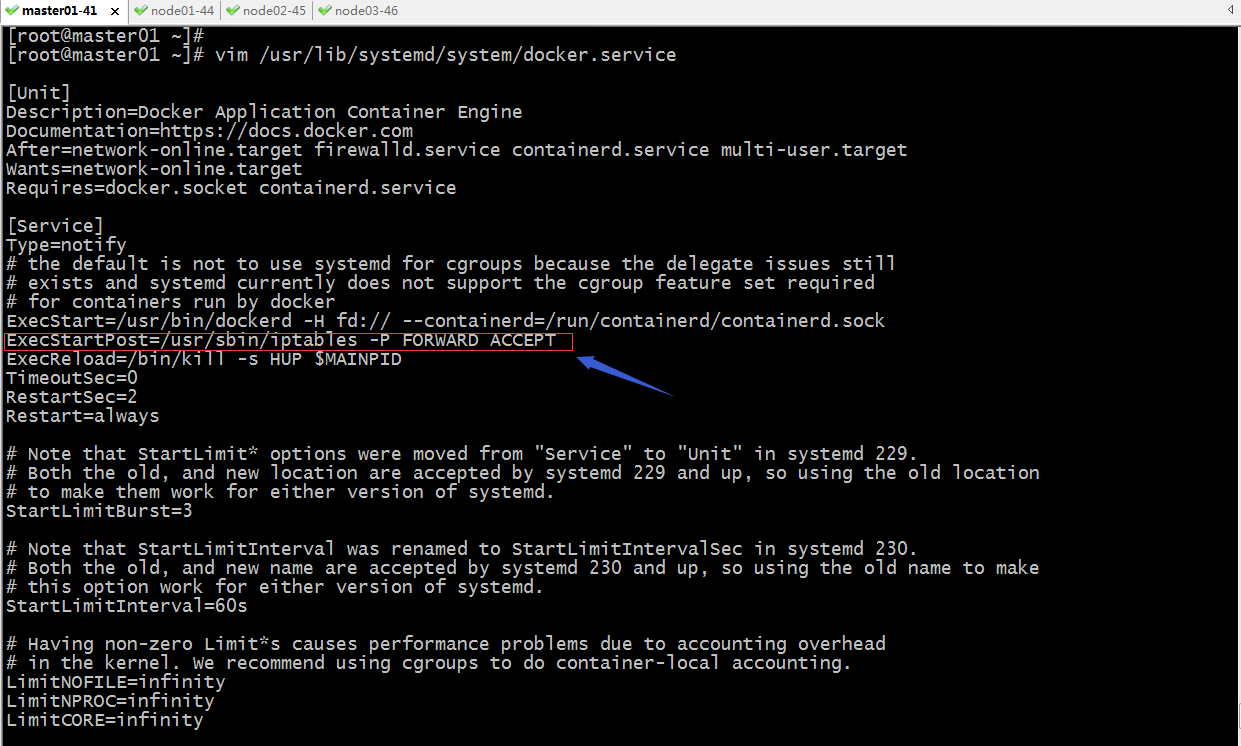

Edit the docker unit file so that iptables -P FORWARD ACCEPT is executed after docker starts
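The unit file change itself is not shown in the text. As one possible way to get the same effect, the sketch below writes a systemd drop-in instead of editing docker.service directly. This is an alternative technique, not necessarily what the author did; the file name `10-forward-accept.conf` and the iptables path are assumptions.

```shell
# Write a systemd drop-in that sets the FORWARD policy after docker starts.
# dropin_dir defaults to the standard drop-in directory for docker.service;
# the file name 10-forward-accept.conf is a hypothetical choice.
write_docker_forward_dropin() {
  dropin_dir=${1:-/etc/systemd/system/docker.service.d}
  mkdir -p "$dropin_dir" || return 1
  cat > "$dropin_dir/10-forward-accept.conf" <<'EOF'
[Service]
# Assumes iptables is installed at /usr/sbin/iptables, as on CentOS 7.
ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT
EOF
}
```

After writing the drop-in, `systemctl daemon-reload` followed by a restart of docker is needed for it to take effect. A drop-in also survives package upgrades that replace /usr/lib/systemd/system/docker.service.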

Copy docker.service to each node

[root@master01 ~]# scp /usr/lib/systemd/system/docker.service node01:/usr/lib/systemd/system/docker.service

docker.service 100% 1764 220.0KB/s 00:00

[root@master01 ~]# scp /usr/lib/systemd/system/docker.service node02:/usr/lib/systemd/system/docker.service

docker.service 100% 1764 359.1KB/s 00:00

[root@master01 ~]# scp /usr/lib/systemd/system/docker.service node03:/usr/lib/systemd/system/docker.service

docker.service 100% 1764 792.3KB/s 00:00

[root@master01 ~]#

Configure a docker registry mirror (accelerator)

[root@master01 ~]# mkdir /etc/docker

[root@master01 ~]# cd /etc/docker

[root@master01 docker]# cat >> daemon.json << EOF

> {

> "registry-mirrors": ["https://cyr1uljt.mirror.aliyuncs.com"]

> }

> EOF

[root@master01 docker]# cat daemon.json

{

"registry-mirrors": ["https://cyr1uljt.mirror.aliyuncs.com"]

}

[root@master01 docker]#

Create the /etc/docker directory on each node and copy daemon.json from the master to each node

[root@master01 docker]# ssh node01 'mkdir /etc/docker'

[root@master01 docker]# ssh node02 'mkdir /etc/docker'

[root@master01 docker]# ssh node03 'mkdir /etc/docker'

[root@master01 docker]# scp daemon.json node01:/etc/docker/

daemon.json 100% 65 30.6KB/s 00:00

[root@master01 docker]# scp daemon.json node02:/etc/docker/

daemon.json 100% 65 52.2KB/s 00:00

[root@master01 docker]# scp daemon.json node03:/etc/docker/

daemon.json 100% 65 17.8KB/s 00:00

[root@master01 docker]#

Start docker on each node and enable it at boot

[root@master01 docker]# systemctl enable docker --now

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

[root@master01 docker]# ssh node01 'systemctl enable docker --now'

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

[root@master01 docker]# ssh node02 'systemctl enable docker --now'

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

[root@master01 docker]# ssh node03 'systemctl enable docker --now'

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

[root@master01 docker]#

Verify that the registry mirror is applied on each node

[root@master01 docker]# docker info |grep aliyun

https://cyr1uljt.mirror.aliyuncs.com/

[root@master01 docker]# ssh node01 'docker info |grep aliyun'

https://cyr1uljt.mirror.aliyuncs.com/

[root@master01 docker]# ssh node02 'docker info |grep aliyun'

https://cyr1uljt.mirror.aliyuncs.com/

[root@master01 docker]# ssh node03 'docker info |grep aliyun'

https://cyr1uljt.mirror.aliyuncs.com/

[root@master01 docker]#

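Note: if docker info on a node shows the mirror address, the registry mirror has been applied successfully.

Verify that the default policy of the iptables FORWARD chain is ACCEPT on all nodes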

[root@master01 docker]# iptables -nvL|grep FORWARD

Chain FORWARD (policy ACCEPT 0 packets, 0 bytes)

[root@master01 docker]# ssh node01 'iptables -nvL|grep FORWARD'

Chain FORWARD (policy ACCEPT 0 packets, 0 bytes)

[root@master01 docker]# ssh node02 'iptables -nvL|grep FORWARD'

Chain FORWARD (policy ACCEPT 0 packets, 0 bytes)

[root@master01 docker]# ssh node03 'iptables -nvL|grep FORWARD'

Chain FORWARD (policy ACCEPT 0 packets, 0 bytes)

[root@master01 docker]#

Add a kernel parameter configuration file and copy it to the other nodes

[root@master01 ~]# cat /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

[root@master01 ~]# scp /etc/sysctl.d/k8s.conf node01:/etc/sysctl.d/k8s.conf

k8s.conf 100% 79 25.5KB/s 00:00

[root@master01 ~]# scp /etc/sysctl.d/k8s.conf node02:/etc/sysctl.d/k8s.conf

k8s.conf 100% 79 24.8KB/s 00:00

[root@master01 ~]# scp /etc/sysctl.d/k8s.conf node03:/etc/sysctl.d/k8s.conf

k8s.conf 100% 79 20.9KB/s 00:00

[root@master01 ~]#

Apply the kernel parameters so that they take effect

[root@master01 ~]# sysctl -p /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

[root@master01 ~]# ssh node01 'sysctl -p /etc/sysctl.d/k8s.conf'

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

[root@master01 ~]# ssh node02 'sysctl -p /etc/sysctl.d/k8s.conf'

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

[root@master01 ~]# ssh node03 'sysctl -p /etc/sysctl.d/k8s.conf'

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

[root@master01 ~]#

Configure kubelet to ignore the error raised when swap is enabled

[root@master01 ~]# cat /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--fail-swap-on=false"

[root@master01 ~]# scp /etc/sysconfig/kubelet node01:/etc/sysconfig/kubelet

kubelet 100% 42 12.2KB/s 00:00

[root@master01 ~]# scp /etc/sysconfig/kubelet node02:/etc/sysconfig/kubelet

kubelet 100% 42 16.2KB/s 00:00

[root@master01 ~]# scp /etc/sysconfig/kubelet node03:/etc/sysconfig/kubelet

kubelet 100% 42 11.2KB/s 00:00

[root@master01 ~]#
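The `--fail-swap-on=false` flag is only relevant on hosts where swap is actually active. The sketch below counts active swap devices by reading a /proc/swaps-style file. The function name is illustrative, and the optional file argument exists only so the parsing can be tried on sample data.

```shell
# Count active swap devices from a /proc/swaps-style file.
# The first line of that file is a header, so it is skipped.
active_swap_count() {
  swaps_file=${1:-/proc/swaps}
  awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }' "$swaps_file"
}
```

A result of 0 means swap is off and the flag makes no difference. Many setups turn swap off with `swapoff -a` instead of telling the kubelet to tolerate it; this post keeps swap on and ignores the check.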

Check the kubelet version

[root@master01 ~]# rpm -q kubelet

kubelet-1.20.0-0.x86_64

[root@master01 ~]#

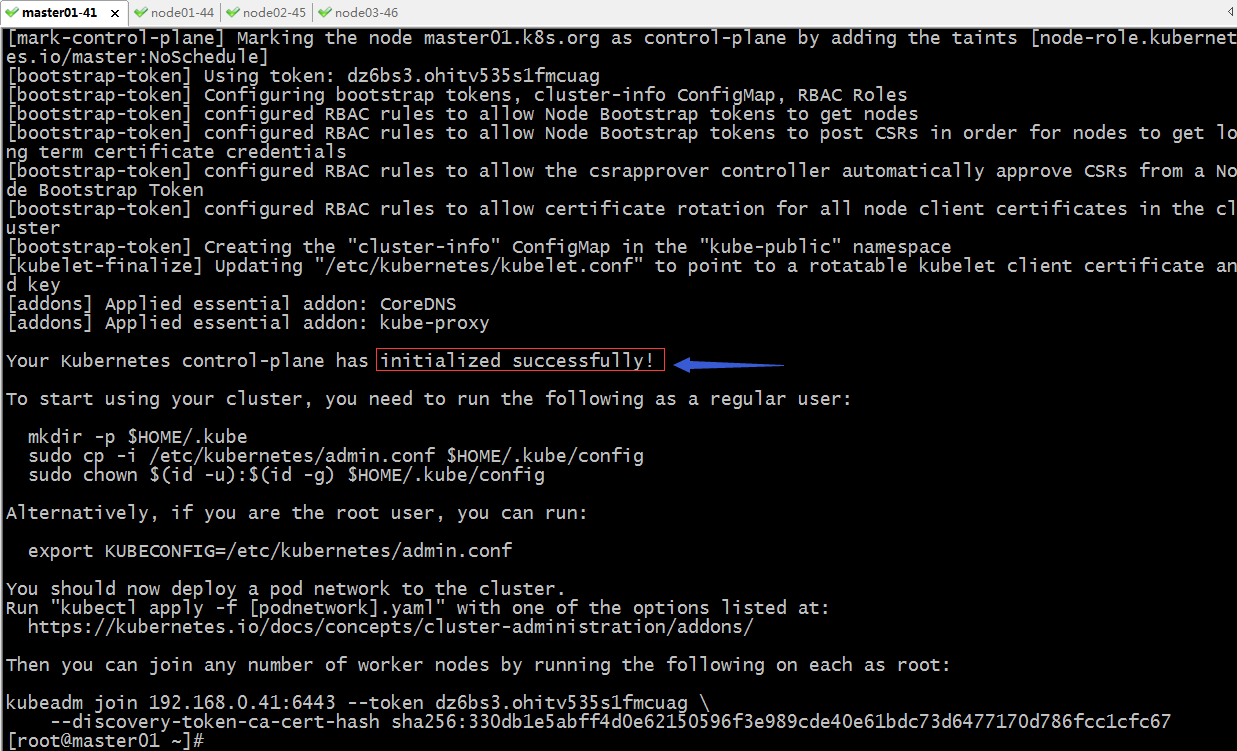

Initialize the master node

[root@master01 ~]# kubeadm init --pod-network-cidr="10.244.0.0/16" \

> --kubernetes-version="v1.20.0" \

> --image-repository="registry.aliyuncs.com/google_containers" \

> --ignore-preflight-errors=Swap

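Note: when initializing the master, be aware that if no image repository is specified, kubeadm pulls the component images from k8s.gcr.io. This registry is hosted by Google and is usually unreachable from mainland China.

Note: the master is only initialized correctly once you see the success message. Also record the kubeadm join command printed at the end; it is needed later to add the nodes.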
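One way to avoid losing the join command is to save the kubeadm init output to a file (for example with `tee`) and extract the command from it later. The sketch below assumes a hypothetical log file name and assumes the join command appears in the log as a line starting with `kubeadm join`, continued with trailing backslashes.

```shell
# Extract the "kubeadm join ..." command from saved kubeadm init output
# and print it as a single line. $1 is the path to the saved log.
extract_join_command() {
  awk '
    /^[ \t]*kubeadm join / { grab = 1 }
    grab {
      line = $0
      sub(/^[ \t]+/, "", line)           # drop leading indentation
      cont = sub(/[ \t]*\\$/, "", line)  # strip a trailing backslash, if any
      printf "%s%s", line, (cont ? " " : "\n")
      if (!cont) grab = 0
    }
  ' "$1"
}
```

If the output was not saved, `kubeadm token create --print-join-command` on the master prints a fresh join command. This is also the way out once the original token has expired.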

Create a .kube directory in the current user's home directory and copy the kubectl configuration file into it as config

[root@master01 ~]# mkdir -p $HOME/.kube

[root@master01 ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

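Note: we copy the configuration file because we want to manage the cluster from the master with kubectl. The file contains the certificate information and the address of the master. By default kubectl looks for the config file under .kube in the current user's home directory, and it can only manage the cluster after it has authenticated successfully. If you are a regular user rather than root, you also need to change the owner and group of the config file to that user.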
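The copy and ownership steps described in the note can be combined into one function. This is a sketch: the function name and its two optional arguments are assumptions, and for a regular user the copy from /etc/kubernetes/admin.conf needs root privileges (for example via sudo), which the sketch does not handle.

```shell
# Install a kubeconfig for the current user.
# $1: source file (default /etc/kubernetes/admin.conf)
# $2: home directory to install into (default $HOME)
install_kubeconfig() {
  src=${1:-/etc/kubernetes/admin.conf}
  home_dir=${2:-$HOME}
  mkdir -p "$home_dir/.kube" &&
  cp "$src" "$home_dir/.kube/config" &&
  chown "$(id -u):$(id -g)" "$home_dir/.kube/config" &&
  chmod 600 "$home_dir/.kube/config"   # the file contains client credentials
}
```

For root, an alternative that avoids copying is to point kubectl at the file with `export KUBECONFIG=/etc/kubernetes/admin.conf`.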

Install the flannel add-on

[root@master01 ~]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

The connection to the server raw.githubusercontent.com was refused - did you specify the right host or port?

[root@master01 ~]#

Note: raw.githubusercontent.com cannot be reached here. One workaround is to add a resolution record for it to /etc/hosts.

Add a record for raw.githubusercontent.com

[root@master01 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.0.99 time.test.org time-node

192.168.0.41 master01 master01.k8s.org

192.168.0.42 master02 master02.k8s.org

192.168.0.43 master03 master03.k8s.org

192.168.0.44 node01 node01.k8s.org

192.168.0.45 node02 node02.k8s.org

192.168.0.46 node03 node03.k8s.org

151.101.76.133 raw.githubusercontent.com

[root@master01 ~]#

Run kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml again

[root@master01 ~]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Unable to connect to the server: read tcp 192.168.0.41:46838->151.101.76.133:443: read: connection reset by peer

[root@master01 ~]#

Note: the connection still fails. As a workaround, open https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml in a browser, copy its content, and create a flannel.yml file in the current directory.

Content of flannel.yml

[root@master01 ~]# cat flannel.yml

---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
  - configMap
  - secret
  - emptyDir
  - hostPath
  allowedHostPaths:
  - pathPrefix: "/etc/cni/net.d"
  - pathPrefix: "/etc/kube-flannel"
  - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN', 'NET_RAW']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups: ['extensions']
  resources: ['podsecuritypolicies']
  verbs: ['use']
  resourceNames: ['psp.flannel.unprivileged']
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.13.1-rc1
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.13.1-rc1
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg

[root@master01 ~]#

Install the flannel add-on from the flannel.yml file

[root@master01 ~]# kubectl apply -f flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

[root@master01 ~]#

Check the Pods running on the master

[root@master01 ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7f89b7bc75-k9gdt 0/1 Pending 0 30m

coredns-7f89b7bc75-kp855 0/1 Pending 0 30m

etcd-master01.k8s.org 1/1 Running 0 30m

kube-apiserver-master01.k8s.org 1/1 Running 0 30m

kube-controller-manager-master01.k8s.org 1/1 Running 0 30m

kube-flannel-ds-zgq92 0/1 Init:0/1 0 9m45s

kube-proxy-pjv9s 1/1 Running 0 30m

kube-scheduler-master01.k8s.org 1/1 Running 0 30m

[root@master01 ~]#

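Note: kube-flannel stays in the Init state. The reason is that the flannel.yml manifest uses the image quay.io/coreos/flannel:v0.13.1-rc1, and this registry is very slow to reach from mainland China, sometimes to the point that the image cannot be downloaded at all. The workaround is to pull the image on a machine that can reach quay.io, export it to a tar archive, and then import that archive on the cluster nodes.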
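The export and import procedure can be written down as a small plan generator. The sketch below only prints the commands rather than running them, so it can be reviewed first. The function names are illustrative, and it assumes the image reference carries an explicit tag and that the archive is copied to /root/ on each node.

```shell
# Derive an archive name such as flannel-v0.13.1-rc1.tar from an image reference.
# Assumes the reference has an explicit tag (name:tag).
image_tar_name() {
  ref=${1##*/}   # strip registry and namespace, e.g. flannel:v0.13.1-rc1
  printf '%s-%s.tar\n' "${ref%%:*}" "${ref##*:}"
}

# Print (not run) the commands to export an image and load it on each node.
# $1 is the image reference; the remaining arguments are node names.
print_image_transfer_plan() {
  image=$1
  shift
  tar=$(image_tar_name "$image")
  echo "docker pull $image"
  echo "docker save -o $tar $image"
  for node in "$@"; do
    echo "scp $tar $node:/root/"
    echo "ssh $node 'docker load -i /root/$tar'"
  done
}
```

The first two printed commands are meant for the machine that can reach the registry; the per-node commands are run from wherever the archive ends up.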

Import the quay.io/coreos/flannel:v0.13.1-rc1 image

[root@master01 ~]# ll

total 64060

-rw------- 1 root root 65586688 Dec 8 15:16 flannel-v0.13.1-rc1.tar

-rw-r--r-- 1 root root 4822 Dec 8 14:57 flannel.yml

[root@master01 ~]# docker load -i flannel-v0.13.1-rc1.tar

70351a035194: Loading layer [==================================================>] 45.68MB/45.68MB

cd38981c5610: Loading layer [==================================================>] 5.12kB/5.12kB

dce2fcdf3a87: Loading layer [==================================================>] 9.216kB/9.216kB

be155d1c86b7: Loading layer [==================================================>] 7.68kB/7.68kB

Loaded image: quay.io/coreos/flannel:v0.13.1-rc1

[root@master01 ~]#

Copy the flannel image archive to the other nodes and import it there

[root@master01 ~]# scp flannel-v0.13.1-rc1.tar node01:/root/

flannel-v0.13.1-rc1.tar 100% 63MB 62.5MB/s 00:01

[root@master01 ~]# scp flannel-v0.13.1-rc1.tar node02:/root/

flannel-v0.13.1-rc1.tar 100% 63MB 62.4MB/s 00:01

[root@master01 ~]# scp flannel-v0.13.1-rc1.tar node03:/root/

flannel-v0.13.1-rc1.tar 100% 63MB 62.5MB/s 00:01

[root@master01 ~]# ssh node01 'docker load -i /root/flannel-v0.13.1-rc1.tar'

Loaded image: quay.io/coreos/flannel:v0.13.1-rc1

[root@master01 ~]# ssh node02 'docker load -i /root/flannel-v0.13.1-rc1.tar'

Loaded image: quay.io/coreos/flannel:v0.13.1-rc1

[root@master01 ~]# ssh node03 'docker load -i /root/flannel-v0.13.1-rc1.tar'

Loaded image: quay.io/coreos/flannel:v0.13.1-rc1

[root@master01 ~]#

Check the Pod status again

[root@master01 ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7f89b7bc75-k9gdt 1/1 Running 0 39m

coredns-7f89b7bc75-kp855 1/1 Running 0 39m

etcd-master01.k8s.org 1/1 Running 0 40m

kube-apiserver-master01.k8s.org 1/1 Running 0 40m

kube-controller-manager-master01.k8s.org 1/1 Running 0 40m

kube-flannel-ds-zgq92 1/1 Running 0 19m

kube-proxy-pjv9s 1/1 Running 0 39m

kube-scheduler-master01.k8s.org 1/1 Running 0 40m

[root@master01 ~]#

Note: kube-flannel is now in the Running state.

Check the node information

[root@master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master01.k8s.org Ready control-plane,master 41m v1.20.0

[root@master01 ~]#

Note: the master node is in the Ready state, which means the master side has been deployed.

Join node01 to the k8s cluster as a node

[root@node01 ~]# kubeadm join 192.168.0.41:6443 --token dz6bs3.ohitv535s1fmcuag \

> --discovery-token-ca-cert-hash sha256:330db1e5abff4d0e62150596f3e989cde40e61bdc73d6477170d786fcc1cfc67 \

> --ignore-preflight-errors=Swap

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 20.10.0. Latest validated version: 19.03

[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@node01 ~]#

Note: run the same command on the other nodes to join them to the k8s cluster as nodes.

Check the cluster node information on the master

[root@master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master01.k8s.org Ready control-plane,master 49m v1.20.0

node01.k8s.org Ready <none> 5m53s v1.20.0

node02.k8s.org Ready <none> 30s v1.20.0

node03.k8s.org Ready <none> 25s v1.20.0

[root@master01 ~]#

Note: the master and all 3 nodes are in the Ready state.
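Checking the STATUS column by eye works for four machines. For a scripted check, the sketch below counts nodes whose status is not exactly `Ready` in `kubectl get nodes` output read from standard input. The function name is illustrative. Note that a cordoned node reports `Ready,SchedulingDisabled` and is counted as not ready by this simple comparison.

```shell
# Read `kubectl get nodes` output on stdin and print how many nodes
# have a STATUS other than exactly "Ready". The header line is skipped.
count_not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { n++ } END { print n + 0 }'
}
```

On the master this would be used as `kubectl get nodes | count_not_ready_nodes`. kubectl can also block until the nodes are ready with `kubectl wait --for=condition=Ready nodes --all --timeout=120s`.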

Test: create an nginx Deployment that uses the nginx:1.14-alpine image and check whether it runs normally.

[root@master01 ~]# kubectl create deploy nginx-dep --image=nginx:1.14-alpine

deployment.apps/nginx-dep created

[root@master01 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-dep-8967df55d-j8zp7 1/1 Running 0 18s

[root@master01 ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-dep-8967df55d-j8zp7 1/1 Running 0 30s 10.244.2.2 node02.k8s.org <none> <none>

[root@master01 ~]#

Verify: access the Pod IP and check whether nginx can be reached.

[root@master01 ~]# curl 10.244.2.2

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

[root@master01 ~]#

Note: the nginx Pod can be reached through its Pod IP. With that, a k8s cluster with a single master node and 3 worker nodes is up and running.
