Redis 3.2.2 Cluster

http://blog.csdn.net/imxiangzi/article/details/52431729

http://www.2cto.com/kf/201701/586689.html

Using CLUSTER MEET to fix "Not all 16384 slots are covered by nodes":

http://www.cnblogs.com/SailorXiao/p/5808872.html

Redis Cluster theory

Cluster configuration: https://blog.csdn.net/u012572955/article/details/53996107

An in-depth look at Redis Cluster principles and applications

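Two servers, 192.168.67.129 and 192.168.67.131, each run Redis on ports 6379, 6380, and 6381, for 6 nodes in total. A cluster needs at least three master nodes. When a master goes down its replica takes over; if that master has no replica, the whole cluster fails.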

192.168.67.129:6379

192.168.67.129:6380

192.168.67.129:6381

192.168.67.131:6379

192.168.67.131:6380

192.168.67.131:6381

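This gives 3 masters and 3 replicas. The 16384 hash slots are split evenly into three ranges, and each master is responsible for one range. If any one of the three ranges becomes unavailable (its master is down and no replica has taken over), the whole cluster stops serving requests. This is the default behavior, controlled by cluster-require-full-coverage yes.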

slots:0-5460 (5461 slots) master

slots:10923-16383 (5461 slots) master

slots:5461-10922 (5462 slots) master
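The three ranges above follow from dividing 16384 slots by the number of masters and rounding. A minimal sketch of that arithmetic follows; the function name `slot_ranges` is made up for illustration, and the rounding rule is an assumption chosen because it reproduces the ranges listed above, not a copy of the redis-trib.rb source.

```shell
#!/bin/bash
# Print the slot range each of N masters would own when the 16384 hash
# slots are split evenly. slot_ranges is a hypothetical helper name.
slot_ranges() {
    local masters=$1 total=16384 first=0 last k
    for ((k = 1; k <= masters; k++)); do
        if ((k == masters)); then
            # The last master always ends at the final slot.
            last=$((total - 1))
        else
            # round(k * total / masters - 1), written in integer arithmetic
            last=$(( (2 * k * total - masters) / (2 * masters) ))
        fi
        echo "$first-$last ($((last - first + 1)) slots)"
        first=$((last + 1))
    done
}
```

Calling `slot_ranges 3` prints the same three ranges shown above, including the middle range that holds one extra slot.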

yum -y install gcc

cd /usr/local

wget http://download.redis.io/releases/redis-3.2.2.tar.gz

tar zxf redis-3.2.2.tar.gz

cd redis-3.2.2

make

make install

cd utils

./install_server.sh # then press Enter at every prompt to accept the defaults

Welcome to the redis service installer

This script will help you easily set up a running redis server

Please select the redis port for this instance: [6379]

Selecting default: 6379

Please select the redis config file name [/etc/redis/6379.conf]

Selected default - /etc/redis/6379.conf

Please select the redis log file name [/var/log/redis_6379.log]

Selected default - /var/log/redis_6379.log

Please select the data directory for this instance [/var/lib/redis/6379]

Selected default - /var/lib/redis/6379

Please select the redis executable path [/usr/local/bin/redis-server]

Selected config:

Port : 6379

Config file : /etc/redis/6379.conf

Log file : /var/log/redis_6379.log

Data dir : /var/lib/redis/6379

Executable : /usr/local/bin/redis-server

Cli Executable : /usr/local/bin/redis-cli

Is this ok? Then press ENTER to go on or Ctrl-C to abort.

Copied /tmp/6379.conf => /etc/init.d/redis_6379

Installing service...

Successfully added to chkconfig!

Successfully added to runlevels 345!

Starting Redis server...

Installation successful!

Edit /etc/redis/6379.conf:

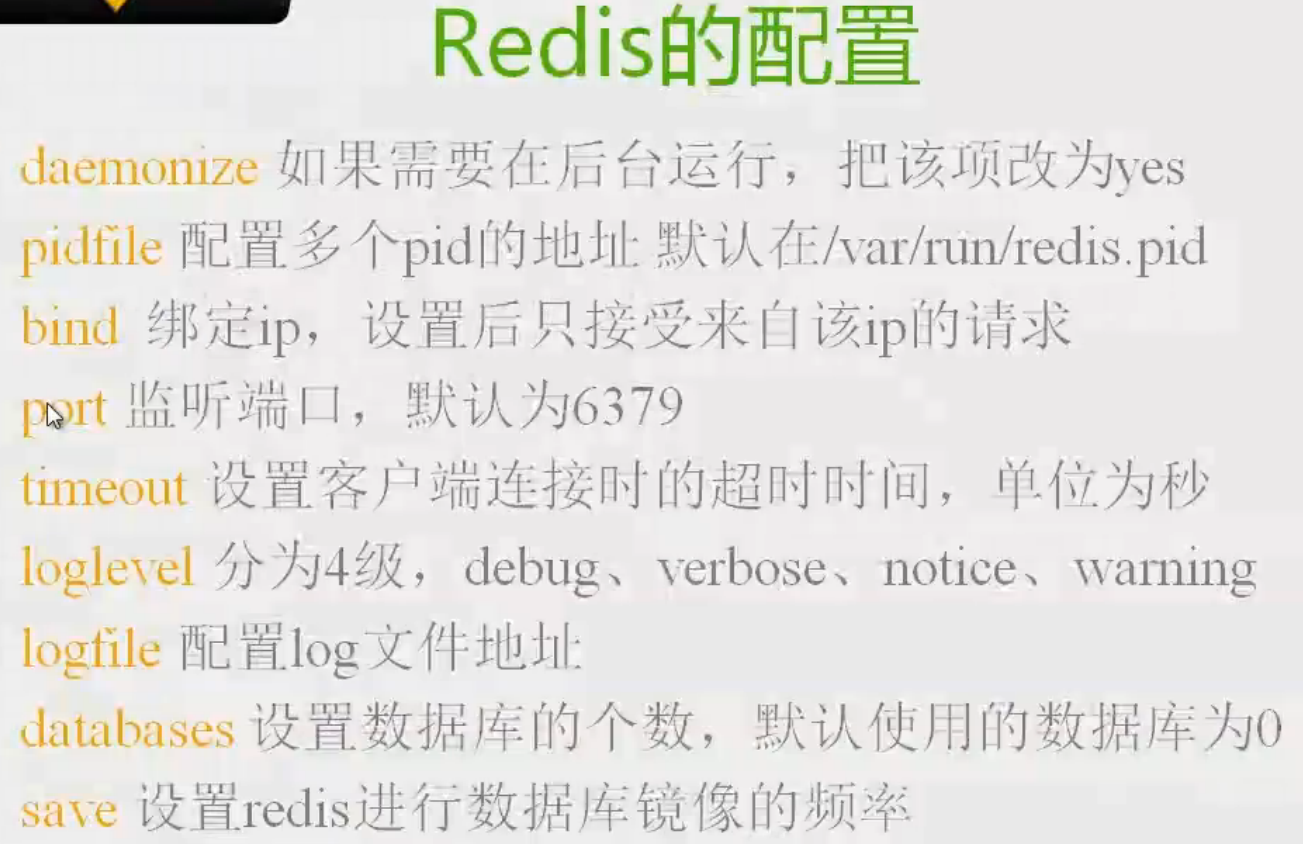

daemonize yes makes Redis run in the background when it starts.

[root@localhost redis]# ls

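6379.conf 6380.conf 6381.conf

Copy 6379.conf to 6380.conf and 6381.conf, then change every 6379 in each copy to 6380 or 6381 respectively. The relevant settings look like this:

- daemonize yes // run Redis in the background

- pidfile /var/run/redis_6380.pid // PID file

- port 6380 // port

- cluster-enabled yes // enable cluster mode (remove the leading # to uncomment)

- cluster-config-file nodes_6380.conf // cluster state file, generated automatically on first start

- cluster-node-timeout 5000 // node timeout in milliseconds; 5 seconds is enough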

- appendonly yes
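Editing each copy by hand is error-prone, so the per-port files can be derived from the 6379 template instead. A minimal sketch, assuming the string 6379 appears in the template only as the port number (pidfile, port, log file, data directory); the helper name `make_port_conf` is hypothetical.

```shell
#!/bin/bash
# Derive a per-port config from the 6379 template by rewriting every
# occurrence of the port number. make_port_conf is a hypothetical helper.
# Assumption: "6379" occurs in the template only where the port is meant.
make_port_conf() {
    local template=$1 port=$2
    sed "s/6379/${port}/g" "$template"
}

# Usage (not run here):
#   make_port_conf /etc/redis/6379.conf 6380 > /etc/redis/6380.conf
#   make_port_conf /etc/redis/6379.conf 6381 > /etc/redis/6381.conf
```

The cluster settings still have to be enabled in the template first, and the data directory named by the `dir` line of each generated file has to exist before that instance is started.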

# /usr/local/bin/redis-server /etc/redis/63xx.conf # start Redis once for each of 6379, 6380, and 6381

# ps -ef |grep redis

root 1348 1 0 15:59 ? 00:00:12 /usr/local/bin/redis-server 192.168.67.129:6379 [cluster]

root 1743 1 0 16:01 ? 00:00:10 /usr/local/bin/redis-server 192.168.67.129:6381 [cluster]

root 1747 1 0 16:02 ? 00:00:10 /usr/local/bin/redis-server 192.168.67.129:6380 [cluster]

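Stopping Redis

# pkill redis-server # Stops Redis normally, but every redis-server process on the host is killed, across all ports. Use pkill to stop all instances at once, or kill -15 redis-pid to stop a single instance.

If Redis cannot be restarted after it was stopped with kill, copy in a fresh configuration file and try again. It is best not to use kill.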
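One alternative to kill is the SHUTDOWN command, which asks an instance to persist its data and exit on its own. A minimal sketch, assuming the instances listen on localhost; the helper name `stop_redis_ports` and the `REDIS_CLI` override variable are hypothetical.

```shell
#!/bin/bash
# Ask each local instance to shut down cleanly instead of killing it.
# stop_redis_ports and REDIS_CLI are hypothetical names for this sketch;
# REDIS_CLI lets you point at a redis-cli binary outside PATH.
stop_redis_ports() {
    local port cli=${REDIS_CLI:-redis-cli}
    for port in "$@"; do
        "$cli" -p "$port" shutdown
    done
}

# Usage (not run here):
#   stop_redis_ports 6379 6380 6381
```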

Monitoring

# redis-cli -p 6379 monitor

OK

The steps above were carried out on both servers. Next, configure the cluster.

yum -y install ruby ruby-devel rubygems rpm-build

gem install redis // This takes a long time and prints nothing, long enough that it looks like it failed. Leave it alone and check back in about ten minutes.

# sudo gem install redis

Successfully installed redis-3.3.3

1 gem installed

Installing ri documentation for redis-3.3.3...

Installing RDoc documentation for redis-3.3.3...

Run # redis-trib.rb; if it reports no error, the setup is fine.

[Create the cluster]# redis-trib.rb create --replicas 1 192.168.67.129:6379 192.168.67.129:6380 192.168.67.129:6381 192.168.67.131:6379 192.168.67.131:6380 192.168.67.131:6381

--replicas 1 means we want one replica created for each master in the cluster.
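The relationship between the node count, --replicas, and the number of masters can be checked before running the command. A minimal sketch, assuming the masters are simply the node count divided by (replicas + 1) and that fewer than three masters is rejected; the helper name `masters_for` is hypothetical.

```shell
#!/bin/bash
# Work out how many masters a node list yields for a given --replicas value.
# masters_for is a hypothetical helper; the formula is an assumption that
# matches the 6-node, --replicas 1 case in this write-up (3 masters).
masters_for() {
    local nodes=$1 replicas=$2
    local masters=$(( nodes / (replicas + 1) ))
    if (( masters < 3 )); then
        echo "need at least 3 masters, got $masters" >&2
        return 1
    fi
    echo "$masters"
}
```

With 6 nodes and one replica per master this gives 3 masters, which is what the output below reports as "Using 3 masters".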

>>> Creating cluster

>>> Performing hash slots allocation on 6 nodes...

Using 3 masters:

192.168.67.131:6379

192.168.67.129:6379

192.168.67.131:6380

Adding replica 192.168.67.129:6380 to 192.168.67.131:6379

Adding replica 192.168.67.131:6381 to 192.168.67.129:6379

Adding replica 192.168.67.129:6381 to 192.168.67.131:6380

M: c241982270411fc34cca6fb248c802f92a2bade2 192.168.67.129:6379

slots:5461-10922 (5462 slots) master

S: 429e81bda0d58db69344eb080d6b8d2ed5c3beed 192.168.67.129:6380

replicates 6a0da105d71714e4c2a9ef1bf8d4e421859bcbdc

S: 341f7501a60b510b94159ef6667776e17ce3893d 192.168.67.129:6381

replicates f4a2fb289b66107a046cc308df83ee0ddd59222e

M: 6a0da105d71714e4c2a9ef1bf8d4e421859bcbdc 192.168.67.131:6379

slots:0-5460 (5461 slots) master

M: f4a2fb289b66107a046cc308df83ee0ddd59222e 192.168.67.131:6380

slots:10923-16383 (5461 slots) master

S: 129accd00021097ac0c0379b373c1b19de12b75c 192.168.67.131:6381

replicates c241982270411fc34cca6fb248c802f92a2bade2

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join..

>>> Performing Cluster Check (using node 192.168.67.129:6379)

M: c241982270411fc34cca6fb248c802f92a2bade2 192.168.67.129:6379

slots:5461-10922 (5462 slots) master

M: 429e81bda0d58db69344eb080d6b8d2ed5c3beed 192.168.67.129:6380

slots: (0 slots) master

replicates 6a0da105d71714e4c2a9ef1bf8d4e421859bcbdc

M: 341f7501a60b510b94159ef6667776e17ce3893d 192.168.67.129:6381

slots: (0 slots) master

replicates f4a2fb289b66107a046cc308df83ee0ddd59222e

M: 6a0da105d71714e4c2a9ef1bf8d4e421859bcbdc 192.168.67.131:6379

slots:0-5460 (5461 slots) master

M: f4a2fb289b66107a046cc308df83ee0ddd59222e 192.168.67.131:6380

slots:10923-16383 (5461 slots) master

M: 129accd00021097ac0c0379b373c1b19de12b75c 192.168.67.131:6381

slots: (0 slots) master

replicates c241982270411fc34cca6fb248c802f92a2bade2

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

# redis-trib.rb check 192.168.67.129:6379 # check the cluster status; in theory, querying any of the ports gives the same result

>>> Performing Cluster Check (using node 192.168.67.129:6379)

S: c241982270411fc34cca6fb248c802f92a2bade2 192.168.67.129:6379

slots: (0 slots) slave

replicates 129accd00021097ac0c0379b373c1b19de12b75c

S: 341f7501a60b510b94159ef6667776e17ce3893d 192.168.67.129:6381

slots: (0 slots) slave

replicates f4a2fb289b66107a046cc308df83ee0ddd59222e

M: 6a0da105d71714e4c2a9ef1bf8d4e421859bcbdc 192.168.67.131:6379

slots:0-5460 (5461 slots) master

1 additional replica(s)

M: f4a2fb289b66107a046cc308df83ee0ddd59222e 192.168.67.131:6380

slots:10923-16383 (5461 slots) master

1 additional replica(s)

S: 429e81bda0d58db69344eb080d6b8d2ed5c3beed 192.168.67.129:6380

slots: (0 slots) slave

replicates 6a0da105d71714e4c2a9ef1bf8d4e421859bcbdc

M: 129accd00021097ac0c0379b373c1b19de12b75c 192.168.67.131:6381

slots:5461-10922 (5462 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

You can also check it this way:

[root@localhost ~]# redis-cli -c -p 6381 cluster nodes

c241982270411fc34cca6fb248c802f92a2bade2 :0 slave,fail,noaddr 129accd00021097ac0c0379b373c1b19de12b75c 1503144886378 1503144886378 7 disconnected

429e81bda0d58db69344eb080d6b8d2ed5c3beed 192.168.67.129:6380 master - 0 1503151926142 8 connected 0-5460

f4a2fb289b66107a046cc308df83ee0ddd59222e 192.168.67.131:6380 slave 341f7501a60b510b94159ef6667776e17ce3893d 0 1503151928162 10 connected

6a0da105d71714e4c2a9ef1bf8d4e421859bcbdc :0 master,fail,noaddr - 1503144886373 1503144886373 4 disconnected

129accd00021097ac0c0379b373c1b19de12b75c 192.168.67.131:6381 myself,master - 0 0 7 connected 5461-10922

341f7501a60b510b94159ef6667776e17ce3893d 192.168.67.129:6381 master - 0 1503151927654 10 connected 10923-16383

6499aa3e2dc11fba6cb88881132c80f17b2e3497 192.168.67.129:6379 slave 429e81bda0d58db69344eb080d6b8d2ed5c3beed 0 1503151927149 8 connected
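In CLUSTER NODES output the third field holds the node flags, so failed nodes can be picked out with a short filter. A minimal sketch; the helper name `failed_nodes` is hypothetical, and it matches both `fail` and the tentative `fail?` flag.

```shell
#!/bin/bash
# Read CLUSTER NODES output on stdin and print the ID and flags of every
# node whose flags field (third column) contains "fail".
# failed_nodes is a hypothetical helper name.
failed_nodes() {
    awk '$3 ~ /fail/ { print $1, $3 }'
}

# Usage (not run here):
#   redis-cli -c -p 6381 cluster nodes | failed_nodes
```

Applied to the listing above, this would report the two entries flagged `fail,noaddr`.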

# redis-cli -c -p 6380 # connect to any port on any node, for example # redis-cli -c -h 192.168.67.131 -p 6381

127.0.0.1:6380> set hw "Hello World"

-> Redirected to slot [3315] located at 192.168.67.131:6379

OK

192.168.67.131:6379> get hw

"Hello World"

# redis-cli -c -p 6381

127.0.0.1:6381> get hw

-> Redirected to slot [3315] located at 192.168.67.131:6379

"Hello World"

Problems after pkill

>>> Creating cluster

[ERR] Node 192.168.67.131:6380 is not empty. Either the node already knows other nodes (check with CLUSTER NODES) or contains some key in database 0.

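Delete the aof, rdb, and nodes.conf files in the directory that holds the Redis config files: rm dump.rdb nodes-6379.conf nodes-6380.conf nodes-6381.conf

Flush the data:

127.0.0.1:6381> flushdb

OK

If the error persists, run pkill again, restart the instances, and then create the cluster.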
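Instead of deleting files by hand, a running node can be emptied and made to forget the cluster with FLUSHALL followed by CLUSTER RESET HARD. A minimal sketch of that alternative, which is not what the steps above did; the helper name `reset_node` and the `REDIS_CLI` override variable are hypothetical.

```shell
#!/bin/bash
# Empty a node and make it forget its cluster membership so that
# "redis-trib.rb create" accepts it again.
# reset_node and REDIS_CLI are hypothetical names for this sketch.
reset_node() {
    local host=$1 port=$2 cli=${REDIS_CLI:-redis-cli}
    # Remove all keys first: a master that still holds keys cannot be reset.
    "$cli" -h "$host" -p "$port" flushall
    # Forget all other nodes and slots, and generate a new node ID.
    "$cli" -h "$host" -p "$port" cluster reset hard
}

# Usage (not run here):
#   reset_node 192.168.67.131 6380
```

This wipes the node's data, so it only fits the situation described here, where the cluster is being rebuilt from scratch.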

Problems when re-creating the cluster

[root@localhost ~]# redis-trib.rb create --replicas 1 192.168.67.129:6379 192.168.67.129:6380 192.168.67.129:6381 192.168.67.131:6379 192.168.67.131:6380 192.168.67.131:6381

>>> Creating cluster # redis-trib.rb create only needs to be run once

[ERR] Node 192.168.67.129:6380 is not empty. Either the node already knows other nodes (check with CLUSTER NODES) or contains some key in database 0.

[root@localhost ~]# redis-trib.rb check 127.0.0.1:6379 # checking 6379 shows that this node has become a standalone master

>>> Performing Cluster Check (using node 127.0.0.1:6379)

M: fed9d4de4467764899959f6a779fbdc1fc5f657d 127.0.0.1:6379

slots: (0 slots) master

0 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[ERR] Not all 16384 slots are covered by nodes.

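Checking the other nodes shows that, of the 6 nodes, only three masters and one replica are present. The two remaining replicas are missing, which indicates a problem, and both of them are 6379 instances.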

[root@localhost ~]# redis-trib.rb check 192.168.67.131:6381

>>> Performing Cluster Check (using node 192.168.67.131:6381)

M: 129accd00021097ac0c0379b373c1b19de12b75c 192.168.67.131:6381

slots:5461-10922 (5462 slots) master

0 additional replica(s)

M: 429e81bda0d58db69344eb080d6b8d2ed5c3beed 192.168.67.129:6380

slots:0-5460 (5461 slots) master

0 additional replica(s)

S: f4a2fb289b66107a046cc308df83ee0ddd59222e 192.168.67.131:6380

slots: (0 slots) slave

replicates 341f7501a60b510b94159ef6667776e17ce3893d

M: 341f7501a60b510b94159ef6667776e17ce3893d 192.168.67.129:6381

slots:10923-16383 (5461 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

[root@localhost ~]# redis-cli -c -p 6379 cluster nodes

fed9d4de4467764899959f6a779fbdc1fc5f657d 192.168.67.131:6379 myself,master - 0 0 0 connected

[root@localhost ~]# redis-cli -c -p 6380 cluster nodes

c241982270411fc34cca6fb248c802f92a2bade2 :0 slave,fail,noaddr 129accd00021097ac0c0379b373c1b19de12b75c 1503144886358 1503144886358 7 disconnected

341f7501a60b510b94159ef6667776e17ce3893d 192.168.67.129:6381 master - 0 1503147664318 10 connected 10923-16383

6a0da105d71714e4c2a9ef1bf8d4e421859bcbdc :0 master,fail,noaddr - 1503144886358 1503144886358 4 disconnected

129accd00021097ac0c0379b373c1b19de12b75c 192.168.67.131:6381 master - 0 1503147663810 7 connected 5461-10922

429e81bda0d58db69344eb080d6b8d2ed5c3beed 192.168.67.129:6380 master - 0 1503147664823 8 connected 0-5460

f4a2fb289b66107a046cc308df83ee0ddd59222e 192.168.67.131:6380 myself,slave 341f7501a60b510b94159ef6667776e17ce3893d 0 0 5 connected

[root@localhost ~]# redis-cli -p 6379

127.0.0.1:6379> set zzx zhan

(error) CLUSTERDOWN Hash slot not served

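# Connecting to the faulty node shows that it cannot be used, while set and get work normally on the other ports. So the two 6379 nodes in the cluster are broken and the remaining 4 nodes are fine. The broken nodes need to be added back to the cluster.

This follows the meet approach described in http://www.cnblogs.com/guxiong/p/6266890.html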

[root@localhost ~]# redis-cli -c -h 192.168.67.129 -p 6379 cluster meet 192.168.67.131 6380

OK

[root@localhost ~]# redis-trib.rb check 127.0.0.1:6379

>>> Performing Cluster Check (using node 127.0.0.1:6379)

S: 6499aa3e2dc11fba6cb88881132c80f17b2e3497 127.0.0.1:6379

slots: (0 slots) slave

replicates 429e81bda0d58db69344eb080d6b8d2ed5c3beed

M: 129accd00021097ac0c0379b373c1b19de12b75c 192.168.67.131:6381

slots:5461-10922 (5462 slots) master

0 additional replica(s)

S: f4a2fb289b66107a046cc308df83ee0ddd59222e 192.168.67.131:6380

slots: (0 slots) slave

replicates 341f7501a60b510b94159ef6667776e17ce3893d

M: 341f7501a60b510b94159ef6667776e17ce3893d 192.168.67.129:6381

slots:10923-16383 (5461 slots) master

1 additional replica(s)

M: 429e81bda0d58db69344eb080d6b8d2ed5c3beed 192.168.67.129:6380

slots:0-5460 (5461 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

After meet, one of the 6379 nodes is back in the cluster. For the other one, run redis-cli -c -h 192.168.67.131 -p 6379 cluster meet 192.168.67.131 6380. Now all 6 nodes are healthy.

done!!!

[root@localhost ~]# redis-trib.rb check 127.0.0.1:6379

>>> Performing Cluster Check (using node 127.0.0.1:6379)

M: fed9d4de4467764899959f6a779fbdc1fc5f657d 127.0.0.1:6379

slots: (0 slots) master

0 additional replica(s)

S: 6499aa3e2dc11fba6cb88881132c80f17b2e3497 192.168.67.129:6379

slots: (0 slots) slave

replicates 429e81bda0d58db69344eb080d6b8d2ed5c3beed

M: 429e81bda0d58db69344eb080d6b8d2ed5c3beed 192.168.67.129:6380

slots:0-5460 (5461 slots) master

1 additional replica(s)

S: f4a2fb289b66107a046cc308df83ee0ddd59222e 192.168.67.131:6380

slots: (0 slots) slave

replicates 341f7501a60b510b94159ef6667776e17ce3893d

M: 341f7501a60b510b94159ef6667776e17ce3893d 192.168.67.129:6381

slots:10923-16383 (5461 slots) master

1 additional replica(s)

M: 129accd00021097ac0c0379b373c1b19de12b75c 192.168.67.131:6381

slots:5461-10922 (5462 slots) master

0 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

An extra node, 127.0.0.1:6379, has appeared.

[root@localhost ~]# redis-trib.rb del-node 127.0.0.1:6379 'fed9d4de4467764899959f6a779fbdc1fc5f657d'

>>> Removing node fed9d4de4467764899959f6a779fbdc1fc5f657d from cluster 127.0.0.1:6379

>>> Sending CLUSTER FORGET messages to the cluster...

>>> SHUTDOWN the node.

- [Restart script]# cat restart_redis

#!/bin/bash

pkill redis-server

sleep 0.1

for ((i=1;i<=5;i++));do

sum=`ps -ef |grep "redis-server"|grep -v grep|wc -l`

if [ "$sum" -eq 0 ];

then

echo "killed redis-server"

rm -rf /etc/redis/dump.rdb /etc/redis/nodes-*.conf

echo "ready to start redis"

#sleep 1

redis-server /etc/redis/6379.conf

redis-server /etc/redis/6380.conf

redis-server /etc/redis/6381.conf

echo "started redis"

ps -ef |grep "redis-server"|grep -v grep

echo "end!!"

exit 0

else

echo "$i sleep"

sleep 1

fi

done

echo "$sum redis-server process(es) still running, please check the result"

exit 1

After the restart, checking the cluster shows [WARNING] Node 127.0.0.1:6379 has slots in importing state (1988). See http://blog.csdn.net/imxiangzi/article/details/52431729

If you see errors like the following:

[WARNING] Node 192.168.0.11:6380 has slots in migrating state (5461).

[WARNING] The following slots are open: 5461

you can cancel the slot migration with a Redis command (5461 is the slot ID):

cluster setslot 5461 stable

Note that you must log in to 192.168.0.11:6380 to run the setslot subcommand.

[root@localhost ~]# redis-trib.rb check 127.0.0.1:6379

>>> Performing Cluster Check (using node 127.0.0.1:6379)

M: fed9d4de4467764899959f6a779fbdc1fc5f657d 127.0.0.1:6379

slots: (0 slots) master

0 additional replica(s)

M: 129accd00021097ac0c0379b373c1b19de12b75c 192.168.67.131:6381

slots:5461-10922 (5462 slots) master

0 additional replica(s)

M: 429e81bda0d58db69344eb080d6b8d2ed5c3beed 192.168.67.129:6380

slots:0-5460 (5461 slots) master

1 additional replica(s)

S: f4a2fb289b66107a046cc308df83ee0ddd59222e 192.168.67.131:6380

slots: (0 slots) slave

replicates 341f7501a60b510b94159ef6667776e17ce3893d

M: 341f7501a60b510b94159ef6667776e17ce3893d 192.168.67.129:6381

slots:10923-16383 (5461 slots) master

1 additional replica(s)

S: 6499aa3e2dc11fba6cb88881132c80f17b2e3497 192.168.67.129:6379

slots: (0 slots) slave

replicates 429e81bda0d58db69344eb080d6b8d2ed5c3beed

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

[WARNING] Node 127.0.0.1:6379 has slots in importing state (1988).

[WARNING] Node 192.168.67.131:6381 has slots in importing state (1988).

[WARNING] Node 192.168.67.129:6381 has slots in importing state (1988).

[WARNING] The following slots are open: 1988

>>> Check slots coverage...

[OK] All 16384 slots covered.

[root@localhost ~]# redis-cli -p 6381

127.0.0.1:6381> cluster setslot 1988 stable

OK

127.0.0.1:6381> exit
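When several slots are open, the slot IDs can be pulled out of the check output and turned into the matching setslot commands. A minimal sketch, assuming the "The following slots are open:" line lists slot IDs separated by commas; the helper names `open_slots` and `stable_commands` are hypothetical.

```shell
#!/bin/bash
# Read "redis-trib.rb check" output on stdin and print one slot ID per line
# for every slot reported as open. open_slots is a hypothetical helper.
open_slots() {
    sed -n 's/^\[WARNING\] The following slots are open: //p' | tr ',' '\n' | tr -d ' '
}

# Turn those slot IDs into the commands to type in redis-cli.
# stable_commands is a hypothetical helper.
stable_commands() {
    local slot
    open_slots | while read -r slot; do
        echo "cluster setslot $slot stable"
    done
}

# Usage (not run here):
#   redis-trib.rb check 127.0.0.1:6379 | stable_commands
```

The printed commands still have to be run on each node that the warnings name, as was done on 6381 above.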

A final check shows one master that should be made a replica:

[root@localhost redis]# redis-trib.rb check 127.0.0.1:6379

>>> Performing Cluster Check (using node 127.0.0.1:6379)

M: cd3f4079f866560cbcf4149f6181dc19e8880b91 127.0.0.1:6379

slots: (0 slots) master

0 additional replica(s)

M: 429e81bda0d58db69344eb080d6b8d2ed5c3beed 192.168.67.129:6380

slots:0-5460 (5461 slots) master

1 additional replica(s)

S: f4a2fb289b66107a046cc308df83ee0ddd59222e 192.168.67.131:6380

slots: (0 slots) slave

replicates 341f7501a60b510b94159ef6667776e17ce3893d

M: 341f7501a60b510b94159ef6667776e17ce3893d 192.168.67.129:6381

slots:10923-16383 (5461 slots) master

1 additional replica(s)

M: 129accd00021097ac0c0379b373c1b19de12b75c 192.168.67.131:6381

slots:5461-10922 (5462 slots) master

0 additional replica(s)

S: 6499aa3e2dc11fba6cb88881132c80f17b2e3497 192.168.67.129:6379

slots: (0 slots) slave

replicates 429e81bda0d58db69344eb080d6b8d2ed5c3beed

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

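Note, quoting the referenced article, whose example uses different ports: 7006 is the newly added node and 7000 is an existing node (master or replica). To make the new node a replica of a particular master, run the command below on the new node, which here is the 6379 instance: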

[root@localhost redis]# redis-cli -p 6379

127.0.0.1:6379> cluster replicate 129accd00021097ac0c0379b373c1b19de12b75c

OK

Check again: the cluster is healthy. (Note: a master that is non-empty or has assigned slots cannot be turned into a replica.)

[root@localhost redis]# redis-cli -p 6379

127.0.0.1:6379> set abc wsj

(error) MOVED 7638 192.168.67.131:6381

127.0.0.1:6379> get zzx

(error) MOVED 1988 192.168.67.129:6380

MOVED appears because the command was wrong: the -c flag was missing. It should be redis-cli -c -p 6381
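The slot numbers in the redirects and MOVED errors come from hashing the key: Redis Cluster takes the CRC16 of the key modulo 16384. A minimal sketch of that calculation in bash, assuming plain ASCII keys and ignoring hash tags (the `{...}` syntax); the function names `crc16` and `key_slot` are made up for illustration.

```shell
#!/bin/bash
# CRC16-CCITT, XMODEM variant (polynomial 0x1021, initial value 0), the
# checksum the Redis Cluster specification uses for key hashing.
# Simplification: ASCII keys only, hash tags are not handled.
crc16() {
    local key=$1 crc=0 i j byte
    for ((i = 0; i < ${#key}; i++)); do
        printf -v byte '%d' "'${key:i:1}"   # ASCII code of the character
        crc=$(( crc ^ (byte << 8) ))
        for ((j = 0; j < 8; j++)); do
            if (( crc & 0x8000 )); then
                crc=$(( ((crc << 1) ^ 0x1021) & 0xFFFF ))
            else
                crc=$(( (crc << 1) & 0xFFFF ))
            fi
        done
    done
    echo "$crc"
}

# Hash slot of a key: CRC16 modulo the 16384 slots.
key_slot() {
    echo $(( $(crc16 "$1") % 16384 ))
}
```

`key_slot hw` gives 3315, the slot shown in the earlier "Redirected to slot [3315]" message for the key hw.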

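Building the cluster is fairly simple. Problems that came up afterwards:

1. A master/replica pair turned into two masters, so one of them had to be made a replica again (log in to that node and run cluster replicate ID).

2. A node went missing from the cluster and had to be added back (redis-cli -c -h 192.168.67.131 -p 6379 cluster meet 192.168.67.131 6380).

3. The cluster check reported "[WARNING] Node 127.0.0.1:6379 has slots in importing state (1988)." (log in to that node and run cluster setslot 1988 stable).

4. After a server reboot only 6379 started automatically. The cluster check reported errors for 6380 and 6381 because they were not running. Once those ports were started the cluster check passed again, although it may report has slots in importing state.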
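All four problems show up as [ERR] or [WARNING] lines in the check output, so a small filter can turn that output into a pass/fail result for scripts. A minimal sketch; the helper name `cluster_healthy` is hypothetical.

```shell
#!/bin/bash
# Read "redis-trib.rb check" output on stdin. Succeeds (exit status 0) only
# when no line starts with [ERR] or [WARNING].
# cluster_healthy is a hypothetical helper name.
cluster_healthy() {
    ! grep -Eq '^\[(ERR|WARNING)\]'
}

# Usage (not run here):
#   redis-trib.rb check 127.0.0.1:6379 | cluster_healthy || echo "cluster needs attention"
```

It only reports that something is wrong; which of the four fixes applies still has to be read from the check output itself.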

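Growing from 6 nodes to 8

Copy the redis.conf file and start Redis with it (6382.conf here), then add the node to the cluster.

Adding a node

redis-trib.rb add-node 192.168.67.131:6382 192.168.67.129:6379 # the first address is the node to add; the second can be any node already in the cluster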

- [root@localhost redis]# redis-trib.rb add-node 192.168.67.131: 192.168.67.129:

- >>> Adding node 192.168.67.131: to cluster 192.168.67.129:

- >>> Performing Cluster Check (using node 192.168.67.129:)

- S: 6499aa3e2dc11fba6cb88881132c80f17b2e3497 192.168.67.129:

- slots: ( slots) slave

- replicates 429e81bda0d58db69344eb080d6b8d2ed5c3beed

- M: 429e81bda0d58db69344eb080d6b8d2ed5c3beed 192.168.67.129:

- slots:- ( slots) master

- additional replica(s)

- S: 2ee98237ff31b5cad0fbaff9f0faccda50f46707 192.168.67.129:

- slots: ( slots) slave

- replicates fed9d4de4467764899959f6a779fbdc1fc5f657d

- M: fed9d4de4467764899959f6a779fbdc1fc5f657d 192.168.67.131:

- slots: ( slots) master

- additional replica(s)

- M: 341f7501a60b510b94159ef6667776e17ce3893d 192.168.67.129:

- slots:- ( slots) master

- additional replica(s)

- S: f4a2fb289b66107a046cc308df83ee0ddd59222e 192.168.67.131:

- slots: ( slots) slave

- replicates 341f7501a60b510b94159ef6667776e17ce3893d

- M: 129accd00021097ac0c0379b373c1b19de12b75c 192.168.67.131:

- slots:- ( slots) master

- additional replica(s)

- [OK] All nodes agree about slots configuration.

- >>> Check for open slots...

- >>> Check slots coverage...

- [OK] All slots covered.

- >>> Send CLUSTER MEET to node 192.168.67.131: to make it join the cluster.

- [OK] New node added correctly.

[root@localhost redis]# redis-cli -c -h 192.168.67.129 -p 6382

192.168.67.129:6382> cluster replicate 129accd00021097ac0c0379b373c1b19de12b75c

OK

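192.168.67.129:6382> exit # then set up the next replica

- This attempt failed with an error saying the node must be empty (a master that is non-empty or has assigned slots cannot be turned into a replica).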

- [root@localhost redis]# redis-cli -c -h 192.168.67.131 -p

- 192.168.67.131:> cluster replicate 429e81bda0d58db69344eb080d6b8d2ed5c3beed

- (error) ERR To set a master the node must be empty and without assigned slots.

- 192.168.67.131:> exit

So the slots of the master (429e81bda0d58db69344eb080d6b8d2ed5c3beed) have to be removed first, that is, moved to other nodes, however many it holds.

[root@localhost ~]# redis-trib.rb reshard 127.0.0.1:6382 # any port works here

>>> Performing Cluster Check (using node 127.0.0.1:6382)

S: 2ee98237ff31b5cad0fbaff9f0faccda50f46707 127.0.0.1:6382

slots: (0 slots) slave

replicates 129accd00021097ac0c0379b373c1b19de12b75c

M: f4a2fb289b66107a046cc308df83ee0ddd59222e 192.168.67.131:6380

slots:0-1987,1989-2729,5461-8191,10923-16383 (10921 slots) master

1 additional replica(s)

M: fed9d4de4467764899959f6a779fbdc1fc5f657d 192.168.67.131:6379

slots:1988 (1 slots) master # move slot 1988 to 131:6380

1 additional replica(s)

M: 429e81bda0d58db69344eb080d6b8d2ed5c3beed 192.168.67.129:6380

slots: (0 slots) master

1 additional replica(s)

M: 129accd00021097ac0c0379b373c1b19de12b75c 192.168.67.131:6381

slots:2730-5460,8192-10922 (5462 slots) master

1 additional replica(s)

S: 6499aa3e2dc11fba6cb88881132c80f17b2e3497 192.168.67.129:6379

slots: (0 slots) slave

replicates fed9d4de4467764899959f6a779fbdc1fc5f657d

S: ad1bae36b72d5db11a505080da5e6ae3b1c56810 192.168.67.131:6382

slots: (0 slots) slave

replicates 429e81bda0d58db69344eb080d6b8d2ed5c3beed

S: 341f7501a60b510b94159ef6667776e17ce3893d 192.168.67.129:6381

slots: (0 slots) slave

replicates f4a2fb289b66107a046cc308df83ee0ddd59222e

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

How many slots do you want to move (from 1 to 16384)? 1

What is the receiving node ID? f4a2fb289b66107a046cc308df83ee0ddd59222e

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1:fed9d4de4467764899959f6a779fbdc1fc5f657d

Source node #2:done

Ready to move 1 slots.

Source nodes:

M: fed9d4de4467764899959f6a779fbdc1fc5f657d 192.168.67.131:6379

slots:1988 (1 slots) master

1 additional replica(s)

Destination node:

M: f4a2fb289b66107a046cc308df83ee0ddd59222e 192.168.67.131:6380

slots:0-1987,1989-2729,5461-8191,10923-16383 (10921 slots) master

1 additional replica(s)

Resharding plan:

Moving slot 1988 from fed9d4de4467764899959f6a779fbdc1fc5f657d

Do you want to proceed with the proposed reshard plan (yes/no)? yes

Error when running cluster replicate

Automatic adjustment with rebalance

- [root@localhost ~]# redis-trib.rb rebalance 192.168.67.129:

- >>> Performing Cluster Check (using node 192.168.67.129:)

- [OK] All nodes agree about slots configuration.

- >>> Check for open slots...

- >>> Check slots coverage...

- [OK] All slots covered.

- >>> Rebalancing across nodes. Total weight =

- Moving slots from 192.168.67.131: to 192.168.67.131:

- ################################################

- Moving slots from 192.168.67.131: to 192.168.67.131:

- #

[root@localhost ~]# redis-trib.rb check 127.0.0.1:6379

>>> Performing Cluster Check (using node 127.0.0.1:6379)

S: 6499aa3e2dc11fba6cb88881132c80f17b2e3497 127.0.0.1:6379

slots: (0 slots) slave

replicates fed9d4de4467764899959f6a779fbdc1fc5f657d

S: 341f7501a60b510b94159ef6667776e17ce3893d 192.168.67.129:6381

slots: (0 slots) slave

replicates f4a2fb289b66107a046cc308df83ee0ddd59222e

M: fed9d4de4467764899959f6a779fbdc1fc5f657d 192.168.67.131:6379

slots:0-2730,5461-8191 (5462 slots) master

1 additional replica(s)

S: 2ee98237ff31b5cad0fbaff9f0faccda50f46707 192.168.67.129:6382

slots: (0 slots) slave

replicates 129accd00021097ac0c0379b373c1b19de12b75c

M: f4a2fb289b66107a046cc308df83ee0ddd59222e 192.168.67.131:6380

slots:10923-16383 (5461 slots) master

1 additional replica(s)

M: 429e81bda0d58db69344eb080d6b8d2ed5c3beed 192.168.67.129:6380

slots: (0 slots) master

1 additional replica(s)

S: ad1bae36b72d5db11a505080da5e6ae3b1c56810 192.168.67.131:6382

slots: (0 slots) slave

replicates 429e81bda0d58db69344eb080d6b8d2ed5c3beed

M: 129accd00021097ac0c0379b373c1b19de12b75c 192.168.67.131:6381

slots:2731-5460,8192-10922 (5461 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

- After rebalance one master (M) still has no slots, so manual adjustment is still needed.

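There are two ways to turn a master into a replica (or a replica into a master). The first is to kill the master. This can lose data, because the failover takes time. From the Redis documentation:

Redis Cluster consistency guarantees

Redis Cluster does not guarantee strong consistency. In practice, this means that under certain conditions Redis Cluster may lose writes that the system has already acknowledged to the client.

The first reason Redis Cluster can lose writes is that it uses asynchronous replication. This means that during a write the following happens:

Your client writes to master B.

Master B replies OK to your client.

Master B propagates the write to its replicas B1, B2, and B3.

Because B replies before its replicas confirm the write, a write that B acknowledged but had not yet propagated is lost if B fails and a replica is promoted.

The second way is the CLUSTER FAILOVER command: log in to the replica and run it, and the replica swaps roles with its master. This method does not lose data.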
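The second method can be wrapped in a one-line helper so the failover is always sent to the replica rather than the master. A minimal sketch; the helper name `promote_replica` and the `REDIS_CLI` override variable are hypothetical.

```shell
#!/bin/bash
# Send CLUSTER FAILOVER to a replica so that it swaps roles with its master.
# The command must be sent to the replica, not to the master.
# promote_replica and REDIS_CLI are hypothetical names for this sketch.
promote_replica() {
    local host=$1 port=$2 cli=${REDIS_CLI:-redis-cli}
    "$cli" -h "$host" -p "$port" cluster failover
}

# Usage (not run here), with a replica from the listing above:
#   promote_replica 192.168.67.131 6382
```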

Listing all Redis keys

192.168.67.131:6379> keys *

1) "lqq"

2) "zzx"

3) "0904"

In redis-cli, use the info Keyspace command to view information about the stored data.

keys * lists all keys held by the node you are connected to. To free memory, delete keys:

Delete a single key: del key
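On a node with many keys, keys * blocks the server while it runs. redis-cli also offers a --scan mode that walks the keyspace incrementally. A minimal sketch of that alternative; the helper name `scan_keys`, the `REDIS_CLI` override variable, and the example pattern are hypothetical.

```shell
#!/bin/bash
# List keys on one node with incremental SCAN instead of KEYS.
# scan_keys and REDIS_CLI are hypothetical names for this sketch.
scan_keys() {
    local host=$1 port=$2 pattern=${3:-*} cli=${REDIS_CLI:-redis-cli}
    "$cli" -h "$host" -p "$port" --scan --pattern "$pattern"
}

# Usage (not run here):
#   scan_keys 192.168.67.131 6379 'z*'
```

Like keys *, this covers only the node it connects to, so in a cluster it has to be repeated for each master.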

Installing Redis 2.8.9

[root@tomcat1 redis-2.8.9]# tar zxf redis-2.8.9.tar.gz

[root@tomcat1 redis-2.8.9]# cd redis-2.8.9

[root@tomcat1 redis-2.8.9]# make

[root@tomcat1 redis-2.8.9]# make install

[root@tomcat1 redis-2.8.9]# cd utils/

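[root@tomcat1 utils]# ./install_server.sh # press Enter at every prompt

[root@tomcat1 redis-2.8.9]# ./redis-server redis.conf # start Redis directly

redis-server: the Redis server daemon

redis-cli: the Redis command-line client. You can also use telnet, since the protocol is plain text

redis-benchmark: the Redis benchmarking tool, which measures read and write performance on your system with your configuration

redis-check-aof: checks the append-only file (AOF) log

The configuration file contents are as follows: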

[root@redis1 ~]# grep -v "^#" redis.conf|grep -v "^$"

[root@redis1 redis-3.2.1]# grep -v "^#" redis.conf|grep -v "^$"

Master

bind 192.168.1.8 127.0.0.1

daemonize yes

port 6379

Replica

bind 192.168.1.9 127.0.0.1

port 6389

daemonize yes

slaveof 192.168.1.8 6379
