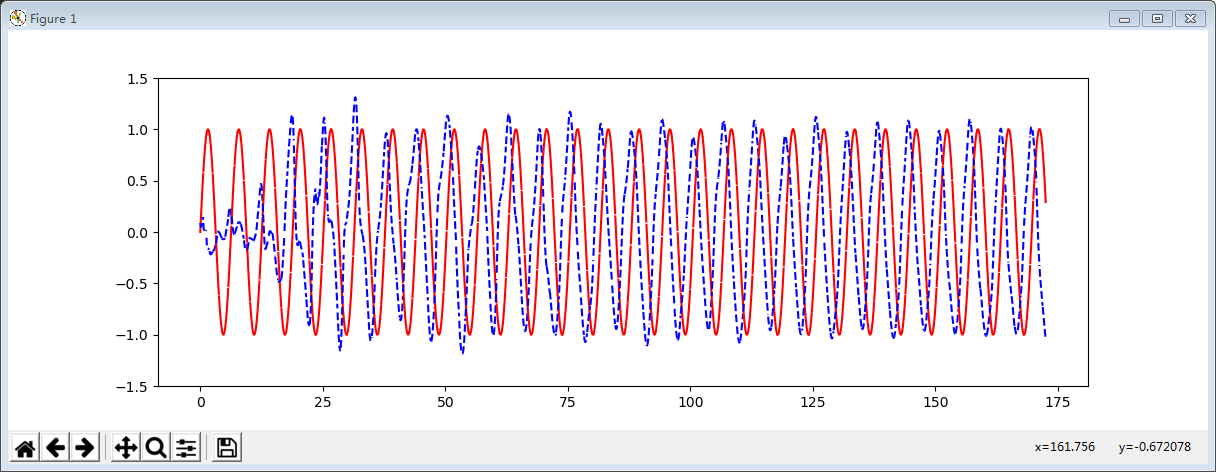

TensorFlow 1.0 LSTM learning curve

```python
import tensorflow as tf
import numpy as np
import matplotlib.pyplot as plt

BATCH_START = 0
TIME_STEPS = 20    # unrolled length of each training sequence
BATCH_SIZE = 20
INPUT_SIZE = 1
OUTPUT_SIZE = 1
CELL_SIZE = 10     # number of LSTM units
LR = 0.0025        # Adam learning rate


def get_batch():
    global BATCH_START, TIME_STEPS
    # xs shape (BATCH_SIZE, TIME_STEPS) = (20 batch, 20 steps)
    xs = np.arange(BATCH_START, BATCH_START + TIME_STEPS * BATCH_SIZE).reshape((BATCH_SIZE, TIME_STEPS)) / (10 * np.pi)
    seq = np.sin(xs)   # input sequence
    res = np.cos(xs)   # target sequence
    BATCH_START += TIME_STEPS
    # plt.plot(xs[0, :], res[0, :], 'r', xs[0, :], seq[0, :], 'b--')
    # plt.show()
    # returned seq and res: shape (batch, step, input); xs: shape (batch, step)
    return [seq[:, :, np.newaxis], res[:, :, np.newaxis], xs]


class LSTMRNN(object):
    def __init__(self, n_steps, input_size, output_size, cell_size, batch_size):
        self.n_steps = n_steps
        self.input_size = input_size
        self.output_size = output_size
        self.cell_size = cell_size
        self.batch_size = batch_size
        with tf.name_scope('inputs'):
            self.xs = tf.placeholder(tf.float32, [None, n_steps, input_size], name='xs')
            self.ys = tf.placeholder(tf.float32, [None, n_steps, output_size], name='ys')
        with tf.variable_scope('in_hidden'):
            self.add_input_layer()
        with tf.variable_scope('LSTM_cell'):
            self.add_cell()
        with tf.variable_scope('out_hidden'):
            self.add_output_layer()
        with tf.name_scope('cost'):
            self.compute_loss()
        with tf.name_scope('train'):
            self.train_op = tf.train.AdamOptimizer(LR).minimize(self.loss)

    def add_input_layer(self):
        l_in_x = tf.reshape(self.xs, [-1, self.input_size], name='2_2D')  # (batch*n_step, in_size)
        # Ws (in_size, cell_size)
        Ws_in = self._weight_variable([self.input_size, self.cell_size])
        # bs (cell_size, )
        bs_in = self._bias_variable([self.cell_size, ])
        # l_in_y = (batch * n_steps, cell_size)
        with tf.name_scope('Wx_plus_b'):
            l_in_y = tf.matmul(l_in_x, Ws_in) + bs_in
        # reshape l_in_y ==> (batch, n_steps, cell_size)
        self.l_in_y = tf.reshape(l_in_y, [-1, self.n_steps, self.cell_size], name='2_3D')

    def add_cell(self):
        lstm_cell = tf.contrib.rnn.BasicLSTMCell(self.cell_size, forget_bias=1.0, state_is_tuple=True)
        with tf.name_scope('initial_state'):
            self.cell_init_state = lstm_cell.zero_state(self.batch_size, dtype=tf.float32)
        self.cell_outputs, self.cell_final_state = tf.nn.dynamic_rnn(
            lstm_cell, self.l_in_y, initial_state=self.cell_init_state, time_major=False)

    def add_output_layer(self):
        # shape = (batch * steps, cell_size)
        l_out_x = tf.reshape(self.cell_outputs, [-1, self.cell_size], name='2_2D')
        Ws_out = self._weight_variable([self.cell_size, self.output_size])
        bs_out = self._bias_variable([self.output_size, ])
        # shape = (batch * steps, output_size)
        with tf.name_scope('Wx_plus_b'):
            self.pred = tf.matmul(l_out_x, Ws_out) + bs_out

    # Unused alternative cost, kept for reference:
    # def compute_cost(self):
    #     losses = tf.contrib.legacy_seq2seq.sequence_loss_by_example(
    #         [tf.reshape(self.pred, [-1], name='reshape_pred')],
    #         [tf.reshape(self.ys, [-1], name='reshape_target')],
    #         [tf.ones([self.batch_size * self.n_steps], dtype=tf.float32)],
    #         average_across_timesteps=True,
    #         softmax_loss_function=self.ms_error,
    #         name='losses'
    #     )
    #     with tf.name_scope('average_cost'):
    #         self.cost = tf.div(
    #             tf.reduce_sum(losses, name='losses_sum'),
    #             self.batch_size,
    #             name='average_cost')

    def compute_loss(self):
        prediction = tf.reshape(self.pred, [-1], name='reshape_pred')
        ys = tf.reshape(self.ys, [-1], name='reshape_target')
        # Both tensors are 1-D, so reduce_sum over axis 0 is already a scalar and
        # reduce_mean leaves it unchanged: this is the sum of squared errors.
        self.loss = tf.reduce_mean(tf.reduce_sum(tf.square(ys - prediction),
                                                 reduction_indices=[0]))

    @staticmethod
    def ms_error(labels, logits):
        # only referenced by the commented-out compute_cost above
        return tf.square(tf.subtract(labels, logits))

    def _weight_variable(self, shape, name='weights'):
        initializer = tf.random_normal_initializer(mean=0., stddev=1.,)
        return tf.get_variable(shape=shape, initializer=initializer, name=name)

    def _bias_variable(self, shape, name='biases'):
        initializer = tf.constant_initializer(0.1)
        return tf.get_variable(name=name, shape=shape, initializer=initializer)


if __name__ == '__main__':
    model = LSTMRNN(TIME_STEPS, INPUT_SIZE, OUTPUT_SIZE, CELL_SIZE, BATCH_SIZE)
    sess = tf.Session()
    # tf.initialize_all_variables() is deprecated and scheduled for removal after
    # 2017-03-02; with TensorFlow >= 0.12 use tf.global_variables_initializer() instead
    if int((tf.__version__).split('.')[1]) < 12 and int((tf.__version__).split('.')[0]) < 1:
        init = tf.initialize_all_variables()
    else:
        init = tf.global_variables_initializer()
    sess.run(init)
    # write the graph to ./logs so TensorBoard has something to display
    writer = tf.summary.FileWriter('logs', sess.graph)
    # then, from this directory, run the command below and open http://0.0.0.0:6006/ in a browser:
    # $ tensorboard --logdir='logs'

    plt.figure(figsize=(12, 4))
    plt.ion()   # interactive mode: redraw without blocking the training loop
    plt.show()

    for i in range(300):
        seq, res, xs = get_batch()
        if i == 0:
            feed_dict = {
                model.xs: seq,
                model.ys: res,
                # no state fed on the first run: the cell starts from zero_state
            }
        else:
            feed_dict = {
                model.xs: seq,
                model.ys: res,
                model.cell_init_state: state,  # use last state as the initial state for this run
            }

        _, cost, state, pred = sess.run(
            [model.train_op, model.loss, model.cell_final_state, model.pred],
            feed_dict=feed_dict)

        # plotting: red = target cos(x), blue dashed = prediction, first sequence of the batch
        plt.plot(xs[0, :], res[0].flatten(), 'r', xs[0, :], pred.flatten()[:TIME_STEPS], 'b--')
        plt.ylim((-1.5, 1.5))
        plt.draw()
        plt.pause(0.1)

        if i % 20 == 0:
            print('loss: ', round(cost, 4))
```
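get_batch() advances BATCH_START by only TIME_STEPS per call, even though each batch spans TIME_STEPS * BATCH_SIZE points. As a result, row b of one batch starts exactly one step after row b of the previous batch ended, which is what makes it valid to feed cell_final_state back in as cell_init_state. A minimal NumPy sketch of that windowing, assuming the listing's sizes; make_xs is a hypothetical helper that repeats get_batch()'s arithmetic without the global counter:

```python
import numpy as np

# Same sizes as the listing.
TIME_STEPS = 20
BATCH_SIZE = 20


def make_xs(batch_start):
    """Hypothetical helper: build xs of shape (BATCH_SIZE, TIME_STEPS) like get_batch()."""
    return np.arange(batch_start, batch_start + TIME_STEPS * BATCH_SIZE).reshape(
        (BATCH_SIZE, TIME_STEPS)) / (10 * np.pi)


prev = make_xs(0)           # first call: BATCH_START = 0
nxt = make_xs(TIME_STEPS)   # second call: BATCH_START advanced by TIME_STEPS

# Each row of the next batch begins one step after the same row of the previous
# batch ended, so row b's final LSTM state is a sensible initial state for row b.
step = 1 / (10 * np.pi)
continues = np.allclose(nxt[:, 0], prev[:, -1] + step)
print(prev.shape, continues)  # -> (20, 20) True
```

A side effect of this scheme is that consecutive batches overlap: rows 1 to 19 of one batch reappear as rows 0 to 18 of the next.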
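add_input_layer() and add_output_layer() apply an ordinary dense layer to a 3-D (batch, n_steps, features) tensor by flattening it to (batch*n_steps, features), doing one tf.matmul, and reshaping back. A minimal NumPy sketch, with arbitrary small sizes chosen only for illustration, checks that this equals applying the same weights at every time step, and that row-major flattening is why pred.flatten()[:TIME_STEPS] selects the first sequence in the batch:

```python
import numpy as np

# Arbitrary small sizes, for illustration only.
batch, n_steps, in_size, cell_size = 3, 4, 2, 5
rs = np.random.RandomState(0)
x = rs.normal(size=(batch, n_steps, in_size))
W = rs.normal(size=(in_size, cell_size))
b = np.full(cell_size, 0.1)  # mirrors the listing's 0.1 bias initializer

# The add_input_layer() pattern: flatten to 2-D, one matmul, reshape back to 3-D.
flat = x.reshape(-1, in_size)                       # (batch*n_steps, in_size)
y = (flat @ W + b).reshape(-1, n_steps, cell_size)  # (batch, n_steps, cell_size)

# Reference: the same dense layer applied explicitly at each (sample, step) pair.
ref = np.einsum('bti,ic->btc', x, W) + b
print(y.shape, np.allclose(y, ref))  # -> (3, 4, 5) True

# Row-major flattening keeps each sample's steps contiguous, sample 0 first.
# self.pred has shape (batch*n_steps, 1), so its first n_steps entries are sample 0.
pred_3d = rs.normal(size=(batch, n_steps, 1))
pred_2d = pred_3d.reshape(-1, 1)
print(np.array_equal(pred_2d.flatten()[:n_steps], pred_3d[0, :, 0]))  # -> True
```

Because the weights are shared across time steps, one large matmul over the flattened tensor is both simpler and faster than looping over steps.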
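compute_loss() reshapes both tensors to 1-D before calling tf.reduce_sum(..., reduction_indices=[0]). Summing a 1-D tensor over axis 0 already produces a scalar, so the outer tf.reduce_mean changes nothing and the printed loss is the sum, not the mean, of the squared errors. A minimal NumPy sketch of the same reduction, using random stand-in data (an assumption; no TensorFlow needed):

```python
import numpy as np

# Random stand-ins for the flattened targets and predictions (an assumption);
# 400 = BATCH_SIZE * TIME_STEPS in the listing.
rs = np.random.RandomState(1)
ys = rs.normal(size=400)
prediction = rs.normal(size=400)

sq = np.square(ys - prediction)
loss_as_written = np.mean(np.sum(sq, axis=0))  # NumPy analogue of compute_loss()

print(np.isclose(loss_as_written, sq.sum()))             # -> True: sum of squared errors
print(np.isclose(loss_as_written / sq.size, sq.mean()))  # -> True: divide by 400 for the MSE
```

If a true mean squared error is wanted, tf.reduce_mean(tf.square(ys - prediction)) computes it directly. Since Adam's step size is largely insensitive to a constant rescaling of the loss, such a change would mainly affect the printed values (by a factor of 400 here) rather than training itself.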
