MLN Discussion: Inference

We consider two types of inference:

- finding the most likely state of the world consistent with some evidence

- computing arbitrary conditional probabilities.

We then discuss two approaches to making inference more tractable on large, relational problems:

- lazy inference, in which only the groundings that deviate from a "default" value need to be instantiated;

- lifted inference, in which we group indistinguishable atoms together and treat them as a single unit during inference.

3.1 Inferring the most probable explanation

A basic inference task is finding the most probable state of the world $y$ given some evidence $x$, where $x$ is a set of literals.

Formula

For Markov logic, this is formally defined as follows: $$ \begin{align} \arg\max_y P(y \mid x) & = \arg\max_y \frac{1}{Z_x} \exp \left( \sum_i w_i n_i(x, y) \right) \notag \\ & = \arg\max_y \sum_i w_i n_i(x, y) \tag{3.1} \end{align} $$

$n_i(x, y)$: the number of true groundings of clause $i$ ;

The problem reduces to finding the truth assignment that maximizes the sum of weights of satisfied clauses;
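To make the reduction concrete, here is a minimal brute-force sketch that enumerates every assignment of the non-evidence atoms and keeps the one with the largest sum of satisfied clause weights. The clause encoding (a weight plus a list of `(atom, sign)` literals), the helper names, and the chosen evidence are assumptions made for illustration; the 0.75995 weights assume the weight 1.519900 of the friendship formula used in the example later in this post is split equally between its two CNF clauses.

```python
from itertools import product

def satisfied_weight(clauses, state):
    """Sum of weights of ground clauses satisfied in `state` (sum_i w_i n_i)."""
    return sum(w for w, clause in clauses
               if any(state[atom] == sign for atom, sign in clause))

def brute_force_map(clauses, query_atoms, evidence):
    """Try all 2^n assignments of the query atoms; only viable for tiny n."""
    best_state, best_score = None, float("-inf")
    for values in product([False, True], repeat=len(query_atoms)):
        state = {**evidence, **dict(zip(query_atoms, values))}
        score = satisfied_weight(clauses, state)
        if score > best_score:
            best_state, best_score = state, score
    return best_state, best_score

# Hypothetical ground clauses over constants A and B; sign=False means negated.
clauses = [
    (0.646696, [("Smokes(A)", False), ("Cancer(A)", True)]),
    (0.646696, [("Smokes(B)", False), ("Cancer(B)", True)]),
    (0.75995, [("Friends(A,B)", False), ("Smokes(A)", False), ("Smokes(B)", True)]),
    (0.75995, [("Friends(A,B)", False), ("Smokes(B)", False), ("Smokes(A)", True)]),
]
evidence = {"Smokes(A)": True, "Friends(A,B)": True}
query_atoms = ["Cancer(A)", "Smokes(B)", "Cancer(B)"]

map_state, map_score = brute_force_map(clauses, query_atoms, evidence)
```

With this evidence, the best assignment sets all three query atoms to true, since that satisfies every clause. The enumeration is exponential in the number of query atoms, which is why a local search such as MaxWalkSAT is used in practice.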

Method

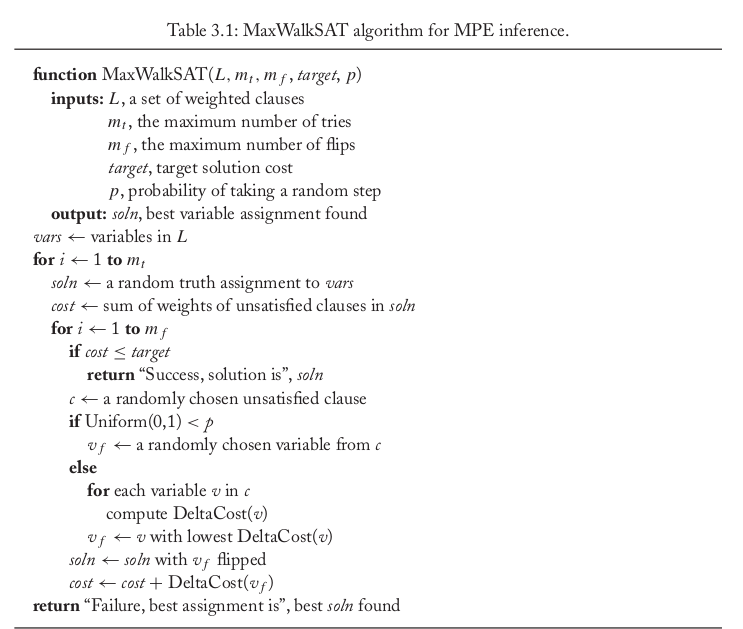

MaxWalkSAT:

MaxWalkSAT repeatedly picks an unsatisfied clause at random and flips the truth value of one of the atoms in it.

With a certain probability, the atom is chosen randomly;

otherwise, the atom is chosen to maximize the sum of satisfied clause weights when flipped.

DeltaCost($v$) computes the change in the sum of weights of unsatisfied clauses that results from flipping variable $v$ in the current solution.

Uniform(0,1) returns a uniform deviate from the interval [0,1].

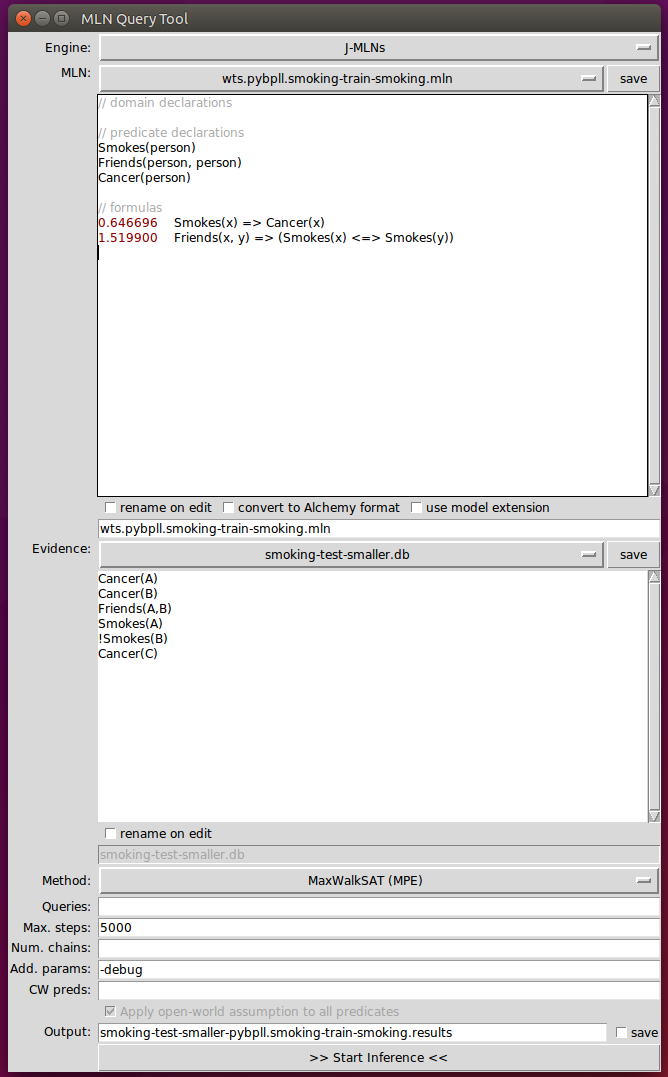
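The procedure described above can be sketched in Python as follows. This is an illustrative sketch, not the reference implementation: the function names, the default parameters, and the clause encoding (a weight plus a list of `(atom, sign)` literals) are assumptions, and it assumes positive weights and a non-negative `target`. Evidence is encoded here as a heavily weighted unit clause, which is also just a convenience for the example.

```python
import random

def is_satisfied(clause, state):
    """A clause is a list of (atom, sign) literals; sign=False means negated."""
    return any(state[atom] == sign for atom, sign in clause)

def unsat_weight(clauses, state):
    """Cost: total weight of the clauses the current assignment violates."""
    return sum(w for w, clause in clauses if not is_satisfied(clause, state))

def delta_cost(clauses, state, atom):
    """Change in cost if `atom` were flipped (negative means improvement).
    Recomputes the full cost for simplicity; real solvers update it incrementally."""
    before = unsat_weight(clauses, state)
    state[atom] = not state[atom]
    after = unsat_weight(clauses, state)
    state[atom] = not state[atom]  # undo the trial flip
    return after - before

def max_walk_sat(clauses, atoms, max_tries=10, max_flips=1000,
                 target=0.0, p=0.5, seed=0):
    rng = random.Random(seed)
    best_state, best_cost = None, float("inf")
    for _ in range(max_tries):
        # Each try starts from a random truth assignment.
        state = {atom: rng.random() < 0.5 for atom in atoms}
        cost = unsat_weight(clauses, state)
        for _ in range(max_flips):
            if cost < best_cost:
                best_state, best_cost = dict(state), cost
            if best_cost <= target:
                return best_state, best_cost
            unsat = [c for w, c in clauses if not is_satisfied(c, state)]
            clause = rng.choice(unsat)
            if rng.random() < p:
                # Random-walk step: flip a random atom of the clause.
                atom = rng.choice(clause)[0]
            else:
                # Greedy step: flip the atom with the lowest DeltaCost.
                atom = min((a for a, _ in clause),
                           key=lambda a: delta_cost(clauses, state, a))
            state[atom] = not state[atom]
            cost = unsat_weight(clauses, state)
        if cost < best_cost:  # account for the state after the last flip
            best_state, best_cost = dict(state), cost
    return best_state, best_cost

clauses = [
    (0.646696, [("Smokes(A)", False), ("Cancer(A)", True)]),
    (2.0, [("Smokes(A)", True)]),  # evidence as a heavy unit clause (assumption)
]
atoms = ["Smokes(A)", "Cancer(A)"]
state, cost = max_walk_sat(clauses, atoms)
```

On this two-atom problem the search reaches the assignment that violates no clause, with both atoms true. Minimizing the weight of unsatisfied clauses is equivalent to maximizing the weight of satisfied ones, because the two always sum to the total clause weight.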

Example

Step 1: Convert the formulas to CNF

0.646696 $\lnot$Smokes(x) $\lor$ Cancer(x)

1.519900 ( $\lnot$Friends(x,y) $\lor$ $\lnot$Smokes(x) $\lor$ Smokes(y)) $\land$ ( $\lnot$Friends(x,y) $\lor$ $\lnot$Smokes(y) $\lor$ Smokes(x))

Clauses:

- $\lnot$Smokes(x) $\lor$ Cancer(x)

- $\lnot$Friends(x,y) $\lor$ $\lnot$Smokes(x) $\lor$ Smokes(y)

- $\lnot$Friends(x,y) $\lor$ $\lnot$Smokes(y) $\lor$ Smokes(x)

Atoms: Smokes(x), Cancer(x), Friends(x,y)

Constants: {A, B, C}

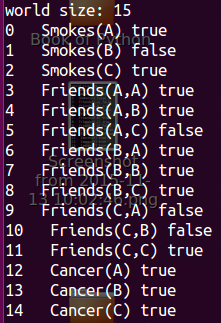

Step 2: Propositionalize the domain

The truth values of evidence atoms are fixed by the evidence;

the truth values of all other atoms are assigned randomly.

$C$: the number of constants; $\alpha(F_i)$: the arity of predicate $F_i$;

world size (number of ground atoms) = $\sum_{i}C^{\alpha(F_i)}$, which grows exponentially with predicate arity!

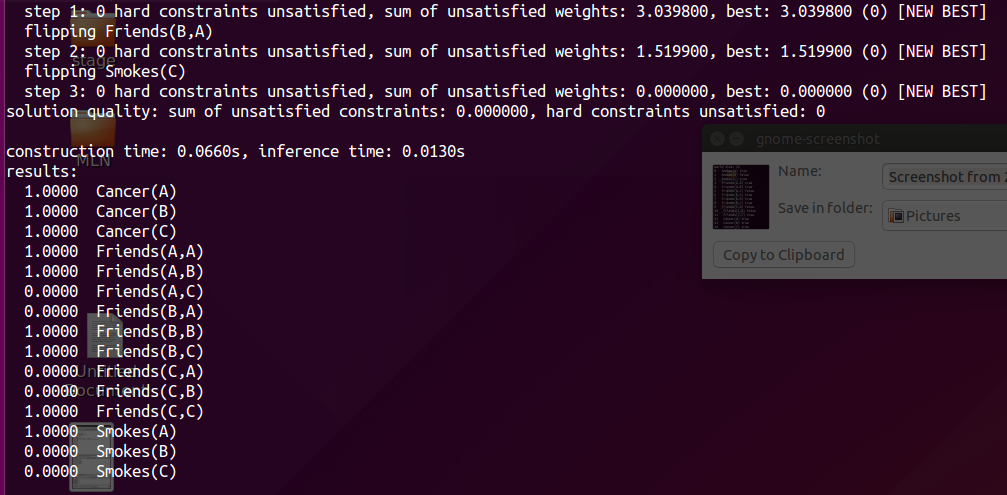
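As a rough illustration of propositionalization, the sketch below grounds the three predicates and the three CNF clauses of the example over the constants {A, B, C}. The data layout and helper names are assumptions made for this sketch, and the 0.75995 weights assume that the weight 1.519900 of the friendship formula is divided equally between its two clauses.

```python
from itertools import product

CONSTANTS = ["A", "B", "C"]
PREDICATES = {"Smokes": 1, "Cancer": 1, "Friends": 2}  # name -> arity

# First-order clauses: (weight, [(predicate, variables, sign)]); sign=False is negation.
CLAUSES = [
    (0.646696, [("Smokes", ("x",), False), ("Cancer", ("x",), True)]),
    (0.75995, [("Friends", ("x", "y"), False), ("Smokes", ("x",), False),
               ("Smokes", ("y",), True)]),
    (0.75995, [("Friends", ("x", "y"), False), ("Smokes", ("y",), False),
               ("Smokes", ("x",), True)]),
]

def ground_atoms(predicates, constants):
    """One ground atom per predicate and per tuple of constants: C^arity each."""
    return [f"{name}({','.join(args)})"
            for name, arity in predicates.items()
            for args in product(constants, repeat=arity)]

def ground_clause(clause, constants):
    """Substitute every combination of constants for the clause's variables."""
    weight, literals = clause
    variables = sorted({v for _, args, _ in literals for v in args})
    grounded = []
    for values in product(constants, repeat=len(variables)):
        binding = dict(zip(variables, values))
        grounded.append((weight, [
            (f"{pred}({','.join(binding[v] for v in args)})", sign)
            for pred, args, sign in literals
        ]))
    return grounded

atoms = ground_atoms(PREDICATES, CONSTANTS)
ground_clauses = [g for c in CLAUSES for g in ground_clause(c, CONSTANTS)]

print(len(atoms))           # 3 + 3 + 9 = 15 ground atoms
print(len(ground_clauses))  # 3 + 9 + 9 = 21 ground clauses
```

With 15 ground atoms there are already $2^{15} = 32768$ possible worlds, which is why exhaustive search is replaced by MaxWalkSAT in the next step.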

Step 3: Run MaxWalkSAT

3.2 Computing conditional probabilities

MLNs can answer arbitrary queries of the form "What is the probability that formula $F_1$ holds given that formula $F_2$ does?". If $F_1$ and $F_2$ are two formulas in first-order logic, $C$ is a finite set of constants including any constants that appear in $F_1$ or $F_2$, and $L$ is an MLN, then $$ \begin{align} P(F_1 \mid F_2, L, C) & = P(F_1 \mid F_2, M_{L,C}) \notag \\ & = \frac{P(F_1 \land F_2 \mid M_{L,C})}{P(F_2 \mid M_{L,C})} \notag \\ & = \frac{\sum_{x \in \chi_{F_1} \cap \chi_{F_2}} P(X=x \mid M_{L,C})}{\sum_{x \in \chi_{F_2}} P(X=x \mid M_{L,C})} \tag{3.2} \end{align} $$

where $\chi_{F_i}$ is the set of worlds where $F_i$ holds, and $M_{L,C}$ is the Markov network defined by $L$ and $C$.
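For a very small ground network, Eq. 3.2 can be evaluated directly by enumerating worlds. The sketch below does that; the function names and the representation of $F_1$ and $F_2$ as Python predicates over a world are assumptions made for illustration. The partition function cancels between numerator and denominator, so only unnormalized weights $\exp(\sum_i w_i n_i)$ are needed.

```python
import math
from itertools import product

def world_weight(clauses, state):
    """Unnormalized probability of a world: exp of the satisfied clause weights."""
    total = sum(w for w, clause in clauses
                if any(state[atom] == sign for atom, sign in clause))
    return math.exp(total)

def conditional_probability(clauses, atoms, f1, f2):
    """P(F1 | F2) by summing over all 2^n worlds; f1 and f2 map a world to bool."""
    numerator = denominator = 0.0
    for values in product([False, True], repeat=len(atoms)):
        state = dict(zip(atoms, values))
        if f2(state):
            weight = world_weight(clauses, state)
            denominator += weight      # worlds where F2 holds
            if f1(state):
                numerator += weight    # worlds where F1 and F2 both hold
    return numerator / denominator

w = 0.646696
clauses = [(w, [("Smokes(A)", False), ("Cancer(A)", True)])]
atoms = ["Smokes(A)", "Cancer(A)"]

p = conditional_probability(
    clauses, atoms,
    f1=lambda s: s["Cancer(A)"],
    f2=lambda s: s["Smokes(A)"],
)
```

In this one-clause case the result has the closed form $e^{w} / (1 + e^{w})$: of the two worlds where Smokes(A) holds, the one with Cancer(A) true satisfies the clause and the other does not.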

Problem

MLN inference subsumes probabilistic inference, which is #P-complete, and logical inference, which is NP-complete;

Method: data preprocessing

We focus on the case where $F_2$ is a conjunction of ground literals, which is the most frequent type in practice.

In this scenario, further efficiency can be gained by applying a generalization of knowledge-based model construction.

The basic idea is to only construct the minimal subset of the ground network required to answer the query.

This network is constructed by checking if the atoms that the query formula directly depends on are in the evidence. If they are, the construction is complete. Those that are not are added to the network, and we in turn check the atoms they depend on. This process is repeated until all relevant atoms have been retrieved.

Markov blanket: in a Markov network, the neighbors of an atom, i.e. the atoms that appear with it in some ground formula (in a Bayesian network: parents + children + children's parents); the network is expanded by BFS.

example
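A minimal sketch of this construction, assuming ground clauses are given simply as lists of atom names and that an atom's Markov blanket is the set of atoms sharing a ground clause with it. The function name and data layout are hypothetical. Expansion proceeds breadth-first from the query atoms and stops at evidence atoms.

```python
from collections import deque

def build_network(query_atoms, evidence_atoms, ground_clauses):
    """Return the set of ground atoms needed to answer the query."""
    # Markov blanket of each atom: all atoms it co-occurs with in a ground clause.
    neighbors = {}
    for clause in ground_clauses:
        for atom in clause:
            neighbors.setdefault(atom, set()).update(a for a in clause if a != atom)

    network = set(query_atoms)
    frontier = deque(query_atoms)
    while frontier:
        atom = frontier.popleft()
        if atom in evidence_atoms:
            continue  # evidence atoms are kept but not expanded further
        for neighbor in neighbors.get(atom, ()):
            if neighbor not in network:
                network.add(neighbor)
                frontier.append(neighbor)
    return network

ground_clauses = [
    ["Smokes(A)", "Cancer(A)"],
    ["Smokes(B)", "Cancer(B)"],
    ["Smokes(C)", "Cancer(C)"],
    ["Friends(A,B)", "Smokes(A)", "Smokes(B)"],
]

# Smokes(A) is observed, so nothing beyond it is needed to answer Cancer(A).
small = build_network(["Cancer(A)"], {"Smokes(A)"}, ground_clauses)

# Only Friends(A,B) is observed, so the query now also depends on B's atoms.
larger = build_network(["Cancer(A)"], {"Friends(A,B)"}, ground_clauses)
```

In the first call the network contains only Cancer(A) and Smokes(A). In the second it grows to include Friends(A,B), Smokes(B), and Cancer(B), while the atoms about C are never instantiated.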

Once the network has been constructed, we can apply any standard inference technique for Markov networks, such as Gibbs sampling;
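As an illustration of the Gibbs step, the sketch below resamples each non-evidence atom from its conditional distribution given the rest of the state and estimates marginals from the visited states. Names and defaults are assumptions made for this sketch. For brevity it rescores the whole network on each step, whereas a practical implementation would only look at the clauses containing the atom being resampled.

```python
import math
import random

def satisfied_weight(clauses, state):
    """Sum of weights of the ground clauses satisfied in `state`."""
    return sum(w for w, clause in clauses
               if any(state[atom] == sign for atom, sign in clause))

def gibbs_marginals(clauses, query_atoms, evidence,
                    n_samples=20000, burn_in=500, seed=0):
    rng = random.Random(seed)
    state = dict(evidence)
    for atom in query_atoms:
        state[atom] = rng.random() < 0.5  # random initial assignment
    counts = {atom: 0 for atom in query_atoms}
    for step in range(burn_in + n_samples):
        for atom in query_atoms:
            state[atom] = True
            w_true = satisfied_weight(clauses, state)
            state[atom] = False
            w_false = satisfied_weight(clauses, state)
            # P(atom = True | all other atoms) is a logistic function
            # of the weight difference between the two settings.
            p_true = 1.0 / (1.0 + math.exp(w_false - w_true))
            state[atom] = rng.random() < p_true
        if step >= burn_in:
            for atom in query_atoms:
                counts[atom] += state[atom]
    return {atom: count / n_samples for atom, count in counts.items()}

w = 0.646696
clauses = [(w, [("Smokes(A)", False), ("Cancer(A)", True)])]
marginals = gibbs_marginals(clauses, ["Cancer(A)"], {"Smokes(A)": True})
```

For this single clause the estimate should be close to the exact value $e^{w} / (1 + e^{w})$, up to sampling noise.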

Problem

One problem with using Gibbs sampling for inference in MLNs is that it breaks down in the presence of deterministic or near-deterministic dependencies. Deterministic dependencies break up the space of possible worlds into regions that are not reachable from each other, violating a basic requirement of MCMC. Near-deterministic dependencies greatly slow down inference by creating regions of low probability that are very difficult to traverse.

Method

MC-SAT is a slice-sampling MCMC algorithm that uses a combination of satisfiability testing and simulated annealing to sample from the slice. The advantage of using a satisfiability solver (WalkSAT) is that it efficiently finds isolated modes in the distribution, and as a result the Markov chain mixes very rapidly.

Slice sampling is an instance of a widely used approach in MCMC inference that introduces auxiliary variables, $u$, to capture the dependencies between observed variables, $x$. For example, to sample from $P(X=x) = \frac{1}{Z} \prod_k \phi_k(x_{\{k\}})$, we can define $P(X=x, U=u) = \frac{1}{Z} \prod_k I_{[0, \; \phi_k(x_{\{k\}})]}(u_k)$.
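A short worked step, added here as a check rather than taken from the original notes, shows why this joint distribution is a valid choice. Integrating out each $u_k$ recovers the target distribution, because the indicator $I_{[0, \phi_k]}(u_k)$ integrates to $\phi_k$: $$ \begin{align} \int P(X=x, U=u) \, du & = \frac{1}{Z} \prod_k \int_0^{\infty} I_{[0, \; \phi_k(x_{\{k\}})]}(u_k) \, du_k \notag \\ & = \frac{1}{Z} \prod_k \phi_k(x_{\{k\}}) = P(X=x) \notag \end{align} $$

The two conditionals are then easy to describe: given $x$, each $u_k$ is uniform on $[0, \phi_k(x_{\{k\}})]$; given $u$, $x$ is uniform over the "slice" of states satisfying $\phi_k(x_{\{k\}}) \ge u_k$ for all $k$. Alternating between the two yields samples whose $x$ component follows $P(X=x)$.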
