【NLP】Conditional Language Modeling with Attention

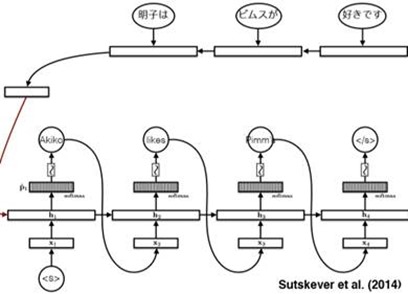

Review: Conditional LMs

Note that, in the Encoder part, we reverse the input to the ‘RNN’ and it performs well.

And we use the Decoder network(also a RNN), and use the ‘beam search’ algorithm to generate the target statement word by word.

The above network is a translation model.But it still needs to optimizer.

A very essential part of the model is the [Attention mechanism].

Conditional LMs with Attention

First: talk about the [condition]

In last blog, we compress a lot of information in a finite-sized vector and use it as the condition. That is to say, in the ‘Decoder’, for each input we use this vector as the condition to predict the next word.

But is it really correct?

An obvious thing is that a finite-sized vector cannot contain all the information since the input sentence could have a very one length. And gradients have a long way to travl so even LSTMs could forget!

In Translation Question, we can solve the problem by this:

Represent a source sentence as a matrix whose size can be changeable.

Then Generate a target sentence from the matrix. (As the condition and the condition is transformed form that matrix)

So how does this do?

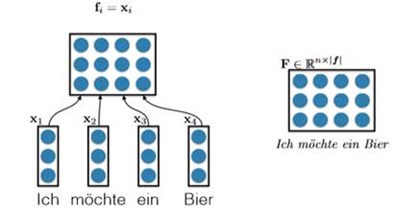

The very simpal way to fulfill that is [With Concatenation].

We have already known that the words can be represented by ‘embedding’ such as Word2Vec. And all the embeddings have the same size. For a sentence composed by n words, we can just put each word’s embedding together. So the matrix size is |vocabulary size|*n, which n is the length of sentence. That’s a really easy solution but it is useful. E.g.

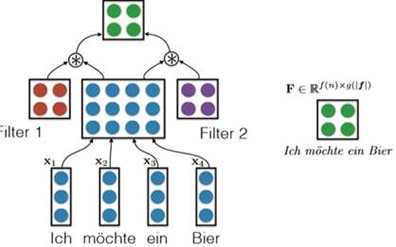

Another solution proposed by Gehring et al. (2016,FAIR) is [With Convolutional Nets].

It is to say, we use all embedding of the word from the sentence to form the concatenation matrix (just like the above method), and then we use a CNN to handle this matrix using some filters. And final we also generate a new matrix to represent the information. And in my opinion, this is a bit like extracting advanced features from image processing. E.g.

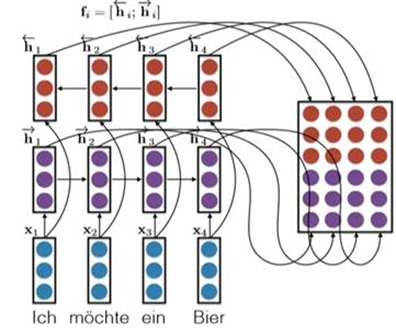

The most important method is [using the Bidirectional RNNs].

For one side, we use a RNN to handle the embedding, and we get n hidden layers which n is the length of the word.

For another side, we use another RNN to handle the embedding, but we reverse the input and finally we also get n hidden layers.

We put the 2n hidden layers together to generate the conditional matrix. E.g.

There are some other ways needed to be founded.

So next to the important part: how to use the ‘Attention model’ and use the attention to generate the condition vector form the condition matrix F.

Firstly, considering the decoder RNN:

We have a ‘start hidden layer’ and then generate the next hidden layer using the input x and we still need a conditional vector.

Suppose we also had an attention vector a. We can generate the condition vector by doing this:

c = Fa. Where F is the matrix and a is the attention vector. This can be understood as weighting the conditional matrix so that we can pay more attention to the contents of a certain sentence.

E.g.

So How to generate the Attention Vector?

That is, how do we compute a.

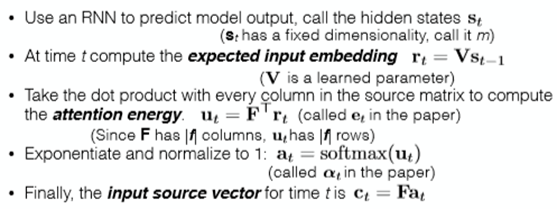

We can do by the following method:

For the time t, we know the hidden layer Ht-1, and we do linear transformation to it to generate a vector r. ( r = VHt-1) V is the learned parameter. Then we take dot product with every column in the source matrix to compute the attention energy a. ( a = F.T*r). So we generate the attention vector a by using a softmax to Exponentiate and normalize it to 1.

That is a simplified version of Bahdanau et al.’s solution. Summary of it:

|

|

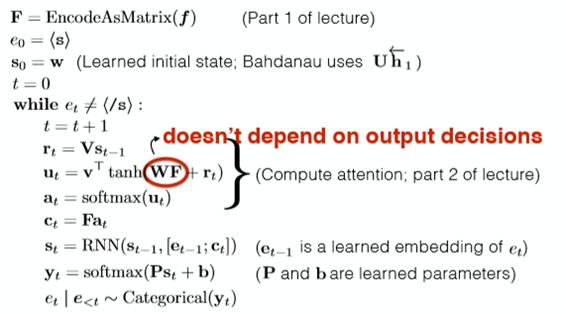

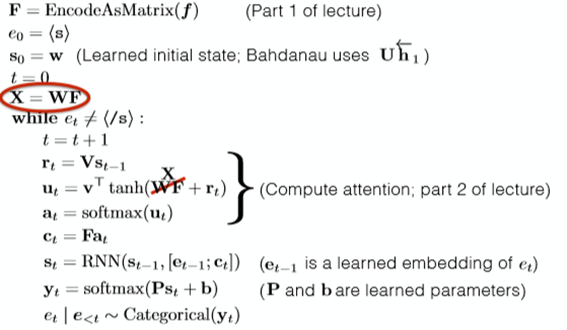

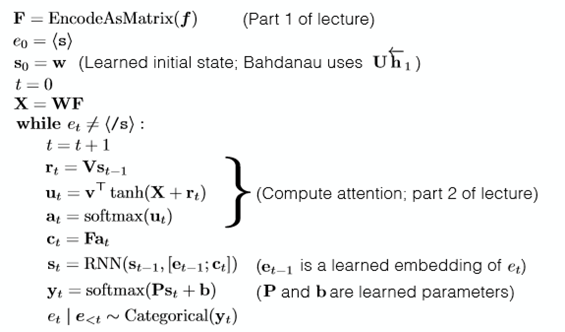

Another complex way to generate the attention vector is to use the [Nonlinear Attention-Energy Model].

Getting the r above, ( r = VHt-1) we generate a by: a = v.T * tanh(WF + r). Where v W and V is the learned parameter. How useful of the r is not to verify.

Summary

We put it all together and this is called the conditional LM with attention.

|

|

|

|

|

|

Attention in machine translation.

Add attention to seq2seq model translation: +11 BLEU.

An improvement in computing:

Note the difference form the above model. But whether it is useful is not sure.

About Gradients

We use the Gradient Descent.

Comprehension

Cho’s question: does a translator read and memorize the input sentence/document and then generate the output?

• Compressing the entire input sentence into a vector basically says “memorize the sentence”

• Common sense experience says translators refer back and forth to the input. (also backed up by eyetracking studies)

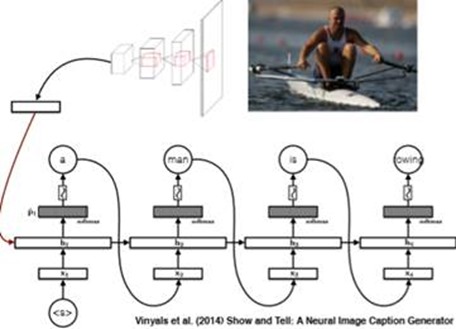

Image caption generation with attention: brief introduction

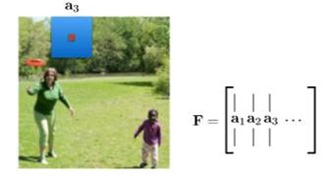

The main idea is that: we encode the picture to a matrix F and use it generate some attention and finally use the attention to generate the caption.

Generate matrix F:

Attention “weights” (a) are computed using exactly the same technique as discussed above.

Other techinques: Stochastic hard attention(sampling matrix F idea and not like the weighting matrix F idea). Learning Hard Attention. To be honesty, I don't know much about this.

【NLP】Conditional Language Modeling with Attention的更多相关文章

- 【NLP】Conditional Language Models

Language Model estimates the probs that the sequences of words can be a sentence said by a human. Tr ...

- 【NLP】Tika 文本预处理:抽取各种格式文件内容

Tika常见格式文件抽取内容并做预处理 作者 白宁超 2016年3月30日18:57:08 摘要:本文主要针对自然语言处理(NLP)过程中,重要基础部分抽取文本内容的预处理.首先我们要意识到预处理的重 ...

- [转]【NLP】干货!Python NLTK结合stanford NLP工具包进行文本处理 阅读目录

[NLP]干货!Python NLTK结合stanford NLP工具包进行文本处理 原贴: https://www.cnblogs.com/baiboy/p/nltk1.html 阅读目录 目 ...

- 【NLP】前戏:一起走进条件随机场(一)

前戏:一起走进条件随机场 作者:白宁超 2016年8月2日13:59:46 [摘要]:条件随机场用于序列标注,数据分割等自然语言处理中,表现出很好的效果.在中文分词.中文人名识别和歧义消解等任务中都有 ...

- 【NLP】基于自然语言处理角度谈谈CRF(二)

基于自然语言处理角度谈谈CRF 作者:白宁超 2016年8月2日21:25:35 [摘要]:条件随机场用于序列标注,数据分割等自然语言处理中,表现出很好的效果.在中文分词.中文人名识别和歧义消解等任务 ...

- 【NLP】基于机器学习角度谈谈CRF(三)

基于机器学习角度谈谈CRF 作者:白宁超 2016年8月3日08:39:14 [摘要]:条件随机场用于序列标注,数据分割等自然语言处理中,表现出很好的效果.在中文分词.中文人名识别和歧义消解等任务中都 ...

- 【NLP】基于统计学习方法角度谈谈CRF(四)

基于统计学习方法角度谈谈CRF 作者:白宁超 2016年8月2日13:59:46 [摘要]:条件随机场用于序列标注,数据分割等自然语言处理中,表现出很好的效果.在中文分词.中文人名识别和歧义消解等任务 ...

- 【NLP】条件随机场知识扩展延伸(五)

条件随机场知识扩展延伸 作者:白宁超 2016年8月3日19:47:55 [摘要]:条件随机场用于序列标注,数据分割等自然语言处理中,表现出很好的效果.在中文分词.中文人名识别和歧义消解等任务中都有应 ...

- 【NLP】Attention Model(注意力模型)学习总结

最近一直在研究深度语义匹配算法,搭建了个模型,跑起来效果并不是很理想,在分析原因的过程中,发现注意力模型在解决这个问题上还是很有帮助的,所以花了两天研究了一下. 此文大部分参考深度学习中的注意力机制( ...

随机推荐

- (二)阿里云ECS Linux服务器外网无法连接MySQL解决方法(报错2003- Can't connect MySQL Server on 'x.x.x.x'(10038))(自己亲身遇到的问题是防火墙的问题已经解决)

我的服务器买的是阿里云ECS linux系统.为了更好的操作数据库,我希望可以用navicat for mysql管理我的数据库. 当我按照正常的模式去链接mysql的时候, 报错提示: - Can' ...

- vue2.5.2版本 :MAC设置应用在127.0.0.1:80端口访问; 并将127.0.0.1指向www.yours.com ;问题“ Invalid Host header”

0.设置自己的host文件,将127.0.0.1指向自己想要访问的域名 127.0.0.1 www.yours.com 1.MAC设置应用在127.0.0.1:80端口访问: config/index ...

- charles抓包出现乱码 SSL Proxying not enabled for this host:enable in Proxy Setting,SSL locations

1.情景:抓包的域名下 全部是unknown,右侧出现了乱码 2.查看unknown的notes里面:SSL Proxying not enabled for this host:enable in ...

- H5的语义化标签(PS: 后续继续补充)

头部信息 <header></header> 区块标签 <figure> <figcaption>123</figcaption> < ...

- 阿里巴巴AI Lab成立两年,都做了些什么?

https://mp.weixin.qq.com/s/trkCGvpW6aCgnFwLxrGmvQ 撰稿 & 整理|Debra 编辑|Debra 导读:在 2018 云栖人工智能峰会上,阿里巴 ...

- arcgis api 3.x for js 入门开发系列十二地图打印GP服务(附源码下载)

前言 关于本篇功能实现用到的 api 涉及类看不懂的,请参照 esri 官网的 arcgis api 3.x for js:esri 官网 api,里面详细的介绍 arcgis api 3.x 各个类 ...

- React Native基础&入门教程:调试React Native应用的一小步

React Native(以下简称RN)为传统前端开发者打开了一扇新的大门.其中,使用浏览器的调试工具去Debug移动端的代码,无疑是最吸引开发人员的特性之一. 试想一下,当你在手机屏幕按下一个按钮, ...

- ASP.NET MVC从空项目开始定制项目

在上一篇net core的文章中已经讲过如何从零开始搭建WebSocket. 今天聊聊ASP.NET的文件结构,如何用自己的目录结构组织项目里的文件. 如果用Visual Studio(VS)向导或d ...

- JHipster技术栈定制 - JHipster Registry配置信息加密

本文说明了如何开启和使用JHipster-Registry的加解密功能. 1 整体规划 1.1 名词说明 名词 说明 备注 对称加密 最快速.最简单的一种加密方式,加密(encryption)与解密( ...

- C#ComboBox绑定List

ComboBox绑定List时可能会错, public class Person { public string Name; public int Age; public int Heigth; } ...