[Spark] 00 - Install Hadoop & Spark

Installing Hadoop

Environment Setup - Single Node Cluster

1. JDK Configuration

Ref: How to install hadoop 2.7.3 single node cluster on ubuntu 16.04

Ubuntu 18 + Hadoop 2.7.3 + Java 8

- $ sudo apt-get update

- $ sudo apt-get install openjdk-8-jdk

- $ java -version

If the version is wrong, switch to another installed JDK:

- $ sudo update-alternatives --config java
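Java 8 reports its version in the legacy `1.8.0_xxx` form (the NameNode log later in this post shows `java = 1.8.0_222`), while Java 9 and later print the major number first (e.g. `11.0.4`). If you script the setup, a minimal Python sketch can extract the major version so you can confirm Java 8 is active. The function name is hypothetical, and it relies on `java -version` writing its banner to stderr:

```python
import re
import subprocess


def java_major_version(text: str) -> int:
    """Major Java version from '1.8.0_222', '11.0.4' or a full `java -version` line."""
    quoted = re.search(r'version "([^"]+)"', text)
    version = quoted.group(1) if quoted else text.strip()
    match = re.match(r"(\d+)(?:\.(\d+))?", version)
    if match is None:
        raise ValueError(f"unrecognised Java version: {text!r}")
    first = int(match.group(1))
    # Java 8 and earlier report "1.<major>..."; Java 9+ start with the major number.
    if first == 1 and match.group(2) is not None:
        return int(match.group(2))
    return first


if __name__ == "__main__":
    try:
        # `java -version` writes its banner to stderr, not stdout.
        banner = subprocess.run(["java", "-version"], capture_output=True, text=True).stderr
        print("Java major version:", java_major_version(banner))
    except (OSError, ValueError) as exc:
        print("could not determine the Java version:", exc)
```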

2. SSH Configuration

Now we are logged in as ‘hduser’.

- $ ssh-keygen -t rsa

- NOTE: Press Enter at each prompt to keep the default file name and an empty passphrase.

- $ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

- $ chmod 600 ~/.ssh/authorized_keys

- $ ssh localhost
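With its default `StrictModes yes`, OpenSSH's sshd refuses to use an `authorized_keys` file that group or others can write, and silently falls back to password login. A small Python sketch of that permission check, assuming the key file sits at the default `~/.ssh/authorized_keys` (ownership is not checked here):

```python
import os
import stat
from pathlib import Path


def mode_is_safe(mode: int) -> bool:
    """True if neither the group nor others may write the file."""
    return (mode & (stat.S_IWGRP | stat.S_IWOTH)) == 0


def authorized_keys_ok(path: Path) -> bool:
    """Check only the permission bits of `path`."""
    return mode_is_safe(os.stat(path).st_mode)


if __name__ == "__main__":
    key_file = Path.home() / ".ssh" / "authorized_keys"
    if not key_file.exists():
        print(key_file, "does not exist yet")
    elif authorized_keys_ok(key_file):
        print(key_file, "permissions look fine")
    else:
        print(key_file, "is writable by group/others; try: chmod 600", key_file)
```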

3. Install Hadoop

This essentially moves the whole unpacked distribution to a new location under /usr/local/:

- $ wget http://www-us.apache.org/dist/hadoop/common/hadoop-2.7.3/hadoop-2.7.3.tar.gz

- $ tar xvzf hadoop-2.7.3.tar.gz

- $ sudo mkdir -p /usr/local/hadoop

- $ cd hadoop-2.7.3/

- $ sudo mv * /usr/local/hadoop

- $ sudo chown -R hduser:hadoop /usr/local/hadoop

4. Configure Hadoop

4.1 ~/.bashrc

4.2 hadoop-env.sh

4.3 core-site.xml

4.4 mapred-site.xml

4.5 hdfs-site.xml

4.6 yarn-site.xml

4.1 ~/.bashrc

- #HADOOP VARIABLES START

- export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

- export HADOOP_HOME=/usr/local/hadoop

- export PATH=$PATH:$HADOOP_HOME/bin

- export PATH=$PATH:$HADOOP_HOME/sbin

- export HADOOP_MAPRED_HOME=$HADOOP_HOME

- export HADOOP_COMMON_HOME=$HADOOP_HOME

- export HADOOP_HDFS_HOME=$HADOOP_HOME

- export YARN_HOME=$HADOOP_HOME

- export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

- export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib/native"

- #HADOOP VARIABLES END
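Once this block is in `~/.bashrc` and the shell has reloaded it (for example with `source ~/.bashrc`), `$HADOOP_HOME/bin` and `$HADOOP_HOME/sbin` should be on `PATH`; otherwise commands such as `hadoop` and `start-all.sh` are not found. A small Python check of an environment mapping; the function name is hypothetical:

```python
import os
from typing import List, Mapping


def hadoop_env_problems(env: Mapping[str, str]) -> List[str]:
    """List what is missing from `env` for the ~/.bashrc setup above."""
    problems = [f"{name} is not set" for name in ("JAVA_HOME", "HADOOP_HOME") if not env.get(name)]
    home = env.get("HADOOP_HOME")
    if home:
        path_entries = env.get("PATH", "").split(os.pathsep)
        for sub in ("bin", "sbin"):
            wanted = os.path.join(home, sub)
            if wanted not in path_entries:
                problems.append(f"{wanted} is not on PATH")
    return problems


if __name__ == "__main__":
    for problem in hadoop_env_problems(os.environ) or ["environment looks fine"]:
        print(problem)
```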

4.2 Set the Java environment

Set it in /usr/local/hadoop/etc/hadoop/hadoop-env.sh:

- export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64
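Editing `hadoop-env.sh` by hand works fine; if you script the setup instead, a minimal Python sketch can replace the first active `export JAVA_HOME=` line, or append one when none exists. The function name is hypothetical; commented-out lines are left untouched:

```python
import re

# An active (not commented-out) JAVA_HOME export on its own line.
JAVA_HOME_LINE = re.compile(r"^[ \t]*export[ \t]+JAVA_HOME=.*$", re.MULTILINE)


def set_java_home(env_sh: str, java_home: str) -> str:
    """Return hadoop-env.sh content whose first active JAVA_HOME export points at java_home."""
    new_line = f"export JAVA_HOME={java_home}"
    if JAVA_HOME_LINE.search(env_sh):
        # A function replacement avoids backslash escapes in java_home being interpreted.
        return JAVA_HOME_LINE.sub(lambda _m: new_line, env_sh, count=1)
    separator = "" if not env_sh or env_sh.endswith("\n") else "\n"
    return f"{env_sh}{separator}{new_line}\n"
```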

4.3 Configure core-site

Set the following in /usr/local/hadoop/etc/hadoop/core-site.xml:

- <configuration>

- <property>

- <name>hadoop.tmp.dir</name>

- <value>/app/hadoop/tmp</value>

- <description>A base for other temporary directories.</description>

- </property>

- <property>

- <name>fs.default.name</name>

- <value>hdfs://localhost:54310</value>

- <description>The name of the default file system. A URI whose scheme and authority determine the FileSystem implementation. The uri's scheme determines the config property (fs.SCHEME.impl) naming the FileSystem implementation class. The uri's authority is used to determine the host, port, etc. for a filesystem.</description>

- </property>

- </configuration>
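All of the `*-site.xml` files share one layout (`<configuration>` containing `<property>` elements with `<name>` and `<value>`), so they can be generated instead of typed by hand. A minimal sketch with Python's standard `xml.etree.ElementTree`; the helper name is hypothetical, and the values are the single-node core-site settings above:

```python
import xml.etree.ElementTree as ET
from typing import Dict


def hadoop_config_xml(properties: Dict[str, str]) -> str:
    """Build a Hadoop-style <configuration> document from name -> value pairs."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    if hasattr(ET, "indent"):  # ElementTree.indent exists on Python 3.9+
        ET.indent(root)
    return '<?xml version="1.0"?>\n' + ET.tostring(root, encoding="unicode") + "\n"


if __name__ == "__main__":
    print(hadoop_config_xml({
        "hadoop.tmp.dir": "/app/hadoop/tmp",
        "fs.default.name": "hdfs://localhost:54310",
    }))
```

Writing the result over an existing site file discards any hand edits, so keep a copy of the original first.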

Reference: https://blog.csdn.net/Mr_LeeHY/article/details/77049800

- <configuration>

- <!--Address of the NameNode-->

- <property>

- <name>fs.defaultFS</name>

- <value>hdfs://master:9000</value>

- </property>

- <!--Directory for the files Hadoop generates while running-->

- <property>

- <name>hadoop.tmp.dir</name>

- <value>file:///usr/hadoop/hadoop-2.6.0/tmp</value>

- </property>

- <!--Maximum interval between checkpoints, in seconds-->

- <property>

- <name>fs.checkpoint.period</name>

- <value>3600</value>

- </property>

- </configuration>
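The two core-site variants use different key names: `fs.default.name` is the older name of `fs.defaultFS`, and `fs.checkpoint.period` was superseded by `dfs.namenode.checkpoint.period`. Hadoop still maps the old names but logs deprecation warnings. A small Python sketch that reads a site file and flags such keys; the mapping is a hand-picked subset for illustration, not Hadoop's full deprecation table, and only `<property>` elements directly under `<configuration>` are read:

```python
import xml.etree.ElementTree as ET
from typing import Dict

# Old key -> current key (illustrative subset only).
DEPRECATED_KEYS = {
    "fs.default.name": "fs.defaultFS",
    "fs.checkpoint.period": "dfs.namenode.checkpoint.period",
    "dfs.name.dir": "dfs.namenode.name.dir",
    "dfs.data.dir": "dfs.datanode.data.dir",
}


def read_site_xml(xml_text: str) -> Dict[str, str]:
    """Return name -> value for every <property> directly under <configuration>."""
    root = ET.fromstring(xml_text)
    conf = {}
    for prop in root.findall("property"):
        name = (prop.findtext("name") or "").strip()
        if name:
            conf[name] = (prop.findtext("value") or "").strip()
    return conf


def deprecated_keys(conf: Dict[str, str]) -> Dict[str, str]:
    """Map each deprecated key found in conf to the name that replaced it."""
    return {key: DEPRECATED_KEYS[key] for key in conf if key in DEPRECATED_KEYS}
```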

Accordingly, create the directory set in hadoop.tmp.dir (/app/hadoop/tmp above) to hold these files:

- $ sudo mkdir -p /app/hadoop/tmp

- $ sudo chown hduser:hadoop /app/hadoop/tmp

4.4 Configure mapred-site

Configure it in /usr/local/hadoop/etc/hadoop/mapred-site.xml.

A template is already provided in the same directory; copy it first:

- $ cp /usr/local/hadoop/etc/hadoop/mapred-site.xml.template /usr/local/hadoop/etc/hadoop/mapred-site.xml

Tell Hadoop to run MR (MapReduce) jobs on YARN from now on:

- <configuration>

- <property>

- <name>mapred.job.tracker</name>

- <value>localhost:54311</value>

- <description>The host and port that the MapReduce job tracker runs at. If "local", then jobs are run in-process as a single map and reduce task.</description>

- </property>

- <property>

- <name>mapreduce.framework.name</name>

- <value>yarn</value>

- </property>

- </configuration>

4.5 Configure hdfs-site

Configure it in /usr/local/hadoop/etc/hadoop/hdfs-site.xml.

- <configuration>

- <property>

- <name>dfs.replication</name>

- <value>1</value>

- <description>Default block replication. The actual number of replications can be specified when the file is created. The default is used if replication is not specified in create time.

- </description>

- </property>

- <property>

- <name>dfs.namenode.name.dir</name>

- <value>file:/usr/local/hadoop_store/hdfs/namenode</value>

- </property>

- <property>

- <name>dfs.datanode.data.dir</name>

- <value>file:/usr/local/hadoop_store/hdfs/datanode</value>

- </property>

- </configuration>
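hdfs-site.xml gives the storage directories as `file:` URIs, whereas `hadoop.tmp.dir` in core-site.xml is a bare path; the NameNode format log in the distributed section shows Hadoop assuming the `file` scheme for a bare path. If you want to pre-create these directories with the right owner, a small hypothetical Python helper can normalise either form to a local path:

```python
from urllib.parse import urlparse


def local_dir(uri_or_path: str) -> str:
    """Turn 'file:/x', 'file:///x' or a bare '/x' into the local path '/x'."""
    parsed = urlparse(uri_or_path)
    if parsed.scheme not in ("", "file"):
        raise ValueError(f"not a local path: {uri_or_path!r}")
    return parsed.path


if __name__ == "__main__":
    for value in ("file:/usr/local/hadoop_store/hdfs/namenode",
                  "file:/usr/local/hadoop_store/hdfs/datanode",
                  "/app/hadoop/tmp"):
        print(local_dir(value))
```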

Reference: https://blog.csdn.net/Mr_LeeHY/article/details/77049800

- <configuration>

- <!--Number of replicas HDFS keeps of each block-->

- <property>

- <name>dfs.replication</name>

- <value>2</value>

- </property>

- <!--Where the NameNode stores its data-->

- <property>

- <name>dfs.namenode.name.dir</name>

- <value>file:/usr/hadoop/hadoop-2.6.0/tmp/dfs/name</value>

- </property>

- <!--Where the DataNode stores its data-->

- <property>

- <name>dfs.datanode.data.dir</name>

- <value>file:/usr/hadoop/hadoop-2.6.0/tmp/dfs/data</value>

- </property>

- </configuration>
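This reference configuration sets `dfs.replication` to 2, while the single-node setup above uses 1. HDFS places each replica of a block on a different DataNode, so a replication factor larger than the number of live DataNodes leaves blocks under-replicated. A tiny Python sketch of that rule (hypothetical helpers, not a Hadoop API):

```python
def achievable_replicas(dfs_replication: int, live_datanodes: int) -> int:
    """Replicas HDFS can actually place: at most one per live DataNode."""
    if dfs_replication < 1 or live_datanodes < 0:
        raise ValueError("replication must be >= 1 and the DataNode count >= 0")
    return min(dfs_replication, live_datanodes)


def is_under_replicated(dfs_replication: int, live_datanodes: int) -> bool:
    return achievable_replicas(dfs_replication, live_datanodes) < dfs_replication


if __name__ == "__main__":
    # (replication, live DataNodes): single node, reference value on one node, two nodes.
    for replication, nodes in ((1, 1), (2, 1), (2, 2)):
        print(replication, nodes, is_under_replicated(replication, nodes))
```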

4.6 Configure yarn-site

Configure it in /usr/local/hadoop/etc/hadoop/yarn-site.xml.

- <configuration>

- <property>

- <name>yarn.nodemanager.aux-services</name>

- <value>mapreduce_shuffle</value>

- </property>

- </configuration>

Reference: https://blog.csdn.net/Mr_LeeHY/article/details/77049800

- <configuration>

- <!--The NodeManager fetches data via shuffle-->

- <property>

- <name>yarn.nodemanager.aux-services</name>

- <value>mapreduce_shuffle</value>

- </property>

- <!--Address of YARN's master (the ResourceManager)-->

- <property>

- <name>yarn.resourcemanager.hostname</name>

- <value>master</value>

- </property>

- <!--Aggregate YARN job logs-->

- <property>

- <name>yarn.log-aggregation-enable</name>

- <value>true</value>

- </property>

- </configuration>
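Hadoop parses every `*-site.xml` at startup, and a malformed file (for instance a `<configuration>` tag opened a second time instead of being closed) typically stops the daemons from loading their configuration. A minimal Python check with the standard XML parser can catch such typos beforehand; the helper names are hypothetical and the default directory is the one used in this post:

```python
import sys
import xml.etree.ElementTree as ET
from pathlib import Path
from typing import Dict, Optional


def xml_error(xml_text: str) -> Optional[str]:
    """Return the parser's error message, or None if the document is well-formed."""
    try:
        ET.fromstring(xml_text)
    except ET.ParseError as exc:
        return str(exc)
    return None


def check_site_files(conf_dir: Path) -> Dict[str, str]:
    """Map each broken *-site.xml in conf_dir to its parse error."""
    errors = {}
    for path in sorted(conf_dir.glob("*-site.xml")):
        message = xml_error(path.read_text(encoding="utf-8"))
        if message is not None:
            errors[path.name] = message
    return errors


if __name__ == "__main__":
    conf_dir = Path(sys.argv[1] if len(sys.argv) > 1 else "/usr/local/hadoop/etc/hadoop")
    for name, message in check_site_files(conf_dir).items():
        print(f"{name}: {message}")
```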

5. Format the file system and start the Hadoop daemons

- $ hadoop namenode -format

- $ cd /usr/local/hadoop/sbin

- $ start-all.sh

Check which daemons have started:

- hadoop@ThinkPad:~$ jps

- ResourceManager

- SecondaryNameNode

- Jps

- NodeManager

- NameNode
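`jps` prints one `<pid> <class name>` line per JVM. A single-node HDFS + YARN setup normally runs NameNode, DataNode, SecondaryNameNode, ResourceManager and NodeManager, so comparing against that list shows at a glance that the output above has no DataNode. A Python sketch; the expected set and function names are assumptions:

```python
import subprocess

EXPECTED_DAEMONS = {"NameNode", "DataNode", "SecondaryNameNode",
                    "ResourceManager", "NodeManager"}


def running_daemons(jps_output: str) -> set:
    """Collect the class names from `jps` lines such as '4242 NameNode'."""
    names = set()
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) >= 2:
            names.add(parts[1])
    return names


def missing_daemons(jps_output: str, expected=EXPECTED_DAEMONS) -> set:
    return set(expected) - running_daemons(jps_output)


if __name__ == "__main__":
    try:
        output = subprocess.run(["jps"], capture_output=True, text=True, check=True).stdout
    except (OSError, subprocess.CalledProcessError) as exc:
        print("could not run jps:", exc)
    else:
        print("missing:", sorted(missing_daemons(output)) or "none")
```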

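6. Hadoop Test Example

The DataNode must be running: the jps output above does not list one, and on this single-node cluster it is the only DataNode.

If it is missing, delete the DataNode's data directory, reformat, restart, and then run the test.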

The test runs a jar, i.e. a MapReduce program written against the Java API.
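A common reason for the DataNode not starting at this point is that formatting the NameNode gives it a new clusterID, while the old DataNode directory still records the previous one in `current/VERSION`; deleting `datanode/current` lets the DataNode register again. A hedged Python sketch that compares the two VERSION files, assuming the storage directories from the single-node hdfs-site.xml above:

```python
from pathlib import Path
from typing import Dict, Optional

# Directories from the single-node hdfs-site.xml in this post (adjust as needed).
NAMENODE_DIR = Path("/usr/local/hadoop_store/hdfs/namenode")
DATANODE_DIR = Path("/usr/local/hadoop_store/hdfs/datanode")


def parse_version_file(text: str) -> Dict[str, str]:
    """Parse the key=value lines of an HDFS current/VERSION file, skipping comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#") and "=" in line:
            key, value = line.split("=", 1)
            props[key.strip()] = value.strip()
    return props


def cluster_id(storage_dir: Path) -> Optional[str]:
    version = storage_dir / "current" / "VERSION"
    if not version.exists():
        return None
    return parse_version_file(version.read_text()).get("clusterID")


if __name__ == "__main__":
    nn, dn = cluster_id(NAMENODE_DIR), cluster_id(DATANODE_DIR)
    print("NameNode clusterID:", nn)
    print("DataNode clusterID:", dn)
    if nn and dn and nn != dn:
        print("Mismatch: the DataNode is likely to fail with incompatible clusterIDs.")
```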

- $ sudo rm -r /usr/local/hadoop_store/hdfs/datanode/current

- $ hadoop namenode -format

- $ start-all.sh

- $ jps

- $ hadoop jar ./share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar pi 2 5

- hadoop@unsw-ThinkPad-T490:/usr/local/hadoop$ hadoop jar ./share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar pi 2 5

- Number of Maps = 2

- Samples per Map = 5

- // :: WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

- Wrote input for Map #0

- Wrote input for Map #1

- Starting Job

- // :: INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:

- // :: INFO input.FileInputFormat: Total input paths to process :

- // :: INFO mapreduce.JobSubmitter: number of splits:

- // :: INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1571615960327_0001

- // :: INFO impl.YarnClientImpl: Submitted application application_1571615960327_0001

- // :: INFO mapreduce.Job: The url to track the job: http://unsw-ThinkPad-T490:8088/proxy/application_1571615960327_0001/

- // :: INFO mapreduce.Job: Running job: job_1571615960327_0001

- // :: INFO mapreduce.Job: Job job_1571615960327_0001 running in uber mode : false

- // :: INFO mapreduce.Job: map % reduce %

- // :: INFO mapreduce.Job: map % reduce %

- // :: INFO mapreduce.Job: map % reduce %

- // :: INFO mapreduce.Job: Job job_1571615960327_0001 completed successfully

- // :: INFO mapreduce.Job: Counters:

- File System Counters

- FILE: Number of bytes read=

- FILE: Number of bytes written=

- FILE: Number of read operations=

- FILE: Number of large read operations=

- FILE: Number of write operations=

- HDFS: Number of bytes read=

- HDFS: Number of bytes written=

- HDFS: Number of read operations=

- HDFS: Number of large read operations=

- HDFS: Number of write operations=

- Job Counters

- Launched map tasks=

- Launched reduce tasks=

- Data-local map tasks=

- Total time spent by all maps in occupied slots (ms)=

- Total time spent by all reduces in occupied slots (ms)=

- Total time spent by all map tasks (ms)=

- Total time spent by all reduce tasks (ms)=

- Total vcore-milliseconds taken by all map tasks=

- Total vcore-milliseconds taken by all reduce tasks=

- Total megabyte-milliseconds taken by all map tasks=

- Total megabyte-milliseconds taken by all reduce tasks=

- Map-Reduce Framework

- Map input records=

- Map output records=

- Map output bytes=

- Map output materialized bytes=

- Input split bytes=

- Combine input records=

- Combine output records=

- Reduce input groups=

- Reduce shuffle bytes=

- Reduce input records=

- Reduce output records=

- Spilled Records=

- Shuffled Maps =

- Failed Shuffles=

- Merged Map outputs=

- GC time elapsed (ms)=

- CPU time spent (ms)=

- Physical memory (bytes) snapshot=

- Virtual memory (bytes) snapshot=

- Total committed heap usage (bytes)=

- Shuffle Errors

- BAD_ID=

- CONNECTION=

- IO_ERROR=

- WRONG_LENGTH=

- WRONG_MAP=

- WRONG_REDUCE=

- File Input Format Counters

- Bytes Read=

- File Output Format Counters

- Bytes Written=

- Job Finished in 15.972 seconds

- Estimated value of Pi is 3.60000000000000000000
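The examples jar describes `pi` as a quasi-Monte Carlo estimator: it places Halton-sequence points in the unit square and counts how many fall inside the inscribed circle, so π ≈ 4 × inside / total. The Python sketch below re-implements that idea under two assumptions about the example's internals: base-2/base-3 Halton points, and map *m* using consecutive indices starting at m × samples + 1. Under those assumptions 9 of the 2 × 5 = 10 points land inside, which gives the 3.6 printed above; with so few samples the estimate is coarse.

```python
import math


def radical_inverse(index: int, base: int) -> float:
    """Mirror the base-`base` digits of `index` around the radix point (van der Corput)."""
    result, scale = 0.0, 1.0 / base
    while index > 0:
        index, digit = divmod(index, base)
        result += digit * scale
        scale /= base
    return result


def estimate_pi(num_maps: int, samples_per_map: int) -> float:
    """Quasi-Monte Carlo estimate of pi from num_maps * samples_per_map Halton points."""
    inside = 0
    for m in range(num_maps):
        # Assumption: each "map" continues the sequence where the previous one stopped.
        start = m * samples_per_map
        for i in range(1, samples_per_map + 1):
            x = radical_inverse(start + i, 2) - 0.5
            y = radical_inverse(start + i, 3) - 0.5
            if x * x + y * y <= 0.25:  # inside the circle of radius 0.5
                inside += 1
    return 4.0 * inside / (num_maps * samples_per_map)


if __name__ == "__main__":
    print(estimate_pi(2, 5))  # same arguments as `pi 2 5`
    print(estimate_pi(10, 1000), "vs", math.pi)
```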

Log

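Distributed Configuration

1. Preface

Pseudo-distributed configuration

Installation course: installation and configuration [Xiamen University course videos]

Configuration manual: Hadoop Installation Tutorial_Standalone/Pseudo-distributed Configuration_Hadoop2.6.0/Ubuntu14.04 [Xiamen University course notes]

"Step-by-step" environment setup: Mastering Big Data Analysis! Spark2.X+Python Essentials Hands-on Course (free) [really just the environment setup]

/* Skipped; the fully distributed setup is what matters here */

Fully distributed configuration

Local virtual-machine experiment: 1.3 Advanced VirtualBox: building a local big-data cluster server

Three separate cloud machines: Complete process of installing and configuring fully distributed Hadoop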

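2. Virtual Machines

(1) After setting up VirtualBox (Secure Boot must be disabled), configure the IP addresses, preferably static ones.

Go to: 1.3 Advanced VirtualBox: building a local big-data cluster server [server setup only; Hadoop is not configured there]

Only this part needs changing: give each slave a different IP address.

- # In /etc/network/interfaces:

- # Added host-only static IP setting (the comment lines can be ignored). enp0s8 is the interface name derived from the hardware topology (the old naming scheme used eth0, eth1).

- # Run `ls /sys/class/net` to check whether the interface is really named enp0s8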

- auto enp0s8

- iface enp0s8 inet static

- address 192.168.56.106

- netmask 255.255.255.0
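Since each slave differs only in its `address` line, the stanza can be generated per host. A hedged Python sketch using the standard `ipaddress` module; the host names and the consecutive-address plan are illustrative assumptions (only the 192.168.56.x host-only range and the interface name come from this post):

```python
import ipaddress
from typing import Dict, List


def interfaces_stanza(iface: str, address: str, network: str) -> str:
    """Render one static-IP stanza for /etc/network/interfaces."""
    net = ipaddress.ip_network(network)
    ip = ipaddress.ip_address(address)
    if ip not in net:
        raise ValueError(f"{ip} is not inside {net}")
    return (f"auto {iface}\n"
            f"iface {iface} inet static\n"
            f"    address {ip}\n"
            f"    netmask {net.netmask}\n")


def assign_addresses(hosts: List[str], first: str, network: str) -> Dict[str, str]:
    """Give each host its own consecutive address, starting at `first`."""
    net = ipaddress.ip_network(network)
    start = ipaddress.ip_address(first)
    plan = {}
    for offset, host in enumerate(hosts):
        ip = start + offset
        if ip not in net or ip in (net.network_address, net.broadcast_address):
            raise ValueError(f"{ip} is not a usable host address in {net}")
        plan[host] = str(ip)
    return plan


if __name__ == "__main__":
    # Hypothetical host names; the first address matches the example above.
    network = "192.168.56.0/24"
    for host, ip in assign_addresses(["worker1", "worker2"], "192.168.56.106", network).items():
        print(f"# {host}")
        print(interfaces_stanza("enp0s8", ip, network))
```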

(2) Then install the tools:

- # Install the network tools

- sudo apt install net-tools

- # Show the local network interfaces

- ifconfig

(3) Verify by logging in to a slave machine via SSH:

- $ ssh -p 22 hadoop@192.168.56.101

- The authenticity of host '192.168.56.101 (192.168.56.101)' can't be established.

- ECDSA key fingerprint is SHA256:IPf76acROSwMC7BQO3hBAThLZCovARuoty765MfTps0.

- Are you sure you want to continue connecting (yes/no)? yes

- Warning: Permanently added '192.168.56.101' (ECDSA) to the list of known hosts.

- hadoop@192.168.56.101's password:

- hadoop@node2-VirtualBox:~$ hostname

- node2-VirtualBox

- hadoop@node2-VirtualBox:~$ sudo hostname worker1-VirtualBox

- [sudo] password for hadoop:

- hadoop@node2-VirtualBox:~$ hostname

- worker1-VirtualBox

(4) To change the hostname permanently, note that two files must be edited.

- Type the following command to edit /etc/hostname using the nano or vi text editor:

- sudo nano /etc/hostname

- Delete the old name and set up the new one.

- Next, edit the /etc/hosts file:

- sudo nano /etc/hosts

- Replace any occurrence of the existing computer name with your new one.

- Reboot the system for the changes to take effect:

- sudo reboot
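The `/etc/hosts` step ("replace any occurrence of the existing computer name") is easy to get wrong with a blind search-and-replace, because one host name can be a prefix of another. A minimal Python sketch that only replaces whole, whitespace-separated host-name fields; the sample names come from the session above:

```python
import re


def rename_host(hosts_text: str, old: str, new: str) -> str:
    """Replace `old` with `new` wherever it appears as a whole host-name field."""
    # Only whitespace (or line start/end) counts as a field boundary, so that e.g.
    # 'node2-VirtualBox2' is left alone when renaming 'node2-VirtualBox'.
    pattern = re.compile(r"(?<!\S)" + re.escape(old) + r"(?!\S)")
    return pattern.sub(lambda _m: new, hosts_text)


if __name__ == "__main__":
    sample = "127.0.0.1\tlocalhost\n127.0.1.1\tnode2-VirtualBox\n"
    print(rename_host(sample, "node2-VirtualBox", "worker1-VirtualBox"), end="")
```

Try it on a copy of `/etc/hosts` first; writing the real file requires root.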

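(5) Turn off X Window

Ref: 2.2 Hadoop3.1.0 fully distributed cluster configuration and deployment

Uninstalling X Window: Remove packages to transform Desktop to Server?

The memory ratio is roughly 140M : 900M.

If you only stop X Window instead of uninstalling it, not much changes: it stays in memory and merely becomes inactive.

- Press Ctrl+Alt+F1 to switch to a text console.

- Run sudo /etc/init.d/lightdm stop

- sudo /etc/init.d/lightdm status

- To restart the X server, run sudo /etc/init.d/lightdm restart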

(6) Passwordless SSH login to the slaves

Let the master log in to the workers without a password, for example:

- ssh-copy-id -i ~/.ssh/id_rsa.pub master

- ssh-copy-id -i ~/.ssh/id_rsa.pub worker1

- ssh-copy-id -i ~/.ssh/id_rsa.pub worker2

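(7) Configuring the cluster with Docker

3. Configuring the Distributed Slave Hosts

Before we start

You can edit the configuration files locally first and then copy Hadoop to the cluster servers.

The single-node-cluster approach above had some problems and its configuration was too tedious, so here everything is configured again from scratch.

Ref: Part 1: How To install a 3-Node Hadoop Cluster on Ubuntu 16

Ref: How to Install and Set Up a 3-Node Hadoop Cluster [solid configuration that works]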

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$ hdfs namenode -format

- 2019-10-24 16:10:48,131 INFO namenode.NameNode: STARTUP_MSG:

- /************************************************************

- STARTUP_MSG: Starting NameNode

- STARTUP_MSG: host = node-master/192.168.56.2

- STARTUP_MSG: args = [-format]

- STARTUP_MSG: version = 3.1.2

- STARTUP_MSG: classpath = /usr/local/hadoop/etc/hadoop:/usr/local/hadoop/share/hadoop/common/lib/asm-5.0.4.jar:[... remaining jar paths under /usr/local/hadoop/share/hadoop/{common,hdfs,mapreduce,yarn} omitted ...]

- STARTUP_MSG: build = https://github.com/apache/hadoop.git -r 1019dde65bcf12e05ef48ac71e84550d589e5d9a; compiled by 'sunilg' on 2019-01-29T01:39Z

- STARTUP_MSG: java = 1.8.0_222

- ************************************************************/

- 2019-10-24 16:10:48,148 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

- 2019-10-24 16:10:48,243 INFO namenode.NameNode: createNameNode [-format]

- 2019-10-24 16:10:48,354 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

- 2019-10-24 16:10:48,607 INFO common.Util: Assuming 'file' scheme for path /usr/local/hadoop/data/nameNode in configuration.

- 2019-10-24 16:10:48,607 INFO common.Util: Assuming 'file' scheme for path /usr/local/hadoop/data/nameNode in configuration.

- Formatting using clusterid: CID-cadb861e-e62d-42e6-b62b-f834bbf05bca

- 2019-10-24 16:10:48,637 INFO namenode.FSEditLog: Edit logging is async:true

- 2019-10-24 16:10:48,653 INFO namenode.FSNamesystem: KeyProvider: null

- 2019-10-24 16:10:48,654 INFO namenode.FSNamesystem: fsLock is fair: true

- 2019-10-24 16:10:48,657 INFO namenode.FSNamesystem: Detailed lock hold time metrics enabled: false

- 2019-10-24 16:10:48,666 INFO namenode.FSNamesystem: fsOwner = hadoop (auth:SIMPLE)

- 2019-10-24 16:10:48,666 INFO namenode.FSNamesystem: supergroup = supergroup

- 2019-10-24 16:10:48,667 INFO namenode.FSNamesystem: isPermissionEnabled = true

- 2019-10-24 16:10:48,667 INFO namenode.FSNamesystem: HA Enabled: false

- 2019-10-24 16:10:48,708 INFO common.Util: dfs.datanode.fileio.profiling.sampling.percentage set to 0. Disabling file IO profiling

- 2019-10-24 16:10:48,717 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit: configured=1000, counted=60, effected=1000

- 2019-10-24 16:10:48,718 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

- 2019-10-24 16:10:48,721 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000

- 2019-10-24 16:10:48,721 INFO blockmanagement.BlockManager: The block deletion will start around 2019 Oct 24 16:10:48

- 2019-10-24 16:10:48,723 INFO util.GSet: Computing capacity for map BlocksMap

- 2019-10-24 16:10:48,723 INFO util.GSet: VM type = 64-bit

- 2019-10-24 16:10:48,725 INFO util.GSet: 2.0% max memory 443 MB = 8.9 MB

- 2019-10-24 16:10:48,725 INFO util.GSet: capacity = 2^20 = 1048576 entries

- 2019-10-24 16:10:48,731 INFO blockmanagement.BlockManager: dfs.block.access.token.enable = false

- 2019-10-24 16:10:48,736 INFO Configuration.deprecation: No unit for dfs.namenode.safemode.extension(30000) assuming MILLISECONDS

- 2019-10-24 16:10:48,737 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

- 2019-10-24 16:10:48,737 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.min.datanodes = 0

- 2019-10-24 16:10:48,737 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.extension = 30000

- 2019-10-24 16:10:48,737 INFO blockmanagement.BlockManager: defaultReplication = 1

- 2019-10-24 16:10:48,737 INFO blockmanagement.BlockManager: maxReplication = 512

- 2019-10-24 16:10:48,738 INFO blockmanagement.BlockManager: minReplication = 1

- 2019-10-24 16:10:48,738 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

- 2019-10-24 16:10:48,738 INFO blockmanagement.BlockManager: redundancyRecheckInterval = 3000ms

- 2019-10-24 16:10:48,738 INFO blockmanagement.BlockManager: encryptDataTransfer = false

- 2019-10-24 16:10:48,738 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000

- 2019-10-24 16:10:48,756 INFO namenode.FSDirectory: GLOBAL serial map: bits=24 maxEntries=16777215

- 2019-10-24 16:10:48,767 INFO util.GSet: Computing capacity for map INodeMap

- 2019-10-24 16:10:48,767 INFO util.GSet: VM type = 64-bit

- 2019-10-24 16:10:48,768 INFO util.GSet: 1.0% max memory 443 MB = 4.4 MB

- 2019-10-24 16:10:48,768 INFO util.GSet: capacity = 2^19 = 524288 entries

- 2019-10-24 16:10:48,768 INFO namenode.FSDirectory: ACLs enabled? false

- 2019-10-24 16:10:48,769 INFO namenode.FSDirectory: POSIX ACL inheritance enabled? true

- 2019-10-24 16:10:48,769 INFO namenode.FSDirectory: XAttrs enabled? true

- 2019-10-24 16:10:48,769 INFO namenode.NameNode: Caching file names occurring more than 10 times

- 2019-10-24 16:10:48,773 INFO snapshot.SnapshotManager: Loaded config captureOpenFiles: false, skipCaptureAccessTimeOnlyChange: false, snapshotDiffAllowSnapRootDescendant: true, maxSnapshotLimit: 65536

- 2019-10-24 16:10:48,775 INFO snapshot.SnapshotManager: SkipList is disabled

- 2019-10-24 16:10:48,778 INFO util.GSet: Computing capacity for map cachedBlocks

- 2019-10-24 16:10:48,778 INFO util.GSet: VM type = 64-bit

- 2019-10-24 16:10:48,779 INFO util.GSet: 0.25% max memory 443 MB = 1.1 MB

- 2019-10-24 16:10:48,779 INFO util.GSet: capacity = 2^17 = 131072 entries

- 2019-10-24 16:10:48,784 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10

- 2019-10-24 16:10:48,784 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10

- 2019-10-24 16:10:48,784 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25

- 2019-10-24 16:10:48,787 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

- 2019-10-24 16:10:48,787 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

- 2019-10-24 16:10:48,789 INFO util.GSet: Computing capacity for map NameNodeRetryCache

- 2019-10-24 16:10:48,789 INFO util.GSet: VM type = 64-bit

- 2019-10-24 16:10:48,789 INFO util.GSet: 0.029999999329447746% max memory 443 MB = 136.1 KB

- 2019-10-24 16:10:48,789 INFO util.GSet: capacity = 2^14 = 16384 entries

- 2019-10-24 16:10:48,814 INFO namenode.FSImage: Allocated new BlockPoolId: BP-1068893594-192.168.56.2-1571893848809

- 2019-10-24 16:10:48,832 INFO common.Storage: Storage directory /usr/local/hadoop/data/nameNode has been successfully formatted.

- 2019-10-24 16:10:48,838 INFO namenode.FSImageFormatProtobuf: Saving image file /usr/local/hadoop/data/nameNode/current/fsimage.ckpt_0000000000000000000 using no compression

- 2019-10-24 16:10:48,910 INFO namenode.FSImageFormatProtobuf: Image file /usr/local/hadoop/data/nameNode/current/fsimage.ckpt_0000000000000000000 of size 393 bytes saved in 0 seconds .

- 2019-10-24 16:10:48,918 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

- 2019-10-24 16:10:48,924 INFO namenode.NameNode: SHUTDOWN_MSG:

- /************************************************************

- SHUTDOWN_MSG: Shutting down NameNode at node-master/192.168.56.2

- ************************************************************/

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$ jps

- 2128 Jps

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$ start-dfs.sh

- Starting namenodes on [node-master]

- Starting datanodes

- Starting secondary namenodes [node-master]

- 2019-10-24 16:11:40,842 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$ jps

- 2739 Jps

- 2342 NameNode

- 2617 SecondaryNameNode

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$ stop-dfs.sh

- Stopping namenodes on [node-master]

- Stopping datanodes

- Stopping secondary namenodes [node-master]

- 2019-10-24 16:12:18,740 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$ hdfs dfsadmin -report

- 2019-10-24 16:12:41,062 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

- report: Call From node-master/192.168.56.2 to node-master:9000 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$ start-dfs.sh

- Starting namenodes on [node-master]

- Starting datanodes

- Starting secondary namenodes [node-master]

- 2019-10-24 16:13:16,921 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$ hdfs dfsadmin -report

- 2019-10-24 16:13:21,162 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

- Configured Capacity: 20014161920 (18.64 GB)

- Present Capacity: 2642968576 (2.46 GB)

- DFS Remaining: 2642919424 (2.46 GB)

- DFS Used: 49152 (48 KB)

- DFS Used%: 0.00%

- Replicated Blocks:

- Under replicated blocks: 0

- Blocks with corrupt replicas: 0

- Missing blocks: 0

- Missing blocks (with replication factor 1): 0

- Low redundancy blocks with highest priority to recover: 0

- Pending deletion blocks: 0

- Erasure Coded Block Groups:

- Low redundancy block groups: 0

- Block groups with corrupt internal blocks: 0

- Missing block groups: 0

- Low redundancy blocks with highest priority to recover: 0

- Pending deletion blocks: 0

- -------------------------------------------------

- Live datanodes (2):

- Name: 192.168.56.101:9866 (node1)

- Hostname: node1

- Decommission Status : Normal

- Configured Capacity: 10007080960 (9.32 GB)

- DFS Used: 24576 (24 KB)

- Non DFS Used: 7705030656 (7.18 GB)

- DFS Remaining: 1773494272 (1.65 GB)

- DFS Used%: 0.00%

- DFS Remaining%: 17.72%

- Configured Cache Capacity: 0 (0 B)

- Cache Used: 0 (0 B)

- Cache Remaining: 0 (0 B)

- Cache Used%: 100.00%

- Cache Remaining%: 0.00%

- Xceivers: 1

- Last contact: Thu Oct 24 16:13:18 AEDT 2019

- Last Block Report: Thu Oct 24 16:13:12 AEDT 2019

- Num of Blocks: 0

- Name: 192.168.56.102:9866 (node2)

- Hostname: node2

- Decommission Status : Normal

- Configured Capacity: 10007080960 (9.32 GB)

- DFS Used: 24576 (24 KB)

- Non DFS Used: 8609099776 (8.02 GB)

- DFS Remaining: 869425152 (829.15 MB)

- DFS Used%: 0.00%

- DFS Remaining%: 8.69%

- Configured Cache Capacity: 0 (0 B)

- Cache Used: 0 (0 B)

- Cache Remaining: 0 (0 B)

- Cache Used%: 100.00%

- Cache Remaining%: 0.00%

- Xceivers: 1

- Last contact: Thu Oct 24 16:13:18 AEDT 2019

- Last Block Report: Thu Oct 24 16:13:12 AEDT 2019

- Num of Blocks: 0

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$ jps

- 3795 SecondaryNameNode

- 3983 Jps

- 3519 NameNode

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$ hdfs dfs -mkdir -p /user/hadoop

- 2019-10-24 16:16:17,898 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$ hdfs dfs -mkdir books

- 2019-10-24 16:16:24,789 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$

- hadoop@node-master:/usr/local/hadoop/etc/hadoop$ cd /home/hadoop

- hadoop@node-master:~$ wget -O alice.txt https://www.gutenberg.org/files/11/11-0.txt

- --2019-10-24 16:16:42-- https://www.gutenberg.org/files/11/11-0.txt

- Resolving www.gutenberg.org (www.gutenberg.org)... 152.19.134.47, 2610:28:3090:3000:0:bad:cafe:47

- Connecting to www.gutenberg.org (www.gutenberg.org)|152.19.134.47|:443... connected.

- HTTP request sent, awaiting response... 200 OK

- Length: 173595 (170K) [text/plain]

- Saving to: ‘alice.txt’

- alice.txt 100%[=============================================================================================================>] 169.53K 51.4KB/s in 3.3s

- 2019-10-24 16:16:51 (51.4 KB/s) - ‘alice.txt’ saved [173595/173595]

- hadoop@node-master:~$

- hadoop@node-master:~$

- hadoop@node-master:~$ wget -O holmes.txt https://www.gutenberg.org/files/1661/1661-0.txt

- --2019-10-24 16:16:56-- https://www.gutenberg.org/files/1661/1661-0.txt

- Resolving www.gutenberg.org (www.gutenberg.org)... 152.19.134.47, 2610:28:3090:3000:0:bad:cafe:47

- Connecting to www.gutenberg.org (www.gutenberg.org)|152.19.134.47|:443... connected.

- HTTP request sent, awaiting response... 200 OK

- Length: 607788 (594K) [text/plain]

- Saving to: ‘holmes.txt’

- holmes.txt 100%[=============================================================================================================>] 593.54K 138KB/s in 4.3s

- 2019-10-24 16:17:03 (138 KB/s) - ‘holmes.txt’ saved [607788/607788]

- hadoop@node-master:~$

- hadoop@node-master:~$

- hadoop@node-master:~$ wget -O frankenstein.txt https://www.gutenberg.org/files/84/84-0.txt

- --2019-10-24 16:17:07-- https://www.gutenberg.org/files/84/84-0.txt

- Resolving www.gutenberg.org (www.gutenberg.org)... 152.19.134.47, 2610:28:3090:3000:0:bad:cafe:47

- Connecting to www.gutenberg.org (www.gutenberg.org)|152.19.134.47|:443... connected.

- HTTP request sent, awaiting response... 200 OK

- Length: 450783 (440K) [text/plain]

- Saving to: ‘frankenstein.txt’

- frankenstein.txt 100%[=============================================================================================================>] 440.22K 124KB/s in 3.6s

- 2019-10-24 16:17:14 (124 KB/s) - ‘frankenstein.txt’ saved [450783/450783]

- hadoop@node-master:~$

- hadoop@node-master:~$

- hadoop@node-master:~$ hdfs dfs -put alice.txt holmes.txt frankenstein.txt books

- 2019-10-24 16:17:21,244 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

- hadoop@node-master:~$

- hadoop@node-master:~$

- hadoop@node-master:~$ hdfs dfs -ls books

- 2019-10-24 16:17:29,413 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

- Found 3 items

- -rw-r--r-- 1 hadoop supergroup 173595 2019-10-24 16:17 books/alice.txt

- -rw-r--r-- 1 hadoop supergroup 450783 2019-10-24 16:17 books/frankenstein.txt

- -rw-r--r-- 1 hadoop supergroup 607788 2019-10-24 16:17 books/holmes.txt

- hadoop@node-master:~$

- hadoop@node-master:~$

- hadoop@node-master:~$ hdfs dfs -get books/alice.txt

- 2019-10-24 16:17:35,328 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

- get: `alice.txt': File exists

- hadoop@node-master:~$
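In the session above, `hdfs dfs -mkdir books` only works after `/user/hadoop` has been created: a relative HDFS path is resolved against the user's HDFS home directory, which by default is `/user/<username>`. The helper below is a hypothetical sketch of that rule (it is not a Hadoop command, assumes the default home-directory layout, and does not normalise `.` or `..`), useful for predicting where a relative `-mkdir`, `-put` or `-ls` will land.

```sh
# Hypothetical helper (not part of Hadoop): predict where `hdfs dfs` puts a path,
# assuming the default HDFS home directory /user/<username>.
# Usage: hdfs_abs_path PATH [USER]   (USER defaults to the current login name)
hdfs_abs_path() {
    target=$1
    hdfs_user=${2:-$(id -un)}
    case $target in
        *://*) printf '%s\n' "$target" ;;                       # full URI, e.g. hdfs://host:port/dir
        /*)    printf '%s\n' "$target" ;;                       # absolute path: used as-is
        *)     printf '/user/%s/%s\n' "$hdfs_user" "$target" ;; # relative path: under the home dir
    esac
}
```

For example, `hdfs_abs_path books hadoop` prints `/user/hadoop/books`. The final `-get` in the log failed for a different reason: `alice.txt` was already present in `/home/hadoop`, and `-get` does not overwrite an existing local file by default, so give it another local name, e.g. `hdfs dfs -get books/alice.txt alice_from_hdfs.txt`.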

Practice Log

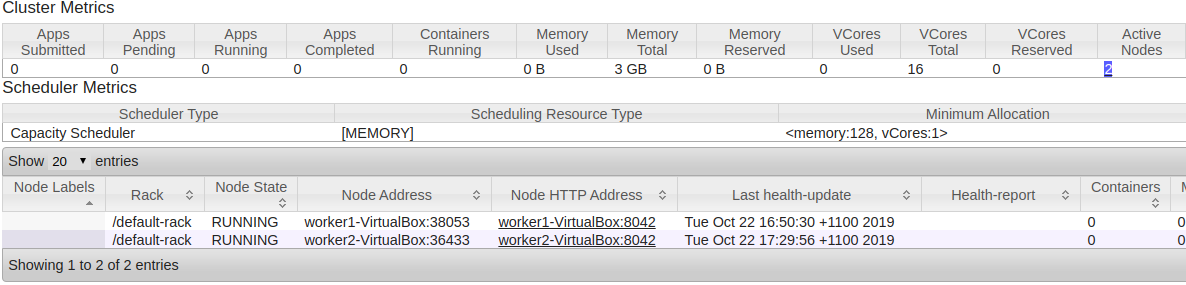

(1) Monitor your HDFS Cluster

The key step is configuring the slaves file; from Hadoop 3.0 onward it is called the workers file.
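Which file holds the worker list therefore depends on the Hadoop major version. A minimal sketch, assuming the worker hostnames `node1` and `node2` from the log above and the `/usr/local/hadoop` layout used in this post (both helpers are hypothetical, not part of Hadoop):

```sh
# Print the worker-list file name for a Hadoop version string:
# Hadoop 2.x reads etc/hadoop/slaves, Hadoop 3.x reads etc/hadoop/workers.
workers_file_name() {
    major=${1%%.*}              # "3.1.2" -> "3"
    if [ "$major" -ge 3 ]; then
        echo workers
    else
        echo slaves
    fi
}

# Write one worker hostname per line into the right file.
# Usage: write_workers_file CONF_DIR VERSION HOST...
write_workers_file() {
    conf_dir=$1                 # e.g. /usr/local/hadoop/etc/hadoop
    version=$2                  # e.g. 3.1.2
    shift 2
    printf '%s\n' "$@" > "$conf_dir/$(workers_file_name "$version")"
}
```

For example, `write_workers_file /usr/local/hadoop/etc/hadoop 3.1.2 node1 node2` writes `etc/hadoop/workers`; `hadoop version` prints the installed version on its first line.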

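If the run fails, stop HDFS, reformat the NameNode, start HDFS again, and then run the command below. (Reformatting erases the HDFS metadata, so only do this on a cluster that holds no data yet.)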

- /usr/local/hadoop$ hdfs dfsadmin -report

- 19/10/22 17:24:07 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

- Configured Capacity: 20014161920 (18.64 GB)

- Present Capacity: 7294656512 (6.79 GB)

- DFS Remaining: 7294607360 (6.79 GB)

- DFS Used: 49152 (48 KB)

- DFS Used%: 0.00%

- Under replicated blocks: 0

- Blocks with corrupt replicas: 0

- Missing blocks: 0

- Missing blocks (with replication factor 1): 0

- -------------------------------------------------

- Live datanodes (2):

- Name: 192.168.56.102:50010 (worker2-VirtualBox)

- Hostname: worker2-VirtualBox

- Decommission Status : Normal

- Configured Capacity: 10007080960 (9.32 GB)

- DFS Used: 24576 (24 KB)

- Non DFS Used: 6359736320 (5.92 GB)

- DFS Remaining: 3647320064 (3.40 GB)

- DFS Used%: 0.00%

- DFS Remaining%: 36.45%

- Configured Cache Capacity: 0 (0 B)

- Cache Used: 0 (0 B)

- Cache Remaining: 0 (0 B)

- Cache Used%: 100.00%

- Cache Remaining%: 0.00%

- Xceivers: 1

- Last contact: Tue Oct 22 17:24:06 AEDT 2019

- Name: 192.168.56.101:50010 (worker1-VirtualBox)

- Hostname: worker1-VirtualBox

- Decommission Status : Normal

- Configured Capacity: 10007080960 (9.32 GB)

- DFS Used: 24576 (24 KB)

- Non DFS Used: 6359769088 (5.92 GB)

- DFS Remaining: 3647287296 (3.40 GB)

- DFS Used%: 0.00%

- DFS Remaining%: 36.45%

- Configured Cache Capacity: 0 (0 B)

- Cache Used: 0 (0 B)

- Cache Remaining: 0 (0 B)

- Cache Used%: 100.00%

- Cache Remaining%: 0.00%

- Xceivers: 1

- Last contact: Tue Oct 22 17:24:06 AEDT 2019
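When repeating the stop / format / start cycle described above, it is handy to read the live DataNode count from the report instead of scanning it by eye. A sketch that assumes the report contains a line like `Live datanodes (2):`, as both reports in this post do; the function name is hypothetical:

```sh
# Read `hdfs dfsadmin -report` output on stdin and print N from the
# "Live datanodes (N):" line. Prints 0 when that line is missing,
# e.g. when the report failed with "Connection refused".
live_datanodes() {
    n=$(sed -n 's/^[[:space:]]*Live datanodes (\([0-9][0-9]*\)):.*/\1/p' | head -n 1)
    echo "${n:-0}"
}
```

For this two-worker cluster: `[ "$(hdfs dfsadmin -report 2>/dev/null | live_datanodes)" -ge 2 ] && echo "both workers are up"`. A refused connection, like the `dfsadmin -report` issued right after `stop-dfs.sh` in the log, yields 0.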

(2) Graphical Monitoring (Web UIs)

Goto: YARN ResourceManager UI, http://192.168.56.1:8088/

Goto: HDFS NameNode UI, http://192.168.56.1:50070 (Hadoop 2.x default port)
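The NameNode UI port depends on the Hadoop version: 50070 is the 2.x default, and Hadoop 3.x moved it to 9870 (the cluster in the first log above runs Hadoop 3, judging by its DataNode port 9866). The YARN ResourceManager UI stays on 8088. A small hypothetical helper that prints both default URLs for a master host:

```sh
# Print the default web UI URLs for a master host and Hadoop version.
# Defaults only: dfs.namenode.http-address (hdfs-site.xml) and
# yarn.resourcemanager.webapp.address (yarn-site.xml) override them.
hadoop_ui_urls() {
    host=$1
    major=${2%%.*}
    if [ "$major" -ge 3 ]; then nn_port=9870; else nn_port=50070; fi
    echo "YARN ResourceManager: http://$host:8088/"
    echo "HDFS NameNode:        http://$host:$nn_port/"
}
```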

Spark Installation

1. Installation Method

Goto: Install, Configure, and Run Spark on Top of a Hadoop YARN Cluster

Goto: https://anaconda.org/conda-forge/pyspark

- hadoop-3.1.2.tar.gz

- scala-2.12.10.deb

- spark-2.4.4-bin-without-hadoop.tgz
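To submit jobs to YARN, Spark has to find the Hadoop configuration. A sketch of the `~/.bashrc` additions, assuming Spark is unpacked to `/usr/local/spark` (an assumed location) next to the `/usr/local/hadoop` install used earlier:

```sh
# Unpack first (target directory is an assumption):
#   tar xvzf spark-2.4.4-bin-without-hadoop.tgz
#   sudo mv spark-2.4.4-bin-without-hadoop /usr/local/spark

# ~/.bashrc additions (paths are assumptions; adjust to your layout)
export HADOOP_HOME=${HADOOP_HOME:-/usr/local/hadoop}
export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop   # lets `spark-submit --master yarn` find the cluster
export SPARK_HOME=${SPARK_HOME:-/usr/local/spark}
export PATH=$PATH:$SPARK_HOME/bin
# Optional: point Spark at Hadoop's native libraries.
export LD_LIBRARY_PATH=$HADOOP_HOME/lib/native${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}
```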

2. Possible Issues

Ref: Spark multinode environment setup on yarn

Ref: SBT Error: “Failed to construct terminal; falling back to unsupported…” [fix: add a setting to .bashrc]

Ref: Getting “cat: /release: No such file or directory” when running scala [fix: use Scala 2.12.2 or later]

Ref: Using Spark's "Hadoop Free" Build [Spark must be told where the installed Hadoop is]
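Spark's "Hadoop free" builds, such as the `spark-2.4.4-bin-without-hadoop.tgz` package listed above, ship without Hadoop's jars; Spark's documentation has you supply them through `SPARK_DIST_CLASSPATH`, typically set in `conf/spark-env.sh` from the output of `hadoop classpath`. A sketch, assuming the `/usr/local/hadoop` install from earlier:

```sh
# $SPARK_HOME/conf/spark-env.sh
# Hadoop-free Spark builds contain no Hadoop jars; borrow them from the
# installed Hadoop via `hadoop classpath`.
HADOOP_BIN=${HADOOP_HOME:-/usr/local/hadoop}/bin/hadoop
if [ -x "$HADOOP_BIN" ]; then
    export SPARK_DIST_CLASSPATH=$("$HADOOP_BIN" classpath)
fi
```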

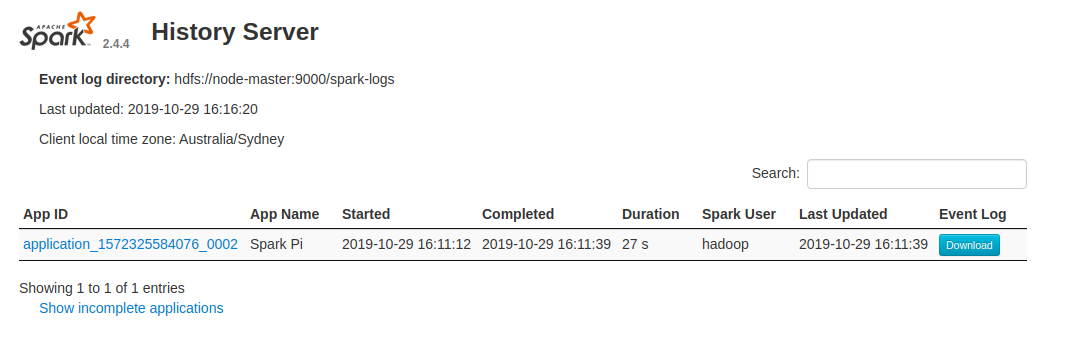

3. Testing
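A quick end-to-end check is to run the SparkPi example that ships with Spark on YARN. A sketch, assuming HDFS and YARN are running (`start-dfs.sh`, `start-yarn.sh`) and `SPARK_HOME` / `HADOOP_CONF_DIR` are exported; `run_spark_pi` is a hypothetical wrapper name:

```sh
# Submit the bundled SparkPi example to YARN in client mode.
# Usage: run_spark_pi [PARTITIONS]   (defaults to 10)
run_spark_pi() {
    partitions=${1:-10}
    # The jar name depends on the Scala build, so match it with a glob.
    set -- "$SPARK_HOME"/examples/jars/spark-examples_*.jar
    if [ ! -f "$1" ]; then
        echo "SparkPi examples jar not found under $SPARK_HOME/examples/jars" >&2
        return 1
    fi
    spark-submit --master yarn --deploy-mode client \
        --class org.apache.spark.examples.SparkPi "$1" "$partitions"
}
```

`run_spark_pi 10` should print a line starting with `Pi is roughly`, and the application should show up in the YARN UI on port 8088.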

4. Remote Notebook

Goto: Remote access to a Jupyter Notebook server (hands-on edition)
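One way to get a PySpark session inside a remote notebook is to let the `pyspark` launcher start Jupyter as its driver front end via Spark's `PYSPARK_DRIVER_PYTHON` and `PYSPARK_DRIVER_PYTHON_OPTS` variables. A sketch, assuming Jupyter is installed on the master and port 8888 is free; `--ip=0.0.0.0` listens on all interfaces, so keep Jupyter's token or password authentication enabled:

```sh
# Make `pyspark` launch Jupyter Notebook as the driver front end
# (assumes jupyter is on PATH and port 8888 is free).
export PYSPARK_DRIVER_PYTHON=jupyter
export PYSPARK_DRIVER_PYTHON_OPTS='notebook --ip=0.0.0.0 --port=8888 --no-browser'

# Then, on the master:
#   pyspark --master yarn
# and open http://<master-ip>:8888/ with the token Jupyter prints, or
# tunnel from your workstation instead of exposing the port:
#   ssh -N -L 8888:localhost:8888 hadoop@<master-ip>
```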

End.
