k8s安装部署问题、解决方案汇总

| 角色 | 节点名 | 节点ip |

|---|---|---|

| master | n1 | 192.168.14.11 |

| 节点1 | n2 | 192.168.14.12 |

| 节点2 | n3 | 192.168.14.13 |

https://raw.githubusercontent.com/lannyMa/scripts/master/k8s/

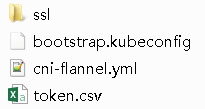

准备token.csv和bootstrap.kubeconfig文件

- 在master生成token.csv

BOOTSTRAP_TOKEN="41f7e4ba8b7be874fcff18bf5cf41a7c"

cat > token.csv<<EOF

41f7e4ba8b7be874fcff18bf5cf41a7c,kubelet-bootstrap,10001,"system:kubelet-bootstrap"

EOF

- 将bootstrap.kubeconfig同步到所有节点

设置集群参数

kubectl config set-cluster kubernetes \

--certificate-authority=/root/ssl/ca.crt \

--embed-certs=true \

--server=http://192.168.14.11:8080 \

--kubeconfig=bootstrap.kubeconfig

设置客户端认证参数

kubectl config set-credentials kubelet-bootstrap \

--token="41f7e4ba8b7be874fcff18bf5cf41a7c" \

--kubeconfig=bootstrap.kubeconfig

设置上下文参数

kubectl config set-context default \

--cluster=kubernetes \

--user=kubelet-bootstrap \

--kubeconfig=bootstrap.kubeconfig

设置默认上下文

kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

两个文件我都放在了/root下.coredns+dashboard(heapster)+kube-router yaml

https://github.com/lannyMa/scripts/tree/master/k8s

k8s 1.9 on the fly启动

etcd --advertise-client-urls=http://192.168.14.11:2379 --listen-client-urls=http://0.0.0.0:2379 --debug

kube-apiserver --service-cluster-ip-range=10.254.0.0/16 --etcd-servers=http://127.0.0.1:2379 --insecure-bind-address=0.0.0.0 --admission-control=ServiceAccount --service-account-key-file=/root/ssl/ca.key --client-ca-file=/root/ssl/ca.crt --tls-cert-file=/root/ssl/server.crt --tls-private-key-file=/root/ssl/server.key --allow-privileged=true --storage-backend=etcd2 --v=2 --enable-bootstrap-token-auth --token-auth-file=/root/token.csv

kube-controller-manager --master=http://127.0.0.1:8080 --service-account-private-key-file=/root/ssl/ca.key --cluster-signing-cert-file=/root/ssl/ca.crt --cluster-signing-key-file=/root/ssl/ca.key --root-ca-file=/root/ssl/ca.crt --v=2

kube-scheduler --master=http://127.0.0.1:8080 --v=2

kubelet --allow-privileged=true --cluster-dns=10.254.0.2 --cluster-domain=cluster.local --v=2 --experimental-bootstrap-kubeconfig=/root/bootstrap.kubeconfig --kubeconfig=/root/kubelet.kubeconfig --fail-swap-on=false

kube-proxy --master=http://192.168.14.11:8080 --v=2

kubectl get csr | grep Pending | awk '{print $1}' | xargs kubectl certificate approveapi相对1.7的变化:

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG.md#before-upgrading

https://mritd.me/2017/10/09/set-up-kubernetes-1.8-ha-cluster/

- kubelet没了--api-servers参数,必须用bootstrap方式去连api

- 移除了 --runtime-config=rbac.authorization.k8s.io/v1beta1 配置,因为 RBAC 已经稳定,被纳入了 v1 api,不再需要指定开启

- --authorization-mode 授权模型增加了 Node 参数,因为 1.8 后默认 system:node role 不会自动授予 system:nodes 组

- 增加 --audit-policy-file 参数用于指定高级审计配置

- 移除 --experimental-bootstrap-token-auth 参数,更换为 --enable-bootstrap-token-auth

k8s1.9 cni(flannel) on the fly启动

注: HostPort不能使用CNI网络插件(docker run -p 8081:8080)。这意味着pod中所有HostPort属性将被简单地忽略。

mkdir -p /etc/cni/net.d /opt/cni/bin

wget https://github.com/containernetworking/plugins/releases/download/v0.6.0/cni-plugins-amd64-v0.6.0.tgz

tar xf cni-plugins-amd64-v0.6.0.tgz -C /opt/cni/bin

cat > /etc/cni/net.d/10-flannel.conflist<<EOF

{

"name":"cni0",

"cniVersion":"0.3.1",

"plugins":[

{

"type":"flannel",

"delegate":{

"forceAddress":true,

"isDefaultGateway":true

}

},

{

"type":"portmap",

"capabilities":{

"portMappings":true

}

}

]

}

EOFetcd --advertise-client-urls=http://192.168.14.11:2379 --listen-client-urls=http://0.0.0.0:2379 --debug

kube-apiserver --service-cluster-ip-range=10.254.0.0/16 --etcd-servers=http://127.0.0.1:2379 --insecure-bind-address=0.0.0.0 --admission-control=ServiceAccount --service-account-key-file=/root/ssl/ca.key --client-ca-file=/root/ssl/ca.crt --tls-cert-file=/root/ssl/server.crt --tls-private-key-file=/root/ssl/server.key --allow-privileged=true --storage-backend=etcd2 --v=2 --enable-bootstrap-token-auth --token-auth-file=/root/token.csv

kube-controller-manager --master=http://127.0.0.1:8080 --service-account-private-key-file=/root/ssl/ca.key --cluster-signing-cert-file=/root/ssl/ca.crt --cluster-signing-key-file=/root/ssl/ca.key --root-ca-file=/root/ssl/ca.crt --v=2 --allocate-node-cidrs=true --cluster-cidr=10.244.0.0/16

kube-scheduler --master=http://127.0.0.1:8080 --v=2

kubelet --allow-privileged=true --cluster-dns=10.254.0.2 --cluster-domain=cluster.local --v=2 --experimental-bootstrap-kubeconfig=/root/bootstrap.kubeconfig --kubeconfig=/root/kubelet.kubeconfig --fail-swap-on=false --network-plugin=cni

kube-proxy --master=http://192.168.14.11:8080 --v=2 kubectl apply -f https://raw.githubusercontent.com/lannyMa/scripts/master/k8s/cni-flannel.yml查看pod确实从cni0分到了地址

[root@n1 ~]# kk

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE LABELS

default b1 1/1 Running 0 6m 10.244.0.2 n2.ma.com <none>

default b2 1/1 Running 0 6m 10.244.1.2 n3.ma.com <none>

[root@n1 ~]# kubectl exec -it b1 sh

/ # ping 10.244.1.2

PING 10.244.1.2 (10.244.1.2): 56 data bytes

64 bytes from 10.244.1.2: seq=0 ttl=62 time=6.292 ms

64 bytes from 10.244.1.2: seq=1 ttl=62 time=0.981 ms

遇到的报错

kubectl apply -f cni-flannel.yml没提示报错,但get pod无显示

kubectl apply -f https://raw.githubusercontent.com/lannyMa/scripts/master/k8s/cni-flannel.yml

原因: yaml用到了sa未创建

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: flannel

namespace: kube-systempod0无cni0网卡,创建pod后分到的是172.17.x.x即docker0分配的地址

原因: kubelet未加cni启动参数

--network-plugin=cni创建成功后提示.kubectl create成功后一直pending,kubelet报错

因为ipv6没开,参考how-to-disable-ipv6

ifconfig -a | grep inet6I1231 23:22:08.343188 15369 kubelet.go:1881] SyncLoop (PLEG): "busybox_default(5a16fa0c-ee3e-11e7-9220-000c29bfdc52)", event: &pleg.PodLifecycleEvent{ID:"5a16fa0c-ee3e-11e7-9220-000c29bfdc52", Type:"ContainerDied", Data:"76e584c83f8dd3d54c759ac637bb47aa2a94de524372e282abde004d1cfbcd1b"}

W1231 23:22:08.343338 15369 pod_container_deletor.go:77] Container "76e584c83f8dd3d54c759ac637bb47aa2a94de524372e282abde004d1cfbcd1b" not found in pod's containers

I1231 23:22:08.644401 15369 kuberuntime_manager.go:403] No ready sandbox for pod "busybox_default(5a16fa0c-ee3e-11e7-9220-000c29bfdc52)" can be found. Need to start a new one

E1231 23:22:08.857121 15369 cni.go:259] Error adding network: open /proc/sys/net/ipv6/conf/eth0/accept_dad: no such file or directory

E1231 23:22:08.857144 15369 cni.go:227] Error while adding to cni network: open /proc/sys/net/ipv6/conf/eth0/accept_dad: no such file or directory

E1231 23:22:08.930343 15369 remote_runtime.go:92] RunPodSandbox from runtime service failed: rpc error: code = Unknown desc = NetworkPlugin cni failed to set up pod "busybox_default" network: open /proc/sys/net/ipv6/conf/eth0/accept_dad: no such file or directory多次实验,导致node的网络配置污染,所以新验证时一定要reboot,清理环境

rm -rf /var/lib/kubelet/

docker rm $(docker ps -a -q)controller需要加这两个参数,且cluster-cidr的地址和cni-flannel.yaml里的地址要一致.和svc网段的地址(--service-cluster-ip-range)不要相同.

--allocate-node-cidrs=true --cluster-cidr=10.244.0.0/16no IP addresses available in range set: 10.244.0.1-10.244.0.254

没地址了,pod一直在创建中....

参考: https://github.com/kubernetes/kubernetes/issues/57280

现象:

- kubelet报错

E0101 00:06:38.629105 1109 kuberuntime_manager.go:647] createPodSandbox for pod "busybox2_default(7fa06467-ee44-11e7-a440-000c29bfdc52)" failed: rpc error: code = Unknown desc = NetworkPlugin cni failed to set up pod "busybox2_default" network: failed to allocate for range 0: no IP addresses available in range set: 10.244.0.1-10.244.0.254

E0101 00:06:38.629143 1109 pod_workers.go:186] Error syncing pod 7fa06467-ee44-11e7-a440-000c29bfdc52 ("busybox2_default(7fa06467-ee44-11e7-a440-000c29bfdc52)"), skipping: failed to "CreatePodSandbox" for "busybox2_default(7fa06467-ee44-11e7-a440-000c29bfdc52)" with CreatePodSandboxError: "CreatePodSandbox for pod \"busybox2_default(7fa06467-ee44-11e7-a440-000c29bfdc52)\" failed: rpc error: code = Unknown desc = NetworkPlugin cni failed to set up pod \"busybox2_default\" network: failed to allocate for range 0: no IP addresses available in range set: 10.244.0.1-10.244.0.254"

- ip地址

/var/lib/cni/networks# ls cbr0/

10.244.0.10 10.244.0.123 10.244.0.147 10.244.0.170 10.244.0.194 10.244.0.217 10.244.0.240 10.244.0.35 10.244.0.59 10.244.0.82

10.244.0.100 10.244.0.124 10.244.0.148 10.244.0.171 10.244.0.195 10.244.0.218 10.244.0.241 10.244.0.36 10.244.0.6 10.244.0.83

10.244.0.101 10.244.0.125 10.244.0.149 10.244.0.172 10.244.0.196 10.244.0.219 10.244.0.242 10.244.0.37 10.244.0.60 10.244.0.84

10.244.0.102 10.244.0.126 10.244.0.15 10.244.0.173 10.244.0.197 10.244.0.22 10.244.0.243 10.244.0.38 10.244.0.61 10.244.0.85

10.244.0.103 10.244.0.127 10.244.0.150 10.244.0.174 10.244.0.198 10.244.0.220 10.244.0.244 10.244.0.39 10.244.0.62 10.244.0.86

10.244.0.104 10.244.0.128 10.244.0.151 10.244.0.175 10.244.0.199 10.244.0.221 10.244.0.245 10.244.0.4 10.244.0.63 10.244.0.87

10.244.0.105 10.244.0.129 10.244.0.152 10.244.0.176 10.244.0.2 10.244.0.222 10.244.0.246 10.244.0.40 10.244.0.64 10.244.0.88

10.244.0.106 10.244.0.13 10.244.0.153 10.244.0.177 10.244.0.20 10.244.0.223 10.244.0.247 10.244.0.41 10.244.0.65 10.244.0.89

10.244.0.107 10.244.0.130 10.244.0.154 10.244.0.178 10.244.0.200 10.244.0.224 10.244.0.248 10.244.0.42 10.244.0.66 10.244.0.9

10.244.0.108 10.244.0.131 10.244.0.155 10.244.0.179 10.244.0.201 10.244.0.225 10.244.0.249 10.244.0.43 10.244.0.67 10.244.0.90

10.244.0.109 10.244.0.132 10.244.0.156 10.244.0.18 10.244.0.202 10.244.0.226 10.244.0.25 10.244.0.44 10.244.0.68 10.244.0.91

10.244.0.11 10.244.0.133 10.244.0.157 10.244.0.180 10.244.0.203 10.244.0.227 10.244.0.250 10.244.0.45 10.244.0.69 10.244.0.92

10.244.0.110 10.244.0.134 10.244.0.158 10.244.0.181 10.244.0.204 10.244.0.228 10.244.0.251 10.244.0.46 10.244.0.7 10.244.0.93

10.244.0.111 10.244.0.135 10.244.0.159 10.244.0.182 10.244.0.205 10.244.0.229 10.244.0.252 10.244.0.47 10.244.0.70 10.244.0.94

10.244.0.112 10.244.0.136 10.244.0.16 10.244.0.183 10.244.0.206 10.244.0.23 10.244.0.253 10.244.0.48 10.244.0.71 10.244.0.95

10.244.0.113 10.244.0.137 10.244.0.160 10.244.0.184 10.244.0.207 10.244.0.230 10.244.0.254 10.244.0.49 10.244.0.72 10.244.0.96

10.244.0.114 10.244.0.138 10.244.0.161 10.244.0.185 10.244.0.208 10.244.0.231 10.244.0.26 10.244.0.5 10.244.0.73 10.244.0.97

10.244.0.115 10.244.0.139 10.244.0.162 10.244.0.186 10.244.0.209 10.244.0.232 10.244.0.27 10.244.0.50 10.244.0.74 10.244.0.98

10.244.0.116 10.244.0.14 10.244.0.163 10.244.0.187 10.244.0.21 10.244.0.233 10.244.0.28 10.244.0.51 10.244.0.75 10.244.0.99

10.244.0.117 10.244.0.140 10.244.0.164 10.244.0.188 10.244.0.210 10.244.0.234 10.244.0.29 10.244.0.52 10.244.0.76 last_reserved_ip.0

10.244.0.118 10.244.0.141 10.244.0.165 10.244.0.189 10.244.0.211 10.244.0.235 10.244.0.3 10.244.0.53 10.244.0.77

10.244.0.119 10.244.0.142 10.244.0.166 10.244.0.19 10.244.0.212 10.244.0.236 10.244.0.30 10.244.0.54 10.244.0.78

10.244.0.12 10.244.0.143 10.244.0.167 10.244.0.190 10.244.0.213 10.244.0.237 10.244.0.31 10.244.0.55 10.244.0.79

10.244.0.120 10.244.0.144 10.244.0.168 10.244.0.191 10.244.0.214 10.244.0.238 10.244.0.32 10.244.0.56 10.244.0.8

10.244.0.121 10.244.0.145 10.244.0.169 10.244.0.192 10.244.0.215 10.244.0.239 10.244.0.33 10.244.0.57 10.244.0.80

10.244.0.122 10.244.0.146 10.244.0.17 10.244.0.193 10.244.0.216 10.244.0.24 10.244.0.34 10.244.0.58 10.244.0.81

- flannel创建了很多文件

/var/lib/cni/flannel# ls | wc ; date 解决:

kubeadm reset

systemctl stop kubelet

systemctl stop docker

rm -rf /var/lib/cni/

rm -rf /var/lib/kubelet/*

rm -rf /etc/cni/

ifconfig cni0 down

ifconfig flannel.1 down

ifconfig docker0 down

ip link delete cni0

ip link delete flannel.1

service docker restart

service kubelet restart

kubeadm join {你的参数}

- 推荐打开,不打开我没发现什么问题

echo 'net.bridge.bridge-nf-call-iptables=1' >> /etc/sysctl.conf

sysctl -p

# 打开ip转发,下面4条都干上去

net.ipv4.ip_forward = 1

# Disable netfilter on bridges.

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-arptables = 1参考:

https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

http://cizixs.com/2017/05/23/container-network-cni

https://k8smeetup.github.io/docs/concepts/cluster-administration/network-plugins/

https://mritd.me/2017/09/20/set-up-ha-kubernetes-cluster-on-aliyun-ecs/

https://coreos.com/flannel/docs/latest/kubernetes.html (不要用legency的那个,那个是kubelet模式)

https://feisky.gitbooks.io/kubernetes/network/flannel/#cni集成

http://blog.csdn.net/idea77/article/details/78793318

kube-proxy ipvs模式

目前还是测试版,打开玩一玩.

参考: https://jicki.me/2017/12/20/kubernetes-1.9-ipvs/#%E5%90%AF%E5%8A%A8-kube-proxy

https://mritd.me/2017/10/10/kube-proxy-use-ipvs-on-kubernetes-1.8/

确保内核有rr模块

[root@n2 ~]# lsmod | grep ip_vs

ip_vs_sh 12688 0

ip_vs_wrr 12697 0

ip_vs_rr 12600 2

ip_vs 141092 8 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 133387 9 ip_vs,nf_nat,nf_nat_ipv4,nf_nat_ipv6,xt_conntrack,nf_nat_masquerade_ipv4,nf_conntrack_netlink,nf_conntrack_ipv4,nf_conntrack_ipv6

libcrc32c 12644 4 xfs,ip_vs,nf_nat,nf_conntrack

启用 ipvs 后与 1.7 版本的配置差异如下:

增加 --feature-gates=SupportIPVSProxyMode=true 选项,用于告诉 kube-proxy 开启 ipvs 支持,因为目前 ipvs 并未稳定

增加 ipvs-min-sync-period、--ipvs-sync-period、--ipvs-scheduler 三个参数用于调整 ipvs,具体参数值请自行查阅 ipvs 文档

增加 --masquerade-all 选项,以确保反向流量通过

重点说一下 --masquerade-all 选项: kube-proxy ipvs 是基于 NAT 实现的,当创建一个 service 后,kubernetes 会在每个节点上创建一个网卡,同时帮你将 Service IP(VIP) 绑定上,此时相当于每个 Node 都是一个 ds,而其他任何 Node 上的 Pod,甚至是宿主机服务(比如 kube-apiserver 的 6443)都可能成为 rs;按照正常的 lvs nat 模型,所有 rs 应该将 ds 设置成为默认网关,以便数据包在返回时能被 ds 正确修改;在 kubernetes 将 vip 设置到每个 Node 后,默认路由显然不可行,所以要设置 --masquerade-all 选项,以便反向数据包能通过

注意:--masquerade-all 选项与 Calico 安全策略控制不兼容,请酌情使用kube-proxy --master=http://192.168.14.11:8080 --v=2 --feature-gates=SupportIPVSProxyMode=true --masquerade-all --proxy-mode=ipvs --masquerade-all

注意:

1.需要打开 –feature-gates=SupportIPVSProxyMode=true,官方 –feature-gates=SupportIPVSProxyMode=false 默认是 false

2.–masquerade-all 必须添加这项配置,否则 创建 svc 在 ipvs 不会添加规则

3.打开 ipvs 需要安装 ipvsadm 软件, 在 node 中安装

yum install ipvsadm -y

ipvsadm -L -n[root@n2 ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.254.0.1:443 rr persistent 10800

-> 192.168.14.11:6443 Masq 1 0 0

TCP 10.254.12.188:80 rr

-> 10.244.0.3:80 Masq 1 0 0

-> 10.244.1.4:80 Masq 1 0 0 k8s安装部署问题、解决方案汇总的更多相关文章

- k8s安装部署过程个人总结及参考文章

以下是本人安装k8s过程 一.单机配置 1. 环境准备 主机名 IP 配置 master1 192.168.1.181 1C 4G 关闭所有节点的seliux以及firewalld sed -i 's ...

- k8s安装部署成功

- Linux 下Redis集群安装部署及使用详解(在线和离线两种安装+相关错误解决方案)

一.应用场景介绍 本文主要是介绍Redis集群在Linux环境下的安装讲解,其中主要包括在联网的Linux环境和脱机的Linux环境下是如何安装的.因为大多数时候,公司的生产环境是在内网环境下,无外网 ...

- K8S的安装部署以及基础知识

Kubernetes(K8S)概述 Kubernetes又称作k8s,是Google在2014年发布的一个开源项目. 最初Google开发了一个叫Borg的系统(现在命名为Omega),来调度近20多 ...

- Centos7 安装部署Kubernetes(k8s)集群

目录 一.系统环境 二.前言 三.Kubernetes 3.1 概述 3.2 Kubernetes 组件 3.2.1 控制平面组件 3.2.2 Node组件 四.安装部署Kubernetes集群 4. ...

- Istio(二):在Kubernetes(k8s)集群上安装部署istio1.14

目录 一.模块概览 二.系统环境 三.安装istio 3.1 使用 Istioctl 安装 3.2 使用 Istio Operator 安装 3.3 生产部署情况如何? 3.4 平台安装指南 四.Ge ...

- openstack pike 集群高可用 安装 部署 目录汇总

# openstack pike 集群高可用 安装部署#安装环境 centos 7 史上最详细的openstack pike版 部署文档欢迎经验分享,欢迎笔记分享欢迎留言,或加QQ群663105353 ...

- kubernetes系列03—kubeadm安装部署K8S集群

本文收录在容器技术学习系列文章总目录 1.kubernetes安装介绍 1.1 K8S架构图 1.2 K8S搭建安装示意图 1.3 安装kubernetes方法 1.3.1 方法1:使用kubeadm ...

- K8S集群安装部署

K8S集群安装部署 参考地址:https://www.cnblogs.com/xkops/p/6169034.html 1. 确保系统已经安装epel-release源 # yum -y inst ...

随机推荐

- Trie学习笔记

Trie(字典树) 基本数据结构 实际是:对于每个字符串组的每一个不同前缀建立节点 基本代码 void Insert(char *s,int p){ int now=0; int l=strlen(s ...

- docker安装 与 基本配置

1.安装docker #yum remove docker \ docker-common \ container-selinux \ docker-selinux \ docker-engine \ ...

- java基础之 内部类 & 嵌套类

参考文档: 内部类的应用场景 http://blog.csdn.net/hivon/article/details/606312 http://wwty.iteye.com/blog/338628 定 ...

- JavaScript初探系列(八)——DOM

DOM(文档对象模型)是针对HTML和XML文档的一个API,描绘了一个层次化的节点树,允许开发人员添加.删除和修改页面的某一部分. HTML DOM 树形结构如下: 一.Node方面 (一).节点类 ...

- T-MAX——团队展示

第一次团队博客:百战黄沙穿金甲 基础介绍 这个作业属于哪个课程 2019秋福大软件工程实践Z班 这个作业要求在哪里 团队作业第一次-团队展示 团队名称 T-MAX 这个作业的目标 展现团队成员的风采, ...

- INDY10 BASE64编码

INDY10 BASE64编码 DELPHI自带的BASE64单元,在项目中使用发现非常没有效率,INDY10的好用. uses IdCoderMIME BASE64编码类:TIdEncoderMIM ...

- el-select获取选中的value和label

selectGetFn(val) { if (this.multipleFlag) { for (let i = 0; i < GUIDArr.length; i++) { let obj = ...

- CentOS7安装及配置vsftpd (FTP服务器FTP账号创建以及权限设置)

本文章向大家介绍CentOS7安装及配置vsftpd (FTP服务器FTP账号创建以及权限设置),主要包括CentOS7安装及配置vsftpd (FTP服务器FTP账号创建以及权限设置)使用实例.应用 ...

- ROC曲线 VS PR曲线

python机器学习-乳腺癌细胞挖掘(博主亲自录制视频)https://study.163.com/course/introduction.htm?courseId=1005269003&ut ...

- 公司-IT-Yahoo:百科

ylbtech-公司-IT-Yahoo:百科 雅虎(英文名称:Yahoo!,NASDAQ:YHOO)是美国著名的互联网门户网站,也是20世纪末互联网奇迹的创造者之一.其服务包括搜索引擎.电邮.新闻等, ...