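Impact of large batch jobs on Structured Streaming jobs

Faith: you can do very little with it, but you can do nothing without it. (Samuel Butler)

Preface

In Spark Streaming, a large batch job causes subsequent batch jobs to pile up. This article briefly looks at how a large batch affects a Structured Streaming job.

A more precise title for this article would be: how a long-running query affects the submit time of subsequent queries once a trigger is set.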

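Trigger types

There are three trigger types: OneTimeTrigger, ProcessingTime, and ContinuousTrigger. For an explanation of each, see the description of triggers in the article "spark 集群优化" (Spark cluster optimization).

Effect of a long-running query on the submit time of subsequent queries with OneTimeTrigger

OneTimeTrigger runs a single query and then stops, so there is no subsequent batch to affect.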

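Effect of a long-running query on the submit time of subsequent queries with a ProcessingTime trigger

Setting a sleep time longer than the trigger interval

The code is shown in the screenshot below; it adds a sleep to the task that runs on each partition:

Result

The result of the run is shown in the screenshot below: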

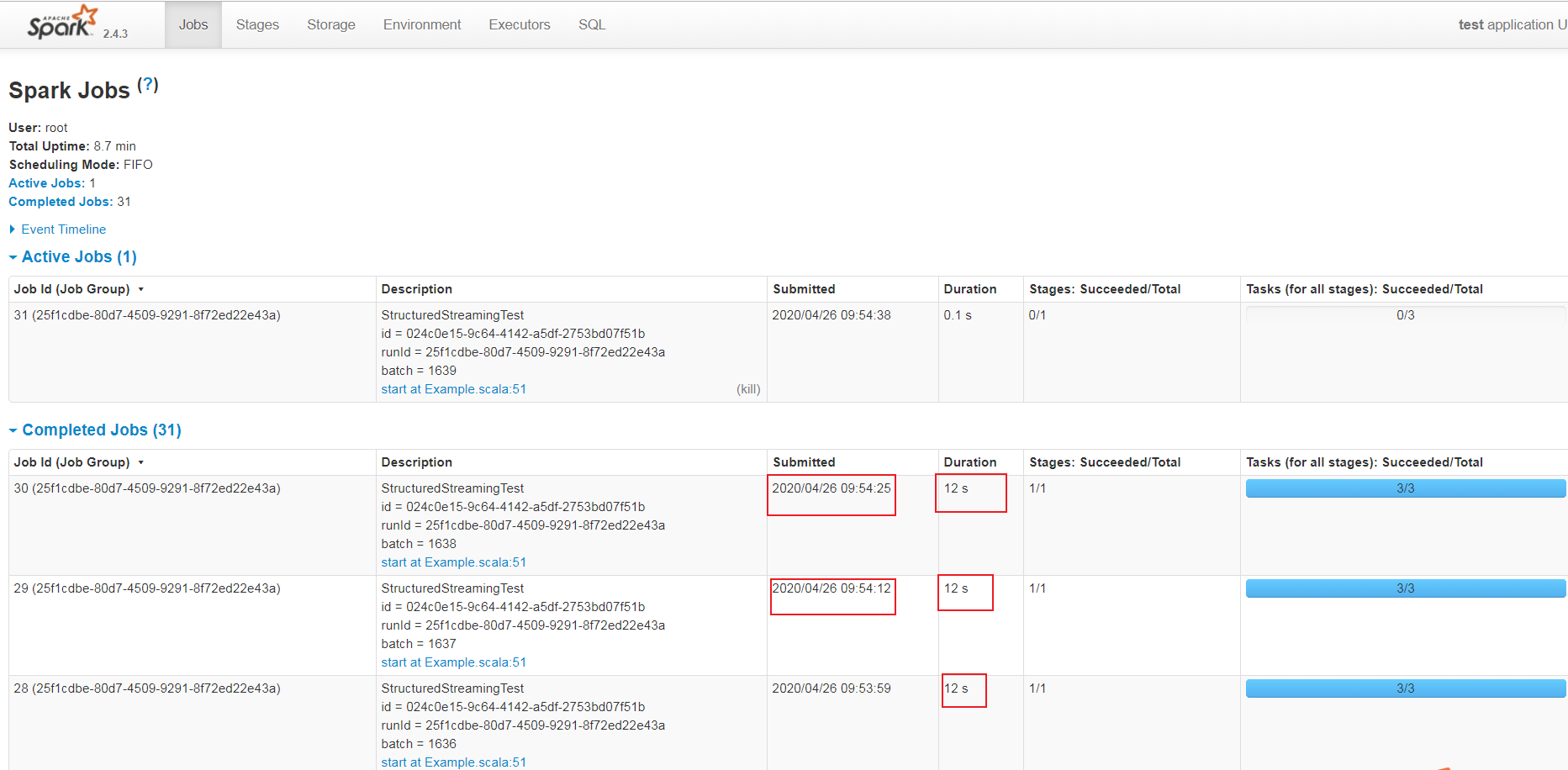

UI的Jobs面板截图如下:

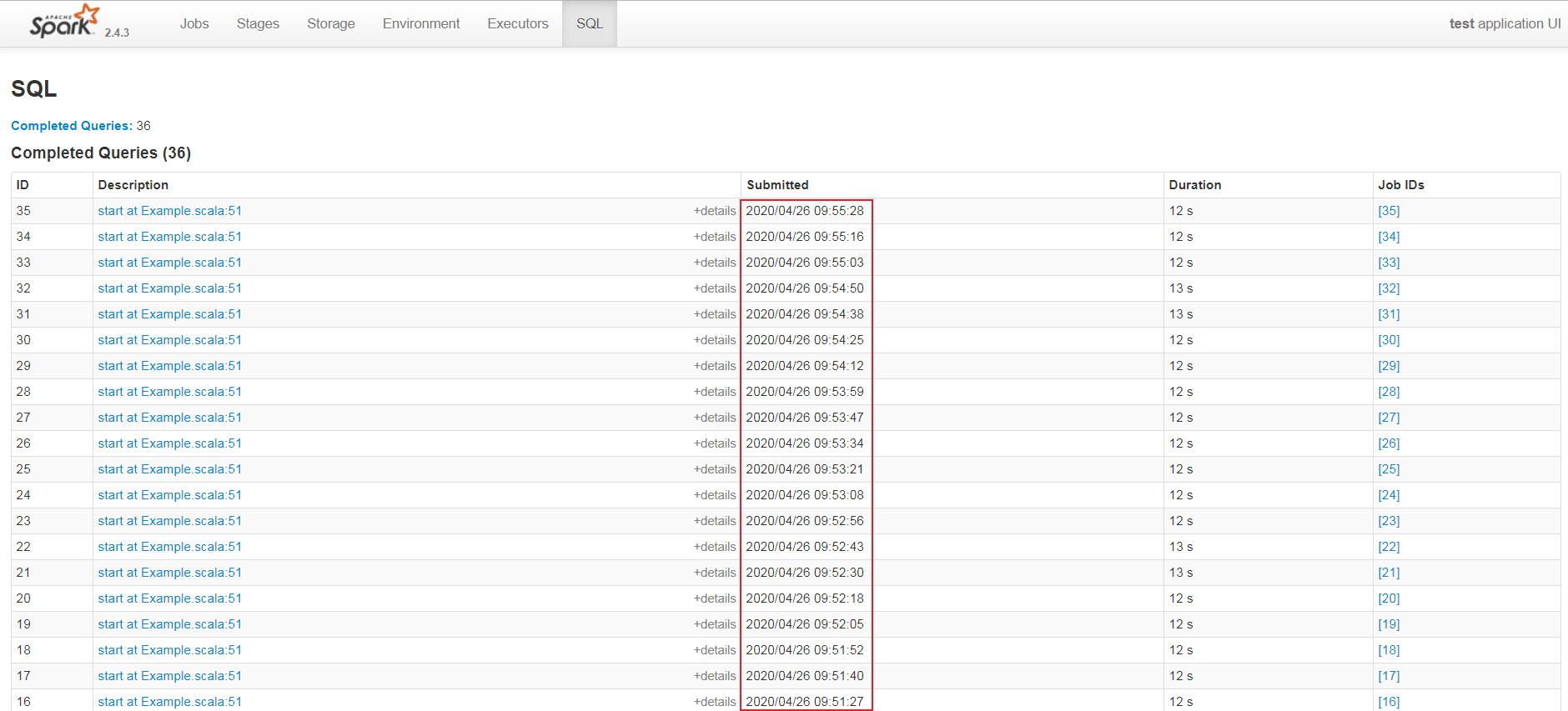

UI的SQL面板截图如下:

通过上面两个面板截图中的submitted列可以看出,此时每一个batch的query 提交时间是根据前驱query的结束时间来确定的。

设置 ContinuousTrigger 后,运行时间长的 query 对后续 query 的submit time的影响

源码分析

下面从源码角度来分析一下。

StreamExecution的职责

它是当新数据到达时在后台连续执行的查询的句柄。管理在单独线程中发生的流式Spark SQL查询的执行。 与标准查询不同,每次新数据到达查询计划中存在的任何 Source 时,流式查询都会重复执行。每当新数据到达时,都会创建一个 QueryExecution,并将结果以事务方式提交给给定的 Sink 。

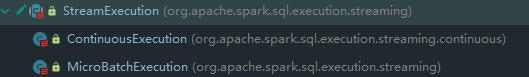

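It has two subclasses, MicroBatchExecution and ContinuousExecution, shown in the screenshot below:

Correspondence between Trigger and StreamExecution

The method org.apache.spark.sql.streaming.StreamingQueryManager#createQuery contains the following snippet: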

    (sink, trigger) match {
      case (v2Sink: StreamWriteSupport, trigger: ContinuousTrigger) =>
        if (sparkSession.sessionState.conf.isUnsupportedOperationCheckEnabled) {
          UnsupportedOperationChecker.checkForContinuous(analyzedPlan, outputMode)
        }
        // With ContinuousTrigger, use ContinuousExecution
        new StreamingQueryWrapper(new ContinuousExecution(
          sparkSession,
          userSpecifiedName.orNull,
          checkpointLocation,
          analyzedPlan,
          v2Sink,
          trigger,
          triggerClock,
          outputMode,
          extraOptions,
          deleteCheckpointOnStop))
      case _ =>
        // With ProcessingTime, use MicroBatchExecution
        new StreamingQueryWrapper(new MicroBatchExecution(
          sparkSession,
          userSpecifiedName.orNull,
          checkpointLocation,
          analyzedPlan,
          sink,
          trigger,
          triggerClock,
          outputMode,
          extraOptions,
          deleteCheckpointOnStop))
    }

This shows the following correspondence between Trigger and StreamExecution:

| Trigger | StreamExecution |
|---|---|
| OneTimeTrigger | MicroBatchExecution |
| ProcessingTime | MicroBatchExecution |
| ContinuousTrigger | ContinuousExecution |
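The dispatch in the table can be reproduced in a few lines of plain Scala. This is only a sketch: `TriggerKind`, `SinkKind`, and `ExecutionChooser` are hypothetical stand-ins invented for illustration, not Spark classes, and the function returns the name of the execution class that would be constructed rather than constructing anything.

```scala
// Hypothetical stand-ins for Spark's trigger types (not the real classes).
sealed trait TriggerKind
case object OneTime extends TriggerKind
final case class ProcessingTimeKind(intervalMs: Long) extends TriggerKind
final case class ContinuousKind(intervalMs: Long) extends TriggerKind

// Hypothetical stand-ins for the sink side of the match.
sealed trait SinkKind
case object V2StreamWriteSink extends SinkKind // plays the role of a StreamWriteSupport sink
case object OtherSink extends SinkKind

object ExecutionChooser {
  // Mirrors the shape of the (sink, trigger) match in createQuery:
  // only a V2 sink combined with a continuous trigger selects ContinuousExecution.
  def choose(sink: SinkKind, trigger: TriggerKind): String = (sink, trigger) match {
    case (V2StreamWriteSink, _: ContinuousKind) => "ContinuousExecution"
    case _                                      => "MicroBatchExecution"
  }
}
```

Note that the fallback branch is also taken for a continuous trigger paired with a sink that is not a V2 sink, which is the case discussed in the note that follows.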

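In addition, the analyzedPlan constructor parameter of StreamExecution is a LogicalPlan, which means the LogicalPlan has already been generated before the first query starts. At this point the plan has been analyzed but not yet optimized, because the source information for the data nodes that the tree depends on is still unknown.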

Note

ContinuousExecution currently supports only a limited set of sink types, mainly the implementations of StreamWriteSupport:

| sink | class full name |
|---|---|
| console | org.apache.spark.sql.execution.streaming.ConsoleSinkProvider |
| kafka | org.apache.spark.sql.kafka010.KafkaSourceProvider |
| ForeachSink | org.apache.spark.sql.execution.streaming.sources.ForeachWriterProvider |
| MemorySinkV2 | org.apache.spark.sql.execution.streaming.sources.MemorySinkV2 |

Any other sink falls through to MicroBatchExecution. However, when its triggerExecutor member is initialized, only ProcessingTime and OneTimeTrigger are supported, not ContinuousTrigger, so an IllegalStateException with the message `Unknown type of trigger: $trigger` is thrown.

Execution of StreamExecution

org.apache.spark.sql.streaming.StreamingQueryManager#startQuery contains the following snippet:

    try {
      // When starting a query, it will call `StreamingQueryListener.onQueryStarted` synchronously.
      // As it's provided by the user and can run arbitrary codes, we must not hold any lock here.
      // Otherwise, it's easy to cause dead-lock, or block too long if the user codes take a long
      // time to finish.
      query.streamingQuery.start()
    } catch {
      case e: Throwable =>
        activeQueriesLock.synchronized {
          activeQueries -= query.id
        }
        throw e
    }

Here query.streamingQuery is the StreamExecution, that is, either a MicroBatchExecution or a ContinuousExecution.

The start method of StreamExecution is as follows:

    /**
     * Starts the execution. This returns only after the thread has started and [[QueryStartedEvent]]
     * has been posted to all the listeners.
     */
    def start(): Unit = {
      logInfo(s"Starting $prettyIdString. Use $resolvedCheckpointRoot to store the query checkpoint.")
      queryExecutionThread.setDaemon(true)
      queryExecutionThread.start()
      startLatch.await() // Wait until thread started and QueryStart event has been posted
    }

The queryExecutionThread member is declared as follows:

    /**
     * The thread that runs the micro-batches of this stream. Note that this thread must be
     * [[org.apache.spark.util.UninterruptibleThread]] to workaround KAFKA-1894: interrupting a
     * running `KafkaConsumer` may cause endless loop.
     */
    val queryExecutionThread: QueryExecutionThread =
      new QueryExecutionThread(s"stream execution thread for $prettyIdString") {
        override def run(): Unit = {
          // To fix call site like "run at <unknown>:0", we bridge the call site from the caller
          // thread to this micro batch thread
          sparkSession.sparkContext.setCallSite(callSite)
          runStream()
        }
      }

QueryExecutionThread is a subclass of UninterruptibleThread, which in turn is a subclass of Thread, so QueryExecutionThread is a thread class. It runs the runStream method, whose key code is as follows:

    try {
      // Preparation for running the stream query: send the QueryStartedEvent, count down the latch, configure streaming, and so on
      runActivatedStream(sparkSessionForStream) // run the stream
    } catch {
      // exception handling
    } finally {
      // Cleanup after the stream query finishes: stop the sources, send the stream stopped event, delete the checkpoint (if deleteCheckpointOnStop is enabled), and so on
    }

runActivatedStream is documented as "Run the activated stream until stopped." It is an abstract method implemented by the subclasses.
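The start/runStream/runActivatedStream structure described above is a template method running on its own thread, with a latch that makes start() return only once the thread is up. A minimal sketch of that shape in plain Scala follows. `StreamExecutionSketch`, `OneShotExecution`, and the `events` buffer are hypothetical names made up for this illustration; the real StreamExecution does far more work in each phase.

```scala
import java.util.concurrent.CountDownLatch
import scala.collection.mutable.ArrayBuffer

// Hypothetical, heavily simplified stand-in for StreamExecution (not Spark's class).
abstract class StreamExecutionSketch {
  // Records the phases in order so the control flow can be inspected.
  val events: ArrayBuffer[String] = ArrayBuffer.empty[String]
  private val startLatch = new CountDownLatch(1)

  // Implemented by subclasses, like runActivatedStream in the article.
  protected def runActivatedStream(): Unit

  private val queryExecutionThread = new Thread("stream execution thread (sketch)") {
    override def run(): Unit = runStream()
  }

  private def runStream(): Unit = {
    try {
      events.synchronized { events += "started" } // stands in for posting QueryStartedEvent
      startLatch.countDown()                      // lets start() return
      runActivatedStream()                        // subclass-specific execution
    } finally {
      events.synchronized { events += "stopped" } // stands in for the cleanup work
    }
  }

  // Returns only after the thread has started and "started" has been recorded.
  def start(): Unit = {
    queryExecutionThread.setDaemon(true)
    queryExecutionThread.start()
    startLatch.await()
  }

  def awaitTermination(): Unit = queryExecutionThread.join()
}

// A subclass that runs a single "batch" and then finishes.
class OneShotExecution extends StreamExecutionSketch {
  override protected def runActivatedStream(): Unit =
    events.synchronized { events += "batch" }
}
```

Calling start() and then awaitTermination() on a OneShotExecution leaves the three phases in `events` in the order started, batch, stopped.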

MicroBatchExecution's implementation of runActivatedStream

The logic of MicroBatchExecution's runActivatedStream method can be described as follows:

    triggerExecutor.execute(() => {
      // submit and run each query
    })

triggerExecutor is defined as follows:

    private val triggerExecutor = trigger match {
      case t: ProcessingTime => ProcessingTimeExecutor(t, triggerClock)
      case OneTimeTrigger => OneTimeExecutor()
      case _ => throw new IllegalStateException(s"Unknown type of trigger: $trigger")
    }

That is, with ProcessingTime, a ProcessingTimeExecutor generates the batch queries periodically. Its execute method is as follows:

    override def execute(triggerHandler: () => Boolean): Unit = {
      while (true) {
        val triggerTimeMs = clock.getTimeMillis
        val nextTriggerTimeMs = nextBatchTime(triggerTimeMs)
        val terminated = !triggerHandler()
        if (intervalMs > 0) {
          val batchElapsedTimeMs = clock.getTimeMillis - triggerTimeMs
          if (batchElapsedTimeMs > intervalMs) {
            notifyBatchFallingBehind(batchElapsedTimeMs)
          }
          if (terminated) {
            return
          }
          clock.waitTillTime(nextTriggerTimeMs)
        } else {
          if (terminated) {
            return
          }
        }
      }
    }

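In pseudocode:

    def execute(triggerHandler: () => Boolean): Unit = {
      while (true) {
        get current_time
        compute the start_time of the next batch from current_time and interval
        run the query and get the flag that says whether the stream has ended
        if (interval > 0) {
          query elapsed time = newly read current_time - old current_time
          if (query elapsed time > interval) {
            notifyBatchFallingBehind // currently this only logs a warning
          }
          if (stream terminated flag is true) {
            return // leave the while loop and exit the method
          }
          // Clock.waitTillTime: the SystemClock subclass implements it with while + sleep(ms), the other subclasses with while + wait(ms); the while loop guards against an external interrupt cutting the wait short
        } else {
          if (stream terminated flag is true) {
            return // leave the while loop and exit the method
          }
        }
      }
    }

In other words, as long as the stream has not stopped, the submit time of the next batch = max(end time of the current batch, start time of the current batch / interval * interval + interval), where / is integer division. When the current batch takes longer than the interval, this is always the end time of the current batch.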
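To make the timing rule concrete, the following sketch replays it with plain numbers instead of a real clock. `TriggerTimingSketch` and its method names are made up for this illustration; `nextBatchTime` follows the start / interval * interval + interval expression given above, and all times are in milliseconds.

```scala
object TriggerTimingSketch {
  // Next interval boundary strictly after nowMs (integer division, as in the formula above).
  def nextBatchTime(nowMs: Long, intervalMs: Long): Long =
    if (intervalMs == 0) nowMs else nowMs / intervalMs * intervalMs + intervalMs

  // Submit time of the batch that follows one started at startMs and running for elapsedMs:
  // the loop waits until the next boundary, unless the batch has already run past it.
  def nextSubmitTime(startMs: Long, elapsedMs: Long, intervalMs: Long): Long =
    math.max(startMs + elapsedMs, nextBatchTime(startMs, intervalMs))

  // Submit times of consecutive batches, given how long each batch runs.
  def submitTimes(firstStartMs: Long, batchDurationsMs: Seq[Long], intervalMs: Long): List[Long] =
    batchDurationsMs.scanLeft(firstStartMs) { (start, elapsed) =>
      nextSubmitTime(start, elapsed, intervalMs)
    }.toList
}
```

With a 10 s interval and batches that take 3 s, 25 s, and 4 s, `TriggerTimingSketch.submitTimes(0L, Seq(3000L, 25000L, 4000L), 10000L)` yields `List(0, 10000, 35000, 40000)`: the 25 s batch pushes the next submit to its own end time (35000), after which the schedule snaps back to the interval grid (40000). No missed triggers are queued up in between.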

ContinuousExecution's implementation of runActivatedStream

The source code is as follows:

    override protected def runActivatedStream(sparkSessionForStream: SparkSession): Unit = {
      val stateUpdate = new UnaryOperator[State] {
        override def apply(s: State) = s match {
          // If we ended the query to reconfigure, reset the state to active.
          case RECONFIGURING => ACTIVE
          case _ => s
        }
      }

      do {
        runContinuous(sparkSessionForStream)
      } while (state.updateAndGet(stateUpdate) == ACTIVE)
    }
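The loop above restarts runContinuous whenever a run ended because of reconfiguration, and exits for any other state. A small self-contained sketch of that state handling is shown below. `ReconfigureLoopSketch` and its `State` values are hypothetical stand-ins, and the iterator of end states replaces the real runContinuous call, which is an assumption made purely so the loop can be exercised in isolation.

```scala
import java.util.concurrent.atomic.AtomicReference
import java.util.function.UnaryOperator

object ReconfigureLoopSketch {
  // Hypothetical stand-ins for the State values used by StreamExecution.
  sealed trait State
  case object ACTIVE extends State
  case object RECONFIGURING extends State
  case object TERMINATED extends State

  // Each element of endStates is the state in which one simulated run finishes.
  // Returns how many runs were performed before the loop exited.
  def runUntilNotActive(endStates: Iterator[State]): Int = {
    val state = new AtomicReference[State](ACTIVE)
    val stateUpdate = new UnaryOperator[State] {
      override def apply(s: State): State = s match {
        case RECONFIGURING => ACTIVE // a run that ended to reconfigure is restarted
        case other         => other  // any other state is left unchanged
      }
    }
    var runs = 0
    var keepRunning = true
    while (keepRunning) {
      runs += 1
      state.set(endStates.next()) // stands in for runContinuous(...)
      keepRunning = state.updateAndGet(stateUpdate) == ACTIVE
    }
    runs
  }
}
```

Two reconfigurations followed by a termination therefore give three runs in total.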

The source of runContinuous is as follows:

    /**
     * Do a continuous run.
     * @param sparkSessionForQuery Isolated [[SparkSession]] to run the continuous query with.
     */
    private def runContinuous(sparkSessionForQuery: SparkSession): Unit = {
      // A list of attributes that will need to be updated.
      val replacements = new ArrayBuffer[(Attribute, Attribute)]
      // Translate from continuous relation to the underlying data source.
      var nextSourceId = 0
      continuousSources = logicalPlan.collect {
        case ContinuousExecutionRelation(dataSource, extraReaderOptions, output) =>
          val metadataPath = s"$resolvedCheckpointRoot/sources/$nextSourceId"
          nextSourceId += 1

          dataSource.createContinuousReader(
            java.util.Optional.empty[StructType](),
            metadataPath,
            new DataSourceOptions(extraReaderOptions.asJava))
      }
      uniqueSources = continuousSources.distinct

      val offsets = getStartOffsets(sparkSessionForQuery)

      var insertedSourceId = 0
      val withNewSources = logicalPlan transform {
        case ContinuousExecutionRelation(source, options, output) =>
          val reader = continuousSources(insertedSourceId)
          insertedSourceId += 1
          val newOutput = reader.readSchema().toAttributes

          assert(output.size == newOutput.size,
            s"Invalid reader: ${Utils.truncatedString(output, ",")} != " +
              s"${Utils.truncatedString(newOutput, ",")}")
          replacements ++= output.zip(newOutput)

          val loggedOffset = offsets.offsets(0)
          val realOffset = loggedOffset.map(off => reader.deserializeOffset(off.json))
          reader.setStartOffset(java.util.Optional.ofNullable(realOffset.orNull))
          StreamingDataSourceV2Relation(newOutput, source, options, reader)
      }

      // Rewire the plan to use the new attributes that were returned by the source.
      val replacementMap = AttributeMap(replacements)
      val triggerLogicalPlan = withNewSources transformAllExpressions {
        case a: Attribute if replacementMap.contains(a) =>
          replacementMap(a).withMetadata(a.metadata)
        case (_: CurrentTimestamp | _: CurrentDate) =>
          throw new IllegalStateException(
            "CurrentTimestamp and CurrentDate not yet supported for continuous processing")
      }

      val writer = sink.createStreamWriter(
        s"$runId",
        triggerLogicalPlan.schema,
        outputMode,
        new DataSourceOptions(extraOptions.asJava))
      val withSink = WriteToContinuousDataSource(writer, triggerLogicalPlan)

      val reader = withSink.collect {
        case StreamingDataSourceV2Relation(_, _, _, r: ContinuousReader) => r
      }.head

      reportTimeTaken("queryPlanning") {
        lastExecution = new IncrementalExecution(
          sparkSessionForQuery,
          withSink,
          outputMode,
          checkpointFile("state"),
          runId,
          currentBatchId,
          offsetSeqMetadata)
        lastExecution.executedPlan // Force the lazy generation of execution plan
      }

      sparkSessionForQuery.sparkContext.setLocalProperty(
        StreamExecution.IS_CONTINUOUS_PROCESSING, true.toString)
      sparkSessionForQuery.sparkContext.setLocalProperty(
        ContinuousExecution.START_EPOCH_KEY, currentBatchId.toString)
      // Add another random ID on top of the run ID, to distinguish epoch coordinators across
      // reconfigurations.
      val epochCoordinatorId = s"$runId--${UUID.randomUUID}"
      currentEpochCoordinatorId = epochCoordinatorId
      sparkSessionForQuery.sparkContext.setLocalProperty(
        ContinuousExecution.EPOCH_COORDINATOR_ID_KEY, epochCoordinatorId)
      sparkSessionForQuery.sparkContext.setLocalProperty(
        ContinuousExecution.EPOCH_INTERVAL_KEY,
        trigger.asInstanceOf[ContinuousTrigger].intervalMs.toString)

      // Use the parent Spark session for the endpoint since it's where this query ID is registered.
      val epochEndpoint =
        EpochCoordinatorRef.create(
          writer, reader, this, epochCoordinatorId, currentBatchId, sparkSession, SparkEnv.get)
      val epochUpdateThread = new Thread(new Runnable {
        override def run: Unit = {
          try {
            triggerExecutor.execute(() => {
              startTrigger()

              if (reader.needsReconfiguration() && state.compareAndSet(ACTIVE, RECONFIGURING)) {
                if (queryExecutionThread.isAlive) {
                  queryExecutionThread.interrupt()
                }
                false
              } else if (isActive) {
                currentBatchId = epochEndpoint.askSync[Long](IncrementAndGetEpoch)
                logInfo(s"New epoch $currentBatchId is starting.")
                true
              } else {
                false
              }
            })
          } catch {
            case _: InterruptedException =>
              // Cleanly stop the query.
              return
          }
        }
      }, s"epoch update thread for $prettyIdString")

      try {
        epochUpdateThread.setDaemon(true)
        epochUpdateThread.start()

        reportTimeTaken("runContinuous") {
          SQLExecution.withNewExecutionId(sparkSessionForQuery, lastExecution) {
            lastExecution.executedPlan.execute()
          }
        }
      } catch {
        case t: Throwable
            if StreamExecution.isInterruptionException(t) && state.get() == RECONFIGURING =>
          logInfo(s"Query $id ignoring exception from reconfiguring: $t")
          // interrupted by reconfiguration - swallow exception so we can restart the query
      } finally {
        // The above execution may finish before getting interrupted, for example, a Spark job having
        // 0 partitions will complete immediately. Then the interrupted status will sneak here.
        //
        // To handle this case, we do the two things here:
        //
        // 1. Clean up the resources in `queryExecutionThread.runUninterruptibly`. This may increase
        //    the waiting time of `stop` but should be minor because the operations here are very fast
        //    (just sending an RPC message in the same process and stopping a very simple thread).
        // 2. Clear the interrupted status at the end so that it won't impact the `runContinuous`
        //    call. We may clear the interrupted status set by `stop`, but it doesn't affect the query
        //    termination because `runActivatedStream` will check `state` and exit accordingly.
        queryExecutionThread.runUninterruptibly {
          try {
            epochEndpoint.askSync[Unit](StopContinuousExecutionWrites)
          } finally {
            SparkEnv.get.rpcEnv.stop(epochEndpoint)
            epochUpdateThread.interrupt()
            epochUpdateThread.join()
            stopSources()
            // The following line must be the last line because it may fail if SparkContext is stopped
            sparkSession.sparkContext.cancelJobGroup(runId.toString)
          }
        }
        Thread.interrupted()
      }
    }

// TODO: pseudocode, to be tidied up later

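Summary

In short, once a trigger is set, a long-running batch in Structured Streaming does not cause subsequent jobs to pile up, but it does delay the submit time of subsequent jobs.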
