Spark Tutorial (11): Configuring a Spark program for local-mode execution, cluster-mode execution, and Oozie/Hue execution

Running the Spark SQL program locally:

package com.fc

//import common.util.{phoenixConnectMode, timeUtil}
import org.apache.spark.sql.SQLContext
import org.apache.spark.sql.functions.col
import org.apache.spark.{SparkConf, SparkContext}

/*
 Runs once per day.
 */
object costDay {

  def main(args: Array[String]): Unit = {
    // "local" runs the driver and a single executor thread inside this JVM.
    val conf = new SparkConf()
      .setAppName("fdsf")
      .setMaster("local")
    val sc = new SparkContext(conf)
    val sqlContext = new SQLContext(sc)

    // val df = sqlContext.load(
    //   "org.apache.phoenix.spark"
    //   , Map("table" -> "ASSET_NORMAL"
    //   , "zkUrl" -> "node3,node4,node5:2181")
    // )

    val tableName = "ASSET_NORMAL"
    val columes = Array(
      "ID",
      "ASSET_ID",
      "ASSET_NAME",
      "ASSET_FIRST_DEGREE_ID",
      "ASSET_FIRST_DEGREE_NAME",
      "ASSET_SECOND_DEGREE_ID",
      "ASSET_SECOND_DEGREE_NAME",
      "GB_DEGREE_ID",
      "GB_DEGREE_NAME",
      "ASSET_USE_FIRST_DEGREE_ID",
      "ASSET_USE_FIRST_DEGREE_NAME",
      "ASSET_USE_SECOND_DEGREE_ID",
      "ASSET_USE_SECOND_DEGREE_NAME",
      "MANAGEMENT_TYPE_ID",
      "MANAGEMENT_TYPE_NAME",
      "ASSET_MODEL",
      "FACTORY_NUMBER",
      "ASSET_COUNTRY_ID",
      "ASSET_COUNTRY_NAME",
      "MANUFACTURER",
      "SUPPLIER",
      "SUPPLIER_TEL",
      "ORIGINAL_VALUE",
      "USE_DEPARTMENT_ID",
      "USE_DEPARTMENT_NAME",
      "USER_ID",
      "USER_NAME",
      "ASSET_LOCATION_OF_PARK_ID",
      "ASSET_LOCATION_OF_PARK_NAME",
      "ASSET_LOCATION_OF_BUILDING_ID",
      "ASSET_LOCATION_OF_BUILDING_NAME",
      "ASSET_LOCATION_OF_ROOM_ID",
      "ASSET_LOCATION_OF_ROOM_NUMBER",
      "PRODUCTION_DATE",
      "ACCEPTANCE_DATE",
      "REQUISITION_DATE",
      "PERFORMANCE_INDEX",
      "ASSET_STATE_ID",
      "ASSET_STATE_NAME",
      "INSPECTION_TYPE_ID",
      "INSPECTION_TYPE_NAME",
      "SEAL_DATE",
      "SEAL_CAUSE",
      "COST_ITEM_ID",
      "COST_ITEM_NAME",
      "ITEM_COMMENTS",
      "UNSEAL_DATE",
      "SCRAP_DATE",
      "PURCHASE_NUMBER",
      "WARRANTY_PERIOD",
      "DEPRECIABLE_LIVES_ID",
      "DEPRECIABLE_LIVES_NAME",
      "MEASUREMENT_UNITS_ID",
      "MEASUREMENT_UNITS_NAME",
      "ANNEX",
      "REMARK",
      "ACCOUNTING_TYPE_ID",
      "ACCOUNTING_TYPE_NAME",
      "SYSTEM_TYPE_ID",
      "SYSTEM_TYPE_NAME",
      "ASSET_ID_PARENT",
      "CLASSIFIED_LEVEL_ID",
      "CLASSIFIED_LEVEL_NAME",
      "ASSET_PICTURE",
      "MILITARY_SPECIAL_CODE",
      "CHECK_CYCLE_ID",
      "CHECK_CYCLE_NAME",
      "CHECK_DATE",
      "CHECK_EFFECTIVE_DATE",
      "CHECK_MODE_ID",
      "CHECK_MODE_NAME",
      "CHECK_DEPARTMENT_ID",
      "CHECK_DEPARTMENT_NAME",
      "RENT_STATUS_ID",
      "RENT_STATUS_NAME",
      "STORAGE_TIME",
      "UPDATE_USER",
      "UPDATE_TIME",
      "IS_ON_PROCESS",
      "IS_DELETED",
      "FIRST_DEPARTMENT_ID",
      "FIRST_DEPARTMENT_NAME",
      "SECOND_DEPARTMENT_ID",
      "SECOND_DEPARTMENT_NAME",
      "CREATE_USER",
      "CREATE_TIME"
    )

    // Load the Phoenix table as a DataFrame and drop rows without a department.
    val df = phoenixConnectMode.getMode1(sqlContext, tableName, columes)
      .filter(col("USE_DEPARTMENT_ID").isNotNull)
    df.registerTempTable("asset_normal")
    // df.show(false)

    // Daily cost within the depreciable life: 95% of the original value spread over years * 365 days.
    def costingWithin(originalValue: Double, years: Int): Double = (originalValue*0.95)/(years*365)
    sqlContext.udf.register("costingWithin", costingWithin _)

    // Daily cost beyond the depreciable life: the remaining 5% spread over 365 days.
    def costingBeyond(originalValue: Double): Double = originalValue*0.05/365
    sqlContext.udf.register("costingBeyond", costingBeyond _)

    // True while the acceptance date plus the depreciable life is still in the future.
    def expire(acceptanceDate: String, years: Int): Boolean = timeUtil.dateStrAddYears2TimeStamp(acceptanceDate, timeUtil.SECOND_TIME_FORMAT, years) > System.currentTimeMillis()
    sqlContext.udf.register("expire", expire _)

    val costDay = sqlContext
      .sql(
        "select " +
          "ID" +
          ",USE_DEPARTMENT_ID as FIRST_DEPARTMENT_ID" +
          ",case when expire(ACCEPTANCE_DATE, DEPRECIABLE_LIVES_NAME) then costingWithin(ORIGINAL_VALUE, DEPRECIABLE_LIVES_NAME) else costingBeyond(ORIGINAL_VALUE) end as ACTUAL_COST" +
          ",ORIGINAL_VALUE" +
          ",current_timestamp() as GENERATION_TIME" +
          " from asset_normal"
      )

    costDay.printSchema()
    println(costDay.count())
    // Has no effect: the returned Column is discarded.
    costDay.col("ORIGINAL_VALUE")
    costDay.describe("ORIGINAL_VALUE").show()
    costDay.show(false)

    // costDay.write
    //   .format("org.apache.phoenix.spark")
    //   .mode("overwrite")
    //   .option("table", "ASSET_FINANCIAL_DETAIL_DAY")
    //   .option("zkUrl", "node3,node4,node5:2181")
    //   .save()
  }
}
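
To make the cost rule in the three UDFs easier to check by hand, here is a minimal standalone sketch of the same arithmetic without Spark or Phoenix. The object name DepreciationSketch, the helper withinLife, and the date pattern "yyyy-MM-dd HH:mm:ss" are assumptions made for illustration; the article does not show timeUtil or the value of SECOND_TIME_FORMAT, so the real helper may behave differently.

```scala
import java.time.{LocalDateTime, ZoneId}
import java.time.format.DateTimeFormatter

object DepreciationSketch {
  // Assumed pattern; the real timeUtil.SECOND_TIME_FORMAT is not shown in the article.
  private val formatter = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss")

  // Same formula as the costingWithin UDF: 95% of the value over years * 365 days.
  def costingWithin(originalValue: Double, years: Int): Double =
    (originalValue * 0.95) / (years * 365)

  // Same formula as the costingBeyond UDF: the remaining 5% over 365 days.
  def costingBeyond(originalValue: Double): Double =
    originalValue * 0.05 / 365

  // Hypothetical stand-in for the expire UDF: true while
  // acceptanceDate + years is still later than nowMillis.
  def withinLife(acceptanceDate: String, years: Int, nowMillis: Long): Boolean = {
    val end = LocalDateTime.parse(acceptanceDate, formatter).plusYears(years.toLong)
    end.atZone(ZoneId.systemDefault()).toInstant.toEpochMilli > nowMillis
  }

  // Mirrors the CASE WHEN expression in the SQL statement.
  def actualCost(originalValue: Double, acceptanceDate: String, years: Int, nowMillis: Long): Double =
    if (withinLife(acceptanceDate, years, nowMillis)) costingWithin(originalValue, years)
    else costingBeyond(originalValue)

  def main(args: Array[String]): Unit = {
    val now = System.currentTimeMillis()
    // An asset accepted long ago falls into the "beyond" branch.
    println(actualCost(36500.0, "2000-01-01 00:00:00", 5, now))
  }
}
```

Passing the current time in as a parameter, instead of calling System.currentTimeMillis() inside the function, keeps the rule deterministic and easy to test. The UDF in the article reads the clock directly.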

For the execution result, see "Spark Tutorial (10): Reading Phoenix data with Spark SQL and computing locally". The output is as follows:

// :: INFO spark.SparkContext: Running Spark version

// :: INFO spark.SecurityManager: Changing view acls to: cf_pc

// :: INFO spark.SecurityManager: Changing modify acls to: cf_pc

// :: INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(cf_pc); users with modify permissions: Set(cf_pc)

// :: INFO util.Utils: Successfully started service .

// :: INFO slf4j.Slf4jLogger: Slf4jLogger started

// :: INFO Remoting: Starting remoting

// :: INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkDriverActorSystem@10.200.74.155:54708]

// :: INFO Remoting: Remoting now listens on addresses: [akka.tcp://sparkDriverActorSystem@10.200.74.155:54708]

// :: INFO util.Utils: Successfully started service .

// :: INFO spark.SparkEnv: Registering MapOutputTracker

// :: INFO spark.SparkEnv: Registering BlockManagerMaster

// :: INFO storage.DiskBlockManager: Created local directory at C:\Users\cf_pc\AppData\Local\Temp\blockmgr-9012d6b9-eab0-41f0-8e9e-b63c3fe7fb09

// :: INFO storage.MemoryStore: MemoryStore started with capacity 478.2 MB

// :: INFO spark.SparkEnv: Registering OutputCommitCoordinator

// :: INFO server.Server: jetty-.y.z-SNAPSHOT

// :: INFO server.AbstractConnector: Started SelectChannelConnector@

// :: INFO util.Utils: Successfully started service .

// :: INFO ui.SparkUI: Started SparkUI at http://10.200.74.155:4040

// :: INFO executor.Executor: Starting executor ID driver on host localhost

// :: INFO util.Utils: Successfully started service .

// :: INFO netty.NettyBlockTransferService: Server created on

// :: INFO storage.BlockManagerMaster: Trying to register BlockManager

// :: INFO storage.BlockManagerMasterEndpoint: Registering block manager localhost: with )

// :: INFO storage.BlockManagerMaster: Registered BlockManager

// :: INFO storage.MemoryStore: Block broadcast_0 stored as values in memory (estimated size 247.5 KB, free 477.9 MB)

// :: INFO storage.MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 15.4 KB, free 477.9 MB)

// :: INFO storage.BlockManagerInfo: Added broadcast_0_piece0 (size: 15.4 KB, free: 478.2 MB)

// :: INFO spark.SparkContext: Created broadcast from newAPIHadoopRDD at PhoenixRDD.scala:

// :: INFO jdbc.PhoenixEmbeddedDriver$ConnectionInfo: Trying to connect to a secure cluster as with keytab /hbase

// :: INFO jdbc.PhoenixEmbeddedDriver$ConnectionInfo: Successful login to secure cluster

// :: INFO log.QueryLoggerDisruptor: Starting QueryLoggerDisruptor , waitStrategy=BlockingWaitStrategy, exceptionHandler=org.apache.phoenix.log.QueryLoggerDefaultExceptionHandler@4f169009...

// :: INFO query.ConnectionQueryServicesImpl: An instance of ConnectionQueryServices was created.

// :: INFO zookeeper.RecoverableZooKeeper: Process identifier=hconnection-

// :: INFO zookeeper.ZooKeeper: Client environment:zookeeper.version=-, built on // : GMT

// :: INFO zookeeper.ZooKeeper: Client environment:host.name=DESKTOP-0CDQ4PM

// :: INFO zookeeper.ZooKeeper: Client environment:java.version=1.8.0_212

// :: INFO zookeeper.ZooKeeper: Client environment:java.vendor=Oracle Corporation

// :: INFO zookeeper.ZooKeeper: Client environment:java.home=C:\3rd\Java\jdk1.8.0_212\jre

// :: INFO zookeeper.ZooKeeper: Client environment:java.class.path=(long list of JDK, Scala, and Maven repository jars omitted)

// :: INFO zookeeper.ZooKeeper: Client environment:java.library.path=C:\3rd\Java\jdk1..0_212\bin;C:\WINDOWS\Sun\Java\bin;C:\WINDOWS\system32;C:\WINDOWS;C:\3rd\Anaconda2;C:\3rd\Anaconda2\Library\mingw-w64\bin;C:\3rd\Anaconda2\Library\usr\bin;C:\3rd\Anaconda2\Library\bin;C:\3rd\Anaconda2\Scripts;C:\Program Files (x86)\Intel\Intel(R) Management Engine Components\iCLS\;C:\Program Files\Intel\Intel(R) Management Engine Components\iCLS\;C:\WINDOWS\system32;C:\WINDOWS;C:\WINDOWS\System32\Wbem;C:\WINDOWS\System32\WindowsPowerShell\v1.\;C:\Program Files (x86)\Intel\Intel(R) Management Engine Components\DAL;C:\Program Files\Intel\Intel(R) Management Engine Components\DAL;C:\Program Files (x86)\Intel\Intel(R) Management Engine Components\IPT;C:\Program Files\Intel\Intel(R) Management Engine Components\IPT;C:\3rd\MATLAB\R2016a\runtime\win64;C:\3rd\MATLAB\R2016a\bin;C:\3rd\MATLAB\R2016a\polyspace\bin;C:\WINDOWS\System32\OpenSSH\;C:\Program Files\TortoiseSVN\bin;C:\Users\cf_pc\Documents\Caffe\Release;C:\3rd\scala\scala-\bin;C:\3rd\Java\jdk1..0_212\bin;C:\development\apache-maven-\bin;C:\3rd\mysql--winx64\bin;C:\Program Files\Intel\WiFi\bin\;C:\Program Files\Common Files\Intel\WirelessCommon\;C:\3rd\hadoop-common-\bin;C:\Users\cf_pc\AppData\Local\Microsoft\WindowsApps;;C:\Program Files\Microsoft VS Code\bin;.

// :: INFO zookeeper.ZooKeeper: Client environment:java.io.tmpdir=C:\Users\cf_pc\AppData\Local\Temp\

// :: INFO zookeeper.ZooKeeper: Client environment:java.compiler=<NA>

// :: INFO zookeeper.ZooKeeper: Client environment:os.name=Windows

// :: INFO zookeeper.ZooKeeper: Client environment:os.arch=amd64

// :: INFO zookeeper.ZooKeeper: Client environment:os.version=10.0

// :: INFO zookeeper.ZooKeeper: Client environment:user.name=cf_pc

// :: INFO zookeeper.ZooKeeper: Client environment:user.home=C:\Users\cf_pc

// :: INFO zookeeper.ZooKeeper: Client environment:user.dir=C:\development\cf\scalapp

// :: INFO zookeeper.ZooKeeper: Initiating client connection, connectString=node3: sessionTimeout= watcher=hconnection-0x4bc59b270x0, quorum=node3:, baseZNode=/hbase

// :: INFO zookeeper.ClientCnxn: Opening socket connection to server node3/. Will not attempt to authenticate using SASL (unknown error)

// :: INFO zookeeper.ClientCnxn: Socket connection established to node3/, initiating session

// :: INFO zookeeper.ClientCnxn: Session establishment complete on server node3/, sessionid =

// :: INFO query.ConnectionQueryServicesImpl: HConnection established. Stacktrace )

org.apache.phoenix.util.LogUtil.getCallerStackTrace(LogUtil.java:)

org.apache.phoenix.query.ConnectionQueryServicesImpl.openConnection(ConnectionQueryServicesImpl.java:)

org.apache.phoenix.query.ConnectionQueryServicesImpl.access$(ConnectionQueryServicesImpl.java:)

org.apache.phoenix.query.ConnectionQueryServicesImpl$.call(ConnectionQueryServicesImpl.java:)

org.apache.phoenix.query.ConnectionQueryServicesImpl$.call(ConnectionQueryServicesImpl.java:)

org.apache.phoenix.util.PhoenixContextExecutor.call(PhoenixContextExecutor.java:)

org.apache.phoenix.query.ConnectionQueryServicesImpl.init(ConnectionQueryServicesImpl.java:)

org.apache.phoenix.jdbc.PhoenixDriver.getConnectionQueryServices(PhoenixDriver.java:)

org.apache.phoenix.jdbc.PhoenixEmbeddedDriver.createConnection(PhoenixEmbeddedDriver.java:)

org.apache.phoenix.jdbc.PhoenixDriver.connect(PhoenixDriver.java:)

java.sql.DriverManager.getConnection(DriverManager.java:)

java.sql.DriverManager.getConnection(DriverManager.java:)

org.apache.phoenix.mapreduce.util.ConnectionUtil.getConnection(ConnectionUtil.java:)

org.apache.phoenix.mapreduce.util.ConnectionUtil.getInputConnection(ConnectionUtil.java:)

org.apache.phoenix.mapreduce.util.PhoenixConfigurationUtil.getSelectColumnMetadataList(PhoenixConfigurationUtil.java:)

org.apache.phoenix.spark.PhoenixRDD.toDataFrame(PhoenixRDD.scala:)

org.apache.phoenix.spark.SparkSqlContextFunctions.phoenixTableAsDataFrame(SparkSqlContextFunctions.scala:)

com.fc.phoenixConnectMode$.getMode1(phoenixConnectMode.scala:)

com.fc.costDay$.main(costDay.scala:)

com.fc.costDay.main(costDay.scala)

// :: INFO Configuration.deprecation: hadoop.native.lib is deprecated. Instead, use io.native.lib.available

// :: INFO mapreduce.PhoenixInputFormat: UseSelectColumns=, selectColumnList=ID,ASSET_ID,ASSET_NAME,ASSET_FIRST_DEGREE_ID,ASSET_FIRST_DEGREE_NAME,ASSET_SECOND_DEGREE_ID,ASSET_SECOND_DEGREE_NAME,GB_DEGREE_ID,GB_DEGREE_NAME,ASSET_USE_FIRST_DEGREE_ID,ASSET_USE_FIRST_DEGREE_NAME,ASSET_USE_SECOND_DEGREE_ID,ASSET_USE_SECOND_DEGREE_NAME,MANAGEMENT_TYPE_ID,MANAGEMENT_TYPE_NAME,ASSET_MODEL,FACTORY_NUMBER,ASSET_COUNTRY_ID,ASSET_COUNTRY_NAME,MANUFACTURER,SUPPLIER,SUPPLIER_TEL,ORIGINAL_VALUE,USE_DEPARTMENT_ID,USE_DEPARTMENT_NAME,USER_ID,USER_NAME,ASSET_LOCATION_OF_PARK_ID,ASSET_LOCATION_OF_PARK_NAME,ASSET_LOCATION_OF_BUILDING_ID,ASSET_LOCATION_OF_BUILDING_NAME,ASSET_LOCATION_OF_ROOM_ID,ASSET_LOCATION_OF_ROOM_NUMBER,PRODUCTION_DATE,ACCEPTANCE_DATE,REQUISITION_DATE,PERFORMANCE_INDEX,ASSET_STATE_ID,ASSET_STATE_NAME,INSPECTION_TYPE_ID,INSPECTION_TYPE_NAME,SEAL_DATE,SEAL_CAUSE,COST_ITEM_ID,COST_ITEM_NAME,ITEM_COMMENTS,UNSEAL_DATE,SCRAP_DATE,PURCHASE_NUMBER,WARRANTY_PERIOD,DEPRECIABLE_LIVES_ID,DEPRECIABLE_LIVES_NAME,MEASUREMENT_UNITS_ID,MEASUREMENT_UNITS_NAME,ANNEX,REMARK,ACCOUNTING_TYPE_ID,ACCOUNTING_TYPE_NAME,SYSTEM_TYPE_ID,SYSTEM_TYPE_NAME,ASSET_ID_PARENT,CLASSIFIED_LEVEL_ID,CLASSIFIED_LEVEL_NAME,ASSET_PICTURE,MILITARY_SPECIAL_CODE,CHECK_CYCLE_ID,CHECK_CYCLE_NAME,CHECK_DATE,CHECK_EFFECTIVE_DATE,CHECK_MODE_ID,CHECK_MODE_NAME,CHECK_DEPARTMENT_ID,CHECK_DEPARTMENT_NAME,RENT_STATUS_ID,RENT_STATUS_NAME,STORAGE_TIME,UPDATE_USER,UPDATE_TIME,IS_ON_PROCESS,IS_DELETED,FIRST_DEPARTMENT_ID,FIRST_DEPARTMENT_NAME,SECOND_DEPARTMENT_ID,SECOND_DEPARTMENT_NAME,CREATE_USER,CREATE_TIME

root

|-- ID: string (nullable = true)

|-- FIRST_DEPARTMENT_ID: string (nullable = true)

|-- ACTUAL_COST: double (nullable = true)

|-- ORIGINAL_VALUE: double (nullable = true)

|-- GENERATION_TIME: timestamp (nullable = false)

// :: INFO Configuration.deprecation: io.bytes.per.checksum is deprecated. Instead, use dfs.bytes-per-checksum

// :: INFO jdbc.PhoenixEmbeddedDriver$ConnectionInfo: Trying to connect to a secure cluster as with keytab /hbase

// :: INFO jdbc.PhoenixEmbeddedDriver$ConnectionInfo: Successful login to secure cluster

// :: INFO Configuration.deprecation: io.bytes.per.checksum is deprecated. Instead, use dfs.bytes-per-checksum

// :: INFO jdbc.PhoenixEmbeddedDriver$ConnectionInfo: Trying to connect to a secure cluster as with keytab /hbase

// :: INFO jdbc.PhoenixEmbeddedDriver$ConnectionInfo: Successful login to secure cluster

// :: INFO mapreduce.PhoenixInputFormat: UseSelectColumns=, selectColumnList=ID,ASSET_ID,ASSET_NAME,ASSET_FIRST_DEGREE_ID,ASSET_FIRST_DEGREE_NAME,ASSET_SECOND_DEGREE_ID,ASSET_SECOND_DEGREE_NAME,GB_DEGREE_ID,GB_DEGREE_NAME,ASSET_USE_FIRST_DEGREE_ID,ASSET_USE_FIRST_DEGREE_NAME,ASSET_USE_SECOND_DEGREE_ID,ASSET_USE_SECOND_DEGREE_NAME,MANAGEMENT_TYPE_ID,MANAGEMENT_TYPE_NAME,ASSET_MODEL,FACTORY_NUMBER,ASSET_COUNTRY_ID,ASSET_COUNTRY_NAME,MANUFACTURER,SUPPLIER,SUPPLIER_TEL,ORIGINAL_VALUE,USE_DEPARTMENT_ID,USE_DEPARTMENT_NAME,USER_ID,USER_NAME,ASSET_LOCATION_OF_PARK_ID,ASSET_LOCATION_OF_PARK_NAME,ASSET_LOCATION_OF_BUILDING_ID,ASSET_LOCATION_OF_BUILDING_NAME,ASSET_LOCATION_OF_ROOM_ID,ASSET_LOCATION_OF_ROOM_NUMBER,PRODUCTION_DATE,ACCEPTANCE_DATE,REQUISITION_DATE,PERFORMANCE_INDEX,ASSET_STATE_ID,ASSET_STATE_NAME,INSPECTION_TYPE_ID,INSPECTION_TYPE_NAME,SEAL_DATE,SEAL_CAUSE,COST_ITEM_ID,COST_ITEM_NAME,ITEM_COMMENTS,UNSEAL_DATE,SCRAP_DATE,PURCHASE_NUMBER,WARRANTY_PERIOD,DEPRECIABLE_LIVES_ID,DEPRECIABLE_LIVES_NAME,MEASUREMENT_UNITS_ID,MEASUREMENT_UNITS_NAME,ANNEX,REMARK,ACCOUNTING_TYPE_ID,ACCOUNTING_TYPE_NAME,SYSTEM_TYPE_ID,SYSTEM_TYPE_NAME,ASSET_ID_PARENT,CLASSIFIED_LEVEL_ID,CLASSIFIED_LEVEL_NAME,ASSET_PICTURE,MILITARY_SPECIAL_CODE,CHECK_CYCLE_ID,CHECK_CYCLE_NAME,CHECK_DATE,CHECK_EFFECTIVE_DATE,CHECK_MODE_ID,CHECK_MODE_NAME,CHECK_DEPARTMENT_ID,CHECK_DEPARTMENT_NAME,RENT_STATUS_ID,RENT_STATUS_NAME,STORAGE_TIME,UPDATE_USER,UPDATE_TIME,IS_ON_PROCESS,IS_DELETED,FIRST_DEPARTMENT_ID,FIRST_DEPARTMENT_NAME,SECOND_DEPARTMENT_ID,SECOND_DEPARTMENT_NAME,CREATE_USER,CREATE_TIME

// :: INFO mapreduce.PhoenixInputFormat: Select Statement: SELECT "."CREATE_TIME" FROM ASSET_NORMAL

// :: INFO zookeeper.RecoverableZooKeeper: Process identifier=hconnection-

// :: INFO zookeeper.ZooKeeper: Initiating client connection, connectString=node3: sessionTimeout= watcher=hconnection-0x226172700x0, quorum=node3:, baseZNode=/hbase

// :: INFO zookeeper.ClientCnxn: Opening socket connection to server node3/. Will not attempt to authenticate using SASL (unknown error)

// :: INFO zookeeper.ClientCnxn: Socket connection established to node3/, initiating session

// :: INFO zookeeper.ClientCnxn: Session establishment complete on server node3/, sessionid =

// :: INFO util.RegionSizeCalculator: Calculating region sizes for table "IDX_ASSET_NORMAL".

// :: INFO client.ConnectionManager$HConnectionImplementation: Closing master protocol: MasterService

// :: INFO client.ConnectionManager$HConnectionImplementation: Closing zookeeper sessionid=0x36ca2ccfed69559

// :: INFO zookeeper.ZooKeeper: Session: 0x36ca2ccfed69559 closed

// :: INFO zookeeper.ClientCnxn: EventThread shut down

// :: INFO spark.SparkContext: Starting job: count at costDay.scala:

// :: INFO scheduler.DAGScheduler: Registering RDD (count at costDay.scala:)

// :: INFO scheduler.DAGScheduler: Got job (count at costDay.scala:) with output partitions

// :: INFO scheduler.DAGScheduler: Final stage: ResultStage (count at costDay.scala:)

// :: INFO scheduler.DAGScheduler: Parents of final stage: List(ShuffleMapStage )

// :: INFO scheduler.DAGScheduler: Missing parents: List(ShuffleMapStage )

// :: INFO scheduler.DAGScheduler: Submitting ShuffleMapStage (MapPartitionsRDD[] at count at costDay.scala:), which has no missing parents

// :: INFO storage.MemoryStore: Block broadcast_1 stored as values in memory (estimated size 24.8 KB, free 477.9 MB)

// :: INFO storage.MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 9.5 KB, free 477.9 MB)

// :: INFO storage.BlockManagerInfo: Added broadcast_1_piece0 (size: 9.5 KB, free: 478.1 MB)

// :: INFO spark.SparkContext: Created broadcast from broadcast at DAGScheduler.scala:

// :: INFO scheduler.DAGScheduler: Submitting missing tasks from ShuffleMapStage (MapPartitionsRDD[] at count at costDay.scala:) (first tasks are ))

// :: INFO scheduler.TaskSchedulerImpl: Adding task set tasks

// :: INFO scheduler.TaskSetManager: Starting task , localhost, executor driver, partition , ANY, bytes)

// :: INFO executor.Executor: Running task )

// :: INFO rdd.NewHadoopRDD: Input split: org.apache.phoenix.mapreduce.PhoenixInputSplit@20b488

// :: INFO Configuration.deprecation: io.bytes.per.checksum is deprecated. Instead, use dfs.bytes-per-checksum

// :: INFO jdbc.PhoenixEmbeddedDriver$ConnectionInfo: Trying to connect to a secure cluster as with keytab /hbase

// :: INFO jdbc.PhoenixEmbeddedDriver$ConnectionInfo: Successful login to secure cluster

// :: INFO codegen.GeneratePredicate: Code generated in 309.4486 ms

// :: INFO codegen.GenerateUnsafeProjection: Code generated in 20.3535 ms

// :: INFO codegen.GenerateMutableProjection: Code generated in 10.5156 ms

// :: INFO codegen.GenerateMutableProjection: Code generated in 10.4614 ms

// :: INFO codegen.GenerateUnsafeRowJoiner: Code generated in 6.8774 ms

// :: INFO codegen.GenerateUnsafeProjection: Code generated in 6.7907 ms

// :: INFO executor.Executor: Finished task ). bytes result sent to driver

// :: INFO scheduler.TaskSetManager: Finished task ) ms on localhost (executor driver) (/)

// :: INFO scheduler.TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

// :: INFO scheduler.DAGScheduler: ShuffleMapStage (count at costDay.scala:) finished in 10.745 s

// :: INFO scheduler.DAGScheduler: looking for newly runnable stages

// :: INFO scheduler.DAGScheduler: running: Set()

// :: INFO scheduler.DAGScheduler: waiting: Set(ResultStage )

// :: INFO scheduler.DAGScheduler: failed: Set()

// :: INFO scheduler.DAGScheduler: Submitting ResultStage (MapPartitionsRDD[] at count at costDay.scala:), which has no missing parents

// :: INFO storage.MemoryStore: Block broadcast_2 stored as values in memory (estimated size 25.3 KB, free 477.9 MB)

// :: INFO storage.MemoryStore: Block broadcast_2_piece0 stored as bytes in memory (estimated size 9.8 KB, free 477.8 MB)

// :: INFO storage.BlockManagerInfo: Added broadcast_2_piece0 (size: 9.8 KB, free: 478.1 MB)

// :: INFO spark.SparkContext: Created broadcast from broadcast at DAGScheduler.scala:

// :: INFO scheduler.DAGScheduler: Submitting missing tasks from ResultStage (MapPartitionsRDD[] at count at costDay.scala:) (first tasks are ))

// :: INFO scheduler.TaskSchedulerImpl: Adding task set tasks

// :: INFO scheduler.TaskSetManager: Starting task , localhost, executor driver, partition , NODE_LOCAL, bytes)

// :: INFO executor.Executor: Running task )

// :: INFO storage.ShuffleBlockFetcherIterator: Getting non-empty blocks out of blocks

// :: INFO storage.ShuffleBlockFetcherIterator: Started remote fetches ms

// :: INFO codegen.GenerateMutableProjection: Code generated in 22.44 ms

// :: INFO codegen.GenerateMutableProjection: Code generated in 13.992 ms

// :: INFO executor.Executor: Finished task ). bytes result sent to driver

// :: INFO scheduler.TaskSetManager: Finished task ) ms on localhost (executor driver) (/)

// :: INFO scheduler.TaskSchedulerImpl: Removed TaskSet 1.0, whose tasks have all completed, from pool

// :: INFO scheduler.DAGScheduler: ResultStage (count at costDay.scala:) finished in 0.205 s

// :: INFO scheduler.DAGScheduler: Job finished: count at costDay.scala:, took 11.157661 s

// :: INFO spark.SparkContext: Starting job: describe at costDay.scala:

// :: INFO scheduler.DAGScheduler: Registering RDD (describe at costDay.scala:)

// :: INFO scheduler.DAGScheduler: Got job (describe at costDay.scala:) with output partitions

// :: INFO scheduler.DAGScheduler: Final stage: ResultStage (describe at costDay.scala:)

// :: INFO scheduler.DAGScheduler: Parents of final stage: List(ShuffleMapStage )

// :: INFO scheduler.DAGScheduler: Missing parents: List(ShuffleMapStage )

// :: INFO scheduler.DAGScheduler: Submitting ShuffleMapStage (MapPartitionsRDD[] at describe at costDay.scala:), which has no missing parents

// :: INFO storage.MemoryStore: Block broadcast_3 stored as values in memory (estimated size 27.2 KB, free 477.8 MB)

// :: INFO storage.MemoryStore: Block broadcast_3_piece0 stored as bytes in memory (estimated size 10.4 KB, free 477.8 MB)

// :: INFO storage.BlockManagerInfo: Added broadcast_3_piece0 (size: 10.4 KB, free: 478.1 MB)

// :: INFO spark.SparkContext: Created broadcast from broadcast at DAGScheduler.scala:

// :: INFO scheduler.DAGScheduler: Submitting missing tasks from ShuffleMapStage (MapPartitionsRDD[] at describe at costDay.scala:) (first tasks are ))

// :: INFO scheduler.TaskSchedulerImpl: Adding task set tasks

// :: INFO scheduler.TaskSetManager: Starting task , localhost, executor driver, partition , ANY, bytes)

// :: INFO executor.Executor: Running task )

// :: INFO rdd.NewHadoopRDD: Input split: org.apache.phoenix.mapreduce.PhoenixInputSplit@20b488

// :: INFO Configuration.deprecation: io.bytes.per.checksum is deprecated. Instead, use dfs.bytes-per-checksum

// :: INFO jdbc.PhoenixEmbeddedDriver$ConnectionInfo: Trying to connect to a secure cluster as with keytab /hbase

// :: INFO jdbc.PhoenixEmbeddedDriver$ConnectionInfo: Successful login to secure cluster

// :: INFO codegen.GenerateUnsafeProjection: Code generated in 11.405 ms

// :: INFO codegen.GenerateMutableProjection: Code generated in 10.5886 ms

// :: INFO codegen.GenerateMutableProjection: Code generated in 39.2201 ms

// :: INFO codegen.GenerateUnsafeRowJoiner: Code generated in 8.3737 ms

// :: INFO codegen.GenerateUnsafeProjection: Code generated in 22.542 ms

// :: INFO storage.BlockManagerInfo: Removed broadcast_2_piece0 on localhost: in memory (size: 9.8 KB, free: 478.1 MB)

// :: INFO executor.Executor: Finished task ). bytes result sent to driver

// :: INFO scheduler.TaskSetManager: Finished task ) ms on localhost (executor driver) (/)

// :: INFO scheduler.TaskSchedulerImpl: Removed TaskSet 2.0, whose tasks have all completed, from pool

// :: INFO scheduler.DAGScheduler: ShuffleMapStage (describe at costDay.scala:) finished in 8.825 s

// :: INFO scheduler.DAGScheduler: looking for newly runnable stages

// :: INFO scheduler.DAGScheduler: running: Set()

// :: INFO scheduler.DAGScheduler: waiting: Set(ResultStage )

// :: INFO scheduler.DAGScheduler: failed: Set()

// :: INFO scheduler.DAGScheduler: Submitting ResultStage (MapPartitionsRDD[] at describe at costDay.scala:), which has no missing parents

// :: INFO storage.MemoryStore: Block broadcast_4 stored as values in memory (estimated size 28.7 KB, free 477.8 MB)

// :: INFO storage.MemoryStore: Block broadcast_4_piece0 stored as bytes in memory (estimated size 11.0 KB, free 477.8 MB)

// :: INFO storage.BlockManagerInfo: Added broadcast_4_piece0 (size: 11.0 KB, free: 478.1 MB)

// :: INFO spark.SparkContext: Created broadcast from broadcast at DAGScheduler.scala:

// :: INFO scheduler.DAGScheduler: Submitting missing tasks from ResultStage (MapPartitionsRDD[] at describe at costDay.scala:) (first tasks are ))

// :: INFO scheduler.TaskSchedulerImpl: Adding task set tasks

// :: INFO scheduler.TaskSetManager: Starting task , localhost, executor driver, partition , NODE_LOCAL, bytes)

// :: INFO executor.Executor: Running task )

// :: INFO storage.ShuffleBlockFetcherIterator: Getting non-empty blocks out of blocks

// :: INFO storage.ShuffleBlockFetcherIterator: Started remote fetches ms

// :: INFO codegen.GenerateMutableProjection: Code generated in 21.5454 ms

// :: INFO codegen.GenerateMutableProjection: Code generated in 10.7314 ms

// :: INFO codegen.GenerateUnsafeProjection: Code generated in 13.0969 ms

// :: INFO codegen.GenerateSafeProjection: Code generated in 8.2397 ms

// :: INFO executor.Executor: Finished task ). bytes result sent to driver

// :: INFO scheduler.DAGScheduler: ResultStage (describe at costDay.scala:) finished in 0.097 s

// :: INFO scheduler.DAGScheduler: Job finished: describe at costDay.scala:, took 8.948356 s

// :: INFO scheduler.TaskSetManager: Finished task ) ms on localhost (executor driver) (/)

// :: INFO scheduler.TaskSchedulerImpl: Removed TaskSet 3.0, whose tasks have all completed, from pool

+-------+--------------------+

|summary| ORIGINAL_VALUE|

+-------+--------------------+

| count| |

| mean|2.427485546653306E12|

| stddev|5.474385018305400...|

| min| -7970934.0|

| max|1.234567890123456...|

+-------+--------------------+

// :: INFO spark.SparkContext: Starting job: show at costDay.scala:

// :: INFO scheduler.DAGScheduler: Got job (show at costDay.scala:) with output partitions

// :: INFO scheduler.DAGScheduler: Final stage: ResultStage (show at costDay.scala:)

// :: INFO scheduler.DAGScheduler: Parents of final stage: List()

// :: INFO scheduler.DAGScheduler: Missing parents: List()

// :: INFO scheduler.DAGScheduler: Submitting ResultStage (MapPartitionsRDD[] at show at costDay.scala:), which has no missing parents

// :: INFO storage.MemoryStore: Block broadcast_5 stored as values in memory (estimated size 23.6 KB, free 477.8 MB)

// :: INFO storage.MemoryStore: Block broadcast_5_piece0 stored as bytes in memory (estimated size 9.0 KB, free 477.8 MB)

// :: INFO storage.BlockManagerInfo: Added broadcast_5_piece0 (size: 9.0 KB, free: 478.1 MB)

// :: INFO spark.SparkContext: Created broadcast from broadcast at DAGScheduler.scala:

// :: INFO scheduler.DAGScheduler: Submitting missing tasks from ResultStage (MapPartitionsRDD[] at show at costDay.scala:) (first tasks are ))

// :: INFO scheduler.TaskSchedulerImpl: Adding task set tasks

// :: INFO scheduler.TaskSetManager: Starting task , localhost, executor driver, partition , ANY, bytes)

// :: INFO executor.Executor: Running task )

// :: INFO rdd.NewHadoopRDD: Input split: org.apache.phoenix.mapreduce.PhoenixInputSplit@20b488

// :: INFO Configuration.deprecation: io.bytes.per.checksum is deprecated. Instead, use dfs.bytes-per-checksum

// :: INFO jdbc.PhoenixEmbeddedDriver$ConnectionInfo: Trying to connect to a secure cluster as with keytab /hbase

// :: INFO jdbc.PhoenixEmbeddedDriver$ConnectionInfo: Successful login to secure cluster

// :: INFO codegen.GenerateUnsafeProjection: Code generated in 33.6563 ms

// :: INFO codegen.GenerateSafeProjection: Code generated in 7.0589 ms

// :: INFO executor.Executor: Finished task ). bytes result sent to driver

// :: INFO scheduler.TaskSetManager: Finished task ) ms on localhost (executor driver) (/)

// :: INFO scheduler.DAGScheduler: ResultStage (show at costDay.scala:) finished in 0.466 s

// :: INFO scheduler.TaskSchedulerImpl: Removed TaskSet 4.0, whose tasks have all completed, from pool

// :: INFO scheduler.DAGScheduler: Job finished: show at costDay.scala:, took 0.489113 s

+--------------------------------+-------------------+---------------------+--------------+-----------------------+

|ID |FIRST_DEPARTMENT_ID|ACTUAL_COST |ORIGINAL_VALUE|GENERATION_TIME |

+--------------------------------+-------------------+---------------------+--------------+-----------------------+

|d25bb550a290457382c175b0e57c0982||-- ::31.864|

|492016e2f7ec4cd18615c164c92c6c6d||-- ::31.864|

|1d138a7401bd493da12f8f8323e9dee0||-- ::31.864|

|09718925d34b4a099e09a30a0621ded8||-- ::31.864|

|d5cfd5e898464130b71530d74b43e9d1||-- ::31.864|

|6b39ac96b8734103b2413520d3195ee6||-- ::31.864|

|8d20d0abd04d49cea3e52d9ca67e39da||-- ::31.864|

|66ae7e7c7a104cea99615358e12c03b0||-- ::31.864|

|d49b0324bbf14b70adefe8b1d9163db2||-- ::31.864|

|d4d701514a2a425e8192acf47bb57f9b||-- ::31.864|

|d6a016c618c1455ca0e2c7d73ba947ac||-- ::31.864|

|5dfa3be825464ddd98764b2790720fae||-- ::31.864|

|6e5653ef4aaa4c03bcd00fbeb1e6811d||-- ::31.864|

|32bd2654082645cba35527d50e0d52f9||-- ::31.864|

|8ed4424408bc458dbe200acffe5733bf||-- ::31.864|

|1b2faa31f139461488847e77eacd794a||-- ::31.864|

|f398245c9ccc4760a5eb3251db3680bf||-- ::31.864|

|2696de9733d247e5bf88573244f36ba2||-- ::31.864|

|9c8cfad3d4334b37a7b9beb56b528c22||-- ::31.864|

|3e2721b79e754a798d0be940ae011d72||-- ::31.864|

+--------------------------------+-------------------+---------------------+--------------+-----------------------+

only showing top rows

// :: INFO spark.SparkContext: Invoking stop() from shutdown hook

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/static/sql,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/SQL/execution/json,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/SQL/execution,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/SQL/json,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/SQL,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/metrics/json,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/stage/kill,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/api,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/static,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/executors/threadDump/json,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/executors/threadDump,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/executors/json,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/executors,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/environment/json,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/environment,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/storage/rdd/json,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/storage/rdd,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/storage/json,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/storage,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/pool/json,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/pool,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/stage/json,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/stage,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/json,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/jobs/job/json,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/jobs/job,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/jobs/json,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/jobs,null}

// :: INFO ui.SparkUI: Stopped Spark web UI at http://10.200.74.155:4040

// :: INFO spark.MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

// :: INFO storage.MemoryStore: MemoryStore cleared

// :: INFO storage.BlockManager: BlockManager stopped

// :: INFO storage.BlockManagerMaster: BlockManagerMaster stopped

// :: INFO scheduler.OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

// :: INFO spark.SparkContext: Successfully stopped SparkContext

// :: INFO util.ShutdownHookManager: Shutdown hook called

// :: INFO util.ShutdownHookManager: Deleting directory C:\Users\cf_pc\AppData\Local\Temp\spark-4fcfa10d-c258-46b7-b4f5-ee977276fa00

Process finished with exit code

Run the Maven package step. It returns the following output:

C:\3rd\Java\jdk1..0_212\bin\java.exe -Dmaven.multiModuleProjectDirectory=C:\development\cf\scalapp -Dmaven.home=C:\development\apache-maven- -Dclassworlds.conf=C:\development\apache-maven-\bin\m2.conf -classpath C:\development\apache-maven-\boot\plexus-classworlds-.jar org.codehaus.classworlds.Launcher -Didea.version2019.\conf\settings.xml -Dmaven.repo.local=C:\development\MavenRepository package
[INFO] Scanning for projects...
[INFO]
[INFO] ---------------------------< com.fc:scalapp >---------------------------
[INFO] Building scalapp 1.0-SNAPSHOT
[INFO] --------------------------------[ jar ]---------------------------------
[INFO]
[INFO] --- maven-resources-plugin:2.6:resources (default-resources) @ scalapp ---
[WARNING] Using platform encoding (UTF- actually) to copy filtered resources, i.e. build is platform dependent!
[INFO] Copying resource
[INFO]
[INFO] --- maven-compiler-plugin:3.1:compile (default-compile) @ scalapp ---
[INFO] Nothing to compile - all classes are up to date
[INFO]
[INFO] --- maven-scala-plugin::compile (default) @ scalapp ---
[INFO] Checking for multiple versions of scala
[WARNING] Expected all dependencies to require Scala version:
[WARNING] com.twitter:chill_2.: requires scala version:
[WARNING] Multiple versions of scala libraries detected!
[INFO] includes = [**/*.java,**/*.scala,]
[INFO] excludes = []
[INFO] C:\development\cf\scalapp\src\main\scala:-: info: compiling
[INFO] Compiling source files to C:\development\cf\scalapp\target\classes at
[WARNING] warning: there were feature warning(s); re-run with -feature for details
[WARNING] one warning found
[INFO] prepare-compile s
[INFO] compile s
[INFO]
[INFO] --- maven-resources-plugin:2.6:testResources (default-testResources) @ scalapp ---
[WARNING] Using platform encoding (UTF- actually) to copy filtered resources, i.e. build is platform dependent!
[INFO] skip non existing resourceDirectory C:\development\cf\scalapp\src\test\resources
[INFO]
[INFO] --- maven-compiler-plugin:3.1:testCompile (default-testCompile) @ scalapp ---
[INFO] Nothing to compile - all classes are up to date
[INFO]
[INFO] --- maven-scala-plugin::testCompile (default) @ scalapp ---
[INFO] Checking for multiple versions of scala
[WARNING] Expected all dependencies to require Scala version:
[WARNING] com.twitter:chill_2.: requires scala version:
[WARNING] Multiple versions of scala libraries detected!
[INFO] includes = [**/*.java,**/*.scala,]
[INFO] excludes = []
[WARNING] No source files found.
[INFO]
[INFO] --- maven-surefire-plugin::test (default-test) @ scalapp ---
[INFO]
[INFO] --- maven-jar-plugin:2.4:jar (default-jar) @ scalapp ---
[INFO] Building jar: C:\development\cf\scalapp\target\scalapp-1.0-SNAPSHOT.jar
[INFO]
[INFO] --- maven-shade-plugin::shade (default) @ scalapp ---
[INFO] Including org.apache.spark:spark-core_2.:jar:-cdh5.14.2 in the shaded jar.
[INFO] Including org.apache.avro:avro-mapred:jar:hadoop2:-cdh5.14.2 in the shaded jar.
……
[INFO] Including mysql:mysql-connector-java:jar: in the shaded jar.
[WARNING] commons-collections-.jar, commons-beanutils-.jar, commons-beanutils-core-.jar define overlapping classes:
[WARNING] - org.apache.commons.collections.FastHashMap$EntrySet
[WARNING] - org.apache.commons.collections.FastHashMap$KeySet
[WARNING] - org.apache.commons.collections.FastHashMap$CollectionView$CollectionViewIterator
[WARNING] - org.apache.commons.collections.ArrayStack
[WARNING] - org.apache.commons.collections.FastHashMap$Values
[WARNING] - org.apache.commons.collections.FastHashMap$CollectionView
[WARNING] - org.apache.commons.collections.FastHashMap$
[WARNING] - org.apache.commons.collections.Buffer
[WARNING] - org.apache.commons.collections.FastHashMap
[WARNING] - org.apache.commons.collections.BufferUnderflowException
……
[WARNING] mvn dependency:tree -Ddetail=true and the above output.
[WARNING] See http://maven.apache.org/plugins/maven-shade-plugin/
[INFO] Replacing original artifact with shaded artifact.
[INFO] Replacing C:\development\cf\scalapp\target\scalapp-1.0-SNAPSHOT.jar with C:\development\cf\scalapp\target\scalapp-1.0-SNAPSHOT-shaded.jar
[INFO] ------------------------------------------------------------------------
[INFO] BUILD SUCCESS
[INFO] ------------------------------------------------------------------------
[INFO] Total : min
[INFO] Finished at: --24T00::+:
[INFO] ------------------------------------------------------------------------
Process finished with exit code

Upload the jar to the server and run:

spark-submit --class com.fc.costDay --executor-memory 500m --total-executor-cores /home/cf/scalapp-1.0-SNAPSHOT.jar

This fails with:

java.lang.ClassNotFoundException: Class org.apache.phoenix.spark.PhoenixRecordWritable not found
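A quick way to confirm this kind of failure is to ask the JVM whether the class can be loaded at all. The sketch below is a minimal, hypothetical helper (`ClasspathCheck` and `isLoadable` are illustrative names, not part of Spark or Phoenix). It only reports what the current JVM's class loader sees, so it would have to run inside the driver or an executor to say anything about those processes.

```scala
import scala.util.Try

// Hypothetical diagnostic helper; the object and method names are illustrative.
object ClasspathCheck {
  // Returns true if the named class can be loaded by the current JVM.
  // initialize = false avoids running the class's static initializers.
  def isLoadable(className: String): Boolean =
    Try(Class.forName(className, false, getClass.getClassLoader)).isSuccess

  def main(args: Array[String]): Unit = {
    val name = "org.apache.phoenix.spark.PhoenixRecordWritable"
    println(s"$name loadable: ${isLoadable(name)}")
  }
}
```

If the check reports that the class is not loadable, the Phoenix jars are missing from that JVM's classpath.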

Following https://www.jianshu.com/p/f336f7e5f31b, change the command so that the Phoenix libraries are on both the driver and the executor classpath:

spark-submit --master yarn-cluster --driver-memory 4g --num-executors --executor-memory 2g --executor-cores --class com.fc.costDay --conf spark.driver.extraClassPath=/opt/cloudera/parcels/APACHE_PHOENIX--cdh5./lib/phoenix/lib/* --conf spark.executor.extraClassPath=/opt/cloudera/parcels/APACHE_PHOENIX-4.14.0-cdh5.14.2.p0.3/lib/phoenix/lib/* /home/cf/scalapp-1.0-SNAPSHOT.jar
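The two `--conf` flags carry the same kind of value, one for the driver JVM and one for the executor JVMs. As a minimal sketch of how such a command line can be assembled, the hypothetical `SubmitArgs.build` helper below (not part of Spark) reuses one Phoenix library path for both settings. It uses only flags that appear in the command above, and it leaves out the memory and executor-count flags for brevity.

```scala
// Hypothetical helper, not part of Spark: assembles a spark-submit argument list.
object SubmitArgs {
  def build(mainClass: String, appJar: String, phoenixLibs: String): Seq[String] =
    Seq(
      "spark-submit",
      "--master", "yarn-cluster",
      "--class", mainClass,
      // The same path goes to both JVMs so driver and executors can load Phoenix classes.
      "--conf", s"spark.driver.extraClassPath=$phoenixLibs",
      "--conf", s"spark.executor.extraClassPath=$phoenixLibs",
      appJar
    )

  def main(args: Array[String]): Unit = {
    val cmd = build(
      "com.fc.costDay",
      "/home/cf/scalapp-1.0-SNAPSHOT.jar",
      "/opt/cloudera/parcels/APACHE_PHOENIX-4.14.0-cdh5.14.2.p0.3/lib/phoenix/lib/*"
    )
    println(cmd.mkString(" "))
  }
}
```

Deriving both settings from a single value avoids the two paths drifting apart when the parcel version changes.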

It still fails:

File does not exist: hdfs://node1:8020/user/root/.sparkStaging/application_1566100765602_0105/……

The full error output is:

[root@node1 ~]# spark-submit --master yarn-cluster --driver-memory 4g --num-executors --executor-memory 2g --executor-cores --class com.fc.costDay --conf spark.driver.extraClassPath=/opt/cloudera/parcels/APACHE_PHOENIX--cdh5./lib/phoenix/lib/* --conf spark.executor.extraClassPath=/opt/cloudera/parcels/APACHE_PHOENIX-4.14.0-cdh5.14.2.p0.3/lib/phoenix/lib/* /home/cf/scalapp-1.0-SNAPSHOT.jar

19/09/24 00:01:36 INFO client.RMProxy: Connecting to ResourceManager at node1/10.200.101.131:8032

19/09/24 00:01:36 INFO yarn.Client: Requesting a new application from cluster with 3 NodeManagers

19/09/24 00:01:36 INFO yarn.Client: Verifying our application has not requested more than the maximum memory capability of the cluster (40874 MB per container)

19/09/24 00:01:36 INFO yarn.Client: Will allocate AM container, with 4505 MB memory including 409 MB overhead

19/09/24 00:01:36 INFO yarn.Client: Setting up container launch context for our AM

19/09/24 00:01:36 INFO yarn.Client: Setting up the launch environment for our AM container

19/09/24 00:01:37 INFO yarn.Client: Preparing resources for our AM container

19/09/24 00:01:37 INFO yarn.Client: Uploading resource file:/home/cf/scalapp-1.0-SNAPSHOT.jar -> hdfs://node1:8020/user/root/.sparkStaging/application_1566100765602_0105/scalapp-1.0-SNAPSHOT.jar

19/09/24 00:01:38 INFO yarn.Client: Uploading resource file:/tmp/spark-2c548285-8012-414b-ab4e-797a164e38bc/__spark_conf__8997139291240118668.zip -> hdfs://node1:8020/user/root/.sparkStaging/application_1566100765602_0105/__spark_conf__8997139291240118668.zip

19/09/24 00:01:38 INFO spark.SecurityManager: Changing view acls to: root

19/09/24 00:01:38 INFO spark.SecurityManager: Changing modify acls to: root

19/09/24 00:01:38 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); users with modify permissions: Set(root)

19/09/24 00:01:38 INFO yarn.Client: Submitting application 105 to ResourceManager

19/09/24 00:01:38 INFO impl.YarnClientImpl: Submitted application application_1566100765602_0105

19/09/24 00:01:39 INFO yarn.Client: Application report for application_1566100765602_0105 (state: ACCEPTED)

19/09/24 00:01:39 INFO yarn.Client:

client token: N/A

diagnostics: N/A

ApplicationMaster host: N/A

ApplicationMaster RPC port: -1

queue: root.users.root

start time: 1569254498669

final status: UNDEFINED

tracking URL: http://node1:8088/proxy/application_1566100765602_0105/

user: root

19/09/24 00:01:40 INFO yarn.Client: Application report for application_1566100765602_0105 (state: ACCEPTED)

19/09/24 00:01:41 INFO yarn.Client: Application report for application_1566100765602_0105 (state: ACCEPTED)

19/09/24 00:01:42 INFO yarn.Client: Application report for application_1566100765602_0105 (state: ACCEPTED)

19/09/24 00:01:43 INFO yarn.Client: Application report for application_1566100765602_0105 (state: ACCEPTED)

19/09/24 00:01:44 INFO yarn.Client: Application report for application_1566100765602_0105 (state: ACCEPTED)

19/09/24 00:01:45 INFO yarn.Client: Application report for application_1566100765602_0105 (state: ACCEPTED)

19/09/24 00:01:46 INFO yarn.Client: Application report for application_1566100765602_0105 (state: ACCEPTED)

19/09/24 00:01:47 INFO yarn.Client: Application report for application_1566100765602_0105 (state: ACCEPTED)

19/09/24 00:01:48 INFO yarn.Client: Application report for application_1566100765602_0105 (state: ACCEPTED)

19/09/24 00:01:49 INFO yarn.Client: Application report for application_1566100765602_0105 (state: ACCEPTED)

19/09/24 00:01:50 INFO yarn.Client: Application report for application_1566100765602_0105 (state: ACCEPTED)

19/09/24 00:01:51 INFO yarn.Client: Application report for application_1566100765602_0105 (state: ACCEPTED)

19/09/24 00:01:52 INFO yarn.Client: Application report for application_1566100765602_0105 (state: ACCEPTED)

19/09/24 00:01:53 INFO yarn.Client: Application report for application_1566100765602_0105 (state: ACCEPTED)

19/09/24 00:01:54 INFO yarn.Client: Application report for application_1566100765602_0105 (state: ACCEPTED)

19/09/24 00:01:55 INFO yarn.Client: Application report for application_1566100765602_0105 (state: ACCEPTED)

19/09/24 00:01:56 INFO yarn.Client: Application report for application_1566100765602_0105 (state: ACCEPTED)

19/09/24 00:01:57 INFO yarn.Client: Application report for application_1566100765602_0105 (state: ACCEPTED)

19/09/24 00:01:58 INFO yarn.Client: Application report for application_1566100765602_0105 (state: ACCEPTED)

19/09/24 00:01:59 INFO yarn.Client: Application report for application_1566100765602_0105 (state: ACCEPTED)

19/09/24 00:02:00 INFO yarn.Client: Application report for application_1566100765602_0105 (state: ACCEPTED)

19/09/24 00:02:01 INFO yarn.Client: Application report for application_1566100765602_0105 (state: FAILED)

19/09/24 00:02:01 INFO yarn.Client:

client token: N/A

diagnostics: Application application_1566100765602_0105 failed 2 times due to AM Container for appattempt_1566100765602_0105_000002 exited with exitCode: -1000

For more detailed output, check application tracking page:http://node1:8088/proxy/application_1566100765602_0105/Then, click on links to logs of each attempt.

Diagnostics: File does not exist: hdfs://node1:8020/user/root/.sparkStaging/application_1566100765602_0105/__spark_conf__8997139291240118668.zip

java.io.FileNotFoundException: File does not exist: hdfs://node1:8020/user/root/.sparkStaging/application_1566100765602_0105/__spark_conf__8997139291240118668.zip

at org.apache.hadoop.hdfs.DistributedFileSystem$20.doCall(DistributedFileSystem.java:1269)

at org.apache.hadoop.hdfs.DistributedFileSystem$20.doCall(DistributedFileSystem.java:1261)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.getFileStatus(DistributedFileSystem.java:1261)

at org.apache.hadoop.yarn.util.FSDownload.copy(FSDownload.java:251)

at org.apache.hadoop.yarn.util.FSDownload.access$000(FSDownload.java:61)

at org.apache.hadoop.yarn.util.FSDownload$2.run(FSDownload.java:364)

at org.apache.hadoop.yarn.util.FSDownload$2.run(FSDownload.java:362)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1920)

at org.apache.hadoop.yarn.util.FSDownload.call(FSDownload.java:361)

at org.apache.hadoop.yarn.util.FSDownload.call(FSDownload.java:60)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:748)

Failing this attempt. Failing the application.

ApplicationMaster host: N/A

ApplicationMaster RPC port: -1

queue: root.users.root

start time: 1569254498669

final status: FAILED

tracking URL: http://node1:8088/cluster/app/application_1566100765602_0105

user: root

Exception in thread "main" org.apache.spark.SparkException: Application application_1566100765602_0105 finished with failed status

at org.apache.spark.deploy.yarn.Client.run(Client.scala:1025)

at org.apache.spark.deploy.yarn.Client$.main(Client.scala:1072)

at org.apache.spark.deploy.yarn.Client.main(Client.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:730)

at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:181)

at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:206)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:121)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

19/09/24 00:02:01 INFO util.ShutdownHookManager: Shutdown hook called

19/09/24 00:02:01 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-2c548285-8012-414b-ab4e-797a164e38bc

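Following https://blog.csdn.net/adorechen/article/details/78746363, comment out the statement in the source code that sets local execution. A master set in code through SparkConf takes precedence over the --master flag passed to spark-submit, so the hard-coded "local" conflicts with yarn-cluster: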

val conf = new SparkConf()

.setAppName("fdsf")

// .setMaster("local") // local execution
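Commenting the line out means it has to be restored for every local run. A possible alternative, sketched below, is to set a local master only when none was supplied. It assumes that the `--master` value from spark-submit reaches the driver as the `spark.master` property, which `new SparkConf()` loads from system properties. `CostDayConf` and `build` are hypothetical names; `setIfMissing` is a standard `SparkConf` method.

```scala
import org.apache.spark.SparkConf

// Hypothetical helper; the object and method names are illustrative.
object CostDayConf {
  def build(appName: String, fallbackMaster: String = "local"): SparkConf =
    new SparkConf()
      .setAppName(appName)
      // Keeps a master supplied by spark-submit; falls back to local mode otherwise.
      .setIfMissing("spark.master", fallbackMaster)
}
```

With this in place, `new SparkContext(CostDayConf.build("fdsf"))` could be used both from the IDE and under spark-submit without editing the source.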

Repackage and run the command above again. This time it succeeds and returns the following output:

[root@node1 ~]# spark-submit --master yarn-cluster --driver-memory 4g --num-executors --executor-memory 2g --executor-cores --class com.fc.costDay --conf spark.driver.extraClassPath=/opt/cloudera/parcels/APACHE_PHOENIX--cdh5./lib/phoenix/lib/* --conf spark.executor.extraClassPath=/opt/cloudera/parcels/APACHE_PHOENIX-4.14.0-cdh5.14.2.p0.3/lib/phoenix/lib/* /home/cf/scalapp-1.0-SNAPSHOT.jar

19/09/24 00:13:58 INFO client.RMProxy: Connecting to ResourceManager at node1/10.200.101.131:8032

19/09/24 00:13:58 INFO yarn.Client: Requesting a new application from cluster with 3 NodeManagers

19/09/24 00:13:58 INFO yarn.Client: Verifying our application has not requested more than the maximum memory capability of the cluster (40874 MB per container)

19/09/24 00:13:58 INFO yarn.Client: Will allocate AM container, with 4505 MB memory including 409 MB overhead

19/09/24 00:13:58 INFO yarn.Client: Setting up container launch context for our AM

19/09/24 00:13:58 INFO yarn.Client: Setting up the launch environment for our AM container

19/09/24 00:13:58 INFO yarn.Client: Preparing resources for our AM container

19/09/24 00:13:59 INFO yarn.Client: Uploading resource file:/home/cf/scalapp-1.0-SNAPSHOT.jar -> hdfs://node1:8020/user/root/.sparkStaging/application_1566100765602_0106/scalapp-1.0-SNAPSHOT.jar

19/09/24 00:14:00 INFO yarn.Client: Uploading resource file:/tmp/spark-875aaa2a-1c5d-4c6a-95a6-12f276a80054/__spark_conf__4530217919668141816.zip -> hdfs://node1:8020/user/root/.sparkStaging/application_1566100765602_0106/__spark_conf__4530217919668141816.zip

19/09/24 00:14:00 INFO spark.SecurityManager: Changing view acls to: root

19/09/24 00:14:00 INFO spark.SecurityManager: Changing modify acls to: root

19/09/24 00:14:00 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); users with modify permissions: Set(root)

19/09/24 00:14:00 INFO yarn.Client: Submitting application 106 to ResourceManager

19/09/24 00:14:00 INFO impl.YarnClientImpl: Submitted application application_1566100765602_0106

19/09/24 00:14:01 INFO yarn.Client: Application report for application_1566100765602_0106 (state: ACCEPTED)

19/09/24 00:14:01 INFO yarn.Client:

client token: N/A

diagnostics: N/A

ApplicationMaster host: N/A

ApplicationMaster RPC port: -1

queue: root.users.root

start time: 1569255240698

final status: UNDEFINED

tracking URL: http://node1:8088/proxy/application_1566100765602_0106/

user: root

19/09/24 00:14:02 INFO yarn.Client: Application report for application_1566100765602_0106 (state: ACCEPTED)

19/09/24 00:14:03 INFO yarn.Client: Application report for application_1566100765602_0106 (state: ACCEPTED)

19/09/24 00:14:04 INFO yarn.Client: Application report for application_1566100765602_0106 (state: ACCEPTED)

19/09/24 00:14:05 INFO yarn.Client: Application report for application_1566100765602_0106 (state: ACCEPTED)

19/09/24 00:14:06 INFO yarn.Client: Application report for application_1566100765602_0106 (state: RUNNING)

19/09/24 00:14:06 INFO yarn.Client:

client token: N/A

diagnostics: N/A

ApplicationMaster host: 10.200.101.135

ApplicationMaster RPC port: 0

queue: root.users.root

start time: 1569255240698

final status: UNDEFINED

tracking URL: http://node1:8088/proxy/application_1566100765602_0106/

user: root

19/09/24 00:14:07 INFO yarn.Client: Application report for application_1566100765602_0106 (state: RUNNING)

19/09/24 00:14:08 INFO yarn.Client: Application report for application_1566100765602_0106 (state: RUNNING)

19/09/24 00:14:09 INFO yarn.Client: Application report for application_1566100765602_0106 (state: RUNNING)

19/09/24 00:14:10 INFO yarn.Client: Application report for application_1566100765602_0106 (state: RUNNING)

19/09/24 00:14:11 INFO yarn.Client: Application report for application_1566100765602_0106 (state: RUNNING)

19/09/24 00:14:12 INFO yarn.Client: Application report for application_1566100765602_0106 (state: RUNNING)

19/09/24 00:14:13 INFO yarn.Client: Application report for application_1566100765602_0106 (state: RUNNING)

19/09/24 00:14:14 INFO yarn.Client: Application report for application_1566100765602_0106 (state: RUNNING)

19/09/24 00:14:15 INFO yarn.Client: Application report for application_1566100765602_0106 (state: RUNNING)

19/09/24 00:14:16 INFO yarn.Client: Application report for application_1566100765602_0106 (state: RUNNING)

19/09/24 00:14:17 INFO yarn.Client: Application report for application_1566100765602_0106 (state: RUNNING)

19/09/24 00:14:18 INFO yarn.Client: Application report for application_1566100765602_0106 (state: RUNNING)

19/09/24 00:14:19 INFO yarn.Client: Application report for application_1566100765602_0106 (state: RUNNING)

19/09/24 00:14:20 INFO yarn.Client: Application report for application_1566100765602_0106 (state: RUNNING)

19/09/24 00:14:21 INFO yarn.Client: Application report for application_1566100765602_0106 (state: RUNNING)

19/09/24 00:14:22 INFO yarn.Client: Application report for application_1566100765602_0106 (state: RUNNING)

19/09/24 00:14:23 INFO yarn.Client: Application report for application_1566100765602_0106 (state: RUNNING)

19/09/24 00:14:24 INFO yarn.Client: Application report for application_1566100765602_0106 (state: RUNNING)

19/09/24 00:14:25 INFO yarn.Client: Application report for application_1566100765602_0106 (state: RUNNING)

19/09/24 00:14:26 INFO yarn.Client: Application report for application_1566100765602_0106 (state: RUNNING)

19/09/24 00:14:27 INFO yarn.Client: Application report for application_1566100765602_0106 (state: RUNNING)

19/09/24 00:14:28 INFO yarn.Client: Application report for application_1566100765602_0106 (state: RUNNING)

19/09/24 00:14:29 INFO yarn.Client: Application report for application_1566100765602_0106 (state: RUNNING)

19/09/24 00:14:30 INFO yarn.Client: Application report for application_1566100765602_0106 (state: RUNNING)

19/09/24 00:14:31 INFO yarn.Client: Application report for application_1566100765602_0106 (state: RUNNING)

19/09/24 00:14:32 INFO yarn.Client: Application report for application_1566100765602_0106 (state: RUNNING)

19/09/24 00:14:33 INFO yarn.Client: Application report for application_1566100765602_0106 (state: RUNNING)

19/09/24 00:14:34 INFO yarn.Client: Application report for application_1566100765602_0106 (state: RUNNING)

19/09/24 00:14:35 INFO yarn.Client: Application report for application_1566100765602_0106 (state: FINISHED)

19/09/24 00:14:35 INFO yarn.Client:

client token: N/A

diagnostics: N/A

ApplicationMaster host: 10.200.101.135

ApplicationMaster RPC port: 0

queue: root.users.root

start time: 1569255240698

final status: SUCCEEDED

tracking URL: http://node1:8088/proxy/application_1566100765602_0106/

user: root

19/09/24 00:14:35 INFO util.ShutdownHookManager: Shutdown hook called

19/09/24 00:14:35 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-875aaa2a-1c5d-4c6a-95a6-12f276a80054

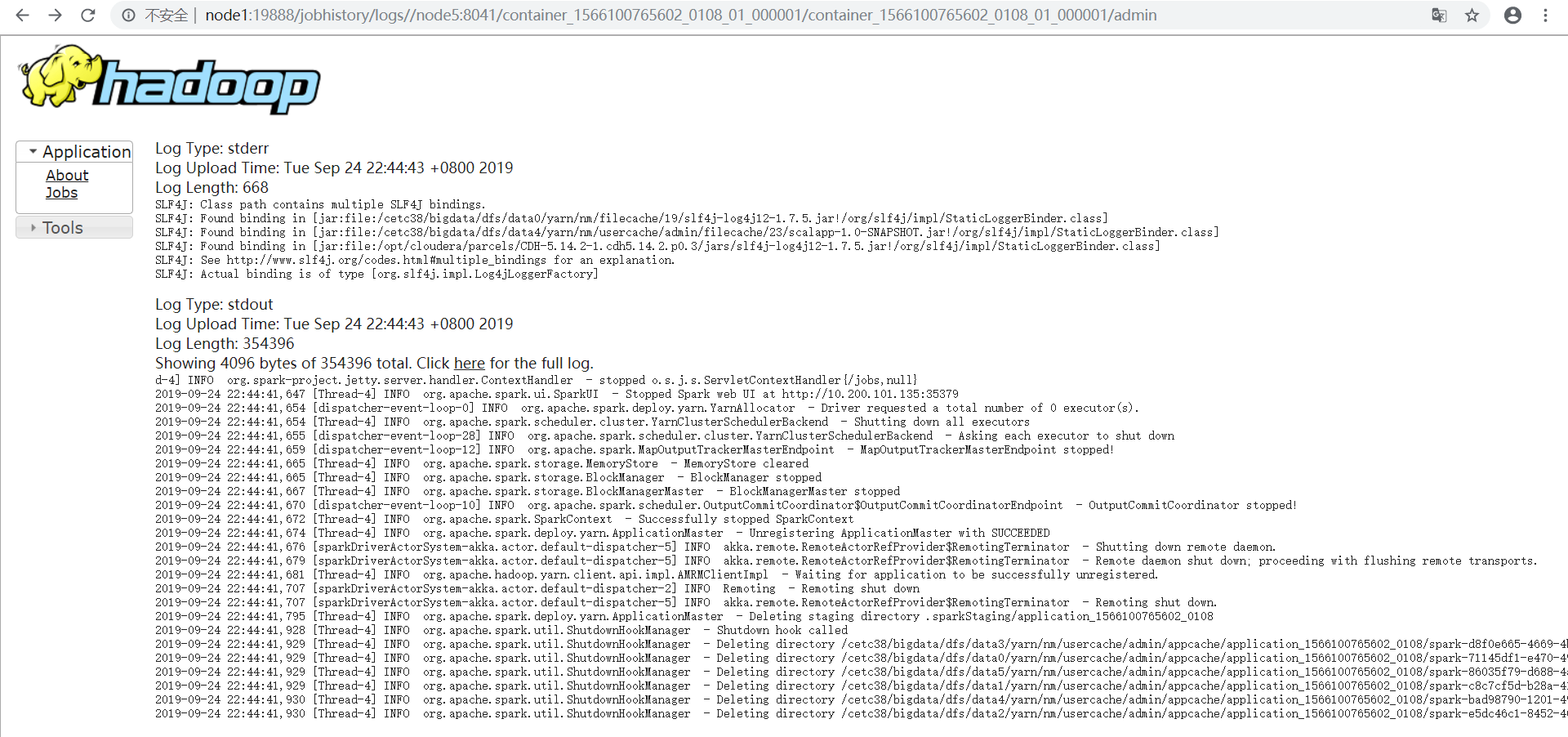

Open the job's page to view its output, shown below:

Log Type: stderr

Log Upload Time: Tue Sep :: +

Log Length:


rdd,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/storage/json,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/storage,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/pool/json,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/pool,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/stage/json,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/stage,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages/json,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/stages,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/jobs/job/json,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/jobs/job,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/jobs/json,null}

// :: INFO handler.ContextHandler: stopped o.s.j.s.ServletContextHandler{/jobs,null}

// :: INFO ui.SparkUI: Stopped Spark web UI at http://10.200.101.135:32960

// :: INFO yarn.YarnAllocator: Driver requested a total number of executor(s).

// :: INFO cluster.YarnClusterSchedulerBackend: Shutting down all executors

// :: INFO cluster.YarnClusterSchedulerBackend: Asking each executor to shut down

// :: INFO spark.MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

// :: INFO storage.MemoryStore: MemoryStore cleared

// :: INFO storage.BlockManager: BlockManager stopped

// :: INFO storage.BlockManagerMaster: BlockManagerMaster stopped

// :: INFO scheduler.OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

// :: INFO spark.SparkContext: Successfully stopped SparkContext

// :: INFO yarn.ApplicationMaster: Unregistering ApplicationMaster with SUCCEEDED

// :: INFO remote.RemoteActorRefProvider$RemotingTerminator: Shutting down remote daemon.

// :: INFO remote.RemoteActorRefProvider$RemotingTerminator: Remote daemon shut down; proceeding with flushing remote transports.

// :: INFO impl.AMRMClientImpl: Waiting for application to be successfully unregistered.

// :: INFO Remoting: Remoting shut down

// :: INFO remote.RemoteActorRefProvider$RemotingTerminator: Remoting shut down.

// :: INFO yarn.ApplicationMaster: Deleting staging directory .sparkStaging/application_1566100765602_0106

// :: INFO util.ShutdownHookManager: Shutdown hook called

// :: INFO util.ShutdownHookManager: Deleting directory /cetc38/bigdata/dfs/data2/yarn/nm/usercache/root/appcache/application_1566100765602_0106/spark-771c1143-d7a4-434d-8e27-0ec5964b2fb2

// :: INFO util.ShutdownHookManager: Deleting directory /cetc38/bigdata/dfs/data1/yarn/nm/usercache/root/appcache/application_1566100765602_0106/spark-757f2701-97e9-4a23--1b9536ec0698

// :: INFO util.ShutdownHookManager: Deleting directory /cetc38/bigdata/dfs/data0/yarn/nm/usercache/root/appcache/application_1566100765602_0106/spark-7dc0f9ff----b41bb0706ce0

// :: INFO util.ShutdownHookManager: Deleting directory /cetc38/bigdata/dfs/data3/yarn/nm/usercache/root/appcache/application_1566100765602_0106/spark-95e96c3f-915e--9ba3-ad50973224a0

// :: INFO util.ShutdownHookManager: Deleting directory /cetc38/bigdata/dfs/data5/yarn/nm/usercache/root/appcache/application_1566100765602_0106/spark-6b26fd69-d3e9-4e7e-a4d4-f00978232b57

// :: INFO util.ShutdownHookManager: Deleting directory /cetc38/bigdata/dfs/data4/yarn/nm/usercache/root/appcache/application_1566100765602_0106/spark-a05e4cfc-b152-4e2e-bc8c-e0350e8d9f3b

Log Type: stdout

Log Upload Time: Tue Sep :: +

Log Length:

root

|-- ID: string (nullable = true)

|-- FIRST_DEPARTMENT_ID: string (nullable = true)

|-- ACTUAL_COST: double (nullable = true)

|-- ORIGINAL_VALUE: double (nullable = true)

|-- GENERATION_TIME: timestamp (nullable = false)

+-------+--------------------+

|summary| ORIGINAL_VALUE|

+-------+--------------------+

| count| |

| mean|2.427485546653306E12|

| stddev|5.474385018305400...|

| min| -7970934.0|

| max|1.234567890123456...|

+-------+--------------------+

+--------------------------------+-------------------+---------------------+--------------+-----------------------+

|ID |FIRST_DEPARTMENT_ID|ACTUAL_COST |ORIGINAL_VALUE|GENERATION_TIME |

+--------------------------------+-------------------+---------------------+--------------+-----------------------+

|d25bb550a290457382c175b0e57c0982||-- ::14.283|

|492016e2f7ec4cd18615c164c92c6c6d||-- ::14.283|

|1d138a7401bd493da12f8f8323e9dee0||-- ::14.283|

|09718925d34b4a099e09a30a0621ded8||-- ::14.283|

|d5cfd5e898464130b71530d74b43e9d1||-- ::14.283|

|6b39ac96b8734103b2413520d3195ee6||-- ::14.283|

|8d20d0abd04d49cea3e52d9ca67e39da||-- ::14.283|

|66ae7e7c7a104cea99615358e12c03b0||-- ::14.283|

|d49b0324bbf14b70adefe8b1d9163db2||-- ::14.283|

|d4d701514a2a425e8192acf47bb57f9b||-- ::14.283|

|d6a016c618c1455ca0e2c7d73ba947ac||-- ::14.283|

|5dfa3be825464ddd98764b2790720fae||-- ::14.283|

|6e5653ef4aaa4c03bcd00fbeb1e6811d||-- ::14.283|

|32bd2654082645cba35527d50e0d52f9||-- ::14.283|

|8ed4424408bc458dbe200acffe5733bf||-- ::14.283|

|1b2faa31f139461488847e77eacd794a||-- ::14.283|

|f398245c9ccc4760a5eb3251db3680bf||-- ::14.283|

|2696de9733d247e5bf88573244f36ba2||-- ::14.283|

|9c8cfad3d4334b37a7b9beb56b528c22||-- ::14.283|

|3e2721b79e754a798d0be940ae011d72||-- ::14.283|

+--------------------------------+-------------------+---------------------+--------------+-----------------------+

only showing top rows

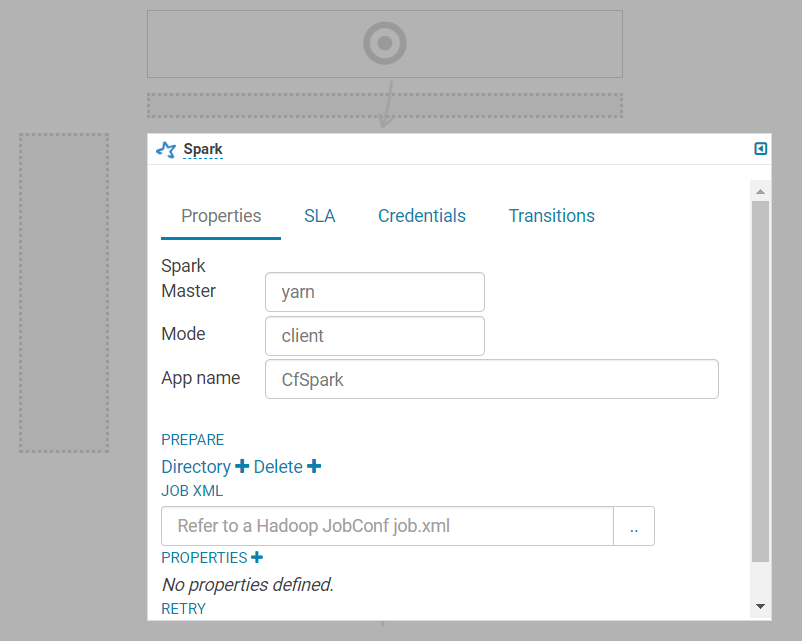

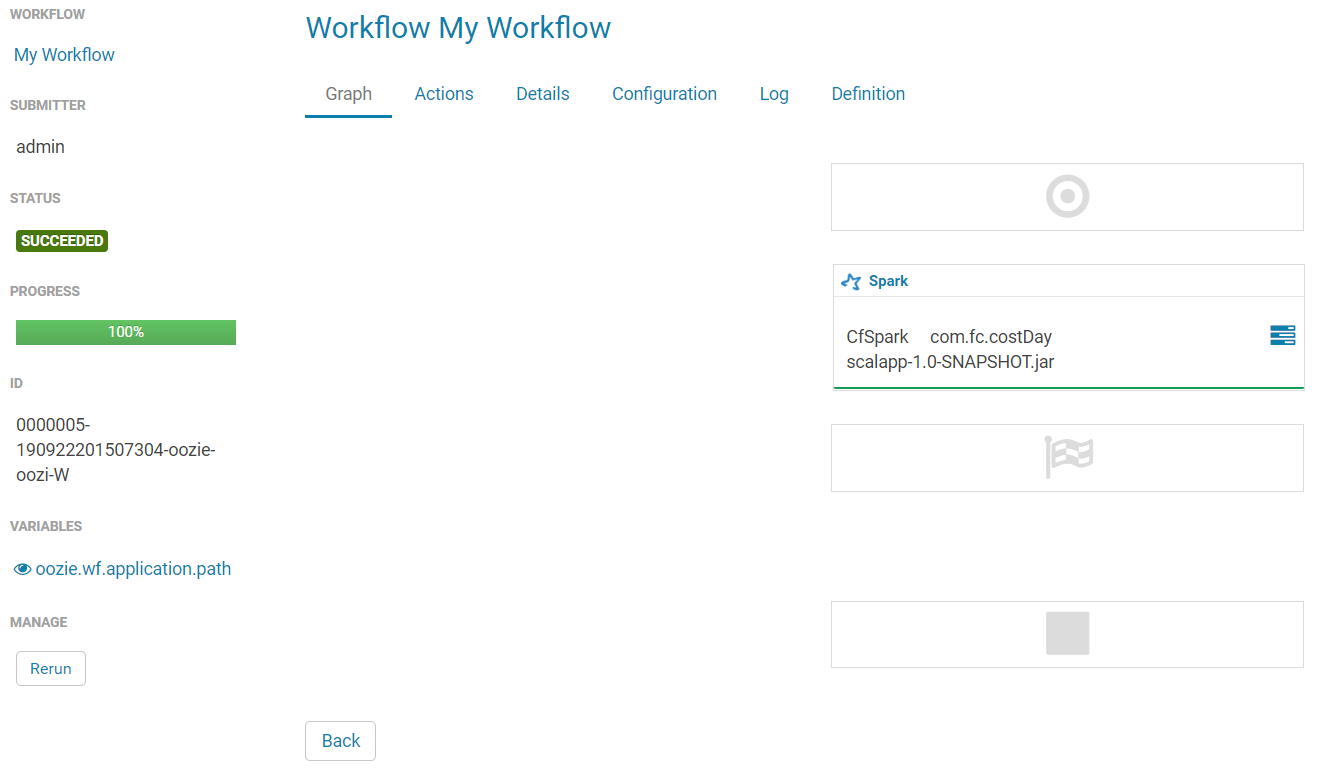

Orchestrate the Spark job:

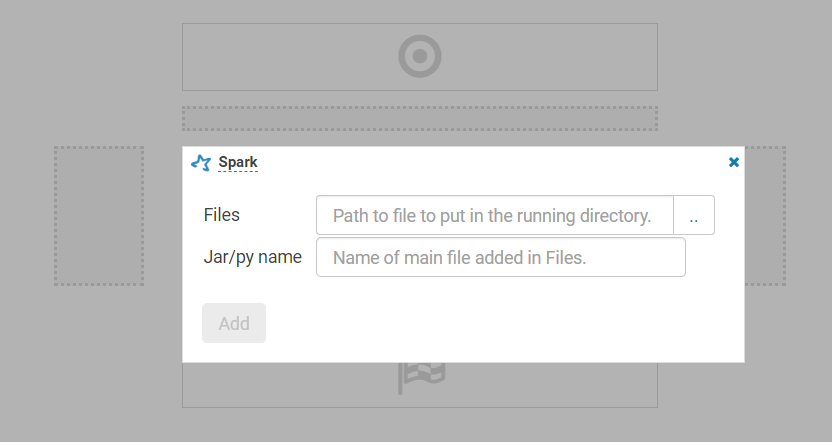

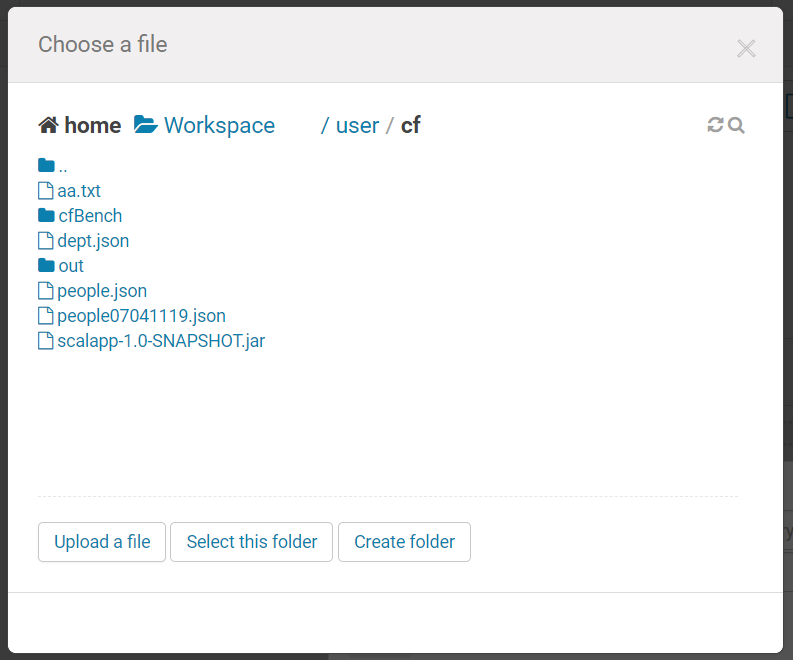

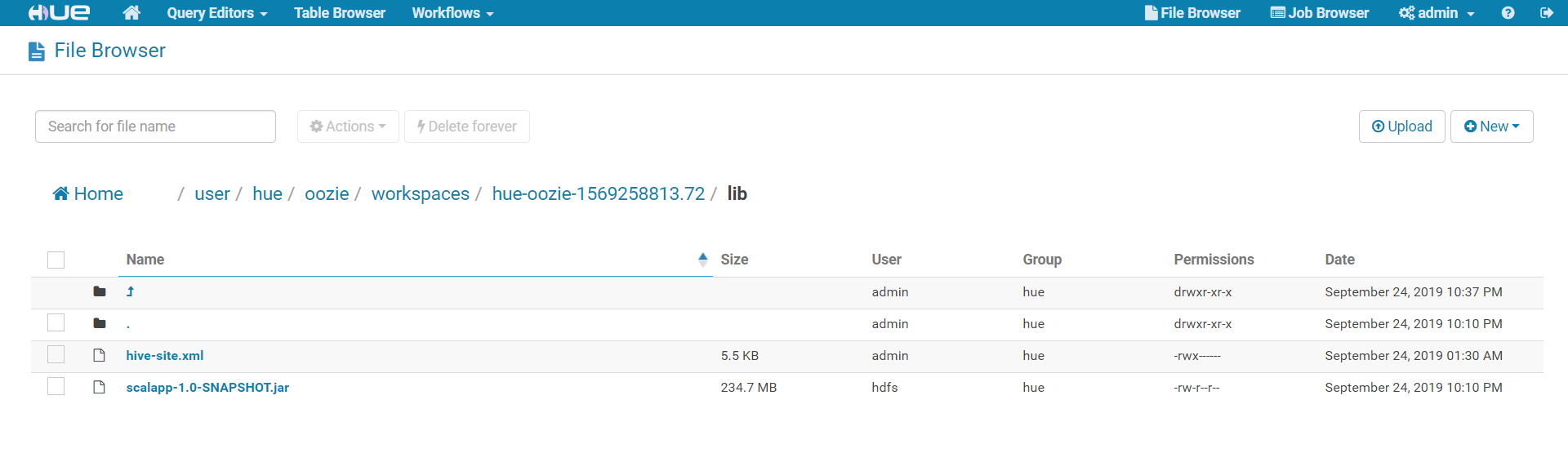

Select the jar:

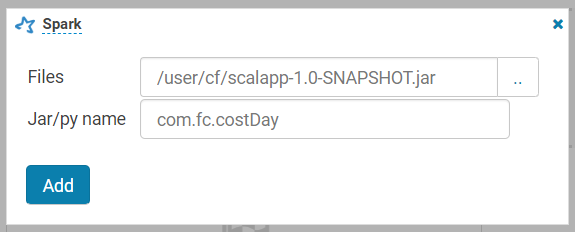

Add the jar:

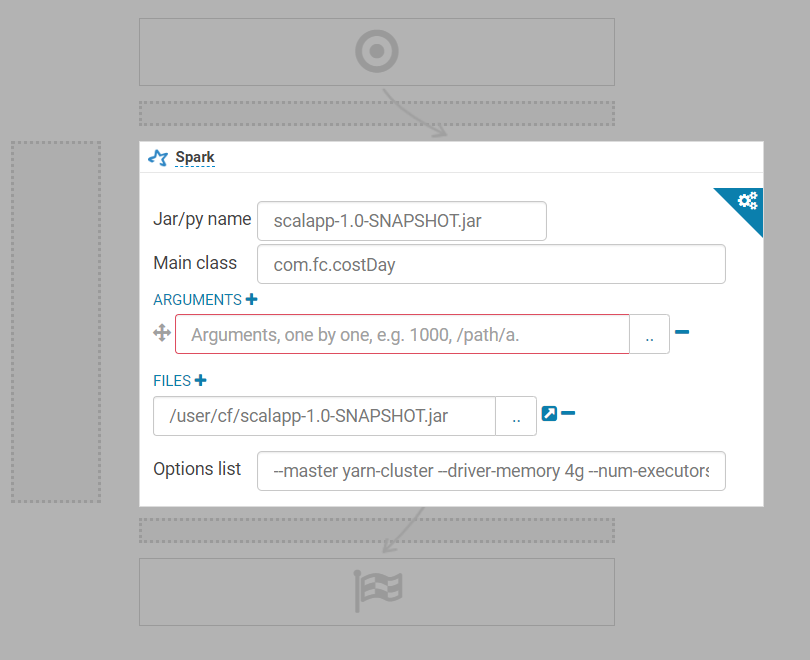

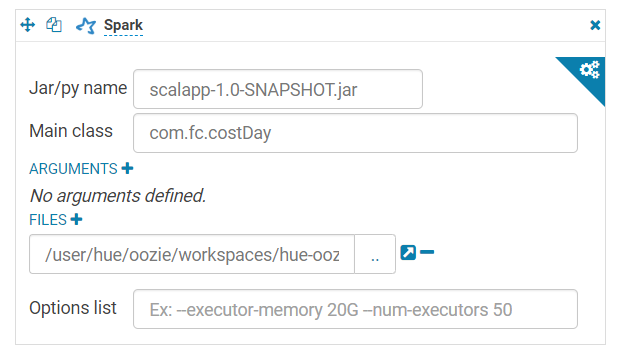

Fill in the jar, the main class, and the related fields:

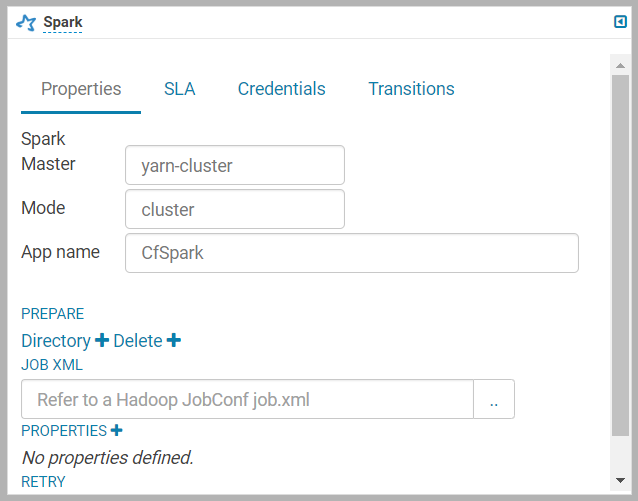

Fill in the execution mode:
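
The mode entered here only takes effect if the program does not pin its own master. Values set directly on a `SparkConf` take precedence over those passed at submit time, so a leftover `.setMaster("local")` from the local run would likely win over the form. A minimal sketch of one way to keep both cases working, assuming a hypothetical helper named `CostDayConf` (not part of the original program):

```scala
import org.apache.spark.SparkConf

// Hypothetical helper, for illustration only.
object CostDayConf {
  // Set the master only when one is passed explicitly (e.g. for a run in the IDE).
  // With None, the master supplied at submit time (spark-submit or the workflow) is used.
  def build(appName: String, localMaster: Option[String] = None): SparkConf = {
    val conf = new SparkConf().setAppName(appName)
    localMaster.fold(conf)(conf.setMaster)
  }
}
```

For a run in the IDE, call `CostDayConf.build("costDay", Some("local[*]"))`. For the jar submitted through Hue/Oozie, call `CostDayConf.build("costDay")` and let the workflow supply the master.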

Save the job:

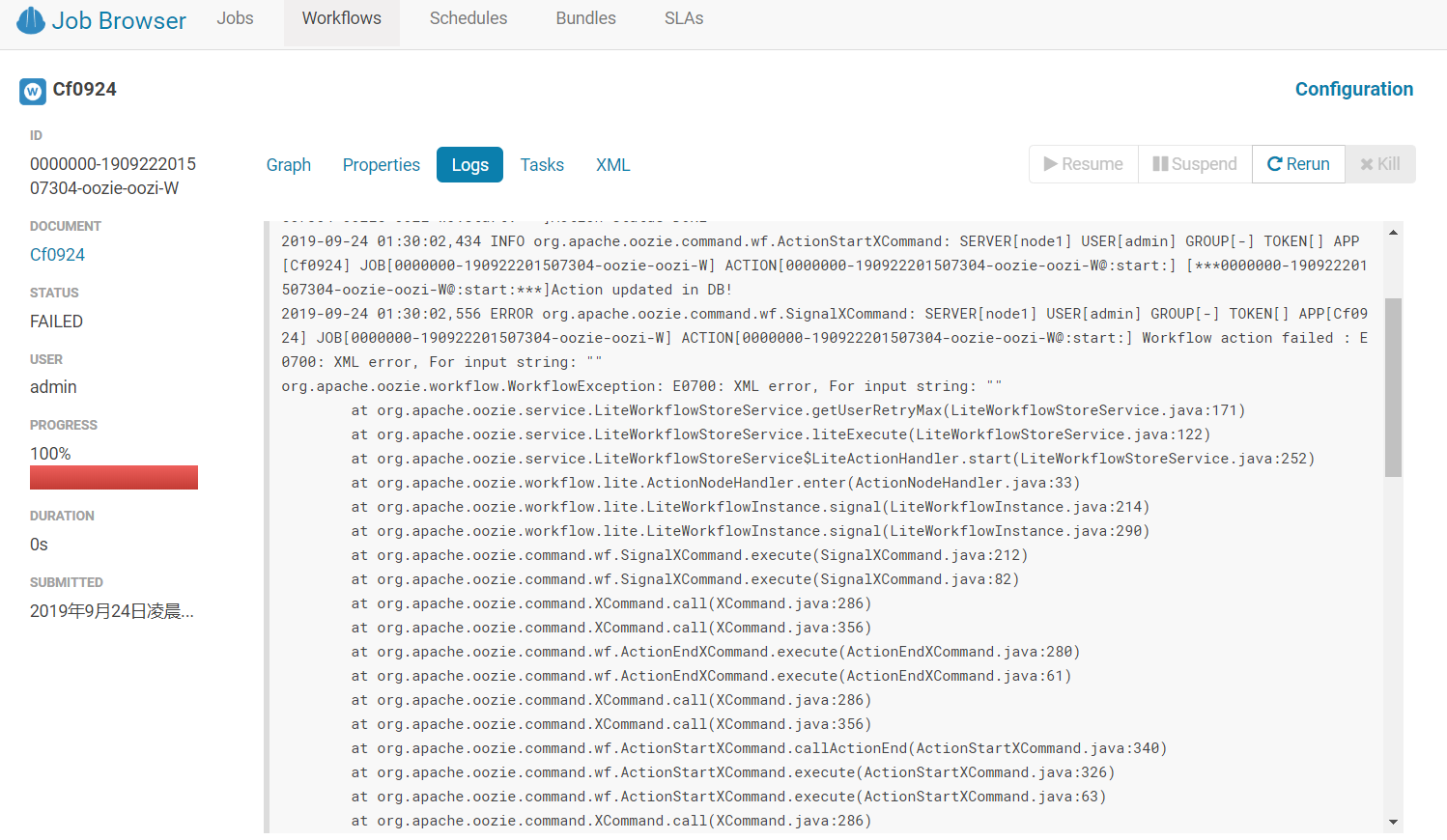

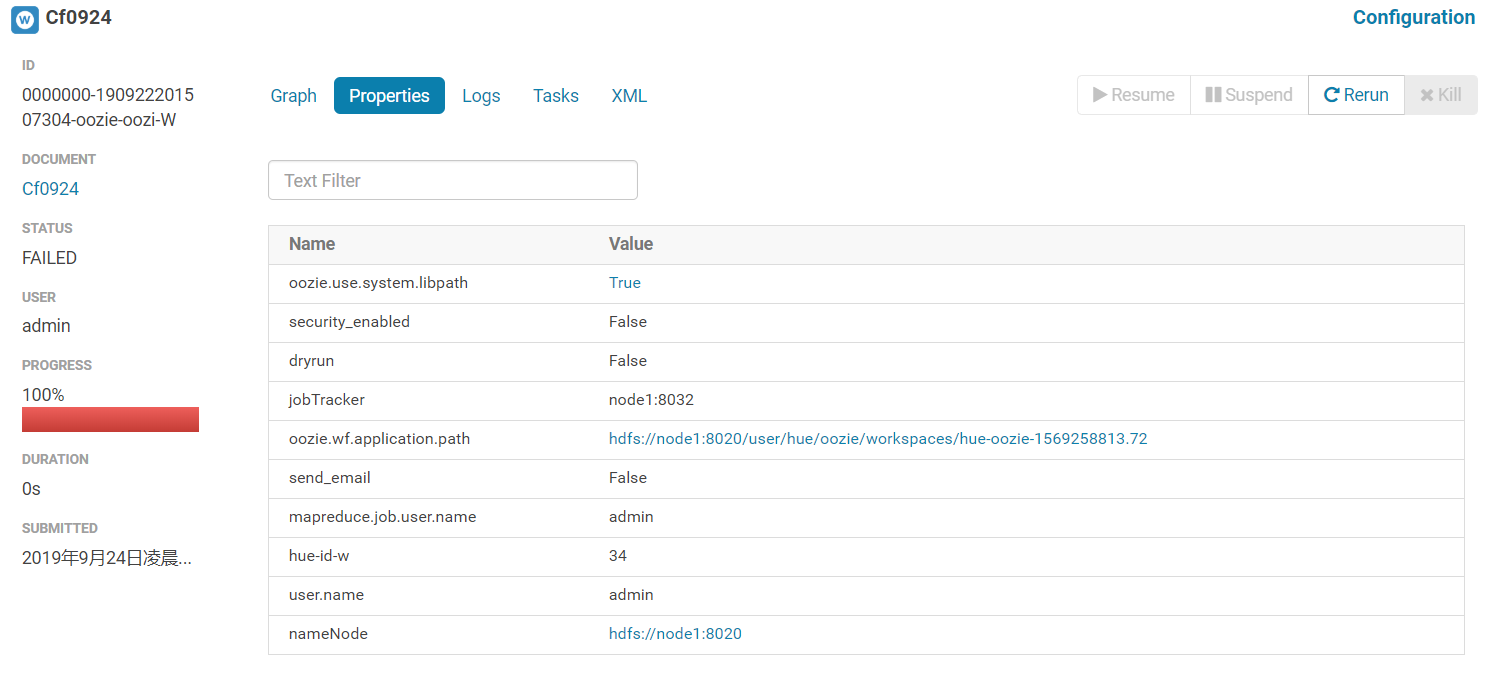

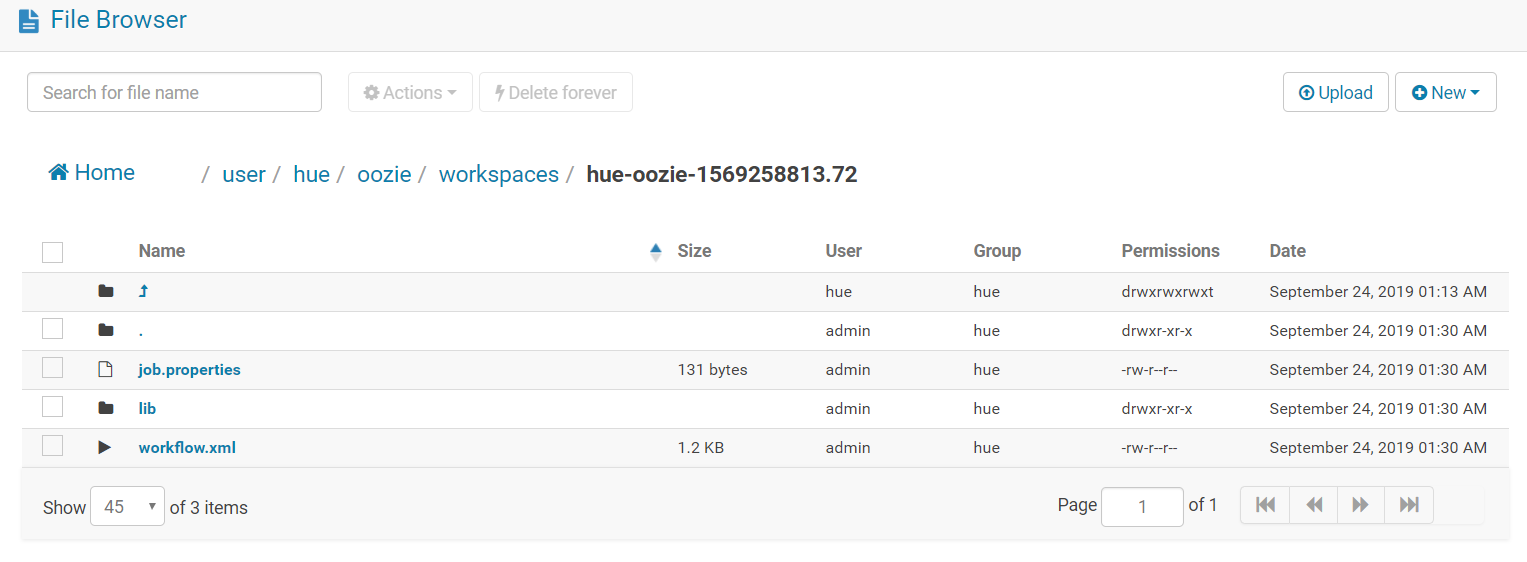

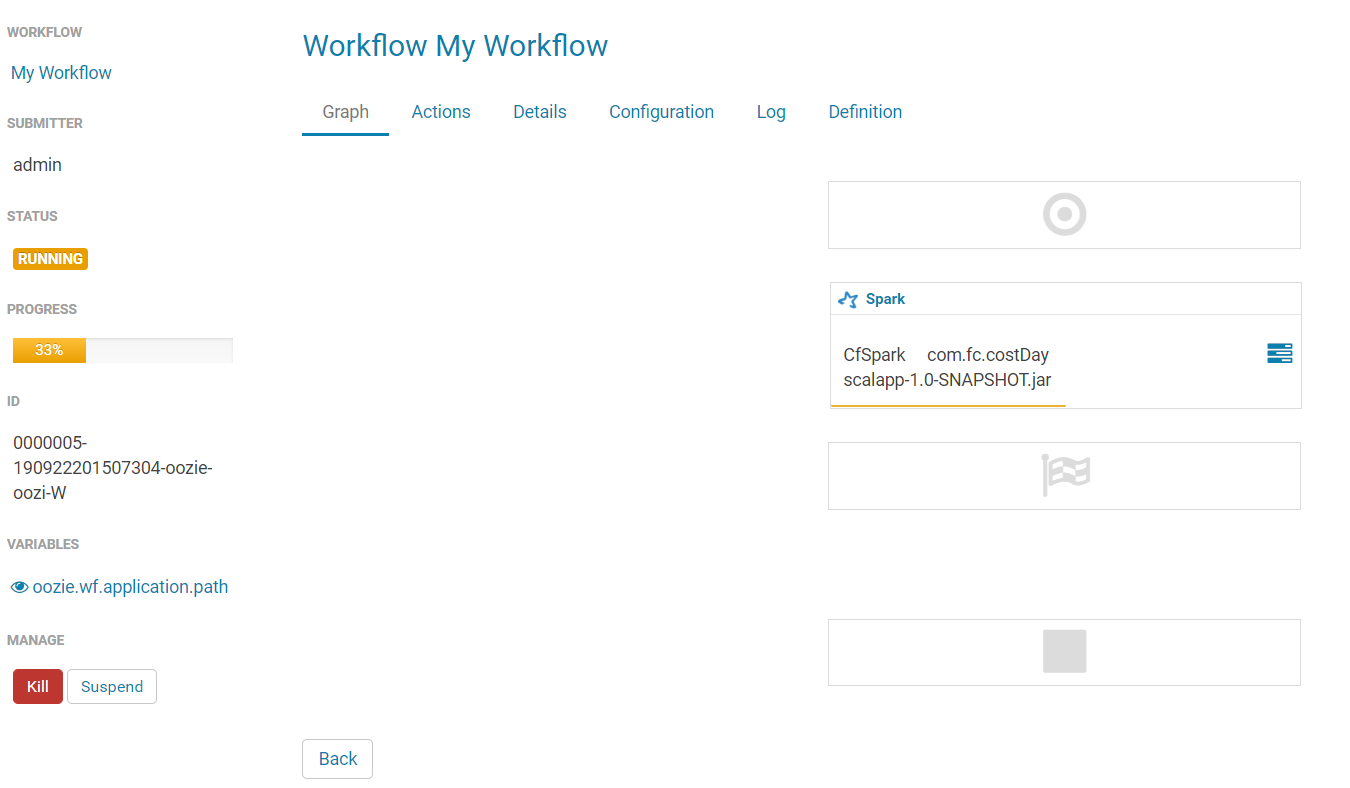

Run the job; it returns the following execution information:

The error details are:

-- ::, ERROR org.apache.oozie.command.wf.SignalXCommand: SERVER[node1] USER[admin] GROUP[-] TOKEN[] APP[Cf0924] JOB[--oozie-oozi-W] ACTION[--oozie-oozi-W@:start:] Workflow action failed : E0700: XML error, For input string: ""

org.apache.oozie.workflow.WorkflowException: E0700: XML error, For input string: ""

at org.apache.oozie.service.LiteWorkflowStoreService.getUserRetryMax(LiteWorkflowStoreService.java:)

at org.apache.oozie.service.LiteWorkflowStoreService.liteExecute(LiteWorkflowStoreService.java:)

at org.apache.oozie.service.LiteWorkflowStoreService$LiteActionHandler.start(LiteWorkflowStoreService.java:)

at org.apache.oozie.workflow.lite.ActionNodeHandler.enter(ActionNodeHandler.java:)

at org.apache.oozie.workflow.lite.LiteWorkflowInstance.signal(LiteWorkflowInstance.java:)

at org.apache.oozie.workflow.lite.LiteWorkflowInstance.signal(LiteWorkflowInstance.java:)

at org.apache.oozie.command.wf.SignalXCommand.execute(SignalXCommand.java:)

at org.apache.oozie.command.wf.SignalXCommand.execute(SignalXCommand.java:)

at org.apache.oozie.command.XCommand.call(XCommand.java:)

at org.apache.oozie.command.XCommand.call(XCommand.java:)

at org.apache.oozie.command.wf.ActionEndXCommand.execute(ActionEndXCommand.java:)

at org.apache.oozie.command.wf.ActionEndXCommand.execute(ActionEndXCommand.java:)

at org.apache.oozie.command.XCommand.call(XCommand.java:)

at org.apache.oozie.command.XCommand.call(XCommand.java:)

at org.apache.oozie.command.wf.ActionStartXCommand.callActionEnd(ActionStartXCommand.java:)

at org.apache.oozie.command.wf.ActionStartXCommand.execute(ActionStartXCommand.java:)

at org.apache.oozie.command.wf.ActionStartXCommand.execute(ActionStartXCommand.java:)

at org.apache.oozie.command.XCommand.call(XCommand.java:)

at org.apache.oozie.command.XCommand.call(XCommand.java:)

at org.apache.oozie.command.wf.SignalXCommand.execute(SignalXCommand.java:)

at org.apache.oozie.command.wf.SignalXCommand.execute(SignalXCommand.java:)

at org.apache.oozie.command.XCommand.call(XCommand.java:)

at org.apache.oozie.DagEngine.start(DagEngine.java:)

at org.apache.oozie.servlet.V1JobServlet.startWorkflowJob(V1JobServlet.java:)

at org.apache.oozie.servlet.V1JobServlet.startJob(V1JobServlet.java:)

at org.apache.oozie.servlet.BaseJobServlet.doPut(BaseJobServlet.java:)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:)

at org.apache.oozie.servlet.JsonRestServlet.service(JsonRestServlet.java:)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:)

at org.apache.oozie.servlet.AuthFilter$.doFilter(AuthFilter.java:)

at org.apache.hadoop.security.authentication.server.AuthenticationFilter.doFilter(AuthenticationFilter.java:)

at org.apache.hadoop.security.authentication.server.AuthenticationFilter.doFilter(AuthenticationFilter.java:)

at org.apache.oozie.servlet.AuthFilter.doFilter(AuthFilter.java:)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:)

at org.apache.oozie.servlet.HostnameFilter.doFilter(HostnameFilter.java:)

at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:)

at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:)

at org.apache.catalina.core.StandardWrapperValve.invoke(StandardWrapperValve.java:)

at org.apache.catalina.core.StandardContextValve.invoke(StandardContextValve.java:)

at org.apache.catalina.core.StandardHostValve.invoke(StandardHostValve.java:)

at org.apache.catalina.valves.ErrorReportValve.invoke(ErrorReportValve.java:)

at org.apache.catalina.core.StandardEngineValve.invoke(StandardEngineValve.java:)

at org.apache.catalina.connector.CoyoteAdapter.service(CoyoteAdapter.java:)

at org.apache.coyote.http11.Http11Processor.process(Http11Processor.java:)